Identify Blocked Resources Using Google Webmaster Tools

- Translation

Hi, Habrahabr! Modern web content rests on three pillars: HTML5, CSS3, and JavaScript. Tightly integrated, these three tools can produce truly impressive results. Today, though, we are not going to talk about the achievements of the modern web industry, but about how to make sure those achievements actually reach users.

Browsers, of course, are responsible for displaying your site to users. But how does a search engine see the site? What will it see if part of the content (images, style sheets, scripts) is blocked from crawling? What will users see in search results if Google cannot index all the necessary content? To answer these questions (and to clear up some of the misunderstandings that keep your users from finding you on Google), we created a dedicated tool: the "Blocked Resources Report".

Today we are introducing it to the wider audience of developers and webmasters, and you can be among the first to try out the new tool.

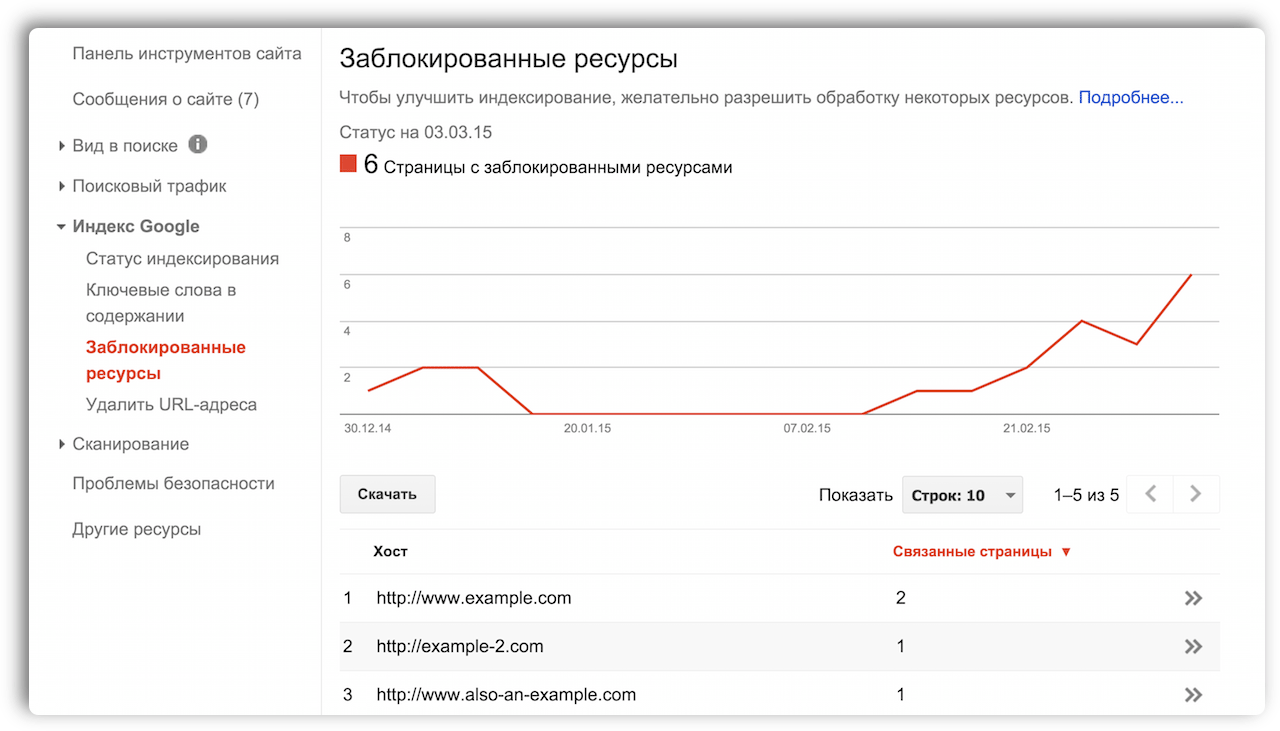

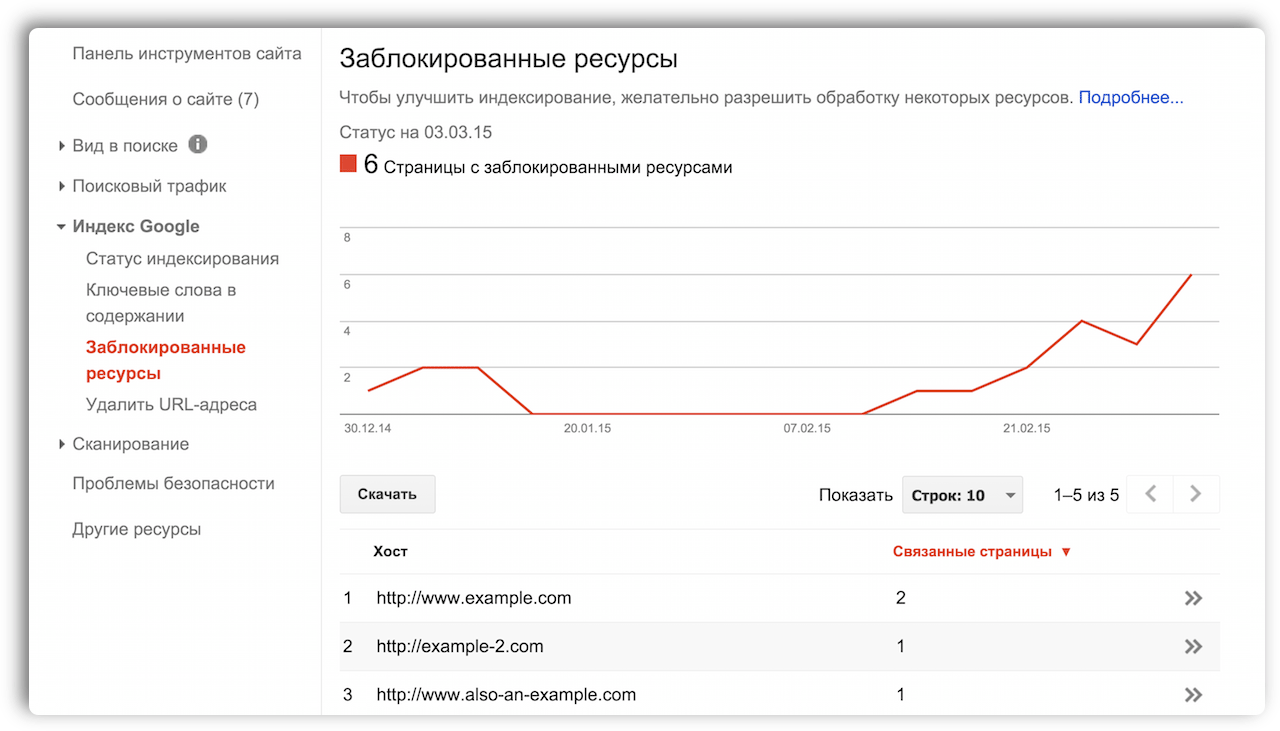

This is what the report looks like:

Inside the report you will find a list of hosts that your site references but that Googlebot cannot access. The references can be to images, CSS, libraries, and JavaScript modules.

Each row is clickable and expands into a list of the blocked resources on that host, along with the pages of your site that use them. The tool also suggests possible ways to resolve the indexing problems involved.
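To get a feel for the kind of host-by-host grouping the report presents, here is a minimal sketch that collects the images, scripts, and style sheets a page references and groups them by host. It is only an illustration, not how the report itself is built: the `ResourceCollector` class, the `group_by_host` function, and the `example.com` / `example.net` URLs are all hypothetical names chosen for this example.

```python
from collections import defaultdict
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class ResourceCollector(HTMLParser):
    """Collects absolute URLs of images, scripts, and style sheets on a page."""

    def __init__(self, page_url):
        super().__init__()
        self.page_url = page_url
        self.resources = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        url = None
        if tag in ("img", "script"):
            url = attrs.get("src")
        elif tag == "link" and "stylesheet" in (attrs.get("rel") or "").lower().split():
            url = attrs.get("href")
        if url:
            # Resolve relative references against the page URL.
            self.resources.append(urljoin(self.page_url, url))


def group_by_host(page_url, html):
    """Returns {host: [resource URLs]} for the resources referenced in html."""
    collector = ResourceCollector(page_url)
    collector.feed(html)
    hosts = defaultdict(list)
    for resource in collector.resources:
        hosts[urlparse(resource).netloc].append(resource)
    return dict(hosts)


# Hypothetical page used only for illustration.
SAMPLE_HTML = """
<html><head>
<link rel="stylesheet" href="/css/site.css">
<script src="https://static.example.net/js/app.js"></script>
</head><body><img src="https://static.example.net/img/logo.png"></body></html>
"""

if __name__ == "__main__":
    for host, urls in group_by_host("https://www.example.com/", SAMPLE_HTML).items():
        print(host, urls)
```

Running this over your own pages gives a rough inventory of the hosts your site depends on, which you can then compare with the hosts listed in the report.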

View as Googlebot: now two screenshots instead of one

We have also updated the “Fetch and Render” mode. Previously it showed your site only as Googlebot sees it; now you get two screenshots at once, one showing the page as rendered for the Google search robot and the other as rendered for site visitors. Comparing the two images makes it easy to spot potential problems.

We are well aware that webmasters cannot always influence how external resources behave. In Webmaster Tools we try to show only those hosts whose crawl settings you can change. The list of “problem areas” will not include URLs belonging to certain external resources, such as popular web analytics services.

We tried to exclude the most obvious such resources from the list, but if you still come across something that seems out of place, let us know. We closely follow the feedback webmasters and developers give about our tools and try to respond quickly to every comment and suggestion posted on our forum.

For various reasons (not necessarily technical ones), updating all robots.txt files can take a long time, so we recommend starting with the resources whose blocking has the greatest effect on how the page is displayed. You will find detailed instructions in this article.

We hope our new tools help you quickly find blocked resources on your site and unblock them. If you have questions, ask them in the comments, on our forum, or in the webmaster community.
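Before and after editing a robots.txt file, it can help to check locally which resource URLs its rules block. The sketch below uses Python's standard `urllib.robotparser` for that. The sample rules, the `blocked_resources` helper, and the `example.com` URLs are assumptions made for this example. The standard-library parser also does plain prefix matching, so its verdicts may differ from Googlebot's own handling of wildcard patterns.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that blocks script and style sheet directories.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /js/
Disallow: /css/
"""


def blocked_resources(robots_txt, urls, user_agent="Googlebot"):
    """Returns the URLs that the given robots.txt text disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    urls = [
        "https://www.example.com/js/app.js",
        "https://www.example.com/css/site.css",
        "https://www.example.com/img/logo.png",
    ]
    for url in blocked_resources(SAMPLE_ROBOTS_TXT, urls):
        print("blocked:", url)
```

With the sample rules, the script and the style sheet are reported as blocked while the image is not. Since those are the resources most likely to change how the page renders, they are reasonable candidates to unblock first.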
