The stages of the birth of new functionality in a software product

This article focuses on the process of adding new functionality to a program. We will go through all the stages from conception to release, including how analysts convey requirements to those who actually have to build the whole thing, that is, to our beloved (no quotes, no irony) developers. The article is primarily meant to share practical experience, including the unsuccessful kind, of building this process.

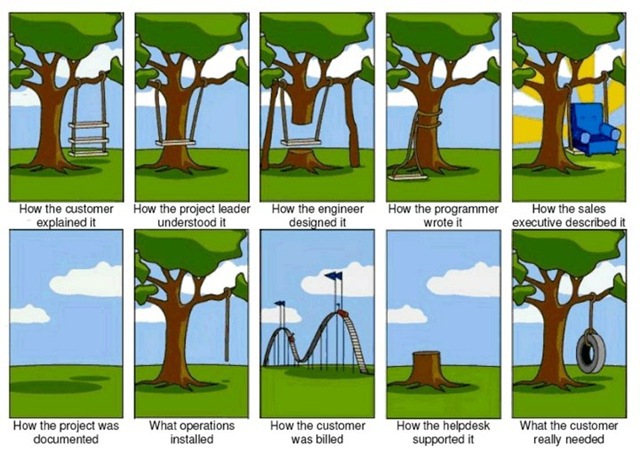

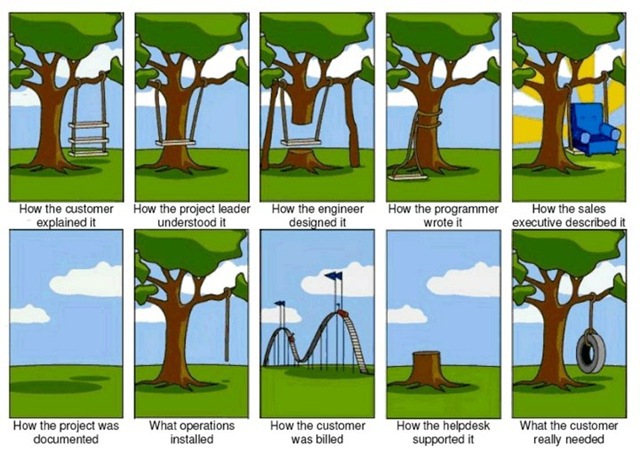

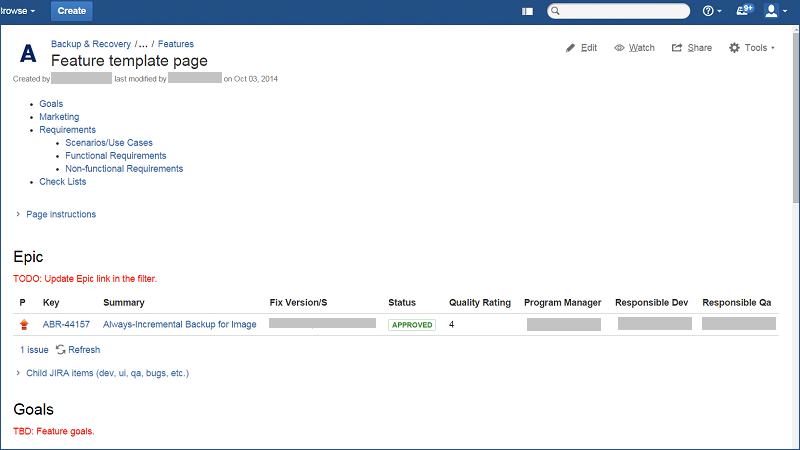

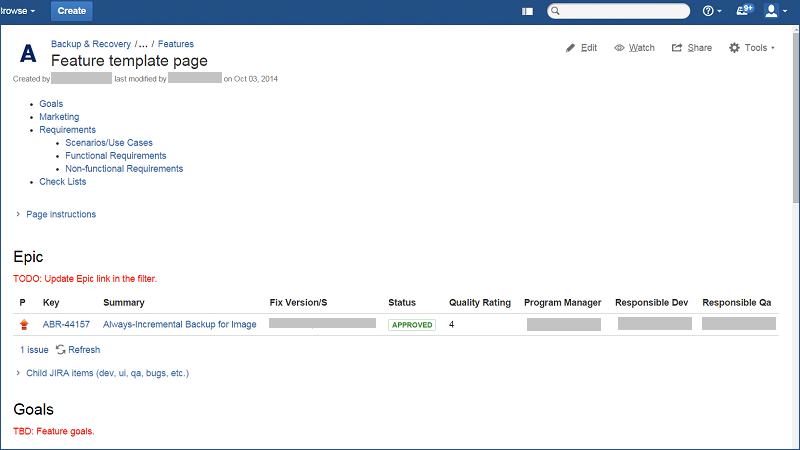

KDPV (a picture to attract attention; this one will probably never lose relevance):

Disclaimer: Everything described below is based on the author's personal experience at one particular company and may have nothing to do with the reader's objective reality. Each stage of development is presented in condensed form and is intended to cover only the main points of the process within a single article.

Stages

In the beginning was the word...

For myself, I distinguish three stages in the emergence of new functionality (aka “features”):

1. There is an idea described in general terms.

For example: "Add email notification support to the program."

2. The idea is developed from the point of view of the user, that is, use cases are written.

For example: “When certain operations are completed, the program will send an e-mail to the user-specified address through a predefined SMTP server.”

3. The idea is explained to the developers / testers, and the implementation itself begins.

Accordingly, to reach the third stage, the analyst needs to study the subject area thoroughly and, first of all, understand for himself how “it” will work from the user's point of view and (to a lesser extent) from the development point of view. Insufficiently elaborated requirements at the first stage can mean that the implemented functionality works not as the analyst expected, but as the developer understood it (which, unfortunately, happens quite often).

Even such a seemingly simple “feature” can be ruined without proper elaboration if details like these are overlooked:

- Should multiple destination email addresses be supported?

- Should SSL / TLS encryption be supported?

- Which specific operations should send email notifications?

- Should scheduled notifications be sent?

- What text should the notifications contain?

- Are templates needed for the subject line?

- Should operation logs be included in notifications? If so, as inline text or as an attached text file?

- And so on.
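One way to make sure none of these questions is silently skipped is to turn each of them into an explicit, named setting. The sketch below is only an illustration of that idea, not how any real product implements it: the `NotificationSettings` class, its field names and defaults, the `operation_status` event naming, and the `smtp.example.com` host are all assumptions. It uses only Python's standard `email.message.EmailMessage` and does not send anything.

```python
from dataclasses import dataclass, field
from email.message import EmailMessage
from typing import List, Optional, Set


@dataclass
class NotificationSettings:
    """Hypothetical settings; each field answers one requirement question."""
    recipients: List[str]                              # several destination addresses
    smtp_host: str = "smtp.example.com"                # placeholder server name
    use_tls: bool = True                               # SSL / TLS encryption
    notify_on: Set[str] = field(
        default_factory=lambda: {"backup_failed"})     # which operations notify
    subject_template: str = "[{status}] {operation}"   # subject templates
    attach_log: bool = False                           # log inline or as a file


def build_notification(settings: NotificationSettings, sender: str,
                       operation: str, status: str,
                       log_text: str = "") -> Optional[EmailMessage]:
    """Build a message, or return None if this event should not notify."""
    event = f"{operation}_{status}"
    if event not in settings.notify_on:
        return None

    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = ", ".join(settings.recipients)
    msg["Subject"] = settings.subject_template.format(
        operation=operation, status=status)

    body = f"Operation '{operation}' finished with status '{status}'."
    if settings.attach_log:
        msg.set_content(body)
        # A str payload is attached as a text/plain part.
        msg.add_attachment(log_text, filename="operation.log")
    else:
        msg.set_content(f"{body}\n\n{log_text}")
    return msg
```

Scheduled notifications are deliberately left out of the sketch; that question would need its own field and its own discussion.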

Nevertheless, no matter how much time is spent on the initial elaboration of the requirements, unaccounted usage scenarios almost always pop up, especially if the new functionality is large. That is why the “feature” has to be discussed with developers and testers, who look at new capabilities from a completely different angle and can come up with scenarios that would never occur to an ordinary user. This process is described in more detail in the next section of the article.

Below is a real-life example of why it is important to know your own product when designing a new “feature”. Be warned: there is a lot of text and product-specific terminology. Those who are not afraid can look under the spoiler:

Example

In our product Acronis Backup there is a concept called a backup plan. A backup plan, in turn, consists of several activities (called “tasks”) that are responsible for the different stages of the plan. The backup itself is performed by a task of type “backup” (one task per machine included in the plan), while additional activities, such as copying created backups to additional storage (aka “staging”) or clearing old copies from the storage, are performed by tasks of type “replication” and “cleanup” respectively.

At one point the option “Replication / cleanup inactivity time” was added. The idea was to limit the period during which created backups can be copied, that is, to limit the period of activity of tasks of type “replication” / “cleanup” (for example, to allow this operation only from 1 a.m. to 3 a.m., so as not to load the network during business hours).

It turned out that the behavior of this option differs from users' expectations when the backup task does not manage to complete BEFORE the time specified in the “Replication / cleanup inactivity time” settings. Instead of letting the backup task finish and merely preventing the “replication” task from starting, the program stalls the backup task itself and waits for the end of the “inactivity” period.

Why did this happen? The problematic option turned out to be “bolted” not to a separate activity of the “replication” type, but to the entire backup plan as a whole, which gave rise to this behavior.

This problem could have been avoided if the requirement had stated from the start (based on knowledge of how backup tasks are structured) that the option applies exclusively to the “replication” activity and does not affect other activities. To state such a condition, the analyst needs to know the structure of the backup plan. In other words, he needs to know not only what behavior the user expects, but also where the implementation of the functionality could go wrong.
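The difference between attaching the restriction to the whole plan and attaching it to one type of activity can be shown in a few lines. This is a hypothetical model written for this article, not the product's actual code: the `TimeWindow` class, the `may_start` function, and the choice to express the option as an allowed window are all assumptions.

```python
from dataclasses import dataclass
from datetime import time


@dataclass(frozen=True)
class TimeWindow:
    """A daily window [start, end); may wrap around midnight."""
    start: time
    end: time

    def contains(self, t: time) -> bool:
        if self.start <= self.end:
            return self.start <= t < self.end
        # Window such as 23:00-02:00 that crosses midnight.
        return t >= self.start or t < self.end


# Activities the window is meant to restrict (assumed from the option name).
RESTRICTED_ACTIVITIES = {"replication", "cleanup"}


def may_start(activity: str, now: time, allowed: TimeWindow) -> bool:
    """The window limits only replication and cleanup, never the backup itself."""
    if activity in RESTRICTED_ACTIVITIES:
        return allowed.contains(now)
    return True
```

With a window of 01:00 to 03:00, `may_start("backup", time(14, 0), window)` is true while `may_start("replication", time(14, 0), window)` is false. The bug described above corresponds to applying the window check to every activity in the plan regardless of its type.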

Discussion

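After the analyst has written the initial description (stage 2) of a new “feature”, the text is read and analyzed by at least two people: the developer (who will implement it) and the tester (who will write the test scenarios).

In our case the discussion is held “on the couch”: the analyst and the tester and / or developer sit down with a cup of (Irish) coffee and clarify all the controversial points and inaccuracies in the description of the “feature”. All discussion results are documented and lead to changes in the original description. Read more about how the description is written up (and not only that) in the next section.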

Hint

Some especially cunning testers record these conversations on a voice recorder to have evidence in situations like this:

- Why is this not tested?

- But you said that this scenario is invalid and can be excluded!

- When did I say that?!

- Here you go, the audio recording. We have all the moves recorded.

Design and maintenance

In our company we use JIRA to track all activities (bugs, QA tasks, tickets from technical support, etc.), keep statistics, and build metrics. Confluence is used to describe processes, internal documentation, functional requirements, etc.

The JIRA <-> Confluence pairing was chosen for its flexibility in building links between the elements of the two systems. For example, in Confluence you can build a table from predefined JIRA filters, draw nice graphs, and track the status of several JIRA projects on one page. In addition, the built-in Confluence text editor is much more flexible than its counterpart in JIRA and is therefore better suited to describing functionality.

There are no strict rules for describing new functionality in Confluence, but there are templates that we recommend following. Here is an example of our template:

Heading, link to the ticket in JIRA, and main goals:

Marketing information and instructions on how to draw up requirements:

Links to tickets containing user interface drawings (“mockups”), usage scenarios, functional and non-functional requirements:

The design consists of the following steps:

1. An “Epic” type ticket is created in JIRA with a brief description of the required functionality.

2. A decision is made in which version / update this “feature” will be implemented.

3. A page in Confluence is created from the template (example above) and filled out by the analyst, who describes all kinds of scenarios in free form.

4. The page in Confluence is shown to testers (QA) and developers to study and write test cases.

5. Tickets of the “dev task” type, linked to the original “Epic”, are created in JIRA and estimated.

After that, development itself takes place, at the end of which the functionality is handed over to the testers, who “run” it through the previously created scenarios.

Note that considerable time may pass between steps 1 and 2: there is a lot of new functionality, and the “features” with the highest priority for the company's current goals have to be implemented first.
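The relationships created by the five steps above can be pictured as a small data model. The sketch below is purely illustrative and does not use or imitate the JIRA or Confluence APIs; the `Epic` and `DevTask` classes, their fields, and the choice of hours as the estimation unit are assumptions.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class DevTask:
    """A “dev task” ticket linked to an epic (step 5)."""
    key: str
    summary: str
    estimate_hours: float


@dataclass
class Epic:
    """An “Epic” ticket with a brief description of the feature (step 1)."""
    key: str
    summary: str
    target_version: str = ""   # decided in step 2; empty until then
    spec_page: str = ""        # title of the Confluence page from step 3
    tasks: List[DevTask] = field(default_factory=list)

    def is_scheduled(self) -> bool:
        # An epic may wait a long time between steps 1 and 2.
        return bool(self.target_version)

    def total_estimate(self) -> float:
        # Re-computed whenever task estimates are adjusted.
        return sum(task.estimate_hours for task in self.tasks)
```

Keeping the target version and the specification page on the epic itself makes it easy to see at a glance which step a given “feature” has reached.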

Development

In our case, development is divided into many iterations (sprints) of 2-4 weeks, each followed by a demonstration. The main purpose of the demonstrations is to make sure that the functionality being developed matches the original idea and that no additional risks have appeared. That is, we adhere (or at least try to adhere) to the principles of agile development.

At first our developers were skeptical about such demonstrations and considered them a waste of time. As practice has shown, however, it is during the demonstrations that additional ideas arise, missed scenarios are revealed, and the vision of all participants in the process (analysts, testers, developers and management) can be synchronized.

During development, especially if the functionality is large, the initial requirements may need to change (to make them compatible with reality). All changes are documented in Confluence / JIRA, and the task estimates are adjusted accordingly.

Hint

The general rule we adhere to is: “No entry in JIRA = no work done”. In other words, any development activity must be reflected in JIRA. To some this may seem like excessive formalization, but switching to this rule has helped us reduce the entropy of the documentation universe and the chaos that accompanies writing code.
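A rule like this is easier to follow when it is checked automatically. As a minimal sketch, assuming the team agrees that every commit message must mention an issue key of the usual `PROJECT-123` shape, the check could look like the code below. The regular expression, the function names, and the `ABR` project key in the usage note are assumptions for illustration, not a description of our actual tooling.

```python
import re
from typing import List

# Assumed key format: uppercase project key, a hyphen, and a number.
ISSUE_KEY = re.compile(r"\b[A-Z][A-Z0-9]+-\d+\b")


def issue_keys(commit_message: str) -> List[str]:
    """Return all issue keys mentioned in a commit message."""
    return ISSUE_KEY.findall(commit_message)


def is_tracked(commit_message: str) -> bool:
    """True if the message references at least one issue."""
    return bool(issue_keys(commit_message))
```

For example, `is_tracked("ABR-1234: add SMTP settings dialog")` is true, while `is_tracked("fix typo")` is false, so the second commit would be rejected or at least flagged.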

Acceptance

Acceptance of the "feature" is carried out in several stages:

1. The developer says: “Done! You can look at build No. XX.”

2. The tester runs the new functionality through the prepared test scenarios and files bugs.

3. The analyst looks at the implemented “feature” from the point of view of user scenarios (in fact, this is a formal step, because the same thing was already done during the demonstrations).

4. The "feature" is given to other departments for internal testing:

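First of all (after QA), we test all our products on our own “production systems”. Our valiant admins get to be the first to taste all the horrors (delights) of new versions and report the problems they find.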

Only after all the previous steps is the product considered worthy for our end users to see.

The public release of the product is a separate process with a lot of subtleties. It is a painful topic worthy of a separate article.

Conclusion

Summarizing the above:

A. Think your ideas through carefully and write them up properly (at the very least, stick to one agreed standard).

B. Communicate with programmers and testers throughout the entire development period.

C. Hold intermediate demonstrations without waiting for the development deadline.

D. Drink (Irish) coffee.

If you use a similar methodology when developing your product, tell us in the comments how the process could be improved, sped up, or optimized.