Category: essence of composition

- Transfer

This is the second article in the series "Category Theory for Programmers."

Category is a very simple concept.

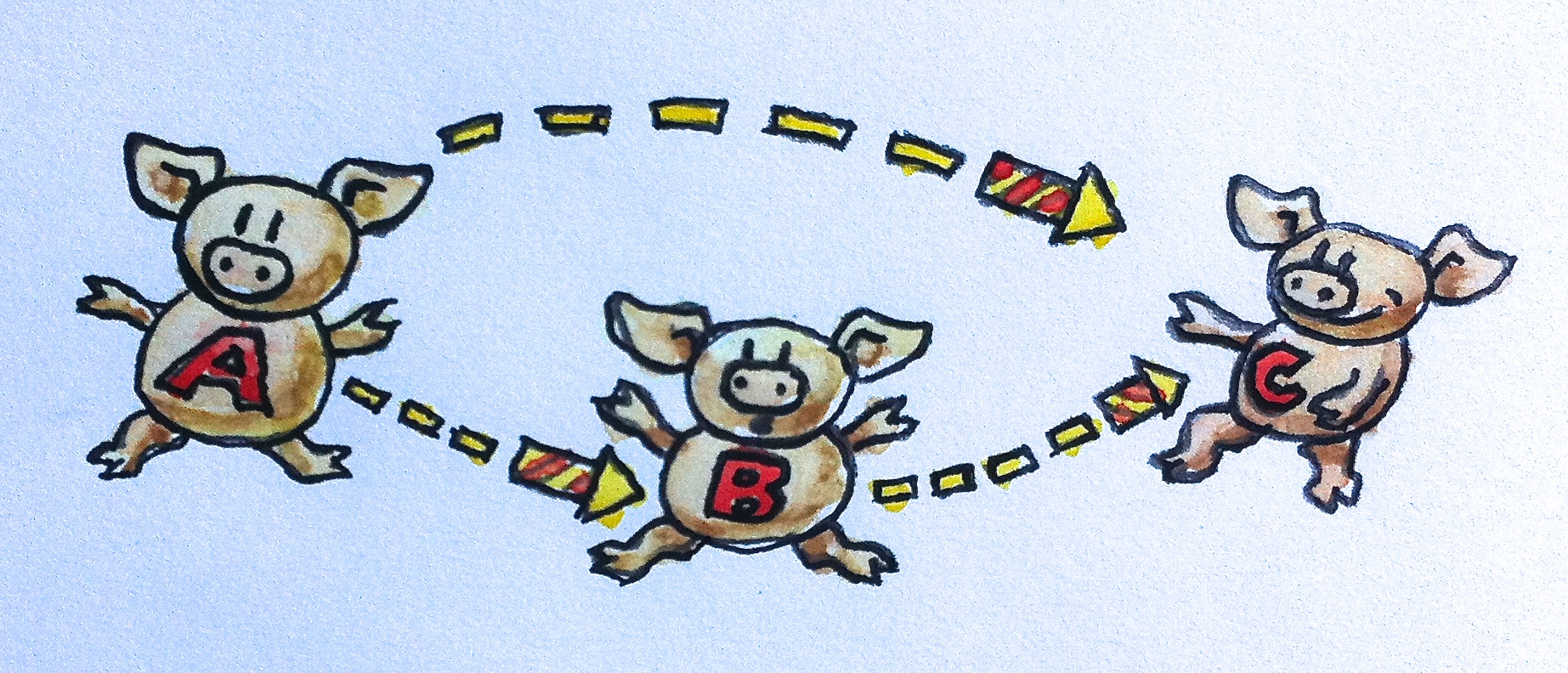

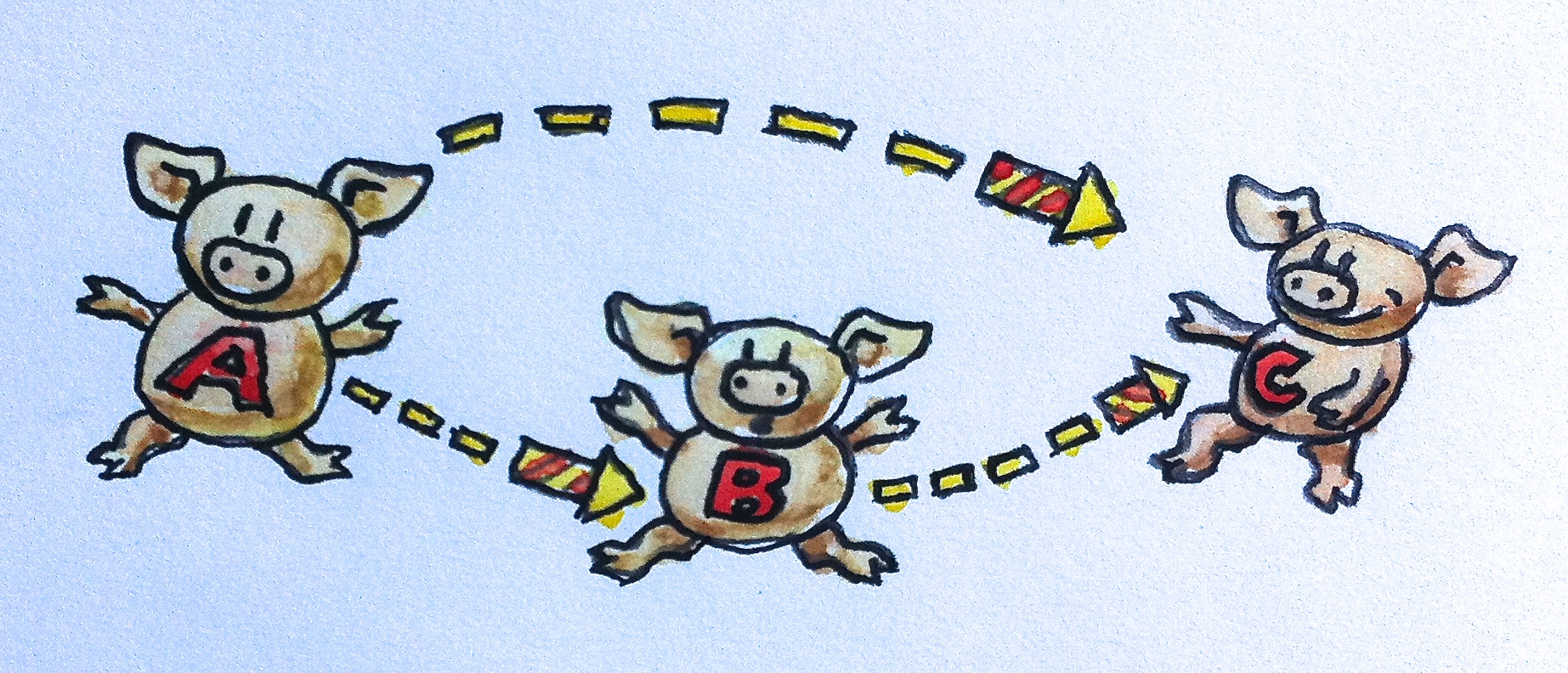

A category consists of objects and arrows that are directed between them. Therefore, categories are so easy to represent graphically. An object can be drawn in the form of a circle or a point, and the arrows are just arrows between them. (Just for a change, from time to time I will draw objects like piglets and arrows like fireworks.) But the essence of the category is composition. Or, if you prefer, the essence of the composition is category. The arrows are arranged so that if you have an arrow from object A to object B, and another arrow from object B to C, then there must be an arrow, their composition, from A to C.

In the category, if there is an arrow from A to B and an arrow from B to C, then there should be an arrow from A to C, which is called their composition. This scheme is not a complete definition of a category because there are not enough identical morphisms (see below).

Already too much abstract bullshit? Do not despair. Let's look at some examples. Think of arrows, also called morphisms, as functions. You have a function f that takes an argument of type A and returns a value of type B. There is another function g that takes B and returns C. You can combine them by passing the result from f to g. You just described a new function that takes A and returns C.

In mathematics, such a composition is indicated by a small circle between the functions: g :f. Pay attention to the composition order from right to left. This is confusing for some people. You can see the similarities with the pipe designations on Unix, for example:

or composition >> in F #, both go from left to right. But in mathematics and in Haskell functions, composition is directed from right to left. This helps if you read g∘f as “g after f.”

Let's show this even more explicitly with C code. We have one function f that takes an argument of type A and returns a value of type B:

and another:

Their combination will be:

Here you again see the composition from right to left: g (f (a)) ;, now in C.

I would like to tell you that there is a template in the C ++ standard library that takes two functions and returns their composition, but there is none.

Translator's note: but it’s not difficult to write one in C ++ 14 (I omit tons of ownership details and template magic to verify that these functions and the type of argument can indeed be composed):

So let's try a bit of Haskell for a change. Here is a function declaration from A to B:

Similarly:

Their composition will be:

As soon as you see how simple it is in Haskell, the inability to express simple functional concepts in C ++ is a bit confusing. Haskell even allows you to use Unicode characters, so you can write the composition like this:

You can even use double colons and arrows from Unicode:

Here is the first Haskell lesson: a double colon means "has a type ..." A function type is created by writing an arrow between the two types. The composition of two functions is recorded by a dot between them (or a circle from Unicode).

There are two very important properties that a composition must satisfy in any category.

1. The composition is associative. If you have three morphisms, f, g, and h that can be composed (that is, their types are consistent with each other), you do not need parentheses to compose them. Mathematically, this is written like this:

In (pseudo) Haskell:

I said “pseudo” because the comparison is not defined for functions.

Associativity is pretty obvious when it comes to functions, but may not be so obvious in other categories.

2. For each object A there is an arrow that will be a unit of composition. This arrow is from an object to itself. To be a unit of composition - means that when composing a unit with any arrow that either starts on A or ends on A, respectively, the composition returns the same arrow. The unit arrow of an object A is called idA (unit on A). In mathematical notation, if f goes from A to B, then

and

When working with functions, the unit arrow is implemented as an identical function that simply returns its argument. The implementation is the same for each type, which means this function is universally polymorphic. In C ++, you can write it as a template:

Translator's note: in a good way, an identical function should be defined as follows:

Of course, nothing is so simple in C ++, because you must take into account not only what you pass but also how (that is, by value, by reference, by constant link, by move, and so on).

In Haskell, an identity function is part of a standard library (called Prelude). Here is her announcement and definition:

You can see that the polymorphic functions in Haskell are very simple. In the declaration, you simply replace the concrete type with a type variable. It should be remembered that the names of specific types always begin with a capital letter, the names of type variables begin with a lowercase letter.

A Haskell function definition consists of a function name followed by formal parameters - there is only one: x. The body of the function follows the equal sign. This succinctness is often shocking for beginners, but you will quickly see that this is a great syntax. The definition of a function and its call are the main tools of functional programming, so their syntax is minimized. There are no brackets around the arguments, no commas between them (you will see this later when we define functions with several arguments).

The body of a function is always an expression - there are no stats. The result of the function is this expression - here, just x.

This concludes our second Haskell tutorial.

Conditions for composition with a single arrow can be written (again, on the pseudo-Haskell), like this:

You can ask yourself the question: why would anyone bother with an identical function - a function that does nothing? Again, why are we messing with the number zero? Zero is a symbol of emptiness. The ancient Romans did not have a zero in the calculus, and they built excellent roads and aqueducts, some of which have survived to this day.

Non-neutral values, such as zero or id, are extremely useful when working with character variables. That is why the Romans were not very good in algebra, while the Arabs and Persians, who were familiar with the concept of zero, were. An identity function is very convenient as an argument, or as a return value from, of a higher order function. Higher-order functions are what make symbolic function conversions possible. They are an algebra of functions.

To summarize: a category consists of objects and arrows (morphisms). Arrows can be arranged and composition is associative. Each object has a single arrow, which serves as a unit of composition.

Functional programmers have a peculiar way of approaching problems. They start by asking very Zen-like questions. For example, when designing an interactive program, they will ask: what is interactivity? When implementing the game Life, they will probably think about the meaning of life. On this wave, I'm going to ask: what is programming? At the most basic level, programming is to tell the computer what to do. “Take the contents of the memory at address x and add it to the contents of the EAX register.” But, even when we program in assembler, the instructions that we give the computer are an expression of something more important. We solve a non-trivial problem (if it were trivial, we would not need the help of a computer). And how do we solve problems? We decompose large tasks into smaller ones. If the small ones are still too big, then we also lay them out, and so on. Finally, we write code that solves all the small problems. And here the essence of programming is manifested: we compose these pieces of code to create solutions to more serious problems. Decomposition would not make sense if we could not put the pieces together.

This process of hierarchical decomposition and recomposition is not imposed on us by a computer. It reflects the limitations of the human mind. Our brain can deal with only a few concepts at a time. In one of the most cited works in the field of psychology, “The magic number seven plus or minus two,” it is postulated that we can only hold 7 ± 2 “pieces” of information in our minds. The details of our understanding of short-term human memory may vary, but we know for sure that it is limited. The bottom line is that we are not able to handle the soup of objects or spaghetti code. We must structure the programs not because they are so pleasant to look at, but because otherwise our brains cannot process them. We often call a piece of code elegant or beautiful, but in fact we mean that it is easy to understand by our limited mind. An elegant code consists of pieces of exactly the right size for assimilation using our mental powers.

So which pieces are right for programming?

Their surface area should be less than their volume. (I love this analogy because the surface area of a geometric object grows in proportion to the square of its size — slower than the volume that grows in proportion to the cube of its size).

Surface area is the information we need in order to combine the pieces.

Volume is the information that is needed in order to realize them.

The idea is that as soon as a piece is implemented, we can forget about the details of its implementation and focus on how it interacts with other pieces. In object-oriented programming, a surface is a class declaration or its abstract interface. In functional programming, it is a function declaration. I simplify a little, but this is the essence of it.

Category theory is an extreme case in the sense that it actively prevents us from looking inside objects. An object in category theory is an abstract foggy entity. All you can know about it is how it relates to another object - how it is linked with them using arrows. This is how search engines rank websites by analyzing inbound and outbound links (unless they are tricky).Translator’s note: in fact, it’s not at all :) In object-oriented programming, an idealized object is visible only through an abstract interface (only a surface, without volume), with methods that play the role of arrows. As soon as you need to look at the implementation of an object to understand how to compose it with other objects, you have lost the value of OOP.

To be continued.

Category theory for programmers: a preface

Category: the essence of the composition

Types and functions

Categories, large and small

Categories Klesley

PS By the way, I can’t disregard that Bartosh will speak at a conference in Moscow in February on this topic: category theory for programmers .

Category is a very simple concept.

A category consists of objects and arrows that are directed between them. Therefore, categories are so easy to represent graphically. An object can be drawn in the form of a circle or a point, and the arrows are just arrows between them. (Just for a change, from time to time I will draw objects like piglets and arrows like fireworks.) But the essence of the category is composition. Or, if you prefer, the essence of the composition is category. The arrows are arranged so that if you have an arrow from object A to object B, and another arrow from object B to C, then there must be an arrow, their composition, from A to C.

In the category, if there is an arrow from A to B and an arrow from B to C, then there should be an arrow from A to C, which is called their composition. This scheme is not a complete definition of a category because there are not enough identical morphisms (see below).

Arrows as functions

Already too much abstract bullshit? Do not despair. Let's look at some examples. Think of arrows, also called morphisms, as functions. You have a function f that takes an argument of type A and returns a value of type B. There is another function g that takes B and returns C. You can combine them by passing the result from f to g. You just described a new function that takes A and returns C.

In mathematics, such a composition is indicated by a small circle between the functions: g :f. Pay attention to the composition order from right to left. This is confusing for some people. You can see the similarities with the pipe designations on Unix, for example:

lsof | grep Chrome

or composition >> in F #, both go from left to right. But in mathematics and in Haskell functions, composition is directed from right to left. This helps if you read g∘f as “g after f.”

Let's show this even more explicitly with C code. We have one function f that takes an argument of type A and returns a value of type B:

B f(A a);

and another:

C g(B b);

Their combination will be:

C g_after_f(A a)

{

return g(f(a));

}

Here you again see the composition from right to left: g (f (a)) ;, now in C.

I would like to tell you that there is a template in the C ++ standard library that takes two functions and returns their composition, but there is none.

Translator's note: but it’s not difficult to write one in C ++ 14 (I omit tons of ownership details and template magic to verify that these functions and the type of argument can indeed be composed):

template

struct function_arg: public function_arg {};

template

struct function_arg {

using type = Arg;

};

template

struct function_arg {

using type = Arg;

};

template

using function_arg_t = typename function_arg::type;

template

auto compose(F&& f, G&& g) {

return [f = std::forward(f), g = std::forward(g)]

(function_arg_t&& a) {return g(f(std::forward>(a)));};

}

So let's try a bit of Haskell for a change. Here is a function declaration from A to B:

f :: A -> B

Similarly:

g :: B -> C

Their composition will be:

g . f

As soon as you see how simple it is in Haskell, the inability to express simple functional concepts in C ++ is a bit confusing. Haskell even allows you to use Unicode characters, so you can write the composition like this:

g ∘ f

You can even use double colons and arrows from Unicode:

f ∷ A → B

Here is the first Haskell lesson: a double colon means "has a type ..." A function type is created by writing an arrow between the two types. The composition of two functions is recorded by a dot between them (or a circle from Unicode).

Composition properties

There are two very important properties that a composition must satisfy in any category.

1. The composition is associative. If you have three morphisms, f, g, and h that can be composed (that is, their types are consistent with each other), you do not need parentheses to compose them. Mathematically, this is written like this:

h∘(g∘f) = (h∘g)∘f = h∘g∘f

In (pseudo) Haskell:

f :: A -> B

g :: B -> C

h :: C -> D

h . (g . f) == (h . g) . f == h . g . f

I said “pseudo” because the comparison is not defined for functions.

Associativity is pretty obvious when it comes to functions, but may not be so obvious in other categories.

2. For each object A there is an arrow that will be a unit of composition. This arrow is from an object to itself. To be a unit of composition - means that when composing a unit with any arrow that either starts on A or ends on A, respectively, the composition returns the same arrow. The unit arrow of an object A is called idA (unit on A). In mathematical notation, if f goes from A to B, then

f∘idA = f

and

idB∘f = f

When working with functions, the unit arrow is implemented as an identical function that simply returns its argument. The implementation is the same for each type, which means this function is universally polymorphic. In C ++, you can write it as a template:

template T id(T x) { return x; }

Translator's note: in a good way, an identical function should be defined as follows:

template auto id(T&& x) { return std::forward(x); }

Of course, nothing is so simple in C ++, because you must take into account not only what you pass but also how (that is, by value, by reference, by constant link, by move, and so on).

In Haskell, an identity function is part of a standard library (called Prelude). Here is her announcement and definition:

id :: a -> a

id x = x

You can see that the polymorphic functions in Haskell are very simple. In the declaration, you simply replace the concrete type with a type variable. It should be remembered that the names of specific types always begin with a capital letter, the names of type variables begin with a lowercase letter.

A Haskell function definition consists of a function name followed by formal parameters - there is only one: x. The body of the function follows the equal sign. This succinctness is often shocking for beginners, but you will quickly see that this is a great syntax. The definition of a function and its call are the main tools of functional programming, so their syntax is minimized. There are no brackets around the arguments, no commas between them (you will see this later when we define functions with several arguments).

The body of a function is always an expression - there are no stats. The result of the function is this expression - here, just x.

This concludes our second Haskell tutorial.

Conditions for composition with a single arrow can be written (again, on the pseudo-Haskell), like this:

f . id == f

id . f == f

You can ask yourself the question: why would anyone bother with an identical function - a function that does nothing? Again, why are we messing with the number zero? Zero is a symbol of emptiness. The ancient Romans did not have a zero in the calculus, and they built excellent roads and aqueducts, some of which have survived to this day.

Non-neutral values, such as zero or id, are extremely useful when working with character variables. That is why the Romans were not very good in algebra, while the Arabs and Persians, who were familiar with the concept of zero, were. An identity function is very convenient as an argument, or as a return value from, of a higher order function. Higher-order functions are what make symbolic function conversions possible. They are an algebra of functions.

To summarize: a category consists of objects and arrows (morphisms). Arrows can be arranged and composition is associative. Each object has a single arrow, which serves as a unit of composition.

Composition is the essence of programming

Functional programmers have a peculiar way of approaching problems. They start by asking very Zen-like questions. For example, when designing an interactive program, they will ask: what is interactivity? When implementing the game Life, they will probably think about the meaning of life. On this wave, I'm going to ask: what is programming? At the most basic level, programming is to tell the computer what to do. “Take the contents of the memory at address x and add it to the contents of the EAX register.” But, even when we program in assembler, the instructions that we give the computer are an expression of something more important. We solve a non-trivial problem (if it were trivial, we would not need the help of a computer). And how do we solve problems? We decompose large tasks into smaller ones. If the small ones are still too big, then we also lay them out, and so on. Finally, we write code that solves all the small problems. And here the essence of programming is manifested: we compose these pieces of code to create solutions to more serious problems. Decomposition would not make sense if we could not put the pieces together.

This process of hierarchical decomposition and recomposition is not imposed on us by a computer. It reflects the limitations of the human mind. Our brain can deal with only a few concepts at a time. In one of the most cited works in the field of psychology, “The magic number seven plus or minus two,” it is postulated that we can only hold 7 ± 2 “pieces” of information in our minds. The details of our understanding of short-term human memory may vary, but we know for sure that it is limited. The bottom line is that we are not able to handle the soup of objects or spaghetti code. We must structure the programs not because they are so pleasant to look at, but because otherwise our brains cannot process them. We often call a piece of code elegant or beautiful, but in fact we mean that it is easy to understand by our limited mind. An elegant code consists of pieces of exactly the right size for assimilation using our mental powers.

So which pieces are right for programming?

Their surface area should be less than their volume. (I love this analogy because the surface area of a geometric object grows in proportion to the square of its size — slower than the volume that grows in proportion to the cube of its size).

Surface area is the information we need in order to combine the pieces.

Volume is the information that is needed in order to realize them.

The idea is that as soon as a piece is implemented, we can forget about the details of its implementation and focus on how it interacts with other pieces. In object-oriented programming, a surface is a class declaration or its abstract interface. In functional programming, it is a function declaration. I simplify a little, but this is the essence of it.

Category theory is an extreme case in the sense that it actively prevents us from looking inside objects. An object in category theory is an abstract foggy entity. All you can know about it is how it relates to another object - how it is linked with them using arrows. This is how search engines rank websites by analyzing inbound and outbound links (unless they are tricky).Translator’s note: in fact, it’s not at all :) In object-oriented programming, an idealized object is visible only through an abstract interface (only a surface, without volume), with methods that play the role of arrows. As soon as you need to look at the implementation of an object to understand how to compose it with other objects, you have lost the value of OOP.

To be continued.

Category theory for programmers: a preface

Category: the essence of the composition

Types and functions

Categories, large and small

Categories Klesley

PS By the way, I can’t disregard that Bartosh will speak at a conference in Moscow in February on this topic: category theory for programmers .