How Docker helped us achieve the (almost) impossible



Ever since we started Iron.io, we have been trying to solve the problem of keeping our IronWorker containers up to date with new Linux runtimes and packages. For the past two years, IronWorker ran on the same unchanged runtime environment. Until a few weeks ago, we had not released separate language environments in production. From the very start of the service, we used a single container holding a set of language environments and binary packages: Ruby, Python, PHP, Java, .NET and other languages, as well as libraries such as ImageMagick, SoX and others.

This container and the strategy behind it grew obsolete, as did Ruby 1.9.1, Node 0.8, Mono 2 and the other old language versions that the stack used by default. Over time the problem became more acute: people adopted newer things but were forced to change their code to work with the older language versions.

Limited to one LXC container

IronWorker uses LXC containers to isolate resources and provide security while running tasks. LXC worked great as an execution component but fell short when it came to integrating with all the different environments needed to process tasks. We were at a dead end when it came to creating runtime environments. We could not simply update the versions in the existing container, or we would risk breaking the million or more tasks that run every day. (We tried that once, early in the life of the service, and it did not end well.)

We also could not keep several LXC containers with different language versions, since each one contains a full copy of the operating system and libraries (about 2 GB per image). In fact, this would work just fine in a PaaS environment such as Heroku, where processes run indefinitely and you can simply fetch the right container before starting the process. In that situation there would be a large custom image for each customer, but IronWorker is different.

IronWorker is a large multi-tenant task processing system where users add tasks to a queue, and these tasks are performed by thousands of processors. It can be used to offload the main execution thread by running work in the background, running scheduled tasks, continuously processing transactions and message streams, or performing parallel processing across a large number of cores. The advantage is that users get on-demand processing and very high concurrency without any effort.

Internally, the service works as follows: it takes a task from a set of queues, sets up a runtime on a specific VM, downloads the task code, and then starts the process. The nature of the service means that all machines are constantly used by all customers. We do not allocate a machine to specific applications or customers for a long period of time. Tasks are typically short-lived. Some run for just a few seconds or minutes, and the maximum run time is limited to sixty minutes.

LXC worked as it should, but we wondered how to update or add something to our existing container without breaking backward compatibility or using an insane amount of disk space. Our options seemed rather limited, so we put off the decision.

... and then Docker came

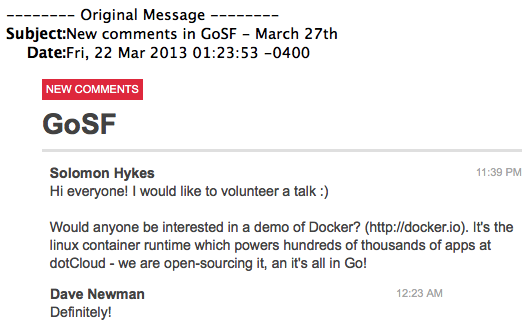

We first heard about Docker over a year ago. We help organize the GoSF meetup, and Solomon Hykes, the creator of Docker, attended the meetup in March 2013 and demonstrated his new Docker project, which was written in Go. In fact, he made it public that very day, so it was the first time anyone had seen it.

The demo was great, and the more than one hundred developers in the audience were impressed with what he and his team had built. (And on the spot, as one of his comments testifies, Solomon started a new development methodology called Shame Driven Development.)

“Alas, it was too raw,” we said at the time. “The project was not ready for production, but it really deserved praise.”

Solomon Hykes and Travis Reeder at the 2013 OpenStack Summit.

A month later, I met with Solomon at the OpenStack Summit in Portland to work together and see how we could use Docker to solve our problem. (I thought I would only go to one meeting, but instead we spent a lot of time working with Solomon and other developers).

I played with Docker, and Solomon helped me understand what it can do and how it works. It was not just a good project; it solved a difficult problem in a well-designed way. It also did not hurt that it was a new project written in Go without much technical debt, at least from my point of view.

Research and development

Before Docker, we tried various package managers, including trying to work with Nix. Nix is a great project and it has many good qualities, but unfortunately, this was not quite what we needed. Nix supports atomic updates, rollbacks and has a declarative approach to system configuration.

Unfortunately, it was difficult to maintain scripts for the various programs and libraries that we use in our images, and it was also difficult to add custom packages and programs. The effort needed to integrate our scripts, libraries and so on felt more like patching our system. We were looking for something else that could bring us closer to meeting our requirements.

At the outset, our requirements were:

Provide different versions of the same language (e.g. Ruby 1.9 and Ruby 2.1)

Have a safe way to update one part of the system without breaking other parts (for example, update only the Python libraries without touching the Ruby libraries)

Use a declarative approach to system configuration (simple scripts that describe what should be inside the image)

Create a simple way to perform updates and roll them back

In the process, it became clear that Docker offers several other advantages that we had not expected. These include:

Creating separate and isolated environments for each runtime / language.

Getting support for a copy-on-write (CoW) file system, which takes us to a more secure and efficient level of image management.

Getting a reliable way to switch between different runtime environments on the fly.

Working with Docker

Integrating Docker was not difficult, since we already used LXC. (Docker complements LXC with a high-level, process-level API. See the StackOverflow excerpt below.)

After we migrated our existing shell scripts to Dockerfiles and created the images, all we had to do to switch from using LXC directly was call “docker run” (instead of “lxc-execute”) and specify the ID of the image required for each task.

The command to launch the LXC image:

lxc-execute -n VM_NAME -f CONFIG_FILE COMMAND

The command to launch the Docker image:

docker run -i -name=VM_NAME worker:STACK_NAME COMMAND

It should be noted that we deviate somewhat from the recommended approaches for creating and installing containers.

The standard approach is to either build images at runtime from Dockerfiles, or store them in private or public repositories in the cloud. Instead, we build the images, take snapshots of them, and store the snapshots on EBS volumes attached to our systems. This is because the system must start very quickly. Building images at runtime was a bad option, and even pulling them from external storage would be too slow.
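To make the switch from lxc-execute to docker run more concrete, here is a minimal sketch of a helper that assembles the Docker command line for one task and prints it instead of running it. The function name and the sample values (task-42, ruby-2.1, worker.rb) are hypothetical and not taken from IronWorker. Note that the command shown in the article uses the single-dash -name spelling of the time, while current Docker releases spell the flag --name, which is what the sketch uses.

```shell
#!/bin/sh
# Hypothetical helper: print the docker command for one task (dry run).
# Arguments: VM_NAME STACK_NAME COMMAND [ARGS...]
build_run_command() {
    vm_name=$1
    stack_name=$2
    shift 2
    # worker:STACK_NAME follows the image naming shown in the article;
    # --name is the current spelling of the article's -name flag.
    printf 'docker run -i --name=%s worker:%s' "$vm_name" "$stack_name"
    # Append the task command and its arguments, one by one.
    for arg in "$@"; do
        printf ' %s' "$arg"
    done
    printf '\n'
}

# Sample values below are illustrative assumptions.
build_run_command task-42 ruby-2.1 ruby worker.rb
```

Printing the command rather than executing it keeps the sketch runnable on a machine without Docker. A real dispatcher would execute the command and would also need to quote arguments that contain spaces.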

Base Images Plus Diffs

Using Docker also solved the disk space problem, since each image is just a set of changes (a diff) from the base image. This means that we can have one base image containing the operating system and the Linux libraries that we use in all images, and use it as the basis for many other images. The size of a derived image includes only the size of its differences from the base image.

For example, if you install Ruby, the new image will contain only the files that were installed with Ruby. To make this less confusing, think of it as a Git repository containing all the files on the computer, where the base image is the master branch and all other images are branches created from the base image. This ability to layer differences and create images based on existing containers is very useful, because it allows us to constantly release new versions, add libraries and packages, and concentrate more on solving problems.
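A rough back-of-the-envelope calculation shows why the diff approach matters. The 2 GB image size and the count of 10 stacks come from this article. The 200 MB average layer size is purely an assumption for illustration, as is treating the shared base image as roughly 2 GB.

```shell
#!/bin/sh
base_mb=2048   # ~2 GB per full image (figure from the article)
stacks=10      # number of stacks mentioned in the article
diff_mb=200    # ASSUMPTION: average size of one language layer

# Every stack stored as a full, independent image.
full_copies_mb=$((stacks * base_mb))
# One shared base image plus one diff per stack.
layered_mb=$((base_mb + stacks * diff_mb))

echo "full copies:  ${full_copies_mb} MB"
echo "base + diffs: ${layered_mb} MB"
```

Under these assumptions the layered layout needs 4048 MB instead of 20480 MB, and each additional stack costs only the size of its own diff.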

Some problems

We ran into a few problems while creating and rolling out the new environments with Docker, but none of them were serious.

We had some difficulties with removing containers after a task had run. The container removal process sometimes failed, but we found a fairly clean solution.

When configuring some software components, we found that Docker does not correctly emulate some low-level functions, such as FUSE. As a result, we had to resort to some magic to get a correctly working Java image.

That's it. Our questions to the Docker developers mostly came down to a few fixes. As for new Docker functionality, what exists is enough for us. (We have not yet tried to add anything to it, since the existing feature set is quite extensive.)
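The article does not say how the container removal failures were resolved. One generic way to handle a cleanup step that fails intermittently is to retry it a few times, as in the sketch below. This is an illustration under that assumption, not the fix Iron.io used, and the retry function is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: run a command up to ATTEMPTS times until it succeeds.
# Usage: retry ATTEMPTS COMMAND [ARGS...]
retry() {
    attempts=$1
    shift
    i=1
    while [ "$i" -le "$attempts" ]; do
        # Stop as soon as the command succeeds.
        "$@" && return 0
        # Pause between attempts, but not after the last one.
        [ "$i" -lt "$attempts" ] && sleep 1
        i=$((i + 1))
    done
    return 1
}

# Example (requires Docker; VM_NAME is the placeholder used in the article):
# retry 3 docker rm VM_NAME
```

For short-lived tasks, docker run also accepts a --rm flag that asks Docker to remove the container automatically when it exits.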

LXC, Containers and Docker

LXC (LinuX Containers) is an operating system-level virtualization system that provides a safe way to isolate one or more processes from other processes running on the same Linux system. With containers, resources can be isolated, services are restricted, and processes get an isolated view of the operating system with its own file system structure and network interfaces. Several containers can share the same kernel, but each container can be limited to a certain amount of resources, such as CPU, memory, and I/O. As a result, applications, tasks, and other processes can be configured to run as several lightweight, isolated Linux instances on the same machine.

Docker is built on top of LXC and adds image and deployment management. Here is an answer by Solomon on StackOverflow about the differences and compatibility between LXC and Docker:

If you look at the features of Docker, most of them are already provided in LXC. So what does Docker add? Why should I use Docker and not a simple LXC?

Docker is not a replacement for LXC. “LXC” refers to the capabilities of the Linux kernel (in particular namespaces and control groups) that allow isolating processes from each other and controlling the distribution of their resources.

Docker offers a high-level tool with several powerful features on top of the low-level kernel functions.


Docker in production

Docker is the foundation of IronWorker's 'stacks'

We currently use Docker in production as part of the IronWorker service. You can choose one of 10 different “stacks” (containers) for your tasks by setting the “stack” parameter when uploading the code. If you think about it, this is a handy capability: you can specify the language version for a short-lived task that will run on any number of cores.

Using Docker for image management lets us update images without fear of damaging other parts of the system. In other words, we can update the Ruby 1.9 image without touching the Ruby 2.1 image. (Maintaining consistency is paramount in any large-scale system, especially when you support a large set of languages.)

We also have a more automated process for updating images using Dockerfiles, which allows us to deploy updates according to a predictable schedule. In addition, we have the ability to create custom images. They can be formed according to a specific version of the language and / or include certain frameworks and libraries.
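As an illustration of what updating each image independently from its own Dockerfile could look like, the sketch below prints one docker build command per stack. The stacks/<name>/ directory layout, the function name and the stack names are assumptions made for this example. The article does not describe the actual build tooling.

```shell
#!/bin/sh
# Hypothetical helper: print one `docker build` command per stack (dry run).
# ASSUMPTION: each stack keeps its Dockerfile in stacks/<name>/.
build_commands() {
    for stack in "$@"; do
        # Tag each image as worker:<stack>, matching the naming in the article.
        printf 'docker build -t worker:%s stacks/%s\n' "$stack" "$stack"
    done
}

# Rebuilding only the listed stacks leaves every other image untouched.
build_commands ruby-1.9 ruby-2.1
```

Because each stack is built from its own directory and tagged separately, rebuilding the Ruby 1.9 image does not change the Ruby 2.1 image.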

Looking to the future

The decision to use Docker in production was not an extremely risky step. A year ago, perhaps it was, but now it is a stable product. The fact that this is a new product is an advantage in our eyes. It has a minimal set of features and is built for large-scale and dynamic cloud environments like ours.

We got an inside look at Docker and got to know the people behind it, but even without that, Docker would have been a natural choice. There are a lot of pluses and almost no minuses.

As a word of advice, we suggest using ready-made Dockerfiles, scripts and publicly available images. There are many useful things to start with. In fact, we will probably make our own Dockerfiles and images publicly available, which means that people will be able to run their workers locally with ease, and we will also be able to accept pull requests to improve them.

Processing tens of thousands of hours of CPU time and millions of tasks every day in almost every language is not easy. Docker allowed us to solve some serious problems with little effort. This has increased our ability to innovate and created new opportunities for IronWorker. Just as importantly, it allows us to maintain and even exceed the service guarantees that we work hard to improve.

Docker has a great future, and we are pleased to have decided to include it in our technology stack.