Mixing favorite beats in Unity 5.0

Translation

Work on audio is one of the main focus areas in the preparation of Unity 5.0. Once it became clear which features we would have to sweat over, audio went onto our list of priorities.

To get everything running smoothly, we had to go back and focus on the foundations of audio in Unity. So that you could combine any number of good, high-quality sounds in your games, we had to settle on codecs and make sure the whole scheme would actually work. I will try to cover all the details in a separate, more detailed article, but for now I'll tell you about our first audio addition in Unity 5.0: the AudioMixer.

Step one

If you use Unity, your audio should never be bad, or even just so-so. It simply must be the best! That is why, in addition to a solid foundation to build on in the future, you get something more: a powerful new feature that proves we are not wasting time on trifles and are doing everything we can to arm you to the teeth in the war with sound.

In future releases, we will keep improving more and more areas of audio. Sometimes these will be minor changes, such as bug fixes to existing features that have already proven themselves. Others will be more substantial, such as the ability to create stunning, fully immersive interactive audio and to control the entire soundscape as a whole.

Why AudioMixer?

The answer to this question is simple: previously, no such feature existed. In the past, every sound was played through an AudioSource, to which you could add various effects as Components. All game sounds were then mixed together in the AudioListener to form the soundscape.

Our solution was AudioMixer. Rather than working blindly, we decided to go further and bring together many features found in well-known Digital Audio Workstation (DAW) applications.

Sound categories

Every sound engineer knows how much more convenient it is to work with sounds in categories than to process each sound individually. Grouping sounds together while you tune effect parameters in the context of the game gives you global control over the entire soundscape. And this is very important! It is perhaps the best way to create the mood you need and the right level of immersion. A good mix and a well-chosen track let the player feel the full range of emotions and create an atmosphere that graphics alone cannot.

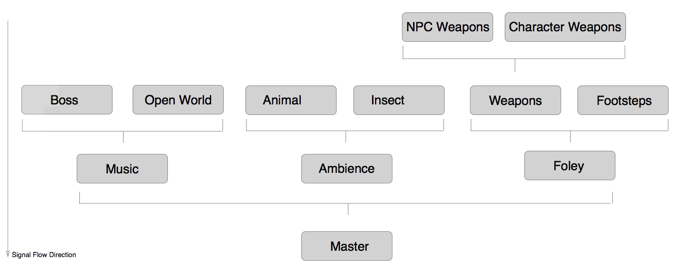

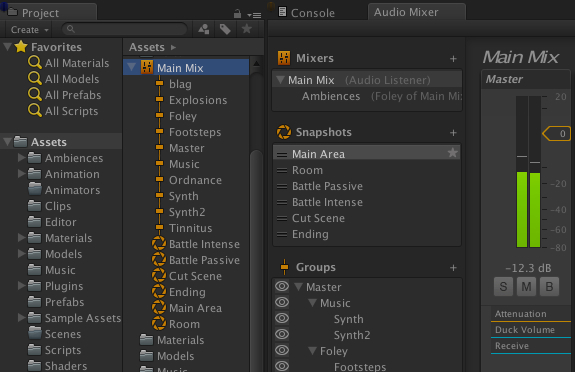

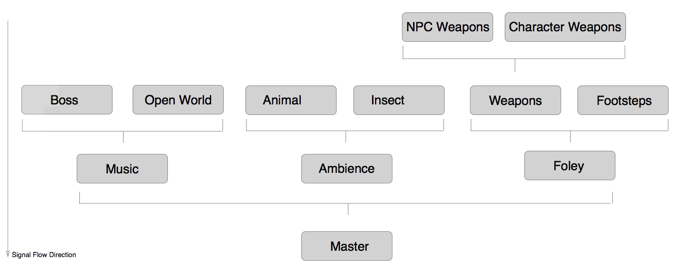

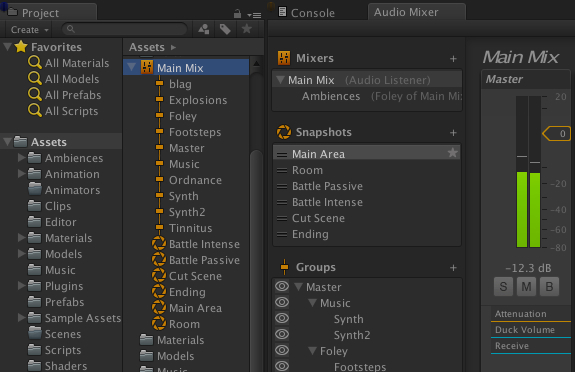

Mixing in Unity

This is the main goal of AudioMixer: it lets users control the full set of sounds in the game. All the sounds played in a scene are routed into one, two, or any number of AudioMixers. These sort the sounds into categories, where they can then be edited.

In each AudioMixer, you can build branching hierarchies of categories, which in our terminology are called AudioGroups. These AudioGroups are laid out in front of you as a classic mixing console, as is customary in the music and film industries.
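
The following minimal Python model makes the idea of a hierarchy of AudioGroups concrete. Each group sums its directly routed sources and the output of its child groups, applies its own volume, and hands the result to its parent. The `Group` class, its fields and the dB-based volume are illustrative assumptions, not Unity's API. In Unity itself you build this tree visually in the Audio Mixer window.

```python
from dataclasses import dataclass, field


def db_to_gain(db: float) -> float:
    """Convert a level in decibels to a linear amplitude factor."""
    return 10.0 ** (db / 20.0)


@dataclass
class Group:
    """Hypothetical model of an AudioGroup: a named bus with a volume in dB."""
    name: str
    volume_db: float = 0.0
    sources: list = field(default_factory=list)   # each source is a list of samples
    children: list = field(default_factory=list)  # nested Group objects

    def render(self, length: int) -> list:
        # Start from silence, then add every source routed straight into this group...
        out = [0.0] * length
        for src in self.sources:
            for i in range(length):
                out[i] += src[i]
        # ...and the already-mixed output of every child group.
        for child in self.children:
            sub = child.render(length)
            for i in range(length):
                out[i] += sub[i]
        # The group's volume scales the whole category at once.
        g = db_to_gain(self.volume_db)
        return [s * g for s in out]


# A tiny mixer: Master <- (SFX <- Weapons), Music
weapons = Group("Weapons", sources=[[0.5, 0.5, 0.5]])
sfx = Group("SFX", volume_db=-6.0, children=[weapons])
music = Group("Music", sources=[[0.2, 0.2, 0.2]])
master = Group("Master", children=[sfx, music])

print([round(s, 3) for s in master.render(3)])
```

Lowering `sfx.volume_db` affects every weapon sound beneath it without touching the music. This is the kind of category-level control described above.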

DSP

But that is not all! Each AudioGroup can contain any number of DSP audio effects, which are applied one after another as the signal passes through the AudioGroup.

This is already interesting, right? Now you can create custom routing schemes and apply mixing to individual categories. You can also place any DSP units you like along the entire signal path, add any effects, and enjoy unlimited power over the soundscape. You can even add an empty group just to process a specific part of the signal.
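
Applying effects "one after another as the signal passes through" a group amounts to composing functions over the sample buffer. The sketch below models an AudioGroup's insert chain as an ordered list of Python callables. The effects `gain` and `hard_clip` are hypothetical stand-ins for Unity's built-in DSP units, not real Unity calls.

```python
from typing import Callable, List

Buffer = List[float]
Effect = Callable[[Buffer], Buffer]


def gain(factor: float) -> Effect:
    """Hypothetical linear gain effect."""
    return lambda buf: [s * factor for s in buf]


def hard_clip(limit: float) -> Effect:
    """Hypothetical non-linear effect: clamps samples to [-limit, limit]."""
    return lambda buf: [max(-limit, min(limit, s)) for s in buf]


def run_chain(chain: List[Effect], buf: Buffer) -> Buffer:
    # Each effect receives the output of the previous one, in list order,
    # just as the signal passes through the inserts of a group.
    for effect in chain:
        buf = effect(buf)
    return buf


signal = [0.2, 0.6, -0.9]
print(run_chain([gain(2.0), hard_clip(1.0)], signal))  # [0.4, 1.0, -1.0]
print(run_chain([], signal))  # an empty chain passes the signal through unchanged
```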

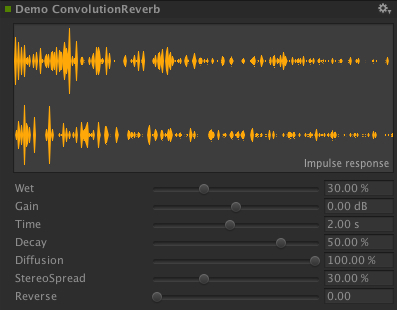

But what if Unity's built-in effects are not enough for you? Previously, the only way around this was the OnAudioFilterRead script callback, which let you process audio samples directly in your own scripts. That is enough for simple effects and for developing your own filters. But sometimes, to deepen immersion in the game, you may want to build the effects you need yourself, right? Now you can write complex effects such as a configurable convolution reverb or a multiband EQ.
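
Unity's OnAudioFilterRead callback gives your script a buffer of interleaved float samples together with the channel count. The Python class below is a language-neutral sketch of that style of processing. It applies a one-pole low-pass filter to an interleaved buffer in place and keeps per-channel state between calls. The class name and the `coeff` parameter are illustrative assumptions. In Unity you would write the equivalent logic in C# inside `OnAudioFilterRead(float[] data, int channels)`.

```python
class OnePoleLowPass:
    """Hypothetical one-pole low-pass filter for interleaved audio buffers.

    y[n] = y[n-1] + coeff * (x[n] - y[n-1]), with 0 < coeff <= 1.
    Smaller coeff values give a lower cutoff (more smoothing).
    """

    def __init__(self, coeff: float, channels: int):
        if not 0.0 < coeff <= 1.0:
            raise ValueError("coeff must be in (0, 1]")
        self.coeff = coeff
        self.state = [0.0] * channels  # last output sample per channel

    def process(self, data: list, channels: int) -> None:
        if channels != len(self.state):
            raise ValueError("channel count changed")
        # Samples are interleaved: L0, R0, L1, R1, ... for stereo.
        for i in range(len(data)):
            ch = i % channels
            y = self.state[ch] + self.coeff * (data[i] - self.state[ch])
            self.state[ch] = y
            data[i] = y  # write back in place, like modifying the callback's buffer


lp = OnePoleLowPass(coeff=0.5, channels=2)
buf = [1.0, 0.0, 1.0, 0.0, 1.0, 0.0]  # left channel steps to 1, right stays at 0
lp.process(buf, channels=2)
print(buf)  # [0.5, 0.0, 0.75, 0.0, 0.875, 0.0]
```

Because the filter state survives between calls, consecutive buffers join without clicks. A per-buffer callback needs this for any effect with memory.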

We are happy to announce that Unity now supports custom DSP effect plugins. This means users can write their own DSP effects, or even use the Asset Store to share creative ideas with others. A whole world of new possibilities opens up, such as building your own audio engine or controlling other audio applications like Pure Data. These custom DSP plugins can also request sidechain support and use signal information from any part of the mix! Super!

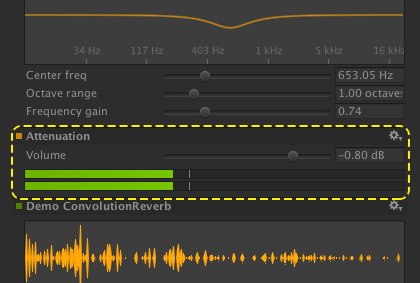

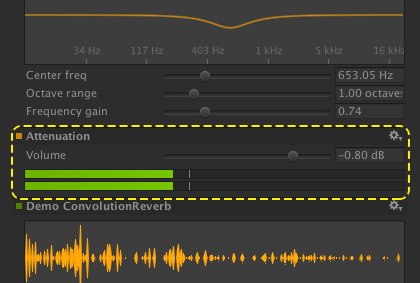

Another interesting innovation in an AudioGroup's effect chain is that you can place the fader (attenuation) wherever you want. You can even boost the signal up to +20 dB (we worked on this too). Use the built-in VU meters while testing to see how the signal changes as it is attenuated.
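
Why does the fader's position matter? Once the chain contains a non-linear effect, attenuating before it gives a different result from attenuating after it. The sketch below models the fader as just another movable insert. The tanh saturation effect and the function names are assumptions chosen for illustration. Only the +20 dB ceiling comes from the paragraph above.

```python
import math

MAX_FADER_DB = 20.0  # the signal can be boosted up to +20 dB


def fader(db: float):
    """Hypothetical fader insert: converts dB to a linear gain, capped at +20 dB."""
    g = 10.0 ** (min(db, MAX_FADER_DB) / 20.0)
    return lambda buf: [s * g for s in buf]


def saturate(buf):
    """Hypothetical non-linear effect: tanh soft clipping."""
    return [math.tanh(s) for s in buf]


def run_chain(chain, buf):
    # Apply the inserts in order, exactly as they appear in the chain.
    for effect in chain:
        buf = effect(buf)
    return buf


x = [0.8]
pre = run_chain([fader(-12.0), saturate], x)   # attenuate, then saturate
post = run_chain([saturate, fader(-12.0)], x)  # saturate, then attenuate
print(pre, post)  # the two orders give different levels
```

With a purely linear chain the two orders would match. Placing the fader before a saturator changes how hard the saturator is driven, not just the final level.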

Together with the non-linear DSP chain, Sends/Receives and our new Ducking feature (which I describe in detail later in this article), this gives you a very powerful tool for controlling how the audio signal flows through the mix.

The atmosphere in the game

I have already mentioned using the mix of the soundscape to control the atmosphere of the game. You can add or remove layers of music or background sounds, or play with the mood of the mix itself. For example, you can change the volume levels of different parts of the mix. For sound effects, you can switch from one set of parameter values to another, immersing the player in exactly the environment you need.

To that end, AudioMixer supports snapshots, which capture the state of all its parameters. You can save snapshots and transition between them for absolutely any parameter.

You can even combine saved snapshots in any way you like to create new, more complex states.

Imagine that you leave an open area of the map and enter a sinister cave. What do you hear there? You can use a transition between mixes to bring out other, subtler sounds, add new instruments to your ensemble, or change the character of the echo to suit the new sound design. And none of this requires writing a single line of new code.
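
In Unity, snapshots are edited in the Audio Mixer window. From C#, you can trigger them with `AudioMixerSnapshot.TransitionTo(timeToReach)` or blend several of them with `AudioMixer.TransitionToSnapshots(snapshots, weights, timeToReach)`. The Python sketch below only illustrates the underlying idea, under simplifying assumptions:

- a snapshot is a dictionary of parameter values;
- a transition interpolates every parameter linearly;
- a blend is a weighted average of several snapshots.

Real transitions need not be linear, and the parameter names are hypothetical.

```python
from typing import Dict, List

Snapshot = Dict[str, float]  # hypothetical: parameter name -> value


def transition(a: Snapshot, b: Snapshot, t: float) -> Snapshot:
    """State part-way (t clamped to [0, 1]) through a linear transition from a to b."""
    t = max(0.0, min(1.0, t))
    return {k: a[k] + (b[k] - a[k]) * t for k in a}


def blend(snapshots: List[Snapshot], weights: List[float]) -> Snapshot:
    """Weighted combination of several snapshots; weights are normalised to sum to 1."""
    total = sum(weights)
    if total <= 0.0:
        raise ValueError("weights must sum to a positive value")
    keys = snapshots[0].keys()
    return {k: sum(s[k] * w for s, w in zip(snapshots, weights)) / total for k in keys}


outdoors = {"music_db": 0.0, "wind_db": -6.0, "echo_wet": 0.1}
cave = {"music_db": -12.0, "wind_db": -40.0, "echo_wet": 0.8}

print(transition(outdoors, cave, 0.5))      # halfway into the cave
print(blend([outdoors, cave], [3.0, 1.0]))  # mostly outdoors, a little cave
```

Calling `transition` each frame with `t` growing from 0 to 1 over the desired time gives the walk into the cave. In this model the game code only picks snapshots and timings, and the mix itself lives in data.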

Multidirectional signals

Sends

In addition to inserting Unity's built-in DSP effects, you can insert a Send anywhere in the mix. A Send taps the signal at the point where you placed it, and you decide how much of that signal to send on.

What's more, the send level is part of the snapshot system, so you can combine changes in signal flow with transitions between snapshots. And off we go: the routing possibilities snowball from there.
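
A Send can be pictured as a tap. It copies the signal at its position in the chain, scales the copy by the send level, and leaves the main path untouched. The sketch below models this with a hypothetical `Send` insert. The names and the dB-to-gain conversion are assumptions for illustration, not Unity's implementation. Because the send level is just a number, it could be stored in a snapshot like any other parameter.

```python
class Send:
    """Hypothetical Send insert: passes audio through and taps a scaled copy."""

    def __init__(self, level_db: float):
        self.level_db = level_db
        self.tapped = []  # what this Send forwards to its destination

    def __call__(self, buf):
        g = 10.0 ** (self.level_db / 20.0)
        self.tapped = [s * g for s in buf]
        return buf  # the main signal path continues unchanged


def halve(buf):
    """Hypothetical effect placed after the Send in the same group."""
    return [s * 0.5 for s in buf]


send = Send(level_db=-6.0)
out = halve(send([1.0, -1.0]))
print(out)          # main path: [0.5, -0.5]
print(send.tapped)  # copy taken before 'halve', at roughly half amplitude
```

Moving the Send after `halve` in the chain would tap the already-halved signal. This is why the insertion point matters.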

But where does the tapped signal go? In the latest version of Unity, Sends work together with two other features: Receives and Volume Ducking.

Receives

Receives are fairly simple processing units. They are inserts just like all the other effects. A Receive collects the audio from every Send that targets it, mixes it together, and passes the result on to the next effect in the AudioGroup.

Just like effects and the fader in an AudioGroup, Receives can be inserted anywhere. This gives you tremendous freedom in deciding where the tapped signal re-enters the mix.
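
A Receive is little more than a summing point. In the hypothetical model below, each Send registers itself with a target Receive. When the Receive runs as an insert, it adds the mixed tapped audio to the signal already flowing through its group, then passes the sum on. Letting the group's own signal pass through is an assumption of this sketch. The caller must also process the sending groups before the receiving one, an ordering that a real mixer handles for you.

```python
class Receive:
    """Hypothetical Receive insert: mixes in audio from every Send targeting it."""

    def __init__(self):
        self.sends = []

    def __call__(self, buf):
        out = list(buf)  # assumption: the group's own signal passes through
        for send in self.sends:
            for i, s in enumerate(send.tapped):
                out[i] += s
        return out


class Send:
    """Hypothetical Send: taps its input at a linear gain and targets a Receive."""

    def __init__(self, gain: float, target: Receive):
        self.gain = gain
        self.tapped = []
        target.sends.append(self)

    def __call__(self, buf):
        self.tapped = [s * self.gain for s in buf]
        return buf


echo_return = Receive()
dialogue_send = Send(0.5, echo_return)
footsteps_send = Send(0.25, echo_return)

dialogue_send([1.0, 1.0])    # process the source groups first...
footsteps_send([2.0, 0.0])
print(echo_return([0.0, 0.0]))  # ...then the Receive: [1.0, 0.5]
```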

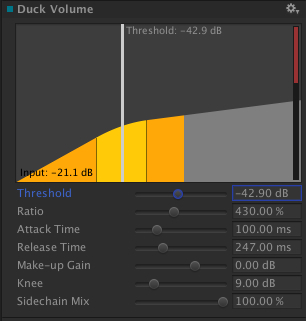

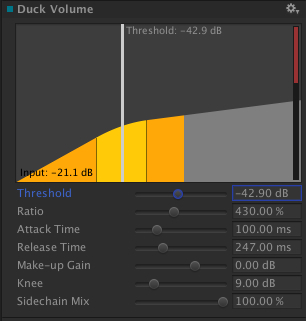

Volume ducking

Sends also interact with Volume Ducking inserts. Like Receives, these can be inserted anywhere in the mix alongside other DSP effects.

When a Send targets a Volume Ducking insert, it behaves much like a sidechain compressor. You can take the sidechain signal from anywhere in the mix and use it to attenuate the volume anywhere else!
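
The static behaviour of a ducker can be described with the usual compressor gain curve. When the sidechain level exceeds a threshold, the ducked group is attenuated according to the overshoot and the ratio. Unity's Duck Volume effect exposes parameters along these lines, such as threshold, ratio, attack and release. The function below is the textbook hard-knee curve, offered as an assumption rather than Unity's exact implementation.

```python
def duck_gain_db(sidechain_db: float, threshold_db: float, ratio: float) -> float:
    """Hard-knee gain change (in dB, <= 0) applied to the ducked group.

    Below the threshold nothing happens. Above it, an overshoot of d dB
    is squeezed to d / ratio dB, so the gain change is d / ratio - d.
    """
    if ratio < 1.0:
        raise ValueError("ratio must be >= 1")
    overshoot = sidechain_db - threshold_db
    if overshoot <= 0.0:
        return 0.0
    return overshoot / ratio - overshoot


print(duck_gain_db(-30.0, threshold_db=-20.0, ratio=4.0))  # 0.0: sidechain too quiet
print(duck_gain_db(-8.0, threshold_db=-20.0, ratio=4.0))   # -9.0 dB of ducking
```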

What does this mean for those who are new to the subject? Imagine that you are mixing sound for your shooter and you really want to stun the player with gunfire and explosions. That's reasonable, but consider this situation: you walk up to a non-player character on the battlefield and try to listen to his thoughtful muttering. And... you can't make out a thing. Volume Ducking lets you dynamically lower the volume of one part of the mix (in this case, the artillery) without affecting another part (the character's speech). Just add a Send to the AudioGroup that contains the character dialogue and point it at a Volume Ducking insert in the AudioGroup that contains the battlefield sounds.
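
The sketch below runs the shooter example over time with a simple block-by-block ducker:

1. It measures the dialogue level in each block.
2. It turns that level into a target gain reduction with a threshold/ratio curve.
3. It smooths the gain with separate attack and release coefficients.

As a result, the artillery dips quickly when speech starts and recovers gently afterwards. The block size, the coefficients and the per-block peak measurement are all illustrative assumptions, not Unity's Duck Volume internals.

```python
import math


def level_db(block):
    """Peak level of a block in dBFS; silence is floored at -120 dB."""
    peak = max((abs(s) for s in block), default=0.0)
    return 20.0 * math.log10(peak) if peak > 0.0 else -120.0


def duck(battlefield, dialogue, block=4, threshold_db=-30.0, ratio=4.0,
         attack=0.5, release=0.1):
    """Lower `battlefield` while the `dialogue` sidechain is above threshold.

    `attack` and `release` are per-block smoothing coefficients in (0, 1]:
    the fraction of the remaining distance to the target gain covered each block.
    """
    out = []
    gain_db = 0.0
    for start in range(0, len(battlefield), block):
        over = level_db(dialogue[start:start + block]) - threshold_db
        # Hard-knee curve: an overshoot of `over` dB is squeezed to over / ratio.
        target_db = (over / ratio - over) if over > 0.0 else 0.0
        # Move quickly when ducking deeper (attack), slowly when recovering (release).
        coeff = attack if target_db < gain_db else release
        gain_db += coeff * (target_db - gain_db)
        g = 10.0 ** (gain_db / 20.0)
        out.extend(s * g for s in battlefield[start:start + block])
    return out


battle = [0.9] * 16                         # constant artillery rumble
speech = [0.0] * 4 + [0.5] * 8 + [0.0] * 4  # the NPC talks in blocks 2 and 3
ducked = duck(battle, speech)
print([round(s, 3) for s in ducked[::4]])   # one value per block
```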

In the same way, in dynamic music you could use sidechain compression to compress other instruments in response to the bass track.

Best of all, you can do all of this in the editor without writing a single line of code!

Instead of a conclusion

Although I have only scratched the surface of AudioMixer's capabilities in this article, I hope it is enough to get people interested in working with audio in Unity 5.0.

From here on, we will keep bringing the future of game audio closer and give you the tools to create a first-class soundscape!

From the translator: as always, I welcome questions and criticism, as well as suggestions for future translations on Unity topics.

So that everything was on the ointment, we had to go back and place emphasis on those areas of sound that would be involved in Unity. So that you could combine any number of good, high-quality sounds in your games, we had to decide on the codecs and make sure that such a scheme would really work. I will try to describe all the nuances in a more detailed article, but for now I’ll tell you about our first sound add-on for Unity 5.0, AudioMixer.

Step one

If you use Unity, your sound cannot be bad. Or so-so. He simply must be the best! Because in addition to a solid foundation for working with him in the future, you have something else - a new super function that proves that we are not wasting over small things and are doing everything to arm you to the teeth in the war with sound.

In future releases, we will improve more and more areas of work with sound. Sometimes these will be minor changes, such as fixing bugs in the existing set of functions, which has already shown its best. Some will be more serious, such as the ability to create stunning interactive sounds with complete immersion and control the entire sound landscape as a whole.

Why AudioMixer?

The answer to this question is quite simple - before this function was not available. In the past, all sounds were played on AudioSource, where you could add various effects as Components, and then mix all the game sounds in AudioListener and incorporate them into the sound landscape.

As a solution, we chose AudioMixer, and in order not to poke our fingers at the sky, we decided to do more and put together a lot of functions that can be found in the well-known Digital Audio Workstation applications.

Sound categories

Every sound engineer knows how convenient it is to work with sounds categorized instead of processing each sound individually. The ability to collect all the sounds together while working on the parameters of sound effects in the context of the game provides global control over the entire sound landscape. And this is very important! Perhaps this is the best way to create the mood you need and the level of immersion in the game. A good sound mix and a suitable track will allow the player to feel the full range of emotions, and create an atmosphere that the graphics alone are not capable of.

Mixing in Unity

This is the main goal of AudioMixer - with its help, users can exercise control over the set of all sounds in the game. Thus, all the sounds that are played in the scene are included in one, two, any number of AudioMixers, which break them down into categories and then edit them.

In each AudioMixer, you can create branched category systems, which in our context are called AudioGroup. Before your eyes you will have a selection of these same AudioGroups and a classic mixing panel, as is customary in the music and film industry.

DSP

But that is not all! Each AudioGroup can contain a bunch of different DSP audio effects that are activated one after the other as the signal passes through the AudioGroup.

But that is not all! Each AudioGroup can contain a bunch of different DSP audio effects that are activated one after the other as the signal passes through the AudioGroup. This is already interesting, right? Now you can not only create custom trace schemes and apply mixing to individual categories. You can distribute any kind of cool DSP pieces along the entire length of the signal, add any effects and enjoy unlimited power over the sound landscape. You can even add an empty track to process only a specific part of the signal.

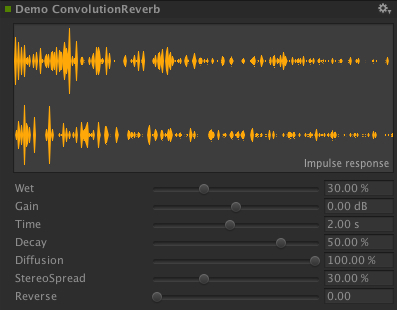

But what if the built-in effects of Unity are not enough for you? Previously, this was solved by returning the OnAudioFilterRead script and only that allowed you to directly process the audio samples in your scripts yourself. This is enough for simple effects and the development of your own filters. But sometimes, to increase your immersion in the game, you may want to compile the desired effects yourself, right? From now on, you can prescribe complex effects such as tuning echo convolution or multi-band EQ.

We are glad to make you happy, now Unity supports custom effects of the DSP plugin. This means that users can write their own DSPs, or maybe even use the Asset Store to exchange super creative ideas with others. A whole world of new possibilities opens up before you, such as creating your own engine or managing other sound applications like Pure Data. These custom DSP plugins can request sidechain support and use the right information from any part of the mix! Super!

One of the interesting innovations when working with a set of effects in AudioGroup is that you can use fading wherever you want. You can even bring the signal to + 20dB (we worked on this as well). Use the built-in VU meter in test mode to see how the signal changes when it attenuates.

Together with the non-linear DSP, Sends / Receives and our new Ducking chip (which I will describe in detail later in the article), you get a super powerful tool for controlling the audio signal through the mix.

The atmosphere in the game

I already mentioned the use of sound landscape mixes to control the atmosphere in the game: you can add or remove new components of music or create background sounds. Or play with the mood of the mix itself. For example, to change the volume level in different segments or, when it comes to working on sound effects, switch from one state parameter to another, immersing the player in the environment you need.

Speaking frankly, AudioMixer has the ability to recognize snapshots that capture the state of all parameters. Here you can save and perform transitions between absolutely any parameters.

You can even use saved snapshots in any order, creating new complex states, and apply them as you like.

Imagine that you are leaving an open area on a map and entering a sinister cave. What do you hear there? You can use the transition between mixes to emphasize other, subtle sounds, or include new instruments in your ensemble and change the characteristics of the echo in a new noise design. Imagine that for all this you do not need to write a single line of new code.

Multidirectional signals

Sends

In addition to inserting DSP effects already available in Unity, you can insert “Send” into any part of the mix. Thus, you can send a sound signal to where you left “Send” and decide how much signal you want to use.

Further more! Given that the signal level used for branching is part of the snapshot system, you might want to consider how to combine changes in signal flows and transitions between snapshots. And away we go ... potential installation opportunities are expanding like a snowball.

But where does the branch signal go? In the latest version of Unity, Send is used in conjunction with two other functions: Receives and Volume Ducking.

Receives

... fairly simple processing elements. These are the same inserts as all other effects, and they just collect all the branched audio from all the Sends that previously selected and mixed them, and they already transmit all this to the next effect in the AudioGroup.

Of course, when it comes to effect groups or the fade point in an AudioGroup, you can insert Receives anywhere. This gives you tremendous freedom of action in terms of when to use the tap signal in the mix.

Volume ducking

Among other things, Sends interact with Volume Ducking inserts. As with Receives, these elements can be inserted in parallel with other DSP effects anywhere in the mix.

What does this mean for those who are not in the subject? Imagine that you are busy mixing sounds for your shooter and you really want to stun the player with the sounds of gunfire and explosions. Well, that’s reasonable, but what about this situation: here you go up to a non-playable character on the battlefield and try to listen to his thoughtful muttering. And ... you can't make out anything. Volume Ducking allows you to dynamically lower the volume of individual segments of the mix (in this case, artillery sounds), completely and completely, without completely affecting other segments (character conversation). Just use Send from the AudioGroup, which contains the character dialogue, and redirect it to Volume Ducking in the AudioGroup containing the sounds of the battlefield.

For global tuning of all effects, you could use sidechain compression in dynamic mode to compress other instruments separately from the bass track.

Well, the cool thing is that you can do it all in the editor without creating a single line of code!

Instead of a conclusion

And although I only superficially outlined the capabilities of AudioMixer in this article, I hope this is enough to interest people in working with sound in Unity 5.0.

From now on, we will bring the future of sound processing in games closer and provide you with a set of tools to create a first-class sound landscape!

From a translator: I am still happy with questions and criticism, as well as suggestions on translation topics about Unity