How and why to protect Internet access at the enterprise - part 1

Today we will take a cynical look at a good old task: protecting employees and their workstations when they access Internet resources. In this article we consider common myths, modern threats, and the typical requirements organizations have for protecting web traffic.

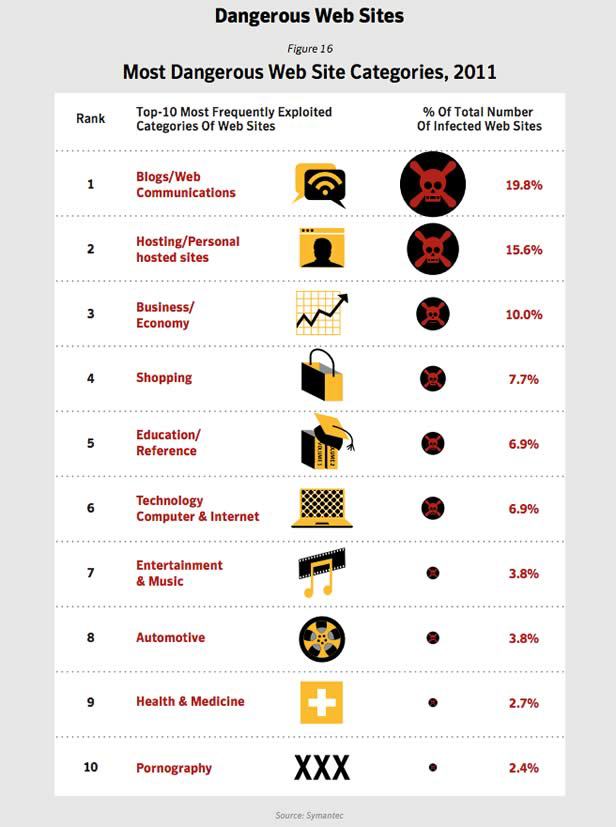

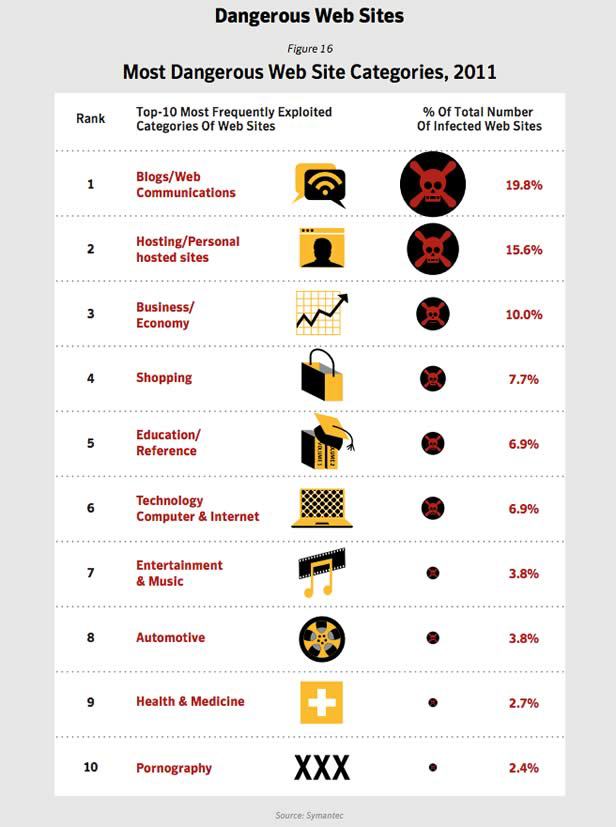

If you remember only one fact from this article, let it be this: you are about ten times less likely to catch malware on an adult website than on an ordinary Internet resource.

Today, most Russian companies already provide employees with Internet access from work computers. Usually, state organizations, law enforcement agencies and companies processing a large amount of personal data lag behind in this regard. But even in such organizations, there are always separate network segments or workstations connected to the Internet.

Not providing Internet access to employees has long been considered bad form. About a third of candidates will simply not join you once they find out that you offer no Internet access or only very limited access, since they consider it as necessary as clean water and ventilation. This is especially true for the current generation of 20-25 year olds, who have been used since school to finding any information quickly in a search engine and to checking social network updates at least every half hour.

Enough introduction; on to the myths.

Myth #1. If you don't visit adult sites, everything will be fine

Not at all. According to Symantec's Internet Security Threat Report, only 2.4% of adult sites distribute malware, which is several times less than blogs, news portals and online stores.

It is enough to recall the compromised sites of the New York Times, NBC, Russian Railways, the Vedomosti portal, the Georgian government, TechCrunch Europe and others, whose pages were used to spread viruses and carry out phishing attacks.

Besides, you as a responsible employee can make a firm decision not to visit dubious websites, but can your colleagues? Will they resist the temptation to click the link in a "Nigerian letter" or a banner announcing a prize of 100.5 million dollars?

Myth #2. Even if I land on an infected site, the antivirus will save me

There is a chance, but it is very small. Before release, malware authors verify that their creations are not detected by current versions of antiviruses. After antivirus analysts discover the first samples of the malware, it takes from 6 to 24 hours to research it and develop a signature. How fast signatures and patches for vulnerable software are distributed depends entirely on your infrastructure, but it usually takes days or weeks. All this time, users are vulnerable.

Myth #3. A host protection product can filter sites by category and risk, and that is enough

Note that the anti-virus vendors themselves offer dedicated solutions for protecting web traffic. Host-based products may offer basic filtering of websites by category and risk, but they cannot afford to store a database of malicious sites locally and update it dynamically without sacrificing performance. The host-based approach is also unsuitable for companies where not all workstations are joined to the domain and/or centrally managed.

Myth #4. If you pick up a virus, nothing bad will happen

Modern malware is actually more humane than its counterparts from the 90s: most often it tries to affect the victim as little as possible while regularly receiving commands from its master, sending spam, attacking websites and stealing your online banking passwords. Alongside it there are more aggressive types: crypto-lockers that encrypt the entire contents of the hard drive and demand a ransom, “porn banners” that display obscene images and demand that you send very expensive SMS messages, network worms that take down the network, not to mention targeted attacks.

Imagine that all this can happen to any computer or server in your organization. You will agree that it is unpleasant to lose, or leak, all the data from the computer of a lawyer, chief accountant or director. Even the downtime of individual nodes of your infrastructure while a workstation is being disinfected or reinstalled can damage the business.

I hope the picture is now bleak enough and it is time to move on to the typical tasks and requirements for an ideal web traffic protection solution. For now I intentionally avoid naming products or devices, since the problem can be solved in various ways.

Requirements for Technical Solutions

Reputation filtering of Internet resources

The solution should be able to block access to known malicious websites or their individual sections. Risk information should be pulled from a cloud analytics center and updated dynamically every few minutes. If a site has appeared only recently, the solution should be able to analyze its content on its own and decide how dangerous it is.

The administrator only needs to set the acceptable level of risk and, for example, block all sites with a reputation of less than six on a scale from -10 to +10.

Not only whole sites but also their subsections and individual page elements should be classified by category and reputation. For example, the most popular weather forecast website carries links to dubious resources offering to help you lose weight by giving up just one food, or to reveal how Pugacheva gave birth to twins. In this case, employees should keep access to the main site while the questionable parts of the page are blocked.
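The threshold rule described above can be sketched roughly as follows. This is a minimal illustration in Python, not any vendor's implementation: the function name `reputation_verdict`, the "analyze" outcome for sites that have no score yet, and the way the score reaches the function are all assumptions made for the example.

```python
from typing import Optional

# Reputation scale used in the article: -10 (worst) to +10 (best).
MIN_SCORE, MAX_SCORE = -10.0, 10.0


def reputation_verdict(score: Optional[float], threshold: float) -> str:
    """Return "block", "allow" or "analyze" for a site reputation score.

    score:     reputation received from the (assumed) cloud analytics feed,
               or None when the site is too new to have one.
    threshold: minimum acceptable reputation chosen by the administrator.
    """
    if score is None:
        # No reputation yet: hand the content over for local analysis.
        return "analyze"
    if not MIN_SCORE <= score <= MAX_SCORE:
        raise ValueError(f"score {score} is outside the -10..+10 scale")
    return "block" if score < threshold else "allow"
```

With the threshold from the example above, `reputation_verdict(5.9, 6.0)` blocks the site, while a site without a score is passed on for content analysis instead of being allowed or blocked blindly.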

Filtering Internet resources by category (URL filtering)

It should be possible to block access to certain categories of sites, for example, file sharing, online casinos and hacker forums. Category information should also be pulled from the cloud analytics center. The more categories the device understands and the more accurately they are identified, the better.

It is also worth paying attention to how quickly the analytics center responds to your requests to recategorize websites. Sites that your employees use for business purposes may be wrongly placed in a blocked category. The reverse is also possible: a site that should be blocked is not assigned to a blocked category. These problems can be solved manually by adding individual resources to a white list or a black list, but this approach does not scale if you have to do it every day, let alone on several devices.
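The interplay between cloud categories and manual overrides can be illustrated with a short Python sketch. Everything here is hypothetical: the function name, the "uncategorized" fallback and the rule that local lists take precedence over the cloud category are assumptions for the example, not a description of a particular product.

```python
from typing import Dict, Set


def category_verdict(
    host: str,
    categories: Dict[str, str],   # host -> category, as if pulled from the cloud
    blocked_categories: Set[str],
    allow_list: Set[str],         # manual "white list"
    deny_list: Set[str],          # manual "black list"
) -> str:
    """Decide "allow" or "block" for a host name."""
    host = host.lower().rstrip(".")
    # Manual overrides win over the cloud category in both directions.
    if host in allow_list:
        return "allow"
    if host in deny_list:
        return "block"
    category = categories.get(host, "uncategorized")
    return "block" if category in blocked_categories else "allow"
```

A business partner's site that was miscategorized as file sharing can then be unblocked by adding it to `allow_list` while the recategorization request is pending.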

Special attention should be paid to support for the Cyrillic alphabet and the correct classification of Russian-language sites. As a rule, Western vendors do not give them due attention. Fortunately, divisions of Ironport, a developer of web traffic protection solutions that was acquired by Cisco, are located in Ukraine, so the problems mentioned above do not arise.

Scanning downloaded files

It should be possible to scan potentially dangerous files with anti-virus engines before handing them to end users. Having several such engines slightly increases the chances of detecting malware. You also implement the principle of layered defense and reduce the chances of infection when the antivirus on the end host is disabled, out of date or simply absent.

The state of the art in analyzing potential malware is the Advanced Malware Protection (AMP) engine, or its analogues, for protection against targeted attacks. In such attacks the malicious files are crafted specifically for a handful of organizations, are not widespread on the Internet and, as a rule, have not yet fallen into the traps of anti-virus vendors. The Sourcefire VRT and Cisco SIO analytics centers check whether this particular file has already been seen in an attack on another organization and, if not, test it in a sandbox and analyze the actions it performs. We wrote about AMP earlier on Habr.

It will also be useful to filter files by extension and header, banning executable files, encrypted archives, audio and video files, .torrent files, magnet links, etc.
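Filtering by extension alone is easy to bypass by renaming a file, which is why the header matters as well. A minimal Python sketch of the idea is shown below. The blocked extensions and blocked types are a hypothetical policy chosen for the example; the leading byte sequences are the well-known signatures of Windows executables (`MZ`), ELF binaries and ZIP archives.

```python
import os
from typing import Optional

# Hypothetical policy: extensions the administrator decided to ban.
BLOCKED_EXTENSIONS = {".exe", ".torrent", ".mp3"}

# Well-known leading bytes ("magic numbers") of a few file formats.
MAGIC_SIGNATURES = {
    b"MZ": "windows-executable",
    b"\x7fELF": "elf-executable",
    b"PK\x03\x04": "zip-archive",
}

BLOCKED_TYPES = frozenset({"windows-executable", "elf-executable"})


def sniff_type(head: bytes) -> Optional[str]:
    """Guess the file type from its first bytes, or None if unknown."""
    for magic, name in MAGIC_SIGNATURES.items():
        if head.startswith(magic):
            return name
    return None


def should_block(filename: str, head: bytes) -> bool:
    """Block by extension first, then by what the header says the file is."""
    extension = os.path.splitext(filename)[1].lower()
    if extension in BLOCKED_EXTENSIONS:
        return True
    return sniff_type(head) in BLOCKED_TYPES
```

In this sketch an executable renamed to `invoice.pdf` is still blocked because its content starts with `MZ`, while an ordinary ZIP archive passes. A real product would recognize far more formats and would also inspect archive contents.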

Understanding applications and their components

Traditional access control lists on firewalls offer hardly any protection anymore. Ten or more years ago one could be relatively confident that TCP 80 and 443 were used only for accessing the Internet through a web browser, and TCP 25 for sending e-mail. Today Skype, Dropbox, TeamViewer, torrent clients and thousands of other applications work over HTTP and port 80. English-speaking colleagues call this situation “HTTP is the new TCP”. Many of these applications can be used to transfer sensitive files, stream video, and even control workstations remotely. Naturally, these are not the kinds of activity we are pleased to see in a corporate network.

Here a solution that restricts the use of applications and their individual components can help. For example, allow Skype and Facebook, but prohibit file transfers and video calls. It will also be useful to prohibit, as a class, all applications for p2p file sharing, anonymizers and remote management utilities.

Applications are identified by “application signatures”, which are updated automatically from the manufacturer's website. A huge plus is the ability to create “application signatures” yourself or to download them from an open community site. Many manufacturers develop “application signatures” only in-house, and often cannot keep up with updates of Russian applications or simply ignore them. Naturally, they are unlikely to develop signatures for industry-specific applications or for applications you have developed yourself.

Detecting infected hosts by their established connections

As practice shows, many computers can remain members of botnets for several years. The arms race has reached such a level that malware not only pulls updates of itself from the control center, but also patches the holes in the OS and applications through which it got onto the computer, to keep competitors out. Not all anti-virus solutions can establish the fact of infection, given that such malware works at a very low level and hides its processes, its connections and its very existence from the antivirus.

Here solutions that analyze traffic from workstations to botnet control centers on the Internet come to our aid. The main detection method is to monitor connections to servers on “black lists”: already known botnet control centers, “dark” zones of the Internet, etc.

If this is a targeted attack or an unknown botnet, detection relies on behavioral analysis. For example, a “phone home” session between a zombie and its master can be recognized by encrypted content, a small amount of transmitted data and a long connection time.
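The three behavioral signs listed above can be combined into a toy heuristic. The Python sketch below is purely illustrative: the `Session` fields, the function name and both thresholds are assumptions made for the example, and real products use far richer models than a single rule.

```python
from dataclasses import dataclass


@dataclass
class Session:
    """Summary of one outbound connection (hypothetical fields)."""
    encrypted: bool
    bytes_transferred: int
    duration_seconds: float


def looks_like_phone_home(
    session: Session,
    max_bytes: int = 50_000,        # assumed: "a small amount of data"
    min_duration: float = 3600.0,   # assumed: "a long connection time"
) -> bool:
    """Flag sessions that are encrypted, long-lived and nearly silent."""
    return (
        session.encrypted
        and session.bytes_transferred <= max_bytes
        and session.duration_seconds >= min_duration
    )
```

A rule this simple will also flag legitimate long-lived connections such as idle chat clients, so in practice a match is better treated as a reason to investigate the host than as a verdict.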

Flexible access control policies

Almost all modern security products can assign policies based not only on IP addresses, but also on user accounts in AD and their membership in AD groups. Consider an example of a simple policy:

- Internet access is available to all domain users from computers included in the domain and corporate tablets

  - With the exception of users in the domain groups “Call Center Operators” and “Without Internet Access”

  - At a rate of no more than 512 Kbps per employee

- All employees are denied access to the categories "Online Casino", "Job Search" and "Adult Sites"

  - With the exception of the Leadership group

- All employees are denied access to sites with a reputation of less than six

- The use of social networks and streaming video services is prohibited for all employees, with the exception of the group “marketing and public relations department”

  - Video viewing is limited in speed to 128 Kbps

- It is forbidden to use all game applications, with the exception of a game created by the company

- It is forbidden to use torrent clients, Internet messengers and utilities for remote administration

- Scan downloaded files with two of the three anti-virus engines, except for .AVI files

- Deny downloading .mp3 files and encrypted archives
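Part of the policy above, the category rules with their group exceptions, can be expressed as data plus a small evaluation function. This Python sketch is only one possible way to model it: the class and function names are hypothetical, group membership is passed in as a plain set instead of being looked up in AD, and the rate limits, reputation rule and file rules are left out.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable


@dataclass(frozen=True)
class CategoryRule:
    """A denied category and the groups exempt from the denial."""
    category: str
    exempt_groups: FrozenSet[str] = frozenset()


# Groups that get no Internet access at all (taken from the example policy).
NO_ACCESS_GROUPS = frozenset({"Call Center Operators", "Without Internet Access"})

RULES = [
    CategoryRule("Online Casino", frozenset({"Leadership"})),
    CategoryRule("Job Search", frozenset({"Leadership"})),
    CategoryRule("Adult Sites", frozenset({"Leadership"})),
    CategoryRule(
        "Social Networks",
        frozenset({"Marketing and Public Relations Department"}),
    ),
]


def is_allowed(user_groups: Iterable[str], category: str) -> bool:
    """Return True if a user with these groups may open this category."""
    groups = frozenset(user_groups)
    if groups & NO_ACCESS_GROUPS:
        return False
    for rule in RULES:
        if rule.category == category and not (groups & rule.exempt_groups):
            return False
    return True
```

Keeping the rules as data makes the exceptions visible in one place, which helps when the list of privileged groups starts to grow.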

As practice shows, many companies face organizational obstacles that keep them from restricting access to Internet resources right away. A formalized policy is either absent or, in practice, riddled with exceptions for privileged employees and their friends. In such cases it is worth following the 80/20 principle and starting with minimal restrictions, for example, blocking sites with the highest level of risk, certain categories, and sites that are obviously unrelated to work duties. It also helps to install the solution in monitoring mode and give company management reports on the use of Internet resources.

Interception and verification of SSL traffic

Many mail services and social networks already encrypt their traffic by default, which makes it impossible to analyze the transmitted information. According to research, encrypted SSL traffic accounted for 25-35% of all transmitted data in 2013, and its share will only grow.

Fortunately, SSL traffic from a user to Internet resources can be decrypted. To do this, the device presents its own certificate in place of the server's and terminates the user's connection on itself. After the user's requests have been analyzed and recognized as legitimate, the device establishes a new encrypted connection to the web server on its own behalf.

Traffic can be decrypted either on the same node that performs analysis and filtering or on a dedicated specialized device. When all tasks run on a single node, performance naturally drops, sometimes severalfold.

Additional requirements

For a web traffic protection project to get off the ground, it is extremely useful to show management benefits that are not directly related to security as well:

- Traffic quotas by time and user, for example, no more than 1 GB of traffic per month or no more than two hours on the Internet per day

- Bandwidth limits for individual sites, their parts or applications, for example, 100 Kbps when downloading streaming video or audio

- Web traffic caching, which both speeds up the loading of popular sites and saves traffic when the same files are downloaded repeatedly

- Channel shaping: uniform bandwidth allocation and prioritization among all users
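As an illustration of the first item, a per-user monthly traffic quota can be tracked with a few lines of Python. This is a sketch under stated assumptions: the class name is hypothetical, 1 GB is taken as 1024³ bytes, the month is passed in as a string such as "2014-03", and counters live in memory only.

```python
from collections import defaultdict

GIB = 1024 ** 3  # assumed meaning of "1 GB" for this example


class MonthlyQuota:
    """Track traffic per (user, month) against a fixed byte limit."""

    def __init__(self, limit_bytes: int = GIB) -> None:
        self.limit_bytes = limit_bytes
        self._used = defaultdict(int)  # (user, month) -> bytes consumed

    def try_consume(self, user: str, month: str, nbytes: int) -> bool:
        """Record the transfer and return True, or refuse if it exceeds the quota."""
        key = (user, month)
        if self._used[key] + nbytes > self.limit_bytes:
            return False
        self._used[key] += nbytes
        return True

    def remaining(self, user: str, month: str) -> int:
        """Bytes the user can still transfer this month."""
        return self.limit_bytes - self._used[(user, month)]
```

Because the month is part of the key, counters reset implicitly when a new month begins; a real deployment would persist them and share them between devices.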

It is also important to understand whether the solution in question suits your organization. Here it is worth paying attention to:

- Single sign on - transparent user authorization without additional login / password, including for smartphones and tablets

- Support for several ways to integrate into your existing infrastructure

- The ability to install solutions in the form of specialized hardware devices or virtual machines

- Centralized management of both the devices themselves and their policies

- Centralized reporting on all devices with the ability to create custom reports

- The ability to delegate the administration of individual regions, features, or policies to other administrators

- Solution performance

- Built-in functionality for connection logging and data leak prevention (DLP), or the ability to integrate with external DLP solutions

- Possibility of integration with SIEM systems

- Licensing model: per user, device, IP address or bandwidth

- The cost of renewing annual subscriptions

Naturally, it is impossible to talk about everything in one article, and here are some topics that were not covered:

- Checking domain membership, antivirus and OS updates before providing Internet access

- How to protect employees who are more often on business trips than in the office

- What difficulties arise when protecting the web traffic of workstations with shift workers, virtual workstations (VDI), tablets and smartphones

- When is it cheaper and more convenient to use a cloud service to filter web traffic

- Is it worth trying to implement similar functionality based on Squid and other open source solutions

The next article will describe which Cisco solutions can be used to solve the tasks above, and how.

I hope the article was useful to you. I will be glad to see additions and suggestions for new topics in the comments.

Stay tuned;)

Link to the continuation of the article