An amateur's approach to computational linguistics

With this post I want to draw attention to an interesting area of applied programming that has been booming in recent years: computational linguistics, and specifically systems capable of parsing and understanding Russian text. I want to shift the focus away from academic and industrial systems, in which tens of thousands of man-hours are invested, and toward the ways amateurs can succeed in this field.

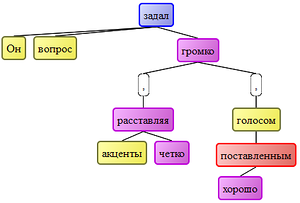

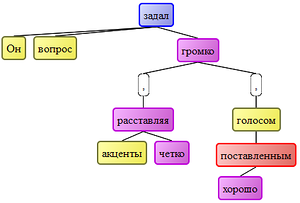

To succeed, we need several components: the ability to split text into words and lemmas, the ability to perform morphological and syntactic analysis, and, most interestingly, the ability to search for meaning in a text.

Text parsing

The Russian-language Internet offers syntax engines that are free for non-commercial use and that handle morphological and syntactic analysis well. I want to mention two of them.

The first, which I use myself with great benefit, is the SDK of the grammar dictionary of the Russian language.

The second is the Tomita parser from Yandex.

Both include a dictionary, a thesaurus, and parsing functions, and both have a fairly low barrier to entry.

Syntax engines let you turn any text into a machine-friendly format. This can be a parse tree, which lets you trace the relationships between the words in a phrase, for example the phrase “He asked the question out loud, clearly emphasizing, with a well-posed voice.”

The output can also be simpler: just a vector of indices of the normal forms of the words.
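The index-vector format can be pictured with a minimal sketch. It does not use either of the engines mentioned above: the `LEMMAS` table, the `to_index_vector` function and the vocabulary layout are all hypothetical, and a real engine would supply the lemmatization.

```python
# Toy lemma table: surface form -> normal form.
# Hypothetical data; a real syntax engine would provide this mapping.
LEMMAS = {
    "cucumbers": "cucumber",
    "are": "be",
    "was": "be",
    "asked": "ask",
}


def to_index_vector(text, lemmas, vocab):
    """Convert text into a list of integer indices of normal forms.

    `vocab` maps each normal form to its index and is extended in place
    whenever a new normal form is seen.
    """
    vector = []
    for token in text.lower().split():
        word = token.strip(".,!?;:\"'")
        if not word:
            continue
        lemma = lemmas.get(word, word)  # unknown words are their own lemma
        index = vocab.setdefault(lemma, len(vocab))
        vector.append(index)
    return vector


vocab = {}
print(to_index_vector("The cucumbers are green. The cucumber was green.", LEMMAS, vocab))
print(vocab)
```

Different surface forms of the same word end up with the same index, which is what makes the vector convenient for collecting statistics later.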

Building your own semantic network

Unfortunately, no semantic networks on the scale of Freebase (1.9 billion connections) currently exist for Russian, and the Russian segment of DBpedia is much poorer than the English one. Commercial sources, such as the manually verified network used by Compreno from ABBYY, are not available to amateurs either. So the network has to be built independently.

For this we need dictionaries, as well as grammatical and semantic connections between words.

Dictionaries

I used two main sources: Wiktionary and a set of dictionaries from the Academician site.

Wiktionary gives us the semantic properties of most common words and links each word to related words. How this can be used is described later. The Academician site provides dictionaries of synonyms and antonyms, which complement Wiktionary perfectly.

Semantic links

This is harder. Semantic links are defined in thesauri, including the RuThes thesaurus, which was recently opened to the public, but all thesauri share a common problem: limited coverage. Too few words, too few links. The connections between words can therefore be accumulated independently, by collecting statistics on grammatically agreeing and non-agreeing n-grams from fiction libraries and news feeds.

Processing large amounts of text is nevertheless relatively fast: 1 gigabyte of text in a single-byte encoding can be processed in less than a week.
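Collecting n-gram statistics can be sketched in a few lines. This is only an illustration under simplifying assumptions: the input is already lemmatized, only adjacent pairs (bigrams) are counted, and grammatical agreement is not checked at all. The function name `count_bigrams` and the toy corpus are hypothetical.

```python
from collections import Counter


def count_bigrams(sentences):
    """Count adjacent pairs of lemmas over an iterable of lemmatized sentences."""
    counts = Counter()
    for lemmas in sentences:
        # zip pairs each lemma with its right-hand neighbor
        counts.update(zip(lemmas, lemmas[1:]))
    return counts


# Hypothetical, already lemmatized corpus.
corpus = [
    ["green", "cucumber", "lie", "on", "table"],
    ["green", "cucumber", "grow", "fast"],
    ["yellow", "cucumber", "be", "overripe"],
]

counts = count_bigrams(corpus)
print(counts.most_common(3))
```

On a real corpus the counts would be filtered by the syntactic relation between the two words, which is where the syntax engine comes in.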

What is the result?

The result is a network that covers most of the Russian words in current use and has 32 types of connections between words: relationships such as “synonym”, “antonym”, “characteristic”, “definition”, and so on. For comparison, Freebase has more than 14 thousand types of links. But even this modest network gives non-trivial results.
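One possible in-memory layout for such a network is a graph with typed, weighted edges. The sketch below is an assumption about how it could be stored, not a description of the actual system: the class name `SemanticNetwork`, its methods and the weights are hypothetical.

```python
from collections import defaultdict


class SemanticNetwork:
    """Words connected by typed, weighted edges."""

    def __init__(self):
        # word -> relation type -> {neighbor: weight}
        self._edges = defaultdict(lambda: defaultdict(dict))

    def add(self, source, relation, target, weight=1.0):
        """Add or overwrite a typed edge from source to target."""
        self._edges[source][relation][target] = weight

    def neighbors(self, word, relation):
        """Return a copy of {neighbor: weight} for one relation type."""
        return dict(self._edges.get(word, {}).get(relation, {}))


net = SemanticNetwork()
net.add("big", "antonym", "small")
net.add("cucumber", "characteristic", "green", weight=120)
net.add("cucumber", "characteristic", "yellow", weight=15)

print(net.neighbors("cucumber", "characteristic"))
```

Dictionary-derived relations can use a fixed weight, while relations accumulated from n-gram statistics can store their frequency as the weight.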

Inference by analogy

Imagine that the system receives a question-and-answer pair as a training sample:

What color is a cucumber? The cucumber is green.

and we want the system to correctly answer the question: What color is an orange?

How can this be done? We need to find a path through the network that connects "cucumber" and "green" and that can also be applied to "orange". And it has to be found automatically. The abundance of connections between words in the network lets us solve the problem as follows:

1. Green is a hyponym of color (Wiktionary).

2. Cucumber has a high-frequency connection with green (from agreeing n-grams: the processed literature often links green and cucumber, as in "there were green cucumbers on the table").

3. Therefore, the path through the network is defined as "cucumber <n-gram "characteristic"> GOAL <hypernym (inverse of hyponym)> color".

Finding a path through the network is the classic problem of finding a path in an undirected graph. Clearly there can be several such paths, and each of them leads not only to the goal we need, “green”, but also to other similar words. Yellow, for example. Yellow (overripe) cucumbers also occur in literature, although less often than green ones. And yellow, of course, is connected with the word “color” in exactly the same way as green. Each path therefore has to be weighted with coefficients so that the goal of the search gets the highest rating. Put a little differently, we are building a self-learning network whose input signals are words rather than numerical values.
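The cucumber example can be sketched as follows. This is a deliberately reduced illustration, not the actual system: paths are limited to two steps, edges are looked up in one direction only, and the raw n-gram frequency serves as the weight. The `EDGES` data, the relation names and both functions are hypothetical.

```python
# Hypothetical toy network: (word, relation) -> {neighbor: weight}.
# "characteristic" weights stand for n-gram frequencies.
EDGES = {
    ("cucumber", "characteristic"): {"green": 120, "yellow": 15, "fresh": 60},
    ("orange", "characteristic"): {"orange": 80, "juicy": 70, "green": 5},
    ("green", "hypernym"): {"color": 1},
    ("yellow", "hypernym"): {"color": 1},
    ("orange", "hypernym"): {"color": 1, "fruit": 1},
    ("fresh", "hypernym"): {"state": 1},
    ("juicy", "hypernym"): {"taste": 1},
}


def find_path(edges, start, goal, end):
    """Learn a two-step path: start -r1-> goal -r2-> end. Returns (r1, r2) or None."""
    for (word1, r1), targets1 in edges.items():
        if word1 != start or goal not in targets1:
            continue
        for (word2, r2), targets2 in edges.items():
            if word2 == goal and end in targets2:
                return (r1, r2)
    return None


def apply_path(edges, path, start, end):
    """Apply a learned path to a new word; rank candidates by first-step weight."""
    r1, r2 = path
    ranked = [
        (candidate, weight)
        for candidate, weight in edges.get((start, r1), {}).items()
        if end in edges.get((candidate, r2), {})
    ]
    ranked.sort(key=lambda pair: pair[1], reverse=True)
    return ranked


# Training pair: "What color is a cucumber?" -> "green".
path = find_path(EDGES, "cucumber", "green", "color")
print(path)
# New question: "What color is an orange?"
print(apply_path(EDGES, path, "orange", "color"))
```

The ranking step is where competing answers such as “yellow” are pushed below the intended one. In this sketch the ordering comes purely from frequency, whereas the text describes weight coefficients that are adjusted per path.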

So, let's try to apply the path we found to other arguments:

The orange is orange, the sea is blue, the storm clouds are gray, and the clouds are white. The grass usually comes out green, although sometimes purple slips through. Apparently a few fantasy stories made it into the corpus when the n-grams were being accumulated.

But also: the ocean is deep, the puddle is shallow, and the seed is small. The path is universal and works not only for color. It works for most questions that ask for the value of a characteristic: “what color / size / depth ...”.

Similarity calculation

We can use our network to build metrics that measure the degree of similarity between different words. What do grass and cucumber have in common? Both are connected with the word green. But they are also connected with the words "eat", "grow" and many others. So if we count the relations that two different words share, we get the degree of similarity between them. This works even if the words are not in the dictionaries at all and every connection between them was obtained by accumulating statistics.
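Counting shared relations can be sketched like this. The text only speaks of counting matching relations; normalizing the count by the size of the union (the Jaccard index) is an assumption added here so that words with many relations do not dominate. The `RELATIONS` data and both function names are hypothetical.

```python
# Hypothetical relation sets: word -> {(relation type, neighbor)}.
RELATIONS = {
    "grass": {
        ("characteristic", "green"),
        ("action", "grow"),
        ("object_of", "eat"),
        ("action", "rustle"),
    },
    "cucumber": {
        ("characteristic", "green"),
        ("action", "grow"),
        ("object_of", "eat"),
        ("characteristic", "fresh"),
    },
    "mayor": {
        ("action", "sign"),
        ("action", "speak"),
    },
}


def shared_relations(relations, a, b):
    """Number of (relation, neighbor) pairs that both words have."""
    return len(relations.get(a, set()) & relations.get(b, set()))


def similarity(relations, a, b):
    """Jaccard index of the two relation sets, in the range 0.0 to 1.0."""
    rel_a = relations.get(a, set())
    rel_b = relations.get(b, set())
    union = rel_a | rel_b
    return len(rel_a & rel_b) / len(union) if union else 0.0


print(shared_relations(RELATIONS, "grass", "cucumber"))
print(similarity(RELATIONS, "grass", "cucumber"))
print(similarity(RELATIONS, "grass", "mayor"))
```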

How can we use a numerical measure of similarity between words? For example, to establish coreference links. The words “mayor” and “official” often appear in the same contexts, so their relations with other words have a similar structure. We can reasonably assume that the same person stands behind the words “mayor” and “official” in the analyzed text, and so establish a link between them.

Similarly, on meeting “he went” in the text, we can work out that it refers to something that walks: a person or an animal. Or an official, because an official is very similar to a person. And on meeting “it was closed”, we can work out that it refers to an enterprise, or to objects similar to an enterprise.

Thus, calculating similarity lets us assign a word, taking its context into account, to one of the well-known classes “person”, “enterprise”, “place”, etc., which brings us closer to extracting the meaning of the text.
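Assigning a word to a class can be sketched as picking the class whose relation profile is closest to the word's own. The profiles below are hypothetical sets of contexts, the Jaccard index is an assumed similarity measure, and `classify` is an illustrative name rather than part of any real system.

```python
# Hypothetical context profiles: word -> set of verbs it occurs with.
PROFILES = {
    "person": {"walk", "speak", "sign", "eat"},
    "enterprise": {"open", "close", "produce", "hire"},
    "place": {"locate", "border", "stretch"},
    "official": {"walk", "speak", "sign", "decide"},
    "factory": {"open", "close", "produce", "build"},
}

CLASSES = ["person", "enterprise", "place"]


def jaccard(a, b):
    """Jaccard index of two sets; 0.0 when both are empty."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def classify(word, profiles, classes):
    """Return the class whose profile is most similar to the word's profile."""
    word_profile = profiles.get(word, set())
    return max(classes, key=lambda cls: jaccard(word_profile, profiles[cls]))


print(classify("official", PROFILES, CLASSES))
print(classify("factory", PROFILES, CLASSES))
```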

For example, this approach lets us tell apart texts like the following and correctly determine what the pronoun (“it” or “she”) refers to:

“The director of the Sokolova factory spoke at the meeting. We remind you that it opened in early May.”

and

“The director of the Sokolova factory spoke at the meeting. She announced plans to increase production.”
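One way to picture this disambiguation: score each candidate antecedent by how often it, or words similar to it, occur as the subject of the verb that follows the pronoun. Everything in the sketch is an assumption made for illustration, including the counts, the `SIMILAR` table and the function names.

```python
# Hypothetical subject-verb statistics accumulated from a corpus.
SUBJECT_VERB_COUNTS = {
    ("factory", "open"): 40,
    ("factory", "announce"): 3,
    ("shop", "open"): 70,
    ("director", "open"): 6,
    ("director", "announce"): 55,
    ("person", "announce"): 90,
}

# Hypothetical output of the similarity step: word -> similar words.
SIMILAR = {
    "factory": ["shop"],
    "director": ["person"],
}


def score(candidate, verb):
    """Sum the counts for the candidate and for the words similar to it."""
    words = [candidate] + SIMILAR.get(candidate, [])
    return sum(SUBJECT_VERB_COUNTS.get((word, verb), 0) for word in words)


def resolve_pronoun(candidates, verb):
    """Pick the candidate antecedent most compatible with the verb."""
    return max(candidates, key=lambda candidate: score(candidate, verb))


candidates = ["director", "factory"]
print(resolve_pronoun(candidates, "open"))      # "... it opened in early May."
print(resolve_pronoun(candidates, "announce"))  # "She announced plans ..."
```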

Final part

As part of the recent international conference on computational linguistics "Dialogue", a competitive review of computational linguistics systems was held, and I took part in it. I developed my system specifically for the competition over 1.5 months, and it was based on the technology described here for calculating the similarity between words. The results of the competition will be published in the near future.

In any case, I want to stress that the “technology has matured”: within just a few months, anyone interested can get to the problems of understanding text and extracting meaning. That is, to experiments in artificial intelligence that were previously possible only in academic circles.