Zero Downtime Upgrade for an application in Microsoft Azure. Part 2: IaaS

Last time we reviewed the Zero Downtime Upgrade methods that can be applied within the PaaS options for deploying an application in Microsoft Azure. Today we will focus on methods that apply not only to cloud services, but also to regular virtual machines in an IaaS deployment.

As we know, any virtual machine that discusses requests for your application does this through a specific open port (for example, 80, 8080, 443, etc.). If there are multiple virtual machines, then the Microsoft Azure internal load balancer distributes the traffic between these virtual machines. Let's think about how to use this feature for the Zero Downtime Upgrade.

Imagine that we have several virtual machines within a single cloud service. Let me remind you that the internal load balancer redistributes traffic only within the framework of one DNS, that is, within the framework of one cloud service.

Let these virtual machines use version 1.0 of our application. The task, as always, is to upgrade the application to version 1.1 invisibly to the user.

The Load Balanced Endpoint approach offers the following mechanism: you deploy additional virtual machines within an existing cloud service using the same ports and configuration as the original version. The easiest way to do this is through scaling. Let's say there were 2 cars, it became 4. This will also help to avoid a drawdown in application performance during the update.

After that, one after another, you update the version of the application on virtual machines as part of a cloud service. Naturally, in order to avoid redirecting traffic to the currently updated virtual machine, we must disable the corresponding port for it. After that, the internal load balancer begins to redistribute traffic between the old and new versions of the application.

You can update all virtual machines, or you can update 2 of 4, for example, and then simply delete unnecessary ones with the old version of the application. Suppose again with scaling down.

Let us now look at the pros and cons of this approach.

Pros:

Minuses:

As we see this method is almost a tracing-paper with Fault / Update domains, which Microsoft uses to provide 99.95% SLA for several virtual machines as part of cloud services or availability set. The only difference is that the update mechanism must be done manually or automated using Microsoft Azure Automation, for example.

So this update mechanism is quite applicable in practice for applications deployed as part of IaaS.

All the previous methods that we considered are suitable for use only within the framework of one approach to application deployment. However, a logical question arises. Is there really no such tool that could be used in both PaaS and IaaS deployments?

There really is such a service and it is called Traffic Manager. While it is not possible to configure an internal load balancer for a particular cloud service, Traffic Manager provides the ability to configure traffic distribution.

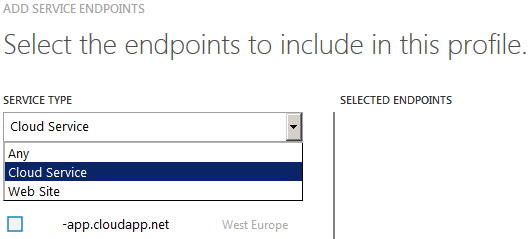

You can distribute traffic not only between different virtual machines, but also cloud services, as well as determine the algorithm by which this same traffic will be distributed.

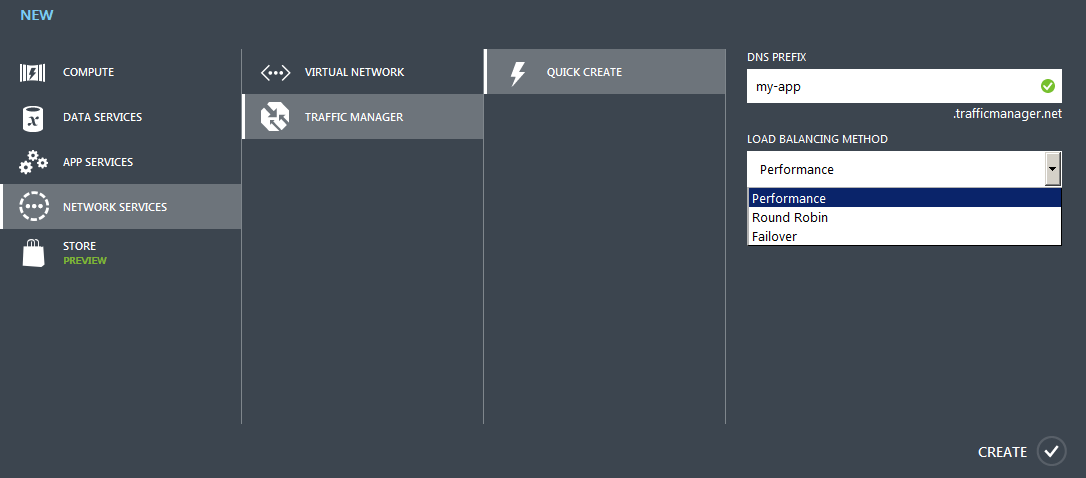

So, in order to implement the Zero Downtime Upgrade scenario when using the Traffic Manager, we need to direct user traffic not to the DNS of a particular cloud service, but to the DNS, which is determined when creating the Traffic Manager profile. It will look like {name} .trafficmanager.net. Naturally, if necessary, you can bind it to the desired CNAME of the name registrar.

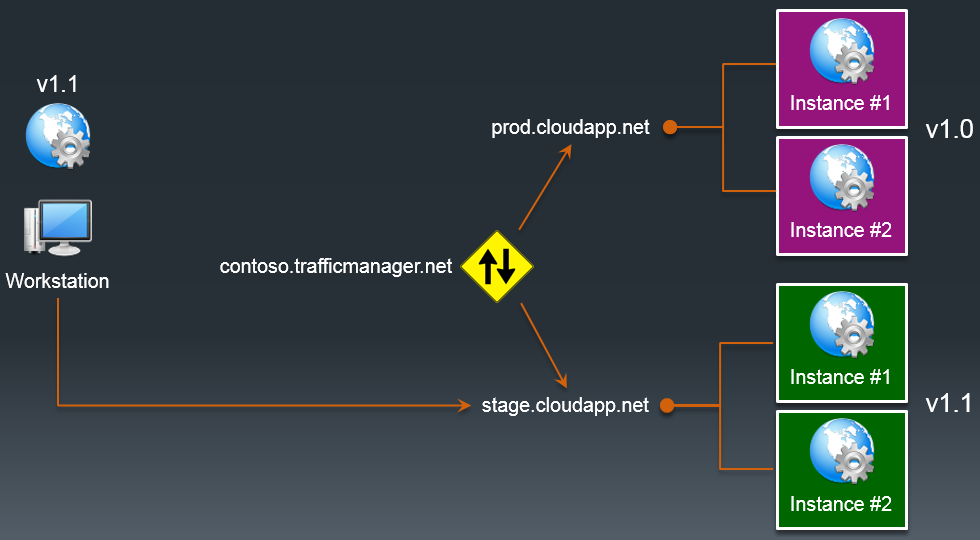

Let's say version 1.0 of our application is hosted within the prod.cloudapp.net cloud service. For the new version, we are creating a separate cloud service. Lets say stage.cloudapp.net. After deploying a new version of the application, we can redirect traffic from the old version of the application to the new one.

Accordingly, as soon as all the traffic has gone to the new version of the application, the old environment can be deleted.

Let us now look at the pros and cons of this approach.

Pros:

Minuses:

The described service has several rather serious advantages over all those described earlier. Using it in a production environment is entirely justified, but you must remember about the additional costs when using it.

That's all I wanted to tell you about the Zero Downtime Upgrade mechanisms that can be applied on the Microsoft Azure platform. It is possible that I missed something. Unsubscribe in the comments about this!

Thank you all for your attention!

Load balanced endpoint

As we know, any virtual machine that serves requests for your application does so through a specific open port (for example, 80, 8080, or 443). If there are multiple virtual machines, the Microsoft Azure internal load balancer distributes the traffic between them. Let's think about how to use this feature for a Zero Downtime Upgrade.

Imagine that we have several virtual machines within a single cloud service. Recall that the internal load balancer distributes traffic only within a single DNS name, that is, within a single cloud service.

Suppose these virtual machines run version 1.0 of our application. The task, as always, is to upgrade the application to version 1.1 without the user noticing.

The Load Balanced Endpoint approach offers the following mechanism: you deploy additional virtual machines within the existing cloud service, using the same ports and configuration as the original ones. The easiest way to do this is through scaling. Say there were 2 machines and now there are 4. This also helps to avoid a drop in application performance during the update.

After that, you update the application on the virtual machines in the cloud service one at a time. Naturally, to keep traffic away from the virtual machine that is currently being updated, we must close the corresponding port on it. Once the machine is updated and its port is reopened, the internal load balancer distributes traffic between the old and new versions of the application.

You can update all the virtual machines, or you can update, for example, 2 of the 4 and then simply delete the unneeded ones that still run the old version of the application, again by scaling back down.
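To make the order of the steps concrete, here is a minimal Python sketch of the procedure described above. It is only a simulation under stated assumptions: the `VM` class, the `rolling_update` function, and the `min_serving` threshold are hypothetical names invented for illustration and are not part of any Azure API. Closing a port is modeled as flipping a flag, and the real deployment step is reduced to changing a version string.

```python
from dataclasses import dataclass


@dataclass
class VM:
    """A hypothetical virtual machine behind the load-balanced endpoint."""
    name: str
    version: str
    port_open: bool = True  # only VMs with an open port receive traffic


def in_rotation(pool):
    """VMs the load balancer can currently send traffic to."""
    return [vm for vm in pool if vm.port_open]


def rolling_update(pool, new_version, extra, min_serving):
    """Scale out, update VMs one at a time, then scale back in.

    Returns the smallest number of VMs that were in rotation at any
    point, so the caller can check that capacity never dropped too low.
    """
    # 1. Scale out: add VMs with the same version and configuration.
    base = len(pool)
    old_version = pool[0].version
    for i in range(extra):
        pool.append(VM(f"vm{base + i + 1}", old_version))

    lowest = len(in_rotation(pool))
    # 2. Update one VM at a time, keeping its port closed meanwhile.
    for vm in pool:
        vm.port_open = False      # take the VM out of rotation
        lowest = min(lowest, len(in_rotation(pool)))
        if lowest < min_serving:
            raise RuntimeError("not enough VMs left in rotation")
        vm.version = new_version  # stands in for the real deployment
        vm.port_open = True       # put the VM back in rotation

    # 3. Scale back in: remove the extra VMs.
    del pool[base:]
    return lowest


pool = [VM("vm1", "1.0"), VM("vm2", "1.0")]
lowest = rolling_update(pool, "1.1", extra=2, min_serving=2)
print([(vm.name, vm.version) for vm in pool], "lowest in rotation:", lowest)
```

In this sketch every machine is updated before the extra ones are removed. The variant in which only some machines are updated and the old ones are deleted would differ only in step 3.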

Let us now look at the pros and cons of this approach.

Pros:

- Simple scaling process

- IaaS Deployment Support

- No performance drop during the application update

- Additional financial costs are needed only at the time of updating

Cons:

- The update process is completely manual or requires automation

- Additional costs during the upgrade process

- The configuration of the old and new virtual machines must match

- Updating an application in a PaaS deployment is not supported

As we can see, this method closely mirrors the fault and update domains that Microsoft uses to provide a 99.95% SLA for multiple virtual machines in a cloud service or availability set. The only difference is that the update has to be performed manually or automated, for example with Microsoft Azure Automation.

So this update mechanism is quite applicable in practice for applications deployed as part of IaaS.

Traffic Manager

Each of the methods we have considered so far works with only one approach to application deployment. A logical question arises: is there really no tool that can be used in both PaaS and IaaS deployments?

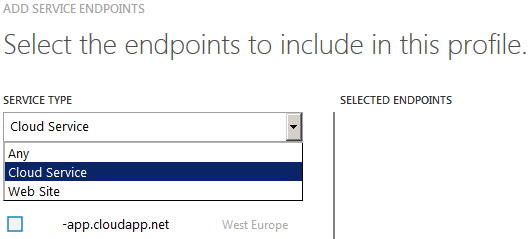

Such a service does exist, and it is called Traffic Manager. While the internal load balancer of a particular cloud service cannot be configured, Traffic Manager lets you configure how traffic is distributed.

You can distribute traffic not only between virtual machines but also between cloud services, and you can choose the algorithm by which that traffic is distributed.
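To give a feel for what "choosing the algorithm" means, here is a small Python model of two common distribution strategies, round robin and failover. This is a sketch for illustration only and not the service's implementation: the function names and the endpoint names `a.cloudapp.net` and `b.cloudapp.net` are assumptions, and endpoint health is reduced to a boolean.

```python
from itertools import cycle


def round_robin(endpoints):
    """Return an iterator that hands out healthy endpoints in turn."""
    healthy = [name for name, up in endpoints if up]
    return cycle(healthy)


def failover(endpoints):
    """Return the first healthy endpoint in priority order."""
    for name, up in endpoints:
        if up:
            return name
    raise RuntimeError("no healthy endpoint")


# Hypothetical endpoints, listed in priority order as (name, is_healthy).
endpoints = [("a.cloudapp.net", True), ("b.cloudapp.net", True)]

rr = round_robin(endpoints)
picks = [next(rr) for _ in range(4)]
print("round robin:", picks)
print("failover:", failover(endpoints))
```

With round robin both endpoints receive requests alternately, whereas with failover the second endpoint is used only when the first is marked unhealthy.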

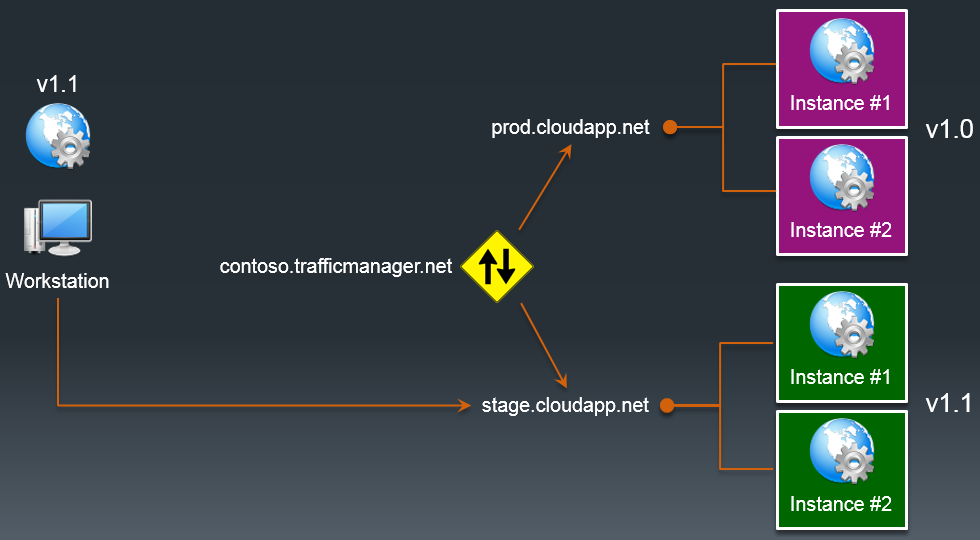

So, to implement the Zero Downtime Upgrade scenario with Traffic Manager, we need to direct user traffic not to the DNS name of a particular cloud service, but to the DNS name assigned when the Traffic Manager profile is created. It looks like {name}.trafficmanager.net. Naturally, if necessary, you can point a CNAME record for your own domain at it through your name registrar.

Let's say version 1.0 of our application is hosted in the prod.cloudapp.net cloud service. For the new version we create a separate cloud service, say stage.cloudapp.net. After deploying the new version of the application there, we can redirect traffic from the old version to the new one.

Accordingly, as soon as all the traffic has gone to the new version of the application, the old environment can be deleted.
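The switch described above can be pictured as a change in a chain of DNS aliases. The following Python sketch models that chain with a plain dictionary. It is a toy model under stated assumptions: `www.example.com` and `myapp.trafficmanager.net` are hypothetical names, the `resolve` function is invented for illustration, and a real profile is changed through the portal or API rather than by editing a dictionary.

```python
def resolve(name, records, max_hops=10):
    """Follow alias records until a name with no further alias is reached."""
    chain = [name]
    for _ in range(max_hops):
        target = records.get(chain[-1])
        if target is None:
            return chain
        chain.append(target)
    raise RuntimeError("alias chain too long or circular")


records = {
    # CNAME configured at the name registrar (hypothetical domain).
    "www.example.com": "myapp.trafficmanager.net",
    # Endpoint currently selected by the Traffic Manager profile.
    "myapp.trafficmanager.net": "prod.cloudapp.net",
}

before = resolve("www.example.com", records)

# The upgrade: only the Traffic Manager mapping changes;
# the user's domain and the registrar's CNAME stay untouched.
records["myapp.trafficmanager.net"] = "stage.cloudapp.net"
after = resolve("www.example.com", records)

print(" -> ".join(before))
print(" -> ".join(after))
```

Rolling back is the same operation in reverse: pointing the profile at prod.cloudapp.net again, which is why the old environment should be deleted only once the new version has proven itself.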

Let us now look at the pros and cons of this approach.

Pros:

- Isolated Test Environment

- Support for virtual machines, cloud services, and Microsoft Azure websites

- The configuration of the virtual machines of the new and old versions of the application may vary

- If necessary, you can roll everything back

Cons:

- Additional costs for Traffic Manager service

- Additional costs during the upgrade process

- The update process is completely manual or requires automation

- DNS caches take time to refresh, so the switch is not instantaneous
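The last point in the list deserves a small illustration. The Python sketch below models a client-side DNS cache that keeps an answer until its time to live (TTL) expires. The `CachingResolver` class is hypothetical and the TTL of 300 seconds is an assumed value chosen for the example. Time is passed in explicitly so that the behavior is easy to follow.

```python
class CachingResolver:
    """A toy client-side DNS cache that honors a record's TTL."""

    def __init__(self, ttl_seconds):
        self.ttl = ttl_seconds
        self.cached = None     # last answer seen
        self.expires_at = 0.0  # time at which the cached answer goes stale

    def lookup(self, now, authoritative_answer):
        """Return the cached answer while it is fresh, else refresh it."""
        if self.cached is None or now >= self.expires_at:
            self.cached = authoritative_answer
            self.expires_at = now + self.ttl
        return self.cached


resolver = CachingResolver(ttl_seconds=300)  # assumed TTL

# t=0: the client resolves while traffic still points at the old version.
a = resolver.lookup(0, "prod.cloudapp.net")
# t=10: the profile has been switched, but the cached entry is still fresh.
b = resolver.lookup(10, "stage.cloudapp.net")
# t=300: the entry has expired, so the client finally sees the new version.
c = resolver.lookup(300, "stage.cloudapp.net")

print(a, b, c)
```

In this model a client that resolved the name just before the switch keeps reaching the old version for up to one full TTL, so the old environment has to stay up at least that long.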

Traffic Manager has several rather serious advantages over all the methods described earlier. Using it in a production environment is entirely justified, but you must keep in mind the additional costs it brings.

That's all I wanted to tell you about the Zero Downtime Upgrade mechanisms that can be applied on the Microsoft Azure platform. It is possible that I missed something. If so, let me know in the comments!

Thank you all for your attention!