Crash Response: Stretched cluster against DR site

We have two approaches to Disaster Recovery: a “stretched” cluster (active-active installation) and a platform with virtual machines (replicas) turned off. They have several points for saving snapshots.

There is a request for disaster tolerance, and many of our clients really need it. Therefore, we began to work out both schemes as part of our production.

Methods have pros and cons, now I’ll tell you about them.

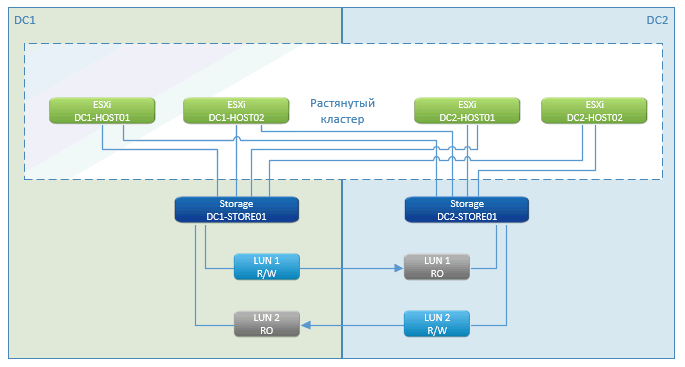

Stretched Cluster

As you can see, this is a standard metro cluster story. In the pros - almost zero downtime, a pause only at the time of starting virtual machines. This feature works out - VMware High Availability (HA). She sees that the hosts are lost, and immediately restarts the VM on the remote site.

The launch is done immediately from the storage, which is located in the cluster.

Storage with a geo-distributed cluster is a marketing feature of NetApp. Other manufacturers have something with a similar name. In essence, this is thought-out asynchronous replication from one side to the other. We write to one node in the local network and synchronize through specialized communication channels with another.

In the event of a failure of one of the storage systems, the remaining (at another site) presents the path to the disks to the remaining hosts. VMs that have died are restarted on them. Everything happens automatically - the data center crashed, everything rebooted, the storage worked, VMware worked. The client saw that everything blinked and restarted.

The only cache from the RAM of the VM can be lost. But if the database threw it off, then the loss is zero in time.

If we lose communication between the sites, then everything continues to work in its place and, as soon as the connection is restored, it starts to synchronize.

The downside is the high price. Because you actually need a double SHD (moreover, similar in type, speed and volume of disks of the first SHD on the main site), which can not be used somehow, except as a reserve. Plus binding to storage for the metro cluster, these are FC bridges, FC network and more.

We have two DPCs, between them an FC bundle along two beams (four dark optics lines and DWDM). These are two pieces of iron, each provides 200 Gbps bandwidth for FC and Ethernet.

Alternative with DR

There is software with an intuitively memorable name - VMware vCloud Availability for Cloud-to-Cloud DR.

This is a system for creating an identical VM on a remote site once, relatively speaking, in 15 minutes. A system for presenting all this in the right way to the cloud control mechanisms is attached to it on electrical tape.

That is, the VMware Replication technology is in the backend. In the event of a failure, we manually run the DR plan on the second site, it automatically stops trying to replicate, then registers the VM in vCloud Director, customizes the IP addresses (so that it does not have to be changed to the VM) and starts the VM in the necessary order. In our solution, it is not necessary to change the addressing, we stretch the networks to both data centers.

Machines are constantly being replicated, but not the entire data center, but only the selected ones are critical processes. It is replicated once in a while, the minimum interval is 15 minutes (this is an ideal case when everything flies and there is a dedicated replication server and a minimum of changes to the VM). In practice, you have a copy half an hour or an hour ago. If something went wrong, then the data that fell into the interval was lost. 15 minutes is the question of the agent who collects the new replication. Veeam say that they can take less than 15 minutes, but in fact it’s also longer in practice if they do not use storage features. I did not see on an industrial machine (not on a test) that it would be otherwise.

For a long time, NetApp, like many other manufacturers of storage systems, has SnapMirror technology, which allows you to shift the work of replication from hypervisors to storage systems, and VMware Replication can use it.

As the replication service runs, the train goes far. But it’s cheap.

Why is it still cheap - because you can use any storage on any side (from different manufacturers, different classes), you do not need to allocate a large volume of disks in advance.

No need to allocate a large disk group, inside which the moons are cut. It just takes a place on the local storage and is applied upon the fact of the availability of the record from the virtual machine. Due to this, the place on the storage system is optimally occupied, if it is used for other tasks. And it is used, since we do not give such a service to all customers.

Minus - you need to configure replication at the VM level, that is, control that everything is correctly configured, that this is the machine, make sure that replication passes, that there are no errors. Create DR plans for each client, conduct their tests.

In the first case, storage is taken, conditionally, infrastructurally, almost by sectors (more precisely, by objects). And then one machine can fall off due to a task falling off due to some software reasons related to a bug at high levels, or due to accessibility problems. This happens a little more often than if you take just low levels.

In plus - DR stores several points. You can roll back a few snapshots.

Outside the guest OS, you need additional software.

In order to get all the necessary networks to Vcloud Director, we need the work of our administrator. In general, all network connectivity in this version remains with our administrator. For a cloud client, this means an application, which also takes time.

Replication is also configured through the application. Added VM - you need to send a request that you need to replicate it. It doesn’t fall into replication tasks automatically. It is necessary to pay attention to the administrator.

Difference

As a result, the price may differ by more than two times. Replication will multiply the cost of disk space by two or more (two full copies + change history), plus something for the service and the reservation of computing resources. In the case of the metro cluster, the cost of space will be multiplied by two, but the space itself will cost significantly more, plus you need to firmly reserve nodes at a remote site. That is, computing resources must be multiplied by two, we can not utilize them for anything else.

In the case of the metro cluster, we can use only the same types of disks so that there is a full mirror. If on the main data center some of the drives are fast, some slow at 10 thousand revolutions per minute, then an identical configuration is needed. In the case of a replica, slower disks on the backup site are possible, which is cheaper due to storage. But when switching to a reserve, it will turn out to be less in performance. That is, if it stores something on the SSD in the main cluster, and is replicated to regular disks, then storage will be much cheaper at the cost of slowing down the reserve infrastructure.

Right now we are choosing what will be included in an earlier release, so we want to consult: can you tell us briefly how you organize your DR sites and what would you like them to do in general?