A door that greets us by name and opens only for employees of the department

We had a couple of days between big projects, and we decided to have some fun with the department head's door. Just for laughs. Our drones already recognize us by face, so why should the door be any worse?

By the time the IT part of the project started, we already had an overlay panel printed on a 3D printer. In two days we built a prototype out of hardware, ready-made libraries, and a fair amount of swearing. A device like this can log employees or check that a person went through a turnstile with their own pass.

No budget. We used open source only.

You can repeat this in 15-20 minutes with our script.

The idea and approach to implementation

It all started at a country house where we were relaxing after delivering a major project. At one point it proved very hard to get the key into the gate lock. We immediately figured that in winter nobody wants to take their hands out of their pockets, and from there we came up with a whole list of applications, including checking passes and verifying employees' identities.

Actually, we wanted to make a corridor with laser beams, like in the movie Resident Evil, but we did not have time. In general, we wanted the feel of interacting with the system, so it also had to answer with a voice.

Since we wanted a prototype, we focused entirely on functionality and quick results at the expense of appearance, size, and elegant architecture. It turned out ugly, but it works fast.

At first we split door control into modules, and everyone started writing their own. On the first day the recognition module was an external web service from a partner; we also had our own old neural-network engine from another project, plus there were open-source solutions. In the end open source won: our own engine needed refactoring and updating (which takes a long time), and the partner's web service cost money and could not be hosted inside our network, while open source is open source.

Electronics and housing

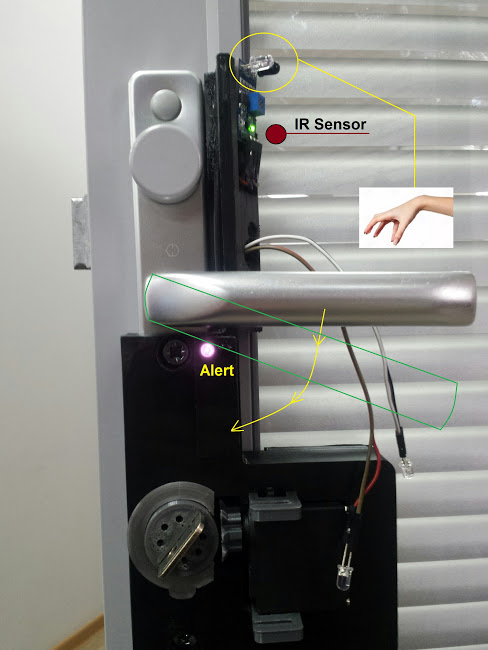

At first we considered simply buying an electronically controlled lock and swapping it in for the standard mortise lock. But because the door has a glass panel with blinds, the built-in lock is unusually narrow, and there was simply no electronic replacement on the market. So our engineer printed a removable surface-mounted lock housing with room for the control unit and the opening mechanism.

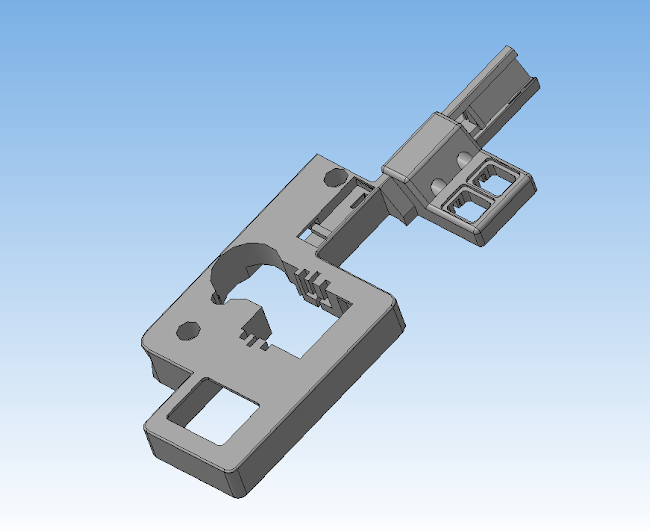

So, the design of the case:

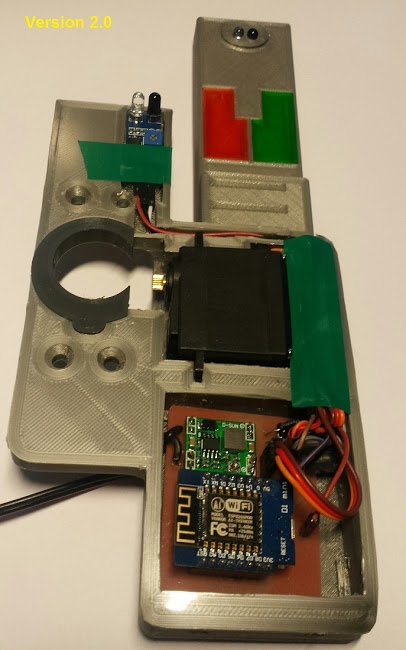

We printed it from a model that we made before the project (modeling and fitting took more time than writing the code):

The case was designed in KOMPAS-3D. Dima went through three versions of the case before he found the optimal arrangement of components and fasteners.

Inside the lock case we installed an MG-995 servo rated at 10 kg·cm of torque (5-7 kg·cm in practice) that turns the inserted key through gears; a pair of infrared sensors that trigger the automation on entry and exit; and an ESP8266 microcontroller with a Wi-Fi module and a web server that, for simplicity, accepts HTTP commands to open and close the door. The same controller drives the gears that turn the key.

The microcontroller firmware is written in C.

Digging into the details of building the case and its internals, we could not help thinking about how complex seemingly simple objects are.

Bottom line: the lock control unit accepts open commands over Wi-Fi.
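To debug the recognition side without the printed lock on hand, the controller's HTTP interface can be imitated on any machine. The sketch below is a hypothetical Python stand-in, not the C firmware: it assumes the controller answers GET requests on the `/yes` (open) and `/no` (close) paths that the script later in this article calls. The `LockState` class, the reply texts, and port 8080 are made up for illustration.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer


class LockState:
    """Stands in for the servo: remembers whether the lock is open."""

    def __init__(self):
        self.is_open = False


def handle_path(path, state):
    """Apply a command path to the lock state; return (HTTP status, reply text)."""
    if path == "/yes":
        state.is_open = True
        return 200, "opened"
    if path == "/no":
        state.is_open = False
        return 200, "closed"
    return 404, "unknown command"


def make_handler(state):
    """Build a request handler class bound to one LockState instance."""

    class LockHandler(BaseHTTPRequestHandler):
        def do_GET(self):
            status, text = handle_path(self.path, state)
            body = text.encode("utf-8")
            self.send_response(status)
            self.send_header("Content-Type", "text/plain")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

    return LockHandler


def serve(port=8080):
    """Run the fake lock until interrupted (port 8080 is an arbitrary choice)."""
    HTTPServer(("0.0.0.0", port), make_handler(LockState())).serve_forever()
```

Calling `serve()` and pointing the script's lock URLs at `http://localhost:8080` lets you exercise the whole chain without any hardware.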

The face recognition part

We need a face recognition unit that sends the "open" command to the lock when the door should be opened. Thanks to the modular architecture, it can run on a mini-server in a smart home or as a web service on the Internet. We also need a Wi-Fi access point on the same local network as the lock control unit: either a thin client or a home server. We deployed the server right on an old laptop, that is, we combined the server and the thin client. A camera was plugged into it over USB.

For recognition itself we settled on the face_recognition Python library, which is released under the open MIT License.

We loaded several photos of each project participant into the model: front view, profile, and casual outdoor shots. In theory, the more photos you load, the higher the accuracy, but in most cases a Facebook avatar or a picture from the HR database is enough.
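One way to make use of several photos per person is to keep every encoding and accept the closest one. The sketch below assumes each photo has already been turned into a numeric vector (in the real system that is the job of `face_recognition.face_encodings`, which yields 128 numbers per face); short toy vectors are used here so the example runs without the library. The function name `best_match` is hypothetical, and the 0.6 threshold mirrors the library's default tolerance.

```python
import math


def distance(a, b):
    """Euclidean distance between two encodings of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def best_match(known, probe, tolerance=0.6):
    """Return the name whose closest photo encoding is nearest to probe.

    known maps a name to a list of encodings, one per uploaded photo.
    Returns None when even the nearest encoding is farther than tolerance.
    """
    best_name, best_distance = None, tolerance
    for name, encodings in known.items():
        for encoding in encodings:
            d = distance(encoding, probe)
            if d <= best_distance:
                best_name, best_distance = name, d
    return best_name


# Toy 3-number "encodings": two photos per person
known = {
    "Emp1": [[0.0, 0.0, 0.0], [0.5, 0.0, 0.0]],
    "Emp2": [[2.0, 2.0, 2.0], [2.0, 2.5, 2.0]],
}
print(best_match(known, [0.6, 0.1, 0.0]))  # near Emp1's second photo only
print(best_match(known, [9.0, 9.0, 9.0]))  # far from everyone
```

In this toy data the first probe is slightly too far from Emp1's first photo but well within tolerance of the second, which is exactly the situation extra photos are meant to cover.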

We deployed the infrastructure as a service in our Technoserv Cloud and debugged it quickly. Deploying a new virtual machine with all the services took 20 minutes. Then we copied all the code and libraries to the working laptop so it could run autonomously.

The system picks the largest face in the frame and recognizes it twice, 0.5 seconds apart.
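The selection step can be sketched as follows. The `face_recognition` library reports each face as a `(top, right, bottom, left)` box in pixels, so the largest face is the box with the greatest area. The helper names below (`largest_face`, `confirmed`) are hypothetical, and the published script further down does not include this step; recognition is passed in as a plain function so the sketch runs without a camera.

```python
import time


def box_area(box):
    """Area of a (top, right, bottom, left) face box in pixels."""
    top, right, bottom, left = box
    return max(0, bottom - top) * max(0, right - left)


def largest_face(face_locations):
    """Return the biggest box, or None if the frame has no faces."""
    if not face_locations:
        return None
    return max(face_locations, key=box_area)


def confirmed(recognize, pause=0.5):
    """Call recognize() twice, pause seconds apart.

    Returns the name only if both attempts agree on a known person
    (recognize() is expected to return None for an unknown face).
    """
    first = recognize()
    time.sleep(pause)
    second = recognize()
    if first is not None and first == second:
        return first
    return None


boxes = [(10, 60, 50, 20), (0, 200, 150, 40)]  # a distant face and a near one
print(largest_face(boxes))
```

Requiring two matching results half a second apart trades a little latency for fewer one-off false matches.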

We pressed into service a webcam from a box of forgotten 90s junk. It was what we had on hand, and it was enough.

The total recognition time is just over three seconds. That is because the laptop is really old and has no discrete graphics card. With a GPU and a fast webcam, recognition will be significantly quicker.

We also wrote a voice recognition connector right away, because face alone is not reliable enough (more on that below); two factors are needed.
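A minimal sketch of how the two factors could be combined: the door opens only when face and voice independently point to the same allowed person. The function name and the convention that each recognizer returns a name or `None` are assumptions for illustration; the voice connector itself is not shown in this article.

```python
def should_unlock(face_name, voice_name, allowed):
    """Open only if both factors name the same person from the allowed set."""
    if face_name is None or voice_name is None:
        return False
    return face_name == voice_name and face_name in allowed


allowed = {"Emp1", "Emp2"}
print(should_unlock("Emp1", "Emp1", allowed))  # both factors agree
print(should_unlock("Emp1", "Emp2", allowed))  # face and voice disagree
print(should_unlock("Emp1", None, allowed))    # voice not recognized
```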

After choosing the library, it took 6 hours to get to a more or less debugged prototype.

We also connected a Bluetooth speaker to the laptop so that the door could talk. The laptop itself was hidden in a cabinet with letters of appreciation, as if it were an embedded device.

Tests

The library description says: "Built using dlib's state-of-the-art face recognition built with deep learning. The model has an accuracy of 99.38% on the Labeled Faces in the Wild benchmark." Naturally, we did not get 99.38% but much less, because that requires loading proper photos (and more of them), installing a decent camera, and evening out the lighting. But since the door manages to capture 4-5 frames while you walk up to it, it usually opens almost immediately.
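The effect of those 4-5 frames is easy to estimate. If a single frame is recognized with probability p and frames are treated as independent (an optimistic assumption, since consecutive frames share the same lighting and angle), the chance that at least one of n frames matches is 1 - (1 - p)^n. The per-frame rate of 0.6 below is an illustrative number, not a measurement from our tests.

```python
def chance_of_match(p_single, frames):
    """Probability that at least one of `frames` independent attempts succeeds."""
    if not 0.0 <= p_single <= 1.0:
        raise ValueError("p_single must be between 0 and 1")
    return 1.0 - (1.0 - p_single) ** frames


for frames in (1, 3, 5):
    print(frames, round(chance_of_match(0.6, frames), 3))
```

Under these assumed numbers, five frames give roughly a 99% chance of at least one match, which fits the impression that the door opens almost at once even with a mediocre per-frame rate.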

Errors: a couple of times the door got confused and did not let us in right away. Strangers from other departments could not get through the door.

You can make faces at the door; it understands everything and opens.

It does, of course, open for a photo of an employee. In the next version (outside those couple of days) we added a module that recognizes the whole person, and the door began to open only when it saw someone from the chest up.

Then we tried checking color (the library downsamples and processes black-and-white images), facial movements, and blinking. You need a better camera that sees the person while they are still approaching, and then everything works fine.

So as a replacement for a key it is, of course, not suitable, because a second factor is needed, for example voice. But it is fine for non-critical doors. It is also fine for logging the employees who pass through, and for checking that an employee matches their pass at a checkpoint. A door could also recognize a dog returning home. If you recall the history of pattern recognition, one of the first industrial applications came from an American developer who was fed up with a neighbor's cat relieving itself on his lawn. He knocked together cat recognition with little effort and hooked the sprinkler system up to his mini-server. After a couple of days the cat realized that it was better not to crap. Ever. Anywhere.

The case turned out futuristic and bulky; in a commercial version there should be nothing on the door at all except a camera with a microphone and a small screen with a visual and voice assistant.

Repeat at home

The link to the library is above.

Step one

Step two

```python
#!/usr/bin/env python
"""
Script that recognizes people and unlocks the lock.
"""
import datetime
import os
import random
import time

import cv2
import face_recognition
import requests
from pygame import mixer

# Names must match the photo files in faces_folder, e.g. faces/Emp1.jpg
known_faces = [
    'Emp1',
    'Emp2',
    'Emp3',
]

faces_folder = 'faces'
welcome_folder = 'welcome'

# One encoding per known person; [0] takes the first face found in each photo
known_faces_encodings = [
    face_recognition.face_encodings(
        face_recognition.load_image_file(os.path.join(faces_folder, name + ".jpg"))
    )[0]
    for name in known_faces
]


def init_recognition():
    pass


def face_detection(face_encodings):
    """Return the name of a known person seen in the frame, or "Unknown"."""
    name = "Unknown"
    for face_encoding in face_encodings:
        matches = face_recognition.compare_faces(known_faces_encodings, face_encoding)
        if True in matches:
            first_match_index = matches.index(True)
            name = known_faces[first_match_index]
    return name


def unlock():
    unlock_url = 'http://192.168.4.1/yes'
    unlock_req = requests.get(unlock_url, timeout=5)
    # return unlock_req.status_code


def lock():
    lock_url = 'http://192.168.4.1/no'
    lock_req = requests.get(lock_url, timeout=5)
    # return lock_req.status_code


def welcome(message_type):
    """Play a random audio message of the given type."""
    messages = {
        'hello': [os.path.join(welcome_folder, file) for file in ['hello_01.wav', 'hello_02.wav', 'hello_03.wav', 'hello_04.wav', 'hello_05.wav']],
        'welcome': [os.path.join(welcome_folder, file) for file in ['welcome_01.wav', 'welcome_02.wav', 'welcome_03.wav']],
        'unwelcome': [os.path.join(welcome_folder, file) for file in ['unwelcome_01.mp3', 'unwelcome_02.mp3', 'unwelcome_03.wav', 'unwelcome_04.wav', 'unwelcome_05.wav']],
        'whoareyou': [os.path.join(welcome_folder, file) for file in ['whoareyou_01.wav', 'whoareyou_02.wav', 'whoareyou_03.wav', 'whoareyou_04.wav']]
    }
    mixer.init()
    mixer.music.load(random.choice(messages[message_type]))
    mixer.music.play()


def main():
    current = datetime.datetime.now()
    vc = cv2.VideoCapture(0)
    if vc.isOpened():
        is_capturing = vc.read()[0]
    else:
        is_capturing = False

    user_counter = 0
    while is_capturing:
        is_capturing, frame = vc.read()
        if not is_capturing:
            break  # the camera stopped delivering frames

        # Shrink the frame to a quarter size to speed up recognition
        small_frame = cv2.resize(frame, (0, 0), fx=0.25, fy=0.25)
        # OpenCV delivers BGR, face_recognition expects RGB
        rgb_small_frame = cv2.cvtColor(small_frame, cv2.COLOR_BGR2RGB)

        face_locations = face_recognition.face_locations(rgb_small_frame)
        face_encodings = face_recognition.face_encodings(rgb_small_frame, face_locations)

        if len(face_encodings) > 0:
            user_counter += 1
            if user_counter > 6:
                welcome('hello')
                print('hello')
                time.sleep(2)

            user = face_detection(face_encodings)
            timing = datetime.datetime.now() - current
            # Make a decision at most once every few seconds
            if timing.seconds > 4:
                if user in ['Emp3']:
                    welcome('unwelcome')
                    print('go home, ' + user)
                    lock()
                elif user in known_faces:
                    welcome('welcome')
                    print('welcome, ' + user)
                    unlock()
                else:
                    welcome('whoareyou')
                    print('who is there?')
                current = datetime.datetime.now()
                user_counter = 0

        time.sleep(0.5)

    vc.release()


if __name__ == '__main__':
    main()
```

In the script, the employee names (Emp1, Emp2) correspond to the files with their photos in the faces directory; you need to add your own. Resolution does not matter much, even 640x480 will do. There are two categories of employees: the welcome ones, who are let in, and those who are sent home right away.
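Instead of editing the list by hand, the names can be derived from the photo files themselves. This is a sketch under the assumption that every `.jpg` in the `faces` directory is named after one employee, as in the script above; the function name `names_from_folder` is hypothetical.

```python
from pathlib import Path


def names_from_folder(folder="faces"):
    """Return sorted employee names taken from the *.jpg file names in folder."""
    return sorted(path.stem for path in Path(folder).glob("*.jpg"))
```

With this helper, `known_faces = names_from_folder(faces_folder)` would replace the hard-coded list, and adding a colleague comes down to dropping one more photo into the directory.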

For the camera we used the simplest Logitech webcam. Instead of a laptop it is quite possible to use a microcontroller, but you will need to figure out how to get Python and the chosen libraries running on it.

Result

Salespeople from neighboring departments on our floor got to try the technology hands-on. The surface-mounted lock unit looks especially cool, and the sound it makes when opening the door! Believe it or not, technology needs promoting inside the company just as much as outside.

We were asked a couple of times why we did this outside working hours.

The answer is that we really wanted to entertain our colleagues and let them get a feel for the technologies we use on a working device. It is a prototype in both hardware and software at once, and a small warm-up before larger projects. The prototype itself is unlikely to be used anywhere, but it serves an educational purpose and is good for morale.

Well, we have seen how fast industrial prototypes can be made. We usually do quick (2-3 weeks) pilot projects for production. By the way, if you would like a quick estimate for something in automation and a pilot to go with it, that is exactly what our team specializes in. Write to tmishin@technoserv.com if so.

It felt like a good hackathon.

And now we have a toy.

A colleague came running in yesterday and showed us a video of a drone with a flamethrower. It seems we have a couple of ideas...

I am telling you all this also because just 3-4 years ago writing industrial detectors for video surveillance was very expensive and slow: controlling the size of material fractions on a conveyor belt, stopping the line when foreign objects get onto it, shutting equipment down when someone is working in a hazardous area, and so on. Today it is done very, very quickly, and much more cheaply. Not as fast as in the case of the door, but a 2-week pilot is a very realistic estimate.