The evolution of architecture: from in-house services to HandlerSocket

Today we will talk about how Badoo changed its approach to designing high-load “key-value” services. You will learn how we built such services several years ago (using a database as the repository and a specialized daemon as the interface to the data), what difficulties we ran into, and what architecture we arrived at after solving the problems that appeared.

Modern Internet projects actively use internal services that provide access to values by key. These can be either ready-made solutions or in-house developments. Since 2006, Badoo specialists have created a number of such services, including:

- a service that reports where a user's data is located, given the user identifier;

- a service that stores the number of times a user profile has been accessed;

- a service that stores lists of user interests;

- several "geoservices" to determine the location of the user.

Despite their diversity, we applied a single design approach to all of them, according to which a service should consist of the following components:

- A repository database that stores the reference version of data.

- A fast C or C++ daemon that processes requests for data and is updated along with the repository database.

- PHP classes that work with the daemon and the repository database.

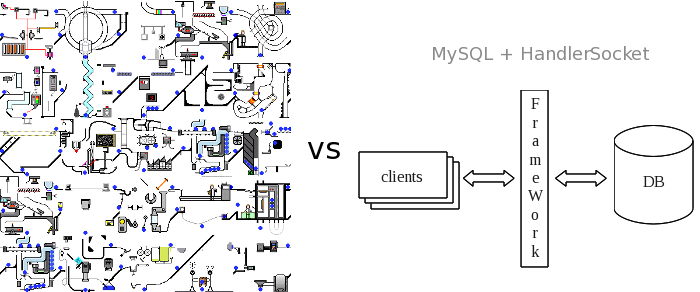

It was important for us that any of the services could handle a large number of simultaneous requests, so a solution based on MySQL alone was not suitable. Hence the additional component, a fast daemon. We could not replace it with memcached because we needed specific indexes over the data.

At the end of 2010, the HandlerSocket plugin for MySQL appeared. Written by a Japanese developer, it provides a NoSQL interface to data stored in MySQL. As early as spring 2011, Badoo engineers turned their attention to the new technology, hoping it would simplify the development and support of the company's “key-value” services.

“The first victim”

Badoo, a social network for meeting new people, has a huge number of registered users who receive various email notifications. Each user can choose which types of notifications to receive, so we need to store these mail settings somewhere and provide access to them. Over 99% of requests for this data are reads. The main readers are the scripts that generate and send email; based on these settings they decide whether a given type of message may be sent to a given user. At first, only the database was used to store the data, but it stopped coping with the ever-growing number of read requests. To take this load off the database, a special daemon was created: EmailNotification.

“Old school” service implementation

The key component of the service was a C daemon that kept all the settings in memory and provided read and write access to them via a very simple protocol over TCP. In addition, the daemon collected request statistics, which we used to build graphs.

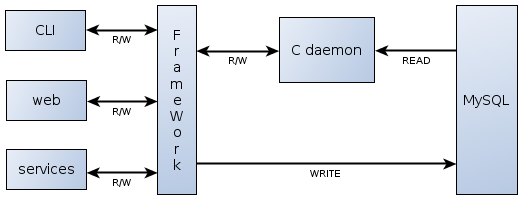

At first, the service architecture was quite simple and looked like this:

Settings were stored permanently in the database on one DB server, and the C daemon ran on another. At start-up, the daemon selected all the data from the database and built an index over it (using Judy arrays). Initialization took about 15 minutes, but since it was needed only a few times during the entire life of the service, this was not a significant drawback. During operation, clients (CLI scripts, the web and other services) accessed the daemon through a special API, asking, for example, whether a given user may be sent a given letter; the daemon, using its built-in logic, looked up the setting in memory and returned an answer. “Writer” clients instructed the daemon to change certain settings for a specific user.
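The read and write paths described above can be sketched in a few lines of Python. This is only an illustration: the real daemon was written in C and used Judy arrays, while here a plain dictionary stands in for the index. The class name, the method names and the rule that a notification is allowed unless explicitly disabled are all assumptions made for the example.

```python
from collections import defaultdict


class NotificationSettings:
    """Toy in-memory stand-in for the daemon's index (hypothetical names)."""

    def __init__(self, rows):
        # rows: iterable of (user_id, notification_type, enabled),
        # loaded once from the database at start-up.
        self._disabled = defaultdict(set)
        for user_id, notification_type, enabled in rows:
            self.set(user_id, notification_type, enabled)

    def set(self, user_id, notification_type, enabled):
        """Write path: a writer client changes one setting for one user."""
        if enabled:
            self._disabled[user_id].discard(notification_type)
        else:
            self._disabled[user_id].add(notification_type)

    def can_send(self, user_id, notification_type):
        """Read path: may this user be sent this type of letter?

        Assumption: anything not explicitly disabled is allowed.
        """
        return notification_type not in self._disabled.get(user_id, ())
```

A mail-sending script would call something like `store.can_send(42, "new_message")` before generating a letter, and a settings page would call `store.set(42, "new_message", False)`.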

Writing the data to MySQL was entirely the responsibility of the EmailNotification API. This meant the data could potentially go out of sync, for example, when a write reached the database but not the daemon, or vice versa. Nevertheless, the service worked great. Then, in 2007, a “little trouble” befell Badoo: a second data center appeared, geographically remote from the first and designed to serve users in the New World. It immediately became clear that simply duplicating the architecture at the new site would not be enough. Since letters to the same user can be sent from both sites, both services must operate on the same data.

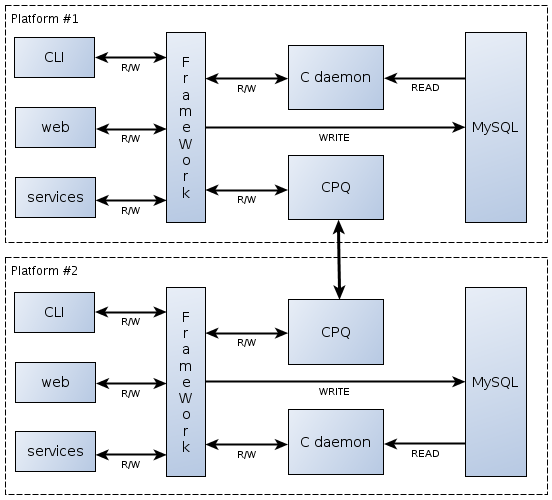

Fortunately, especially for such cases, the company has a system of CPQ events (CPQ - Cross Platform Queue, cross-platform queues. - Approx. Author), which allows you to quickly, and most importantly, guarantee and in a given sequence to transfer information about events that have occurred between sites. As a result, on two sites, the service architecture took the following form:

Now every write request went not only to the database and the C daemon of the local site, but also to CPQ. CPQ passed the request to the corresponding queue at the other site, which in turn replayed the write via the EmailNotification API at that site.

The system became more complicated, but it continued to work stably for several years. Everything would have been fine if not for data discrepancies between the sites: the databases at the two sites held a different number of settings. Although the difference was less than 0.1%, the feeling of “cleanliness and security” was gone. Moreover, we found that the discrepancy existed not only between the sites' databases, but also between the database and the C daemon within a single site. We had to think about how to make the service more reliable.
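One way such a discrepancy can arise within a single site is a write that reaches the database but not the daemon. The sketch below simulates that case; `FlakyStore` and `dual_write` are hypothetical names invented for the illustration and do not reproduce the actual EmailNotification API.

```python
class FlakyStore:
    """Key-value store whose next write can be made to fail (illustration only)."""

    def __init__(self):
        self.data = {}
        self.fail_next = False

    def write(self, key, value):
        if self.fail_next:
            self.fail_next = False
            raise ConnectionError("write failed")
        self.data[key] = value


def dual_write(db, daemon, key, value):
    """Non-atomic write: the database first, then the daemon."""
    db.write(key, value)
    daemon.write(key, value)  # if this raises, db and daemon now disagree


db, daemon = FlakyStore(), FlakyStore()
key = (42, "new_message")  # (user_id, notification_type), hypothetical
dual_write(db, daemon, key, True)

daemon.fail_next = True
try:
    dual_write(db, daemon, key, False)
except ConnectionError:
    pass  # the caller sees an error, but the database has already changed

# Keys on which the two copies of the data differ.
diverged = {k for k in db.data if db.data[k] != daemon.data.get(k)}
```

After the failed second call the database says the notification is disabled while the daemon still answers that it is enabled, and nothing in this scheme repairs the difference automatically.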

New approach

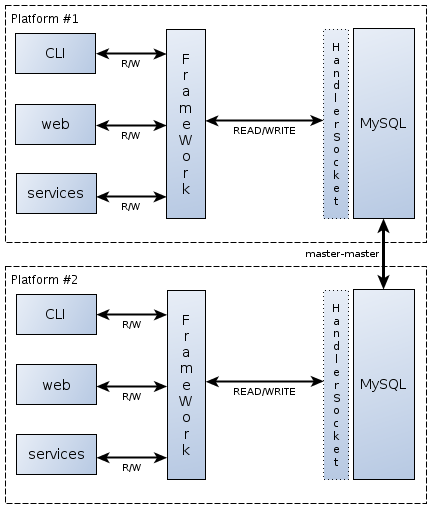

Initially, the basic requirements for the EmailNotification service were two: the first was the high speed of processing read requests, which the C-daemon did very well; the second is the identity of the data on both sites with which there were problems. Instead of struggling with synchronization, we decided to completely redo the architecture of the service, following the path of simplifying it:

First of all, we added the HandlerSocket plugin to MySQL and taught our API to work with the database through it. Thanks to this, we were able to drop the C daemon. Then, simplifying the API, we removed CPQ from the scheme, replacing it with well-established “master-master” replication between the sites. As a result, we got a very simple and reliable scheme with the following advantages:

- Replication is transparent: there is no longer any code that works with the internal CPQ service. At the same time, the delay in transferring updates between sites dropped from a few seconds to fractions of a second.

- Atomic writes (finally!). If an EmailNotification API write request to HandlerSocket completes successfully, the job is done: the write will be replicated exactly to the other site, and we do not need to inform any other components about it.
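To give a feel for what talking to HandlerSocket involves, here is a minimal Python sketch of its text protocol as described in the plugin's protocol documentation: tab-separated tokens, one request per line, with bytes 0x00 to 0x0f escaped and NULL sent as a single NUL byte. It is not Badoo's PHP API. The helper names, and the database, table and column names used with them, are hypothetical.

```python
import socket


def encode_token(value):
    """Encode one field: None -> NUL byte, bytes 0x00-0x0f -> 0x01, byte + 0x40."""
    if value is None:
        return b"\x00"
    out = bytearray()
    for byte in str(value).encode("utf-8"):
        if byte <= 0x0F:
            out += bytes((0x01, byte + 0x40))
        else:
            out.append(byte)
    return bytes(out)


def decode_token(token):
    """Reverse of encode_token."""
    if token == b"\x00":
        return None
    out = bytearray()
    it = iter(token)
    for byte in it:
        out.append(next(it) - 0x40 if byte == 0x01 else byte)
    return out.decode("utf-8")


def build_request(*tokens):
    return b"\t".join(encode_token(t) for t in tokens) + b"\n"


def open_index(index_id, db, table, index, columns):
    """'P' request: bind index_id to an index and a list of columns."""
    return build_request("P", index_id, db, table, index, ",".join(columns))


def find_eq(index_id, key_values, limit=1, offset=0):
    """Find rows whose index key equals key_values."""
    return build_request(index_id, "=", len(key_values), *key_values, limit, offset)


def insert(index_id, values):
    """'+' request: insert one row (needs the read/write port)."""
    return build_request(index_id, "+", len(values), *values)


def parse_response(line):
    """Return (error_code, column_count, fields); error_code 0 means success."""
    tokens = line.rstrip(b"\n").split(b"\t")
    return int(tokens[0]), int(tokens[1]), [decode_token(t) for t in tokens[2:]]


def query(host, port, requests):
    """Send all requests over one connection and read one response line per request."""
    with socket.create_connection((host, port)) as conn, conn.makefile("rb") as reader:
        conn.sendall(b"".join(requests))
        return [parse_response(reader.readline()) for _ in requests]
```

Assuming a hypothetical table `email_settings` in a database `app`, and a server configured with the ports from the plugin's sample configuration (9998 for reads, 9999 for reads and writes), a lookup could be `query("127.0.0.1", 9998, [open_index(0, "app", "email_settings", "PRIMARY", ["user_id", "type", "enabled"]), find_eq(0, [42, "new_message"])])`. Connecting, opening an index and selecting data through it are three separate steps.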

Did we have problems switching to the new scheme? No serious ones. The HandlerSocket plugin already supports AUTO_INCREMENT, composite indexes work, and so do ENUMs; we only had to give up the CURRENT_TIMESTAMP default value for one of the timestamp fields.

As you know, the advantage of HandlerSocket lies not in its speed, which is acceptable rather than impressive, but in its ability to work stably under a large number of requests per unit of time. Given that the service currently handles only 2 to 2.5 thousand requests per second at one site, we have a large safety margin.

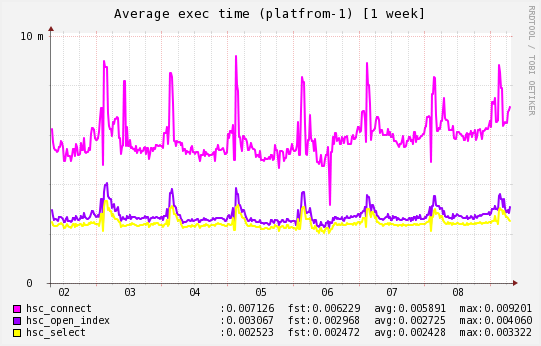

But for those who are especially interested in the speed of the HandlerSocket plug-in, here is a graph with the average execution time of three commands: connecting to the HandlerSocket, opening the index and selecting data on it (values on the Y axis in milliseconds):

Final word

Over the past year, Badoo has increasingly used HandlerSocket as a “key-value” store with persistent data storage. This lets us write simpler and more understandable code, frees C programmers from trivial tasks and significantly simplifies support. So far, everything suggests that the movement in this direction will continue.

But do not think that the use of HandlerSocket at Badoo is limited to the simplest tasks. For example, we have extensive experience using it for write-heavy workloads, where a number of nuances appear under really heavy load. If you are interested in the details, comment and ask, and we will certainly continue this topic in new articles.

Thanks!