Using OpenGL Shaders in QML

This post participates in the competition “Smart Phones for Smart Posts”.

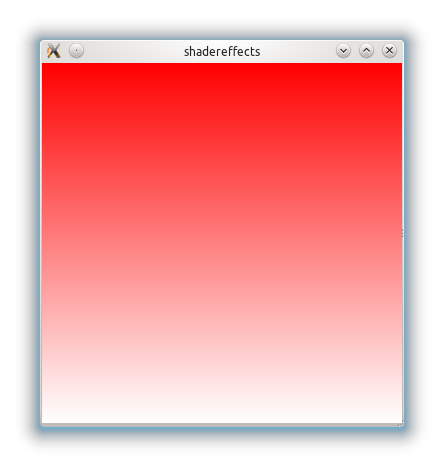

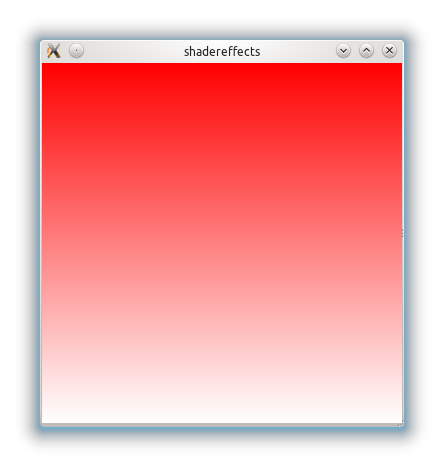

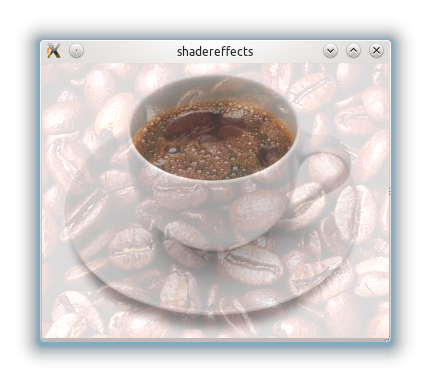

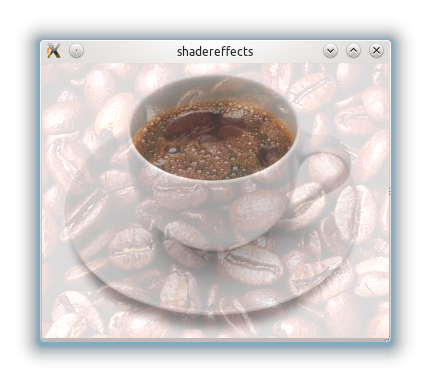

This post covers the use of OpenGL shaders together with elements of the declarative language QML. I think the topic is relevant because the upcoming QML 2.0 is planned to use OpenGL widely as the backend for rendering user interface elements. Writing shaders is not an easy subject, so the main goal of this post is that after reading it you can immediately try something interesting yourself and experiment, getting, for example, results like these:

At the end I will list useful links for further, deeper study of the topic, in case it interests you and you want to implement even more interesting shaders together with QML elements. Shaders can be used through various QML elements: `ShaderEffectItem`, many Qt3D classes that also use OpenGL, and so on. In this post I will show several examples that use the `ShaderEffectItem` element together with `ShaderEffectSource`. The outline of the article is as follows:

- Installing the necessary plugins
- A bit of shader theory
- Associating QML Elements with Shaders
- Example 1. Implementing a gradient using shaders
- Example 2.1 The simplest animation
- Example 2.2. Creating a menu with animation
- Example 3. Select some texture area depending on the mouse pointer
- Example 4. Mixing two images (textures)
- Conclusion
- Useful links

Let's start by installing the necessary elements.

Installing the necessary plugins

First, check that all the OpenGL components are installed. Then get the plugin:

1) Follow the link to find the address of the git repository that hosts shadersplugin. If nothing has changed, it is `git://gitorious.org/qt-labs/qml1-shadersplugin.git`

2) Run `git clone git://gitorious.org/qt-labs/qml1-shadersplugin.git`

3) Go to the cloned folder and run `make install` (this is how I do it under Linux; check how such plugins are installed on your OS).

If the OpenGL components are missing, the installation will run into problems. If you find this hard to check, just create an empty Qt application and add the line `QT += declarative opengl` to the project file (*.pro). If everything compiles, there should be no problems during installation.

A bit of shader theory

If you are already familiar with shaders, you can skip this short section; it gives only a brief overview. Why do we need shaders? Put simply, shaders let the programmer "intervene" in the rendering of primitives, that is, change stages of the pipeline (discussed below) by writing actual code. Shaders are written in GLSL (OpenGL Shading Language), created by the OpenGL committee. Its syntax is based on the C programming language. GLSL was designed specifically to give programmers control over the programmable points of the OpenGL pipeline, which is the sequence of stages that OpenGL commands pass through. One variant of the pipeline is shown in the figure below:

The vertices of an object are passed into the pipeline. First comes the coordinate transformation (Vertex Transformation): the world, view and projection matrices are applied to each incoming vertex, so the spatial coordinates (x, y, z) are transformed using 4 x 4 matrices. The main task is to obtain two-dimensional screen coordinates from three-dimensional world coordinates. This is the job of the vertex shader. Next comes primitive assembly (Assembly): the vertices are grouped into triangles and fed into rasterization (Rasterization). The rasterizer splits each triangle into fragments (pixels), for which texture coordinates and color are interpolated. Then the fragment shader runs. It determines the color of each screen pixel inside the area bounded by the outline of the surface projected onto the screen. After all these stages, the resulting fragment is written to the frame buffer, which is then displayed on the screen (Pixel updates).
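To make the vertex transformation stage more concrete, here is a minimal CPU-side C++ sketch of what happens to a single vertex: it is multiplied by a 4 x 4 matrix, divided by w, and mapped to pixel coordinates. The names `Vec4`, `Mat4`, `Pixel`, `transform` and `toScreen` are invented for this illustration, and the viewport mapping assumes a screen whose y axis points down; real OpenGL does this work on the GPU.

```cpp
#include <array>

// Hypothetical helper types for this sketch (not part of Qt or OpenGL).
struct Vec4 { float x, y, z, w; };
using Mat4 = std::array<std::array<float, 4>, 4>; // row-major: m[row][col]

// Multiply a 4 x 4 matrix by a column vector, as the vertex stage does
// with the combined world * view * projection matrix.
Vec4 transform(const Mat4& m, const Vec4& v) {
    return {
        m[0][0] * v.x + m[0][1] * v.y + m[0][2] * v.z + m[0][3] * v.w,
        m[1][0] * v.x + m[1][1] * v.y + m[1][2] * v.z + m[1][3] * v.w,
        m[2][0] * v.x + m[2][1] * v.y + m[2][2] * v.z + m[2][3] * v.w,
        m[3][0] * v.x + m[3][1] * v.y + m[3][2] * v.z + m[3][3] * v.w,
    };
}

struct Pixel { float x, y; };

// Perspective divide followed by a viewport mapping: normalized
// coordinates in [-1, 1] become pixel coordinates in
// [0, width] x [0, height]. Assumes clip.w != 0 and a y axis pointing down.
Pixel toScreen(const Vec4& clip, float width, float height) {
    const float ndcX = clip.x / clip.w;
    const float ndcY = clip.y / clip.w;
    return { (ndcX + 1.0f) * 0.5f * width,
             (1.0f - ndcY) * 0.5f * height };
}
```

With an identity matrix and a 360 by 360 viewport, the vertex (0, 0, 0, 1) lands in the middle of the screen, which is a quick way to check the mapping.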

As you have probably gathered, there are two types of shaders: vertex and fragment (also called pixel) shaders. The vertex shader runs first and processes each vertex, while the fragment shader runs for each pixel, which carries a set of attributes such as color (`.r`, `.g`, `.b`, `.a`), depth, and texture coordinates (`.x`, `.y`, `.z`, `.w` or `.s`, `.t`, `.p`, `.q`). The entry point of a shader is the function `void main()`. If a program uses both types of shaders, there are two `main` entry points. Global variables are initialized before `main` is entered. GLSL defines special kinds of variables:

- `uniform`: links the shader to external data (in the case of QML, these are the properties of elements). Variables of this kind are read-only.
- `varying`: links the vertex shader to the fragment shader, that is, passes data from the vertex shader to the fragment shader. They can be written in the vertex shader and are read-only in the fragment shader.
- `attribute`: global-scope variables that carry per-vertex input data into the vertex shader.

A few other elements of GLSL appear in the examples below:

- `sampler2D`: a GLSL type that represents a texture (there are also `sampler1D`, `sampler3D`, `samplerCube`, `sampler1DShadow`, `sampler2DShadow`).
- `vec4 texture2D(sampler2D s, vec2 coord)`: a function that reads a pixel from the texture `s` at the texture coordinates `coord`.
- `gl_FragColor`: a vector to which the final color of the pixel is written; it is available only in the fragment shader.

GLSL also defines many built-in functions aimed at computation, in particular for working with vectors and matrices. Some of them are described as the examples below are analyzed.
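As a rough mental model of `texture2D`, the following CPU-side C++ sketch looks up a texel by normalized coordinates. The `Texture` struct and the `sample` function are invented for this illustration. It assumes nearest-neighbour lookup with clamping at the edges and ignores filtering, wrapping modes and the orientation of the t axis, which real OpenGL handles according to the texture settings.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

struct Color { float r, g, b, a; };

// Hypothetical CPU-side stand-in for a sampler2D: a row-major grid of texels.
struct Texture {
    int width;
    int height;
    std::vector<Color> texels; // size must be width * height
};

// Nearest-neighbour lookup by normalized coordinates s, t in [0, 1].
// Coordinates outside that range are clamped to the edge texel.
Color sample(const Texture& tex, float s, float t) {
    const int x = std::max(0, std::min(static_cast<int>(s * tex.width), tex.width - 1));
    const int y = std::max(0, std::min(static_cast<int>(t * tex.height), tex.height - 1));
    return tex.texels[static_cast<std::size_t>(y) * tex.width + x];
}
```

The point to take away is that texture coordinates run from 0 to 1 regardless of the image size, which is why the examples multiply them by the image width and height when pixel positions are needed.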

Associating QML Elements with Shaders

For shaders to work with QML elements, the `QDeclarativeView` object must render through OpenGL. This is done by setting a `QGLWidget` as its viewport:

```cpp
QmlApplicationViewer viewer;
...
QGLWidget* glWidget = new QGLWidget(format);
...
viewer.setViewport(glWidget);
...
```

This piece of code is taken from the `main` function of an application generated in QtCreator by the Qt Quick application wizard; the `QmlApplicationViewer` class inherits from `QDeclarativeView`. After each example I will provide a link to the full source code.

As mentioned above, OpenGL shaders are demonstrated here with the `ShaderEffectItem` element, which lets you change how various QML elements are displayed on screen using OpenGL mechanisms. It is available in the module `Qt.labs.shaders 1.0` (it is still under development), but you can already try it. The code of the fragment and vertex shaders is written in the `fragmentShader` and `vertexShader` properties (of type string), respectively.

`ShaderEffectSource` is needed to specify the QML component that will be available in the shader. We will mainly use its `sourceItem` and `hideSource` properties. The first points to the specific QML element (by its identifier) that the shaders will act on, and `hideSource` says that the original element is hidden when the shader effect is applied.

One or several `ShaderEffectItem` elements may be used as the source(s) of another `ShaderEffectItem`, but a `ShaderEffectItem` should not be declared as a child of the element defined in `source`, because this will most likely lead to a redraw loop.

In QML you can define your own properties on an element (using `property`), and they are also available as variables in shader programs. This happens automatically if the names match and the shader variable is declared with the `uniform` qualifier mentioned earlier; this is the so-called binding. It will become clear once we get to the examples.

Viewed as abstractly as possible, the graphical representation of a QML element is passed as a texture to the vertex and fragment shaders, and the final result is then displayed on the screen. Accordingly, shader programs can change how this texture is rendered (which brings us back to why shaders are needed at all).

Next we will look at several examples with some explanations. To keep the material simple, all of them use fragment shaders only, that is, we will work with pixels.

Example 1. Implementing a gradient using shaders

Let's start with a very simple example. QML has the frequently used `Rectangle` element, which has a `gradient` property. The example below shows how to get a gradient with the shader mechanism instead. We create a `Rectangle` of 360 by 360 and add a `ShaderEffectItem` as its child, with the `anchors.fill` property set to `parent`. This says that the shader "covers" the entire parent element. The code is shown below:

```qml
import QtQuick 1.0
import Qt.labs.shaders 1.0

Rectangle {
    width: 360
    height: 360

    // The shader effect covers the whole rectangle.
    ShaderEffectItem {
        anchors.fill: parent
        fragmentShader: "
            varying highp vec2 qt_TexCoord0;
            void main(void)
            {
                lowp vec4 c0 = vec4( 1.0, 1.0, 1.0, 1.0 );
                lowp vec4 c1 = vec4( 1.0, 0.0, 0.0, 1.0 );
                gl_FragColor = mix( c0, c1, qt_TexCoord0.y );
            }
        "
    }
}
```

Now look at the `fragmentShader` property: it contains the text of the fragment shader program. First we declare the variable `varying highp vec2 qt_TexCoord0`, which comes from the vertex shader; we have not written one, but a default implementation exists and supplies the data. As far as I understand, `qt_TexCoord0` holds the texture coordinates of the scene as a whole (I would be glad if someone corrected me on the proper computer graphics term). Now to the function `main`. It defines two vectors: `c0` contains white (colors are given as rgba) and `c1` contains red. The output vector `gl_FragColor` is then assigned the value computed for each pixel by `mix`, a function for linear interpolation between two values: `mix(vec4 x, vec4 y, float a)` is given by the formula `x * (1.0 - a) + y * a`. The varying parameter `a` here is the `.y` component of the texture vector, that is, the coordinate along the y axis. As a result, the rectangle is filled with a gradient from white to red.
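The `mix` formula can be checked on the CPU with a few lines of C++. This is only an illustrative sketch: the `Vec4` alias and the function `gradientAt` are names made up here, and the code simply repeats the arithmetic of the fragment shader from Example 1 for a single value of the y texture coordinate.

```cpp
#include <array>
#include <cstddef>

using Vec4 = std::array<float, 4>; // r, g, b, a

// CPU-side equivalent of GLSL mix(x, y, a): x * (1 - a) + y * a per component.
Vec4 mix(const Vec4& x, const Vec4& y, float a) {
    Vec4 out{};
    for (std::size_t i = 0; i < out.size(); ++i) {
        out[i] = x[i] * (1.0f - a) + y[i] * a;
    }
    return out;
}

// Color of the gradient from Example 1 at texture coordinate y in [0, 1].
Vec4 gradientAt(float y) {
    const Vec4 c0{1.0f, 1.0f, 1.0f, 1.0f}; // white
    const Vec4 c1{1.0f, 0.0f, 0.0f, 1.0f}; // red
    return mix(c0, c1, y);
}
```

At y = 0 the function returns pure white, at y = 1 pure red, and halfway it returns (1.0, 0.5, 0.5, 1.0), a light red, which is exactly the interpolation the shader performs for every pixel.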

Since `qt_TexCoord0.y` is the coordinate along the y axis, the gradient runs from top to bottom. If we want it to run from left to right instead, we replace the line

```glsl
gl_FragColor = mix( c0, c1, qt_TexCoord0.y );
```

with

```glsl
gl_FragColor = mix( c0, c1, qt_TexCoord0.x );
```

where `.x` is the coordinate along the x axis. And if we just want to paint everything red, without any gradient, the code looks like this (here absolutely all pixels are painted red):

```glsl
void main(void)
{
    gl_FragColor = vec4 ( 1.0, 0.0, 0.0, 1.0 );
}
```

Instead of the vector components `x` and `y`, you can use the texture components `s` and `t`, respectively. The result will be the same.

The source code is available here.

Let's try to make some kind of animation using the shader mechanism.

Example 2.1 The simplest animation

We'll apply shaders to an image of a planet. The effect is admittedly a bit silly, but still: the planet will appear to beat like a heart. First, using `ShaderEffectSource`, we define a property on the element (here the `ShaderEffectItem`), for example under the name `source`. In the shader itself we declare `uniform lowp sampler2D source;`, which links our texture (the planet image) to the shader code and lets us change how it is rendered. To create any animation, some data must change over time. For this I use the QML `PropertyAnimation` element. And which data should change? Here I want to show how, instead of the data of one pixel, we can substitute the data of another and so get an animation effect. That is, for a pixel with texture coordinates x, y (and its color data) we substitute a neighboring pixel (with its own color data), chosen by an offset computed with some function; let it be `sin`. It is therefore convenient for the changing value to be an angle from 0 to 360 degrees. Accordingly, in the code below `PropertyAnimation` changes the `angle` property from `0.0` to `360.0`.

```qml
import QtQuick 1.0
import Qt.labs.shaders 1.0

Item {
    width: img.width
    height: img.height

    Image {
        id: img
        source: "images/space.jpg"
    }

    ShaderEffectItem {
        // The image becomes the texture "source" inside the shader.
        property variant source: ShaderEffectSource {
            sourceItem: img;
            hideSource: true
        }
        anchors.fill: img

        fragmentShader: "
            varying highp vec2 qt_TexCoord0;
            uniform lowp sampler2D source;
            uniform highp float angle;
            void main() {
                highp float wave = 0.01;
                highp float wave_x = qt_TexCoord0.x + wave * sin( radians( angle + qt_TexCoord0.x * 360.0 ) );
                highp float wave_y = qt_TexCoord0.y + wave * sin( radians( angle + qt_TexCoord0.y * 360.0 ) );
                highp vec4 texpixel = texture2D( source, vec2( wave_x, wave_y ) );
                gl_FragColor = texpixel;
            }"

        // Bound to the uniform "angle" and animated from 0 to 360 degrees.
        property real angle : 0.0
        PropertyAnimation on angle {
            to: 360.0
            duration: 800
            loops: Animation.Infinite
        }
    }
}
```

The amplitude of the oscillation is set by `highp float wave = 0.01`. I don't think the need for the `radians` function requires explanation. If we simply substituted the value of `angle`, the picture would just shift from side to side, but we want something more spectacular: a "heartbeat". Texture coordinates range from 0 to 1, so multiplying them by 360 inside `sin` gives each pixel its own phase. `wave_x` and `wave_y` receive the coordinates of a pixel from the neighborhood, taken along the x axis and the y axis respectively. With `texture2D( source, vec2( wave_x, wave_y ) );` we read the values of this new pixel and write them to the already familiar `gl_FragColor`. Here is a video of the result of applying this fragment shader to the picture of the planet:

Source code is available here.
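To see which neighboring pixel the shader from Example 2.1 actually picks, the offset can be reproduced on the CPU. The C++ sketch below is only an illustration: `radians` mimics the GLSL built-in of the same name, and `waveCoord` is a name invented here for the expression used for `wave_x` and `wave_y`.

```cpp
#include <cmath>

// Degrees to radians, like the GLSL built-in radians().
float radians(float degrees) {
    return degrees * 3.14159265358979f / 180.0f;
}

// Coordinate that the shader samples instead of `coord`.
// `coord` is a texture coordinate in [0, 1], `angleDeg` is the animated
// angle property in degrees, `wave` is the amplitude (0.01 in Example 2.1).
float waveCoord(float coord, float angleDeg, float wave) {
    return coord + wave * std::sin(radians(angleDeg + coord * 360.0f));
}
```

With `angle` at 0 and `wave` at 0.01, a pixel at coordinate 0.25 reads from about 0.26 and a pixel at 0.75 reads from about 0.74, while pixels at 0 and 0.5 are practically unchanged. As `angle` grows, this pattern slides across the image, which produces the pulsing look.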

Example 2.2. Creating a menu with animation

It looks rather pretty, so I decided to apply the same effect to menu buttons, so that hovering over a button produces an effect like the planet's. The menu itself is built as in the example from this guide. Each button is described in `Button.qml`. I added some shader code to its description. The fragment shader is almost the same as in the example above, except that I slightly increased the oscillation amplitude, `wave = 0.02`. The `Button.qml` file:

```qml
import QtQuick 1.0
import Qt.labs.shaders 1.0

Item {
    width: but.width
    height: but.height
    property alias text: textItem.text

    Rectangle {
        id: but
        width: 130;
        height: 40
        border.width: 1
        radius: 5
        smooth: true

        gradient: Gradient {
            GradientStop { position: 0.0; color: "darkGray" }
            GradientStop { position: 0.5; color: "black" }
            GradientStop { position: 1.0; color: "darkGray" }
        }

        Text {
            id: textItem
            anchors.centerIn: parent
            font.pointSize: 20
            color: "white"
        }

        MouseArea {
            property bool ent: false
            id: mousearea
            anchors.fill: parent
            onEntered: {
                ent = true
            }
            onExited: {
                ent = false
                // Restart the effect from zero on the next hover.
                effect.angle = 0.0
            }
            hoverEnabled: true
        }
    }

    ShaderEffectItem {
        id: effect
        property variant source: ShaderEffectSource {
            sourceItem: but;
            hideSource: true
        }
        anchors.fill: but

        property real angle : 0.0
        PropertyAnimation on angle {
            id: prop1
            to: 360.0
            duration: 800
            loops: Animation.Infinite
            // Animate only while the pointer is over the button.
            running: mousearea.ent
        }

        fragmentShader: "
            varying highp vec2 qt_TexCoord0;
            uniform lowp sampler2D source;
            uniform highp float angle;
            void main() {
                highp float wave = 0.02;
                highp float wave_x = qt_TexCoord0.x + wave * sin( radians( angle + qt_TexCoord0.x * 360.0 ) );
                highp float wave_y = qt_TexCoord0.y + wave * sin( radians( angle + qt_TexCoord0.y * 360.0 ) );
                highp vec4 texpixel = texture2D( source, vec2( wave_x, wave_y ) );
                gl_FragColor = texpixel;
            }"
    }
}
```

And the `menu.qml` file itself:

```qml
import QtQuick 1.0
import Qt.labs.shaders 1.0

Item {
    width: 150
    height: 190

    Column {
        anchors.horizontalCenter: parent.horizontalCenter
        Button { text: "Apple" }
        Button { text: "Red" }
        Button { text: "Green" }
        Button { text: "Blue" }
    }
}
```

Note that the `onExited` handler must reset the `angle` property of the `effect` element to 0.0. Otherwise the angle used to compute the neighboring pixel will start not from 0 but from its last value, and the result will not be quite what we expect. The result is this effect:

Source code is available here.

Example 3. Select some texture area depending on the mouse pointer

Next, an example of changing the color of the pixels in a certain part of the image. The area is determined by the position of the mouse pointer, which is the center of a circle with a radius of 50 pixels. The pixels inside this circle will have colors different from the original.

First, three properties must be defined on the `ShaderEffectItem` element: `property real xPos: 65.0`, `property real yPos: 65.0` and `property real radius: 50`. They hold the mouse coordinates passed to the shader code and the radius of the circle, respectively. To track mouse movement, a `MouseArea` element is defined with a handler for the `onPositionChanged` event. The source code is given below, followed by further explanation:

```qml
import QtQuick 1.0
import Qt.labs.shaders 1.0

Rectangle {
    width: img.width
    height: img.height

    Image {
        id: img
        source: "images/nature.jpg"
    }

    ShaderEffectItem {
        id: effect
        anchors.fill: parent

        MouseArea {
            id: coords
            anchors.fill: parent
            onPositionChanged: {
                effect.xPos = mouse.x
                // Flip y so that it matches the pixel coordinates computed in the shader.
                effect.yPos = coords.height - mouse.y
            }
        }

        property real xPos: 65.0
        property real yPos: 65.0
        property real radius: 50
        property int widthImage: img.width
        property int heightImage: img.height

        property variant source: ShaderEffectSource {
            sourceItem: img;
            hideSource: true
        }

        fragmentShader:
            "varying highp vec2 qt_TexCoord0;
            uniform highp float xPos;
            uniform highp float yPos;
            uniform highp float radius;
            uniform highp int widthImage;
            uniform highp int heightImage;
            uniform sampler2D source;
            void main(void)
            {
                highp vec2 pixcoords = qt_TexCoord0.st * vec2( widthImage, heightImage );
                lowp vec4 texColor = texture2D(source, qt_TexCoord0.st);
                lowp float gray = dot( texColor, vec4( 0.6, 0.5, 0.1, 0.0 ) );
                if ( ( pow( ( xPos - pixcoords.x ), 2.0 ) + pow( ( yPos - pixcoords.y ), 2.0 ) )
                     < pow( radius, 2.0 ) )
                {
                    gl_FragColor = vec4( gray, gray, gray, texColor.a) ;
                }
                else
                {
                    gl_FragColor = texture2D( source, qt_TexCoord0 );
                }
            }"
    }
}
```

You can see that the function `pow` is used for squaring (it works like the function of the same name from the C/C++ math library) to determine whether the point of the current pixel, with coordinates (`pixcoords.x`; `pixcoords.y`), falls inside the circle centered at `xPos`, `yPos` with radius `radius`. If the pixel falls inside the circle, the output is the pixel's color multiplied, as a scalar product, by a vector that defines the gray color (the function `dot` computes the scalar product). If not, the pixel is left unchanged. Once again you can see how the properties of the QML element are linked to the variables of the shader program: they have the same names and equivalent types, with `real` being equivalent to `highp float`.

The result is as follows:

It is worth noting that here we use conditional operators (exactly the same as in C) that are available in GLSL.

Source code is available here.

Example 4. Mixing two images (textures)

Suppose we have two pictures: a coffee mug and coffee beans.

We want the coffee beans as the background with the coffee mug on top. To solve this we again need to work with texture coordinates. In the `ShaderEffectItem`, the two images are defined as `texture0` and `texture1`, both `ShaderEffectSource` elements. In the fragment shader code these two images are available as two textures, `uniform sampler2D texture0` and `uniform sampler2D texture1`. The variables `s1` and `s2` receive the color of the pixel at the current texture coordinates in the first and the second image, respectively, as shown in the code below:

```qml
import QtQuick 1.0
import Qt.labs.shaders 1.0

Rectangle {
    width: coffee.width
    height: coffee.height

    Image {
        id: coffee
        source: "images/coffee.jpg"
    }

    Image {
        id: granules
        source: "images/granules.jpg"
    }

    ShaderEffectItem {
        anchors.fill: parent
        id: effect

        // Both images are passed to the shader as textures.
        property variant texture0: ShaderEffectSource {
            sourceItem: coffee;
            hideSource: true
        }
        property variant texture1: ShaderEffectSource {
            sourceItem: granules;
            hideSource: true
        }

        fragmentShader:
            "
            varying highp vec2 qt_TexCoord0;
            uniform sampler2D texture0;
            uniform sampler2D texture1;
            void main(void)
            {
                vec4 s1 = texture2D( texture0, qt_TexCoord0.st );
                vec4 s2 = texture2D( texture1, qt_TexCoord0.st ) ;
                gl_FragColor = mix( vec4( s1.r, s1.g, s1.b, 1.0 ),
                                    vec4( s2.r * 0.6, s2.g * 0.6, s2.b * 0.6, 0.4 ),
                                    0.35 );
            }"
    }
}
```

The result of linearly interpolating the two vectors that hold the pixel colors is written to the output vector `gl_FragColor`, already familiar from the gradient example. In addition, each color channel of the `s2` texture (the coffee beans) is multiplied by 0.6, since we need it as a background. The result is as follows:

You can experiment a lot with the parameters of the `mix` function and the values of the vectors (for example, the fourth component, responsible for transparency, which is 1.0 and 0.4 in the example above) and get different, interestingly blended textures.

Source code is available here.

Conclusion

I think the examples above are quite simple, even trivial, but they can be useful to those who are not familiar with this topic at all and want to try something similar.

To sum up, the ability to write shader programs gives us very flexible mechanisms for working with the most important stages of OpenGL graphics processing when rendering QML elements. As already mentioned, GLSL is very similar to C, but, as the official specification states, there are differences. For example, there are no pointers (data is passed to functions by value), recursion is not allowed, and so on. Keep in mind that a poorly or incorrectly written shader program can seriously hurt performance. These plugins have been tested on Symbian^3, Maemo 5, Mac OS X, Windows 7 and Ubuntu. The platform requirements are Qt SDK 4.7.x and QtOpenGL support. The future version of QML, QML2, will support in its Scene Graph an API that combines GL/GLES shaders with QML code. See the `ShaderEffect` element in Qt 5.0. If I understand correctly, it is similar to what I described above.

Useful links

- I recommend reading the official OpenGL ES specification.
- QML ShaderEffectItem on QGraphicsView: videos showing shaders at work on a Nokia N8.
- The GLSL manual: the theory of how shaders work is described very well (their site is sometimes down, so don't be alarmed :))
- 6 interesting shader implementations
- Qt Quick 2 QML Scene Graph GLSL fragment shader tutorial