GPU path tracing, part 1

Hardware and rendering

The most popular processor architecture today is x86-64. It is classified as CISC: it has a huge instruction set, which makes each core take up a large area on the chip. This, in turn, makes it difficult to fit many cores on one chip. x86 processors are therefore not ideal for massively multi-threaded computing, which calls for many simple cores, each executing a small (RISC-like) instruction set.

Rendering, in turn, is a task that parallelizes almost perfectly across a practically unlimited number of cores.
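The reason rendering parallelizes so well is that each pixel can be computed without knowing anything about its neighbours. A minimal sketch of that idea follows. `shade_pixel` is a hypothetical stand-in for real per-pixel work, not taken from any renderer, and threads are used here only to show that the work items are independent, not to measure speed.

```python
from concurrent.futures import ThreadPoolExecutor

WIDTH, HEIGHT = 8, 4  # tiny image, for illustration only


def shade_pixel(x, y):
    """Hypothetical per-pixel work: depends only on (x, y), no shared state."""
    return (x / (WIDTH - 1), y / (HEIGHT - 1), 0.5)


def render_row(y):
    """One independent work item: a full row of pixels."""
    return [shade_pixel(x, y) for x in range(WIDTH)]


def render_serial():
    """Reference result: rows computed one after another."""
    return [render_row(y) for y in range(HEIGHT)]


def render_parallel(workers=4):
    """Same image, with rows handed out to a pool of workers."""
    # Rows may be computed in any order; map() returns them in input order.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(render_row, range(HEIGHT)))
```

Because no row depends on another, the split works for any number of workers and gives the same image as the serial loop. On a GPU the same idea is usually applied per pixel or per sample rather than per row.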

Unbiased renderers

Because hardware performance is steadily growing, technical concerns (for example, sampling of material reflections in V-Ray, the amount of bias in antialiasing, motion blur, depth of field, soft shadows) are increasingly being shifted onto the hardware. So, a few years ago, the first commercial unbiased renderer appeared: Maxwell Render.

Its main advantages were the quality of the final picture, a minimum of settings, and the absence of all kinds of “biases”. Given enough time, the picture quality approaches the "ideal". The drawback was, and still is, rendering time. Waiting for the noise to clear took a very long time, and many people gave up on it after a few attempts. Things were even worse with animation (for obvious reasons).

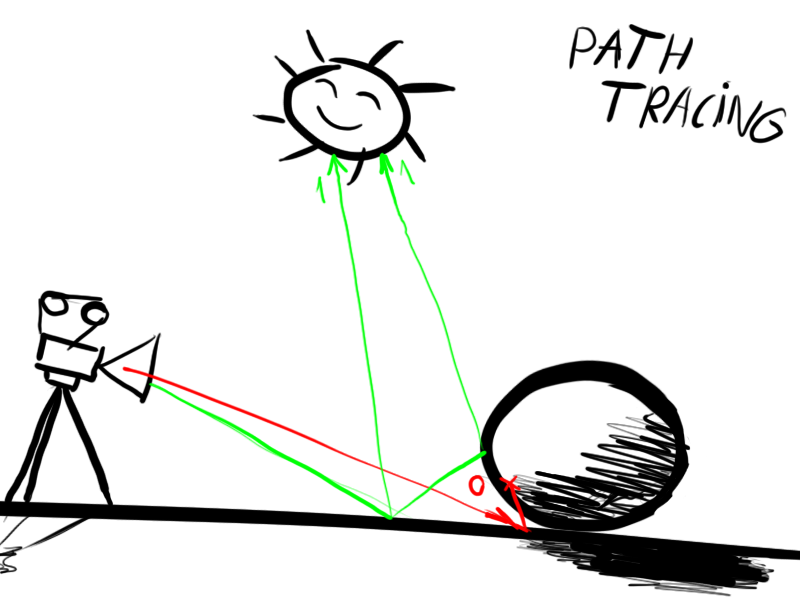

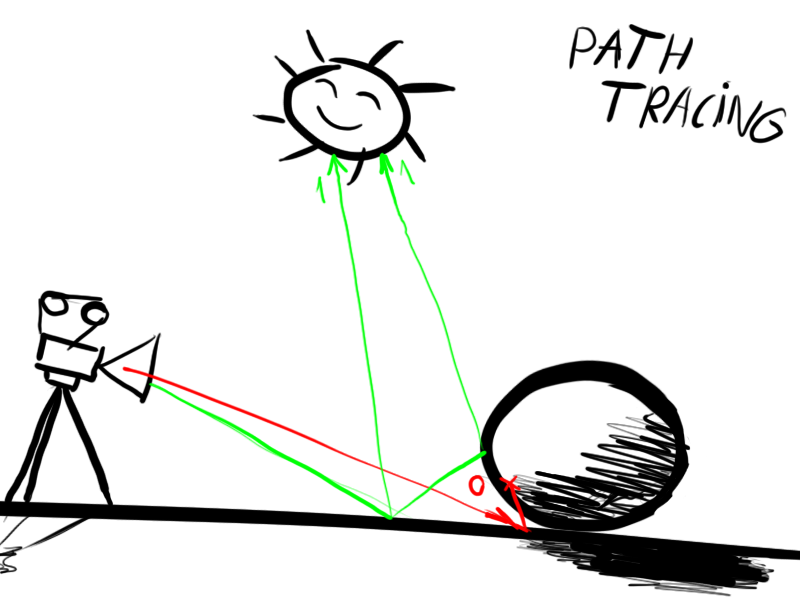

Algorithm

1. A ray is cast from the camera (its starting point corresponds to a specific pixel on the screen).

2. Check whether the ray intersects any element of the geometry. If not, go to step 1.

3. Determine the point of intersection between the ray and the geometry that is closest to the camera.

4. Cast a new ray from the intersection point towards the light source.

5. If there is an obstacle between the intersection point and the light source, go to step 7.

6. Paint the pixel with a color (simplified: the color of the surface at this point, multiplied by the intensity of the light source where the ray meets the surface).

7. Cast a new ray from the intersection point in a random direction and go to step 2. Repeat until the maximum number of reflections is reached (in most cases 4-8; if the scene contains a lot of reflections or refractions, this number must be increased).
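The seven steps above can be sketched in a few dozen lines of Python. This is an illustration under stated assumptions, not how any of the renderers mentioned here is implemented: the scene (two spheres and a point light), the pinhole camera, the cosine term and all function names are made up for the example. It also leaves out the probability weighting and distance falloff that a physically correct estimator needs.

```python
import math
import random

MAX_BOUNCES = 4  # step 7: the text suggests 4-8 in most cases
EPS = 1e-4       # small offset so a new ray does not re-hit its own surface

# Hypothetical scene: spheres as (center, radius, color) plus one point light.
SPHERES = [
    ((0.0, -100.5, -3.0), 100.0, (0.8, 0.8, 0.8)),  # huge sphere used as a floor
    ((0.0, 0.0, -3.0), 0.5, (0.8, 0.3, 0.3)),       # small red sphere
]
LIGHT_POS = (2.0, 3.0, -1.0)
LIGHT_INTENSITY = 1.0


def add(a, b):
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def scale(a, s):
    return (a[0] * s, a[1] * s, a[2] * s)


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(a):
    return scale(a, 1.0 / math.sqrt(dot(a, a)))


def hit_sphere(origin, direction, center, radius):
    """Nearest ray parameter t > EPS for a unit-length direction, or None."""
    oc = sub(origin, center)
    b = dot(oc, direction)
    disc = b * b - (dot(oc, oc) - radius * radius)
    if disc < 0.0:
        return None
    root = math.sqrt(disc)
    hits = [t for t in (-b - root, -b + root) if t > EPS]
    return min(hits) if hits else None


def closest_hit(origin, direction):
    """Steps 2-3: the intersection closest to the ray origin, or None."""
    best = None
    for center, radius, color in SPHERES:
        t = hit_sphere(origin, direction, center, radius)
        if t is not None and (best is None or t < best[0]):
            best = (t, center, color)
    return best


def random_direction(normal, rng):
    """Step 7: a uniformly random unit direction on the normal's side."""
    d = normalize((rng.gauss(0, 1), rng.gauss(0, 1), rng.gauss(0, 1)))
    return d if dot(d, normal) > 0.0 else scale(d, -1.0)


def trace(origin, direction, rng):
    """Follow one path and return the light it gathers as an (r, g, b) tuple."""
    color = (0.0, 0.0, 0.0)
    throughput = (1.0, 1.0, 1.0)  # product of surface colors seen so far
    for _ in range(MAX_BOUNCES):
        hit = closest_hit(origin, direction)
        if hit is None:
            break  # step 2: nothing was hit, the path ends
        t, center, albedo = hit
        point = add(origin, scale(direction, t))
        normal = normalize(sub(point, center))
        throughput = tuple(a * b for a, b in zip(throughput, albedo))
        to_light = sub(LIGHT_POS, point)
        dist = math.sqrt(dot(to_light, to_light))
        light_dir = scale(to_light, 1.0 / dist)
        cos_theta = dot(normal, light_dir)  # assumption: Lambert cosine term
        origin = add(point, scale(normal, EPS))
        blocker = closest_hit(origin, light_dir)  # steps 4-5: shadow ray
        if cos_theta > 0.0 and (blocker is None or blocker[0] >= dist):
            # step 6: surface color times light intensity
            color = add(color, scale(throughput, LIGHT_INTENSITY * cos_theta))
        direction = random_direction(normal, rng)  # step 7
    return color


def render_pixel(u, v, samples, seed=0):
    """Step 1: average `samples` camera rays through screen point (u, v)."""
    rng = random.Random(seed)
    total = (0.0, 0.0, 0.0)
    for _ in range(samples):
        total = add(total, trace((0.0, 0.0, 0.0), normalize((u, v, -1.5)), rng))
    return scale(total, 1.0 / samples)
```

Calling `render_pixel(0.0, 0.0, 16)` averages 16 paths through the centre of the screen, which in this made-up scene looks straight at the red sphere. Every path is independent of the others, which is what makes the algorithm a good fit for many cores.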

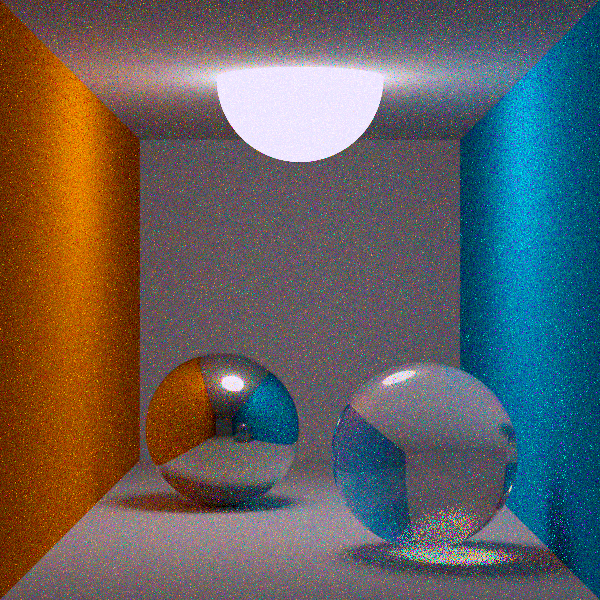

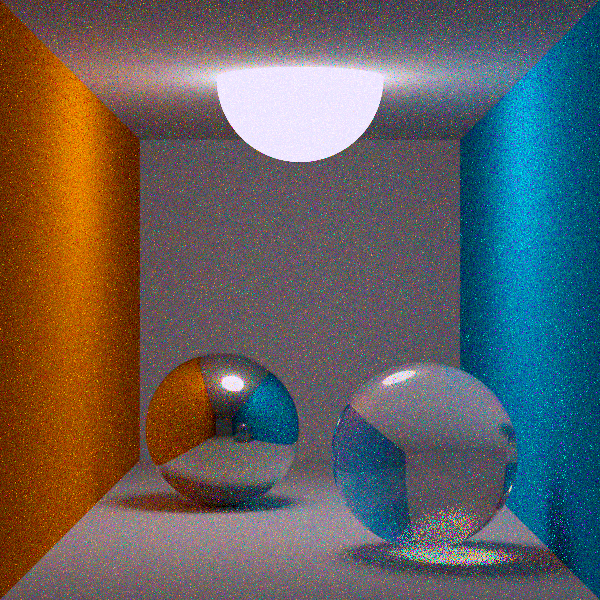

An example of a “noisy” picture.
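The noise comes from averaging a limited number of random samples per pixel. The sketch below is not a renderer. It assumes a hypothetical "pixel" whose true brightness is 0.5 and whose samples are uniform random numbers in [0, 1), and it measures how far the average lands from the true value as the sample count grows. The function names are made up for the example.

```python
import math
import random


def estimate_pixel(samples, rng):
    """Average `samples` random light contributions; the true mean is 0.5."""
    return sum(rng.random() for _ in range(samples)) / samples


def rms_error(samples, trials=200, seed=0):
    """Root-mean-square error of the estimate over many independent trials."""
    rng = random.Random(seed)
    squared = [(estimate_pixel(samples, rng) - 0.5) ** 2 for _ in range(trials)]
    return math.sqrt(sum(squared) / trials)


if __name__ == "__main__":
    for n in (10, 100, 1000):
        print(n, round(rms_error(n), 4))
```

The error of such an average shrinks roughly as 1/sqrt(N), so halving the noise takes about four times as many samples. That is why the last traces of noise take so long to disappear.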

The number of samples per pixel needed to achieve good quality can run into the thousands, for example 10 thousand (depending on the scene).

The number of rays for a Full HD image: 2 megapixels * 10 thousand = 20 billion.
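The arithmetic above can be checked directly. The sketch assumes the usual 1920x1080 meaning of Full HD and counts only one camera ray per sample. Bounce rays and shadow rays multiply the total further.

```python
WIDTH, HEIGHT = 1920, 1080   # Full HD, about 2 megapixels
SAMPLES_PER_PIXEL = 10_000   # the example figure from the text


def camera_rays(width, height, samples_per_pixel):
    """Camera rays only: one per sample, before any bounces or shadow rays."""
    return width * height * samples_per_pixel


total = camera_rays(WIDTH, HEIGHT, SAMPLES_PER_PIXEL)
print(f"{total:,} camera rays")  # 20,736,000,000 camera rays
```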

There are several optimizations of path tracing: Bi-Directional Path Tracing, Metropolis Light Transport, Energy Redistribution Path Tracing. They are designed to "send the rays where they should go". Most CPU renderers use the MLT algorithm (Maxwell, Fry, Lux) for this purpose.

GPU role

The algorithm relies heavily on floating-point operations, and multithreading is vital for it. That is why this task is gradually being taken over by the GPU.

Existing technologies: CUDA, FireStream, OpenCL and DirectCompute. It is also possible to write programs directly in shaders.

The situation is this:

CUDA - everyone who isn't too lazy writes for it (iRay, Octane Render, Arion Render, Cycles, etc.).

FireStream - nothing in sight at all.

OpenCL - SmallLuxGPU, Cycles, Indigo Render. Nobody seems to be taking it seriously.

DirectCompute - nothing in sight.

Shaders - just one example: a WebGL implementation of path tracing in shaders.

A comparison of the renderers will follow in part 2.
