Drawing water with Direct3D. Part 1. Graphics pipeline architecture and API

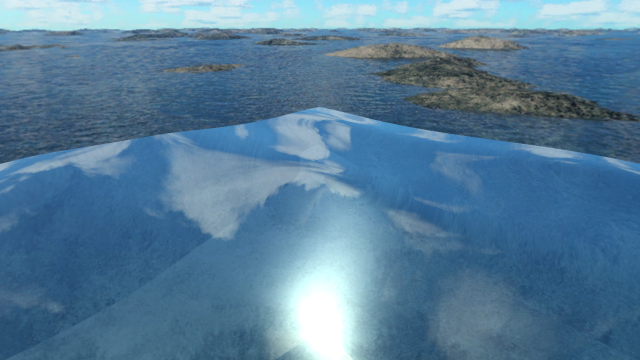

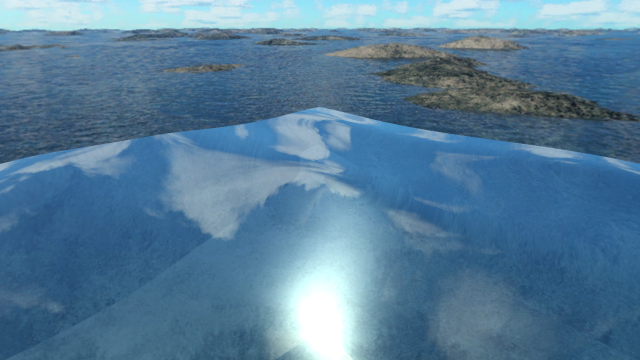

In this article, split into several parts, I will outline the architecture of modern versions of Direct3D (10 and 11) and show how to use this API to draw a coral reef scene whose main attraction is water that is simple to implement yet looks beautiful and reasonably convincing.

Let's start with the Direct3D architecture.

The Direct3D API directly mirrors the architecture of modern video cards and exposes their graphics pipeline as a sequence of stages.

In the pipeline diagram, the fully programmable stages are drawn as rounded rectangles and the configurable ones as ordinary rectangles.

I will now explain each stage in turn.

The Input Assembler (IA) reads vertex data from buffers in memory and assembles it into the primitives used by the subsequent pipeline stages. The IA also attaches some metadata generated by the D3D runtime to the vertices, such as the vertex ID.
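To make the assembly step concrete, here is a minimal CPU-side sketch of what the stage does for an indexed triangle list. It is only a model, not D3D code: the `Vertex`, `AssembledVertex`, and `Triangle` types and the `assembleTriangleList` function are hypothetical names invented for this illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical vertex format: just a position.
struct Vertex {
    float x, y, z;
};

// A vertex plus the metadata attached by the assembler.
struct AssembledVertex {
    Vertex data;
    std::uint32_t vertexId; // plays the role of the vertex ID mentioned above
};

struct Triangle {
    AssembledVertex v[3];
};

// Assembles a triangle list: every three consecutive indices form one triangle.
std::vector<Triangle> assembleTriangleList(const std::vector<Vertex>& vertexBuffer,
                                           const std::vector<std::uint32_t>& indexBuffer) {
    assert(indexBuffer.size() % 3 == 0);
    std::vector<Triangle> triangles;
    triangles.reserve(indexBuffer.size() / 3);
    for (std::size_t i = 0; i + 2 < indexBuffer.size(); i += 3) {
        Triangle t{};
        for (std::size_t k = 0; k < 3; ++k) {
            const std::uint32_t index = indexBuffer[i + k];
            assert(index < vertexBuffer.size());
            t.v[k] = AssembledVertex{vertexBuffer[index], index};
        }
        triangles.push_back(t);
    }
    return triangles;
}
```

With four vertices and the index list `0, 1, 2, 2, 1, 3`, this produces two triangles that share an edge, which is how an index buffer lets a quad be stored without duplicating vertices.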

The Vertex Shader (VS) is a mandatory, fully programmable pipeline stage. As the name suggests, it runs once for each vertex and receives its input from the Input Assembler. It is used for all kinds of vertex transformations: converting coordinates from one coordinate system to another, generating normals and texture coordinates, computing lighting, and more. The vertex shader's output goes to the hull shader, to the geometry shader, to Stream Output (if that stage is enabled and no geometry shader is bound), or straight to the rasterizer.
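The most common job of a vertex shader, moving a position from one coordinate system to another, boils down to a matrix-vector product. The sketch below models it on the CPU in C++ rather than in HLSL; `Float4`, `Matrix4`, `VsInput`, `VsOutput`, and `vertexShader` are hypothetical names, and the column-vector convention is an assumption made for this example.

```cpp
#include <array>
#include <cassert>

struct Float4 {
    float x, y, z, w;
};

// Row-major 4x4 matrix, addressed as m[row][column].
using Matrix4 = std::array<std::array<float, 4>, 4>;

// Multiplies the matrix by a column vector.
Float4 transform(const Matrix4& m, const Float4& v) {
    return Float4{
        m[0][0] * v.x + m[0][1] * v.y + m[0][2] * v.z + m[0][3] * v.w,
        m[1][0] * v.x + m[1][1] * v.y + m[1][2] * v.z + m[1][3] * v.w,
        m[2][0] * v.x + m[2][1] * v.y + m[2][2] * v.z + m[2][3] * v.w,
        m[3][0] * v.x + m[3][1] * v.y + m[3][2] * v.z + m[3][3] * v.w,
    };
}

struct VsInput {
    Float4 position; // object-space position
    float u, v;      // texture coordinates
};

struct VsOutput {
    Float4 clipPosition; // position after the combined transform
    float u, v;          // passed through unchanged
};

// Runs once per vertex: one input vertex in, exactly one output vertex out.
VsOutput vertexShader(const VsInput& in, const Matrix4& worldViewProj) {
    assert(in.position.w == 1.0f); // input positions are expected to be points
    return VsOutput{transform(worldViewProj, in.position), in.u, in.v};
}
```

Because the function sees only one vertex at a time and always returns exactly one, it cannot create or discard geometry; that restriction is what separates it from the geometry shader.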

The Hull Shader (HS), the Domain Shader (DS), and the Tessellator are stages added in Shader Model 5.0 (that is, in D3D11) and used for tessellation: splitting primitives into smaller ones to increase image detail. These stages are optional, and since my scene does not use them, I will not dwell on them. Those interested can read about them on MSDN, for example.

The Geometry Shader (GS) processes whole primitives (points, lines, or triangles) assembled from the vertices produced by the previous pipeline stages. Geometry shaders can generate new primitives on the fly; unlike vertex shaders, for example, their output does not have to correspond one-to-one to their input. They are used to generate shadow geometry (shadow volumes), sprites (particle systems and the like), reflections (for example, single-pass rendering into a cube map), and so on. They can also be used for tessellation, but this is not recommended. The geometry shader's output goes either to Stream Output or to the rasterizer.
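The sprite case is the easiest to illustrate: one input point becomes a four-vertex quad. The following is a CPU-side sketch of that expansion, not shader code; `Float2`, `SpriteVertex`, and `expandPointToQuad` are hypothetical names, and the triangle-strip vertex order is an assumption of this example.

```cpp
#include <cassert>
#include <vector>

struct Float2 {
    float x, y;
};

struct SpriteVertex {
    Float2 position;
    Float2 uv; // texture coordinates across the sprite
};

// Expands one point into a quad, emitted as a four-vertex triangle strip:
// top-left, top-right, bottom-left, bottom-right.
std::vector<SpriteVertex> expandPointToQuad(Float2 center, float halfSize) {
    assert(halfSize > 0.0f);
    const float left = center.x - halfSize;
    const float right = center.x + halfSize;
    const float bottom = center.y - halfSize;
    const float top = center.y + halfSize;
    return {
        SpriteVertex{{left, top}, {0.0f, 0.0f}},
        SpriteVertex{{right, top}, {1.0f, 0.0f}},
        SpriteVertex{{left, bottom}, {0.0f, 1.0f}},
        SpriteVertex{{right, bottom}, {1.0f, 1.0f}},
    };
}
```

One vertex goes in and four come out, which is exactly the kind of non-one-to-one output a vertex shader cannot produce.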

Stream Output (SO) is an optional stage that writes vertices processed by the pipeline back to buffers in memory, so that the SO output can be read by the CPU or fed into the pipeline again on a later pass. It receives data from the geometry shader or, if none is bound, from the vertex or domain shader.

The Rasterizer (RS) is the "heart" of the graphics pipeline. As the name implies, this stage rasterizes primitives, that is, breaks them up into pixels. Strictly speaking, "pixel" is not quite the right word: a pixel usually means what ends up in the framebuffer and is shown on the screen, so "fragment" is more accurate. The rasterizer receives vector data about the vertices from the previous pipeline stages and converts it to raster form: it clips primitives that fall outside the visible region, maps their positions to the two-dimensional viewport, and interpolates the values associated with the vertices (such as texture coordinates) across each primitive. The fragments produced by the rasterizer go to the pixel shader, if one is bound.
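The core of this stage, deciding which pixel centers a triangle covers and interpolating vertex values across it, can be sketched in a few lines. This is a deliberately simplified model and not how the hardware is specified: it assumes the vertices are already in viewport coordinates, and it leaves out clipping, perspective correction, and fill rules for shared edges. `ScreenVertex`, `Fragment`, `edge`, and `rasterize` are hypothetical names.

```cpp
#include <cassert>
#include <vector>

struct ScreenVertex {
    float x, y; // position in viewport (pixel) coordinates
    float attr; // any per-vertex value, e.g. one texture coordinate
};

struct Fragment {
    int x, y;   // pixel the fragment belongs to
    float attr; // interpolated per-vertex value
};

// Signed, doubled area of the triangle (a, b, p).
static float edge(float ax, float ay, float bx, float by, float px, float py) {
    return (bx - ax) * (py - ay) - (by - ay) * (px - ax);
}

// Emits one fragment for every pixel whose center lies inside the triangle.
std::vector<Fragment> rasterize(const ScreenVertex& a, const ScreenVertex& b,
                                const ScreenVertex& c, int width, int height) {
    assert(width > 0 && height > 0);
    std::vector<Fragment> fragments;
    const float area = edge(a.x, a.y, b.x, b.y, c.x, c.y);
    if (area == 0.0f) {
        return fragments; // degenerate triangle covers nothing
    }
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            const float px = static_cast<float>(x) + 0.5f; // pixel center
            const float py = static_cast<float>(y) + 0.5f;
            // Barycentric weights; dividing by the area makes the test
            // independent of the triangle's winding order.
            const float w0 = edge(b.x, b.y, c.x, c.y, px, py) / area;
            const float w1 = edge(c.x, c.y, a.x, a.y, px, py) / area;
            const float w2 = edge(a.x, a.y, b.x, b.y, px, py) / area;
            if (w0 < 0.0f || w1 < 0.0f || w2 < 0.0f) {
                continue; // pixel center is outside the triangle
            }
            fragments.push_back(Fragment{x, y, w0 * a.attr + w1 * b.attr + w2 * c.attr});
        }
    }
    return fragments;
}
```

Each fragment carries a blend of the three vertex values weighted by how close the pixel center is to each vertex, which is what lets a pixel shader see smoothly varying texture coordinates.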

The Pixel Shader (PS) works on the fragments received from the rasterizer. It is used to implement a huge variety of graphical effects and outputs the fragment's color and, optionally, its depth (the value used to determine which fragments are closer to the camera) to the Output Merger stage.

The Output Merger (OM) is the last stage of the graphics pipeline. For each fragment received from the pixel shader, it performs the depth test and the stencil test to decide whether the fragment should be written to the framebuffer, and blends its color with the color already there if blending is enabled.
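As a rough illustration of the merge step, the sketch below applies a "less than" depth test and ordinary alpha blending to a single fragment. It is a simplified model under stated assumptions: the stencil test is omitted, the comparison function and blend equation are fixed rather than configurable, and `Color`, `Framebuffer`, and `outputMerge` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Color {
    float r, g, b, a;
};

struct Framebuffer {
    int width, height;
    std::vector<Color> color;
    std::vector<float> depth;

    // Cleared to opaque black and the farthest depth (1.0).
    Framebuffer(int w, int h)
        : width(w), height(h),
          color(static_cast<std::size_t>(w) * h, Color{0.0f, 0.0f, 0.0f, 1.0f}),
          depth(static_cast<std::size_t>(w) * h, 1.0f) {}
};

// Returns true if the fragment passed the depth test and was written.
bool outputMerge(Framebuffer& fb, int x, int y, const Color& src, float srcDepth,
                 bool blendEnabled) {
    assert(x >= 0 && x < fb.width && y >= 0 && y < fb.height);
    const std::size_t i = static_cast<std::size_t>(y) * fb.width + x;
    if (!(srcDepth < fb.depth[i])) {
        return false; // something closer is already there: discard
    }
    fb.depth[i] = srcDepth;
    Color& dst = fb.color[i];
    if (blendEnabled) {
        // Classic alpha blending: src * a + dst * (1 - a).
        const float a = src.a;
        dst = Color{src.r * a + dst.r * (1.0f - a),
                    src.g * a + dst.g * (1.0f - a),
                    src.b * a + dst.b * (1.0f - a),
                    a + dst.a * (1.0f - a)};
    } else {
        dst = src;
    }
    return true;
}
```

A fragment that lies behind what is already stored leaves both the color and the depth untouched, while a closer, half-transparent fragment ends up as an even mix of its own color and the stored one.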

Now about the API itself.

The Direct3D API is built on a lightweight form of COM (the Microsoft Component Object Model), so lightweight that the only thing left of "full-fledged" COM is the concept of interfaces.

For those unfamiliar with the concept of a COM interface, here is a short digression.

The concept of a COM interface is close to that of interfaces in .NET (because .NET is, in a sense, a further development of COM). Conceptually, an interface is an abstract class that has only methods and is assigned a 16-byte identifier (a GUID). Physically, an interface is a table of functions: an interface pointer leads to an array of function pointers (with a C-compatible ABI, usually using the stdcall calling convention), each of which takes a pointer to the interface itself as its first argument. Behind every interface there is an object that implements it, and a single object can implement several different interfaces. Microsoft stipulates that the only way to interact with such an object is through a pointer to one of its interfaces, by calling that interface's methods.

Interfaces can inherit from one another, and most COM interfaces, including those in Direct3D, inherit from a special interface, IUnknown. It manages the lifetime of the implementing object through reference counting and lets you obtain pointers to the object's other interfaces by their GUIDs.
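The two jobs of IUnknown, reference counting and interface lookup, can be modeled in portable C++ without any Windows headers. The sketch below is only an imitation of the idea, not the real COM declarations: `IUnknownLike`, `IDeviceLike`, `IDxgiDeviceLike`, `Device`, and the `InterfaceId` enum (standing in for GUIDs) are all hypothetical, and the counter is not thread-safe.

```cpp
#include <cassert>
#include <cstdint>

// Stand-in for GUIDs: a real COM interface is identified by a 16-byte value.
enum class InterfaceId { Unknown, Device, DxgiDevice };

// Imitation of IUnknown: lifetime control plus interface lookup.
struct IUnknownLike {
    virtual bool QueryInterface(InterfaceId id, void** out) = 0;
    virtual std::uint32_t AddRef() = 0;
    virtual std::uint32_t Release() = 0;
protected:
    ~IUnknownLike() = default; // objects are destroyed only through Release()
};

struct IDeviceLike : IUnknownLike {
    virtual int FeatureLevel() const = 0;
protected:
    ~IDeviceLike() = default;
};

struct IDxgiDeviceLike : IUnknownLike {
    virtual int AdapterIndex() const = 0;
protected:
    ~IDxgiDeviceLike() = default;
};

// One object implementing two interfaces.
class Device final : public IDeviceLike, public IDxgiDeviceLike {
public:
    // The creator receives the first reference.
    static IDeviceLike* Create() { return new Device(); }

    bool QueryInterface(InterfaceId id, void** out) override {
        assert(out != nullptr);
        if (id == InterfaceId::Unknown || id == InterfaceId::Device) {
            *out = static_cast<IDeviceLike*>(this);
        } else if (id == InterfaceId::DxgiDevice) {
            *out = static_cast<IDxgiDeviceLike*>(this);
        } else {
            *out = nullptr;
            return false;
        }
        AddRef(); // every pointer handed out owns one reference
        return true;
    }

    std::uint32_t AddRef() override { return ++refs_; }

    std::uint32_t Release() override {
        const std::uint32_t remaining = --refs_;
        if (remaining == 0) {
            delete this;
        }
        return remaining;
    }

    int FeatureLevel() const override { return 10; }
    int AdapterIndex() const override { return 0; }

private:
    Device() = default;
    ~Device() = default;
    std::uint32_t refs_ = 1;
};
```

A caller that obtains a second interface through `QueryInterface` now holds two references to the same object and must call `Release` on both pointers; the object deletes itself when the last one is released.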

Although the program for this article is written in C++, COM interfaces have a C-compatible ABI, so you can work with them from any language that can call native code, either directly or through an FFI. With MSVC++ and .NET this is especially convenient, because Microsoft has integrated COM seamlessly into the C++ and .NET object systems, respectively.

The Direct3D 10 and 11 interfaces can be divided into several groups:

DXGI interfaces. DXGI is the low-level API that underlies all the newer components of the Windows graphics subsystem. For D3D, the most interesting one is IDXGISwapChain: it encapsulates the chain of buffers that the graphics pipeline draws into and binds them to a specific WinAPI window (HWND). Using this interface is not strictly required, even for rendering into a window (we could draw into a texture and then copy it to a GDI HDC), but it is used very often because it is so convenient.

Virtual adapter interfaces. They are used to create resources, to configure the graphics pipeline, and to run it. In D3D10 a single interface, ID3D10Device (or ID3D10Device1 in D3D10.1), was responsible for all of this. In D3D11 it was split in two: ID3D11Device (resource creation) and ID3D11DeviceContext (the other two tasks). A note on naming: in D3D and DXGI interface names, the "I" prefix marks an interface type, a prefix such as "DXGI" or "D3D11" identifies the specific API, and a numeric suffix, if present, indicates a minor version of the API.

Objects that implement these interfaces also implement some DXGI interfaces. For example, we can request the IDXGIDevice interface from an ID3D11Device by calling its QueryInterface method, which ID3D11Device inherits from IUnknown.

It is worth mentioning that using the newest API does not require the hardware our program runs on to fully support that API. In D3D10.1 Microsoft introduced the concept of a "feature level", which lets programs written against newer versions of the API run even on D3D9-class hardware (provided, of course, that they do not use features missing from the feature level they target). For my scene I will use D3D11, but create the virtual adapter at feature level D3D_FEATURE_LEVEL_10_0 and, accordingly, use Shader Model 4.0 shaders.
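The negotiation behind feature levels can be pictured as walking a list of acceptable levels in order of preference and taking the first one the hardware supports. The sketch below models only that selection logic and is not the D3D API: the `FeatureLevel` enum and `chooseFeatureLevel` are hypothetical, and the example assumes that hardware supporting a given level also supports every lower one.

```cpp
#include <cassert>
#include <vector>

// Hypothetical stand-in for the feature level constants; higher value = more features.
enum class FeatureLevel : unsigned {
    Level9_1 = 0x9100,
    Level9_3 = 0x9300,
    Level10_0 = 0xa000,
    Level10_1 = 0xa100,
    Level11_0 = 0xb000
};

// Picks the first requested level that the hardware can provide.
// `requested` is ordered by preference; returns false if none is supported.
bool chooseFeatureLevel(const std::vector<FeatureLevel>& requested,
                        FeatureLevel hardwareMax, FeatureLevel* chosen) {
    assert(chosen != nullptr);
    for (FeatureLevel level : requested) {
        if (level <= hardwareMax) {
            *chosen = level;
            return true;
        }
    }
    return false;
}
```

Requesting only the 10.0 level, as this article's scene does, succeeds on both 10.x and 11.0 hardware, and the program then has to limit itself to what that level guarantees.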

Helper interfaces. Interfaces such as ID3D11Debug, ID3D11InfoQueue, ID3D11Counter, and ID3D11ShaderReflection are used to obtain additional information about the state of the pipeline and about shaders, to measure performance, and so on.

Resource interfaces. In D3D, a resource is either a texture (for example, ID3D11Texture2D, a two-dimensional texture) or a plain buffer (containing, for example, vertex data). Resource objects also implement various DXGI interfaces, such as IDXGIResource, which is the key to interoperability between different graphics subsystems (such as Direct2D and GDI) and between different versions of the same subsystem (D3D9, 10, and 11) in newer versions of Windows.

View interfaces. Each resource can be used for several different purposes, possibly even at the same time. In newer versions of D3D we do not hand texture and buffer interfaces to the pipeline directly (vertex, index, and constant buffers are the exception); instead, we create an object that implements one of the view interfaces and bind that object to the pipeline.

D3D11 provides the following view interfaces:

- ID3D11RenderTargetView - a view of a render target. As the name implies (type names in D3D and DXGI are generally very descriptive, if long), the graphics pipeline draws into the resource associated with this view.

- ID3D11ShaderResourceView - a view for resources read by shaders (for example, a texture from which a pixel shader samples a texel to compute the fragment color).

- ID3D11DepthStencilView - a view for resources used as a depth and stencil buffer.

- ID3D11UnorderedAccessView - a view for resources used by compute shaders (Compute Shader, CS; since they have almost nothing to do with the rendering process, I will not describe them).
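The idea that one resource can be reached through several views, each granting a different kind of access, can be sketched with ordinary C++ objects. This is a conceptual model only: `Texture2D`, `RenderTargetView`, and `ShaderResourceView` here are hypothetical classes that merely borrow the D3D vocabulary, and shared ownership through `std::shared_ptr` stands in for COM reference counting.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <utility>
#include <vector>

// The resource itself: plain storage with no notion of how it will be used.
struct Texture2D {
    int width, height;
    std::vector<float> texels;

    Texture2D(int w, int h)
        : width(w), height(h), texels(static_cast<std::size_t>(w) * h, 0.0f) {}

    std::size_t indexOf(int x, int y) const {
        assert(x >= 0 && x < width && y >= 0 && y < height);
        return static_cast<std::size_t>(y) * width + x;
    }
};

// Write-only access, as a pipeline drawing into the texture would need.
class RenderTargetView {
public:
    explicit RenderTargetView(std::shared_ptr<Texture2D> texture)
        : texture_(std::move(texture)) {}
    void write(int x, int y, float value) {
        texture_->texels[texture_->indexOf(x, y)] = value;
    }
private:
    std::shared_ptr<Texture2D> texture_;
};

// Read-only access, as a shader sampling the texture would need.
class ShaderResourceView {
public:
    explicit ShaderResourceView(std::shared_ptr<Texture2D> texture)
        : texture_(std::move(texture)) {}
    float read(int x, int y) const {
        return texture_->texels[texture_->indexOf(x, y)];
    }
private:
    std::shared_ptr<Texture2D> texture_;
};
```

A typical use of this pattern is to render into a texture through one view in one pass and read the result through another view in a later pass, without ever copying the texture.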

Shader interfaces, for example ID3D11VertexShader. They encapsulate the shader code for a specific programmable stage of the graphics pipeline and are used to bind a shader to that stage.

State interfaces. They are used to configure the non-programmable pipeline stages. Examples are ID3D11RasterizerState (configures the rasterizer), ID3D11InputLayout (describes the layout of the vertex data that the Input Assembler reads from vertex buffers), and ID3D11BlendState (configures color blending in the Output Merger).

The program that draws the scene mentioned at the beginning will be described in the following parts of the article: the second part covers in detail the creation and initialization of the virtual adapter and of the resources needed to render the scene, and the third part is devoted to the shaders used in the program.

The full code is available on GitHub: github.com/Lovesan/Reef