The book "Deep Learning with Python"

Deep learning is a set of machine learning algorithms that model high-level abstractions in data using architectures made up of many non-linear transformations. Admittedly, that sounds intimidating. But it is far less daunting when the person explaining deep learning is François Chollet, the creator of Keras, one of the most powerful libraries for working with neural networks. Get to know deep learning through practical examples from a wide variety of fields. The book is divided into two parts: the first covers the theoretical foundations, and the second is devoted to solving specific problems. This lets you not only understand the fundamentals of DL but also learn how to apply them in practice.

Learning is a lifelong journey, especially in the field of artificial intelligence, where the uncertainties far outnumber the certainties. Below is an excerpt on inspecting and monitoring deep learning models using Keras callbacks and TensorBoard.

About this book

The book is written for anyone who wants to start learning deep learning from scratch or to expand their existing knowledge. Machine learning engineers, software developers and students will all find a lot of value in these pages.

This book offers a hands-on exploration of deep learning. We tried to avoid mathematical formulas, preferring to explain quantitative concepts with code snippets and to build a practical understanding of the core ideas of machine learning and deep learning.

You will find more than 30 code examples with detailed comments, practical recommendations and simple high-level explanations of everything you need to know to use deep learning to solve specific problems.

The examples use the Keras deep learning framework, written in Python, with the TensorFlow library as its backend. Keras is one of the most popular and fastest-growing deep learning frameworks. It is often recommended as the best tool for beginners who are learning deep learning.

After reading this book, you will clearly understand what deep learning is, when it is applicable and what its limitations are. You will become familiar with the standard workflow for approaching and solving machine learning problems and learn how to deal with commonly encountered issues. You will learn how to use Keras to solve practical problems ranging from computer vision to natural language processing: image classification, time series forecasting, sentiment analysis, image and text generation, and more.

Inspecting and monitoring deep learning models using Keras callbacks and TensorBoard

In this section, we will look at ways to gain greater access to, and control over, what goes on inside the model during training. Launching a training run on a large dataset for dozens of epochs with a call to model.fit() or model.fit_generator() is a bit like launching a paper airplane: after the initial push, you no longer control its trajectory or where it lands. To avoid bad outcomes (and a lost paper airplane), it is better to use not a paper airplane but a drone that senses its environment, sends data back to its operator and automatically makes steering decisions based on its current state. The techniques presented here will transform the call to model.fit() from a paper airplane into a smart, autonomous drone.

Using callbacks to influence the model during training

Many aspects of training a model cannot be predicted in advance. In particular, it is impossible to tell in advance how many epochs are needed to reach the optimal loss on the validation set. The examples so far have used the strategy of training for enough epochs that the model begins to overfit: a first run is used to find out the required number of epochs, and then a second run is launched from scratch with that optimal number. Of course, this is a rather wasteful strategy.

It would be much better to stop training as soon as it becomes clear that the validation loss is no longer improving. This can be done with the Keras callback mechanism. A callback is an object (an instance of a class that implements specific methods) that is passed to the model in the call to fit and that the model calls at various points during training. It has access to all available information about the state of the model and its performance, and it can take action: interrupt training, save the model, load a different set of weights, or otherwise alter the state of the model.
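The mechanism described above can be illustrated with a framework-free sketch. `ToyModel` and `StopBelow` are hypothetical stand-ins written for this illustration, not Keras classes; the sketch only assumes the general pattern in which the training loop calls `on_epoch_end(epoch, logs)` on each callback and checks a `stop_training` flag on the model, which is the attribute Keras callbacks set to interrupt training.

```python
class ToyModel:
    """Hypothetical stand-in for a model; it only replays canned validation losses."""

    def __init__(self, val_losses):
        self.val_losses = val_losses
        self.stop_training = False  # callbacks flip this flag to end training

    def fit(self, epochs, callbacks=()):
        history = []
        for callback in callbacks:
            callback.model = self  # give each callback access to the model
        for epoch in range(epochs):
            logs = {"val_loss": self.val_losses[epoch]}
            history.append(logs["val_loss"])
            # Hand control to every callback at the end of the epoch.
            for callback in callbacks:
                callback.on_epoch_end(epoch, logs)
            if self.stop_training:
                break
        return history


class StopBelow:
    """Toy callback: interrupt training once val_loss drops below a threshold."""

    def __init__(self, threshold):
        self.threshold = threshold
        self.model = None

    def on_epoch_end(self, epoch, logs):
        if logs["val_loss"] < self.threshold:
            self.model.stop_training = True


model = ToyModel(val_losses=[0.9, 0.6, 0.4, 0.3, 0.2])
history = model.fit(epochs=5, callbacks=[StopBelow(threshold=0.5)])
print(history)  # [0.9, 0.6, 0.4]
```

Training was requested for five epochs, but the callback ended it after the third, without any change to the training loop itself.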

Here are some examples of using callbacks:

- Model checkpointing: saving the current weights of the model at different points during training.

- Early stopping: interrupting training when the validation loss stops improving (and, of course, keeping the best version of the model obtained during training).

- Dynamically adjusting the value of certain parameters during training, such as the optimizer's learning rate.

- Logging training and validation metrics during training, or visualizing the representations learned by the model as they are updated. The Keras progress bar, with which you are already familiar, is a callback!

The keras.callbacks module includes a number of built-in callbacks. This is not a complete list:

- keras.callbacks.ModelCheckpoint

- keras.callbacks.EarlyStopping

- keras.callbacks.LearningRateScheduler

- keras.callbacks.ReduceLROnPlateau

- keras.callbacks.CSVLogger

Let's look at a few of them to get an idea of how they are used: ModelCheckpoint, EarlyStopping and ReduceLROnPlateau.

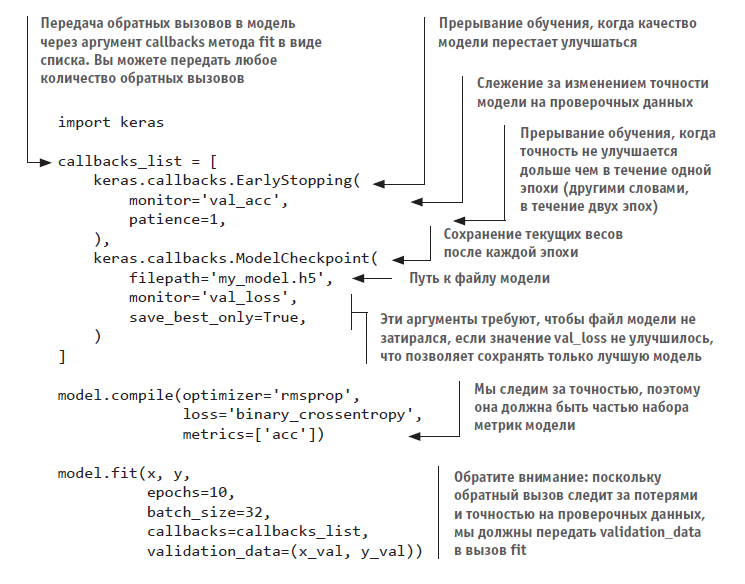

The ModelCheckpoint and EarlyStopping callbacks

You can use the EarlyStopping callback to interrupt training once a monitored target metric has stopped improving for a given number of epochs. For example, this callback lets you interrupt training as soon as overfitting sets in, so you do not have to retrain the model for a smaller number of epochs. It is usually used together with ModelCheckpoint, which lets you save the state of the model during training (and, optionally, keep only the best model so far: the version of the model that achieved the best performance at the end of an epoch):

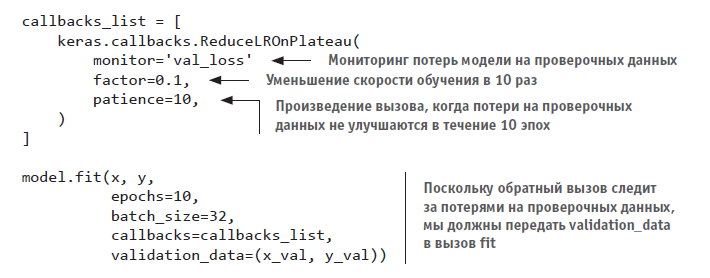
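The logic behind this combination can be sketched without any framework. The function below is a hypothetical illustration, not Keras code: its `patience` argument mirrors the `patience` parameter of `EarlyStopping`, and remembering only the best epoch mirrors `ModelCheckpoint` with `save_best_only=True`. How the patience counter is applied here is an assumption of this sketch; the exact counting can differ between Keras versions.

```python
def train_with_early_stopping(val_losses, patience):
    """Replay per-epoch validation losses and report where training would stop.

    Returns (last_epoch_run, best_epoch, best_loss); epochs are 0-indexed.
    """
    best_loss = float("inf")
    best_epoch = None
    epochs_without_improvement = 0
    last_epoch = None

    for epoch, loss in enumerate(val_losses):
        last_epoch = epoch
        if loss < best_loss:
            # Checkpoint step: remember only the best state seen so far.
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # early stop: no improvement for `patience` epochs
    return last_epoch, best_epoch, best_loss


losses = [0.80, 0.55, 0.43, 0.45, 0.47, 0.41]
print(train_with_early_stopping(losses, patience=2))  # (4, 2, 0.43)
```

With a patience of 2, training stops at epoch 4 and the retained checkpoint is the one from epoch 2. With a patience of 3, the same run would continue and reach the better loss at epoch 5, which shows the trade-off this parameter controls.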

The ReduceLROnPlateau callback

This callback can be used to reduce the learning rate when the validation loss stops decreasing. Reducing or increasing the learning rate when the loss curve reaches a plateau is an effective strategy for getting out of local minima during training. The following example demonstrates the use of the ReduceLROnPlateau callback:

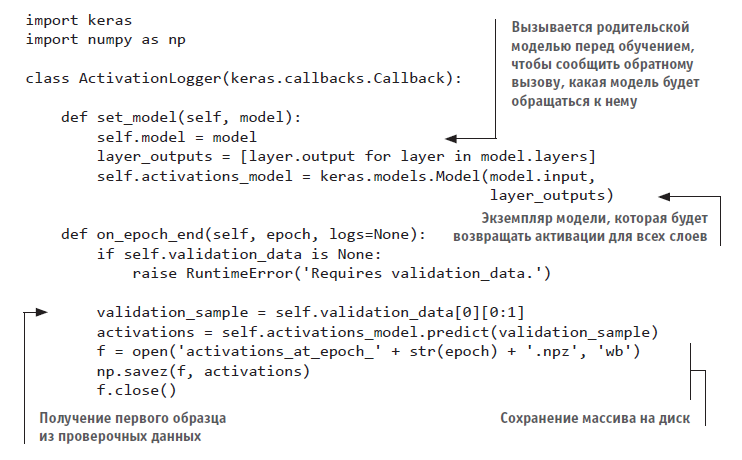
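As a rough, framework-free illustration of the idea, the sketch below replays a list of validation losses and multiplies the learning rate by a factor whenever the loss has not improved for a given number of epochs. The function and its bookkeeping are hypothetical; only the parameter names `factor`, `patience` and `min_lr` are borrowed from `ReduceLROnPlateau`, and the real callback has more options than are modelled here.

```python
def reduce_lr_on_plateau(val_losses, initial_lr, factor, patience, min_lr=0.0):
    """Return the learning rate in effect after each epoch."""
    lr = initial_lr
    best_loss = float("inf")
    wait = 0  # epochs since the last improvement
    schedule = []
    for loss in val_losses:
        if loss < best_loss:
            best_loss = loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                # Plateau detected: shrink the learning rate, but not below min_lr.
                lr = max(lr * factor, min_lr)
                wait = 0  # start counting again at the new rate
        schedule.append(lr)
    return schedule


losses = [1.0, 0.8, 0.8, 0.8, 0.7, 0.7, 0.7]
schedule = reduce_lr_on_plateau(losses, initial_lr=0.1, factor=0.5, patience=2)
print(schedule)
```

In this run the rate is halved twice: once after the loss stalls at 0.8 for two epochs, and again after it stalls at 0.7.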

Writing your own callback

If you need to take a specific action during training that is not covered by any of the built-in callbacks, you can write your own callback. Callbacks are implemented by subclassing the keras.callbacks.Callback class. You can then implement any of the following methods, whose names are self-explanatory and which are called at the corresponding points during training: on_epoch_begin, on_epoch_end, on_batch_begin, on_batch_end, on_train_begin and on_train_end.

All of these methods are called with a logs argument: a dictionary containing information about the previous batch, epoch or training run, such as training and validation metrics. In addition, the callback has access to the following attributes:

- self.model is the instance of the model that invoked this callback;

- self.validation_data is the value that was passed to the fit method as validation data.
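To show how the hooks and the logs dictionary fit together, here is a framework-free sketch. The `Callback` base class and the `toy_fit` driver are hypothetical stand-ins written for this illustration; they only mimic the hook names of keras.callbacks.Callback and the order in which a training loop would call them. With Keras itself, you would subclass keras.callbacks.Callback and pass the instance to fit.

```python
class Callback:
    """Hypothetical stand-in that mimics the hook names of keras.callbacks.Callback."""

    def on_train_begin(self, logs=None):
        pass

    def on_train_end(self, logs=None):
        pass

    def on_epoch_begin(self, epoch, logs=None):
        pass

    def on_epoch_end(self, epoch, logs=None):
        pass

    def on_batch_begin(self, batch, logs=None):
        pass

    def on_batch_end(self, batch, logs=None):
        pass


class EpochLossRecorder(Callback):
    """Custom callback: average the batch losses and keep one value per epoch."""

    def on_train_begin(self, logs=None):
        self.epoch_losses = []

    def on_epoch_begin(self, epoch, logs=None):
        self.batch_losses = []

    def on_batch_end(self, batch, logs=None):
        self.batch_losses.append(logs["loss"])

    def on_epoch_end(self, epoch, logs=None):
        self.epoch_losses.append(sum(self.batch_losses) / len(self.batch_losses))


def toy_fit(batch_losses_per_epoch, callback):
    """Hypothetical driver that calls the hooks in training-loop order."""
    callback.on_train_begin()
    for epoch, batch_losses in enumerate(batch_losses_per_epoch):
        callback.on_epoch_begin(epoch)
        for batch, loss in enumerate(batch_losses):
            callback.on_batch_begin(batch)
            callback.on_batch_end(batch, {"loss": loss})
        callback.on_epoch_end(epoch)
    callback.on_train_end()


recorder = EpochLossRecorder()
toy_fit([[1.0, 0.5], [0.5, 0.25]], recorder)
print(recorder.epoch_losses)  # [0.75, 0.375]
```

Only the hooks that matter for the task are overridden; the rest fall back to the no-op defaults of the base class.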

A simple example of a custom callback is one that, at the end of each epoch, saves to disk (as NumPy arrays) the activations of every layer of the model, computed on the first sample of the validation set.

This is all you need to know about callbacks; the rest is technical detail that you can easily look up yourself. You can now log any information or control a Keras model during training.

About the author

François Chollet works on deep learning at Google in Mountain View, California. He is the creator of Keras, a deep learning library, and a contributor to the TensorFlow machine learning framework. He also does machine learning research, focusing on computer vision and the application of machine learning to formal reasoning. He has presented at major conferences in the field, including the Conference on Computer Vision and Pattern Recognition (CVPR), the Conference and Workshop on Neural Information Processing Systems (NIPS), and the International Conference on Learning Representations (ICLR).

» More information about the book can be found on the publisher's website

» Table of contents

» Fragment

For Habr readers: a 20% discount with the coupon Deep Learning with Python