KinectFusion - building a 3D scene in real time

At SIGGRAPH, Microsoft Research demonstrated a very interesting development: KinectFusion. The software reconstructs a 3D scene from Kinect data in _real_ time, and also performs segmentation and tracking of objects.

The technology is impressive. I think games into which you can bring objects and environments from the real world are now becoming feasible. It also works the other way around: 3D printing technology is booming right now, and it may well become widely available soon. With scanning and printing both affordable, we get the ability to transfer real objects electronically. But that is, of course, just one use case.

Below the cut is a short breakdown of the video:

- Building a 3D model (triangle mesh)

- Texturing the model

- Augmented reality - throwing balls into the scene

- Augmented reality - throwing balls into a changing scene

- Segmentation - an object removed from the scene is kept

- Tracking - following a selected object

- Segmentation and tracking - drawing on objects with your fingers

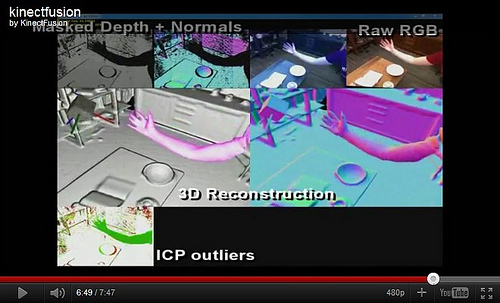

Building a 3D model (triangle mesh)

The video notes that the Kinect can be moved through space quite casually; jitter and sudden movements are not a problem. In real time, the current 3D point cloud is aligned with the existing scene, and the scene is updated and filled in. Only depth data is needed at this stage. The triangulation is somewhat blurry because the grid of rays is only 640x480, so you have to move closer to pick up fine detail. I think the resolution will be increased in the future, and then the device will become much more serious. Another option would be to adjust the optics so that nearby objects can be scanned in detail; right now the minimum scanning distance is fairly large, 1.2 m.
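To make the link between the 640x480 ray grid and the level of detail concrete, here is a minimal sketch of how a depth pixel can be turned into a 3D point with a pinhole camera model. The intrinsics `FX`, `FY`, `CX`, `CY` and all function names are illustrative assumptions, not values or code from KinectFusion.

```python
# Assumed intrinsics for a 640x480 depth image (illustrative, not from the video).
FX = FY = 585.0          # focal length in pixels (assumption)
CX, CY = 320.0, 240.0    # principal point at the image centre (assumption)


def backproject(u, v, depth_m):
    """Turn pixel (u, v) with depth in metres into a 3D point in camera space."""
    x = (u - CX) * depth_m / FX
    y = (v - CY) * depth_m / FY
    return (x, y, depth_m)


def sample_spacing(depth_m):
    """Approximate gap between neighbouring samples on a surface facing the camera."""
    return depth_m / FX


def depth_to_points(depth, invalid=0.0):
    """Convert a depth image (list of rows, metres) into a list of 3D points."""
    points = []
    for v, row in enumerate(depth):
        for u, d in enumerate(row):
            if d != invalid:  # skip pixels with no depth reading
                points.append(backproject(u, v, d))
    return points
```

Under these assumed intrinsics, neighbouring samples are roughly 2 mm apart at 1.2 m and roughly 5 mm apart at 3 m, which is one way to see why moving closer sharpens the mesh.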

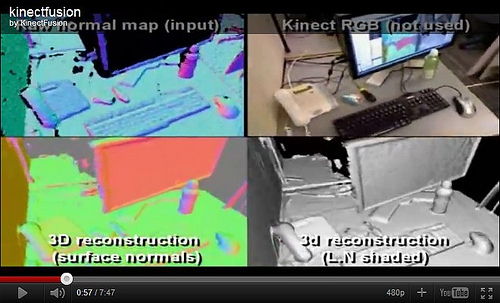

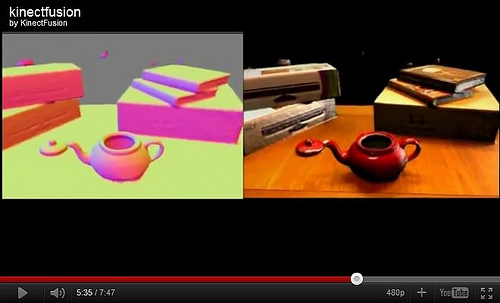

Texturing the model

The Kinect also has a regular color camera; the color is taken from it and used to build the textures. On the right of the picture is a textured model with a light source flying around it.

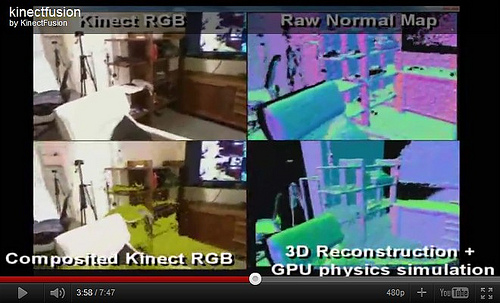

Augmented reality - throwing balls into the scene

We throw a bunch of balls into the scene. On the GPU, in real time, they scatter across the triangulated 3D scene, and the same balls are drawn over the regular Kinect video with the parts hidden behind real objects clipped.
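The clipping of hidden parts can be thought of as a per-pixel depth test against the reconstructed scene. The sketch below is a CPU-side illustration under that assumption; the function name, the data layout, and the rule that a depth of 0 means "no surface" are all hypothetical and not taken from the demo, which runs on the GPU.

```python
def composite_ball(color, scene_depth, ball_pixels, ball_color):
    """Draw ball pixels onto a copy of the colour frame, skipping hidden ones.

    color:       list of rows of pixel values (the regular video frame)
    scene_depth: list of rows of depth in metres, rendered from the 3D model
    ball_pixels: iterable of (u, v, depth_m) covered by the virtual balls
    """
    out = [row[:] for row in color]  # leave the input frame untouched
    for u, v, ball_depth in ball_pixels:
        if not (0 <= v < len(out) and 0 <= u < len(out[v])):
            continue  # ball pixel falls outside the frame
        surface = scene_depth[v][u]
        # Assumption: depth <= 0 means no surface was reconstructed here.
        if surface <= 0.0 or ball_depth < surface:
            out[v][u] = ball_color  # ball is in front of the real surface
    return out
```

A ball pixel at 1.5 m is drawn over a wall at 2 m but skipped where a real object sits at 1 m, so the ball appears to pass behind that object.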

Augmented reality - throwing balls into a changing scene

Again we throw balls into the scene; the person in the frame shakes out a towel, and the balls interact with the changing 3D scene.

Segmentation - an object removed from the scene is kept

A kettle stands on the table and the scene is static. We pick up the kettle and take it out of the scene, and it is recognized as a separate object.
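One simple way to picture this kind of segmentation is to compare the depth predicted by the stored model with the live depth frame: where the live surface is suddenly farther away, something that used to be there has been removed. This is only an illustrative sketch under that assumption, not necessarily how KinectFusion does it; the function name and the 3 cm threshold are hypothetical.

```python
def removed_object_mask(model_depth, live_depth, threshold_m=0.03):
    """Mark pixels where the live surface is farther away than the stored model.

    Both inputs are lists of rows of depth in metres; 0 means no reading.
    """
    mask = []
    for model_row, live_row in zip(model_depth, live_depth):
        mask_row = []
        for model_d, live_d in zip(model_row, live_row):
            valid = model_d > 0.0 and live_d > 0.0
            # True where the old surface (e.g. the kettle) is gone and the
            # camera now sees whatever was behind it.
            mask_row.append(valid and (live_d - model_d) > threshold_m)
        mask.append(mask_row)
    return mask
```

The model geometry under the marked pixels is what would be kept as the separate object.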

Tracking - following a selected object

Next we put the kettle back in its place, and the program understands that it has returned. Then we move it, and the software tracks its motion by matching it against the stored model.

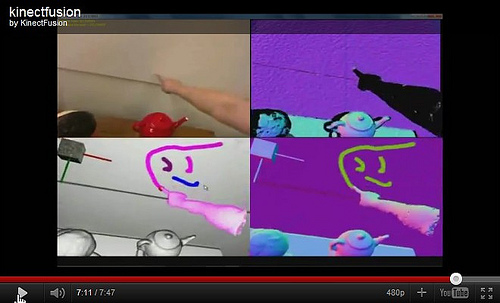

Segmentation and tracking - drawing on objects with your fingers

The hand is segmented from the static background scene and tracked, and multi-touch contact with the surfaces of objects is detected.
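Touch detection of this kind can be sketched as a distance check between segmented hand pixels and the static surface behind them. The code below is a rough illustration under that assumption; the function name, the data layout, and the 1 cm touch band are hypothetical and not taken from the demo.

```python
def touching_pixels(hand_pixels, scene_depth, touch_band_m=0.01):
    """Return hand pixels (u, v) lying within touch_band_m in front of the surface.

    hand_pixels: iterable of (u, v, depth_m) already segmented as "hand"
    scene_depth: list of rows of depth in metres for the static scene
    """
    touches = []
    for u, v, hand_depth in hand_pixels:
        surface = scene_depth[v][u]
        if surface <= 0.0:
            continue  # no reconstructed surface behind this pixel
        gap = surface - hand_depth
        if 0.0 <= gap <= touch_band_m:
            touches.append((u, v))
    return touches
```

Because every hand pixel is tested independently, several fingers touching at once simply produce several groups of touching pixels, which is the multi-touch case.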