Versioned migration of the database structure: why it is better not to do this

Background

A lot of various articles on the migration of the database structure appear in the network, which offer many options for a painless solution to this problem (it is worth considering that the need for this kind of migration is itself a serious problem). It is curious, but to eliminate the need for such a solution is offered much less frequently. The author is sure that instead of finding a solution to a complex problem, it is better to try to prevent it. You need to do this as soon as possible. Below you will find a story about a project that demonstrates the negative side of migration of the database structure.

Articles worth paying attention to:

- Versioned migration of the database structure: from theory to practice

- Versioned Migration of Database Structures: Key Approaches

Why the author can be trusted

It is impossible to trust anyone, but the author sincerely hopes that he will be treated with due skepticism, continuing to be guided by common sense and logic. Nevertheless, the author will be glad if instead of solving often far-fetched problems, you will spend time on something more pleasant. The ideas, assessments, and assumptions described in this article are the result of many years of software development experience. Among other things, the author has led the support of existing and development of several new products for more than five years, for which the described problem is more than relevant. After several years, finally an opportunity to share information. Below you can find real examples of serious problems, ways to solve them and prevent them. The project that will be discussed is rather small, but, nevertheless,

Summary

I will try to summarize all the comments, assumptions and conclusions to facilitate the discussion that follows:

- The modern development process is focused on short iterations, frequent builds and continuous integration

- Incremental database structure changes are very expensive due to the obvious limitations of the DBMS

- In the early stages of development, DBMS limitations are not always obvious / interesting (shown below)

- A successful product is faced with most problems, reaching significant sizes, which is when the cost of fixing many problems is huge

- The choice of data storage and processing determines the application architecture

- Making significant architectural changes in the later stages is a very expensive pleasure.

- It is in the late stages of development that they try to speed up the process due to the obvious advantages in terms of business and profitability

- Changes to the database structure often entail additional processing / conversion of the stored data.

- In the later stages, any changes to the database structure, due to the many limitations of the DBMS, are problematic.

- For many projects, versioned migration of the database structure in the early stages will seem an obvious solution, subsequently becoming a serious limitation. More or less universal recipe - it is necessary to avoid frequent changes in the structure of the database.

Another example

Features of modern development

The modern style of development imposes a lot of limitations and requirements that have to be reckoned with. For example, you may be asked to build assemblies ready for testing, with a period of one week to several hours. It should be understood that the team responsible for testing or another development team that independently implements any functionality may already have the previous product build installed (the database has already been created and contains test data that no one wants to lose), respectively, it’s worth respect people's time and maintain a transparent upgrade between builds. With intensive development, database structure changes can occur with each new assembly.

The final release may include many changes to the database structure (often mutually exclusive), providing an amazingly slow upgrade on the client side, which, in turn, can lead to dissatisfaction on its part, because regardless of the real purpose of the system, the time of forced inactivity is very critical factor. In other words, the development process may not be ideal, it may require additional time to solve various problems with migration, but for the client everything should go as quickly as possible, without any problems and outside interference.

Short:

- intensive development - frequent assemblies and frequent changes to the database structure

- developers do not work in isolation - remember testers and dependent developers

- problems can occur at any stage of migration

- very often migration problems are difficult to identify immediately

- “Broken base” always leads to time costs

- a client who waits for several hours while the database is "updated", upset or even angry

- customer failure means several orders of magnitude more problems and the time required to solve them

Example One, a 14-year-old system

Short description

The main problem to be solved is managing an average number of several types of network devices. The size of real installations ranged from 1 to 60 devices. From the system, the devices receive configuration and control commands, sending confirmations, various statistics and "telemetry" in response. The system also implements a user interface for editing a variety of device configurations. It is worth noting the complexity of the configuration (and user interface), the need to store a history, support a huge number of options, specific user data types, etc. The configuration could include from ten to several hundred different parameters. To store all the data, a database was used (mainly MySQL).

Simplified model

In essence, the task of the system is to store several hundred parameters for devices of each type, provide the user with a convenient interface for editing them and form the resulting configuration that is sent to the device.

Timeline: The Beginning

As with any other product, the development of this system began with a single device, several parameters and a very simple database structure. According to the first idea, a separate column was used for each parameter in the table that stores the configuration. Such a solution, being straightforward and far from optimal, did not last long: the growing client base required the addition of more and more functional, in connection with which the number of parameters for each device grew, new types of devices were added, a story appeared with the ability to roll back to one of the previous ones versions, etc.

The growing customer base demanded that the company more and more active development style, the implementation of new customer requests and the transition to a more stringent release schedule. The need to accelerate development led to the creation of several, almost independent, teams: someone focused on developing devices, someone continued to develop a control system, several more teams were responsible for testing the entire “zoo” of solutions, which, suddenly, began to bring significant profit.

Problems at this stage

Since users interacted with devices through the control system, the tasks of developing and improving the latter have become very important. The implementation of the new functionality was reduced to adding, deleting or replacing one or more parameters (columns) in the database and the corresponding edits of the user interface and the generated configuration. But, as it turned out later, most of the time developers spent on solving a variety of problems associated with changes in the database structure. No one wanted to part with their test data, no one wanted to take additional steps to bring the system to working condition after the next changes.

The company's clients had no less problems: few wanted to lose the ability to manage devices for a long period of time and clearly no one wanted to be without a control system at all. But since the size of the database ranged from a few tens of megabytes to a gigabyte, there were also dissatisfied customers spending several hours waiting for the migration to complete (often tens or even hundreds of changes were included in the release) and even frankly evil customers who received one or another error during the installation process the new version of the system and waiting for the support service to pay attention to them.

Technical details

Modifying existing tables using ALTER TABLE will most likely not be a cheap operation. The author omits the ideal options in advance, which come down to changing the table metadata, inviting the reader to think about the indexes that may exist, the need to convert the data, the physical placement of the data on the disk, and the difference between the idealized table view and the actual placement of the data and the corresponding performance problems.

By the way, you often come across a call not to use ALTER TABLE, preferring copy-rename to a procedure (for example: Don't Alter Table. Do Copy and Rename )

To convert data of not the most trivial complexity, in any case, you will have to use additional logic implemented in any programming language.

Decision

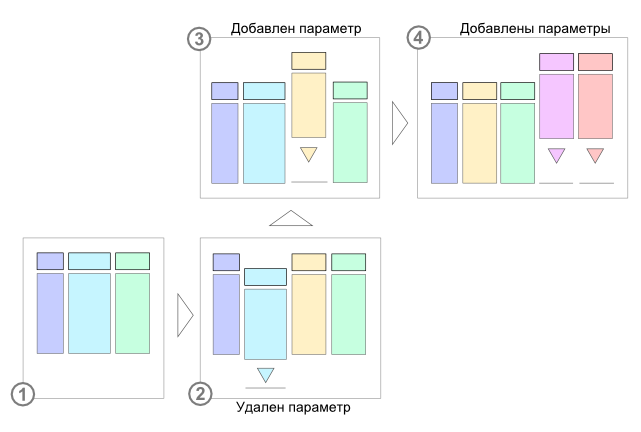

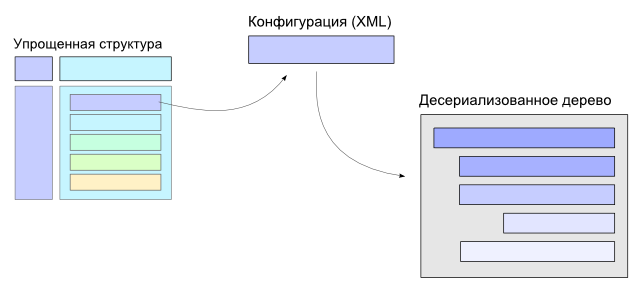

At this stage, the bright minds of the company decided to completely get rid of the need to change the database structure and store the device configuration in a very fashionable (just started to gain popularity) XML format. Agree, it’s very convenient: we deserialize XML into an object tree, work with them, then serialize it back into XML and save it to the database. Naturally, BLOB appears in the database, but after some hesitation, it was decided to leave this problem to the database. To convert the data, small independent programs were used (initially perl scripts, then logic in java), which ensured sequential conversion of the database, say, from version X to version Y.

the effect

Since the addition of new functionality (new options) was reduced to changing the XML representation, changes to the database structure were reduced to zero, greatly simplifying the process (at least from the point of view of working with the database). The development process accelerated, as it became possible to work with more complex data structures without worrying about almost anything. Now you can save history (just save a few XML), etc.

Timeline: Becoming

After significant processing of the configuration storage method, the development of the system accelerated and the number of problems, at first glance, decreased. The company released a new version, which, during installation, converted the old database into a new format, once again spending customers time for no apparent reason, but promising to save them from all troubles in the future. Customers believed and suffered. Then new versions followed that expanded the functionality of the system and solved the problems of the previous ones. Switching to a newer version of the system took from 15 to 30 minutes, which looked like a very serious achievement. But that was not for long. The increase in functionality has led to explosive growth in stored data. Far from the last role in this was played by the relative cheapness of configuration changes. The developers chose the easiest way to implement it completely without caring for the future.

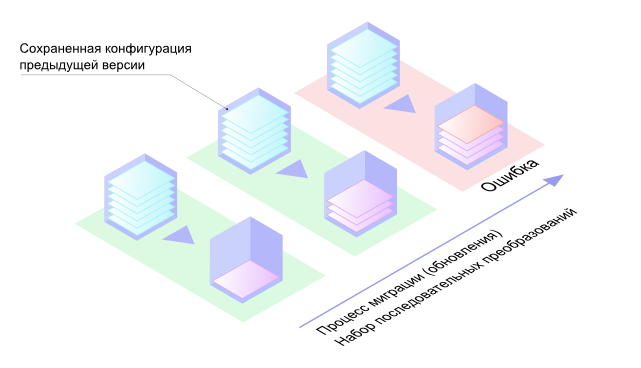

The result of this approach was a mountain of XML code and a large number of necessary transformations during the transition to each subsequent version. At this stage, in order to transfer the database from the X.1 state to the X.2 state (new functionality was added), it was necessary to perform one or several transformations on the configuration representations stored in the XML database. Only after successfully performing such transformations the system could start working (updated code could deserialize the saved view).

Problems at this stage

Configuration transformations were a set of sequential transformations of saved XML files (xml files were really stored in the database, which in the early stages were even completely compatible with devices). Some of these transformations treated the XML representation as a string and used the usual replacement. Other programmers preferred to work with the DOM tree or write “optimized” options using SAX parsers. Such transformations were independent and, for example, the presence of a large number of them in the release, could mean the need to perform several transformations for one type of configuration, each of which independently deserialized the DOM tree, modified the tree, and subsequent serialization, thus repeating resource-intensive and completely optional steps several times.

For developers, this operation took a short time, due to the extremely small size of test data used during development. The size of the real configuration stored by real systems was several orders of magnitude larger (from 100 kilobytes to 1-2 megabytes, with a total database size of up to 1-2 GB). The transition to a new version of the system, once again, has become a big problem for customers.

The complexity of the code that was involved in converting the stored XML was constantly increasing as the number of parameters, the number of possible options, the number of clients, etc. increased. Errors appeared that led to the appearance of a “bat” or simply incorrect configuration from the point of view of the application logic. Most of these problems were hidden and appeared only in the most complex and sophisticated cases - among the largest and most valuable clients. The situation was complicated by the fact that during development, the transformations of the database were started manually. Then, before the release of the next public version, one of the developers collected all the code responsible for converting the database into one application and formed the final build. Given the huge number of options and very tight (as always) time frames, It was not possible to test all the nuances of the system. Clients found bugs, lost databases (if they forgot to take care of the backup), lost databases later when they got to problem branches, etc.

It is possible to describe the global armageddon for a long time, I can only say that after the next release, the developers spent several weeks, manually editing the configuration in the customer databases. The overall situation was becoming threatening.

Decision

This time, bright heads for some time doubted their lordship, but there was no turning back. Once again, a global redesign of the system was not possible due to the enormous amount of work. After a lengthy meeting decided:

- introduce the concept of upgrade between builds and during development (testing) use mechanisms that, in the future, will be used by clients

- carefully write code to convert configuration

- test the system more intensively using customer databases (testing costs have increased significantly)

the effect

The effect was not significant, in addition, it was the merit of customers who finally upgraded (finally) and tested the system on live tasks.

Timeline: mature system

The system continued to be sold, becoming more and more complex. Clients put up with problems, the support service did what it could, often passing problems to the developers, because only they could try to fix the base with their hands. A variety of patches appeared, in a friendly atmosphere, people exchanged working “spells” that prevented a particular problem. In a word - life was in full swing.

Problems at this stage

At this stage, the task was set to solve the problem of long-term updates when switching to a new version, streamline the logic for updating the database and simplify the work of other teams, which, by the way, still had to spend considerable time editing and catching various bugs related to the converted configuration.

In addition, some, especially active, clients managed to bring the configuration size to 10 megabytes and the total database size to 5 gigabytes, after which they encountered a new class of very interesting problems. To complete the picture, you can wish the leadership to get a system compatible with devices of previous versions. All the time, the devices and the control system developed synchronously. Example: System.1 sent the configuration to Device.1, System.2 to Device.2, etc. The task was set to provide an opportunity for the System. 4 to send the correct configuration to the Devices. 2, 3, 4. The differences, by the way, were often very significant.

Decision

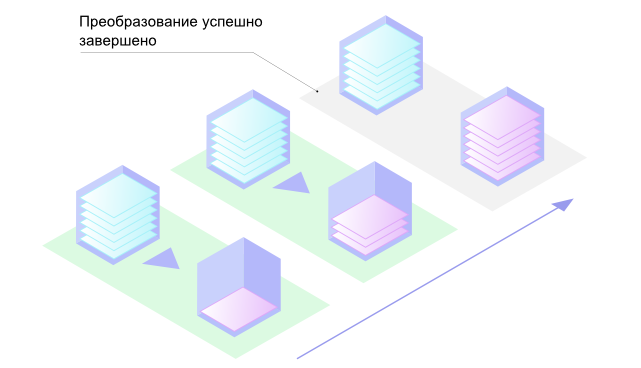

To write the logic for converting the configuration, a special mechanism was implemented that, among other things, was able to verify the correctness of the changes made at each stage, thus preventing the appearance of broken bases. An automated system for testing and verifying the entire product did its job, revealing even hidden and sometimes possible problems only in theory.

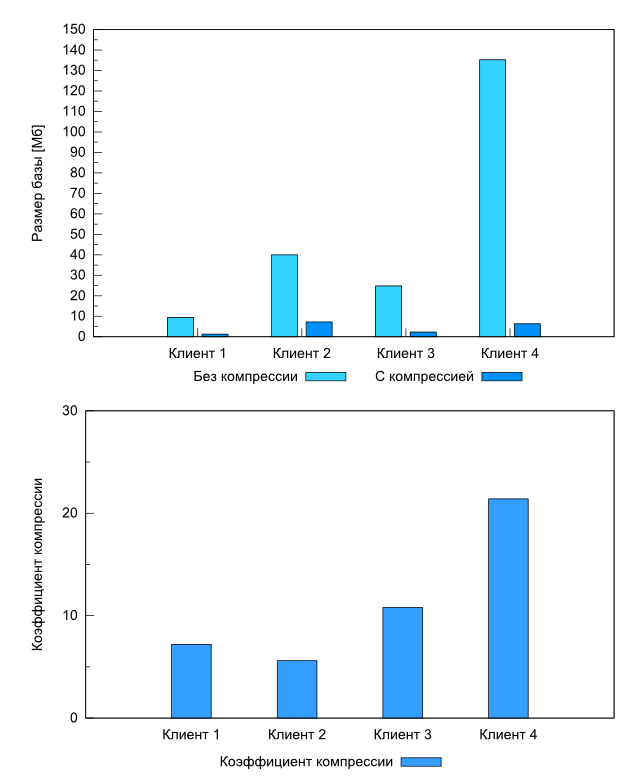

They began to store the configuration in archived form, solving both the problem of size and the problem of read and conversion speed (processor performance is growing, but the disk subsystem was a terrible brake, it remained so. Database (MySQL) performance really increased). Using a stable and debugged update mechanism, an inverse transformation mechanism was implemented, which made it possible to support devices of different versions. Automated tests were also used in this case. The life of developers, among other things, was greatly simplified by a universal device emulator, which not only showed what the control system sent, but also checked the configuration received, indicating potential errors.

Garbage was removed from the databases of all clients, after which their size decreased by an order of magnitude and the installation time for new versions was significantly reduced. Nevertheless, it was not possible to solve all the problems, cleverly and very carefully designed even at the very early stages, for obvious reasons.

Conclusion

The author received this project in a rather poor state. Together with the project, several hundred bugs, real clients, and many requests for additional functionality, including a terrible request for a system with backward compatibility and adaptation of the entire farm to the new-fangled Agile, were received. By the way, the implementation of continuous integration for a large project that has existed for a long time and checking everything that can be checked after each commit is a separate and very interesting topic. They managed to cope with the task, solely thanks to the excellent team and unlimited optimism. Many bugs have been fixed:

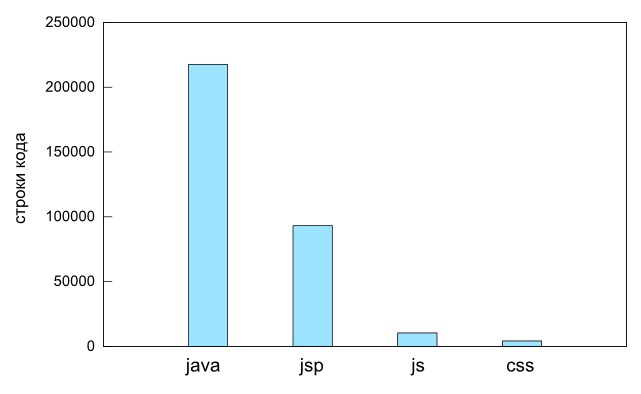

The development process was quite intense:

Remember, a real client from two systems will choose the most stable and convenient option that will not require expensive maintenance and will provide the minimum time of forced inactivity. To develop a system in the 21st century that requires a service stop is somewhat strange. Developing a “lying” system from a few hours to a day is at least stupid.

In the next article (if this opportunity presents itself), the author will talk about working on another system created from scratch and devoid of almost all the problems mentioned in this article. The new design can significantly reduce development and testing time, providing the ability to provide an updated assembly within a few hours. Naturally, the database is still in use, but no additional steps are required when upgrading to the new version; everything happens transparently, stably and quickly.

Bonus

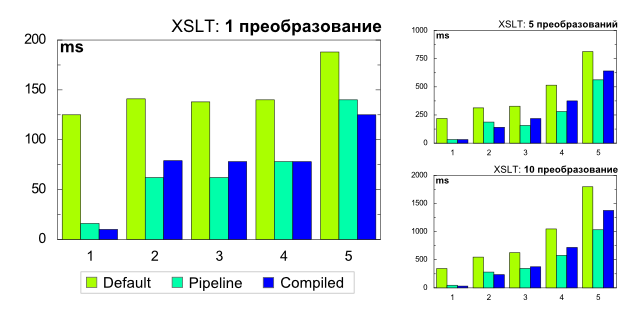

Solving the problem of updating the documents stored in the XML database, among others, the option of using XSLT transformations was considered. The solution seemed attractive and successful, allowing the use of several optimization techniques. For example, several transformations can be represented in the form of a conveyor and, if there are enough of them, get a noticeable performance gain. Below are the results of a small assessment test. Tests 1-5 differ in the size of the processed data. Do not take the results as a guide to action or the ultimate truth.