Interview: Jim Johnson of Standish Group

Jim Johnson is the founder and chairman of the Standish Group, a respected worldwide provider of research and analysis on the effectiveness of IT projects. He is best known for his research on why projects fail, as well as for other work on system costs and availability. He is also a pioneer of modern research techniques such as virtual focus groups and case-based analytics.

The Standish Group’s greatest claim to fame is undoubtedly the CHAOS Chronicles, which bring together 12 years of research on more than 50,000 completed IT projects, gathered through focus groups, in-depth surveys, and interviews with senior management. The objective of the study is to document the extent of failure among application development projects, the decisive factors behind failure, and ways to reduce the risk. In 1994, the Standish Group published its first CHAOS report, which documented billions of dollars spent on projects that were never completed. Since then, it has been among the most frequently quoted reports in the industry.

While on vacation, Jim Johnson took some time this week to talk with me about how the study is conducted and about the role of Agile methods in light of its results. We were also joined by Gordon Divitt, Executive Vice President of Acxsys Corporation, a software industry veteran who has taken part in CHAOS University events since their inception.

Please tell us how the first CHAOS report was compiled.

JJ: We had been doing research for quite some time before that, so let me tell you a little about that too.

Our first research subject was middleware sales. At the time we were running an IBM group in Belgium, about 100 people, and we could not track those sales properly. What I mean is that when you sell a toolkit, you expect a certain number of license agreements to follow. We were not seeing what we had counted on, so we had to ask people why those agreements were not being signed. They answered that their projects were not getting finished. At that time, according to our data, about 40% of such projects were cancelled. What people were describing was a real problem.

So we began running focus groups and the like to get feedback on how to deal with it. We ran surveys and tests to check the reliability of the sample, correcting and refining it until we had a sample that was representative of different industries and of companies of various sizes.

Do you consider the CHAOS sample representative of application development in general?

JJ: Yes.

So does it include, say, small software vendors?

JJ: Oh, no. It covers only government and commercial organizations, with no vendors, suppliers, or consultants. So Microsoft is not in our sample. And it covers only organizations with revenue above 10 million dollars, with rare exceptions such as Gordon’s company.

GD: Participation in CHAOS University events does seem to be limited mostly to large companies, doesn’t it?

JJ: Yes, we have really big customers. But at the same time, when we survey people, we try to avoid bias and cover the entire industry. We do not just survey our clients: we pay people to fill out questionnaires, and that happens completely separately. It’s like church and state, you understand (smiles).

Do you pay people to fill out questionnaires?

JJ: Well, they come to focus groups, and we pay for their time. If they fill out a questionnaire, we pay them a fee or give them some kind of gift. This is an established practice in research on this industry. It gives us a clean result based on unbiased answers. If you don’t pay, the people who respond tend to have some motive to influence the result of the study, and payment helps keep opinions neutral. That said, about a quarter donate the fee to charity, and most of those who work for the government do not take the money in any case.

We do use case studies when analysts ask us to, but we cannot use that information for marketing purposes or for our own reports.

The key property of the information we collect is that it is kept separate from any particular company: everything is always aggregated and strictly confidential, and we do not name names. Otherwise, we would not receive the data.

GD: That data is the company’s property.

JJ: Yes, absolutely, and we never publish the surveys themselves.

The results of your demographic survey are posted on your site. But it seems to me that something is missing, namely the principle by which you select companies for your database. Since you focus specifically on development failures, do you look for companies with some particular type of failure? Do you select companies with a failure rate higher than the IT industry average? Or do they come to you? In short: is your sample representative of failed development in general?

JJ: We may take a serious failure as a case study; we look for instructive failures. But not as data for the survey. We do not ask for failed projects. Our initial study was a mass mailing to the widest possible sample, perhaps because the response rate was extremely low (Editor’s note: the 1994 study covered more than 8,000 application development projects).

Now we invite people to participate through our SURF database, and we have certain entry criteria.

Participants must:

- have access to certain project information

- use specific applications

- use specific platforms.

Our database currently has about 3,000 active participants. You ask whether they self-select because they have particular problems, but no, I would not say that. I think they participate because it gives them access to the data, which we provide only to participants. But I don’t think there is any biased interest... I mean, we are constantly reviewing and correcting everything.

Correcting? In what sense?

JJ: We review the data and call people to clarify or exclude anything that looks incorrect, or that we are not sure is sufficiently reliable. We are not just filling a database; we need clean data.

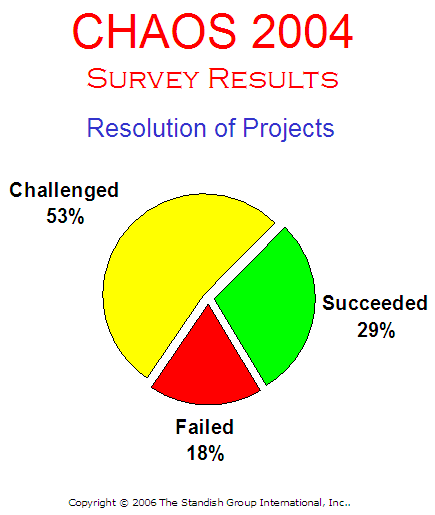

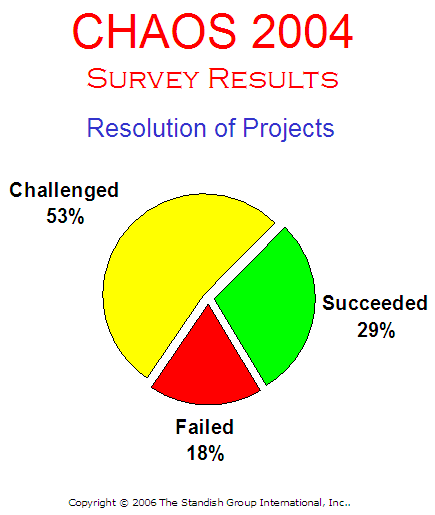

2004 CHAOS Study Results: Project Distribution

Challenged: 53%, successful: 29%, failed: 18%.

As you know, I wrote about how Robert Glass questioned the CHAOS results. Had anyone questioned your figures before that?

JJ: Not really. What I hear most often is that the numbers are too optimistic; people are mostly surprised. Their reaction to the share of failed projects (18%), those that were cancelled or never used, is quite logical. They understand why it happens: if everyone is striving to succeed and to cross the finish line first, then some will not make it, because they are operating at the edge.

Everyone knows that the most common scenario in our industry is to exceed the budget, miss deadlines, and fail to deliver the planned functionality. The most common comment is: “I don’t know of a single project where everything was done on time.”

We have presented our research results in many cities around the world. They can be found on the Internet. And you don’t have to be a genius to understand our methodology; it is public knowledge.

GD: And no one has yet walked away out of doubt. People rely on the research; they have been taking part in events and surveys for 12 years. They keep coming back, and that says something.

I have no doubt that the process is completely transparent, as it always has been with CHAOS, and I can vouch for the openness of the Standish Group. More importantly, in my opinion, I can vouch for clients’ acceptance of the results, which shows in their long-term cooperation with the group and their continued participation. If something were “rotten in the state of Denmark”, it would have surfaced long ago.

You once mentioned that people tend to confuse challenged projects with failures, and I have been guilty of this myself, and that you would advise against it. Could you say a few words about that?

JJ: I think many people lump these two categories together, challenged and failed. That is a mistake. The problem is that projects that deliver value and projects that deliver none get thrown into one pile. I would rather look at projects in terms of the value they deliver. A project that went over the million-dollar mark might still have more value, since the new system could bring more benefits. On the other hand, a lot of money is wasted in challenged projects. We are trying to separate the failure of a project from a failure of project management, where the project may still be of value. This topic interests me a great deal right now, and it is exactly what our new studies explore.

There are “successes” that miss all three parameters at once, yet the project is still successful and important. We always ask: “How would an objective person describe this project?” We do not want to put anyone at a disadvantage... but if the project was completed and not cancelled, then it is challenged, not a failure. It is not easy to draw a clear line here.

Projects change so much over time that it must be hard to get reliable information about, say, the initial scope and the final scope?

JJ: We put a lot of effort into establishing the difference between a challenged project and a successful one. Also, people sometimes hedge: they pad the project budget to avoid failure, and we have to watch for that as well.
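
As a reading aid, the three categories discussed above can be sketched in Python. This is only an illustration built from the descriptions given in this interview (failed means cancelled or never used; challenged means completed but late, over budget, or short on planned functionality). The `Project` fields and the `classify` function are hypothetical names, not the Standish Group’s actual method, which, as Johnson notes, involves judgment calls that a simple rule cannot capture.

```python
from dataclasses import dataclass


@dataclass
class Project:
    """Hypothetical project record; field names are illustrative only."""
    completed: bool           # delivered rather than cancelled
    used: bool                # results were actually put into use
    on_time: bool
    on_budget: bool
    full_functionality: bool  # planned functionality was delivered


def classify(p: Project) -> str:
    """Assign one of the three categories described in the interview."""
    # Failed: cancelled, or delivered but never used.
    if not p.completed or not p.used:
        return "failed"
    # Successful: all three parameters (time, budget, functionality) were met.
    if p.on_time and p.on_budget and p.full_functionality:
        return "successful"
    # Challenged: completed and used, but at least one parameter was missed.
    return "challenged"


late_but_delivered = Project(completed=True, used=True, on_time=False,
                             on_budget=True, full_functionality=True)
print(classify(late_but_delivered))
```

A rule this simple would label a padded-budget project “successful”, which is exactly the kind of case Johnson says needs a closer look.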

Ten Success Factors

1. User involvement

2. Support from senior executives

3. Clear business goals

4. Scope optimization

5. Workflow flexibility

6. Project manager experience

7. Financial management

8. Experienced employees

9. Formalized methodology

10. Standard tools and infrastructure

Your research into failed projects is really an attempt to find the secret of success, isn’t it? I noticed that number five on your list of project success factors is “workflow flexibility”. Does that mean Agile, as in Agile software development?

JJ: Yes, exactly! I am a big fan of Agile. I was working iteratively in the early 90s, and then there were the CHAOS reports on quick returns. We are strong advocates of small projects, small teams, and Agile in the development process. Kent Beck has lectured at CHAOS University, and I have spoken at his seminars. I am a real fan of Extreme Programming. In my new book, My Life Is Failure, I talk about Scrum, RUP, and XP.

GD: Agile methods help break projects down into smaller subprojects. Even if things go wrong, you find out in time. It is not like the old waterfall projects, where you dig yourself into a hole and only get the bad news two years later...

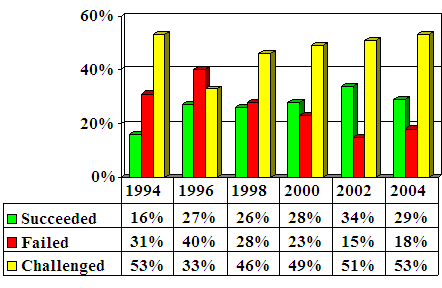

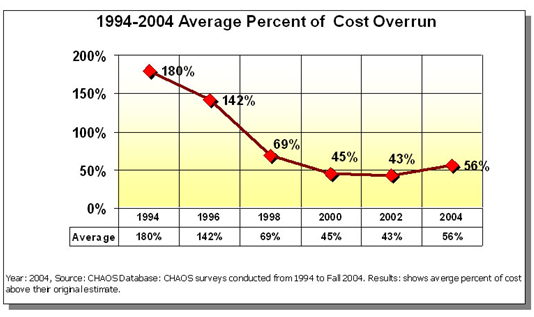

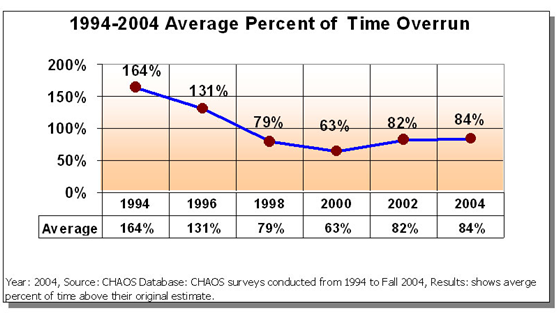

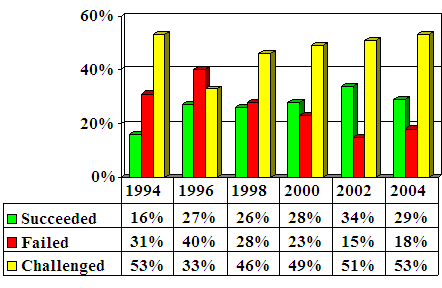

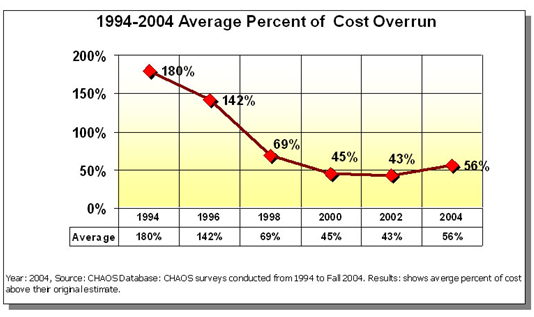

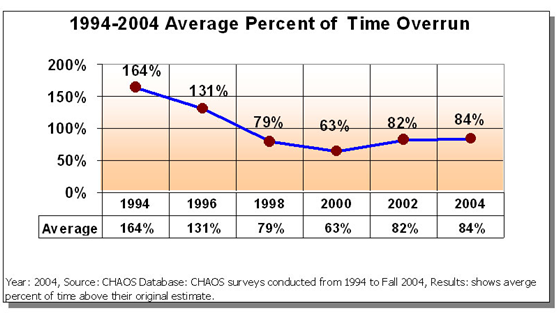

JJ: I think the secret is doing things step by step. I think that’s why we are seeing improvements. (Editor’s note: here are some charts that Jim Johnson uses in his lectures, taken from his 2004 study.)

Comparison: successful, failed, challenged.

Average percentage of cost overruns, 1994-2004.

Average percentage of schedule overruns, 1994-2004.

The big problem was that projects would “bloat”: deadlines were missed, budgets were overspent, and unnecessary functionality was built. Especially in government projects. Agile methods really help here: the project hardly grows at all. You know how it goes: first “I need this,” and then “it’s not as important as I thought.”

GD: Yes, prioritizing functionality helps a lot... constantly asking, “what is the business benefit?” Identify the low-value features and put them at the end of the list, and if you never get to them, it means they were never needed at all.

Have you collected any data about Agile in your research?

JJ: Yes, we tried. I hope we can show some of it. I put such questions into the questionnaires, but few people answered them, and it is hard to get unambiguous data on this. We have been trying to get something for the last couple of studies... I hope this time we will succeed. In a few days we will make a final appeal to SURF members to complete the 2006 study.

Agile may raise new questions. What counts as delivering the planned scope on a project that uses adaptive planning?

JJ: Good question. With companies like Webex, Google, Amazon, and eBay it is very hard to say, because they do “pipelining” rather than releases: “Whatever we get done in two weeks goes out to the world.” They are successful because users only encounter small changes. And by introducing changes this way, they are protected: remember, many projects fail after the development stage is complete, when it is time to move to implementation.

Pipelining? Sounds like variable scope.

JJ: Yes. Working with variable scope is very enlightening: people are much happier when they see that work is getting done. People like to see progress and results, and Agile embodies all of that.

GD: For those companies, the users are young, enthusiastic, and adaptable; they like change and like to try new things. Large banks do not tolerate this approach. So as I see it, might this process be too rough for them?

JJ: Not every methodology suits every project. Agile is hard for many organizations because their culture makes it difficult.

GD: One of the things I like about Agile is that you build something, look at it, and users say “I like it”, or “I don’t like it, can you change it?”, or “no, this is not the right way.” You get quick feedback, and fixing quality problems as you go works much better. With the waterfall approach, even if you bring the project to completion as planned, you still have to deal with all those quality problems afterwards. With Agile’s testing and feedback, quality improves.

JJ: People think Agile is very free-form. If they looked closely, they would see that it is just as rigorous as waterfall, or as anything James Martin would have come up with. To say that there has been no improvement over the past ten years is foolish. We have made significant progress.

Well, I promised that this would be the last question. Who would like the final word?

GD: You know, Jim is a real fighter, as is his whole team. That is why I work with them.

Jim, thank you very much for taking so much time for our conversation!
