Robot Ethics - Can One Be Killed to Save Five?

I want to talk about an interesting experiment in which researchers tried to encode the cultural imperatives of human morality into the memory of robots, to check whether the robots could make decisions the way people do. The experiments are rather cruel, but the researchers apparently saw no other way to evaluate what artificial intelligence is capable of. Today, robots are increasingly designed to operate autonomously, without human intervention. How can a fully autonomous machine be made to act in accordance with human morality and ethics?

This question gave rise to the field of “machine ethics”. Can a machine be programmed to act in accordance with morality and ethics? Can a machine act, and evaluate its own actions, from a moral point of view?

Isaac Asimov's famous Three Laws of Robotics are intended to impose ethical behavior on autonomous machines. Many films show robots behaving ethically, that is, autonomous machines making the kind of decisions we think of as uniquely human. So what exactly is this “uniquely human” quality?

An article published in the International Journal of Reasoning-based Intelligent Systems describes methods for programming computers so that machines can act on the basis of hypothetical moral reasoning.

The paper is titled “Modeling Morality with Prospective Logic”.

Its authors, Luis Moniz Pereira (Portugal) and Ari Saptawijaya (Indonesia), state that “ethics” is no longer exclusive to human nature.

The researchers believe they have successfully run experiments that simulate complex moral dilemmas for robots.

The dilemma the robots were given to solve is the well-known “trolley problem”, and the authors believe they managed to program the robots to solve it in accordance with human moral and ethical principles.

The trolley problem models a typical moral dilemma: is it permissible to harm one or more people in order to save the lives of others?

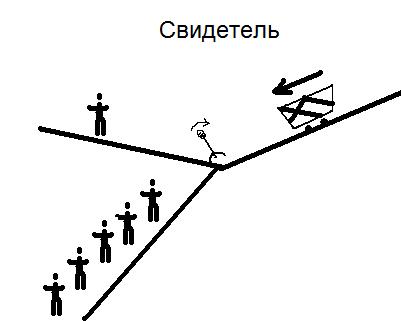

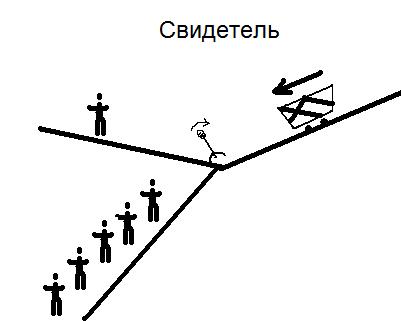

First experiment: “Bystander”

A trolley is being hauled up out of a tunnel by an automatic cable. Near the very top, the cable breaks and the car rolls back down. Five people are on the track in its path, and the trolley is moving too fast for them to escape. There is one way out: throw the switch and divert the trolley onto a side track. However, one person is standing on that track who knows nothing about the accident and will not have time to get out of the way either. The robot is standing at the switch and, having been told that the cable has broken, must make a moral decision. What is the right thing to do here: let five people die, or save them by sacrificing the one person on the side track?

Is it morally permissible to throw the switch and let a person die on the side track? In cross-cultural studies, people were asked exactly this question, and in every culture surveyed most respondents agreed that throwing the switch to save more people is permissible. That is also what the robot did. The hard part of the programming was the logic: it had to do more than subtract the number of dead and instead reason about the deeper moral structure of the situation. To show that the answer is not as simple as it seems, the researchers ran a second experiment.

Second experiment: “Footbridge”

The setup is the same as before (the cable breaks), but this time there is no side track. The robot is standing on a footbridge with a man next to it. Directly beneath the bridge runs the track along which the runaway trolley will hurtle. The robot can either push the man onto the track in front of the trolley, which will stop it, or do nothing, in which case the trolley will crush the five people on the track further down.

Is it morally permissible to push one person onto the track to save the others? Cross-cultural studies again put this question to people, and the answer was: no, it is not permissible.

So people give different answers in the two scenarios, even though the arithmetic (one life for five) is the same in both. Can a machine be taught to reason the same way? The researchers claim they have succeeded in programming computer logic to solve such complex moral problems. They did this by identifying the hidden rules that people use when forming moral judgments and then simulating those processes in logic programs.
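The article does not reproduce the authors' program, which was written as a logic program rather than in a general-purpose language. Purely as an illustration, here is a hypothetical Python sketch of one well-known candidate for such a hidden rule, the doctrine of double effect: harm may be accepted as a foreseen side effect of saving others, but not used as the means of saving them. The `Option` and `Dilemma` types, the `harm_is_means` flag, and both rule functions are assumptions made for this sketch, not the authors' model.

```python
from dataclasses import dataclass

# Hypothetical model for illustration only; not the authors' prospective-logic program.


@dataclass(frozen=True)
class Option:
    name: str
    deaths: int          # how many people die if this option is chosen
    harm_is_means: bool  # True if someone's death is the means of saving the others


@dataclass(frozen=True)
class Dilemma:
    name: str
    do_nothing: Option
    intervene: Option


def utilitarian_permits(d: Dilemma) -> bool:
    # Pure head-counting: intervene whenever it costs fewer lives.
    return d.intervene.deaths < d.do_nothing.deaths


def double_effect_permits(d: Dilemma) -> bool:
    # Simplified doctrine of double effect: harm is tolerated only as a
    # side effect (never as the means), and only if fewer people die.
    return (not d.intervene.harm_is_means
            and d.intervene.deaths < d.do_nothing.deaths)


bystander = Dilemma(
    name="Bystander",
    do_nothing=Option("let the trolley run", deaths=5, harm_is_means=False),
    # The man on the side track dies as a side effect of diverting the trolley.
    intervene=Option("throw the switch", deaths=1, harm_is_means=False),
)

footbridge = Dilemma(
    name="Footbridge",
    do_nothing=Option("let the trolley run", deaths=5, harm_is_means=False),
    # The man's body is what stops the trolley: his death is the means.
    intervene=Option("push the man", deaths=1, harm_is_means=True),
)

if __name__ == "__main__":
    for d in (bystander, footbridge):
        print(f"{d.name}: utilitarian={utilitarian_permits(d)}, "
              f"double effect={double_effect_permits(d)}")
```

Run as a script, this prints `utilitarian=True` for both scenarios, while the double-effect rule permits only the switch. In this toy model, head-counting alone would also push the man off the bridge; a rule that distinguishes harm as a means from harm as a side effect is one way to reproduce the pattern people showed in the surveys.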

In conclusion, computer models of morality still take the human being as their model, creating machines in our own image and likeness. So it is largely up to us what the new generation of responsible machines, which will make decisions based on the ethical imperatives embedded in their programs, will be like.

This article was prepared by Eugene (euroeugene), who received an invite from hellt for this article after it had been rejected in the sandbox. My thanks to him.
