Zabbix, time series and TimescaleDB

- Transfer

Each monitoring system faces three types of performance problems.

Firstly, a good monitoring system should very quickly receive, process and record data coming from outside. The account goes to microseconds. Offhand, this may seem unobvious, but when the system becomes large enough, all these fractions of seconds are summed up, turning into clearly noticeable delays.

The second task is to provide convenient access to large arrays of previously collected metrics (in other words, to historical data). Historical data is used in a wide variety of contexts. For example, reports and graphs are generated from them, aggregate checks are built on them, triggers depend on them. If there are any delays in accessing the history, then this immediately affects the speed of the entire system as a whole.

Thirdly, historical data takes up a lot of space. Even relatively modest monitoring configurations very quickly acquire a solid history. But hardly anyone wants to keep the load history of the processor five years old on hand, so the monitoring system should be able to not only record well, but also delete history well (in Zabbix this process is called “housekeeping”). Deleting old data does not have to be as effective as collecting and analyzing new ones, but heavy deletion operations draw on precious DBMS resources and can slow down more critical operations.

The first two problems are solved by caching. Zabbix supports several specialized caches to speed up data read and write operations. The DBMS mechanisms themselves are not suitable here, because even the most advanced general-purpose caching algorithm will not know which data structures require instant access at a given point in time.

Everything is fine as long as the data is in the Zabbix server memory. But the memory is not infinite and at some point the data needs to be written (or read) to the database. And if the database performance is seriously behind the speed of collecting metrics, then even the most advanced special caching algorithms will not help for a long time.

The third problem also comes down to database performance. To solve it, you need to choose a reliable deletion strategy that would not interfere with other database operations. By default, Zabbix deletes historical data in batches of several thousand records per hour. You can configure longer housekeeping periods or larger packet sizes if the speed of data collection and the place in the database allow it. But with a very large number of metrics and / or a high frequency of collecting them, proper housekeeping setup can be a daunting task, since a data deletion schedule may not keep up with the pace of recording new ones.

Summarizing, the monitoring system solves performance problems in three directions - collecting new data and writing it to the database using SQL INSERT queries, accessing data using SELECT queries, and deleting data using DELETE. Let's see how a typical SQL query is executed:

In general, there is a lot of work. Most DBMSs provide a ton of settings for query optimization, but they are usually focused on some average workflows in which inserting and deleting records occurs at about the same frequency as the change.

However, as mentioned above, for monitoring systems, the most typical operations are adding and periodic deletion in batch mode. Changing previously added data almost never occurs, and accessing the data involves the use of aggregated functions. In addition, usually the values of the added metrics are ordered by time. Such data is commonly referred to as time series :

From the database point of view, time series have the following properties:

Obviously, traditional SQL databases are not suitable for storing such data, since general-purpose optimizations do not take these qualities into account. Therefore, in recent years, quite a few new, time-oriented DBMSs have appeared, such as, for example, InfluxDB. But all the popular DBMSs for time series have one significant drawback - the lack of full SQL support. Moreover, most of them are not even CRUD (Create, Read, Update, Delete).

Can Zabbix use these DBMSs in any way? One of the possible approaches is to transfer historical data for storage to an external database specialized in the time series. Given that the Zabbix architecture supports external backends for storing historical data (for example, Elasticsearch support is implemented in Zabbix), at first glance this option looks very reasonable. But if we supported one or several DBMS for time series as external servers, then users would have to reckon with the following points:

For some users, the benefits of dedicated dedicated storage for historical data may outweigh the inconvenience of having to worry about another system. But for many, this is an unnecessary complication. It is also worth remembering that since most of these specialized solutions have their own APIs, the complexity of the universal layer for working with Zabbix databases will increase markedly. And we, ideally, prefer to create new functions, rather than fight against other APIs.

The question arises - is there a way to take advantage of the DBMS for time series, but without losing the flexibility and advantages of SQL? Naturally, a universal answer does not exist, but one specific solution got very close to the answer - TimescaleDB .

TimescaleDB (TSDB) is a PostgreSQL extension that optimizes work with time series in a regular PostgreSQL (PG) database. Although, as mentioned above, there is no shortage of well-scalable time series solutions on the market, a unique feature of TimescaleDB is its ability to work well with time series without sacrificing the compatibility and benefits of traditional CRUD relational databases. In practice, this means that we get the best of both worlds. The database knows which tables should be considered as time series (and apply all the necessary optimizations), but you can work with them in the same way as with regular tables. Moreover, applications are not required to know that the data is controlled by TSDB!

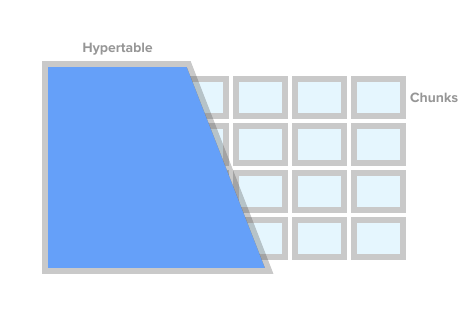

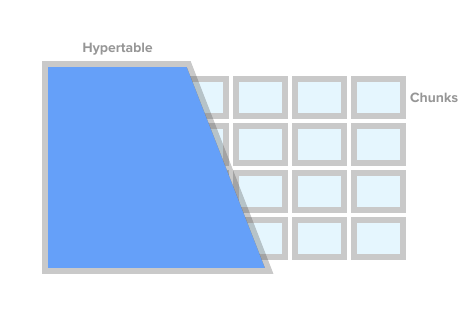

To mark a table as a time series table (in TSDB this is called a hypertable), just call the create_ hypertable () TSDB procedure. Under the hood, TSDB divides this table into so-called fragments (the English term is chunk) according to specified conditions. Fragments can be represented as automatically controlled sections of a table. Each fragment has a corresponding time range. For each fragment, TSDB also sets special indexes so that working with one data range does not affect access to others.

Hypertable Image from timescaledb.com

When the application adds a new value for the time series, the extension directs this value to the desired fragment. If the range for the time of the new value is not defined, then TSDB will create a new fragment, assign it the desired range and insert the value there. If an application requests data from a hypertable, then before executing the request, the extension checks which fragments are associated with this request.

But that is not all. TSDB complements the robust and time-tested PostgreSQL ecosystem with a host of performance and scalability changes. These include the quick addition of new records, quick time queries and virtually free batch deletions.

As noted earlier, in order to control the size of the database and comply with retention policies (i.e. do not store data for longer than necessary), a good monitoring solution should effectively delete a large amount of historical data. With TSDB, we can delete the desired story simply by deleting certain fragments from the hypertable. In this case, the application does not need to track fragments by name or any other links, TSDB will delete all the necessary fragments according to the specified time condition.

At first glance, it might seem that TSDB is a nice wrapper around the standard partitioning of PG tables ( declarative partitioning , as it is officially called in PG10). Indeed, to store historical data, you can use the standard partitioning PG10. But if you look closely, the fragments of the TSDB and the PG10 section are far from identical concepts.

To begin with, setting up partitioning in PG requires a deeper understanding of the details, which the application itself or the DBMS should do in a good way. First, you need to plan your section hierarchy and decide whether to use nested partitions. Secondly, you need to come up with a section naming scheme and somehow transfer it to the scripts for creating the scheme. Most likely, the naming scheme will include the date and / or time, and such names will need to be automated somehow.

Next, you need to think about how to delete expired data. In TSDB, you can simply call the drop_chunks () command, which determines the fragments to be deleted for a given period of time. In PG10, if you need to remove a certain range of values from standard PG sections, you will have to calculate the list of section names for this range yourself. If the selected partitioning scheme involves nested sections, this further complicates the deletion.

Another problem that needs to be addressed is what to do with data that goes beyond the current time ranges. For example, data may come from a future for which sections have not yet been created. Or from the past for already deleted sections. By default in PG10, adding such a record will not work and we will simply lose the data. In PG11, you can define a default section for such data, but this only temporarily masks the problem, and does not solve it.

Of course, all of the above problems can be solved in one way or another. You can hang the base with triggers, cron-jabs and sprinkle liberally with scripts. It will be ugly, but functional. There is no doubt that PG sections are better than giant monolithic tables, but what is definitely not solved through scripts and triggers is time-series improvements that PG does not have.

Those. Compared to PG sections, the TSDB hypertables are favorably distinguished not only by saving the nerves of DB administrators, but also by optimizing both access to data and adding new ones. For example, fragments in TSDB are always a one-dimensional array. This simplifies fragment management and speeds up inserts and selections. To add new data, TSDB uses its own routing algorithm in the desired fragment, which, unlike the standard PG, does not immediately open all sections. With a large number of sections, the difference in performance can vary significantly. Technical details about the difference between standard partitioning in PG and TSDB can be found in this article .

Of all the options, TimescaleDB seems to be the safest choice for Zabbix and its users:

Let's see what needs to be done to start TSDB with a freshly installed Zabbix. After installing Zabbix and running PostgreSQL database creation scripts, you need to download and install TSDB on the desired platform. See installation instructions here . After installing the extension, you need to enable it for the Zabbix base, and then run the timecaledb.sql script that comes with Zabbix. It is located either in database / postgresql / timecaledb.sql if the installation is from source, or in /usr/share/zabbix/database/timecaledb.sql.gz if the installation is from packages. That's all! Now you can start the Zabbix server and it will work with TSDB.

The timescaledb.sql script is trivial. All he does is convert the regular Zabbix historical tables to TSDB hypertables and change the default settings - sets the parameters Override item history period and Override item trend period. Now (version 4.2) the following Zabbix tables work under TSDB control - history, history_uint, history_str, history_log, history_text, trends and trends_uint. The same script can be used to migrate these tables (note that the migrate_data parameter is set to true). It must be borne in mind that data migration is a very long process and can take several hours.

The chunk_time_interval => 86400 parameter may also require changes before running timecaledb.sql. Chunk_time_interval is the interval that limits the time of values falling into this fragment. For example, if you set the chunk_time_interval interval to 3 hours, then the data for the whole day will be distributed over 8 fragments, with the first fragment No. 1 covering the first 3 hours (0: 00-2: 59), the second fragment No. 2 - the second 3 hours ( 3: 00-5: 59), etc. The last fragment No. 8 will contain values with a time of 21: 00-23: 59. 86400 seconds (1 day) is the average default value, but users of loaded systems may want to reduce it.

In order to roughly estimate the memory requirements, it is important to understand how much space one piece can occupy on average. The general principle is that the system must have enough memory to arrange at least one fragment from each hypertable. In this case, of course, the sum of the fragment sizes should not only fit into the memory with a margin, but also be less than the value of the shared_buffers parameter from postgresql.conf. Further information on this topic can be found in the TimescaleDB documentation.

For example, if you have a system that collects mainly integer metrics and you decide to split the history_uint table into 2-hour fragments, and divide the rest of the tables into one-day fragments, then you need to change this row in timecaledb.sql:

After a certain amount of historical data has accumulated, you can check the fragment sizes for the history_uint table by calling chunk_relation_size ():

This call can be repeated to find the fragment sizes for all hypertables. If, for example, it was found that the fragment size history_uint is 13MB, fragments for other history tables, say, 20MB and for trend tables 10MB, then the total memory requirement is 13 + 4 x 20 + 2 x 10 = 113MB. We must also leave space from shared_buffers to store other data, say 20%. Then the value of shared_buffers must be set to 113MB / 0.8 = ~ 140MB.

For finer tuning of TSDB, the timescaledb-tune utility has recently appeared. It analyzes postgresql.conf, correlates it with the system configuration (memory and processor), and then gives recommendations on setting memory parameters, parameters for parallel processing, WAL. The utility changes the postgresql.conf file, but you can run it with the -dry-run parameter and check the proposed changes.

We will dwell on the Zabbix parameters Override item history period and Override item trend period (available in Administration -> General -> Housekeeping). They are needed to delete historical data as whole fragments of TSDB hypertables, not records.

The fact is that Zabbix allows you to set the housekeeping period for each data element (metric) individually. However, this flexibility is achieved by scanning the list of elements and calculating individual periods in each iteration of housekeeping. If the system has individual housekeeping periods for individual elements, then the system obviously cannot have a single cut-off point for all metrics together and Zabbix will not be able to give the correct command to delete the necessary fragments. Thus, by turning off Override history for metrics, Zabbix will lose the ability to quickly delete history by calling the drop_chunks () procedure for history_ * tables, and, accordingly, turning off Override trends will lose the same function for trends_ * tables.

In other words, to take full advantage of the new housekeeping system, you need to make both options global. In this case, the housekeeping process will not read data item settings at all.

It's time to check whether all of the above really works in practice. Our test bench is Zabbix 4.2rc1 with PostgreSQL 10.7 and TimescaleDB 1.2.1 for Debian 9. The test machine is a 10-core Intel Xeon with 16 GB of RAM and 60 GB of storage space on the SSD. By today's standards, this is a very modest configuration, but our goal is to find out how effective TSDB is in real life. In configurations with an unlimited budget, you can simply insert 128-256 GB of RAM and put most (if not all) of the database into memory.

Our test configuration consists of 32 active Zabbix agents that transfer data directly to the Zabbix Server. Each agent serves 10,000 items. The Zabbix historical cache is set to 256MB, and shared_buffers PG is set to 2GB. This configuration provides sufficient load on the database, but at the same time does not create a large load on the Zabbix server processes. To reduce the number of moving parts between the data sources and the database, we did not use Zabbix Proxy.

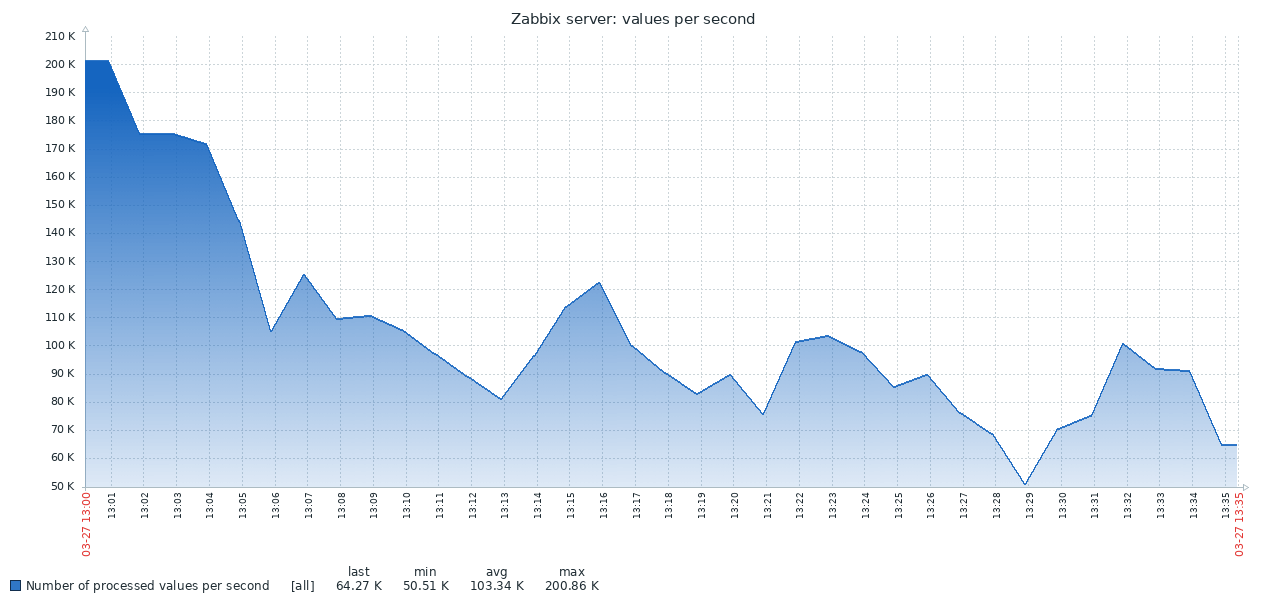

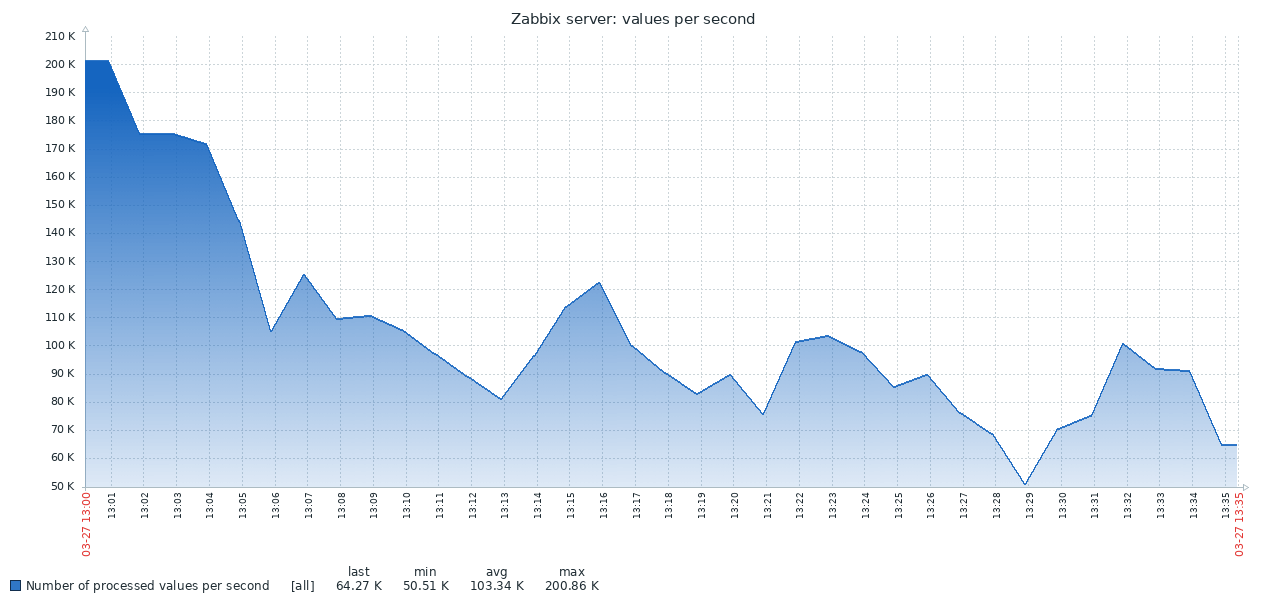

Here is the first result obtained from the standard PG system:

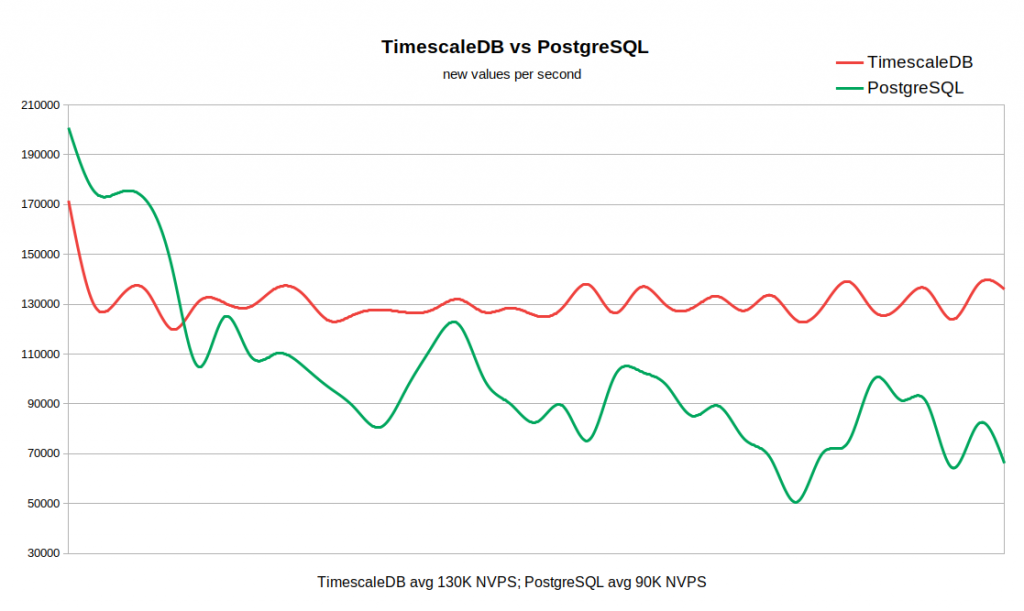

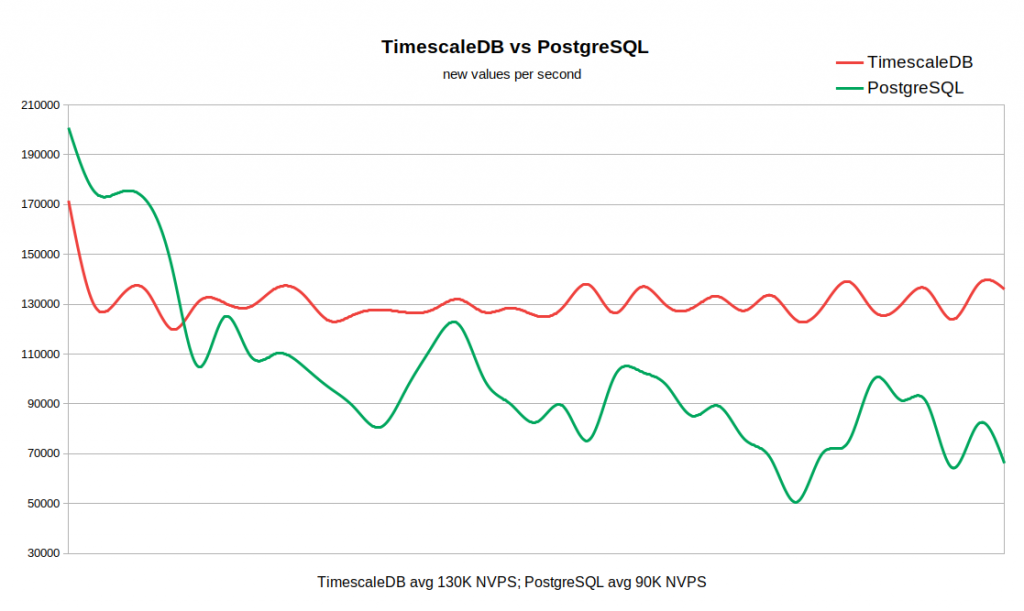

The result of TSDB looks completely different:

The graph below combines both results. Work begins with fairly high NVPS values in 170-200K, because It takes some time to fill the history cache before synchronization with the database begins.

When the history table is empty, the write speed in TSDB is comparable to the write speed in PG, and even with a small margin of the latter. As soon as the number of records in history reaches 50-60 million, the throughput of PG drops to 110K NVPS, but, what is more unpleasant, it continues to change inversely with the number of records accumulated in the historical table. At the same time, TSDB maintains a stable speed of 130K NVPS throughout the test from 0 to 300 million records.

In total, in our example, the difference in average performance is quite significant (130K versus 90K without taking into account the initial peak). It is also seen that the insertion rate in standard PG varies over a wide range. Thus, if a workflow requires storing tens or hundreds of millions of records in history, but there are no resources for very aggressive caching strategies, then TSDB is a strong candidate for replacing the standard PG.

The advantage of TSDB is already obvious for this relatively modest system, but most likely the difference will become even more noticeable on large arrays of historical data. On the other hand, this test is by no means a generalization of all possible scenarios of working with Zabbix. Naturally, there are many factors influencing the results, such as hardware configurations, operating system settings, Zabbix server settings and additional load from other services running in the background. That is, your mileage may vary.

TimescaleDB is a very promising technology. It has already been successfully operated in serious production environments. TSDB works well with Zabbix and offers significant advantages over the standard PostgreSQL database.

Does TSDB have any flaws or reasons to postpone using it? From a technical point of view, we do not see any arguments against. But it should be borne in mind that the technology is still new, with an unstable release cycle and an unclear strategy for the development of functionality. In particular, new versions with significant changes are released every month or two. Some functions may be removed, as, for example, happened with adaptive chunking. Separately, as another factor of uncertainty, it is worth mentioning the licensing policy. It is very confusing since there are three levels of licensing. The TSDB kernel is made under the Apache license, some functions are released under their own Timescale License, but there is also a closed version of Enterprise.

If you use Zabbix with PostgreSQL, then there is no reason at least not to try TimescaleDB. Perhaps this thing will pleasantly surprise you :) Just keep in mind that the support for TimescaleDB in Zabbix is still experimental - for the time being, we collect user reviews and gain experience.

Firstly, a good monitoring system should very quickly receive, process and record data coming from outside. The account goes to microseconds. Offhand, this may seem unobvious, but when the system becomes large enough, all these fractions of seconds are summed up, turning into clearly noticeable delays.

The second task is to provide convenient access to large arrays of previously collected metrics (in other words, to historical data). Historical data is used in a wide variety of contexts. For example, reports and graphs are generated from them, aggregate checks are built on them, triggers depend on them. If there are any delays in accessing the history, then this immediately affects the speed of the entire system as a whole.

Thirdly, historical data takes up a lot of space. Even relatively modest monitoring configurations very quickly acquire a solid history. But hardly anyone wants to keep the load history of the processor five years old on hand, so the monitoring system should be able to not only record well, but also delete history well (in Zabbix this process is called “housekeeping”). Deleting old data does not have to be as effective as collecting and analyzing new ones, but heavy deletion operations draw on precious DBMS resources and can slow down more critical operations.

The first two problems are solved by caching. Zabbix supports several specialized caches to speed up data read and write operations. The DBMS mechanisms themselves are not suitable here, because even the most advanced general-purpose caching algorithm will not know which data structures require instant access at a given point in time.

Monitoring and Time Series Data

Everything is fine as long as the data is in the Zabbix server memory. But the memory is not infinite and at some point the data needs to be written (or read) to the database. And if the database performance is seriously behind the speed of collecting metrics, then even the most advanced special caching algorithms will not help for a long time.

The third problem also comes down to database performance. To solve it, you need to choose a reliable deletion strategy that would not interfere with other database operations. By default, Zabbix deletes historical data in batches of several thousand records per hour. You can configure longer housekeeping periods or larger packet sizes if the speed of data collection and the place in the database allow it. But with a very large number of metrics and / or a high frequency of collecting them, proper housekeeping setup can be a daunting task, since a data deletion schedule may not keep up with the pace of recording new ones.

Summarizing, the monitoring system solves performance problems in three directions - collecting new data and writing it to the database using SQL INSERT queries, accessing data using SELECT queries, and deleting data using DELETE. Let's see how a typical SQL query is executed:

- The DBMS analyzes the query and checks it for syntax errors. If the request is syntactically correct, then the engine builds a syntax tree for further processing.

- The query planner analyzes the syntax tree and calculates the various ways (paths) for executing the request.

- The scheduler calculates the cheapest way. In the process, it takes into account a lot of things - how large are the tables, is it necessary to sort the results, are there indexes applicable to the query, etc.

- When the optimal path is found, the engine executes the query by accessing the desired data blocks (using indexes or sequential scanning), applies the sorting and filtering criteria, collects the result and returns it to the client.

- For insert, modify, and delete queries, the engine must also update indexes for the corresponding tables. For large tables, this operation may take longer than working with the data itself.

- Most likely, the DBMS will also update the internal statistics of data usage for subsequent calls to the query scheduler.

In general, there is a lot of work. Most DBMSs provide a ton of settings for query optimization, but they are usually focused on some average workflows in which inserting and deleting records occurs at about the same frequency as the change.

However, as mentioned above, for monitoring systems, the most typical operations are adding and periodic deletion in batch mode. Changing previously added data almost never occurs, and accessing the data involves the use of aggregated functions. In addition, usually the values of the added metrics are ordered by time. Such data is commonly referred to as time series :

Time series is a series of data points indexed (or listed or graffiti) in a temporary order.

From the database point of view, time series have the following properties:

- Time series can be located on a disk as a sequence of time-ordered blocks.

- Time series tables can be indexed using a time column.

- Most SQL SELECT queries will use WHERE, GROUP BY, or ORDER BY clauses on a time-indicating column.

- Typically, time series data has an “expiration date” after which it can be deleted.

Obviously, traditional SQL databases are not suitable for storing such data, since general-purpose optimizations do not take these qualities into account. Therefore, in recent years, quite a few new, time-oriented DBMSs have appeared, such as, for example, InfluxDB. But all the popular DBMSs for time series have one significant drawback - the lack of full SQL support. Moreover, most of them are not even CRUD (Create, Read, Update, Delete).

Can Zabbix use these DBMSs in any way? One of the possible approaches is to transfer historical data for storage to an external database specialized in the time series. Given that the Zabbix architecture supports external backends for storing historical data (for example, Elasticsearch support is implemented in Zabbix), at first glance this option looks very reasonable. But if we supported one or several DBMS for time series as external servers, then users would have to reckon with the following points:

- Another system that needs to be explored, configured and maintained. Another place to keep track of settings, disk space, storage policies, performance, etc.

- Reducing the fault tolerance of the monitoring system, as a new link appears in the chain of related components.

For some users, the benefits of dedicated dedicated storage for historical data may outweigh the inconvenience of having to worry about another system. But for many, this is an unnecessary complication. It is also worth remembering that since most of these specialized solutions have their own APIs, the complexity of the universal layer for working with Zabbix databases will increase markedly. And we, ideally, prefer to create new functions, rather than fight against other APIs.

The question arises - is there a way to take advantage of the DBMS for time series, but without losing the flexibility and advantages of SQL? Naturally, a universal answer does not exist, but one specific solution got very close to the answer - TimescaleDB .

What is TimescaleDB?

TimescaleDB (TSDB) is a PostgreSQL extension that optimizes work with time series in a regular PostgreSQL (PG) database. Although, as mentioned above, there is no shortage of well-scalable time series solutions on the market, a unique feature of TimescaleDB is its ability to work well with time series without sacrificing the compatibility and benefits of traditional CRUD relational databases. In practice, this means that we get the best of both worlds. The database knows which tables should be considered as time series (and apply all the necessary optimizations), but you can work with them in the same way as with regular tables. Moreover, applications are not required to know that the data is controlled by TSDB!

To mark a table as a time series table (in TSDB this is called a hypertable), just call the create_ hypertable () TSDB procedure. Under the hood, TSDB divides this table into so-called fragments (the English term is chunk) according to specified conditions. Fragments can be represented as automatically controlled sections of a table. Each fragment has a corresponding time range. For each fragment, TSDB also sets special indexes so that working with one data range does not affect access to others.

When the application adds a new value for the time series, the extension directs this value to the desired fragment. If the range for the time of the new value is not defined, then TSDB will create a new fragment, assign it the desired range and insert the value there. If an application requests data from a hypertable, then before executing the request, the extension checks which fragments are associated with this request.

But that is not all. TSDB complements the robust and time-tested PostgreSQL ecosystem with a host of performance and scalability changes. These include the quick addition of new records, quick time queries and virtually free batch deletions.

As noted earlier, in order to control the size of the database and comply with retention policies (i.e. do not store data for longer than necessary), a good monitoring solution should effectively delete a large amount of historical data. With TSDB, we can delete the desired story simply by deleting certain fragments from the hypertable. In this case, the application does not need to track fragments by name or any other links, TSDB will delete all the necessary fragments according to the specified time condition.

TimescaleDB and PostgreSQL Partitioning

At first glance, it might seem that TSDB is a nice wrapper around the standard partitioning of PG tables ( declarative partitioning , as it is officially called in PG10). Indeed, to store historical data, you can use the standard partitioning PG10. But if you look closely, the fragments of the TSDB and the PG10 section are far from identical concepts.

To begin with, setting up partitioning in PG requires a deeper understanding of the details, which the application itself or the DBMS should do in a good way. First, you need to plan your section hierarchy and decide whether to use nested partitions. Secondly, you need to come up with a section naming scheme and somehow transfer it to the scripts for creating the scheme. Most likely, the naming scheme will include the date and / or time, and such names will need to be automated somehow.

Next, you need to think about how to delete expired data. In TSDB, you can simply call the drop_chunks () command, which determines the fragments to be deleted for a given period of time. In PG10, if you need to remove a certain range of values from standard PG sections, you will have to calculate the list of section names for this range yourself. If the selected partitioning scheme involves nested sections, this further complicates the deletion.

Another problem that needs to be addressed is what to do with data that goes beyond the current time ranges. For example, data may come from a future for which sections have not yet been created. Or from the past for already deleted sections. By default in PG10, adding such a record will not work and we will simply lose the data. In PG11, you can define a default section for such data, but this only temporarily masks the problem, and does not solve it.

Of course, all of the above problems can be solved in one way or another. You can hang the base with triggers, cron-jabs and sprinkle liberally with scripts. It will be ugly, but functional. There is no doubt that PG sections are better than giant monolithic tables, but what is definitely not solved through scripts and triggers is time-series improvements that PG does not have.

Those. Compared to PG sections, the TSDB hypertables are favorably distinguished not only by saving the nerves of DB administrators, but also by optimizing both access to data and adding new ones. For example, fragments in TSDB are always a one-dimensional array. This simplifies fragment management and speeds up inserts and selections. To add new data, TSDB uses its own routing algorithm in the desired fragment, which, unlike the standard PG, does not immediately open all sections. With a large number of sections, the difference in performance can vary significantly. Technical details about the difference between standard partitioning in PG and TSDB can be found in this article .

Zabbix and TimescaleDB

Of all the options, TimescaleDB seems to be the safest choice for Zabbix and its users:

- TSDB is designed as a PostgreSQL extension, and not as a standalone system. Therefore, it does not require additional hardware, virtual machines, or any other changes in the infrastructure. Users can continue to use their chosen tools for PostgreSQL.

- TSDB allows you to save almost all the code for working with the database in Zabbix unchanged.

- TSDB significantly improves the performance of history syncer and housekeeper.

- Low entry threshold - The basic concepts of TSDB are simple and straightforward.

- Easy installation and configuration of both the extension itself and Zabbix will greatly help users of small and medium-sized systems.

Let's see what needs to be done to start TSDB with a freshly installed Zabbix. After installing Zabbix and running PostgreSQL database creation scripts, you need to download and install TSDB on the desired platform. See installation instructions here . After installing the extension, you need to enable it for the Zabbix base, and then run the timecaledb.sql script that comes with Zabbix. It is located either in database / postgresql / timecaledb.sql if the installation is from source, or in /usr/share/zabbix/database/timecaledb.sql.gz if the installation is from packages. That's all! Now you can start the Zabbix server and it will work with TSDB.

The timescaledb.sql script is trivial. All he does is convert the regular Zabbix historical tables to TSDB hypertables and change the default settings - sets the parameters Override item history period and Override item trend period. Now (version 4.2) the following Zabbix tables work under TSDB control - history, history_uint, history_str, history_log, history_text, trends and trends_uint. The same script can be used to migrate these tables (note that the migrate_data parameter is set to true). It must be borne in mind that data migration is a very long process and can take several hours.

The chunk_time_interval => 86400 parameter may also require changes before running timecaledb.sql. Chunk_time_interval is the interval that limits the time of values falling into this fragment. For example, if you set the chunk_time_interval interval to 3 hours, then the data for the whole day will be distributed over 8 fragments, with the first fragment No. 1 covering the first 3 hours (0: 00-2: 59), the second fragment No. 2 - the second 3 hours ( 3: 00-5: 59), etc. The last fragment No. 8 will contain values with a time of 21: 00-23: 59. 86400 seconds (1 day) is the average default value, but users of loaded systems may want to reduce it.

In order to roughly estimate the memory requirements, it is important to understand how much space one piece can occupy on average. The general principle is that the system must have enough memory to arrange at least one fragment from each hypertable. In this case, of course, the sum of the fragment sizes should not only fit into the memory with a margin, but also be less than the value of the shared_buffers parameter from postgresql.conf. Further information on this topic can be found in the TimescaleDB documentation.

For example, if you have a system that collects mainly integer metrics and you decide to split the history_uint table into 2-hour fragments, and divide the rest of the tables into one-day fragments, then you need to change this row in timecaledb.sql:

SELECT create_hypertable('history_uint', 'clock', chunk_time_interval => 7200, migrate_data => true);After a certain amount of historical data has accumulated, you can check the fragment sizes for the history_uint table by calling chunk_relation_size ():

zabbix=> SELECT chunk_table,total_bytes FROM chunk_relation_size('history_uint');

chunk_table | total_bytes

-----------------------------------------+-------------

_timescaledb_internal._hyper_2_6_chunk | 13287424

_timescaledb_internal._hyper_2_7_chunk | 13172736

_timescaledb_internal._hyper_2_8_chunk | 13344768

_timescaledb_internal._hyper_2_9_chunk | 13434880

_timescaledb_internal._hyper_2_10_chunk | 13230080

_timescaledb_internal._hyper_2_11_chunk | 13189120This call can be repeated to find the fragment sizes for all hypertables. If, for example, it was found that the fragment size history_uint is 13MB, fragments for other history tables, say, 20MB and for trend tables 10MB, then the total memory requirement is 13 + 4 x 20 + 2 x 10 = 113MB. We must also leave space from shared_buffers to store other data, say 20%. Then the value of shared_buffers must be set to 113MB / 0.8 = ~ 140MB.

For finer tuning of TSDB, the timescaledb-tune utility has recently appeared. It analyzes postgresql.conf, correlates it with the system configuration (memory and processor), and then gives recommendations on setting memory parameters, parameters for parallel processing, WAL. The utility changes the postgresql.conf file, but you can run it with the -dry-run parameter and check the proposed changes.

We will dwell on the Zabbix parameters Override item history period and Override item trend period (available in Administration -> General -> Housekeeping). They are needed to delete historical data as whole fragments of TSDB hypertables, not records.

The fact is that Zabbix allows you to set the housekeeping period for each data element (metric) individually. However, this flexibility is achieved by scanning the list of elements and calculating individual periods in each iteration of housekeeping. If the system has individual housekeeping periods for individual elements, then the system obviously cannot have a single cut-off point for all metrics together and Zabbix will not be able to give the correct command to delete the necessary fragments. Thus, by turning off Override history for metrics, Zabbix will lose the ability to quickly delete history by calling the drop_chunks () procedure for history_ * tables, and, accordingly, turning off Override trends will lose the same function for trends_ * tables.

In other words, to take full advantage of the new housekeeping system, you need to make both options global. In this case, the housekeeping process will not read data item settings at all.

Performance with TimescaleDB

It's time to check whether all of the above really works in practice. Our test bench is Zabbix 4.2rc1 with PostgreSQL 10.7 and TimescaleDB 1.2.1 for Debian 9. The test machine is a 10-core Intel Xeon with 16 GB of RAM and 60 GB of storage space on the SSD. By today's standards, this is a very modest configuration, but our goal is to find out how effective TSDB is in real life. In configurations with an unlimited budget, you can simply insert 128-256 GB of RAM and put most (if not all) of the database into memory.

Our test configuration consists of 32 active Zabbix agents that transfer data directly to the Zabbix Server. Each agent serves 10,000 items. The Zabbix historical cache is set to 256MB, and shared_buffers PG is set to 2GB. This configuration provides sufficient load on the database, but at the same time does not create a large load on the Zabbix server processes. To reduce the number of moving parts between the data sources and the database, we did not use Zabbix Proxy.

Here is the first result obtained from the standard PG system:

The result of TSDB looks completely different:

The graph below combines both results. Work begins with fairly high NVPS values in 170-200K, because It takes some time to fill the history cache before synchronization with the database begins.

When the history table is empty, the write speed in TSDB is comparable to the write speed in PG, and even with a small margin of the latter. As soon as the number of records in history reaches 50-60 million, the throughput of PG drops to 110K NVPS, but, what is more unpleasant, it continues to change inversely with the number of records accumulated in the historical table. At the same time, TSDB maintains a stable speed of 130K NVPS throughout the test from 0 to 300 million records.

In total, in our example, the difference in average performance is quite significant (130K versus 90K without taking into account the initial peak). It is also seen that the insertion rate in standard PG varies over a wide range. Thus, if a workflow requires storing tens or hundreds of millions of records in history, but there are no resources for very aggressive caching strategies, then TSDB is a strong candidate for replacing the standard PG.

The advantage of TSDB is already obvious for this relatively modest system, but most likely the difference will become even more noticeable on large arrays of historical data. On the other hand, this test is by no means a generalization of all possible scenarios of working with Zabbix. Naturally, there are many factors influencing the results, such as hardware configurations, operating system settings, Zabbix server settings and additional load from other services running in the background. That is, your mileage may vary.

Conclusion

TimescaleDB is a very promising technology. It has already been successfully operated in serious production environments. TSDB works well with Zabbix and offers significant advantages over the standard PostgreSQL database.

Does TSDB have any flaws or reasons to postpone using it? From a technical point of view, we do not see any arguments against. But it should be borne in mind that the technology is still new, with an unstable release cycle and an unclear strategy for the development of functionality. In particular, new versions with significant changes are released every month or two. Some functions may be removed, as, for example, happened with adaptive chunking. Separately, as another factor of uncertainty, it is worth mentioning the licensing policy. It is very confusing since there are three levels of licensing. The TSDB kernel is made under the Apache license, some functions are released under their own Timescale License, but there is also a closed version of Enterprise.

If you use Zabbix with PostgreSQL, then there is no reason at least not to try TimescaleDB. Perhaps this thing will pleasantly surprise you :) Just keep in mind that the support for TimescaleDB in Zabbix is still experimental - for the time being, we collect user reviews and gain experience.