Next Generation Billing Architecture: Transitioning to Tarantool

Why does a corporation such as MegaFon need Tarantool in billing? From the outside, it seems that a vendor usually comes, brings some big box, plugs it into the socket, and here comes the billing! That is how it used to be, but now it is archaic, and such dinosaurs are already extinct or becoming extinct. Originally, billing was simply an invoicing system: a counter or a calculator. In modern telecom, it is a system that automates the entire life cycle of interaction with the subscriber, from signing the contract to termination, including real-time charging, payment acceptance and much more. Billing in telecom companies is like a combat robot: large, powerful and loaded with weapons.

So where does Tarantool come in? Oleg Ivlev and Andrey Knyazev will talk about this. Oleg is the chief architect of MegaFon, with extensive experience in foreign companies; Andrey is the director of business systems. From the transcript of their talk at Tarantool Conference 2018, you will learn why corporations need R&D, what Tarantool is, and how the dead end of vertical scaling and globalization led to this database appearing in the company. The talk also covers the technological challenges, the transformation of the architecture, and how MegaFon's technology stack resembles those of Netflix, Google and Amazon.

Single Billing Project

The project we will discuss is called "Single Billing". It is in this project that Tarantool showed its best qualities.

The performance growth of high-end hardware did not keep pace with the growth of the subscriber base and the number of services. Further growth in subscribers and services was expected because of M2M and IoT, and branch-specific features worsened time-to-market. The company decided to replace its 8 separate billing systems with a unified business system built on a unique, world-class modular architecture.

MegaFon is eight companies in one. In 2009, a reorganization was completed that merged branches throughout Russia into a single company, MegaFon OJSC (now PJSC). As a result, the company had 8 billing systems, each with its own "custom" solutions, branch features, and different organizational structure, IT and marketing.

Everything was fine until we had to launch one common federal product. A lot of difficulties appeared here: some branches charged with rounding up, others with rounding down, and others by the arithmetic mean. There are thousands of such details.
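To make the divergence concrete, here is a minimal Python sketch that bills the same call under three rounding rules. The per-minute price and the function names are assumptions for illustration. So is reading "arithmetic mean" as rounding to the nearest minute (half up). None of this is MegaFon's actual tariffing logic.

```python
import math
from decimal import Decimal, ROUND_HALF_UP

PRICE_PER_MINUTE = Decimal("2.50")  # hypothetical tariff

def billable_minutes(seconds: int, rule: str) -> int:
    """Convert a call duration into billable minutes under a branch-specific rule."""
    minutes = Decimal(seconds) / Decimal(60)
    if rule == "up":
        return math.ceil(minutes)
    if rule == "down":
        return math.floor(minutes)
    if rule == "nearest":  # assumed meaning of "arithmetic mean"
        return int(minutes.quantize(Decimal("1"), rounding=ROUND_HALF_UP))
    raise ValueError(f"unknown rounding rule: {rule}")

def bill_call(seconds: int, rule: str) -> Decimal:
    """Price of one call under the given rounding rule."""
    return billable_minutes(seconds, rule) * PRICE_PER_MINUTE

for rule in ("up", "down", "nearest"):
    print(rule, bill_call(80, rule))
```

With these assumptions, an 80-second call costs 5.00 in a branch that rounds up and 2.50 in one that rounds down or to the nearest minute. Multiply that by thousands of such rules, and one federal product becomes hard to launch.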

Although the billing system version was the same and came from one supplier, the settings had diverged so much that merging them would have taken a long time. We tried to reduce the number of variations and ran into a second problem familiar to many corporations.

Vertical scaling. Even the best hardware of the time did not meet our needs. We used Hewlett-Packard equipment from the Superdome high-end line, but it could not handle the load of even two branches. We wanted horizontal scaling without large transaction costs and capital investments.

Expected growth in the number of subscribers and services. Consultants have long been bringing stories about IoT and M2M to the telecom world: a time will come when every phone and every clothes iron has a SIM card, and a refrigerator has two. Today we have one number of subscribers, and in the near future there will be an order of magnitude more.

Technological challenges

These four reasons drove us to major changes. We had a choice between upgrading the system and designing from scratch. We thought for a long time, made serious decisions and ran tenders. In the end, we decided to design from scratch and took on some interesting technological challenges.

Scalability

Previously there were, say, 8 billing systems of 15 million subscribers each. Now a single system has to serve 100 million subscribers or more, so the load is an order of magnitude higher.

We have become comparable in scale to major Internet players like Mail.ru or Netflix.

But further growth in load and in the subscriber base posed serious challenges for us.

The geography of our vast country

Kaliningrad and Vladivostok are 7,500 km and 10 time zones apart. The speed of light is finite, and at such distances the delays are already significant. Even on the best modern optical channels, 150 ms is a lot for real-time billing, especially billing as it works in Russian telecom today. In addition, updates have to fit into one business day, and with so many time zones this is a problem.
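A back-of-the-envelope Python calculation shows why distance alone matters. It assumes a straight fiber path of 7,500 km and a refractive index of 1.47, a typical value for single-mode fiber. Real cable routes are longer than the straight line, and equipment adds delay on top of this physical floor.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # in vacuum
FIBER_REFRACTIVE_INDEX = 1.47      # assumption: typical single-mode fiber
DISTANCE_KM = 7_500                # Kaliningrad to Vladivostok, as quoted in the talk

def fiber_delay_ms(distance_km: float, n: float = FIBER_REFRACTIVE_INDEX) -> float:
    """One-way propagation delay in milliseconds along a straight fiber path."""
    speed_in_fiber_km_s = SPEED_OF_LIGHT_KM_S / n
    return distance_km / speed_in_fiber_km_s * 1000

one_way = fiber_delay_ms(DISTANCE_KM)
round_trip = 2 * one_way
print(f"one way: {one_way:.1f} ms, round trip: {round_trip:.1f} ms")
```

Even this idealized lower bound is tens of milliseconds for every request-response across the country, before any processing.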

We do not just provide services for a monthly fee; we have complex tariffs, packages and various modifiers. We need not only to allow or forbid the subscriber to talk, but also to grant a certain quota. That means rating calls and actions in real time, so quickly that the subscriber does not notice.
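Online charging typically grants a quota using a reserve-then-commit pattern, as in Diameter credit control (RFC 4006), rather than a plain yes/no. The Python sketch below is a minimal single-process illustration. The `Account` class, the units and the method names are hypothetical, and real online charging adds sessions, timeouts and rating.

```python
from dataclasses import dataclass, field

@dataclass
class Account:
    """Hypothetical prepaid account; amounts are in minor currency units."""
    balance: int
    reserved: dict[str, int] = field(default_factory=dict)  # session -> held amount

    def available(self) -> int:
        """Balance that is not yet held by any active session."""
        return self.balance - sum(self.reserved.values())

    def reserve(self, session_id: str, requested: int) -> int:
        """Grant up to `requested` units; 0 means the call is not allowed."""
        granted = max(0, min(requested, self.available()))
        if granted:
            self.reserved[session_id] = self.reserved.get(session_id, 0) + granted
        return granted

    def commit(self, session_id: str, used: int) -> None:
        """Debit the actual usage and release the unused part of the quota."""
        held = self.reserved.get(session_id, 0)
        if used > held:
            raise ValueError("usage exceeds granted quota")
        self.reserved.pop(session_id, None)
        self.balance -= used

account = Account(balance=100)
print(account.reserve("call-1", 60))          # 60
print(account.reserve("call-2", 60))          # 40: only what is left
account.commit("call-1", 45)                  # 15 unused units return to the pool
print(account.balance, account.available())   # 55 15
```

Because the second call can only be granted what remains after the first reservation, two parallel sessions cannot together spend more than the balance.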

Fault tolerance

This is the flip side of centralization.

If we gather all subscribers in one system, then any emergency or disaster is devastating for the business. Therefore, we design the system so that no single accident can affect the entire subscriber base.
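The talk does not say how subscribers are split. The minimal Python sketch below shows the arithmetic behind limiting an accident to part of the base, assuming a fixed number of independent segments and hash-based placement. With N segments, losing one affects roughly 1/N of subscribers. The function names, the segment count and the subscriber numbers are hypothetical.

```python
import hashlib

def segment_of(subscriber_id: str, segments: int) -> int:
    """Stable mapping of a subscriber to one of `segments` independent segments."""
    digest = hashlib.sha256(subscriber_id.encode()).digest()
    return int.from_bytes(digest[:8], "big") % segments

def blast_radius(subscribers: list[str], segments: int, failed: set[int]) -> float:
    """Share of subscribers affected when the segments in `failed` go down."""
    hit = sum(1 for s in subscribers if segment_of(s, segments) in failed)
    return hit / len(subscribers)

# Hypothetical subscriber numbers, just to exercise the functions.
subscribers = [f"7900{n:07d}" for n in range(100_000)]
print(f"one of 10 segments down: {blast_radius(subscribers, 10, {3}):.1%} affected")
```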

This is again a consequence of abandoning vertical scaling. When we moved to horizontal scaling, the number of servers grew from hundreds to thousands. They need to be managed and interchangeable, the IT infrastructure must be backed up automatically, and the distributed system must recover automatically.

These were the interesting challenges we faced. While designing the system, we looked for world best practices to check how closely we were following current trends and advanced technologies.

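World experience

Surprisingly, we did not find a single reference in world telecom.

Europe did not fit in terms of the number of subscribers and scale, and the United States did not fit because of its flat tariffs. We looked at some things in China, but found something in India and brought in specialists from Vodafone India.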

To review the architecture, we assembled a Dream Team of architects from various fields, led by IBM. These people could adequately evaluate what we were doing and bring specific knowledge to our architecture.

Scale

A few numbers to illustrate.

We are designing the system for 80 million subscribers with headroom for one billion. This removes future thresholds. The reason is not that we are going to take over China, but the pressure of IoT and M2M.
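One common way to build in headroom like this is to fix a large number of virtual buckets up front. A subscriber always maps to the same bucket, and growth only changes which node owns which bucket. This mirrors the idea of virtual buckets in Tarantool's vshard sharding module. The code below, however, is a plain-Python illustration with an assumed bucket count and a deliberately simple rebalancing policy, not vshard's API.

```python
import zlib
from collections import Counter

BUCKET_COUNT = 30_000  # hypothetical; chosen once and never changed

def bucket_of(subscriber_id: str) -> int:
    """Subscriber -> virtual bucket; stays the same however many nodes are added."""
    return zlib.crc32(subscriber_id.encode()) % BUCKET_COUNT

def initial_layout(nodes: list[str]) -> dict[int, str]:
    """Spread buckets round-robin over the first set of nodes."""
    return {b: nodes[b % len(nodes)] for b in range(BUCKET_COUNT)}

def add_nodes(layout: dict[int, str], nodes: list[str]) -> dict[int, str]:
    """Rebalance onto `nodes` (all existing nodes plus new ones), moving only excess buckets."""
    target = BUCKET_COUNT // len(nodes)
    owned: dict[str, list[int]] = {n: [] for n in nodes}
    for b, n in sorted(layout.items()):
        owned[n].append(b)
    # Buckets above the target share on each existing node are free to move.
    spare = [b for n in nodes for b in owned[n][target:]]
    new_layout = dict(layout)
    for n in nodes:
        while len(owned[n]) < target and spare:
            b = spare.pop()
            owned[n].append(b)
            new_layout[b] = n
    return new_layout

before = initial_layout(["rs1", "rs2", "rs3"])
after = add_nodes(before, ["rs1", "rs2", "rs3", "rs4"])
moved = sum(before[b] != after[b] for b in before)
print(f"{moved} of {BUCKET_COUNT} buckets move; load per node: {Counter(after.values())}")
```

When the fourth node is added, only a quarter of the buckets migrate, and no subscriber is ever re-hashed. In this model, growing toward a billion subscribers means adding nodes and moving buckets rather than redesigning the key scheme.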

300 million documents are processed in real time. Although we have 80 million subscribers, we also work with potential customers and with former customers when we need to collect receivables. So the real volumes are much larger.

Every day, 2 billion transactions change balances: payments, charges, calls and other events. 200 TB of data changes actively and 8 PB changes somewhat more slowly. This is not an archive but live data in the single billing system. Data center scale: 5 thousand servers at 14 sites.
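To put 2 billion balance-changing transactions a day into the per-second terms used elsewhere in the talk, a short calculation is enough. The peak factor of 3 below is purely an assumption; real traffic is uneven through the day.

```python
SECONDS_PER_DAY = 24 * 60 * 60

def average_tps(events_per_day: float) -> float:
    """Average transactions per second for a given daily volume."""
    return events_per_day / SECONDS_PER_DAY

daily_balance_changes = 2_000_000_000
avg = average_tps(daily_balance_changes)
PEAK_FACTOR = 3  # hypothetical ratio of busy-hour load to average load
print(f"average: {avg:,.0f} tx/s, assumed peak: {avg * PEAK_FACTOR:,.0f} tx/s")
```

The average alone is about 23 thousand balance changes per second, the same order as the 30,000 transactions per second quoted for online charging. The peak figure depends entirely on the assumed factor.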

Technology stack

When we planned the architecture and set about assembling the system, we brought in the most interesting and advanced technologies. The result was a technology stack familiar to any Internet player and to any corporation that builds highly loaded systems.

The stack is similar to those of other major players such as Netflix, Twitter and Viber. It consists of 6 components, but we want to reduce and unify it.

Flexibility is good, but in a large corporation there is no way around unification.

We are not going to replace Oracle with Tarantool. In the realities of large companies, that is a utopia, or a 5-10 year crusade with an unclear outcome. But Cassandra and Couchbase can be replaced with Tarantool, and we are committed to doing so.

Why Tarantool?

We chose this database based on 4 simple criteria.

Speed. We ran stress tests on MegaFon production systems. Tarantool won by showing the best performance.

This is not to say that other systems do not meet MegaFon's needs. Current in-memory solutions are so productive that their performance headroom is more than enough for the company. But we want to deal with the leader, not with one trailing behind, including in load tests.

Tarantool covers the needs of the company even in the long run.

TCO. Support for Couchbase at MegaFon volumes costs astronomical money; with Tarantool the situation is much nicer, and the two are close in functionality.

Another nice feature that slightly influenced our choice: Tarantool uses memory more efficiently than other databases.

Reliability. MegaFon probably invests in reliability like no one else. So when we looked at Tarantool, we understood what had to be done for it to meet our requirements.

We invested our time and money, and together with Mail.ru we created an enterprise version, which is now used by several other companies as well.

Tarantool-enterprise fully satisfied our requirements for security, reliability and logging.

Partnership

The most important thing for me is direct contact with the developer. This is exactly what won us over with the Tarantool team.

If you come to a vendor, especially one that works with an anchor client, and say that you need the database to do this, this and that, the usual answer is:

- Well, put the requirements at the bottom of that pile; we will probably get to them sometime.

Many vendors have a roadmap for the next 2-3 years, and it is almost impossible to get into it. The Tarantool developers, by contrast, win you over with their openness, and not only towards MegaFon, and they adapt their system to the customer. This is cool, and we really like it.

Where did we apply Tarantool

We use Tarantool in several places. The first is the pilot we built on the address directory system. At one point I wanted it to be a system similar to Yandex.Maps and Google Maps, but it turned out a little differently.

Take, for example, the address directory in the sales interface. On Oracle, finding the address you need takes 12-13 seconds, which is an uncomfortable number. When we switch the console from Oracle to Tarantool and run the same search, we get a 200-fold speedup! The city pops up after the third letter, and we are now adapting the interface so that it happens after the first. In any case, the response time is completely different: milliseconds instead of seconds.
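Type-ahead lookup of this kind works well on an ordered index. All names that share a prefix sit next to each other, so a lookup is a binary search followed by a short scan. In Tarantool, iterating a TREE index with the `GE` iterator gives a similar access pattern. The plain-Python sketch below uses a hypothetical `AddressDirectory` class and is not the pilot's actual code.

```python
import bisect

class AddressDirectory:
    """Hypothetical in-memory address index supporting type-ahead prefix search."""

    def __init__(self, names: list[str]):
        # Sorted, case-folded keys keep every prefix match in one contiguous run.
        self._keys = sorted((n.casefold(), n) for n in names)

    def suggest(self, prefix: str, limit: int = 10) -> list[str]:
        """Return up to `limit` names starting with `prefix`, in sorted order."""
        p = prefix.casefold()
        i = bisect.bisect_left(self._keys, (p, ""))  # first key >= prefix
        out: list[str] = []
        while i < len(self._keys) and self._keys[i][0].startswith(p) and len(out) < limit:
            out.append(self._keys[i][1])
            i += 1
        return out

cities = ["Moscow", "Murmansk", "Mozhaysk", "Kaliningrad", "Kazan", "Vladivostok"]
directory = AddressDirectory(cities)
print(directory.suggest("Mo"))  # ['Moscow', 'Mozhaysk']
```

Each keystroke costs a logarithmic search plus the handful of results actually shown, which is why suggestions can appear after the first letter rather than the third.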

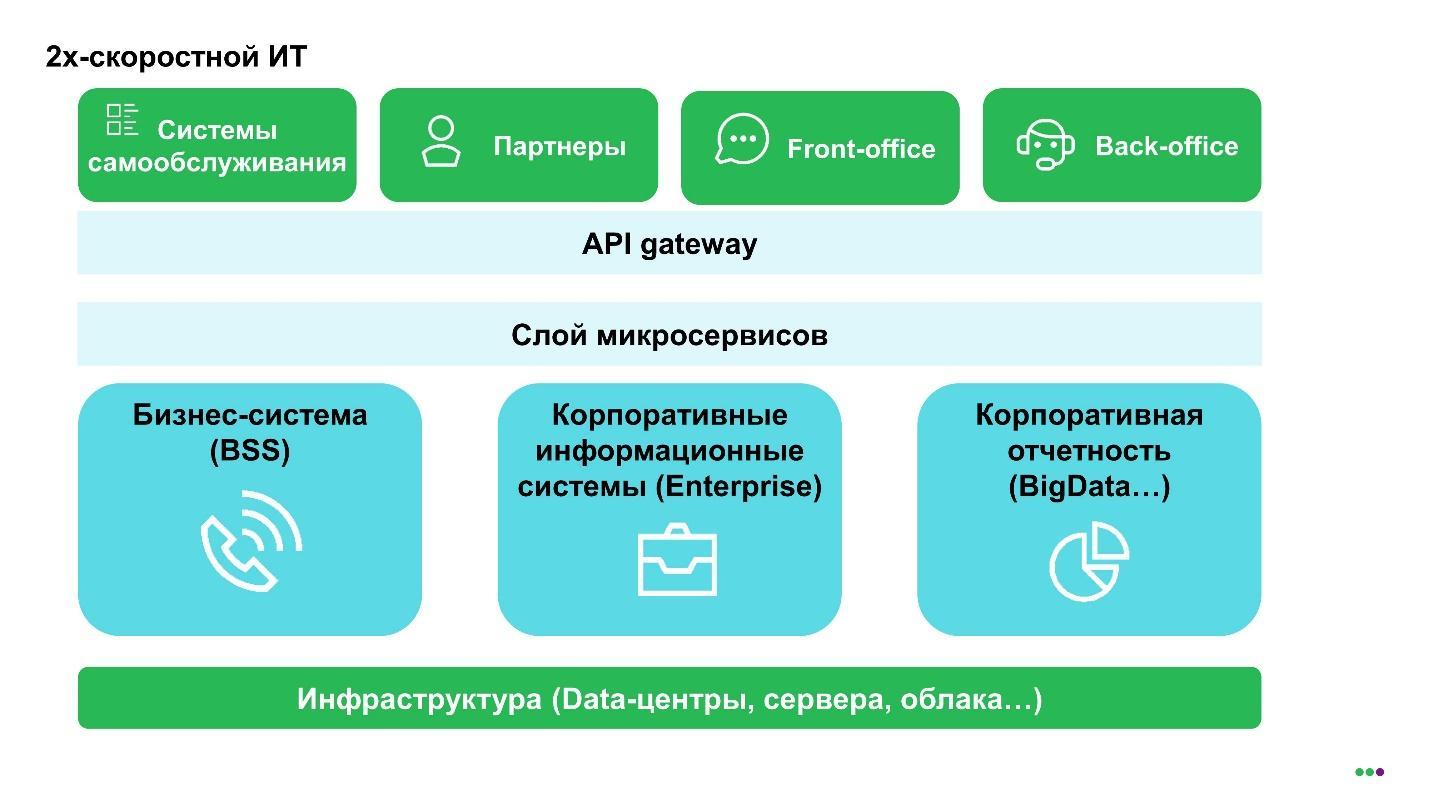

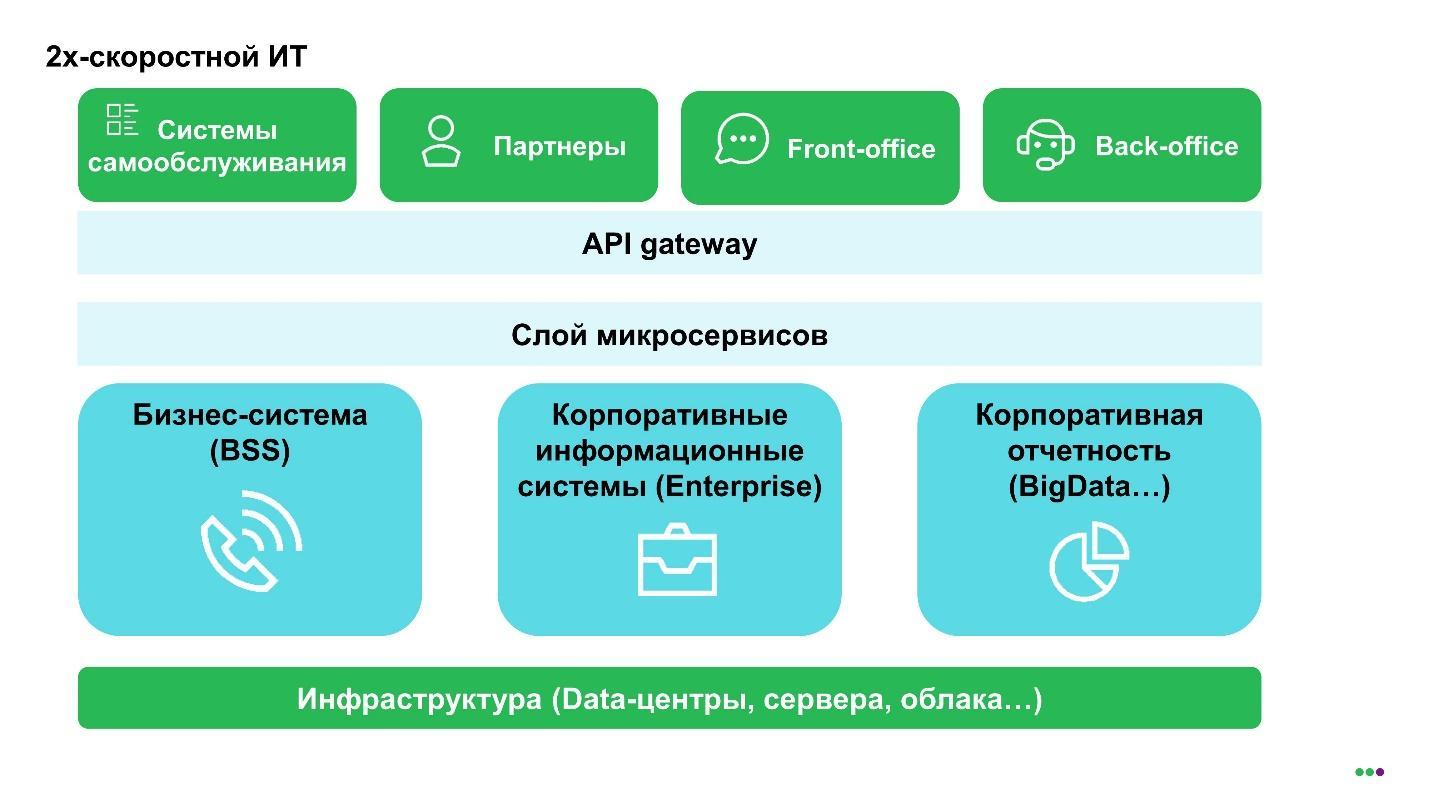

The second application is a trendy topic called two-speed IT, so named because consultants everywhere say that corporations should move there.

There is an infrastructure layer, and above it the domains: for example, a billing system, as in telecom, corporate systems and corporate reporting. This is the core, which should not be touched. Of course it can be touched, but only with paranoid attention to quality, because it brings money to the corporation.

Next comes the microservices layer, which is what differentiates an operator or another player. Microservices can be created quickly on top of caches that pull in data from different domains. This is a field for experiments: if something does not work out, close one microservice and open another. This genuinely improves time-to-market and increases the reliability and speed of the company.

Microservices are perhaps Tarantool's main role at MegaFon.

Where we plan to apply Tarantool

Compared with the transformation programs at Deutsche Telekom, Svyazkom and Vodafone India, our successful billing project has been surprisingly dynamic and creative. Over the course of this project, MegaFon and its structure were transformed, Tarantool-enterprise appeared at Mail.ru, and our vendor Nexign (formerly Peter-Service) got a BSS Box (a boxed billing solution).

In a sense, this is a historic project for the Russian market. It can be compared with what Frederick Brooks describes in "The Mythical Man-Month". Back in the 1960s, IBM brought in 5,000 people to develop the new OS/360 operating system for mainframes. We have fewer people, 1,800, but ours are real tough guys, and thanks to open source and new approaches, we work more productively.

The billing domains, or more broadly the business systems, are shown below. People in the enterprise world know CRM very well. Everyone should already have the other systems too: Open API, Gateway API.

Open API

Let's look at the numbers again and at how the Open API works now. Its load is 10,000 transactions per second. Since we plan to actively develop the microservice layer and build MegaFon's public API, we expect further growth in this particular part. We will certainly reach 100,000 transactions per second.

I don't know whether our SSO is comparable to Mail.ru's; they reportedly handle 1,000,000 transactions per second. We are extremely interested in their solution and plan to learn from their experience, for example, to build a functional SSO backup on Tarantool. Mail.ru developers are now working on this with us.

CRM

CRM means those very 80 million subscribers that we want to grow to a billion, and there are already 300 million documents that include a three-year history. We are really looking forward to new services, and here the growth point is connected services. This is a snowball that will keep growing, because there will be more and more services. Accordingly, we will need the history, and we do not want to stumble on this.

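Billing proper, in the sense of charging and working with customers' receivables, became a separate domain. To maximize performance, we apply the domain architecture pattern.

The system is divided into domains, the load is distributed, and fault tolerance is ensured. In addition, we worked on the distributed architecture.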

Everything else consists of enterprise-level solutions. The call detail store receives 2 billion records per day, 60 billion per month. Sometimes they have to be recalculated for a whole month, preferably quickly. Financial monitoring covers exactly those 300 million documents, and that number keeps growing because subscribers often switch between operators.

The most telecom-specific component of mobile communications is online charging. These are the systems that allow or deny a call, making the decision in real time. The load here is 30,000 transactions per second, but taking into account the growth in data traffic, we are planning for 250,000 transactions per second. That is why we are very interested in Tarantool.
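A rough capacity estimate helps turn the 30,000 and 250,000 transactions per second into hardware terms. In this Python sketch, the per-node throughput, the 30% headroom and the master-plus-replica redundancy are all hypothetical parameters, not measured Tarantool or MegaFon figures.

```python
import math

def nodes_needed(target_tps: int, per_node_tps: int,
                 headroom_pct: int = 30, redundancy: int = 2) -> int:
    """Nodes needed to serve target_tps while keeping headroom_pct spare on each node
    and running every shard as `redundancy` copies (e.g. master plus replica)."""
    usable_tps = per_node_tps * (100 - headroom_pct) / 100
    shards = math.ceil(target_tps / usable_tps)
    return shards * redundancy

PER_NODE_TPS = 50_000  # hypothetical throughput of a single node

for label, tps in [("online charging today", 30_000),
                   ("online charging planned", 250_000),
                   ("Open API planned", 100_000)]:
    print(f"{label}: {nodes_needed(tps, PER_NODE_TPS)} nodes")
```

The point of the exercise is the shape of the result: an eightfold growth in load translates into a proportional number of additional shards rather than a bigger machine, as long as the workload can be partitioned.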

The previous picture shows the domains where we are going to use Tarantool. CRM itself is, of course, broader, and we are going to apply Tarantool in its core as well.

As an architect, I am bothered by our design specification of 100 million subscribers: what if there are 101 million? Redo everything again? To prevent this, we use caches, which at the same time increase availability.

In general, there are two approaches to using Tarantool. The first is to build all caches at the microservice level. As far as I understand, VimpelCom follows this path and is building a customer cache.

We are less dependent on vendors. Since we are replacing the BSS core, we get a single customer registry out of the box, but we want to relieve its bottlenecks. Therefore, we use a slightly different approach and build caches inside the systems.

This method fits well with the Tarantool approach of a transactional skeleton, where the skeleton holds only the parts related to updates, that is, the data that changes. Everything else can be stored elsewhere. There is no huge data lake and no unmanaged global cache. Each cache is designed for a specific system: for products, for customers, or to make life easier for customer service. When a subscriber upset about quality calls us, I want to provide quality service.
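Here is one possible reading of a cache inside a system that holds only the changing parts, as a minimal Python sketch. Change events update a small set of "hot" fields, and everything else is read from the system of record on demand. The `SkeletonCache` class, the versioning scheme and the `load_cold` callback are hypothetical, not a Tarantool API.

```python
from typing import Callable

class SkeletonCache:
    """Hypothetical in-system cache that keeps only frequently changing fields."""

    def __init__(self, hot_fields: set[str], load_cold: Callable[[str], dict]):
        self.hot_fields = hot_fields
        self.load_cold = load_cold            # fetches the rest from the system of record
        self.rows: dict[str, dict] = {}
        self.versions: dict[str, int] = {}

    def apply_change(self, key: str, version: int, changes: dict) -> bool:
        """Apply a change event; stale or duplicate versions are ignored."""
        if version <= self.versions.get(key, 0):
            return False
        row = self.rows.setdefault(key, {})
        row.update({f: v for f, v in changes.items() if f in self.hot_fields})
        self.versions[key] = version
        return True

    def get(self, key: str) -> dict:
        """Merge cold fields from the source system with hot fields from the cache."""
        return {**self.load_cold(key), **self.rows.get(key, {})}

cold = {"42": {"name": "Ivan", "tariff": "Basic", "status": "unknown"}}
cache = SkeletonCache({"status", "tariff"}, lambda k: cold.get(k, {}))
cache.apply_change("42", 1, {"status": "active", "name": "ignored"})
cache.apply_change("42", 1, {"status": "blocked"})  # duplicate version, ignored
print(cache.get("42"))  # {'name': 'Ivan', 'tariff': 'Basic', 'status': 'active'}
```

Keeping only the changing fields keeps the in-memory footprint small, and the version check makes replays of the change stream harmless.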

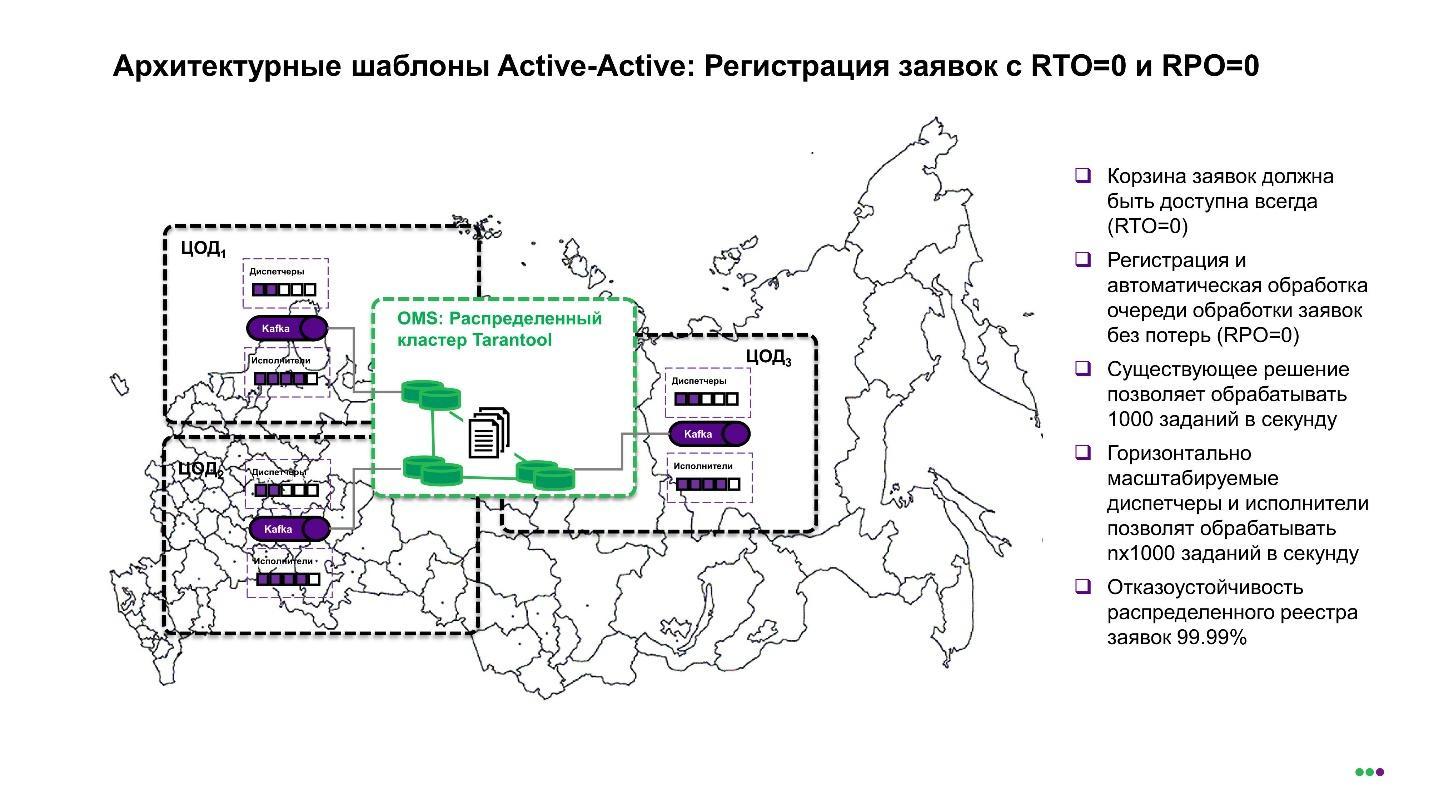

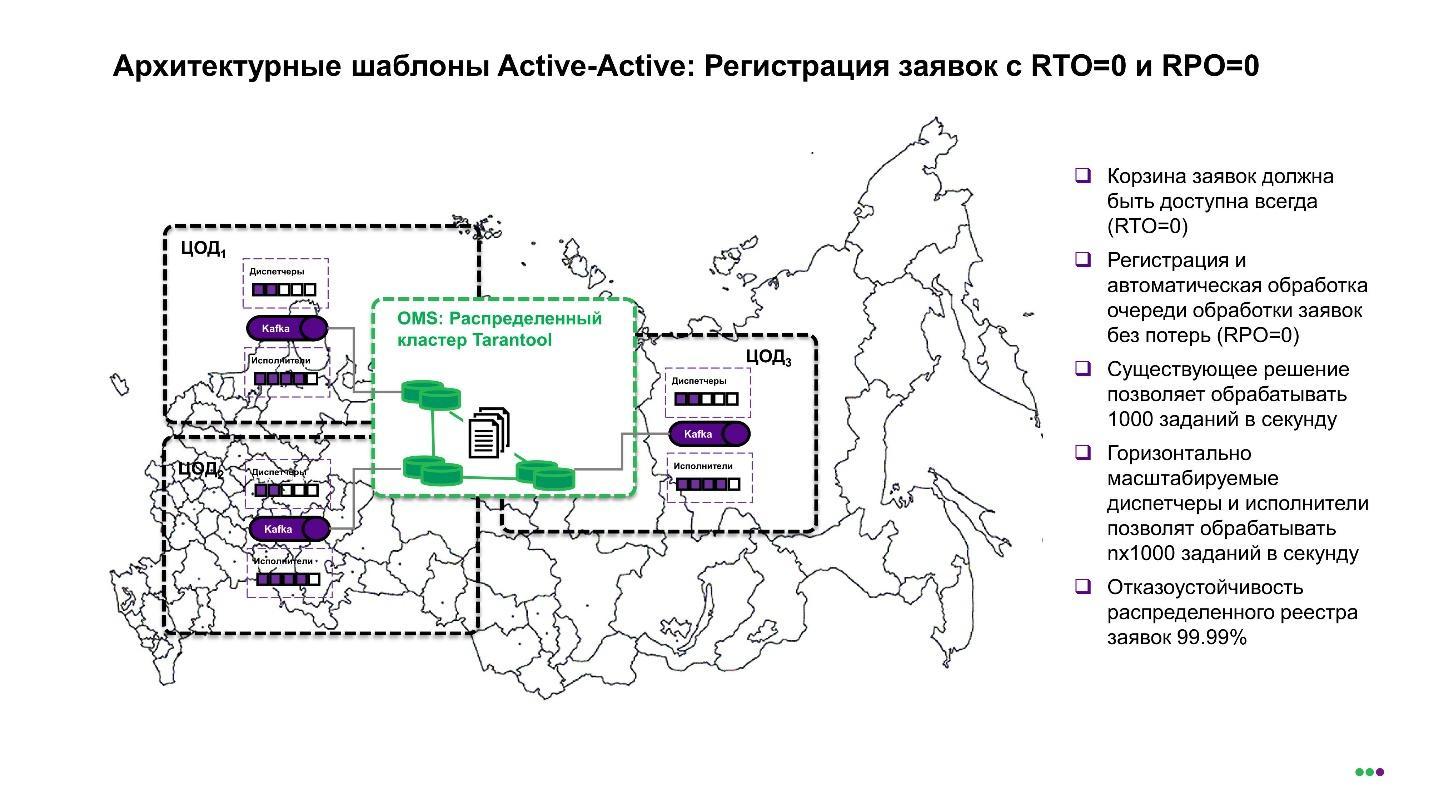

There are two terms in IT: RTO and RPO.

Recovery time objective (RTO) is the time it takes to restore a service after a failure. RTO = 0 means that even if something fails, the service keeps working.

Recovery point objective (RPO) is how much data, measured as a period of time, we can afford to lose. RPO = 0 means we do not lose any data.
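Both terms can be read off an incident timeline. In this minimal Python sketch, the field names and the timestamps are hypothetical. RPO is the gap between the last change that survived on a replica and the failure. RTO is the gap between the failure and the moment service is restored.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

@dataclass
class Incident:
    last_replicated_commit: datetime  # newest change that survived on a replica
    failure: datetime                 # moment the primary site went down
    service_restored: datetime        # moment clients could work again

    @property
    def rpo(self) -> timedelta:
        """Window of lost data: changes made after the last replicated commit."""
        return max(self.failure - self.last_replicated_commit, timedelta(0))

    @property
    def rto(self) -> timedelta:
        """Time during which the service was unavailable."""
        return max(self.service_restored - self.failure, timedelta(0))

t0 = datetime(2018, 11, 1, 12, 0, 0)
async_failover = Incident(t0 - timedelta(seconds=2), t0, t0 + timedelta(seconds=1))
print(async_failover.rpo, async_failover.rto)  # 0:00:02 0:00:01
```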

Let's try to solve this problem with Tarantool.

Given: everyone understands what a shopping basket of orders is, for example, on Amazon or elsewhere. The basket must work 24/7, or 99.99% of the time. Incoming orders must be processed in sequence, because we cannot randomly enable or disable services for a subscriber; everything must be strictly consistent. Each subscription affects the next, so the data matters and nothing may be lost.
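Even 99.99% allows some downtime. A short Python calculation of the downtime budget shows why RTO close to zero matters. The 365-day year and 30-day month are simplifying assumptions.

```python
MINUTES_PER_YEAR = 365 * 24 * 60   # assumption: 365-day year
MINUTES_PER_MONTH = 30 * 24 * 60   # assumption: 30-day month

def downtime_budget(availability: float, period_minutes: int) -> float:
    """Maximum minutes of downtime allowed by an availability target."""
    return (1 - availability) * period_minutes

for target in (0.999, 0.9999, 0.99999):
    print(f"{target:.3%}: {downtime_budget(target, MINUTES_PER_YEAR):.1f} min/year, "
          f"{downtime_budget(target, MINUTES_PER_MONTH):.2f} min/month")
```

At 99.99%, the budget is about 52.6 minutes a year, or roughly 4.3 minutes a month, which a single manual failover could easily consume.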

Solution. You could try to solve it head-on and ask the database developers, but the problem has no mathematical solution. One may recall theorems, conservation laws and quantum physics, but there is no point: it cannot be solved at the database level.

The good old architectural approach works here: you need to know the subject area well and use that knowledge to solve the puzzle.

Our solution is to create a distributed order registry on Tarantool, that is, a geo-distributed cluster. In the diagram, there are three data centers, two west of the Urals and one east of the Urals, and we distribute all orders across them.

Netflix, now considered one of the leaders in IT, had only one data center until 2012. On Christmas Eve, December 24, that data center went down. Users in Canada and the United States were left without their favorite films, were very upset and wrote about it on social networks. Netflix now has three data centers on the west and east coasts and one in Western Europe.

So, we have a cluster, but what about RPO = 0 and RTO = 0? The solution is simple, and it depends on the subject area.

What matters in orders? There are two parts: working with the basket BEFORE the purchase decision, and AFTER it. In telecom, the BEFORE part is usually called order capturing or order negotiation. It can be much more complicated than in an online store: you need to serve the client and offer 5 options, all of which takes time while the basket is being filled. A failure is possible at this point, but it is not scary, because everything happens interactively under a person's supervision.

If the Moscow data center suddenly fails, we automatically switch to another data center and keep working. In theory, one product in the basket may be lost, but you will see that, add it to the basket again and continue. In this case, RTO = 0.

The second case is when we have clicked "submit" and want the data not to be lost. From that moment on, automation takes over, and this is where RPO = 0 applies. The two cases call for different patterns: a geo-distributed cluster with one switchable master for the first, and some kind of quorum write for the second. The patterns may vary, but we solve the problem.
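The two patterns can be contrasted in a toy Python model of three data centers. Basket edits go to a switchable master, with replication assumed to lag, so failover is instant but the newest edit may be lost. Submitted orders are confirmed only when a majority of data centers store them. The class names and data center names are hypothetical, and this is not Tarantool's replication API.

```python
class DataCenter:
    def __init__(self, name: str):
        self.name = name
        self.up = True
        self.log: list = []

class OrderRegistry:
    """Toy registry over three data centers (names are hypothetical)."""

    def __init__(self, names=("dc-west-1", "dc-west-2", "dc-east")):
        self.dcs = [DataCenter(n) for n in names]
        self.quorum = len(self.dcs) // 2 + 1

    def master(self) -> DataCenter:
        # Switchable master: the first healthy data center serves traffic.
        # Raises StopIteration if every data center is down.
        return next(dc for dc in self.dcs if dc.up)

    def save_draft(self, item) -> None:
        # BEFORE "submit": write to the master only. Replication to the others
        # is assumed to be asynchronous, so the newest edit may be lost on failover.
        self.master().log.append(item)

    def submit(self, order) -> bool:
        # AFTER "submit": confirm only if a majority of data centers store the order.
        healthy = [dc for dc in self.dcs if dc.up]
        if len(healthy) < self.quorum:
            return False  # refuse rather than confirm an order that could be lost
        for dc in healthy:
            dc.log.append(order)
        return True

registry = OrderRegistry()
registry.save_draft("add SIM to basket")
registry.dcs[0].up = False           # assume this is the Moscow data center
print(registry.master().name)        # dc-west-2
print(registry.master().log)         # [] (the unsubmitted edit is gone)
print(registry.submit("order-1"))    # True: 2 of 3 data centers confirmed
registry.dcs[1].up = False
print(registry.submit("order-2"))    # False: no quorum, order not confirmed
```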

Furthermore, with a distributed order registry we can scale everything, with many dispatchers and executors accessing the registry.
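Many executors sharing one registry must still respect the rule that each subscription affects the next. One way to reconcile the two is to process orders strictly in sequence per subscriber while different subscribers proceed in parallel. The Python sketch below is single-threaded and hypothetical: a real registry would make `take` atomic and persist its state.

```python
from collections import defaultdict, deque

class OrderQueue:
    """Hypothetical registry: FIFO per subscriber, parallel across subscribers."""

    def __init__(self):
        self.pending = defaultdict(deque)  # subscriber -> orders not yet started
        self.busy = set()                  # subscribers with an order in progress
        self.ready = deque()               # subscribers whose next order may start

    def put(self, subscriber: str, order: str) -> None:
        q = self.pending[subscriber]
        q.append(order)
        if len(q) == 1 and subscriber not in self.busy:
            self.ready.append(subscriber)

    def take(self):
        """Give a worker the next order whose predecessors are all finished."""
        if not self.ready:
            return None
        subscriber = self.ready.popleft()
        self.busy.add(subscriber)
        return subscriber, self.pending[subscriber].popleft()

    def done(self, subscriber: str) -> None:
        """Mark the subscriber's current order finished and unlock the next one."""
        self.busy.discard(subscriber)
        if self.pending[subscriber]:
            self.ready.append(subscriber)

queue = OrderQueue()
queue.put("A", "A1"); queue.put("A", "A2"); queue.put("B", "B1")
print(queue.take(), queue.take(), queue.take())  # ('A', 'A1') ('B', 'B1') None
queue.done("A")
print(queue.take())                              # ('A', 'A2')
```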

There is another case: the "balance showcase". It is an interesting example of using Cassandra and Tarantool together.

We use Cassandra because 2 billion calls per day is not the limit, and there will be more. Marketers love to break traffic down by source, and there is more and more detail, for example, on social networks. All of this makes the history grow.

We are comfortable with Cassandra, but it has one problem: it is not good at reads. Writes are fine, and 30,000 per second is no problem; the problem is reads.

That is how the cache topic came up, and at the same time we solved another problem. There is an old, traditional case: records from the online charging switch arrive as files, which we load into Cassandra. We struggled to load these files reliably, and on IBM's advice we even applied managed file transfer. Such solutions manage file transfers efficiently, for example by using UDP rather than TCP. This is good, but it still takes minutes, and until everything is loaded, the call center operator cannot tell the client what happened to their balance. We have to wait.

To avoid this, we apply a parallel functional backup. We send each event through Kafka to Tarantool, which recalculates aggregates in real time, for example for the current day. The result is a balance cache that can serve balances at any rate, for example 100 thousand transactions per second, within those same 2 seconds.

The goal is that 2 seconds after a call, your account shows not only the changed balance but also the reason it changed.
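The aggregation step can be sketched in Python with the event stream simulated as a list; in the setup described, events arrive through Kafka. The field names, the per-category breakdown and idempotency by event id are assumptions. The starting balance is taken as zero, and the `seen` set grows without bound, whereas a real cache would expire old ids.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass(frozen=True)
class ChargeEvent:
    event_id: str
    subscriber: str
    day: str        # e.g. "2018-11-01"
    category: str   # e.g. "voice", "data", "payment"
    amount: int     # minor units; negative for charges, positive for payments

class BalanceShowcase:
    """Hypothetical real-time aggregate cache fed from an event stream."""

    def __init__(self):
        self.seen: set[str] = set()                          # ids already applied
        self.balance = defaultdict(int)                      # subscriber -> balance
        self.daily = defaultdict(lambda: defaultdict(int))   # (sub, day) -> category -> sum

    def apply(self, e: ChargeEvent) -> None:
        if e.event_id in self.seen:   # a redelivered event must not double-charge
            return
        self.seen.add(e.event_id)
        self.balance[e.subscriber] += e.amount
        self.daily[(e.subscriber, e.day)][e.category] += e.amount

    def explain(self, subscriber: str, day: str) -> dict:
        """Current balance plus the per-category breakdown of why it changed that day."""
        return {"balance": self.balance[subscriber],
                "changes": dict(self.daily[(subscriber, day)])}

showcase = BalanceShowcase()
stream = [ChargeEvent("e1", "42", "2018-11-01", "payment", 500),
          ChargeEvent("e2", "42", "2018-11-01", "voice", -120),
          ChargeEvent("e2", "42", "2018-11-01", "voice", -120),  # redelivered
          ChargeEvent("e3", "42", "2018-11-01", "data", -80)]
for event in stream:
    showcase.apply(event)
print(showcase.explain("42", "2018-11-01"))
# {'balance': 300, 'changes': {'payment': 500, 'voice': -120, 'data': -80}}
```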

These were examples of how we use Tarantool. We really liked Mail.ru's openness and their willingness to consider different cases.

It is already hard for consultants from BCG, McKinsey, Accenture or IBM to surprise us with something new: much of what they offer, we are already doing, have done, or plan to do. I think Tarantool will take its rightful place in our technology stack and replace many existing technologies. We are in the active phase of developing this project.

And here is Tarantool? Oleg Ivlev and Andrey Knyazev will talk about this.. Oleg is the chief architect of MegaFon with extensive experience working in foreign companies, Andrey is the director of business systems. From the transcript of their report at the Tarantool Conference 2018, you will learn why R&D is needed in corporations, what Tarantool is, how the deadlock for vertical scaling and globalization became the prerequisites for the emergence of this database in the company, about technological challenges, the transformation of architecture, and how MegaFon's technostek resembles Netflix, Google and Amazon.

Single Billing Project

The project that will be discussed is called "Single Billing." It was in him that Tarantool showed its best qualities.

Performance growth of Hi-End equipment did not keep pace with the growth of the subscriber base and the growth in the number of services, further growth in the number of subscribers and services due to M2M, IoT was expected, and branch features led to a deterioration of the time-to-market. The company decided to create a unified business system with a unique world-class modular architecture, instead of 8 current different billing systems.

MegaFon - it's eight companies in one. In 2009, the reorganization was completed: branches throughout Russia merged into a single company MegaFon OJSC (now PJSC). Thus, the company has 8 billing systems with its own “custom” solutions, branch features and a different organizational structure, IT and marketing.

Everything was fine until I had to launch one common federal product. A lot of difficulties appeared here: for some, tariffing with rounding up, for others, to a lesser extent, and for others, according to the arithmetic mean. There are thousands of such moments.

Despite the fact that the version of the billing system is the same, one supplier, the settings diverged so that the glue for a long time. We tried to reduce their number, and came across a second problem that is familiar to many corporations.

Vertical scaling. Even the coolest iron at that time did not meet the needs. We used Hewlett-Packard equipment, the Superdome Hi-End line, but it did not draw the need for even two branches. I wanted horizontal scaling without large transaction costs and capital investments.

Expectation of growth in the number of subscribers and services . The consultants have long brought stories about IoT and M2M to the telecom world: there will come a time when each phone and iron will have a SIM card, and two in the refrigerator. Today we have one number of subscribers, and in the near future there will be an order of magnitude more.

Technological challenges

These four reasons drove us to major changes. There was a choice between upgrading the system and designing from scratch. They thought for a long time, made serious decisions, played tenders. As a result, they decided to design from the very beginning, and took up interesting challenges - technological challenges.

Scalability

If earlier there were, let's say, 8 billing accounts for 15 million subscribers , and now 100 million subscribers and more should have turned out - the load is an order of magnitude higher.

We have become comparable in scale to major Internet players like Mail.ru or Netflix.

But further movement to increase the load and the subscriber base posed serious challenges for us.

The geography of our vast country

Between Kaliningrad and Vladivostok 7500 km and 10 time zones . The speed of light is finite and at such delay distances are already significant. 150 ms on the coolest modern optical channels is a bit much for real-time billing, especially such as it is now in telecom in Russia. In addition, you need to update in one business day, and with different time zones - this is a problem.

We do not just provide services for a monthly fee, we have complex tariffs, packages, various modifiers. We need not only to allow or prohibit the subscriber to talk, but to give him a certain quota - to calculate calls and actions in real time so that he does not notice.

fault tolerance

This is the flip side of centralization.

If we collect all subscribers in one system, then any emergency events and disasters are disastrous for business. Therefore, we design the system in such a way as to exclude the effect of accidents on the entire subscriber base.

This is again a consequence of the rejection of vertical scaling. When we went into horizontal scaling, we increased the number of servers from hundreds to thousands. They need to be managed and interchangeable, automatically backed up IT infrastructure and restored distributed system.

Such interesting challenges confronted us. We designed the system, and at that moment we tried to find the world best practices in order to check how much we are in trend, how much we follow advanced technologies.

World experience

Surprisingly, in the world telecom we did not find a single reference.

Europe fell by the number of subscribers and scale, the United States - by the plane of its tariffs. We looked at something in China, but found something in India and took specialists from Vodafone India.

To analyze the architecture, the Dream Team was assembled, led by IBM, architects from various fields. These people could adequately evaluate what we are doing and bring certain knowledge to our architecture.

Scale

A few numbers to illustrate.

We design a system for 80 million subscribers with a reserve of one billion . So we remove future thresholds. This is not because we are going to take over China, but because of the pressure of IoT and M2M.

300 million documents are processed in real time . Although we have 80 million subscribers, we work with potential customers and those who have left us if we need to collect receivables. Therefore, real volumes are much larger.

2 billion transactions daily change the balance - these are payments, charges, calls and other events. 200 TB of data changes actively , 8 PB of data changes a little slower , and this is not an archive, but live data in a single billing. Data Center Scale -5 thousand servers at 14 sites .

Technology stack

When we planned the architecture and set about assembling the system, we imported the most interesting and advanced technologies. The result was a technological stack that is familiar to any Internet player and corporations that make highly loaded systems.

The stack is similar to the stacks of other major players: Netflix, Twitter, Viber. It consists of 6 components, but we want to reduce and unify it.

Flexibility is good, but in a large corporation there is no way without unification.

We are not going to change the same Oracle to Tarantool. In the realities of large companies, this is utopia, or a crusade for 5-10 years with an incomprehensible outcome. But Cassandra and Couchbase can be replaced with Tarantool, and we are committed to this.

Why Tarantool?

There are 4 simple criteria why we chose this database.

Speed . We conducted stress tests on MegaFon industrial systems. Tarantool won - it showed the best performance.

This is not to say that other systems do not meet the needs of MegaFon. Current memory solutions are so productive that this stock of the company is more than enough. But we are interested in dealing with the leader, and not with the one who weaves in the tail, including the load test.

Tarantool covers the needs of the company even in the long run.

TCO cost . Support for Couchbase on MegaFon volumes costs space money, with Tarantool the situation is much nicer, and in terms of functionality they are close.

Another nice feature that slightly influenced our choice - Tarantool works better than other databases with memory. It shows maximum efficiency .

Reliability . MegaFon is invested in reliability, probably like no other. Therefore, when we looked at Tarantool, we realized what needs to be done so that it satisfies our requirements.

We invested our time and finances, and together with Mail.ru we created an enterprise version, which is now used by several other companies.

Tarantool-enterprise fully satisfied us with security, reliability, and logging.

Partnership

The most important thing for me is direct contact with the developer . This is exactly what the Tarantool guys bribed.

If you come to a player, especially one who works with an anchor client, and say that you need the database to be able to do this, this and that, he usually answers:

- Well, put the requirements under the bottom of that pile - sometime, probably, we get to them.

Many have a roadmap for the next 2-3 years, and it’s almost impossible to integrate there, and Tarantool developers bribe with openness, and not only with MegaFon, and adapt their system to the customer. This is cool and we really like it.

Where did we apply Tarantool

At us Tarantool is used in several elements. The first is in the pilot , which we made on the address directory system. At one time, I wanted it to be a system that is similar to Yandex.Maps and Google Maps, but it turned out a little differently.

For example, the address directory in the sales interface. On Oracle, finding the address you need takes 12-13 s. - uncomfortable numbers. When we switch to Tarantool, replace Oracle with another database in the console, and perform the same search, we get 200 times acceleration! The city pops up after the third letter. Now we are adapting the interface so that this happens after the first. However, the response speed is completely different - already milliseconds instead of seconds.

The second application is a trendy topic called two-speed IT.. This is because consultants from each iron say that corporations should go there.

There is a layer of infrastructure, domains above it, for example, a billing system, like a telecom, corporate systems, corporate reporting. This is the core that does not need to be touched. That is, of course, it is possible, but paranoid in providing quality, because it brings money to the corporation.

Next comes the layer of microservices - that which differentiates the operator or another player. Microservices can be quickly created on the basis of certain caches, lifting data from different domains there. Here is a field for experiments - if something did not work out, closed one microservice, opened another. This provides a truly enhanced time-to-market and increases the reliability and speed of the company.

Microservices is perhaps the main role of Tarantool in MegaFon.

Where we plan to apply Tarantool

If we compare our successful billing project with transformation programs at Deutsche Telekom, Svyazkome, Vodafone India, it is surprisingly dynamic and creative. In the process of implementing this project, not only MegaFon and its structure were transformed, but also Tarantool-enterprise appeared at Mail.ru, and our vendor Nexign (formerly Peter-Service) had a BSS Box (boxed billing solution).

In a sense, this is a historical project for the Russian market. It can be compared with what is described in the book of Frederick Brooks “Mythical Man-Month”. Then, in the 60s, IBM attracted 5,000 people to develop a new OS / 360 operating system for mainframes. We have less - 1,800, but ours are in vests, and taking into account the use of open source and new approaches, we work more productively.

The billing domains or, more broadly, business systems are displayed below. People at enterprise know CRM very well. Everyone should already have other systems: Open API, Gateway API.

Open API

Let's look again at the numbers and how the Open API works now. Its load is 10,000 transactions per second . Since we plan to actively develop the microservice layer and build the MegaFon public API, we expect more growth in the future in this particular part. 100,000 transactions will definitely be .

I don't know whether our SSO is comparable to Mail.ru's: as far as I know, they handle 1,000,000 transactions per second. We are extremely interested in their solution and plan to learn from their experience, for example by building a functional backup for SSO on Tarantool. Mail.ru developers are working on this with us now.

CRM

CRM means those 80 million subscribers, and we want to be able to scale it to a billion, because there are already 300 million documents covering a three-year history. We are counting on new services, and the growth point here is connected services. This is a snowball that will keep growing, because there will be more and more services. Accordingly, we will need to keep their history, and we do not want to stumble over that.

Billing proper, that is, charging and managing customer receivables, was split out into a separate domain. To maximize performance, the domain architecture pattern is applied.

The system is divided into domains, which distributes the load and provides fault tolerance. On top of that, we worked on a distributed architecture.

Everything else consists of enterprise-level solutions. The call records store receives 2 billion records per day, 60 billion per month. Sometimes a whole month has to be recalculated, preferably quickly. Financial monitoring covers those same 300 million documents, and that number keeps growing: subscribers often switch between operators, which makes this part larger.
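To put the call-store volume in per-second terms, here is a back-of-the-envelope calculation. It is our own arithmetic, not a figure from the talk, and it assumes a 30-day month and an even load over the day; real traffic has peaks well above the average.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400


def average_rate_per_second(records_per_day: int) -> float:
    """Average ingest rate implied by a daily volume (ignores daily peaks)."""
    return records_per_day / SECONDS_PER_DAY


calls_per_day = 2_000_000_000
calls_per_month = calls_per_day * 30  # assumes a 30-day month

rate = average_rate_per_second(calls_per_day)
print(f"{rate:,.0f} records/s on average")
print(f"{calls_per_month:,} records per month")
```

So the store has to absorb roughly 23,000 records per second on average, before any peak-hour margin.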

The most telecom-specific component of mobile communications is online billing. These are the systems that decide in real time whether you can make a call or not. The load here is 30,000 transactions per second, but given the growth in data traffic we are planning for 250,000 transactions per second, which is why we are very interested in Tarantool.

The previous slide showed the domains where we are going to use Tarantool. CRM itself is, of course, broader, and we are going to use Tarantool in its core as well.

Our design specification of 100 million subscribers bothers me as an architect: what if there are 101 million? Do we redo everything again? To prevent that, we use caches, which also improve availability.

In general, there are two approaches to using Tarantool. The first is to build all caches at the microservice level. As I understand it, VimpelCom follows this path by creating a customer cache.

We are less dependent on vendors and are replacing the BSS core, so we get a single customer index out of the box. But we want to take the load off it. Therefore we use a slightly different approach: we build caches inside the systems.

This way there is less desynchronization: one system is responsible both for the cache and for the master source.

This method fits well with using Tarantool as a transactional skeleton, where only the parts involved in updates, that is, in data changes, are kept and updated there. Everything else can be stored elsewhere. There is no huge data lake and no unmanaged global cache. Caches are designed for a specific system: for products, for customers, or to make life easier for customer service. When a subscriber who is unhappy with the quality calls in, I want to provide quality service.
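The "cache inside the system" idea can be sketched as a write-through cache owned by the same component as the master data. This is a minimal illustration under our own assumptions: `CustomerSystem`, `hot_fields`, and the plain dictionaries standing in for the master database and the Tarantool cache are all hypothetical, not MegaFon's or Tarantool's API.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple


@dataclass
class CustomerSystem:
    """Hypothetical system that owns both the master data and its cache."""
    master: Dict[str, dict] = field(default_factory=dict)  # stand-in for the master DB
    cache: Dict[str, dict] = field(default_factory=dict)   # stand-in for the in-memory cache
    hot_fields: Tuple[str, ...] = ("status", "tariff")     # only frequently changed data is cached

    def update(self, customer_id: str, **changes) -> None:
        # Write to the master source first, then refresh the cache in the same call,
        # so there is no second system that could leave the two out of sync.
        record = self.master.setdefault(customer_id, {})
        record.update(changes)
        self.cache[customer_id] = {k: record[k] for k in self.hot_fields if k in record}

    def read_hot(self, customer_id: str) -> Optional[dict]:
        # Reads of the hot fields are served from the cache only.
        return self.cache.get(customer_id)


crm = CustomerSystem()
crm.update("c1", status="active", tariff="basic", address="Moscow")
print(crm.read_hot("c1"))  # {'status': 'active', 'tariff': 'basic'}
```

The address stays only in the master store, which mirrors the point that everything outside the transactional skeleton can live elsewhere.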

RTO and RPO

There are two terms in IT: RTO and RPO.

Recovery time objective (RTO) is the time it takes to restore a service after a failure. RTO = 0 means that even if something fails, the service keeps working.

Recovery point objective (RPO) is how much data, measured as a period of time, we can afford to lose in a failure. RPO = 0 means we lose no data.
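One way to see what RPO measures: with asynchronous replication, whatever the primary acknowledged after the last replicated point is lost on failover. The sketch below is our own illustration with hypothetical timestamps in seconds; it does not model any particular database.

```python
from typing import List


def lost_on_failover(write_times: List[float], last_replicated: float) -> List[float]:
    """Writes acknowledged by the failed primary but not yet copied to the replica."""
    return [t for t in write_times if t > last_replicated]


def data_loss_window(failure_time: float, last_replicated: float) -> float:
    """Achieved RPO in seconds: how far the replica's copy lags behind the failure."""
    return max(0.0, failure_time - last_replicated)


writes = [10.0, 10.5, 11.2, 11.9]  # hypothetical write timestamps
print(lost_on_failover(writes, last_replicated=11.0))  # [11.2, 11.9]
print(data_loss_window(failure_time=12.0, last_replicated=11.0))  # 1.0
```

With synchronous replication the replica is never behind at the moment of failure, so the window is 0, which is exactly the RPO = 0 case.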

Tarantool challenge

Let's try to solve a problem with Tarantool.

Given: everyone understands what a shopping cart of orders is, for example at Amazon or elsewhere. The cart has to work 24/7, or 99.99% of the time. Incoming orders must be processed in sequence, because we cannot enable or disable a subscriber's services at random: everything must be strictly consistent. A previous subscription affects the next one, so the data matters and nothing may be lost.
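To make "99.99% of the time" concrete, here is the downtime budget it allows. This is standard availability arithmetic added for illustration; the 365-day year and 30-day month are our assumptions.

```python
MINUTES_PER_YEAR = 365 * 24 * 60   # 525,600, ignoring leap years
MINUTES_PER_MONTH = 30 * 24 * 60   # 43,200, assuming a 30-day month


def downtime_budget_minutes(availability: float, period_minutes: int = MINUTES_PER_YEAR) -> float:
    """Maximum allowed downtime within a period for a given availability target."""
    return (1 - availability) * period_minutes


yearly = downtime_budget_minutes(0.9999)
monthly = downtime_budget_minutes(0.9999, MINUTES_PER_MONTH)
print(f"{yearly:.1f} min/year, {monthly:.2f} min/month")
```

Roughly 52 minutes a year, or about 4 minutes a month, which rules out long maintenance windows or a manual failover between data centers.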

Solution. You could try to solve it head-on and ask the database developers, but the problem has no mathematical solution. One could bring up theorems, conservation laws, or quantum physics, but why bother: it cannot be solved at the database level.

What works here is the good old architectural approach: you need to know the subject area well and use that knowledge to solve the puzzle.

Our solution: build a distributed order registry on Tarantool, a geo-distributed cluster. In the diagram there are three data centers, two west of the Urals and one east of them, and we distribute all orders across these centers.

Netflix, now considered one of the IT leaders, had only one data center until 2012. On Christmas Eve, December 24, that data center went down. Users in Canada and the United States were left without their favorite films, were very upset, and wrote about it on social networks. Netflix now has three data centers on the US west and east coasts and one in western Europe.

We build a geo-distributed solution from the start, because fault tolerance matters to us.

So we have a cluster, but what about RPO = 0 and RTO = 0? The solution is simple, and it depends on the subject area.

What matters in orders? There are two parts: filling the cart BEFORE the purchase decision is made, and AFTER it. In telecom, the BEFORE part is usually called order capturing or order negotiation. It can be much more complicated than in an online store, because you have to serve the customer and offer five options, and all of this takes a while as the cart fills up. A failure is possible at this stage, but it is not a disaster, because everything happens interactively under a person's supervision.

If the Moscow data center suddenly fails, we automatically switch to another data center and keep working. In theory, one item in the cart may be lost, but you see that, add it to the cart again, and carry on. In this case, RTO = 0.

Then there is the second part: once we have clicked "submit", we want no data to be lost. From that moment automation takes over, and this is where RPO = 0 applies. These two patterns can be implemented differently: in one case, simply a geo-distributed cluster with a single switchable master; in the other, some form of quorum write. The patterns may vary, but the problem gets solved.
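The quorum-write pattern for submitted orders can be sketched as follows: an order counts as accepted only when a majority of the three data centers has stored it. This is a simplified, single-process illustration under our own assumptions; `Replica`, `quorum_write`, and the data center names are hypothetical, and writes are sent sequentially rather than in parallel.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Replica:
    """Hypothetical copy of the order registry in one data center."""
    name: str
    up: bool = True
    orders: Dict[str, dict] = field(default_factory=dict)

    def write(self, order_id: str, order: dict) -> bool:
        if not self.up:
            return False  # unreachable data center: no acknowledgement
        self.orders[order_id] = order
        return True


def quorum_write(replicas: List[Replica], order_id: str, order: dict) -> bool:
    """Confirm a submitted order only if a majority of replicas acknowledged it."""
    acks = sum(r.write(order_id, order) for r in replicas)
    return acks >= len(replicas) // 2 + 1


dcs = [Replica("dc-west-1"), Replica("dc-west-2"), Replica("dc-east")]
dcs[0].up = False  # one data center goes down
ok1 = quorum_write(dcs, "o-1", {"service": "roaming", "action": "enable"})   # 2 of 3 acked
dcs[1].up = False  # a second one fails
ok2 = quorum_write(dcs, "o-2", {"service": "roaming", "action": "disable"})  # 1 of 3 acked
print(ok1, ok2)  # True False
```

Note that the failed write still left `o-2` on one replica; a real system has to treat such entries as unconfirmed and repair or discard them, which this sketch does not do.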

Furthermore, with a distributed order registry we can scale the whole thing out, with many dispatchers and executors accessing the registry.
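Combining many executors with the requirement that a subscriber's orders stay strictly in sequence can be sketched as a registry that hands out only the oldest pending order of a subscriber who has nothing in progress. This is our own illustration of one possible scheme, not MegaFon's design; `OrderRegistry` and its methods are hypothetical, and a production registry would also need persistence and timeouts for stalled executors.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, Optional, Set, Tuple


class OrderRegistry:
    """Hypothetical order registry: many executors, strict order per subscriber."""

    def __init__(self) -> None:
        self.queues: Dict[str, Deque[str]] = defaultdict(deque)
        self.in_progress: Set[str] = set()  # subscribers with an order being executed

    def submit(self, subscriber: str, order_id: str) -> None:
        self.queues[subscriber].append(order_id)

    def claim(self) -> Optional[Tuple[str, str]]:
        # Hand out the oldest order of any subscriber who is not already busy,
        # so two executors never process the same subscriber concurrently.
        for subscriber, queue in self.queues.items():
            if queue and subscriber not in self.in_progress:
                self.in_progress.add(subscriber)
                return subscriber, queue[0]
        return None

    def complete(self, subscriber: str, order_id: str) -> None:
        queue = self.queues[subscriber]
        if not queue or queue[0] != order_id:
            raise ValueError("orders must complete in submission order")
        queue.popleft()
        self.in_progress.discard(subscriber)


reg = OrderRegistry()
reg.submit("alice", "o-1")
reg.submit("alice", "o-2")
reg.submit("bob", "o-3")
first = reg.claim()   # ('alice', 'o-1')
second = reg.claim()  # ('bob', 'o-3'): alice is busy, so o-2 waits
reg.complete(*first)
third = reg.claim()   # ('alice', 'o-2')
```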

Cassandra and Tarantool together

There is another case: the "balance showcase". It is an interesting example of using Cassandra and Tarantool together.

We use Cassandra because 2 billion calls per day is not the limit, and there will be more. Marketers love to tag traffic by source, with more and more detail on social networks, for example. All of this increases the history we have to store.

Cassandra allows you to scale horizontally to any volume.

We are comfortable with Cassandra, but it has one problem: it is not good at reads. Writes are fine, 30,000 per second is no problem; the problem is reading.

That is where the cache came in, and at the same time we solved another problem. There is an old, traditional setup in which records from the switches and online charging arrive as files, which we then load into Cassandra. We struggled to load these files reliably and, on IBM's advice, even used managed file transfer: there are solutions that manage file transfers efficiently, for example over UDP rather than TCP. That helps, but it still takes minutes, and until everything is loaded, a call center operator cannot tell a customer what happened to their balance: we have to wait.

To avoid this, we use a parallel functional backup. We send events through Kafka to Tarantool, which recalculates aggregates in real time, for example for the current day. This gives us a balance cache that can serve balances at any rate, say 100,000 transactions per second, within those same 2 seconds.

The goal is that 2 seconds after a call, your account shows not only the updated balance but also the reason it changed.
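The balance cache can be sketched as an event consumer that updates a running balance and a per-day list of reasons. This is a minimal in-memory illustration under our own assumptions: the event shape, amounts in kopecks, a starting balance of 0, and the names `ChargeEvent` and `BalanceCache` are hypothetical, and the `for` loop stands in for a Kafka consumer feeding Tarantool.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from datetime import date
from typing import Dict, List, Tuple


@dataclass
class ChargeEvent:
    """Hypothetical charging event, as it might arrive from a Kafka topic."""
    subscriber: str
    day: date
    amount: int   # kopecks; negative = charge, positive = top-up
    reason: str   # e.g. "call", "sms", "payment"


@dataclass
class BalanceCache:
    """In-memory stand-in for the balance cache with per-day aggregates."""
    balances: Dict[str, int] = field(default_factory=dict)
    daily: Dict[Tuple[str, date], List[Tuple[int, str]]] = field(
        default_factory=lambda: defaultdict(list))

    def apply(self, event: ChargeEvent) -> None:
        # Update the running balance and remember why it changed.
        self.balances[event.subscriber] = self.balances.get(event.subscriber, 0) + event.amount
        self.daily[(event.subscriber, event.day)].append((event.amount, event.reason))

    def explain(self, subscriber: str, day: date) -> List[Tuple[int, str]]:
        return list(self.daily.get((subscriber, day), []))


cache = BalanceCache()
today = date(2024, 1, 15)
for ev in [ChargeEvent("alice", today, 10_000, "payment"),
           ChargeEvent("alice", today, -350, "call"),
           ChargeEvent("alice", today, -50, "sms")]:
    cache.apply(ev)  # in production this loop would be a Kafka consumer
print(cache.balances["alice"])  # 9600
print(cache.explain("alice", today))
```

Keeping the reasons next to the aggregate is what lets an operator answer "why did my balance change" without waiting for the file load into Cassandra.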

Conclusion

These were examples of using Tarantool. We really liked the openness of Mail.ru, their willingness to consider different cases.

Consultants from BCG, McKinsey, Accenture, or IBM now find it hard to surprise us with anything new: much of what they offer we are already doing, have done, or plan to do. I think Tarantool will take its rightful place in our technology stack and replace many existing technologies. The project is in active development.

Oleg and Andrey's talk was one of the best at last year's Tarantool Conference, and on June 17 Oleg Ivlev will speak at T+ Conference 2019 with the talk "Why Tarantool in Enterprise". Alexander Deulin of MegaFon will also present "Tarantool Caches and Replication from Oracle". We will find out what has changed and which plans have been carried out. Join us: the conference is free, you just need to register. All talks have been accepted and the program is set: new cases, new experience with Tarantool, architecture, enterprise, tutorials, and microservices.