How we trained a neural network to classify screws

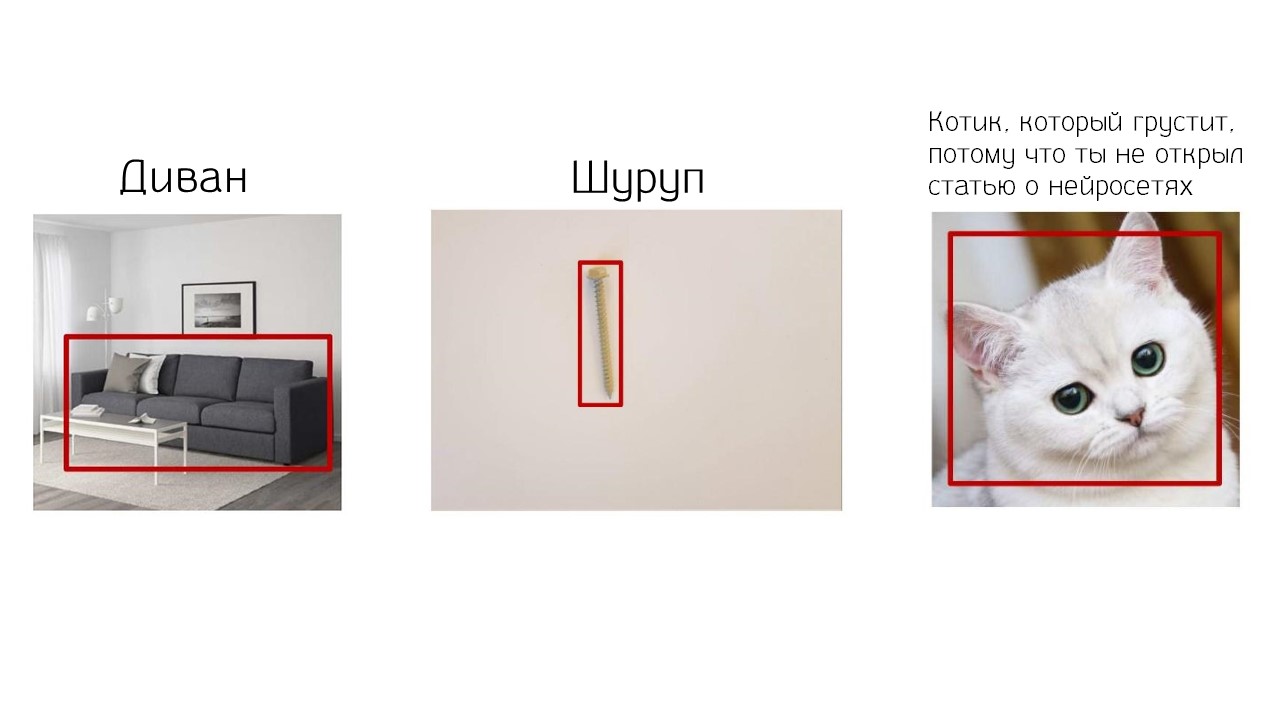

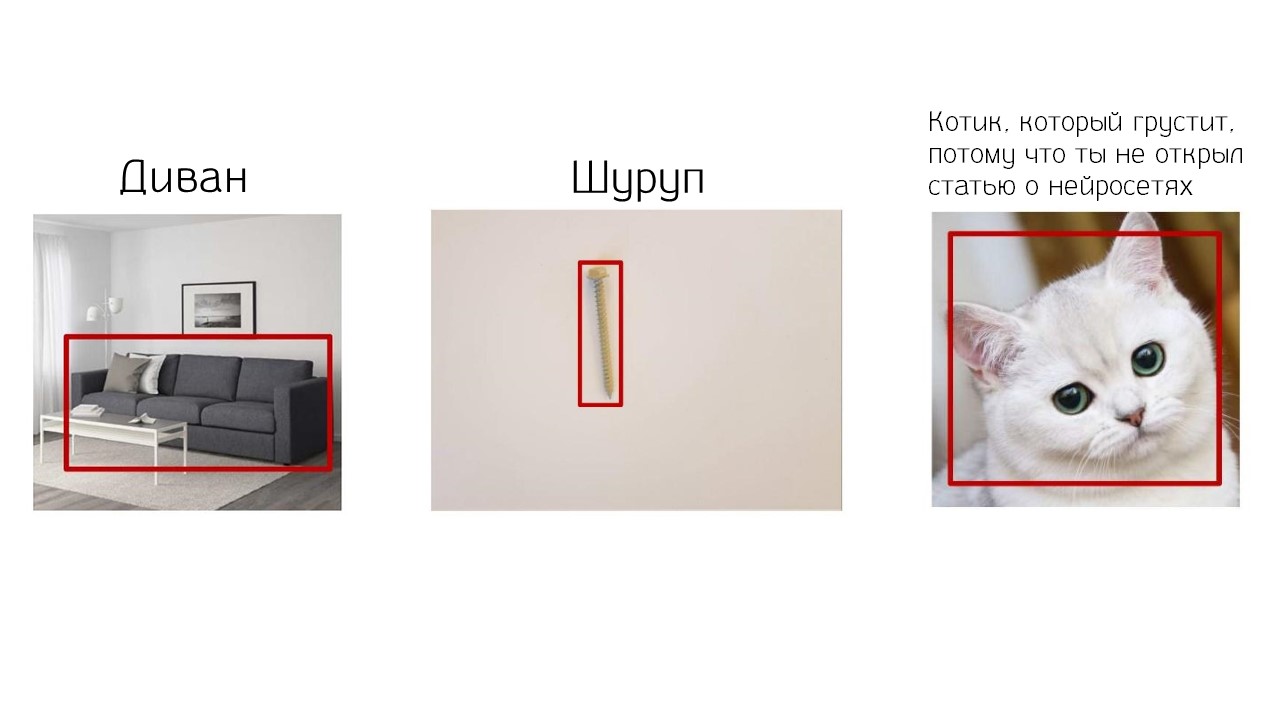

A neural network can identify a cat in a photograph, find a sofa, enhance a video recording, or draw a picture of puppies from a simple sketch. We are used to it by now: news about neural networks appears almost every day and has become commonplace. Grid Dynamics took on a task that is not ordinary but hard: teaching a neural network to find a specific screw or bolt in the huge catalog of an online store from a single photograph. That is harder than finding a cat.

The problem of an online screw store is its assortment: thousands or tens of thousands of models. Each screw has its own description and characteristics, so filters offer little hope. What can you do? Search by hand, or walk the shelves of a hypermarket? Either way it is a waste of time. In the end the customer gets tired and goes off to hammer in a nail. To help, we will use a neural network. If it can find seals or sofas, let it do something useful and pick out screws and bolts. How to teach a neural network to select screws quickly and accurately for a user is the subject of this transcript of a talk by Maria Matskevichus, who works on data analysis and machine learning at Grid Dynamics.

A short demo of the result

Buyer Problems

Imagine: we bought a table, but one small screw got lost, and the table cannot be assembled without it. We go to the online store and see 15,000 unique items, any of which might be our screw.

We go to the filters. There are about 10 of them, each with 5 to 100 attributes. We choose the head type and the color: Flat for a flat head and Brass for yellow brass. We get the search results.

What is this? We were not looking for this. Fire whoever is responsible for the search results!

After a while, we nevertheless pick out 2 screws that might fit the table.

Only the "simplest" part is left: deciphering the descriptions and characteristics. Each manufacturer describes its screws in its own way, and there are no fixed requirements for describing the parameters of a particular model.

All of this creates difficulties for the customer: lost time and nerves, plus the work of the technical support staff who help find the right model. Having recognized these problems, our customer, a large American company, decided to offer buyers a quick, accurate, and simple search by photo instead of a slow and not always successful semantic search.

Implementation difficulties

We took up the task and realized that it comes with several problems.

Screws look alike. Look at the photos.

These are different screws. If you turn the photos over, you can see that an important characteristic differs: the head.

And in this photo?

Here the models are the same. The lighting differs, but both photos show the same screw model.

There are rare types that also need to be classified, for example screws with "ears" or with a ring.

Minimal requirements on the user. The user may upload a photo with any background, with extraneous objects, shadows, or poor lighting, and the application must still return a result. A screw or bolt on a white background is a rarity.

The application should work in real time. The user expects the result here and now.

Competitors. Amazon, a competitor of our customer, had recently launched Part Finder, an application that searches for screws and bolts from a photograph.

Besides Amazon, we had two startup competitors pitching their own solutions to the customer. We needed to beat not only Amazon but also the startups, which turned out not to be difficult. One competitor proposed taking the 20 most popular bolts and training object detection on them. But when asked what would happen once the network had to handle 100, 1,000, or all 15,000 screws from the customer's site, how object detection would cope, and where to get that much data, the competitor had no answer.

Solution

The solution has to be scalable and must not depend on the number of screw varieties or the size of the catalog. So we decided to treat a screw as a set of characteristics, where each characteristic takes one value from its own set of attributes.

We selected the following characteristics:

- head (32 attributes);

- finish, the external coating (15 attributes);

- tip (12 attributes);

- thread coverage (4 attributes).

We examined the map of all the features and realized that 50 screws are enough to describe 15,000 different ones: between them they cover the combinations of characteristics and attributes. So we need 50 screws, plus one coin to measure the scale of the screw in the photo.

That settled the idea. Next we needed data.
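A quick count shows why describing a screw by attributes scales better than treating every catalog item as its own class. The sketch below uses only the attribute counts listed above; the dictionary and function names are illustrative, not taken from the project.

```python
from math import prod

# Attribute counts per characteristic, as listed in the article.
ATTRIBUTE_COUNTS = {
    "head": 32,
    "finish": 15,
    "tip": 12,
    "thread_coverage": 4,
}

def count_combinations(counts):
    """Number of distinct attribute combinations across all characteristics."""
    return prod(counts.values())

def count_outputs(counts):
    """Total classifier outputs if each characteristic has its own classifier."""
    return sum(counts.values())

print(count_combinations(ATTRIBUTE_COUNTS))  # 23040
print(count_outputs(ATTRIBUTE_COUNTS))       # 63
```

Four classifiers with 63 outputs in total span 23,040 possible combinations, more than the 15,000 catalog items, although not every combination necessarily exists in the catalog.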

Data

We received data from the customer and were a little upset. The catalog data consists of photographs of objects on a white background.

These do not really match the data the application will process. Users will shoot against any background, photograph a bolt lying in the palm of a hand, or hold it between their fingers. The data the network is trained on would not match the real picture.

So we decided to follow the advice of Richard Socher.

Instead of spending a month figuring out an unsupervised learning method, it is easier to take a week, label the data, and train a classifier.

And so we did: we printed a lot of colored backgrounds, bought those 50 bolts, and photographed the training data against the backgrounds. That gave us a wide range of table and carpet surfaces.

After collecting the data, the next step is to work out where in the picture the bolt is, if it is there at all.

Localization

We examined two approaches to localization: object detection and semantic segmentation.

Object detection returns the minimum-area box around the object. Semantic segmentation returns a mask. In our case the mask suits better: it preserves the object's shape, removes the background and stray shadows, and lets us classify screws better than a detection box would.

The task of semantic segmentation is to return, for each pixel, the probability that it belongs to a class. Training such a model requires labeled data. We used the labelme application to annotate the sample and got about a thousand masks of coins and screws.
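To make the difference between a mask and a box concrete, here is a minimal NumPy sketch. It assumes the segmentation model outputs an HxW map of object probabilities; the 0.5 threshold, the function names, and the toy data are illustrative assumptions, and the box here is a simple axis-aligned one.

```python
import numpy as np

def probabilities_to_mask(prob_map, threshold=0.5):
    """Turn an HxW map of per-pixel object probabilities into a boolean mask."""
    return prob_map >= threshold

def apply_mask(image, mask):
    """Zero out every background pixel of an HxWxC image."""
    return image * mask[..., np.newaxis]

def bounding_box(mask):
    """Axis-aligned box (top, left, bottom, right) around the mask, or None if empty."""
    rows = np.flatnonzero(mask.any(axis=1))
    cols = np.flatnonzero(mask.any(axis=0))
    if rows.size == 0:
        return None
    return int(rows[0]), int(cols[0]), int(rows[-1]), int(cols[-1])

# Toy 4x4 "photo" with a 2x2 object in the middle.
prob_map = np.array([
    [0.1, 0.2, 0.1, 0.0],
    [0.1, 0.9, 0.8, 0.1],
    [0.0, 0.7, 0.9, 0.2],
    [0.1, 0.1, 0.2, 0.1],
])
image = np.full((4, 4, 3), 200, dtype=np.uint8)

mask = probabilities_to_mask(prob_map)
print(bounding_box(mask))             # (1, 1, 2, 2)
print(apply_mask(image, mask)[0, 0])  # [0 0 0]
```

A box keeps whatever background falls inside it, while the mask removes the background pixel by pixel.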

Model

We took U-Net. This network is much loved on Kaggle, and now by us too.

U-Net is a successful implementation of the encoder-decoder architecture.

- A contracting path, or encoder. This part of U-Net tries to represent the whole data set as vectors in a more compressed space. It learns the features and finds the most significant ones.

- An expanding path, or decoder. It tries to decode the feature map and work out where the object is in the picture.

The model was chosen. Next we select the loss function, whose value we will minimize during training.

Loss function

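The classic option for segmentation is the Dice coefficient:

$$\mathrm{Dice} = \frac{2\,TP}{2\,TP + FP + FN}$$

Here TP, FP, and FN are the numbers of true positive, false positive, and false negative pixels. This is the harmonic mean of precision and recall, which means that errors of the first kind (false positives) and errors of the second kind (false negatives) are weighted equally. Our data is unbalanced, so this does not suit us well.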

There is always a lot of background, and the object itself occupies little of the picture. As a result, the model tends to give very high precision and very low recall. To weigh errors of the first and second kind differently, we decided to take the Tversky index:

$$\mathrm{TI}(\alpha, \beta) = \frac{TP}{TP + \alpha\,FP + \beta\,FN}$$

The Tversky index has two coefficients, α and β, which weigh the two errors differently. With α = β = 0.5 we get the Dice coefficient again. With α = β = 1 the Tversky index equals the Jaccard index, another measure of the similarity of objects.

You can also get the Fβ-score: for α + β = 1, the Tversky index corresponds to an Fβ-score.

To select α and β, we ran several experiments. Our hypothesis was that the model should be penalized more heavily for errors of the second kind. It is not so bad when the model classifies a background pixel as an object pixel: a thin frame of background around the object is acceptable. But when the model classifies a screw pixel as background, holes appear in the screw, its outline becomes uneven, and that interferes with classification.

So we decided to increase β, moving it toward 1, and to decrease α toward 0.

The picture shows that the best mask was obtained with β = 0.7 and α = 0.3. We decided to stop there and train the model on all our data.
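The effect of the two coefficients is easy to check numerically. The sketch below is a plain NumPy version of the Tversky index, TP / (TP + α·FP + β·FN), with α weighting false positives and β weighting false negatives as described above. The function names, the `eps` smoothing term, and the toy masks are assumptions for illustration; a training loss would be written with the deep learning framework's tensors instead.

```python
import numpy as np

def tversky_index(pred, target, alpha=0.3, beta=0.7, eps=1e-7):
    """Tversky index between a predicted map and a binary ground-truth mask.

    alpha weighs false positives (background predicted as object),
    beta weighs false negatives (object predicted as background).
    """
    pred = np.asarray(pred, dtype=float)
    target = np.asarray(target, dtype=float)
    tp = np.sum(pred * target)
    fp = np.sum(pred * (1.0 - target))
    fn = np.sum((1.0 - pred) * target)
    return (tp + eps) / (tp + alpha * fp + beta * fn + eps)

def tversky_loss(pred, target, alpha=0.3, beta=0.7):
    """Quantity to minimize: 1 minus the Tversky index."""
    return 1.0 - tversky_index(pred, target, alpha, beta)

target = np.array([[0, 1, 1, 0],
                   [0, 1, 1, 0]])
holes = np.array([[0, 1, 0, 0],   # misses two screw pixels (2 FN)
                  [0, 1, 0, 0]])
frame = np.array([[1, 1, 1, 0],   # adds two background pixels (2 FP)
                  [0, 1, 1, 1]])

for name, pred in (("holes", holes), ("frame", frame)):
    dice = tversky_index(pred, target, alpha=0.5, beta=0.5)
    tversky = tversky_index(pred, target, alpha=0.3, beta=0.7)
    print(name, round(dice, 3), round(tversky, 3))
```

Compared with Dice, the α = 0.3, β = 0.7 setting scores the mask with holes lower and the mask with a background frame higher, which matches the hypothesis above.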

Training

The training strategy involves a small trick. Since we labeled the data by hand in our own time, we decided to exploit one property of U-Net: it segments each class on its own channel, so a new class means a new channel on which that object is localized.

Therefore, our training set did not contain a single picture with both a coin and a bolt. Every picture contained one class: 10% coins, 90% screws.

This let us distribute the effort sensibly and save time on the coin: there is only one, and its shape is simple. We learned to segment it easily, which let us put 90% of our effort into the screws. They come in different shapes and colors, and learning to segment them is what matters.

Our network learned to segment even instances that were not in our sample. For example, there were no unusually shaped bolts in it, yet the model segmented them well too. It learned to generalize the features of screws and bolts and to apply them to new data, which is great.
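One way to picture single-class training images with per-class channels is a target array whose unused channel stays empty. This is a sketch under assumptions: the channel order, the function name, and the array layout (height, width, channels) are illustrative and not confirmed by the talk.

```python
import numpy as np

CLASS_CHANNELS = {"screw": 0, "coin": 1}  # assumed channel order

def make_target(mask, class_name, channels=CLASS_CHANNELS):
    """Place a single-class HxW mask on its own channel of an HxWxC target."""
    target = np.zeros(mask.shape + (len(channels),), dtype=np.float32)
    target[..., channels[class_name]] = mask
    return target

screw_mask = np.array([[0, 1],
                       [0, 1]])
target = make_target(screw_mask, "screw")
print(target.shape)          # (2, 2, 2)
print(target[..., 1].sum())  # 0.0, the coin channel stays empty
```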

Classification

Classification is the next stage after localization. Few people train convolutional neural networks from scratch to classify objects; transfer learning is used far more often. Let's look at the architecture of a convolutional neural network and then briefly recall what transfer learning is.

In the early layers, the network learns to recognize edges and corners. Later layers recognize simple shapes: rectangles, circles, squares. The closer to the top, the more the network recognizes features specific to the data it was trained on. At the very top, the model recognizes classes.

Most objects in the world consist of simple shapes and share common features. You can take the part of a network that was trained on a huge amount of data and reuse those features for your own classification. The network can then be trained on a small data set without a large expenditure of resources. This is what we did.

Once you have settled on transfer learning as the general approach, you need to select a pre-trained model.

Model selection

Our application works in real time. The model should be lightweight and suitable for mobile use: few parameters, yet accurate. To balance the two, we gave up a little accuracy in favor of size and chose a model that is not the most accurate but is lightweight: Xception.

Xception uses separable convolution instead of the ordinary convolution. That makes Xception lighter than other networks such as VGG.

Ordinary convolution performs the cross-channel and the spatial convolution at once. Separable convolution splits them: first a spatial convolution within each channel (depthwise), then a cross-channel one (pointwise). The results are combined.

Xception uses separable convolution and produces results as good as ordinary convolution, but with fewer parameters.

Substitute values into the parameter-count formulas, for example for 16 filters: ordinary convolution needs about 7 times more parameters than separable convolution. This is why Xception is smaller while staying accurate.
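The parameter counts can be worked out with two short formulas: an ordinary convolution has k·k·C_in·C_out weights, while a depthwise separable one has k·k·C_in (depthwise) plus C_in·C_out (pointwise). The sketch below ignores biases. The talk does not state the kernel size or the number of input channels behind its figure, so the values used here (3x3 kernels, 3 input channels, 16 filters) are assumptions, and the resulting ratio differs from the one quoted above.

```python
def conv_params(kernel, c_in, c_out):
    """Weights in an ordinary convolution (biases ignored)."""
    return kernel * kernel * c_in * c_out

def separable_conv_params(kernel, c_in, c_out):
    """Weights in a depthwise separable convolution (biases ignored)."""
    depthwise = kernel * kernel * c_in  # one kernel x kernel filter per input channel
    pointwise = c_in * c_out            # 1x1 convolution that mixes the channels
    return depthwise + pointwise

# Assumed example: 3x3 kernels, 3 input channels, 16 filters.
ordinary = conv_params(3, 3, 16)
separable = separable_conv_params(3, 3, 16)
print(ordinary, separable, round(ordinary / separable, 2))  # 432 75 5.76
```

The saving grows with the kernel size and the number of filters, so the exact factor depends on the layer.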

Training

First, we built a baseline by training the model on the original picture. We had 4 classifiers, each responsible for one characteristic. The result was unsatisfactory.

Then we trained the model on the box returned by object detection. Accuracy improved noticeably for Thread coverage, but for the other classifiers the result was still unsatisfactory.

Then we decided to give each classifier only the part of the screw it classifies: the Head classifier gets only heads, the Tip classifier only tips. To do this, we took the masks, extracted the contour, drew a minimum-area rectangle around it, and calculated the rotation angle.

At this point we still do not know at which end the head is and at which the tip. To find out, we cut the box in half and looked at the area.

The half that contains the head always has a larger area than the half that contains the tip. Comparing the areas, we determine which half holds which part of the screw. It worked, but not in all cases.
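The area comparison can be sketched in a few lines of NumPy. This assumes the mask has already been rotated so the screw lies horizontally and cropped to its box; the function names and the toy mask are illustrative. With an odd width, the middle column is counted with the right half.

```python
import numpy as np

def head_is_on_left(mask):
    """Compare the object area in the left and right halves of a horizontal screw mask."""
    mid = mask.shape[1] // 2
    left_area = int(mask[:, :mid].sum())
    right_area = int(mask[:, mid:].sum())
    return left_area > right_area

def orient_head_left(mask):
    """Flip the mask horizontally if the head is on the right."""
    return mask if head_is_on_left(mask) else mask[:, ::-1]

# Toy mask: thin shank on the left, wide head on the right.
mask = np.array([
    [0, 0, 0, 0, 1, 1],
    [1, 1, 1, 1, 1, 1],
    [0, 0, 0, 0, 1, 1],
])
print(head_is_on_left(mask))                    # False
print(head_is_on_left(orient_head_left(mask)))  # True
```

As the text notes, the comparison becomes unreliable when the box is close to a square, because the rotation itself is then ambiguous.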

When the length of the screw is comparable to the diameter of the head, the box turns out to be a square rather than a rectangle. When we rotate it, we get a picture like the one at number 3. The model does not classify this case well.

Then we took all the long screws, calculated their rotation angles, and built Rotation Net, a shallow neural network that takes a screw and predicts its rotation angle.

We then applied this auxiliary model to small, short screws and bolts. The result was good: everything works, and small screws are rotated correctly too. At this stage the error is reduced practically to zero. We took this data, trained the classifiers, and saw that accuracy increased significantly for every classifier except Finish. That was great, so we carried on.

But for some reason Finish did not take off. We studied the errors and saw the following picture.

The same pair of screws differs in color under different lighting conditions and camera settings. This can confuse not only the model but also a person. Gray can turn pink, yellow can turn orange. Recall the blue-and-gold dress: it is the same story. The reflective surface of the screw is misleading.

We looked for similar cases online and found Chinese researchers who had tried to classify cars by color and faced the same problem.

As a solution, they created a shallow network whose distinguishing feature is two branches that are concatenated at the end. This architecture is called ColorNet.

We implemented this solution for our task and nearly doubled the accuracy. With such results and models you can go looking for that very table screw in the catalog of the online store.

We had only 4 classifiers for 4 characteristics, but there are many other characteristics. So we need some kind of filter that takes the catalog data and narrows it down.

Filtering

Each classifier returns a class and a soft label. We took the soft label values and our database and computed a score for each catalog item by multiplying the soft labels of its attributes across all characteristics.

The score shows how confident the classifiers are, taken together, in a given combination of attributes. The higher the score, the more likely it is that the screw from the catalog and the screw in the picture are similar.
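The scoring step above can be sketched as follows. The data layout is an assumption made for illustration: soft labels are stored as one dictionary of attribute probabilities per characteristic, and catalog items as dictionaries with a hypothetical `sku` and `attributes` field. Only two characteristics are shown to keep the example short.

```python
from math import prod

def score(item, soft_labels):
    """Product of the probabilities the classifiers assign to the item's attributes."""
    return prod(
        soft_labels[characteristic].get(attribute, 0.0)
        for characteristic, attribute in item["attributes"].items()
    )

def rank_catalog(catalog, soft_labels, top_k=3):
    """Return the top_k (score, sku) pairs, best match first."""
    scored = [(score(item, soft_labels), item["sku"]) for item in catalog]
    return sorted(scored, reverse=True)[:top_k]

# Hypothetical classifier outputs for one photo.
soft_labels = {
    "head": {"flat": 0.7, "round": 0.3},
    "finish": {"brass": 0.6, "zinc": 0.4},
}
# Hypothetical catalog entries.
catalog = [
    {"sku": "A-1", "attributes": {"head": "flat", "finish": "brass"}},
    {"sku": "B-2", "attributes": {"head": "round", "finish": "brass"}},
    {"sku": "C-3", "attributes": {"head": "flat", "finish": "zinc"}},
]

print([sku for _, sku in rank_catalog(catalog, soft_labels)])  # ['A-1', 'C-3', 'B-2']
```

Multiplying soft labels instead of filtering on the top class keeps near-miss items in the ranking when one classifier is unsure.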

Pipeline

The resulting application works as follows.

- Input: start with a raw picture.

- Localization: determine where the bolt or screw is and where the coin is.

- Transformation and rotation.

- Classification: carefully crop everything, classify, and determine the size.

- Filtering.

- Output: a specific SKU.
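The size determination in the pipeline above relies on the coin as a scale reference: its known diameter gives a millimeters-per-pixel ratio. The sketch below assumes the coin is a US quarter (24.26 mm), which the talk does not specify, and that the coin and the screw lie in the same plane with the camera roughly perpendicular to it. The function names are illustrative.

```python
COIN_DIAMETER_MM = 24.26  # assumption: a US quarter; the talk does not name the coin

def mm_per_pixel(coin_diameter_px, coin_diameter_mm=COIN_DIAMETER_MM):
    """Scale of the photo, derived from the coin's diameter measured in pixels."""
    if coin_diameter_px <= 0:
        raise ValueError("coin diameter in pixels must be positive")
    return coin_diameter_mm / coin_diameter_px

def screw_length_mm(screw_length_px, coin_diameter_px):
    """Convert a screw length measured in pixels into millimeters."""
    return screw_length_px * mm_per_pixel(coin_diameter_px)

print(round(screw_length_mm(screw_length_px=400, coin_diameter_px=200), 2))  # 48.52
```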

How to implement a complex project

Eat the elephant piece by piece. Break a big problem into parts.

Label data that reflects reality. Do not be afraid of data labeling: it is the safest way to reach high model quality quickly. Data synthesis methods usually produce worse results than real data.

Test it out. Before building many models, we took small chunks of data, labeled them by hand, and tested each hypothesis. Only after that did we train U-Net, the classifiers, and Rotation Net.

Do not reinvent the wheel. Often the problem you are facing already has a solution. Look on the Internet and read articles: you are sure to find something!

The story of our Visual Search application is not only about classifying screws. It is about how to carry out a complex project that has no analogues, or whose analogues do not meet the requirements we set for the application.

For more information on Grid Dynamics projects and other challenges faced by the Data Science team, see the company's technology blog.

Talks with this angle, applying machine learning algorithms to real, non-standard projects, are exactly what we are looking for at UseData Conf. Here is more about the areas we are most interested in.

Submit a proposal if you know how to tune models so that they fly. If you know that convergence does not guarantee speed and are ready to explain what deserves more attention, we are waiting for you on September 16.