Sounding the past. A guide for historians on converting data to sound


I'm tired of looking at the past. There are many guides to recreating the appearance of historical artifacts, but we often forget that this is a creative act. Perhaps we are too attached to our screens and give too much weight to appearances. Instead, let's try to hear something of the past.

A rich literature on archaeoacoustics and soundscapes helps us recreate the sound of a place as it was (see, for example, St. Paul's Virtual Cathedral or Jeff Veitch's work on ancient Ostia). But I am interested in sonifying the data itself. I want to work out a syntax for representing data as sound so that these algorithms can be used in historical scholarship. Drucker famously observed that "data" are not really what is given but rather what is captured and transformed, that is, "capta". When I sonify data, I literally reproduce the past in the present, so the assumptions behind the data and the transformations applied to it come to the fore. The resulting sounds are a "deformed performance" that makes us hear the modern layers of history in a new way.

I want to hear the meaning of the past, but I know that this is impossible. Still, when I hear an instrument, I can physically imagine the musician; from echoes and resonances I can make out the physical space. I feel the bass; I can move to the rhythm. Music envelops my body and fills my imagination. Associations with sounds, music, and tones I have heard before create a deep temporal experience, a system of embodied relationships between me and the past. Visuals? We have had visual representations of the past for so long that their grammar has almost lost its artistic expressiveness and performative quality.

In this lesson, you will learn how to make some noise from historical data. The significance of that noise, well... is up to you. Part of the point is to make your data unfamiliar again. By translating, recoding, and remediating the data, we begin to notice elements that stayed invisible under visual examination. This deformation is in keeping with arguments made by, for example, Mark Sample on the "deformed humanities" or Bethany Nowviskie on the "resistance in the materials". Sonification moves us from data to capta, from social science to art, from glitch to aesthetics. Let's see what that looks like.

In this tutorial, I will discuss three ways to generate sound or music from your data.

First, we will use the free and open Musical Algorithms website developed by Jonathan Middleton, which introduces key issues and terms. Then we will use a small Python library to map data onto the 88-key keyboard and bring some creativity to the work. Finally, we will load data into Sonic Pi, a live-coding environment for sound and music for which many tutorials and reference resources have been published.

You will see how working with sound moves us from mere visualization toward a genuinely performative medium.

Sonification is the practice of mapping aspects of data onto audio signals. In general, a technique can be called "sonification" if it meets certain conditions. These include reproducibility (other researchers can process the same data in the same way and get the same results) and what might be called "intelligibility", meaning that significant elements of the original data are systematically reflected in the resulting sound (see Hermann 2008). Mark Last and Anna Usyskin (2015) describe a series of experiments to determine which analytical tasks can be performed with sonified data. Their results showed that even untrained listeners (without formal musical training) can distinguish data by ear and draw useful conclusions: listeners were able to perform common data-mining tasks such as classification and clustering by ear (in their experiments, basic scientific data were mapped onto a Western musical scale).

Last and Usyskin focused on time series. According to their findings, time-series data are particularly well suited to sonification because there are natural parallels: music is sequential, has duration, and unfolds over time, and so do time series (Last and Usyskin 2015, 424). The task then is to match the data to appropriate sonic outputs. Many applications use parameter mapping, which maps aspects of the data onto auditory dimensions such as pitch, variation, and interval (onset). The problem with this approach is that when there is no temporal relationship (or rather, no nonlinear relationship) between the original data points, the resulting sound can be "confusing" (2015, 422).
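A minimal sketch of parameter mapping, assuming a simple linear rescaling: each value in a series sets the pitch (a MIDI note number) and the duration of one note, while its position in the series sets the onset. The function names, the 48 to 84 pitch range, and the half-beat spacing are illustrative choices, not anything prescribed by Last and Usyskin.

```python
def scale(value, in_min, in_max, out_min, out_max):
    """Linearly rescale value from [in_min, in_max] to [out_min, out_max]."""
    if in_max == in_min:
        # No spread in the data: return the middle of the output range.
        return (out_min + out_max) / 2
    return out_min + (value - in_min) * (out_max - out_min) / (in_max - in_min)


def parameter_map(series, low_note=48, high_note=84, beat=0.5):
    """Map each value to an (onset, MIDI pitch, duration) event.

    Pitch and duration both follow the value; the onset follows only the
    position of the value in the series (one step every `beat` beats).
    """
    lo, hi = min(series), max(series)
    events = []
    for i, value in enumerate(series):
        pitch = round(scale(value, lo, hi, low_note, high_note))
        duration = round(scale(value, lo, hi, 0.25, 1.0), 3)
        onset = i * beat
        events.append((onset, pitch, duration))
    return events


print(parameter_map([0, 5, 10]))
```

Because the onsets here come only from the order of the values, two observations far apart in time still sound one step apart; that is one of the limitations the time-aware tools later in this tutorial address.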

When we listen, we fill moments of silence with our expectations. Consider a video in which an mp3 is converted to MIDI and back to mp3: the music is "flattened", so that all of the audio information is played by a single instrument (the effect is similar to saving a web page as .txt, opening it in Word, and then saving it again as .html). All sounds (including vocals) are translated into the corresponding note values and then back into mp3.

It is noisy, but you will get the point:

What is going on here? If you knew this song, you probably heard the actual "words". But the converted song has no words! If you had not heard it before, it sounds like meaningless cacophony (more examples are on Andy Baio's website). This effect is sometimes called an auditory hallucination. The example shows how, in any representation of data, we can hear or see what is, strictly speaking, not there. We fill the void with our own expectations.

What does this mean for history? If we sonify our data and begin to hear patterns or strange outliers in the sound, our cultural expectations about music (memories of similar fragments of music heard in particular contexts) will color our interpretation. I would argue that this is true of all representations of the past, but sonification is different enough from our standard methods that this self-awareness can help us identify or communicate certain critical patterns in (data about) the past.

We will work through three tools for sonifying data, noting how the choice of tool shapes the result and how we can work around this by reimagining the data in another tool. Ultimately, sonification is no more objective than visualization, so researchers should be ready to justify their choices and make them transparent and reproducible. (Lest anyone think that sonification and algorithmically generated music are something new, I direct the interested reader to Hedges 1978.)

Each section contains a conceptual introduction, followed by a walkthrough using archaeological or historical data.

There is a wide range of tools for sonifying data, for example packages for the popular R statistical environment such as playitbyR and AudiolyzR. But the first is not maintained for the current version of R (its last update was several years ago), and getting the second to work properly requires considerable configuration of additional software.

By contrast, the Musical Algorithms site is quite easy to use and has been online for more than ten years. Although its source code has not been published, it is a long-term research project in computational music by Jonathan Middleton. It is currently in its third major version (earlier iterations are still available online). We start with Musical Algorithms because it lets us quickly upload our data and adjust it to output a representation as MIDI files. Before you begin, be sure to select version 3.

The Musical Algorithms website as of February 2, 2016.

Musical Algorithms performs a series of transformations on the data. In the sample below (the site's default), there is only one series of data, although it looks like several rows. The sample consists of comma-separated fields, and the values within each field are separated by spaces.

These numbers represent the source data and its transformations. Sharing this file allows another researcher to replicate the work or to continue processing it with other tools. If you are starting from scratch, you need only the source data below (a list of data points):

The key field for us is 'areaPitch1', which holds the space-separated input data. The other fields are filled in as you work with the various Musical Algorithms settings. In the data above (24 72 12 84, etc.), the values are raw counts of inscriptions in British towns along a Roman road (later we will practice with other data). After you load the data, you can select various operations from the menu bar at the top. In the screenshot, hovering over the information icon displays an explanation of what happens when you select the division operation to scale the data to the chosen note range.
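Because the site's source code is unpublished, we cannot know exactly how the division operation works. The following sketch is a guess: a linear rescaling onto a range of piano keys, rounded to whole numbers. It happens to reproduce the site's default sample file, in which the areaPitch1 values (2 7 1 8 ...) become mapArea1 values on keys 1 to 88 and dMapArea1 durations on a 0 to 6 scale. Treat `division_map` as a hypothetical helper, not the site's algorithm.

```python
def division_map(values, low=1, high=88):
    """Linearly rescale raw values onto the integer range low..high.

    Hypothetical reconstruction: the smallest value maps to `low`,
    the largest to `high`, and everything else is rounded in between.
    """
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant series has no spread; put every note in the middle.
        return [round((low + high) / 2)] * len(values)
    return [round(low + (v - lo) * (high - low) / (hi - lo)) for v in values]


# areaPitch1 from the site's default sample
area_pitch = [2, 7, 1, 8, 2, 8, 1, 8, 2, 8, 4, 5, 9, 0, 4, 5, 2, 3, 5, 3, 6, 0, 2, 8]
pitches = division_map(area_pitch)          # piano keys 1-88 (like mapArea1)
durations = division_map(area_pitch, 0, 6)  # duration scale 0-6 (like dMapArea1)
print(pitches)
print(durations)
```

Swapping in your own counts (for example, the inscription counts) shows how strongly the minimum and maximum of a series dictate where every other value lands on the keyboard.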

Now, on the various tabs of the interface (duration, pitch mapping, duration mapping, scale options), you can apply various transformations. Pitch mapping offers a number of mathematical options for mapping the data onto the full 88-key piano keyboard (in a linear mapping, the mean value would be mapped to middle C, i.e. key 40). You can also choose the type of scale: minor, major, and so on. At this point, once you have selected your transformations, you should save the text file. On the File → Play tab, you can download a MIDI file. Your default audio program should be able to play MIDI (often with a default piano sound). More sophisticated instrumentation can be assigned in music-mixing programs such as GarageBand (Mac) or LMMS (Windows, Mac, Linux). Using GarageBand and LMMS is beyond the scope of this tutorial, but an LMMS video tutorial is available here, and many GarageBand tutorials are available online, for example an excellent guide on Lynda.com.
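To connect the key numbers used by Musical Algorithms with the MIDI note numbers that most other software expects: on a standard 88-key piano, key 1 is A0 (MIDI note 21), so key n corresponds to MIDI note n + 20, and key 40, middle C, is MIDI note 60. The sketch below assumes that standard numbering and names notes in scientific pitch notation (middle C = C4); some programs label middle C as C3 or C5 instead.

```python
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def key_to_midi(key):
    """Convert a piano key number (1-88) to a MIDI note number (21-108)."""
    if not 1 <= key <= 88:
        raise ValueError("piano keys are numbered 1 to 88")
    return key + 20


def key_to_name(key):
    """Name a piano key in scientific pitch notation (middle C = C4)."""
    midi = key_to_midi(key)
    return f"{NOTE_NAMES[midi % 12]}{midi // 12 - 1}"


print(key_to_midi(40), key_to_name(40))  # 60 C4 (middle C)
```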

Sometimes you will have several columns of data for the same points. Say that, in our British example, we also want to sonify counts of pottery types for the same towns. You can then reload the next series of data, perform the transformations and mappings, and create another MIDI file. Since GarageBand and LMMS let you layer voices, you can build up complex musical sequences.

Screenshot of GarageBand, in which the MIDI files are sonified topics from John Adams's diary. In the GarageBand (and LMMS) interface, each MIDI file is dragged with the mouse to the appropriate position. The instrument for each MIDI file (i.e., track) is selected from the GarageBand menus. The track labels have been changed to reflect the key words in each topic. The green area on the right is a visualization of the notes on each track. You can watch this interface in action and listen to the music here.

Which transformations should you use? If you have two columns of data, you have two voices. Perhaps, in our hypothetical data, it makes sense to play the first voice loudly, as the lead: after all, inscriptions "speak" to us in a way (Roman inscriptions literally address passers-by: "O you who pass by..."). Pottery, on the other hand, is perhaps a humbler artifact that could be mapped to the lower end of the scale or given longer notes, reflecting its ubiquity across social classes in the region.
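One way to express this reasoning in code, as a sketch with hypothetical settings: give the inscriptions an upper register and a high MIDI velocity (loudness), and give the pottery a lower register, a softer velocity, and longer notes. The key ranges, velocities, durations, and names below are illustrative assumptions, not values from Musical Algorithms.

```python
def scale(value, lo, hi, out_lo, out_hi):
    """Linearly rescale value from [lo, hi] to [out_lo, out_hi]."""
    if hi == lo:
        return (out_lo + out_hi) / 2
    return out_lo + (value - lo) * (out_hi - out_lo) / (hi - lo)


# Hypothetical voice settings: keys are 88-key piano numbers,
# velocity is MIDI loudness (1-127), beats is the note length.
VOICES = {
    "inscriptions": {"keys": (40, 88), "velocity": 110, "beats": 1},
    "pottery": {"keys": (1, 39), "velocity": 60, "beats": 2},
}


def voice_notes(values, keys, velocity, beats):
    """Return (stop index, piano key, velocity, beats) for each data point."""
    lo, hi = min(values), max(values)
    return [(i, round(scale(v, lo, hi, *keys)), velocity, beats)
            for i, v in enumerate(values)]
```

For example, `voice_notes(pottery_counts, **VOICES["pottery"])` would give each stop a low, soft note lasting two beats, so the pottery sustains under the shorter, louder inscription voice.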

There is no single "right" way to translate data into sound, at least not yet. But even in this simple example, we can see how shades of meaning and interpretation enter the data and our perception of it.

But what about time? Historical data often carries a date, so the time span between two data points needs to be taken into account. This is where our next tool becomes useful: when data points are related to one another in time. We begin to move from sonification (of data points) to music (relationships between points).

The sample dataset contains the number of Roman coins in its first column and the number of other materials from the same towns in its second, taken from the British Museum's Portable Antiquities Scheme. Processing this data may reveal aspects of the economic situation along Watling Street, the main route through Roman Britain. The data points are ordered geographically from northwest to southeast, so as the sound plays, we hear movement through space. Each note represents a stop along the way.

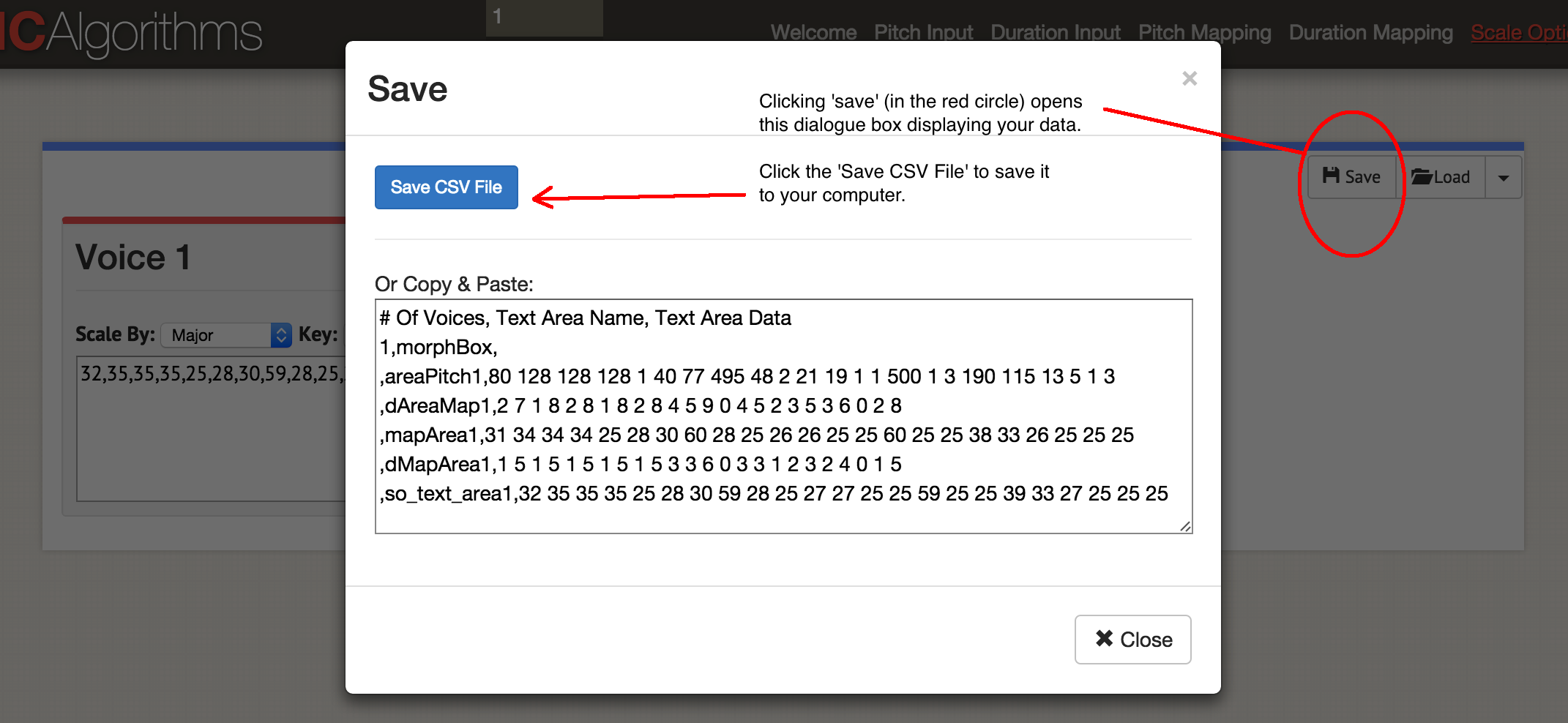

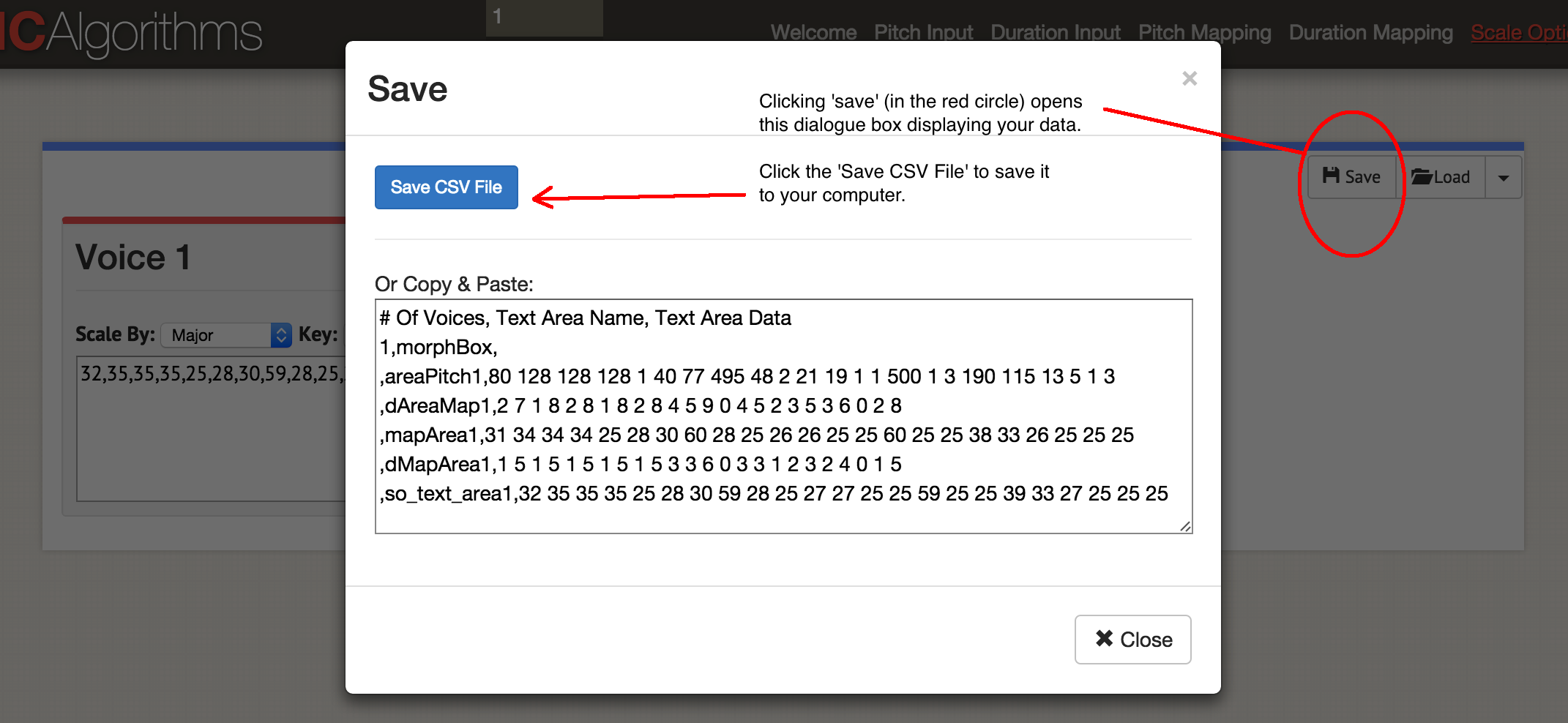

So, we have sonified one column of data! Click 'Save', then 'Save CSV'.

The 'Save' dialog box.

You will get a file something like this:

The original data remains in the 'areaPitch1' field, followed by the mappings that were created. The site can generate up to four voices at a time in one MIDI file. Depending on which instruments you want to use later, you may prefer to generate one MIDI file per voice. Let's play the music: click 'Play'. Here you choose the tempo and instrument. You can listen to your data in the browser or save it as a MIDI file with the blue 'Save MIDI file' button.

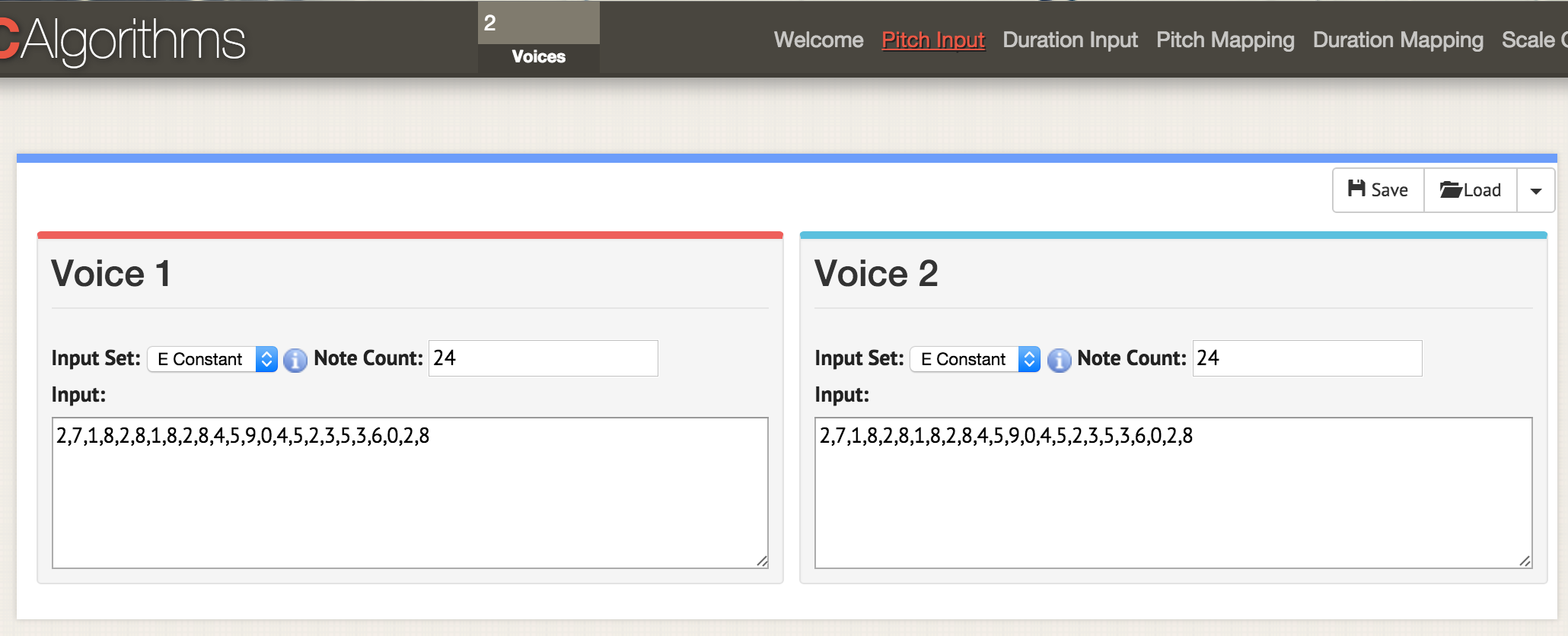

Let's go back to the beginning and load both data columns into this template:

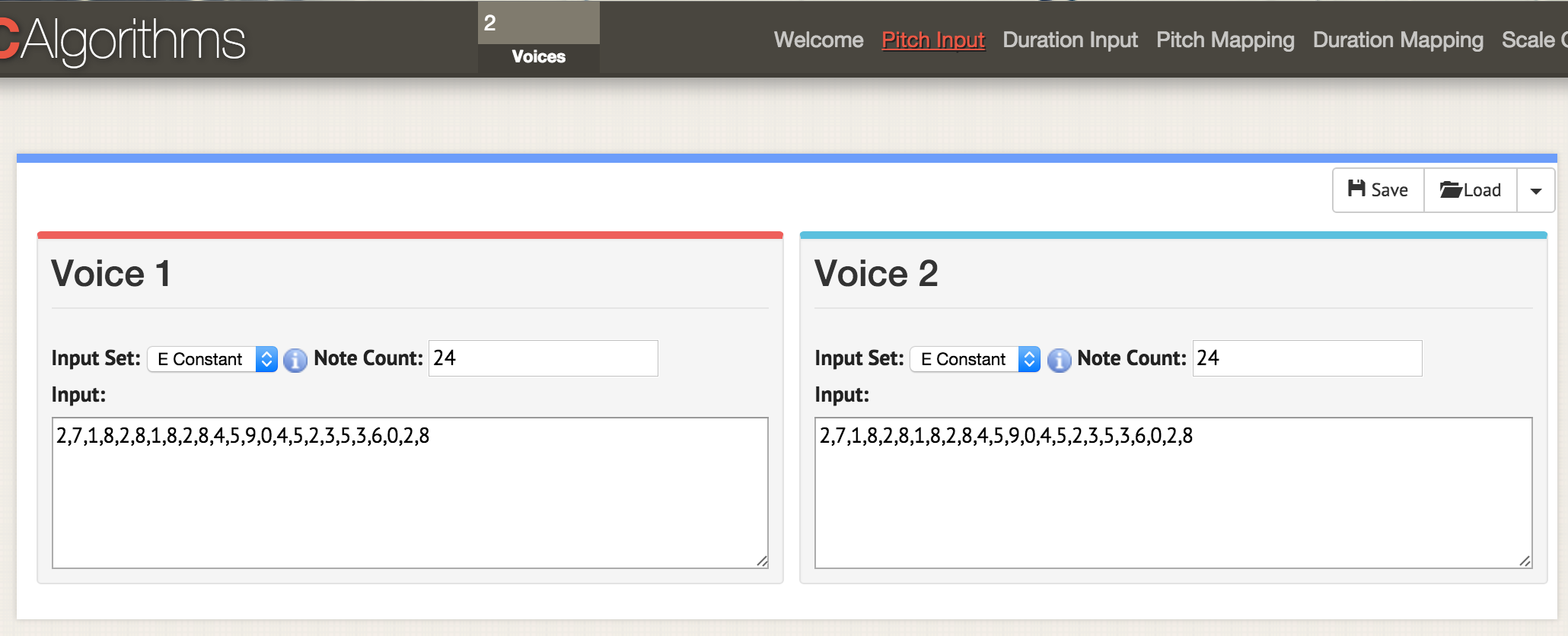

Here we are on the 'pitch input' settings page. At the top of the window, set the number of voices to two; every settings page will now show two windows, one for each voice. As before, we upload the data in CSV format, but the file must be formatted so that it contains both 'areaPitch1' and 'areaPitch2' values. The data for the first voice appears on the left, and the data for the second on the right.
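If you prepare two-voice files outside the browser, a few lines of Python can write them. The layout below is inferred from the site's sample file (a header line, a line giving the number of voices, then one 'areaPitchN' field per voice with space-separated values); it is an assumption, so compare the output with a file saved from the site before relying on it. `musicalgorithms_text` is a hypothetical helper name.

```python
def musicalgorithms_text(*voices):
    """Build Musical Algorithms-style input text for one or more voices.

    Assumed layout: header line, a line with the number of voices,
    then one ',areaPitchN,' line per voice with space-separated values.
    """
    rows = [
        "# Of Voices, Text Area Name, Text Area Data",
        f"{len(voices)},morphBox,",
    ]
    for n, values in enumerate(voices, start=1):
        rows.append(f",areaPitch{n}," + " ".join(str(v) for v in values))
    return "\n".join(rows) + "\n"


print(musicalgorithms_text([24, 72, 12], [3, 5, 8]), end="")
```

Save the returned text with a .csv extension and upload it as before.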

When we have several voices, which should be emphasized? Note that with this approach, our sonification does not take into account the real-world distance between points. If it did, the result would change considerably. Of course, distance does not have to be geographical; it can also be temporal. The next tool makes this factor explicit in the sonification.
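To make geographical distance audible, one option (a sketch, not a feature of Musical Algorithms) is to space notes according to the distance travelled along the route rather than one beat per stop. The code below computes great-circle distances with the haversine formula on a sphere of radius 6371 km and converts the cumulative distance into note onsets; the coordinates, `km_per_beat`, and the function names are hypothetical.

```python
import math


def haversine_km(a, b, radius_km=6371.0):
    """Great-circle distance between two (latitude, longitude) points in degrees."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    dlat, dlon = lat2 - lat1, lon2 - lon1
    h = math.sin(dlat / 2) ** 2 + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2
    return 2 * radius_km * math.asin(math.sqrt(h))


def distance_onsets(coords, km_per_beat=10.0):
    """Onset (in beats) of each stop, proportional to distance travelled so far."""
    onsets, travelled = [0.0], 0.0
    for prev, cur in zip(coords, coords[1:]):
        travelled += haversine_km(prev, cur)
        onsets.append(travelled / km_per_beat)
    return onsets
```

With stops listed from northwest to southeast, towns that are close together along the road would then sound close together in time, and long empty stretches would become audible silences.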

This section of the tutorial requires Python. If you have never experimented with the language, you will need to spend some time getting familiar with the command line. See also the quick guide to installing modules.

Python is already installed on Macs. To check, press COMMAND and the spacebar, and in the search box enter

Windows users need to install Python themselves: start from this page, although the process is a little more complicated than it suggests. First, you need to download the file

If nothing happens when you press Enter, the command has worked. To check, open a command prompt (here are 10 ways to do this) and enter

The last piece of the puzzle is a program called pip, which installs Python packages.

When you have Python code you want to run, paste it into a text editor and save the file with the .py extension.

MIDITime is a Python package developed by Reveal News (from the Center for Investigative Reporting); its repository is on GitHub. MIDITime is designed specifically for time series (that is, sequences of observations collected over time).

While Musical Algorithms has a more or less intuitive interface, MIDITime has the advantage of being open source. More importantly, the previous tool cannot take historical time into account, whereas MIDITime lets us cluster information by this factor.

Suppose we have a historical diary to which a topic model has been applied. The output might have diary entries as rows, with the proportion of each topic in the columns. Listening to these values can help us understand patterns of thought in the diary that are impossible to convey in a graph: spikes or recurring musical patterns that are not visible on a chart are immediately noticeable to the ear.

MIDITime installs with a single pip command:

on a Mac;

on Linux;

on Windows (if these instructions do not work, you can try this utility to install pip).

Let's look at an example script. Open a text editor, then copy and paste this code:

Save the script as

A new file will be created in the directory
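MIDITime writes the MIDI file for you. If you are curious about what such a file contains, or want to experiment before installing the package, the following standard-library sketch writes a minimal single-track (format 0) Standard MIDI File. It assumes notes given as [beat, pitch, velocity, duration in beats], the same shape as the note lists in MIDITime's README example, but it is an independent illustration, not MIDITime's code.

```python
import struct


def vlq(n):
    """Encode a non-negative integer as a MIDI variable-length quantity."""
    out = [n & 0x7F]
    n >>= 7
    while n:
        out.append((n & 0x7F) | 0x80)  # continuation bit on all but the last byte
        n >>= 7
    return bytes(reversed(out))


def notes_to_midi(notes, bpm=120, ticks_per_beat=480, channel=0):
    """Build a format-0 MIDI file from [beat, pitch, velocity, duration] notes."""
    events = []
    for beat, pitch, velocity, duration in notes:
        start = round(beat * ticks_per_beat)
        end = round((beat + duration) * ticks_per_beat)
        events.append((start, 1, bytes([0x90 | channel, pitch, velocity])))  # note on
        events.append((end, 0, bytes([0x80 | channel, pitch, 0])))  # note off
    # Sort by time; at equal times, note-offs (0) come before note-ons (1).
    events.sort(key=lambda e: (e[0], e[1]))

    tempo = round(60_000_000 / bpm)  # microseconds per quarter note
    track = b"\x00\xff\x51\x03" + tempo.to_bytes(3, "big")  # set-tempo meta event
    now = 0
    for tick, _, data in events:
        track += vlq(tick - now) + data  # delta time, then the event itself
        now = tick
    track += b"\x00\xff\x2f\x00"  # end-of-track meta event

    header = b"MThd" + struct.pack(">IHHH", 6, 0, 1, ticks_per_beat)
    return header + b"MTrk" + struct.pack(">I", len(track)) + track
```

To try it, write the bytes to disk, for example `open("sketch.mid", "wb").write(notes_to_midi([[0, 60, 100, 1], [1, 64, 100, 2]]))`, and open the file in any program that plays MIDI.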

Play with the script and add more notes. Here are the notes for the song 'Baa Baa Black Sheep':

Can you write instructions for the computer to play a melody (here is a diagram to help)?
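Writing a melody is easier if you can type note names rather than numbers. This sketch converts names such as 'C4' or 'F#3' into MIDI note numbers, assuming scientific pitch notation (middle C = C4 = 60; other tools may number octaves differently), and lays the notes out one beat apart as [beat, pitch, velocity, duration] rows. `name_to_midi` and `melody` are hypothetical helpers.

```python
NOTE_OFFSETS = {"C": 0, "D": 2, "E": 4, "F": 5, "G": 7, "A": 9, "B": 11}


def name_to_midi(name):
    """Convert a note name such as 'C4', 'F#3' or 'Bb2' to a MIDI note number."""
    letter, rest = name[0].upper(), name[1:]
    accidental = 0
    while rest and rest[0] in "#b":
        accidental += 1 if rest[0] == "#" else -1  # sharp raises, flat lowers
        rest = rest[1:]
    octave = int(rest)
    return 12 * (octave + 1) + NOTE_OFFSETS[letter] + accidental


def melody(names, velocity=100, beats_per_note=1):
    """Build [beat, pitch, velocity, duration] rows, one note after another."""
    return [[i * beats_per_note, name_to_midi(n), velocity, beats_per_note]
            for i, n in enumerate(names)]


print(melody(["C4", "C4", "G4", "G4"]))
```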

By the way, there is a text-file format for describing music called ABC notation. It is beyond the scope of this article, but you could write a sonification script in, say, a spreadsheet, mapping values to note names in ABC notation (if you have ever used an IF-THEN construct in Excel, you have an idea how to do this), and then use sites like this one to convert the ABC notation into a .mid file.
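The same kind of mapping can be done in a few lines of Python instead of spreadsheet IF-THEN formulas. This sketch bins each value into one of fourteen notes of the C major scale across two octaves in ABC notation (uppercase letters for the octave starting at middle C, lowercase for the octave above) and adds a minimal ABC header. The linear binning, the header values, and the name `values_to_abc` are illustrative choices.

```python
ABC_SCALE = ["C", "D", "E", "F", "G", "A", "B", "c", "d", "e", "f", "g", "a", "b"]


def values_to_abc(values, title="Sonified data"):
    """Map each value linearly onto ABC_SCALE and return an ABC tune as text."""
    lo, hi = min(values), max(values)
    top = len(ABC_SCALE) - 1
    notes = []
    for v in values:
        idx = 0 if hi == lo else round((v - lo) * top / (hi - lo))
        notes.append(ABC_SCALE[idx])
    header = ["X:1", f"T:{title}", "M:4/4", "L:1/4", "K:C"]  # K: must come last
    return "\n".join(header + [" ".join(notes)]) + "\n"


print(values_to_abc([0, 13, 7]), end="")
```

The resulting text can be pasted into an ABC-to-MIDI converter site to get a .mid file.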

This file contains a sample from the topic model of John Adams's diary prepared for the Macroscope. Only the strongest signals were kept, with the values in the columns rounded to two decimal places. To insert this data into the Python script, you need to format it in a particular way. The trickiest part is the date field.

For this tutorial, let's leave the variable names and everything else unchanged from the example script. The example was designed for earthquake data, so here "magnitude" can stand for our "topic contribution".

You could format the data with regular expressions, but spreadsheets are even easier. Copy the column of topic-contribution values to a new sheet, leaving empty columns to its left and right. In the example below, I put it in column D and then filled in the rest:

Then copy and paste the unchanging elements down the entire column. The date element must be in the format (year, month, day). Once the table is filled in, you can copy and paste it into a text editor, making it part of the array

Note that there is no comma at the end of the last line.
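If you would rather not build these rows in a spreadsheet, Python can write them for you. This sketch assumes a CSV file with a date column in YYYY-MM-DD form and one topic column; the column names `date` and `topic1` and the dictionary keys `event_date` and `magnitude` are assumptions modeled on the example script's earthquake variables. It produces one line per entry with the date in (year, month, day) form, joined so that no comma follows the last line.

```python
import csv
import io


def to_array_lines(csv_text, date_col="date", value_col="topic1"):
    """Turn CSV rows into dictionary lines ready to paste into the data array."""
    lines = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        y, m, d = (int(part) for part in row[date_col].split("-"))
        value = float(row[value_col])
        lines.append(f"    {{'event_date': datetime({y}, {m}, {d}), 'magnitude': {value}}}")
    return ",\n".join(lines)  # commas between entries, none after the last one


sample = "date,topic1\n1753-06-08,0.24\n1753-06-09,0.05\n"  # hypothetical values
print(to_array_lines(sample))
```

The pasted lines call `datetime(...)`, so the script they go into must import it (`from datetime import datetime`).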

The final script will look something like this if you use the example from MIDITime's own page (the code fragments below are interrupted by commentary, but they should be pasted together into a single file in a text editor):

The values after MIDITime are set as

Now we pass the data to the script by loading it into an array

... here we insert all the data and do not forget to remove the comma at the end of the last line

then insert the timing:

This code sets the timing between diary entries: if entries are close together in time, their notes will also be closer together. Finally, we define how the data maps to pitch. The original values are proportions ranging from 0.01 (i.e., 1%) to 0.99 (99%), so we
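The two steps described here, placing dates on a timeline and mapping proportions to pitch, can be sketched without MIDITime. This is not MIDITime's code: it assumes a fixed number of seconds per calendar year (converted to beats at a given tempo) and a linear mapping of the 0.01 to 0.99 range onto a C major scale over two octaves starting at middle C (MIDI 60). The defaults (3 seconds per year, 108 beats per minute) and the function names are hypothetical.

```python
from datetime import date

C_MAJOR = [0, 2, 4, 5, 7, 9, 11]  # semitone offsets of the scale within one octave


def date_to_beat(d, start, seconds_per_year=3, bpm=108):
    """Place a date on the timeline: days -> seconds -> beats."""
    seconds = (d - start).days / 365.25 * seconds_per_year
    return seconds * bpm / 60


def pct_to_pitch(value, lo=0.01, hi=0.99, base_octave=4, octave_range=2):
    """Map a proportion linearly onto C major notes, starting at C of base_octave."""
    pct = min(max((value - lo) / (hi - lo), 0.0), 1.0)  # clamp to 0..1
    steps = len(C_MAJOR) * octave_range
    idx = min(int(pct * steps), steps - 1)
    octave, degree = divmod(idx, len(C_MAJOR))
    return 12 * (base_octave + 1 + octave) + C_MAJOR[degree]


print(date_to_beat(date(1755, 1, 1), date(1753, 1, 1)), pct_to_pitch(0.99))
```

Because onsets come from the dates themselves, entries written days apart cluster together while gaps of months or years open up audible pauses.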

and the last fragment to save data to a file:

Save this file under a new name with the .py extension.

Make a separate script for each column in the source data, and remember to change the name of the output file! You can then load the individual MIDI files into GarageBand or LMMS for instrumentation. Here is the complete John Adams diary.
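Rather than copying the script once per column, you could loop over the columns. This sketch, with hypothetical column names, file-name prefix, and function name, groups the rows of a CSV by topic column and gives each topic its own output file name, ready to be handed to whatever code writes the MIDI (MIDITime or otherwise).

```python
import csv
import io


def notes_per_column(csv_text, date_col="date", prefix="adams"):
    """Map an output file name per topic column to that column's (date, value) rows."""
    reader = csv.DictReader(io.StringIO(csv_text))
    topics = [name for name in reader.fieldnames if name != date_col]
    rows = list(reader)
    return {
        f"{prefix}_{topic}.mid": [(row[date_col], float(row[topic])) for row in rows]
        for topic in topics
    }


sample = "date,topic1,topic2\n1753-06-08,0.24,0.10\n1753-06-09,0.05,0.30\n"
for filename, series in notes_per_column(sample).items():
    print(filename, series)
```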

Working up the individual MIDI files in GarageBand or another music editor moves us from simple sonification toward musical art. This final section is not a complete guide to Sonic Pi but an introduction to an environment that allows live coding and playback of data as music (see the video for an example of live coding). The tutorials built into the program show how to use a computer as a musical instrument (you type Ruby code into the built-in editor, and the interpreter plays the result immediately).

Why do this? As you may have gathered from this tutorial, as soon as you start sonifying data, you begin making decisions about how to translate it into sound. Those decisions reflect implicit or explicit judgments about which data matter. There is a continuum of "objectivity", if you like: at one end, sonified historical data; at the other, a representation of the past as engaging and personal as any well-crafted public lecture. Sonification lets us actually hear the data preserved in our documents, and that is a kind of public history. A musical performance of our data... just imagine!

Here I offer a code snippet for importing data, which is simply a list of values saved as a CSV file. My thanks to George Washington University librarian Laura Wrubel, who posted her experiments in sonifying library operations to gist.github.com.

There are two topics in this sample (from a topic model generated from the Jesuit Relations). The first line contains the headers 'topic1' and 'topic2'.

Work through the built-in Sonic Pi tutorials until you are familiar with the interface and its features (all of these tutorials are collected here; you can also listen to an interview with Sam Aaron, the creator of Sonic Pi). Then, in a new buffer (editor window), copy the following code (again, the separate fragments should be combined into one script):

Remember that

Now let's use this data in a musical composition:

The first few lines load the data columns; then we specify which sound to use (piano), and then we tell Sonic Pi to play the first topic (topic1) according to the following criteria: attack (the time the note takes to reach full volume) gets a random value less than 0.5; decay, a random value less than 1; and amplitude, a random value less than 0.25.
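To make the mapping in this paragraph explicit, here is a Python paraphrase of it (not Sonic Pi code, and not the script itself): each value in a topic column is multiplied by 100 to give a note number, and attack, decay, and amplitude are drawn at random below 0.5, 1, and 0.25. Reading the 0-to-1 proportions as note numbers from 0 to 100 after the multiplication is our interpretation; the function name and the optional `seed` for repeatable randomness are additions of this sketch.

```python
import csv
import io
import random


def sonic_pi_style_events(csv_text, column="topic1", seed=None):
    """Paraphrase of the described mapping: one note event per CSV row."""
    rng = random.Random(seed)  # seed makes the "humanizing" randomness repeatable
    events = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        value = float(row[column])
        events.append({
            "note": value * 100,            # proportion scaled to a note number
            "attack": rng.random() * 0.5,   # random value below 0.5
            "decay": rng.random(),          # random value below 1
            "amp": rng.random() * 0.25,     # random value below 0.25
        })
    return events


for event in sonic_pi_style_events("topic1,topic2\n0.25,0.5\n0.75,0.1\n", seed=1):
    print(event)
```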

See a line with multiplication by one hundred (

And the last thing to note here: the value of 'rand' (random) allows you to add a bit of “humanity” to the music in terms of dynamics. We do the same for 'topic2'.

You can also specify the tempo (beats per minute), loops, samples, and the other effects that Sonic Pi supports. The position of the code affects playback: anything placed before the data block above will play first. For example, if you

... you get a little musical introduction. The program waits 2 seconds, plays the sample 'ambi_choir', and then waits another 6 seconds before it starts playing our data. If you want a bit of ominous drumming throughout the piece, insert this snippet before your own data:

The code is fairly straightforward: it loops the sample 'bd_boom' with a reverb effect at a particular rate, pausing 2 seconds between repetitions.

As for "live coding", it means you can change the code while it is playing and hear those changes. Don't like what you hear? Change the code right away!

You can start exploring Sonic Pi with this workshop. See also Laura Wrubel's report on attending the workshop, which also describes her work in this area and that of her colleagues.

Once again: we should not imagine that our algorithmic approach puts us at the cutting edge. In 1978, a scholarly article was published on the "musical dice games" of the eighteenth century, in which rolls of the dice determined how previously written fragments of music were recombined. Robin Newman has studied and coded some of these games for Sonic Pi. For musical notation, Newman uses LilyPond, a tool that could be described as Markdown + Pandoc for music. So everything covered on The Programming Historian has a long history!

When we sonify our data, we find that it often reflects not so much the past as our interpretation of it in performance. This is partly because of the novelty and artistry required to translate data into sound, and it sets sonic interpretation sharply apart from traditional visualization. The sounds we generate may never rise to the level of "music", but if they help change our understanding of the past and reach others, the effort is worth it. As Trevor Owens might put it, "Sonification is about discovering the new, not justifying the known."


Contents

- Goals and objectives

- A small introduction to sonification

- Musical algorithms

- Python setup in brief

- MIDITime

- Sonic Pi

- Nihil novi sub sole

- Conclusion

Goals and objectives

Tools

Data samples

- Roman Empire artifacts

- Excerpt from the topic model of President John Adams's diary

- Excerpt from the topic model of the Jesuit Relations

A small introduction to sonification


Filling in the blanks


Musical algorithms


```
# Of Voices, Text Area Name, Text Area Data
1,morphBox,
,areaPitch1,2 7 1 8 2 8 1 8 2 8 4 5 9 0 4 5 2 3 5 3 6 0 2 8
,dAreaMap1,2 7 1 8 2 8 1 8 2 8 4 5 9 0 4 5 2 3 5 3 6 0 2 8
,mapArea1,20 69 11 78 20 78 11 78 20 78 40 49 88 1 40 49 20 30 49 30 59 1 20 78
,dMapArea1,1 5 1 5 1 5 1 5 1 5 3 3 6 0 3 3 1 2 3 2 4 0 1 5
,so_text_area1,20 69 11 78 20 78 11 78 20 78 40 49 88 1 40 49 20 30 49 30 59 1 20 78
```


```
# Of Voices, Text Area Name, Text Area Data
1,morphBox,
,areaPitch1,24 72 12 84 21 81 14 81 24 81 44 51 94 01 44 51 24 31 5 43 61 04 21 81
```

What matters to us is the 'areaPitch1' field, which holds the input data as space-separated values. The other fields are filled in as you work through the various Musical Algorithms settings. In the data above (24 72 12 84, etc.), the values are raw counts of inscriptions from places along a Roman road in Britain (later we will practice with other data). Once the data are loaded, the menu bar at the top lets you select various operations. In the screenshot, hovering over the information icon displays an explanation of what happens when you select the division operation to scale the data to the chosen range of notes.

As you move through the tabs of the interface (duration input, pitch mapping, duration mapping, scale options), various transformations become available. Pitch mapping offers a number of mathematical options for mapping the data onto a full 88-key piano keyboard (in a linear mapping, the mean of the data is mapped to middle C, i.e. key 40). You can also choose the type of scale: minor, major, and so on. Once you have selected your transformations, save the text file. On the File → Play tab, you can download the MIDI file. Your default audio program should be able to play MIDI (usually with a piano sound by default). More elaborate instrumentation can be assigned by opening the MIDI file in music mixing programs such as GarageBand (Mac) or LMMS (Windows, Mac, Linux). Using GarageBand and LMMS is beyond the scope of this guide, but an LMMS video tutorial is available here, and GarageBand tutorials are available online, for example an excellent guide on Lynda.com.

Sometimes you have several columns of data for the same points. Say that, in our British example, we also want to sonify counts of pottery types for the same places. You can then load the next row of data, apply the transformations and mappings, and create another MIDI file. Since GarageBand and LMMS let you layer voices, you can build up complex musical sequences.

Screenshot of GarageBand, where the MIDI files are sonified topics from John Adams's diary. In the GarageBand (and LMMS) interface, each MIDI file is dragged into place with the mouse. The instrument for each MIDI file (i.e. track) is chosen from the GarageBand menu. The track labels have been changed to reflect the keywords of each topic. The green area on the right visualizes the notes on each track. You can watch this interface in action and listen to the music here.

Which transformations should you use? If you have two columns of data, you have two voices. In our hypothetical data, it might make sense to play the first voice loudly, as the main one: after all, the inscriptions “speak” to us in a way (Roman inscriptions literally address passers-by: “O you who pass by...”). Pottery is perhaps a humbler artifact; it could be mapped to the lower end of the scale, or given longer notes to reflect its ubiquity among the different social classes of this region.

There is no single “right” way to translate data into sound, at least not yet. But even in this simple example, we can see how shades of meaning and interpretation creep into the data and into how we perceive them.

But what about time? Historical data are often tied to dates, so the time span between two data points must be taken into account. This is where our next tool becomes useful: when the data points are related to each other in time. We are starting to move from sonification (data points) to music (relationships between points).

Practice

The sample dataset contains counts of Roman coins and counts of other materials from the same places, taken from the British Museum's Portable Antiquities Scheme. Processing these data may reveal aspects of the economic situation along Watling Street, the main route through Roman Britain. The data points are ordered geographically from northwest to southeast, so as the sound plays, we hear movement through space. Each note represents one stop along the way.

- Open sonification-roman-data.csv in a spreadsheet. Copy the first column into a text editor. Replace the line endings with spaces so that all the data are on a single line.

- Add the following information:

```
# Of Voices, Text Area Name, Text Area Data
1,morphBox,
,areaPitch1,
```

... so that your data follow immediately after the last comma. Save the file with a meaningful name, for example coinsounds1.csv.

- Go to the Musical Algorithms website (third version) and click the 'Load' button. In the pop-up window, click the blue 'Load' button and select the file you saved in the previous step. The site will load your data and, if successful, show a green checkmark. If it does not, make sure the values are separated by spaces and follow immediately after the last comma of the template. You can also try loading the demo file that accompanies this guide.

After you click 'Load', this dialog box appears on the main screen. Click 'Load CSV File' and select your file; it will appear in the field. Then click the 'Load' button at the bottom.

- Click 'Pitch Input' and you will see your data values. Do not select any additional options on this page for now (so the defaults apply).

- Click 'Duration Input'. Do not select any options here yet. These options apply various transformations to your data that change the duration of each note. Don't worry about them for now; move on.

- Click 'Pitch Mapping'. This is the most important choice, since it maps (i.e. scales) your raw data onto the keys of the keyboard. Leave mapping set to 'division' (the other options are modulo and logarithmic mapping). The range 1 to 88 uses all 88 keys of the keyboard, so the lowest value will correspond to the lowest note on the piano and the highest value to the highest note. Instead, you can restrict the music to a range around middle C, such as 25 to 60. The output will then change to: 31,34,34,34,25,28,30,60,28,25,26,26,25,25,60,25,25,38,33,26,25,25,25. These are no longer your data values but keys on the keyboard.

Click in the 'Range' field and enter 25. The values below will change automatically. In the 'to' field, enter 60. When you move to another field, the values will be updated.

- Click 'Duration Mapping'. As with pitch mapping, the program takes a specified range of note lengths and uses various mathematical options to map your data onto it. If you hover over the i icon, you will see which numbers correspond to whole notes, quarter notes, eighth notes and so on. Leave the defaults for now.

- Click 'Scale Options'. Here we begin to work with what corresponds, in a sense, to the “emotional” aspect. The major scale is usually perceived as “joyful” and the minor as “sad”; a detailed discussion of this topic can be found here. For now, leave 'scale by' set to 'major' and 'scale' set to C.

So, we have sonified one column of data! Click 'Save', then 'Save CSV'.

Screenshot of the 'Save' dialog box.

You will get a file something like this:

```
# Of Voices, Text Area Name, Text Area Data
1,morphBox,
,areaPitch1,80 128 128 128 1 40 77 495 48 2 21 19 1 1 500 1 3 190 115 13 5 1 3
,dAreaMap1,2 7 1 8 2 8 1 8 2 8 4 5 9 0 4 5 2 3 5 3 6 0 2
,mapArea1,31 34 34 34 25 28 30 60 28 25 26 26 25 25 60 25 25 38 33 26 25 25 25
,dMapArea1,1 5 1 5 1 5 1 5 1 5 3 3 6 0 3 3 1 2 3 2 4 0 1
,so_text_area1,32 35 35 35 25 28 30 59 28 25 27 27 25 25 59 25 25 39 33 27 25 25 25
```
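It helps to see what the site did to our numbers. With range 25 to 60, the 'division' mapping behaves like a linear (min-max) rescaling. Each mapArea1 value equals 25 + (x − min)/(max − min) × 35, rounded, where x is the matching areaPitch1 value. The Python sketch below reproduces that line exactly for this data.

The snap_to_scale step is our own guess at what 'scale by: major' with scale C does: it moves each key that falls outside C major up to the next note of the scale, or down if that would leave the range. This rule happens to reproduce so_text_area1 here. The site's source code is unpublished, however, so treat it as an assumption rather than the site's algorithm. Key numbers follow the piano convention used above (key 40 = middle C), so a key's MIDI number is key + 20.

```python
# Sketch of two Musical Algorithms-style transformations (assumed, not the site's code).

RAW = [80, 128, 128, 128, 1, 40, 77, 495, 48, 2, 21, 19,
       1, 1, 500, 1, 3, 190, 115, 13, 5, 1, 3]  # areaPitch1 from the saved file

C_MAJOR_PITCH_CLASSES = {0, 2, 4, 5, 7, 9, 11}  # C D E F G A B


def division_map(values, low, high):
    """Linearly rescale values onto piano keys low..high (assumes 2+ distinct values)."""
    vmin, vmax = min(values), max(values)
    span = float(vmax - vmin)
    return [int(round(low + (v - vmin) / span * (high - low))) for v in values]


def in_c_major(key):
    """True if piano key number `key` is in C major (its MIDI number is key + 20)."""
    return (key + 20) % 12 in C_MAJOR_PITCH_CLASSES


def snap_to_scale(keys, high):
    """Move each key up to the next C major note; step down instead if that exceeds `high`."""
    snapped = []
    for k in keys:
        up = k
        while not in_c_major(up):
            up += 1
        if up <= high:
            snapped.append(up)
        else:
            down = k
            while not in_c_major(down):
                down -= 1
            snapped.append(down)
    return snapped


if __name__ == "__main__":
    pitches = division_map(RAW, 25, 60)
    print(" ".join(str(p) for p in pitches))                     # compare with mapArea1
    print(" ".join(str(p) for p in snap_to_scale(pitches, 60)))  # compare with so_text_area1
```

Swapping division_map for a logarithmic formula is an easy way to hear how strongly the choice of mapping shapes the result.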

The original data are kept in the 'areaPitch1' field, followed by the mappings that were created. The site can generate up to four voices at a time in one MIDI file. Depending on which instruments you want to use later, you may prefer to generate one voice per MIDI file. Let's play the music: click 'Play'. Here you choose the tempo and the instrument. You can listen to your data in the browser or save it as a MIDI file with the blue 'Save MIDI file' button.

Let's go back to the beginning and load both data columns into this template:

```
# Of Voices, Text Area Name, Text Area Data
2,morphBox,
,areaPitch1,
,areaPitch2,
```

Here we are on the 'Pitch Input' page. At the top of the window, set the number of voices to two; every options page now shows two panes, one per voice. As before, we load the data as a CSV file, but the file must be formatted to include both the 'areaPitch1' and 'areaPitch2' values. Data for the first voice appear on the left, and data for the second voice on the right.
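Copying columns by hand and deleting line endings gets tedious once you have several voices. The Python sketch below builds the input text from a CSV file instead. It assumes the layout of the template above: a header line, a line with the number of voices, and one ',areaPitchN,' line per voice with space-separated values. The helper names (build_ma_input, convert) and the column names are hypothetical, so check the result by loading it on the site.

```python
import csv
import io


def build_ma_input(rows, columns):
    """Return Musical Algorithms input text, one voice per column (hypothetical helper)."""
    lines = ["# Of Voices, Text Area Name, Text Area Data",
             "%d,morphBox," % len(columns)]
    for i, col in enumerate(columns, start=1):
        # All values for one voice go on a single line, separated by spaces.
        values = " ".join(row[col].strip() for row in rows)
        lines.append(",areaPitch%d,%s" % (i, values))
    return "\n".join(lines) + "\n"


def convert(csv_path, out_path, columns):
    """Read a spreadsheet exported as CSV and write a file ready for 'Load'."""
    with open(csv_path) as f:
        rows = list(csv.DictReader(f))
    with open(out_path, "w") as f:
        f.write(build_ma_input(rows, columns))


if __name__ == "__main__":
    # Toy inline data; the column names 'coins' and 'other' are assumptions.
    sample = io.StringIO("coins,other\n80,12\n128,7\n1,30\n")
    print(build_ma_input(list(csv.DictReader(sample)), ["coins", "other"]))
```

With a real file, a call such as convert('sonification-roman-data.csv', 'coinsounds2.csv', [...]) would need the actual column headers of that spreadsheet. Written for Python 3.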

If we have several voices, which one should be foregrounded? Note that this approach takes no account of the distance between points in the real world; if it did, the result would be very different. Of course, distance does not have to be geographic: it can also be temporal. The next tool lets us build this factor explicitly into our sonification.

A quick Python setup

This section of the guide requires Python. If you have not worked with this language before, you will need to spend some time getting to know the command line. See also the quick guide to installing modules.

Python is already installed on Macs. To check, press COMMAND and the space bar, type terminal in the search box, and click the Terminal application. The command $ python --version will show which version of Python you have installed. In this article we work with Python 2.7; the code has not been tested in Python 3. Windows users need to install Python themselves: start from this page, although things are a little more complicated than it suggests. First, download the .msi file (Python 2.7). Run the installer; Python will be installed in a new directory, for example C:\Python27\. Then you need to add this directory to your path, that is, tell Windows where to look for Python when you run a Python program. There are several ways to do this. Perhaps the easiest is to use the PowerShell program on your computer (type 'powershell' in the Windows search bar). Open PowerShell and paste this whole line at the prompt:

[Environment]::SetEnvironmentVariable("Path", "$env:Path;C:\Python27\;C:\Python27\Scripts\", "User")

If nothing happens when you press Enter, the command has worked. To check, open the command line (here are 10 ways to do this) and enter python --version. The response should be Python 2.7.10 or a similar version. The last piece of the puzzle is a program called pip. Mac users can install it with the terminal command sudo easy_install pip. For Windows users it is a little more complicated. First, right-click and save the file at this link (if you simply click the link, the get-pip.py code will open in the browser). Keep it somewhere handy. Open a command prompt in the directory where you saved get-pip.py, then enter python get-pip.py.

When you have Python code you want to run, paste it into a text editor and save the file with the extension .py. It is a plain text file, but the extension tells the computer to use Python to interpret it. You run it from the command line by typing the name of the interpreter followed by the name of the file: python my-cool-script.py.

MIDITime

MIDITime is a Python package developed by Reveal News (formerly called the Center for Investigative Reporting). Its repository is on GitHub. MIDITime is specifically designed to process time series (i.e. sequences of observations collected over time).

Whereas Musical Algorithms has a more or less intuitive interface, MIDITime has the advantage of being open source. More importantly, Musical Algorithms cannot take historical time into account, while MIDITime lets you cluster your data according to when events happened.

Suppose we have a historical diary to which a topic model has been applied. The output might have diary entries as rows and the percentage contribution of each topic as columns. Listening to these values can help you grasp patterns of thought in the diary that are hard to convey in a graph: spikes or recurring musical patterns that are not visible on a chart are immediately noticeable by ear.

Installing MIDITime

Install it with a single pip command:

- Mac: $ pip install miditime

- Linux: $ sudo pip install miditime

- Windows: > python -m pip install miditime (if this does not work, you can try this utility to install pip).

Practice

Consider an example script. Open a text editor, copy and paste this code:

```python
#!/usr/bin/python
from miditime.miditime import MIDITime
# NOTE: this import works at least as of v1.1.3; for older versions or forks of miditime, you may need to use
# from miditime.MIDITime import MIDITime

# Instantiate the class with a tempo (120bpm is the default) and an output file destination.
mymidi = MIDITime(120, 'myfile.mid')

# Create a list of notes. Each note is a list: [time, pitch, attack, duration]
# (attack is the MIDI velocity, which must lie between 0 and 127)
midinotes = [
    [0, 60, 127, 3],  # At 0 beats (the start), Middle C with attack 127, for 3 beats
    [10, 61, 127, 4]  # At 10 beats (5 seconds from start at 120 bpm), C#5 with attack 127, for 4 beats
]

# Add a track with those notes
mymidi.add_track(midinotes)

# Output the .mid file
mymidi.save_midi()
```

Save the script as music1.py. In a terminal or at the command line, run it:

$ python music1.py

A new file, myfile.mid, will be created in the same directory. You can listen to it with QuickTime or Windows Media Player (or assign instruments to it in GarageBand or LMMS). music1.py imports miditime (remember to install it before running the script: pip install miditime) and then sets the tempo. Each note is listed separately: the first number is the time at which the note starts, followed by its pitch (that is, the note itself!), how hard the note is struck (attack), and its duration. The notes are then added to a track, and the track is written to the file myfile.mid. Play with the script and add more notes. Here are the notes for the song 'Baa Baa Black Sheep':

D, D, A, A, B, B, B, B, A
Baa, Baa, black, sheep, have, you, any, wool?

Can you write instructions for the computer to play this melody (here is a diagram to help)?
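One possible answer, as a sketch: the Python below turns the note names into a miditime note list of [time, pitch, attack, duration]. The choices are ours. The tune starts on the D just above middle C, with MIDI numbers 62 for D, 69 for A and 71 for B (60 is middle C). Every syllable gets one beat and 'wool' gets two, which is a simplification of the real rhythm. The attack is a fixed 100. The miditime import is guarded, so you can build and inspect the note list even where the package is not installed.

```python
# Sketch: "Baa Baa Black Sheep" as a miditime note list; pitches and rhythm are our choices.
try:
    from miditime.miditime import MIDITime
except ImportError:  # still lets you build and inspect the note list
    MIDITime = None

PITCH = {'D': 62, 'A': 69, 'B': 71}  # MIDI note numbers, 60 = middle C
ATTACK = 100                          # MIDI velocity must stay between 0 and 127

melody = ['D', 'D', 'A', 'A', 'B', 'B', 'B', 'B', 'A']
lengths = [1, 1, 1, 1, 1, 1, 1, 1, 2]  # one beat per syllable, two for "wool"

notes = []
start = 0
for name, length in zip(melody, lengths):
    notes.append([start, PITCH[name], ATTACK, length])  # [time, pitch, attack, duration]
    start += length  # the next note begins when this one ends

if __name__ == "__main__" and MIDITime is not None:
    mymidi = MIDITime(120, 'baabaa.mid')
    mymidi.add_track(notes)
    mymidi.save_midi()
```

Try changing the lengths list to give 'have you any' shorter notes and listen to the difference.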

Incidentally, there is a special text format for describing music called ABC notation. It is beyond the scope of this article, but you could write a sonification script in a spreadsheet that maps values to note names in ABC notation (if you have ever used an IF-THEN construct in Excel, you have an idea of how to do this), and then use sites like this one to convert the ABC notation to a .mid file.
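The same IF-THEN idea can be sketched in Python instead of a spreadsheet. Each value is rescaled onto the eight notes from middle C up to the next C (C D E F G A B c in ABC notation) and written out as an ABC tune. The header fields used are standard ABC: X: reference number, T: title, M: meter, L: default note length, K: key. The title, the four-notes-per-bar layout and the one-note-per-value mapping are our assumptions.

```python
# Sketch: rescale values onto the notes C D E F G A B c and write them as an ABC tune.
NOTES = ['C', 'D', 'E', 'F', 'G', 'A', 'B', 'c']  # ABC names, middle C up to the next C


def to_abc(values, title="Sonified data", notes_per_bar=4):
    vmin, vmax = min(values), max(values)
    span = float(vmax - vmin) or 1.0  # avoid dividing by zero if all values are equal
    body = []
    for v in values:
        # Like a chain of IF-THEN thresholds: each value picks one of eight notes.
        index = int(round((v - vmin) / span * (len(NOTES) - 1)))
        body.append(NOTES[index])
    bars = [" ".join(body[i:i + notes_per_bar])
            for i in range(0, len(body), notes_per_bar)]
    header = ["X:1", "T:" + title, "M:4/4", "L:1/4", "K:C"]
    return "\n".join(header + [" | ".join(bars) + " |]"])


if __name__ == "__main__":
    # The first inscription counts from the Musical Algorithms sample above.
    print(to_abc([24, 72, 12, 84, 21, 81, 14, 81]))
```

Paste the printed tune into an ABC-to-MIDI converter to hear it.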

Uploading Your Own Data

This file contains a sample from the topic model of John Adams's diary built for The Macroscope. Only the strongest signals were kept, by rounding the values in the columns to two decimal places. To insert these data into the Python script, you need to format them in a particular way. The trickiest part is the date field.

For this tutorial, let's keep the variable names and everything else unchanged from the example script. That example was designed for earthquake data, so here "magnitude" stands in for our "topic contribution."

```
my_data = [
    {'event_date': <datetime object>, 'magnitude': 3.4},
    {'event_date': <datetime object>, 'magnitude': 3.2},
    {'event_date': <datetime object>, 'magnitude': 3.6},
    {'event_date': <datetime object>, 'magnitude': 3.0},
    {'event_date': <datetime object>, 'magnitude': 5.6},
    {'event_date': <datetime object>, 'magnitude': 4.0}
]
```

You can format the data with regular expressions or, even more easily, with a spreadsheet. Copy the column of topic-contribution values into a new sheet, leaving empty columns to its left and right. In the example below, I put it in column D and then filled in the rest:

|   | A | B | C | D | E |
|---|---|---|---|---|---|
| 1 | {'event_date': datetime | (1753,6,8) | , 'magnitude': | 0.0024499630 | }, |
| 2 |   |   |   |   |   |
| 3 |   |   |   |   |   |

Then copy the unchanging elements down their columns to fill them. The date must be in the format (year, month, day). Once the table is filled in, copy and paste it into a text editor as part of the my_data list, for example:

```python
my_data = [
    {'event_date': datetime(1753,6,8), 'magnitude': 0.0024499630},
    {'event_date': datetime(1753,6,9), 'magnitude': 0.0035766320},
    {'event_date': datetime(1753,6,10), 'magnitude': 0.0022171550},
    {'event_date': datetime(1753,6,11), 'magnitude': 0.0033220150},
    {'event_date': datetime(1753,6,12), 'magnitude': 0.0046445900},
    {'event_date': datetime(1753,6,13), 'magnitude': 0.0035766320},
    {'event_date': datetime(1753,6,14), 'magnitude': 0.0042241550}
]
```

Note that there is no comma at the end of the last entry.
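Python can also build my_data directly, which skips the spreadsheet step. The sketch below assumes a CSV export with a 'date' column in YYYY-MM-DD form and one column per topic. Both the column names and the file name adams-topics.csv are hypothetical, so adjust them to match your file. Because the list is built in code, there is no trailing comma to worry about.

```python
import csv
import io
from datetime import datetime


def load_topic(csv_file, topic_column, date_column='date', date_format='%Y-%m-%d'):
    """Build a miditime-style my_data list from one topic column (column names assumed)."""
    my_data = []
    for row in csv.DictReader(csv_file):
        my_data.append({
            'event_date': datetime.strptime(row[date_column], date_format),
            'magnitude': float(row[topic_column]),
        })
    return my_data


if __name__ == "__main__":
    # With a real file: with open('adams-topics.csv') as f: my_data = load_topic(f, 'topic1')
    sample = io.StringIO("date,topic1\n1753-06-08,0.0024499630\n1753-06-09,0.0035766320\n")
    for entry in load_topic(sample, 'topic1'):
        print(entry)
```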

If you follow the example on MIDITime's own page, the final script will look something like this (the code fragments below are interrupted by explanations, but they should be pasted together into a single file in a text editor):

```python
from miditime.miditime import MIDITime  # older versions: from miditime.MIDITime import MIDITime
from datetime import datetime
import random

mymidi = MIDITime(108, 'johnadams1.mid', 3, 4, 1)
```

The values passed to MIDITime(108, 'johnadams1.mid', 3, 4, 1) are:

- the number of beats per minute (108),

- the output file ('johnadams1.mid'),

- the number of seconds of music that represent one year of history (3 seconds per calendar year, so 50 years of diary entries are scaled to a melody of 50 × 3 = 150 seconds, or two and a half minutes),

- the base octave for the music (middle C is usually represented as C5, so 4 here corresponds to one octave below that reference),

- and the number of octaves onto which pitches are mapped.
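The "seconds per year" setting is easier to grasp once you see the arithmetic in beats. The standalone Python sketch below works it out; it is an aside and not part of the script you are assembling. It rests on our own assumptions: a 365.25-day year, and beats = seconds × bpm / 60. It is not miditime's internal code, which may round differently.

```python
from datetime import datetime

BPM = 108               # beats per minute, as in MIDITime(108, ...)
SECONDS_PER_YEAR = 3    # seconds of music per year of history
DAYS_PER_YEAR = 365.25  # assumption: average calendar year


def beat_offset(start, date):
    """Beats between two dates, given the tempo and the seconds-per-year setting."""
    years = (date - start).days / DAYS_PER_YEAR
    seconds = years * SECONDS_PER_YEAR
    return seconds * BPM / 60.0


if __name__ == "__main__":
    first = datetime(1753, 6, 8)
    print(beat_offset(first, datetime(1753, 6, 9)))  # two consecutive diary entries
    print(50 * SECONDS_PER_YEAR / 60.0)              # minutes of music for 50 years
```

Under these assumptions, entries one day apart sit only about 0.015 beats (roughly 8 milliseconds) apart, so daily entries will almost overlap. A larger seconds-per-year value spreads them out.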

Now we pass the data to the script by loading them into the my_data list:

```python
my_data = [
    {'event_date': datetime(1753,6,8), 'magnitude': 0.0024499630},
    {'event_date': datetime(1753,6,9), 'magnitude': 0.0035766320},
    # ... insert the rest of your data here; the last entry has no trailing comma,
    # and the closing bracket goes on its own line
    {'event_date': datetime(1753,6,14), 'magnitude': 0.0042241550}
]
```

Then add the timing:

```python
my_data_epoched = [{'days_since_epoch': mymidi.days_since_epoch(d['event_date']), 'magnitude': d['magnitude']} for d in my_data]

my_data_timed = [{'beat': mymidi.beat(d['days_since_epoch']), 'magnitude': d['magnitude']} for d in my_data_epoched]

start_time = my_data_timed[0]['beat']
```

This code sets the timing between diary entries: if entries are close together in time, their notes will also be close together. Finally, we define how the data map onto pitch. Our values are proportions between 0.01 (i.e. 1%) and 0.99 (99%), so we tell linear_scale_pct that the data run from 0 to 1. If your values are not percentages, use your data's lowest and highest values instead. So we insert the following code:

```python
def mag_to_pitch_tuned(magnitude):
    # Where does this value sit within the 0-1 range of our data?
    scale_pct = mymidi.linear_scale_pct(0, 1, magnitude)

    # Pick a range of notes. This allows you to play in a key.
    c_major = ['C', 'D', 'E', 'F', 'G', 'A', 'B']

    # Find the note that matches your data point
    note = mymidi.scale_to_note(scale_pct, c_major)

    # Translate that note to a MIDI pitch
    midi_pitch = mymidi.note_to_midi_pitch(note)

    return midi_pitch

note_list = []

for d in my_data_timed:
    note_list.append([
        d['beat'] - start_time,
        mag_to_pitch_tuned(d['magnitude']),
        random.randint(0, 127),  # attack (MIDI velocity, 0-127)
        random.randint(1, 4)     # duration, in beats
    ])
```

and the last fragment, which saves the data to a file:

```python
# Add a track with those notes
mymidi.add_track(note_list)

# Output the .mid file
mymidi.save_midi()
```

Save this file under a new name with the .py extension. Make a separate script for each column of the source data, and remember to change the name of the output file! You can then load the individual MIDI files into GarageBand or LMMS for instrumentation. Here is the complete diary of John Adams.
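A caution about linear_scale_pct(0, 1, magnitude). The Adams values shown above are all below 0.005, so on a 0-to-1 domain they occupy only the bottom half-percent of the range and may all end up on the same low note. The Python sketch below compares the two domains. It uses linear_pct, a hypothetical helper written to mimic a linear percentage scale; it is not miditime's code. In the script, following the advice to "use the lowest and highest values" would mean passing min and max of the magnitudes to linear_scale_pct in place of 0 and 1.

```python
# Sketch: why the domain passed to a linear scale matters (linear_pct is our own helper).
values = [0.0024499630, 0.0035766320, 0.0022171550, 0.0033220150,
          0.0046445900, 0.0035766320, 0.0042241550]  # the Adams sample above


def linear_pct(lo, hi, value):
    """Position of value within [lo, hi], as a fraction between 0 and 1."""
    return (value - lo) / float(hi - lo)


fixed = [linear_pct(0, 1, v) for v in values]
fitted = [linear_pct(min(values), max(values), v) for v in values]

if __name__ == "__main__":
    print("domain 0-1:     ", ["%.4f" % p for p in fixed])
    print("domain min-max: ", ["%.2f" % p for p in fitted])
```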

Sonic Pi

Working with individual MIDI files in GarageBand or another music editor takes us from simple sonification toward musical art. This final section is not a complete guide to Sonic Pi, but rather an introduction to an environment for live coding, in which data can be encoded and played back as music in real time (see this video for an example of live coding). The tutorials built into the program show how to use a computer as a musical instrument: you type Ruby code into the built-in editor, and the interpreter plays the result immediately.

Why do this? As this guide shows, as soon as you start working with the data, you begin making decisions about how to translate it into sound. These decisions reflect implicit or explicit judgments about which data matter. There is a continuum of “objectivity,” if you like: at one end, a sonification of historical data; at the other, a representation of the past as exciting and personal as any well-crafted public lecture. Sonification lets us truly hear the data stored in our documents: it is a kind of public history. A musical performance of our data... just imagine!

Here I offer a code snippet for importing data, which is simply a list of values stored as a CSV file. My thanks to George Washington University librarian Laura Wrubel, who posted her experiments in sonifying library operations to gist.github.com.

This sample contains two topics from a topic model generated from the Jesuit Relations. The first line contains the headers 'topic1' and 'topic2'.

Practice

Work through the built-in Sonic Pi tutorials until you are familiar with the interface and its features (all of these tutorials are collected here; you can also listen to an interview with Sam Aaron, the creator of Sonic Pi). Then, in a new buffer (editor window), paste the following code (again, the separate fragments below belong together in one script):

```ruby
require 'csv'
data = CSV.parse(File.read("/path/to/your/directory/data.csv"), headers: true, header_converters: :symbol)
use_bpm 100
```

Remember that /path/to/your/directory/ stands for the actual location of your data on your computer. Make sure the file really is named data.csv, or edit this line of the code. Now load the data into the musical composition:

```ruby
# This bit of code will run only once, unless you uncomment the line with
# 'live_loop' and also the final '# end' at the bottom of this code block
# ('uncommenting' means removing the # sign).
# live_loop :jesuit do
data.each do |line|
  topic1 = line[:topic1].to_f
  topic2 = line[:topic2].to_f

  use_synth :piano
  play topic1*100, attack: rand(0.5), decay: rand(1), amp: rand(0.25)
  use_synth :piano
  play topic2*100, attack: rand(0.5), decay: rand(1), amp: rand(0.25)
  sleep 0.5
end
# end
```

The first few lines read the values of the data columns. Then we specify which sound we want to use (piano) and tell Sonic Pi to play the first topic (topic1) with the following settings: a random attack value below 0.5, a random decay value below 1, and a random amplitude (amp) below 0.25.

See the line with multiplication by one hundred (*100)? It scales our decimal data value up by a factor of 100. In this fragment, the resulting number is treated directly as a note. Since a piano keyboard runs from key 1 to key 88, this approach is a bit problematic: we are not really doing any pitch mapping here! In this case, you can use Musical Algorithms for the pitch mapping and then feed those values back into Sonic Pi. Alternatively, since this code is more or less standard Ruby, you can use the usual methods to normalize the data and then map your values linearly onto the range 1–88. For a start, it is worth looking at Steve Lloyd's work on sonifying weather data with Sonic Pi. One last thing to note here: the 'rand' (random) values add a bit of “humanity” to the music in terms of dynamics. We do the same for 'topic2'.
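Here is one way to do that normalization before the data reach Sonic Pi. It is sketched in Python to match the rest of this tutorial; the same arithmetic could be written in Ruby inside Sonic Pi. The sketch rescales each topic column onto piano keys 1 to 88 and then adds 20, because Sonic Pi's play expects MIDI note numbers: piano key 1 (A0) is MIDI 21, and middle C (key 40) is MIDI 60. The topic1/topic2 headers follow the sample described above. The output file name data-scaled.csv is hypothetical.

```python
import csv


def keys_to_midi(values, low_key=1, high_key=88):
    """Rescale values onto piano keys low_key..high_key, then convert keys to MIDI numbers."""
    vmin, vmax = min(values), max(values)
    span = float(vmax - vmin) or 1.0  # guard against a constant column
    keys = [low_key + (v - vmin) / span * (high_key - low_key) for v in values]
    return [int(round(k)) + 20 for k in keys]  # piano key 40 (middle C) -> MIDI 60


def rescale_csv(in_path, out_path, columns=("topic1", "topic2")):
    """Write a copy of the CSV in which each topic column holds MIDI note numbers."""
    with open(in_path) as f:
        rows = list(csv.DictReader(f))
    scaled = {c: keys_to_midi([float(r[c]) for r in rows]) for c in columns}
    with open(out_path, "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(columns)
        for i in range(len(rows)):
            writer.writerow([scaled[c][i] for c in columns])
```

After running rescale_csv("data.csv", "data-scaled.csv"), the Sonic Pi script could read data-scaled.csv and call play line[:topic1].to_i without the *100. Written for Python 3.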

You can also specify the tempo (beats per minute), loops, samples, and other effects that Sonic Pi supports. The position of the code affects playback: for example, code placed before the data block above will play first. For example, if you insert the following after the line use_bpm 100:

```ruby
# intro bit
sleep 2
sample :ambi_choir, attack: 2, sustain: 4, rate: 0.25, release: 1
sleep 6
```

... you get a short musical introduction. The program waits 2 beats, plays the 'ambi_choir' sample, then waits another 6 beats before it starts playing our data. If you want to add a slightly ominous drum throughout the piece, insert this bit (again, before your own data):

```ruby
# bit that keeps going throughout the music
live_loop :boom do
  with_fx :reverb, room: 0.5 do
    sample :bd_boom, rate: 1, amp: 1
  end
  sleep 2
end
```

The code is pretty straightforward: it loops the 'bd_boom' sample with a reverb effect at a given rate. The pause between repetitions is 2 beats.

As for “live coding,” this means you can change the code while it is playing and hear the changes as they happen. Don't like what you hear? Change the code right away!

You can start exploring Sonic Pi with this workshop. See also Laura Wrubel's report on attending the workshop, which also describes her own work in this area and that of her colleagues.

Nothing new under the sun

Let me repeat: we should not imagine that, with our algorithmic approach, we are at the cutting edge. In 1978, a scholarly article was published on the “musical dice games” of the 18th century, in which rolls of the dice determined how previously written fragments of music were recombined. Robin Newman studied and coded some of these games for Sonic Pi. For musical notation, Newman uses a tool that could be described as Markdown + Pandoc, and Lilypond to typeset the music. So every topic covered on The Programming Historian has a long history!
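The dice-game idea is itself a small algorithm: for each bar of a piece, roll two dice and use the total (2 to 12) to pick one of several pre-written bars. The Python sketch below shows that mechanism only. The measure labels are placeholders of our own, not the table from any historical game, and four bars is an arbitrary length chosen to keep the sketch short.

```python
import random

BARS = 4  # arbitrary length for the sketch
# Placeholder table: for each bar, one pre-written measure per dice total (2 to 12).
# A label such as "b1-07" means "bar 1, the measure chosen by a roll of 7";
# these are stand-ins, not the measures of any historical game.
TABLE = [{total: "b%d-%02d" % (bar, total) for total in range(2, 13)}
         for bar in range(1, BARS + 1)]


def roll_piece(rng):
    """Pick one measure per bar by rolling two six-sided dice."""
    piece = []
    for options in TABLE:
        total = rng.randint(1, 6) + rng.randint(1, 6)
        piece.append(options[total])
    return piece


if __name__ == "__main__":
    print(roll_piece(random.Random(1778)))  # a fixed seed makes the piece repeatable
```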

Conclusion

When we sonify our data, we find that they often reflect not so much the past as our interpretation of it in performance. This is partly because translating data into sound is still novel and demands artistry, and that is what sets sonic interpretation apart from traditional visualization. The sounds we generate may never rise to the level of “music”; but if they help change our understanding of the past and influence others, the effort is worth it. As Trevor Owens would say, “Sonification is about discovering the new, not justifying the known.”

Terms

- MIDI: Musical Instrument Digital Interface. A MIDI file describes which notes are played, when, for how long and how hard (velocity); it does not record the sound itself (this is an important difference). It is a common language for computers and musical instruments. You can assign different instruments to a MIDI file, much as you would change the font of a text.

- MP3: a lossy audio compression format, meaning that some of the data are discarded during compression.

- Pitch: the note itself (middle C, etc.)

- Attack: how a note or beat is played

- Duration: how long a note lasts (whole notes, quarter notes, eighth notes, etc.)

- Pitch mapping and duration mapping: scaling data values onto a range of notes or note durations

- Amplitude: roughly speaking, the volume of a note