Serverless Racks

Serverless is not about the physical absence of servers. It is neither a container “killer” nor a passing trend. It is a new approach to building systems in the cloud. In this article, we will look at the architecture of Serverless applications and at the roles played by the Serverless service provider and by open-source projects. At the end, we will talk about where Serverless is worth applying.

Suppose I want to write the server side of an application (say, an online store). It could also be a chat, a content publishing service, or a load balancer. In any case, there will be plenty of headaches: I will have to prepare the infrastructure, determine the application's dependencies, and think about the host operating system. Later I will need to update small components without affecting the rest of the monolith. And let's not forget about scaling under load.

But what if we take ephemeral containers in which the required dependencies are already preinstalled, and the containers themselves are isolated from each other and from the host OS? We will break the monolith into microservices, each of which can be updated and scaled independently of the others. Having placed the code in such a container, I can run it on any infrastructure. Already better.

But what if I don’t want to configure containers? I don’t want to think about scaling the application. I don’t want to pay for idle containers when the load on the service is minimal. I want to write code, focus on business logic, and bring products to market at the speed of light.

Such thoughts led me to serverless computing. Serverless in this case means not the physical absence of servers, but the absence of the headache of managing infrastructure.

The idea is that the application logic is broken down into independent, event-driven functions. Each function performs one "microtask". All that is required of the developer is to upload the functions through the console offered by the cloud provider and bind them to event sources. The code is executed on demand in an automatically prepared container, and I pay only for the execution time.

Let's see how the application development process will now look.

From the developer

Earlier, we started talking about an application for an online store. In the traditional approach, the main logic of the system is handled by a monolithic application, and the server hosting it runs constantly, even when there is no load.

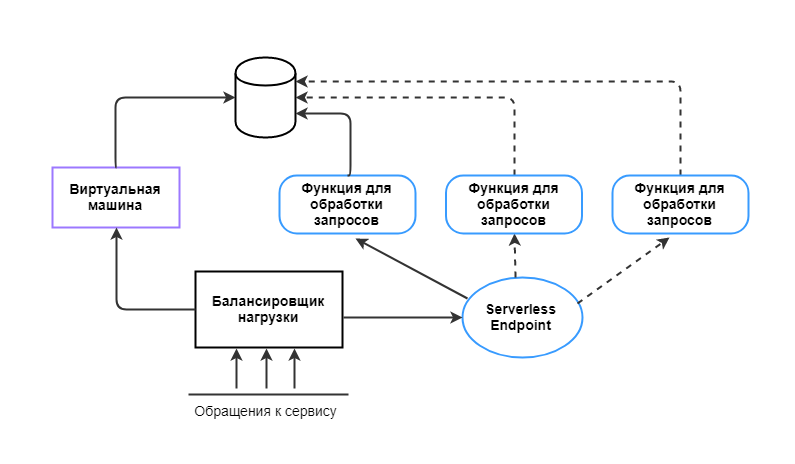

To switch to serverless, we break the application into microtasks and write a separate function for each of them. The functions are independent of each other and do not store state. They can even be written in different languages. If one of them crashes, the rest of the application keeps running. The application architecture will look like this:

Dividing an application into functions in Serverless is similar to working with microservices. But a microservice can perform several tasks, whereas a function should ideally perform one. Imagine that the task is to collect statistics and display them at the user's request. In the microservice approach, the task is handled by one service with two entry points: write and read. In serverless computing, these will be two separate functions that are not connected to each other. The developer saves computing resources if, for example, statistics are updated more often than they are read.
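The statistics example can be sketched as two independent functions. This is only an illustration: the names `record_visit` and `read_stats`, the event fields, and the in-memory `STORE` are assumptions made for the sketch. Real functions are stateless and would keep the counters in an external database or object storage.

```python
from collections import Counter

# Stand-in for an external store (database, object storage).
# Hypothetical: real serverless functions must not rely on process memory.
STORE: Counter = Counter()


def record_visit(event: dict) -> dict:
    """Write path: invoked on every page view."""
    STORE[event["page"]] += 1
    return {"status": "recorded"}


def read_stats(event: dict) -> dict:
    """Read path: invoked only when a user asks for statistics."""
    return {"page": event["page"], "visits": STORE[event["page"]]}


if __name__ == "__main__":
    record_visit({"page": "/catalog"})
    record_visit({"page": "/catalog"})
    print(read_stats({"page": "/catalog"}))
```

Because the write and read paths are separate functions, the platform can scale the frequently called `record_visit` without running any extra copies of `read_stats`.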

Serverless functions must complete within a short period of time (timeout), which is set by the service provider. For example, for AWS Lambda the timeout is 15 minutes. This means that long-lived functions will have to be reworked to meet this requirement. This is where Serverless differs from other technologies popular today (containers and Platform as a Service).
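One possible way to rework a long-lived task is to process it in pieces and hand a cursor to the next invocation when the time budget runs out. The sketch below is an assumption about how this might be done, not a provider API: `process_batch`, its parameters, and the injectable `clock` are hypothetical names.

```python
import time
from typing import Callable, List, Optional, Tuple


def process_batch(
    items: List[int],
    start: int,
    budget_seconds: float,
    clock: Callable[[], float] = time.monotonic,
) -> Tuple[int, Optional[int]]:
    """Sum items from `start` until done or until the time budget runs out.

    Returns (partial_sum, next_index). next_index is None when all items
    were processed, otherwise it is the index the next invocation starts from.
    """
    deadline = clock() + budget_seconds
    total = 0
    for i in range(start, len(items)):
        if clock() >= deadline:
            return total, i  # out of time: pass the cursor on
        total += items[i]
    return total, None


if __name__ == "__main__":
    # A generous budget, so the whole list fits into one invocation.
    print(process_batch([1, 2, 3], start=0, budget_seconds=60.0))
```

The caller (for example, a queue or a scheduler) would re-invoke the function with the returned index until it receives `None`.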

We assign an event to each function. An event is a trigger for an action:

| Event | The action that the function performs |
| --- | --- |
| The product image was uploaded to the store | Compress image and upload to catalog |
| The physical store address has been updated in the database | Upload new location to maps |
| Customer pays for goods | Start payment processing |
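The event-to-function binding from the table can be sketched as a simple lookup. On a real platform this binding is configured on the provider side rather than in application code, so the `TRIGGERS` mapping, the event names, and the handler names below are all hypothetical.

```python
from typing import Callable, Dict


# Hypothetical handlers, one per row of the table.
def compress_image(event: dict) -> str:
    return f"compressed {event['file']} and added it to the catalog"


def update_map_location(event: dict) -> str:
    return f"uploaded new location {event['address']} to maps"


def start_payment(event: dict) -> str:
    return f"started payment processing for order {event['order_id']}"


# Assumed event names; a provider would define its own event types.
TRIGGERS: Dict[str, Callable[[dict], str]] = {
    "image_uploaded": compress_image,
    "store_address_updated": update_map_location,
    "order_paid": start_payment,
}


def dispatch(event: dict) -> str:
    """Route an event to the single function bound to its type."""
    handler = TRIGGERS[event["type"]]
    return handler(event)


if __name__ == "__main__":
    print(dispatch({"type": "image_uploaded", "file": "mug.png"}))
```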

The architecture is worked out, and the application is almost serverless. Next, we turn to the service provider.

From the provider

Serverless computing is usually offered by cloud service providers. They call it by different names: Azure Functions, AWS Lambda, Google Cloud Functions, IBM Cloud Functions.

We will use the service through the provider's console or personal account. Function code can be uploaded in one of the following ways:

- write code in built-in editors via the web console,

- upload an archive with the code,

- work with public or private git repositories.

Here we configure the events that call the function. Different providers may have different event sets.

On its infrastructure, the provider has built and automated a Function as a Service (FaaS) system:

- The function code is placed in storage on the provider side.

- When an event occurs, containers with a prepared environment are automatically deployed on a server. Each function instance has its own isolated container.

- The function is delivered from storage into the container, executed, and returns its result.

- As the number of parallel events grows, so does the number of containers. The system scales automatically. If users do not access the function, it stays inactive.

- The provider sets the idle time for containers: if no function is invoked in a container during this time, the container is destroyed.
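The lifecycle described above can be illustrated with a toy simulation. It is a deliberately simplified model built on assumptions, not a description of any provider's scheduler: `FaasPool`, `Container`, and their fields are hypothetical names, and time is passed in explicitly as plain numbers.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Container:
    busy_until: float  # time when the current invocation finishes
    last_used: float   # time when the container last finished work


@dataclass
class FaasPool:
    idle_timeout: float  # provider-defined idle time
    containers: List[Container] = field(default_factory=list)
    cold_starts: int = 0

    def invoke(self, now: float, duration: float) -> None:
        # Destroy containers that have been idle longer than the timeout.
        self.containers = [
            c for c in self.containers
            if c.busy_until > now or now - c.last_used <= self.idle_timeout
        ]
        # Reuse a free container if there is one (warm start).
        for c in self.containers:
            if c.busy_until <= now:
                c.busy_until = c.last_used = now + duration
                return
        # Otherwise deploy a new container (cold start).
        self.cold_starts += 1
        self.containers.append(Container(now + duration, now + duration))


if __name__ == "__main__":
    pool = FaasPool(idle_timeout=5)
    pool.invoke(now=0, duration=1)    # first event: new container
    pool.invoke(now=0, duration=1)    # parallel event: second container
    pool.invoke(now=2, duration=1)    # reuses a free container
    pool.invoke(now=100, duration=1)  # idle containers were destroyed
    print(len(pool.containers), pool.cold_starts)
```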

So we get Serverless out of the box. We will pay for the service according to the pay-as-you-go model and only for those functions that are used, and only for the time when they were used.

To introduce developers to the service, providers offer up to 12 months of free testing, but they limit the total compute time, the number of requests per month, the amount of money spent, or the capacity consumed.

The main advantage of working with a provider is that you do not have to worry about infrastructure (servers, virtual machines, containers). For its part, the provider can implement FaaS either with its own in-house developments or with open-source tools. We’ll talk about them next.

From open source

Over the past couple of years, the open-source community has been actively working on Serverless tools. In particular, the largest market players contribute to the development of serverless platforms:

- Google offers developers its open-source tool, Knative. IBM, Red Hat, Pivotal and SAP took part in its development;

- IBM worked on the Serverless platform OpenWhisk, which later became an Apache Software Foundation project;

- Microsoft partially opened the code of the Azure Functions platform.

Serverless frameworks are also under active development. Kubeless and Fission are deployed inside pre-prepared Kubernetes clusters, while OpenFaaS works with both Kubernetes and Docker Swarm. The framework acts as a kind of controller: on request, it prepares the runtime inside the cluster and then runs a function there.

Frameworks leave room to configure the tool to fit your needs. For example, in Kubeless the developer can set the timeout for function execution (the default value is 180 seconds). Fission, in an attempt to solve the cold start problem, offers to keep some of the containers running all the time (although this entails paying for idle resources). And OpenFaaS offers a set of triggers for every taste: HTTP, Kafka, Redis, MQTT, Cron, AWS SQS, NATS and others.

Instructions for getting started can be found in the official documentation of the frameworks. Working with them requires somewhat more skill than working with a provider: at a minimum, the ability to start a Kubernetes cluster through the CLI, and at most, the ability to bring in other open-source tools (for example, the Kafka message broker).

Regardless of how we work with Serverless - through a provider or using open-source, we get a number of advantages and disadvantages of the Serverless approach.

From the perspective of advantages and disadvantages

Serverless develops the ideas of container infrastructure and microservice approach, in which teams can work in multilingual mode, without being tied to one platform. Building a system is simplified, and fixing errors becomes easier. Microservice architecture allows you to add new functionality to the system much faster than in the case of a monolithic application.

Serverless reduces development time even further by letting the developer focus solely on the application’s business logic and coding. As a result, time to market is reduced.

As a bonus, we get automatic scaling to the load, and we pay only for the resources used and only at the time when they are used.

Like any technology, Serverless has disadvantages.

One such drawback is cold start time (up to 1 second on average for languages such as JavaScript, Python, Go, Java, Ruby).

On the one hand, cold start time depends on many variables: the language in which the function is written, the number of libraries, the amount of code, and communication with additional resources (such as databases or authentication servers). Because the developer controls these variables, they can shorten the start time. On the other hand, the developer cannot control the launch time of the container, which depends entirely on the provider.

A cold start can turn into a warm one when the function reuses the container launched by the previous event. This situation will occur in three cases:

- if customers often use the service and the number of calls to the function is growing;

- if the provider, platform or framework allows you to keep part of the containers running all the time;

- if the developer runs timer functions (say, every 3 minutes).
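From the developer's side, the difference between a cold and a warm start can be sketched as follows. The example assumes that module-level state survives between invocations in the same container, which is the reuse described above; `_get_client`, `handler`, and the simulated 50 ms initialization are hypothetical.

```python
import time

# Module-level state lives as long as the container does (assumption).
_client = None
_init_count = 0


def _get_client() -> dict:
    """Create the expensive resource once per container."""
    global _client, _init_count
    if _client is None:
        time.sleep(0.05)  # stands in for loading libraries or opening connections
        _client = {"connected": True}
        _init_count += 1
    return _client


def handler(event: dict) -> dict:
    """Function entry point: pays the initialization cost only on a cold start."""
    client = _get_client()
    return {"ok": client["connected"], "initializations": _init_count}


if __name__ == "__main__":
    print(handler({}))  # cold start: initializes the client
    print(handler({}))  # warm start: reuses the existing client
```

Keeping heavy initialization outside the handler body is one of the variables the developer controls; the container launch itself remains on the provider's side.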

For many applications, a cold start is not a problem. Here you need to consider the type and tasks of the service. A one-second delay at startup is not always critical for a business application, but it can be critical for medical services. In that case, the serverless approach is probably no longer suitable.

The next drawback of Serverless is the short lifetime of a function (the timeout within which the function must finish executing).

But if you have to work with long-lived tasks, you can use a hybrid architecture that combines Serverless with another technology.

Not all systems will be able to work under the Serverless scheme.

Some applications will still store data and state at runtime. Some architectures will remain monolithic, and some functions will be long-lived. However, like cloud technologies and then containers before it, Serverless is a technology with a great future.

In this vein, I would like to smoothly move on to the issue of applying the Serverless approach.

On the application side

In 2018, Serverless usage grew by a factor of one and a half. Among the companies that have already adopted the technology in their services are such market giants as Twitter, PayPal, Netflix, T-Mobile, and Coca-Cola. At the same time, you need to understand that Serverless is not a panacea, but a tool for solving a certain range of tasks:

- Reduce idle resources. There is no need to keep a virtual machine running constantly for services that are rarely accessed.

- Process data on the fly. Compress pictures, cut out the background, change the video encoding, work with IoT sensors, perform mathematical operations.

- Glue other services together. For example, connect a Git repository with internal tools, or a Slack chat bot with Jira and a calendar.

- Balance the load. Let's dwell on this in more detail.

Let's say there is a service that 50 people visit. It runs on a virtual machine with weak hardware. From time to time, the load on the service increases significantly, and the weak hardware cannot cope.

You can add a balancer to the system that distributes the load across, say, three virtual machines. At this stage, we cannot accurately predict the load, so we keep a certain amount of resources running “in reserve” and overpay for idle time.

In this situation, we can optimize the system with a hybrid approach: behind the load balancer we leave one virtual machine and add a link to a Serverless endpoint with functions. If the load exceeds the threshold, the balancer launches function instances that take over part of the request processing.
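The threshold rule of the hybrid scheme can be sketched in a few lines. This is a simplified model under stated assumptions: `route_requests` and `vm_capacity` are hypothetical names, and a real balancer would decide per request rather than per batch.

```python
def route_requests(request_count: int, vm_capacity: int) -> dict:
    """Send up to vm_capacity requests to the VM and the overflow to functions."""
    to_vm = min(request_count, vm_capacity)
    to_functions = request_count - to_vm
    return {"vm": to_vm, "functions": to_functions}


if __name__ == "__main__":
    print(route_requests(50, vm_capacity=100))   # normal load: the VM handles everything
    print(route_requests(250, vm_capacity=100))  # peak load: overflow goes to functions
```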

Thus, Serverless fits cases where a large number of requests must be processed intensively, but not too often. In this case, running several functions for 15 minutes is more profitable than keeping a virtual machine or server running all the time.
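The comparison can be made concrete with a small calculation. All prices below are made-up placeholders chosen only to show the arithmetic, and the billing model (per unit of memory-time plus a per-invocation fee) is an assumption; check your provider's actual pricing before drawing conclusions.

```python
def vm_cost(hours: float, price_per_hour: float) -> float:
    """Cost of keeping a virtual machine running for the given number of hours."""
    return hours * price_per_hour


def faas_cost(
    invocations: int,
    seconds_each: float,
    memory_gb: float,
    price_per_gb_second: float,
    price_per_invocation: float,
) -> float:
    """Pay-as-you-go cost: memory-time actually used plus a fee per call."""
    compute = invocations * seconds_each * memory_gb * price_per_gb_second
    return compute + invocations * price_per_invocation


if __name__ == "__main__":
    # Hypothetical prices, for illustration only.
    monthly_vm = vm_cost(hours=720, price_per_hour=0.05)
    monthly_faas = faas_cost(
        invocations=1_000_000,
        seconds_each=0.2,
        memory_gb=0.5,
        price_per_gb_second=0.00002,
        price_per_invocation=0.0000002,
    )
    print(f"VM: {monthly_vm:.2f}, functions: {monthly_faas:.2f}")
```

With rare but intensive bursts the function bill stays proportional to the work done, while the virtual machine is billed for every hour regardless of load.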

With all the advantages of serverless computing, before implementing it, you should first of all evaluate the application logic and understand what tasks Serverless can solve in a particular case.

Serverless and Selectel

At Selectel, we have already made working with Kubernetes in a virtual private cloud easier through our control panel. Now we are building our own FaaS platform. We want developers to be able to solve their problems with Serverless through a convenient, flexible interface.

Want to follow the development process of the new FaaS platform? Subscribe to the Selectel “Cloud Functions” newsletter on the service page. We will talk about the development process and announce the closed release of Cloud Functions.

If you have any ideas what an ideal FaaS platform should be and how you want to use Serverless in your projects, share them in the comments. We will take your wishes into account when developing the platform.
