100GbE: luxury or immediate need?

IEEE P802.3ba, the standard for transmitting data over 100-gigabit Ethernet (100GbE) links, was developed between 2007 and 2010 [3], but came into wide use only in 2018 [5]. Why in 2018 and not earlier? And why all at once, en masse? There are at least five reasons...

IEEE P802.3ba was developed primarily to meet the needs of data centers and of Internet exchange points (where independent operators exchange traffic), to ensure the smooth operation of resource-intensive web services, such as portals with large amounts of video content (for example, YouTube), and to support high-performance computing. [3] Ordinary Internet users also drive bandwidth requirements: many have digital cameras and want to share what they shoot over the Internet. Thus, the volume of content circulating on the Internet keeps growing, at both the professional and the consumer level. In all these cases, when data moves from one domain to another, the aggregate bandwidth of key network nodes has long exceeded what 10GbE ports can provide. [1] This is why a new standard, 100GbE, emerged.

Large data centers and cloud service providers are already actively using 100GbE and plan to move gradually to 200GbE and 400GbE within a couple of years. At the same time, they are already eyeing speeds above a terabit. [6] That said, some large providers switched to 100GbE only last year (for example, Microsoft Azure). Data centers that perform high-performance computing for financial services, government platforms, oil and gas platforms, and utilities have also begun switching to 100GbE. [5]

In corporate data centers, bandwidth demand is somewhat lower: only recently has 10GbE become an urgent need there rather than a luxury. However, because traffic consumption is growing ever faster, it is doubtful that 10GbE will last in corporate data centers for another 10 or even 5 years. Instead, we will see a quick transition to 25GbE and an even quicker one to 100GbE. [6] The reason, as Intel analysts note, is that traffic inside the data center grows by 25% annually. [5]
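To make the 25% figure concrete, here is a minimal sketch of the compound-growth arithmetic. It assumes the growth rate stays constant from year to year, which the source does not claim; the function names are illustrative only.

```python
import math

# Annual growth of intra-data-center traffic quoted in the text [5].
# Assumption: the rate is constant and compounds yearly.
ANNUAL_GROWTH = 0.25


def growth_factor(years: float, rate: float = ANNUAL_GROWTH) -> float:
    """Factor by which traffic multiplies after `years` of compound growth."""
    return (1.0 + rate) ** years


def years_to_multiply(factor: float, rate: float = ANNUAL_GROWTH) -> float:
    """Years of compound growth needed for traffic to grow by `factor`."""
    return math.log(factor) / math.log(1.0 + rate)


if __name__ == "__main__":
    for years in (5, 10):
        print(f"after {years:2d} years: x{growth_factor(years):.2f}")
    print(f"doubling time: {years_to_multiply(2):.1f} years")
    print(f"tenfold growth (10GbE -> 100GbE scale): {years_to_multiply(10):.1f} years")
```

Under that assumption, traffic roughly triples in 5 years and grows about ninefold in 10, which is consistent with the doubt that a link sized for today's load will last a decade.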

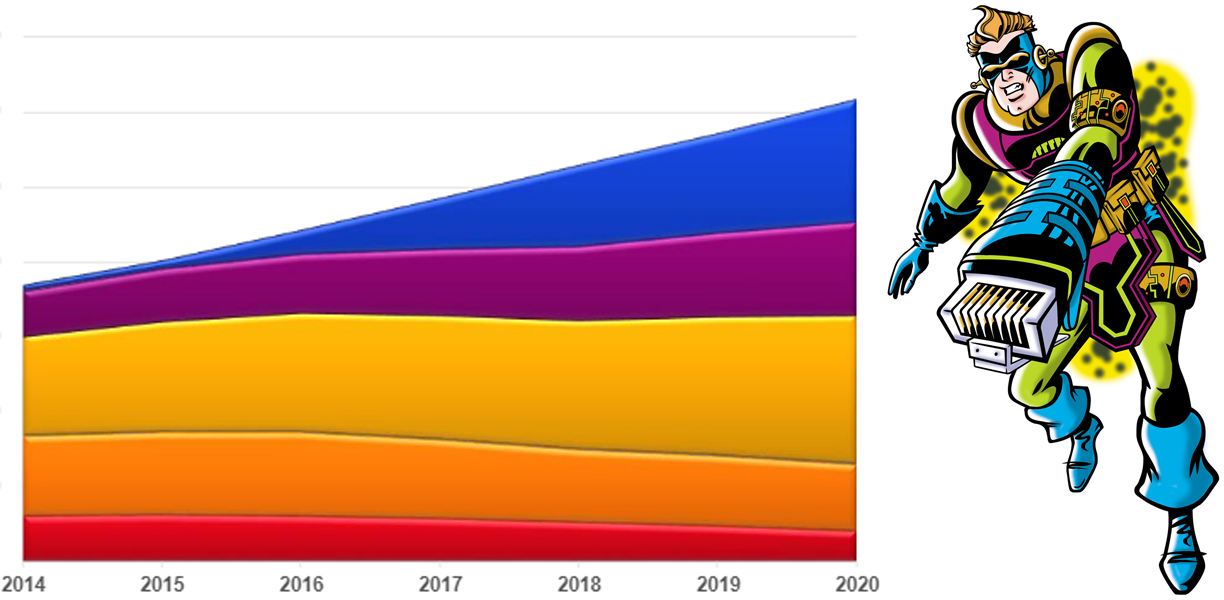

Analysts at Dell and Hewlett Packard state [4] that 2018 is the year of 100GbE for data centers. By August 2018, shipments of 100GbE equipment were already double those for all of 2017. And the pace of shipments keeps growing, as data centers have begun moving away from 40GbE en masse. By 2022, 19.4 million 100GbE ports are expected to ship annually (for comparison, the figure for 2017 was 4.6 million). [4] As for spending, $7 billion was spent on 100GbE ports in 2017, and forecasts put the 2020 figure at about $20 billion (see Fig. 1). [1]

Figure 1. Statistics and forecasts of demand for network equipment
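The shipment and spending figures quoted above can be turned into an implied annual growth rate. The sketch below assumes smooth compound growth between the two endpoints, which is a simplification of ours and not something the cited forecasts state; `cagr` is an illustrative helper name.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by two endpoint values."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Endpoints taken from the text; the smooth-growth model is an assumption.
ports_rate = cagr(4.6, 19.4, 2022 - 2017)   # million 100GbE ports per year [4]
spend_rate = cagr(7.0, 20.0, 2020 - 2017)   # billion USD spent on 100GbE ports [1]

print(f"implied port shipment growth: {ports_rate:.1%} per year")
print(f"implied spending growth:      {spend_rate:.1%} per year")
```

Both rates come out well above the 25% annual traffic growth mentioned earlier, at roughly a third per year for ports and somewhat more for spending. Note that the two series come from different sources and cover different periods, so they are not directly comparable.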

Why now? 100GbE is not exactly a new technology, so why is there such a stir around it right now?

1) Because the technology has matured and become cheaper. In 2018 we crossed the line at which using platforms with 100-gigabit ports in the data center became more cost-effective than “stacking” several 10-gigabit platforms. Example: the Ciena 5170 (see Figure 2) is a compact platform with an aggregate throughput of 800 Gbit/s (4x100GbE, 40x10GbE). If the required throughput has to be built from many 10-gigabit ports instead, the cost of the additional hardware, rack space, power consumption, maintenance, spare parts, and cooling adds up to a tidy sum. [1] For example, Hewlett Packard specialists, analyzing the potential benefits of moving from 10GbE to 100GbE, arrived at the following figures: higher performance (by 56%), lower total costs (by 27%), lower power consumption (by 31%), and simpler cabling (by 38%). [5]

Figure 2. Ciena 5170: an example platform with 100 gigabit ports

2) Because Juniper and Cisco have finally created their own ASICs for 100GbE switches. [5] This is eloquent confirmation that 100GbE technology has truly matured. Designing an ASIC is cost-effective only when, first, the logic implemented in it will not need to change in the foreseeable future and, second, a large number of identical chips will be manufactured. Juniper and Cisco would not produce these ASICs unless they were confident in the maturity of 100GbE.

3) Because Broadcom, Cavium, and Mellanox Technologies have started turning out processors with 100GbE support, and these processors are already used in switches from manufacturers such as Dell, Hewlett Packard, Huawei Technologies, Lenovo Group, and others. [5]

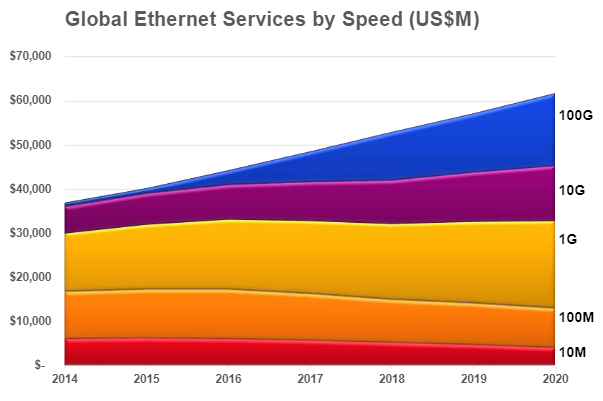

4) Because servers in server racks are increasingly equipped with the latest Intel network adapters (see Figure 3) with two 25-gigabit ports (XXV710), and sometimes even with converged network adapters with two 40-gigabit ports (XL710).

Figure 3. Latest Intel Network Adapters: XXV710 and XL710

5) Because 100GbE equipment is backward compatible, which simplifies deployment: you can reuse cabling that is already in place (just connect a new transceiver to it).
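The port arithmetic behind the first reason (the Ciena 5170 example) can be sketched as follows. This is only a back-of-the-envelope calculation on nominal port rates: it ignores oversubscription, protocol overhead, and redundancy, and the helper names are hypothetical.

```python
import math
from typing import Mapping


def aggregate_gbps(ports: Mapping[int, int]) -> int:
    """Sum of nominal port rates; `ports` maps port speed (Gbit/s) to port count."""
    return sum(speed * count for speed, count in ports.items())


def ports_needed(target_gbps: float, port_gbps: float) -> int:
    """Smallest number of ports of one speed whose nominal rates reach the target."""
    return math.ceil(target_gbps / port_gbps)


# Port mix quoted in the text for the Ciena 5170: 4x100GbE plus 40x10GbE.
ciena_5170 = {100: 4, 10: 40}
total = aggregate_gbps(ciena_5170)

print(f"aggregate nominal rate: {total} Gbit/s")
print(f"10GbE ports for the same total:  {ports_needed(total, 10)}")
print(f"100GbE ports for the same total: {ports_needed(total, 100)}")
```

Each 100GbE port stands in for ten 10GbE ports, along with their cables, transceivers, and switch capacity, which is where the savings in hardware, space, power, and cooling described in the text come from.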

In addition, the availability of 100GbE prepares us for newer technologies, such as NVMe over Fabrics (for example, with the Samsung Evo Pro 256 GB NVMe PCIe SSD; see Fig. 4) [8, 10], Storage Area Networks (SAN) and “Software Defined Storage” (see Fig. 5) [7], and RDMA [11], which cannot reach their full potential without 100GbE.

Figure 4. Samsung Evo Pro 256 GB NVMe PCIe SSD

Figure 5. “Storage Area Network” (SAN) / “Software Defined Storage”

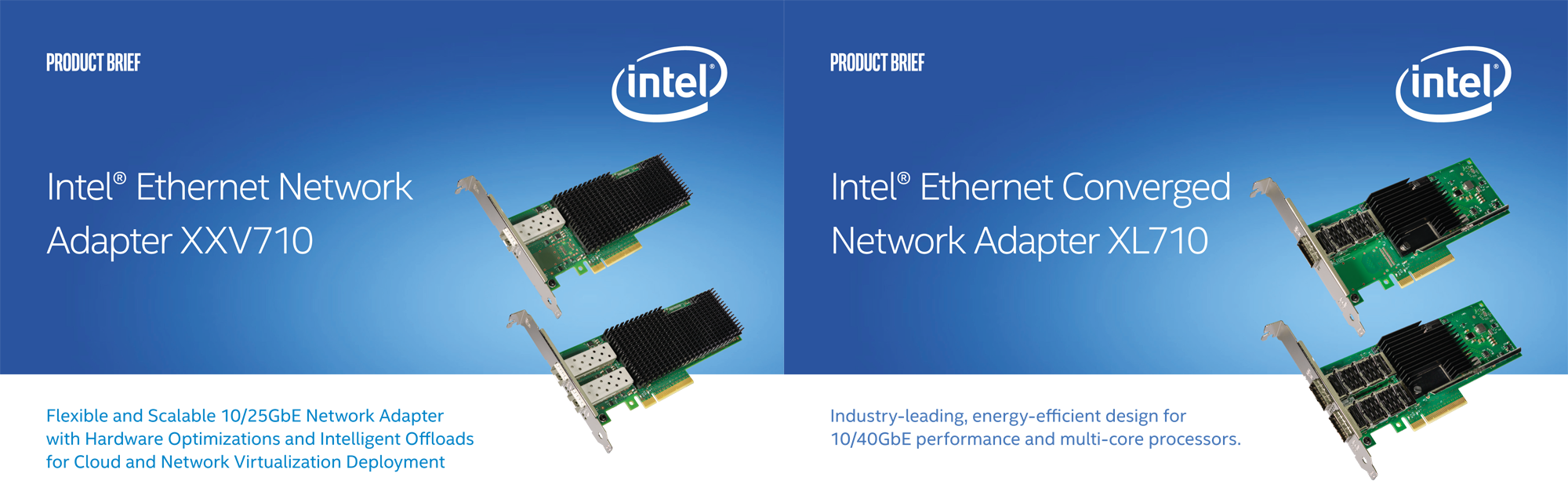

Finally, as an exotic example of the practical value of 100GbE and related high-speed technologies, consider the Cambridge University scientific cloud (see Fig. 6), which is built on 100GbE (Spectrum SN2700 Ethernet switches) in order, among other things, to ensure efficient operation of the NexentaEdge SDS distributed disk storage, which can easily overload a 10/40GbE network. [2] Such high-performance scientific clouds are deployed to solve a wide variety of applied scientific problems [9, 12]. For example, medical researchers use such clouds to decode the human genome, and 100GbE links are used to transfer data between university research groups.

Figure 6. Fragment of a scientific cloud at Cambridge University

1. John Hawkins. 100GbE: Closer to the Edge, Closer to Reality // 2017.

2. Amit Katz. 100GbE Switches - Have You Done The Math? // 2016.

3. Margaret Rouse. 100 Gigabit Ethernet (100GbE).

4. David Graves. Dell EMC Doubles Down on 100 Gigabit Ethernet for the Open, Modern Data Center // 2018.

5. Mary Branscombe. The Year of 100GbE in Data Center Networks // 2018.

6. Jarred Baker. Moving Faster in the Enterprise Data Center // 2017.

7. Tom Clark. Designing Storage Area Networks: A Practical Reference for Implementing Fibre Channel and IP SANs. 2003. 572 p.

8. James O'Reilly. Network Storage: Tools and Technologies for Storing Your Company Data // 2017. 280 p.

9. James Sullivan. Student cluster competition 2017, Team University of Texas at Austin / Texas State University: Reproducing vectorization of the Tersoff multi-body potential on the Intel Skylake and NVIDIA V100 architectures // Parallel Computing. v. 79, 2018. pp. 30-35.

10. Manolis Katevenis. The next Generation of Exascale-class Systems: the ExaNeSt Project // Microprocessors and Microsystems. v. 61, 2018. pp. 58-71.

11. Hari Subramoni. RDMA over Ethernet: A Preliminary Study // Proceedings of the Workshop on High Performance Interconnects for Distributed Computing. 2009.

12. Chris Broekema. Energy-Efficient Data Transfers in Radio Astronomy with Software UDP RDMA // Future Generation Computer Systems. v. 79, 2018. pp. 215-224.

P.S. This article was originally published in System Administrator.
