Rendering Features in Metro: Exodus with Ray Tracing

- Translation

Foreword

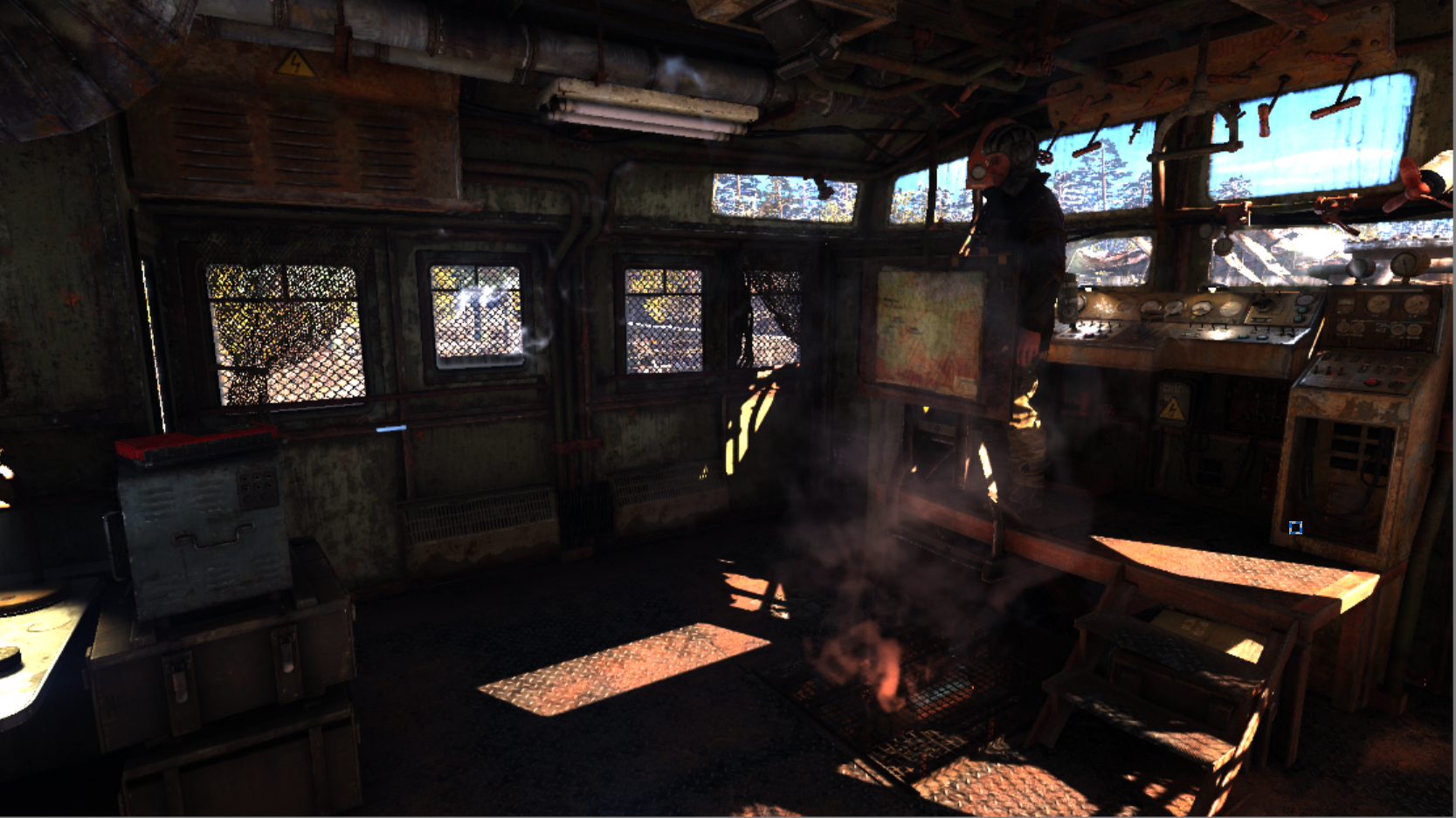

After the latest game in the Metro series was released, I spent several hours studying how it works internally and decided to share what might be interesting from a technical point of view. I will not do a detailed analysis or study the disassembled shader code, but I will show the high-level decisions the developers made when building the game.

So far, the developers have not talked publicly about the rendering techniques used in the game. The only official source of information is the GDC talk, which is not available anywhere else online. That is a pity, because the game runs on a very interesting proprietary engine that has evolved from the previous games in the Metro series. It is also one of the first games to use DXR.

Note: this article is not a complete description, and I will return to it if I find something worth adding. I may have missed something, because some aspects appear only in later stages of the game, or I may simply have overlooked some details.

First steps

It took me several days to find a toolset capable of working with this game. After testing several versions of RenderDoc and PIX, I settled on Nvidia NSight for studying the ray tracing results. I originally wanted to study the rendering without ray tracing, but NSight let me explore the details of this feature too, so I decided to leave it on. For the rest of the rendering, PIX is a good fit. Screenshots were taken using both applications.

NSight has one drawback - it does not support saving the capture to a file, so I could not return to the frames I was studying.

At the very beginning of my work, I also ran into another problem that had nothing to do with frame debugging applications: the ray tracing features required the latest Windows update, but the game allowed them to be enabled in the options without the update installed. In that case, enabling them caused the game to crash at startup. GeForce Experience also said nothing about needing the correct version of Windows to enable these features. This problem needs to be addressed on both sides.

For the sake of completeness, I made the captures with the game running at maximum settings, but without DLSS.

Frame analysis

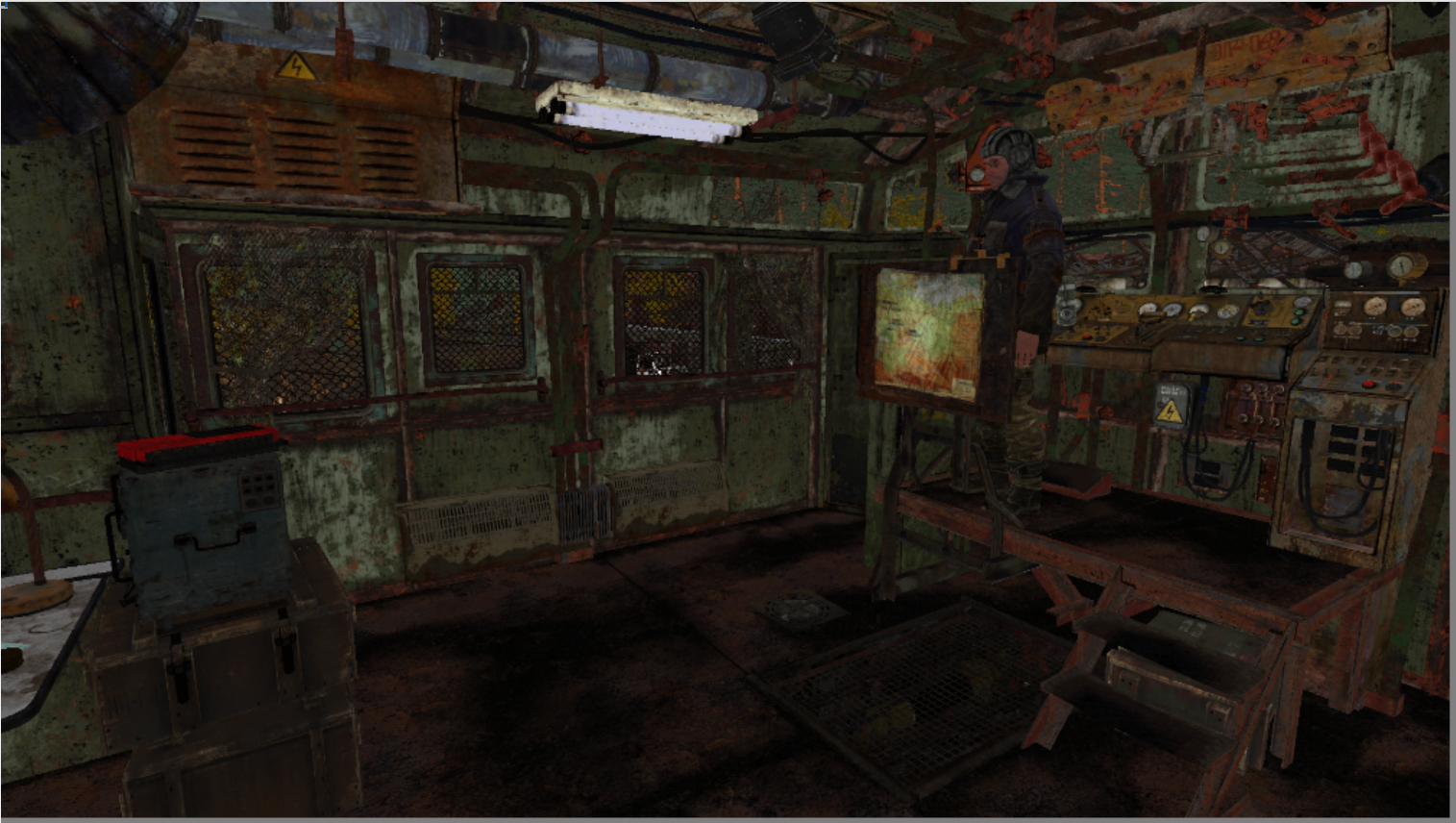

Finished frame

A brief rendering analysis shows a fairly standard set of features, with the exception of ray-traced global illumination (raytraced GI).

Before the frame is rendered, the previous frame is downsampled in the compute queue and its average luminance is calculated.
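As an illustration of the idea, average luminance can be obtained by repeatedly halving the image until one texel remains. This is only a minimal sketch: the 2x2 box filter, the Rec. 709 luma weights, the square power-of-two input, and all function names are my assumptions, not something read from the game's shaders.

```python
def luminance(rgb):
    """Rec. 709 luma of a linear RGB triple (assumed weighting)."""
    r, g, b = rgb
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def downsample_2x(img):
    """Average each 2x2 block of a 2D list of floats."""
    h, w = len(img), len(img[0])
    return [
        [
            (img[y][x] + img[y][x + 1] + img[y + 1][x] + img[y + 1][x + 1]) / 4.0
            for x in range(0, w, 2)
        ]
        for y in range(0, h, 2)
    ]


def average_luminance(frame):
    """Reduce a square, power-of-two frame of RGB triples to one luminance value."""
    lum = [[luminance(p) for p in row] for row in frame]
    while len(lum) > 1:
        lum = downsample_2x(lum)
    return lum[0][0]


# Hypothetical 2x2 frame: two white texels and two black ones.
frame = [
    [(1.0, 1.0, 1.0), (0.0, 0.0, 0.0)],
    [(0.0, 0.0, 0.0), (1.0, 1.0, 1.0)],
]
avg = average_luminance(frame)
```

Engines often average the logarithm of luminance instead, so that a few very bright texels do not dominate the exposure; the plain mean is used here only to keep the sketch short.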

The graphics queue begins by rendering distortion particles (droplets on the camera), which are applied at the post-processing stage. Then a quick depth pre-pass fills part of the depth buffer ahead of the GBuffer pass; it looks like it renders only terrain.

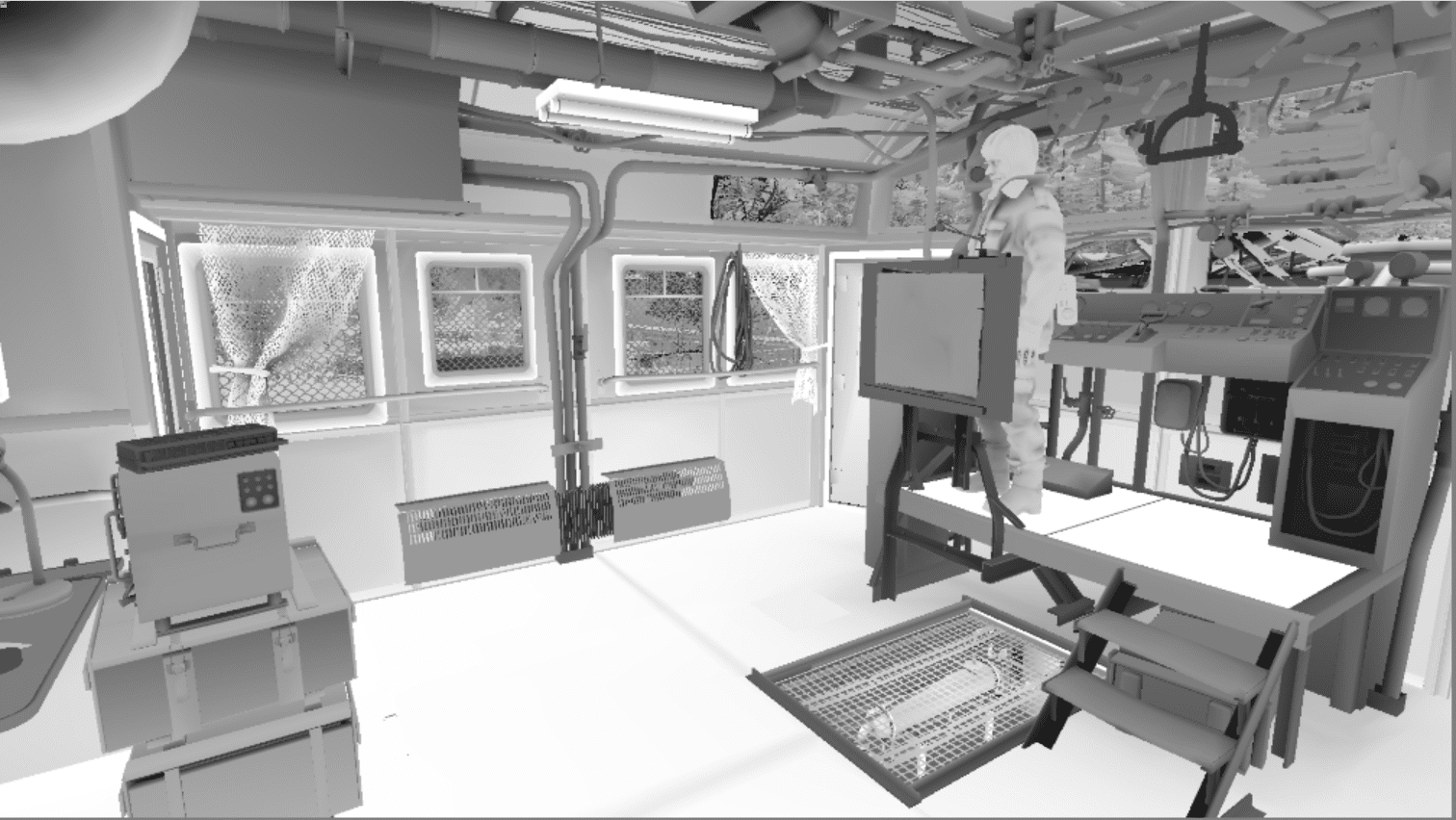

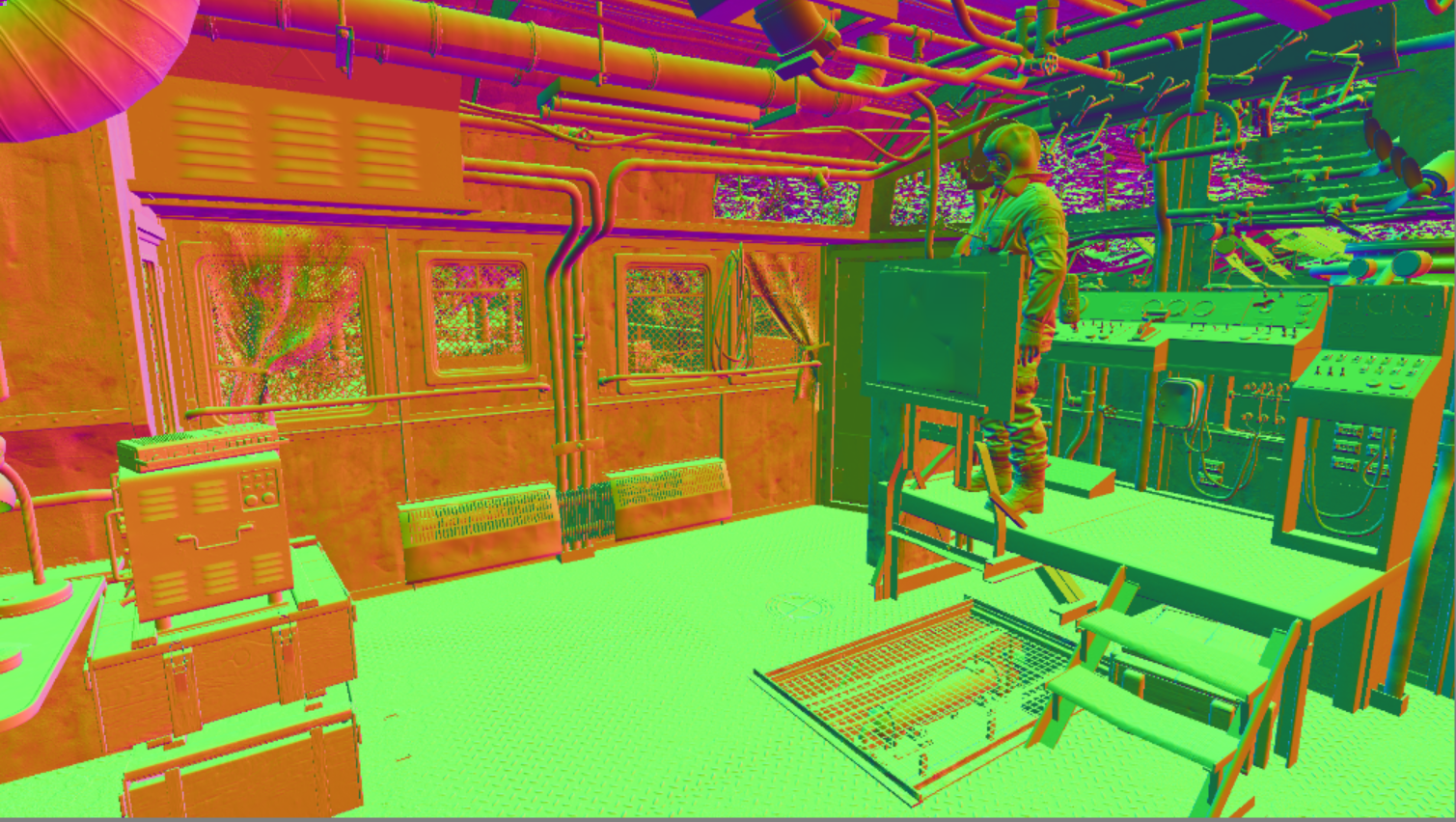

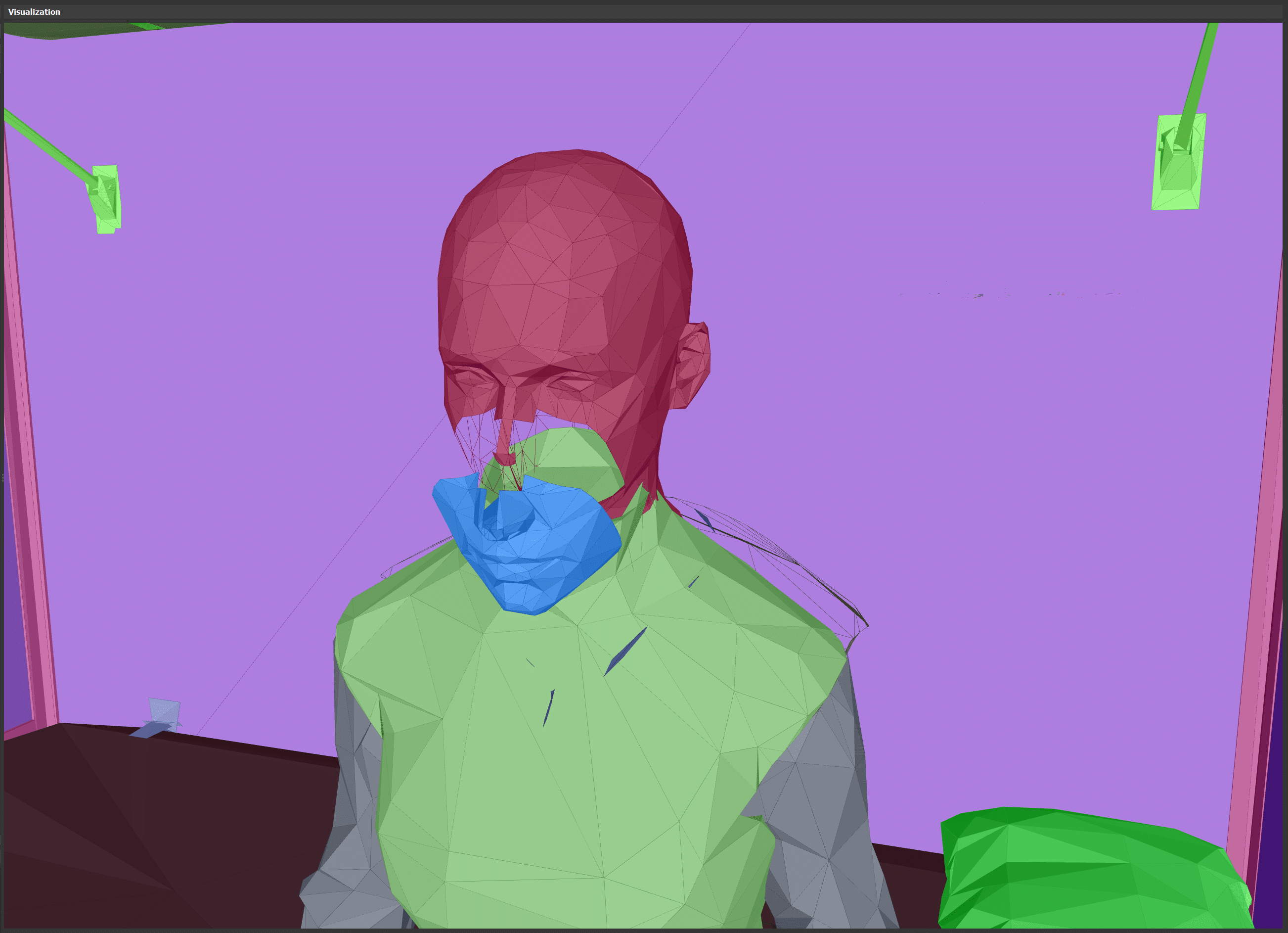

The GBuffer pass fills 4 render targets with the layout listed below, and also finishes filling the depth buffer.

1. Target in RGBA8 format with albedo and, possibly, ambient occlusion in the alpha channel; on some surfaces it looks very dark.

2. Target in RGB10A2 format with normals and, possibly, a subsurface scattering mask in the alpha channel.

3. Target in RGBA8 format with other material parameters, probably metalness and roughness in the alpha channel. Curiously, the RGB channels in this case contain exactly the same data.

4. Target in RG16F format with 2D motion vectors.
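To make the second target's format concrete, here is a minimal sketch of packing a normal and a mask into a 32-bit RGB10A2 word. It assumes the normal is remapped from [-1, 1] to [0, 1] per component and that the 2-bit alpha holds the subsurface mask; the game's actual encoding is unknown, and all function names are hypothetical.

```python
def _quantize(value, bits):
    """Clamp value to [0, 1] and quantize it to an unsigned integer of `bits` bits."""
    max_int = (1 << bits) - 1
    return int(round(min(max(value, 0.0), 1.0) * max_int))


def pack_rgb10a2(r, g, b, a):
    """Pack four [0, 1] floats: R in bits 0-9, G in 10-19, B in 20-29, A in 30-31."""
    return (
        _quantize(r, 10)
        | (_quantize(g, 10) << 10)
        | (_quantize(b, 10) << 20)
        | (_quantize(a, 2) << 30)
    )


def unpack_rgb10a2(word):
    """Inverse of pack_rgb10a2, returning floats in [0, 1]."""
    return (
        (word & 0x3FF) / 1023.0,
        ((word >> 10) & 0x3FF) / 1023.0,
        ((word >> 20) & 0x3FF) / 1023.0,
        ((word >> 30) & 0x3) / 3.0,
    )


def encode_normal(normal, mask):
    """Assumed encoding: n * 0.5 + 0.5 in RGB, mask in the 2-bit alpha."""
    x, y, z = normal
    return pack_rgb10a2(x * 0.5 + 0.5, y * 0.5 + 0.5, z * 0.5 + 0.5, mask)


def decode_normal(word):
    r, g, b, a = unpack_rgb10a2(word)
    return (r * 2.0 - 1.0, g * 2.0 - 1.0, b * 2.0 - 1.0), a


word = encode_normal((0.0, 0.0, 1.0), 1.0)
normal, mask = decode_normal(word)
```

With 10 bits per component, the round trip loses at most about one part in a thousand per axis, which is why this format is a common choice for normals; the 2-bit alpha leaves room for only four mask values.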

After the depth buffer is completely filled, a linear depth buffer is built and downsampled. All this is done in the compute queue. In the same queue, a buffer is filled with something that looks like directional lighting without shadows.
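For readers unfamiliar with the linearization step, the following sketch shows the usual conversion from a hardware depth value to view-space distance. It assumes a standard perspective projection with depth in [0, 1] and no reversed-Z; the near and far plane values are made up for the example and do not come from the game.

```python
def linearize_depth(d, near, far):
    """Convert a [0, 1] depth-buffer value to view-space distance.

    Assumes a conventional (non-reversed) perspective projection.
    """
    return (near * far) / (far - d * (far - near))


NEAR, FAR = 0.1, 1000.0  # hypothetical clip planes

at_near = linearize_depth(0.0, NEAR, FAR)
at_mid = linearize_depth(0.5, NEAR, FAR)
at_far = linearize_depth(1.0, NEAR, FAR)
```

Note how a stored depth of 0.5 still maps to a distance very close to the near plane: hardware depth is strongly non-linear, which is why passes such as ambient occlusion usually prefer a linear depth buffer.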

In the graphics queue, the GPU traces the rays of global illumination, but I will talk more about this below.

The compute queue computes ambient occlusion, reflections, and something that looks like edge detection.

In the graphics queue, a four-cascade shadow map is rendered into a 32-bit depth map of size 6k * 6k. More on this below. After the directional shadow map is complete, the third cascade is, for unknown reasons, downsampled to 768 * 768.
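To put these sizes in perspective, here is a quick back-of-the-envelope calculation of the memory involved. It assumes that "6k" means 6144 texels per side and that the format has no padding or compression; both are my assumptions.

```python
def depth_map_bytes(size, bits_per_texel=32):
    """Memory footprint of a square depth map, ignoring padding and compression."""
    return size * size * bits_per_texel // 8


MIB = 1024 * 1024
SHADOW_MAP_SIZE = 6 * 1024  # assumption: "6k" read as 6144

shadow_map_mib = depth_map_bytes(SHADOW_MAP_SIZE) / MIB
small_cascade_mib = depth_map_bytes(768) / MIB
```

Under these assumptions the full-size map takes 144 MiB, while the 768 * 768 copy of the third cascade takes only 2.25 MiB.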

In the middle of the shadow rendering process, there is a curious moment: the impostor atlas is updated with some objects before the shadows of local lights are rendered (what impostors are can be read here). Both the impostor buffers and the local light shadow buffers are also 6k * 6k textures.

After all the shadows are done, the lighting calculation begins. This part of the rendering is rather hard to follow, because there are many draw calls doing something mysterious, so it requires additional study.

Rendering of the scene ends with forward-lit objects (eyes, particles). Visual effects are rendered into a half-resolution buffer, after which they are composited with the opaque objects using upscaling.

The final picture is produced by tone mapping and bloom (downsampling and then upsampling the tone-mapped frame). Finally, the UI is rendered into a separate buffer and, together with the bloom, composited on top of the scene.

I did not find the part where anti-aliasing is performed, so I will leave it for later.

Ray-traced global illumination

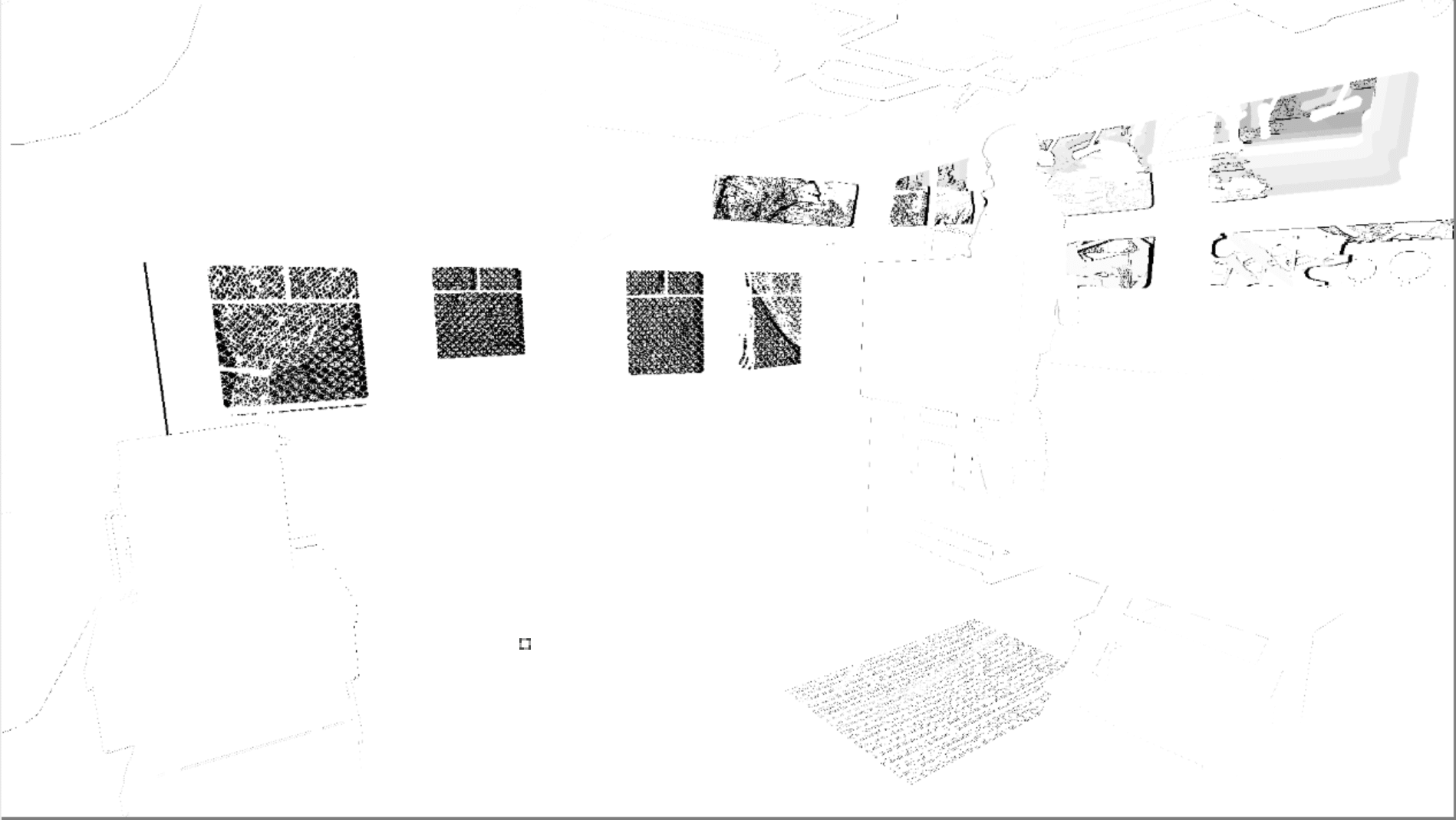

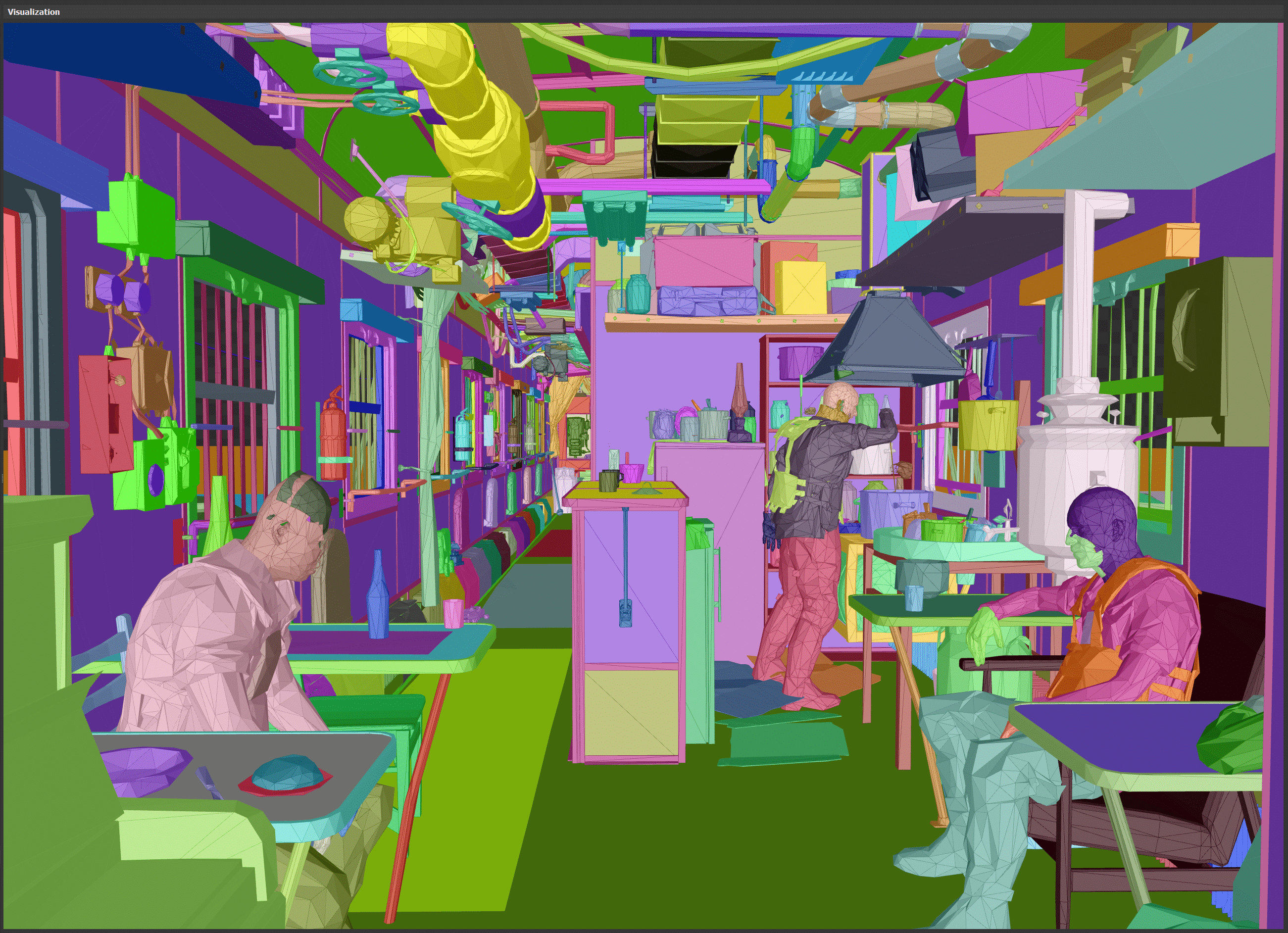

Some notes on the ray-traced global illumination. The acceleration structure covers a large area of the game world, probably several hundred meters, while maintaining very high detail everywhere. It seems to be streamed in somehow. The scene in the acceleration structure does not match the rasterized scene; for example, the buildings in the image below are not visible in the rasterized view.

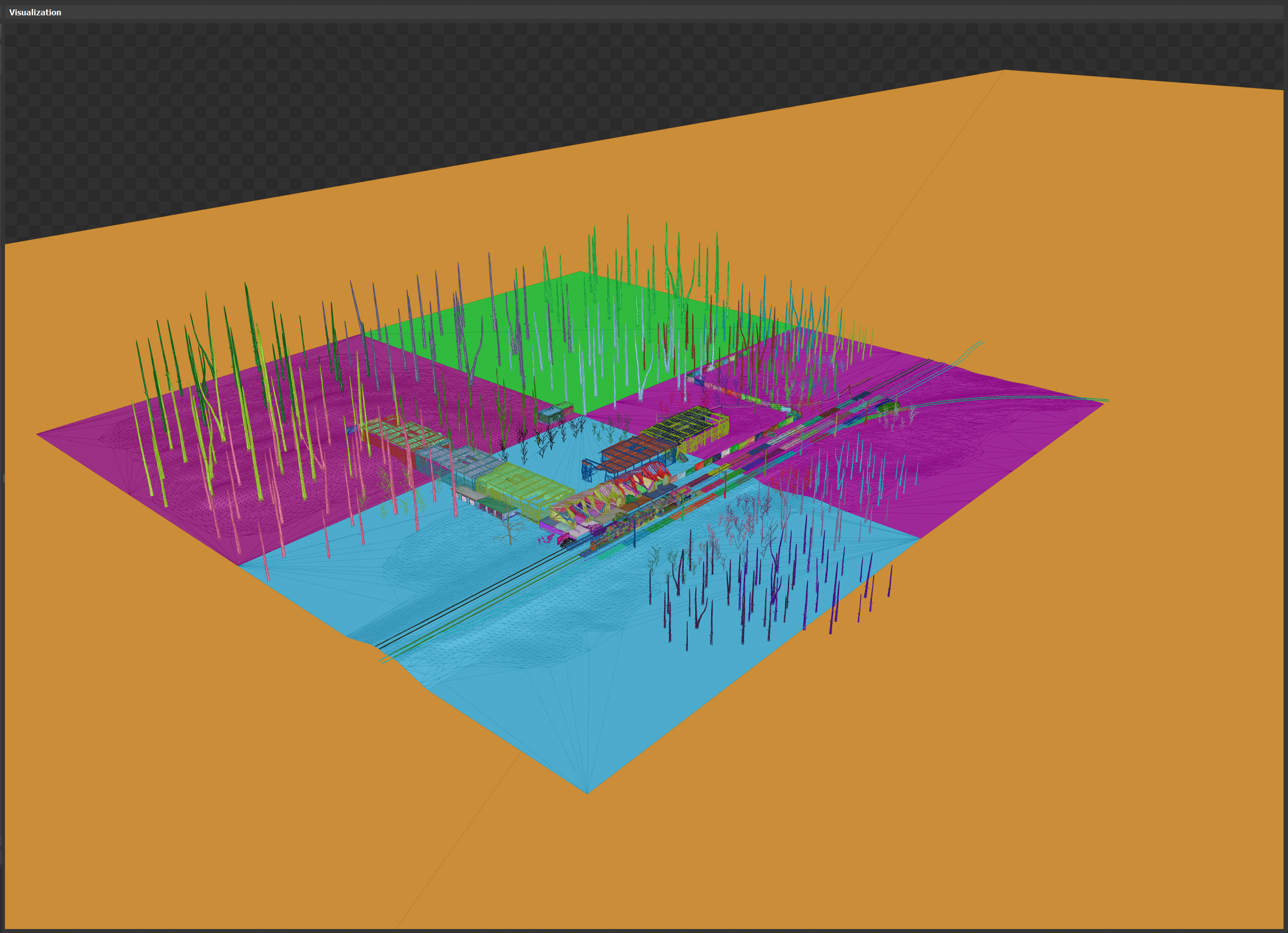

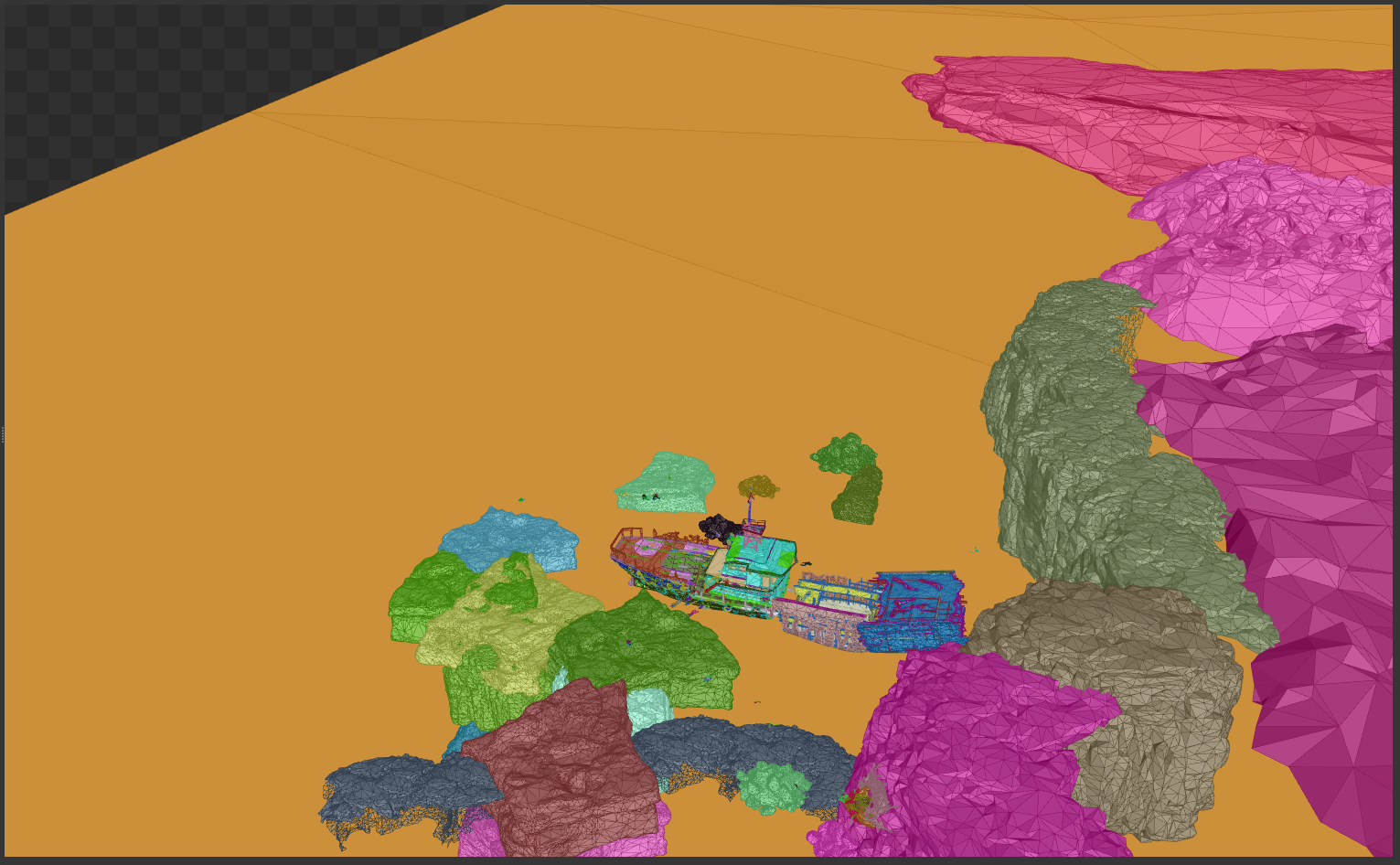

Top view

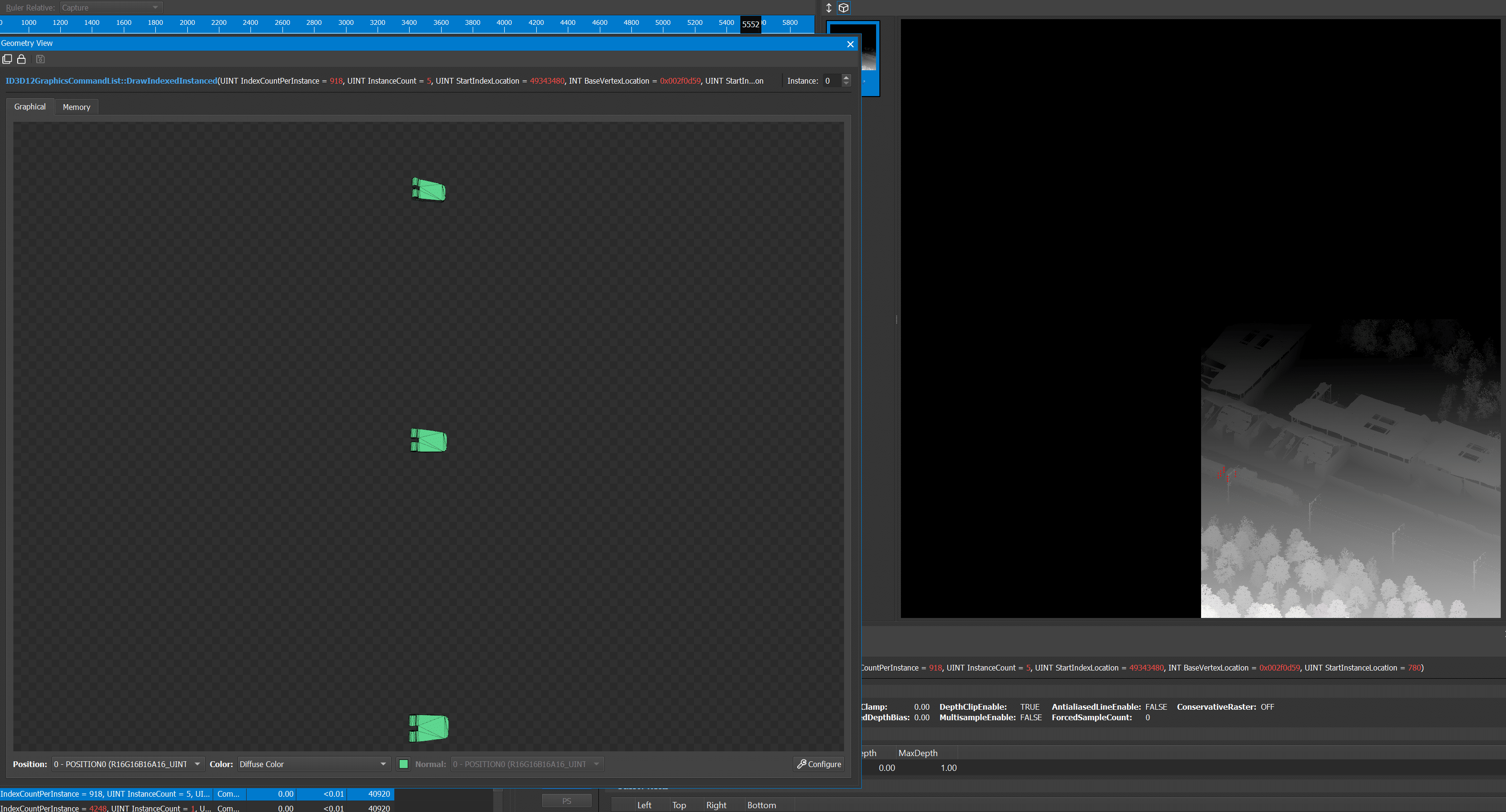

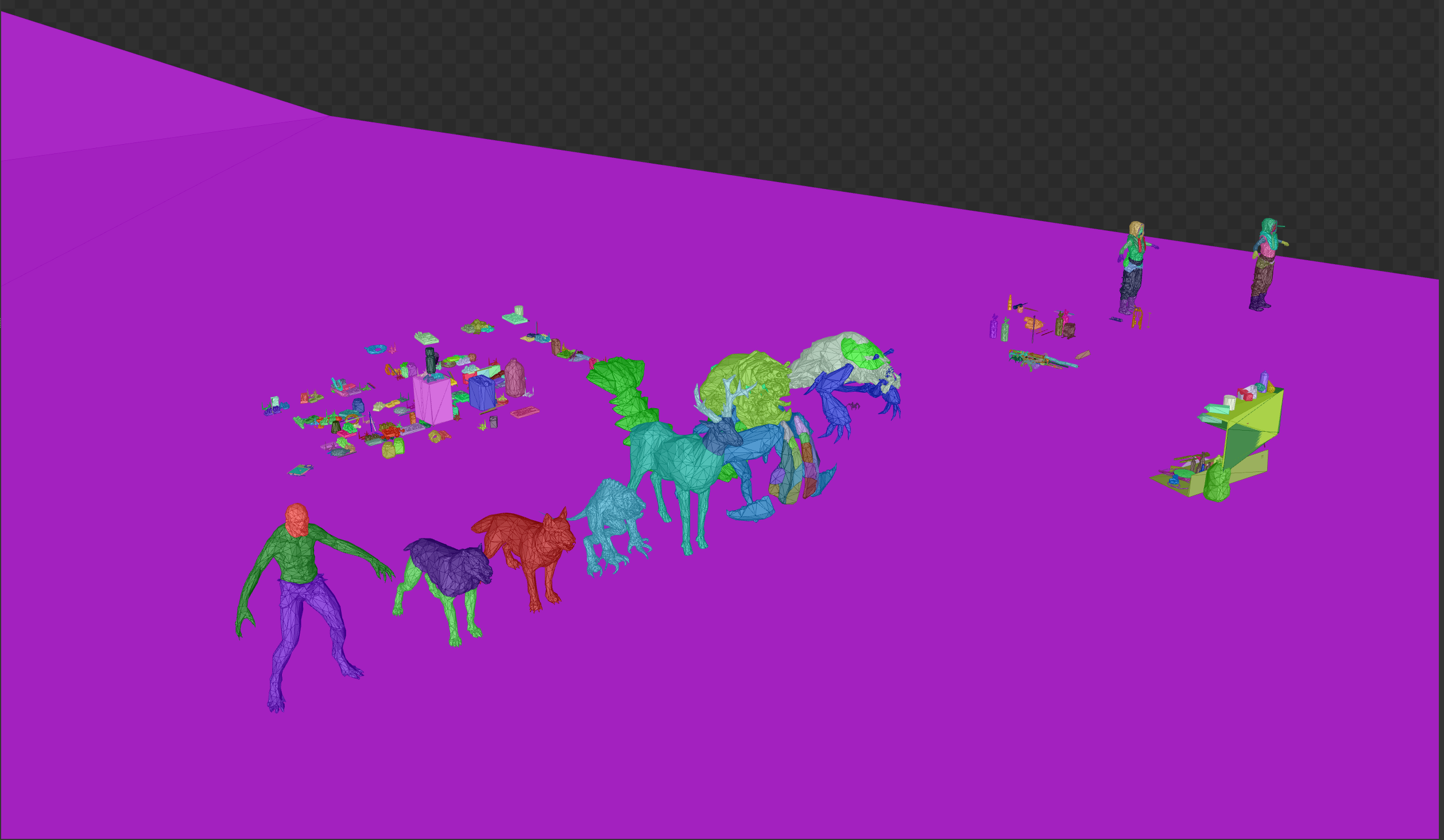

Here we can see four tiles surrounding the player’s position. Also apparent is the absence of alpha-tested geometry. Trees have trunks, but there is no foliage, no grass, no bushes.

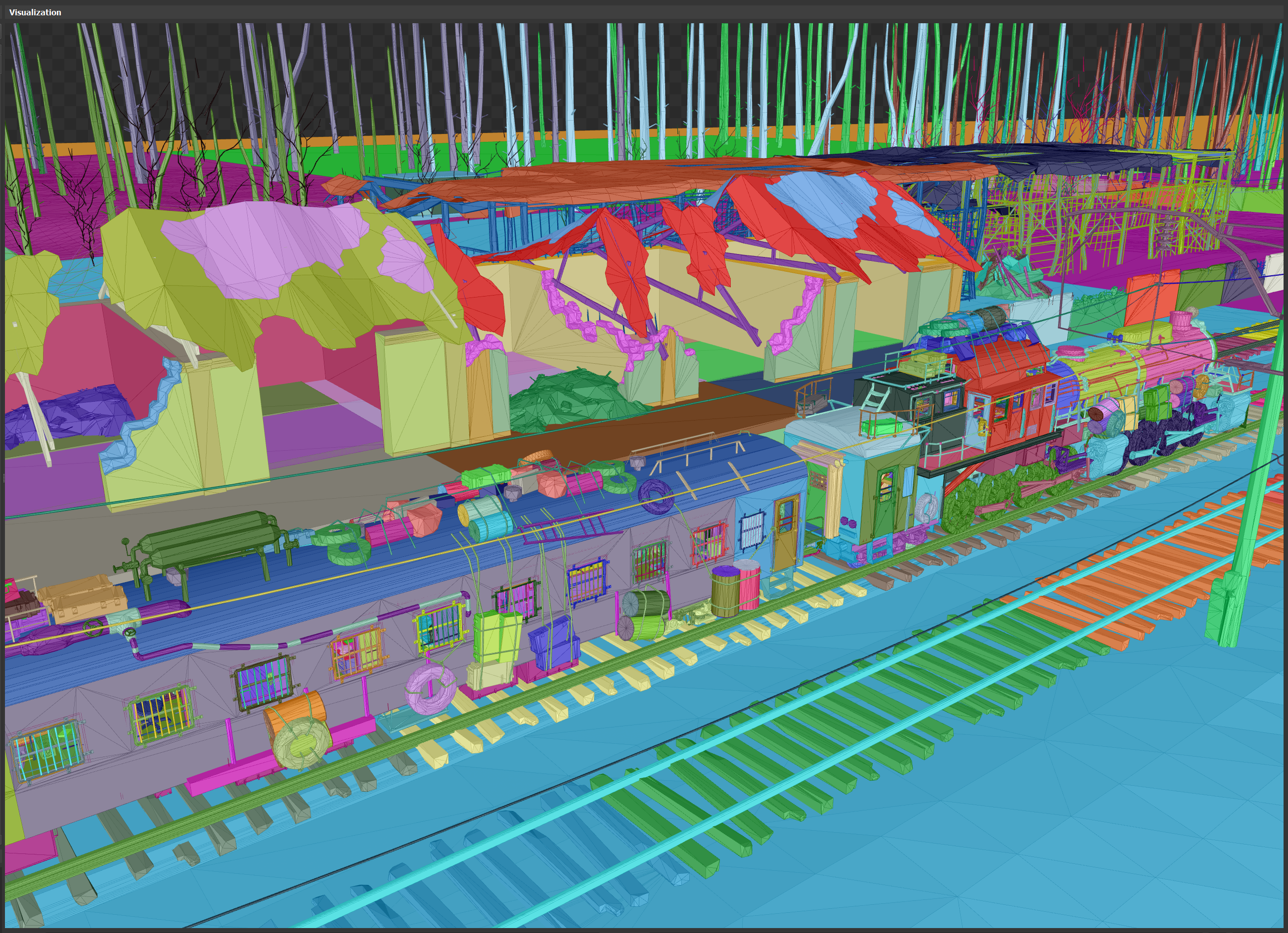

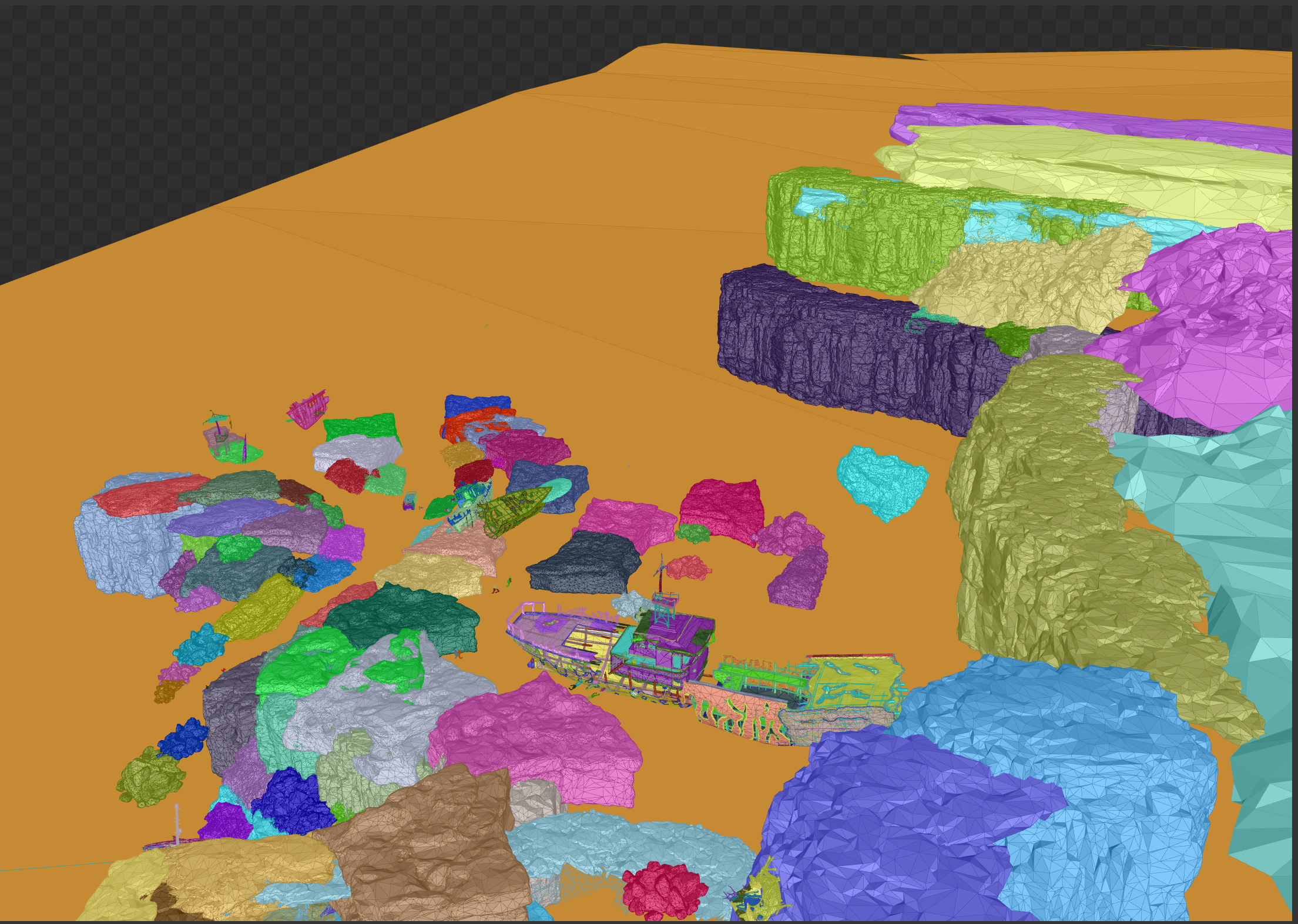

Close-up view

The close-up view gives a better sense of the detail and density of objects. Each differently colored object has its own bottom-level acceleration structure. This picture alone contains several hundred of them.
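For readers new to DXR, the two-level layout seen here can be modeled as a top-level structure holding instances, each of which points at a bottom-level structure and carries its own transform. The sketch below is a simplified data model, not the D3D12 API; all class names, the sample objects, and the triangle counts are hypothetical.

```python
from dataclasses import dataclass, field

# 3x4 row-major identity transform, the shape DXR uses for instance transforms.
IDENTITY = (
    (1.0, 0.0, 0.0, 0.0),
    (0.0, 1.0, 0.0, 0.0),
    (0.0, 0.0, 1.0, 0.0),
)


@dataclass(frozen=True)
class BottomLevelAS:
    """Geometry of one object; built once, referenced by instances."""
    name: str
    triangle_count: int


@dataclass
class Instance:
    """One placement of a bottom-level structure in the world."""
    blas: BottomLevelAS
    transform: tuple = IDENTITY


@dataclass
class TopLevelAS:
    instances: list = field(default_factory=list)

    def add(self, blas, transform=IDENTITY):
        self.instances.append(Instance(blas, transform))

    def unique_blas_count(self):
        return len({inst.blas for inst in self.instances})

    def instanced_triangle_count(self):
        return sum(inst.blas.triangle_count for inst in self.instances)


crate = BottomLevelAS("crate", 12)
barrel = BottomLevelAS("barrel", 200)
moved = ((1.0, 0.0, 0.0, 5.0), (0.0, 1.0, 0.0, 0.0), (0.0, 0.0, 1.0, 0.0))

tlas = TopLevelAS()
tlas.add(crate)
tlas.add(crate, moved)  # same geometry placed a second time
tlas.add(barrel)
```

Whether the game shares bottom-level structures between repeated objects or builds one per placement cannot be told from the colors alone, so the sharing shown here is only one possibility.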

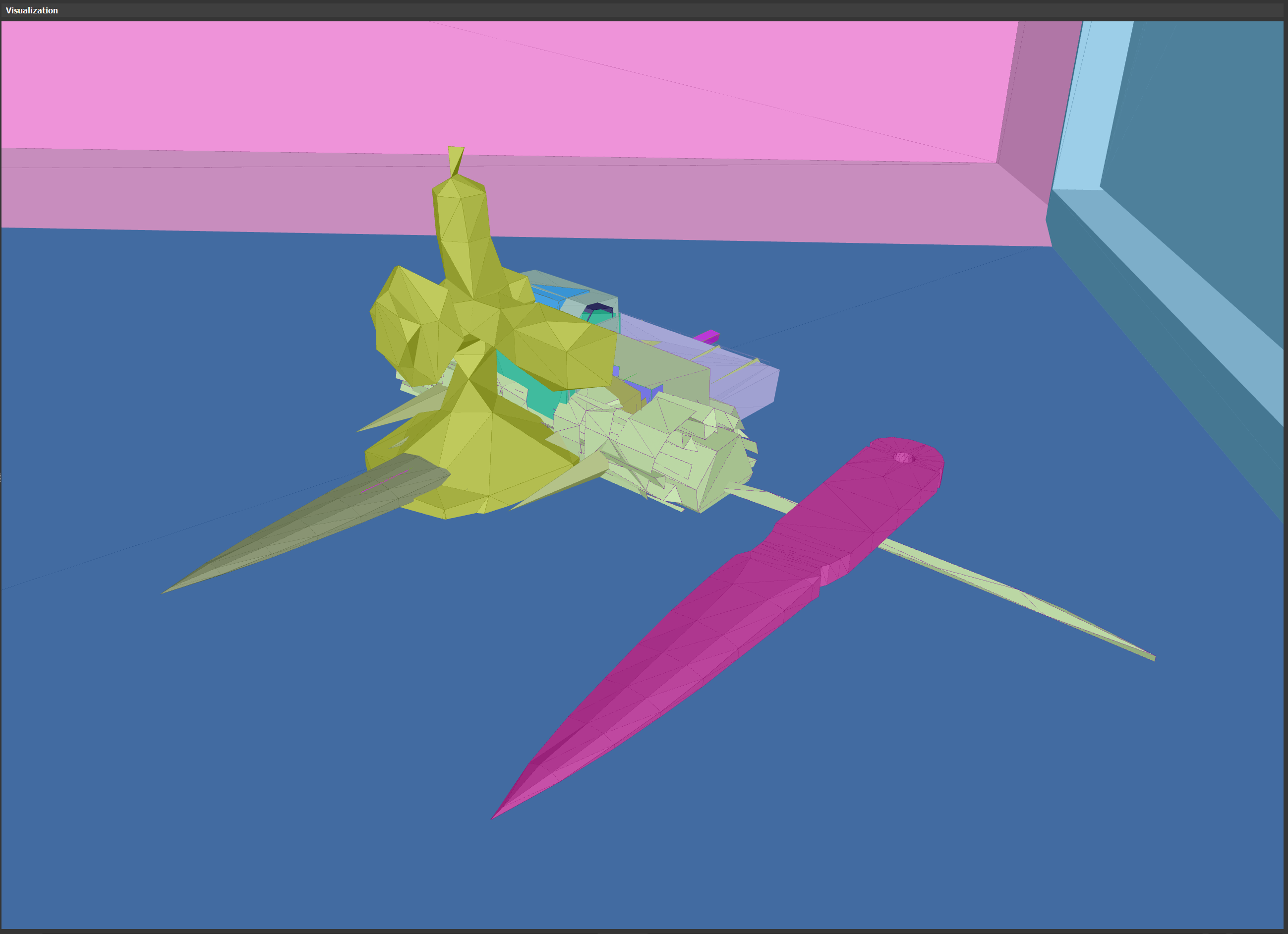

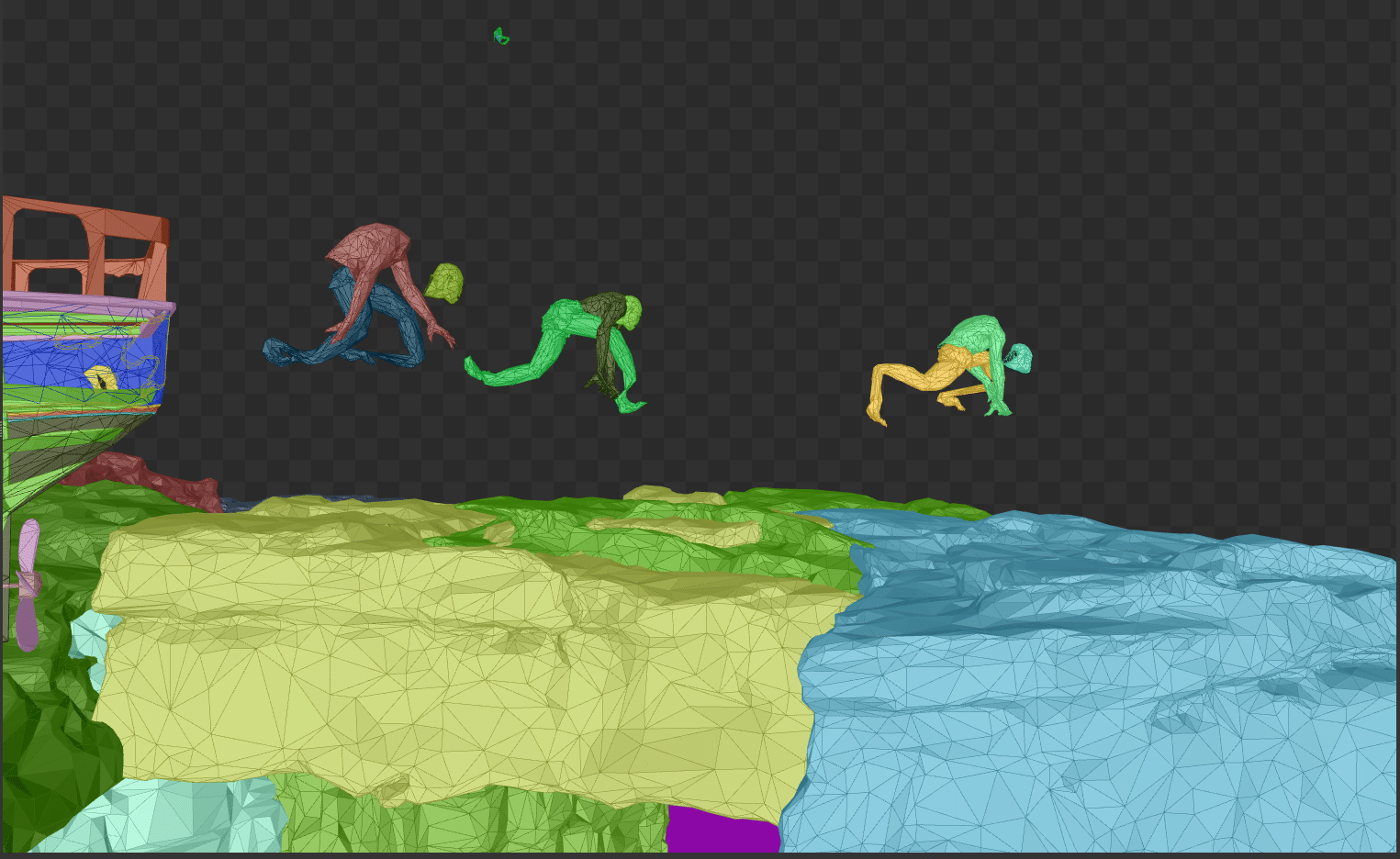

Player Items Underfoot

Interestingly, the player's items are also part of the acceleration structure, but for some reason they are located under his feet.

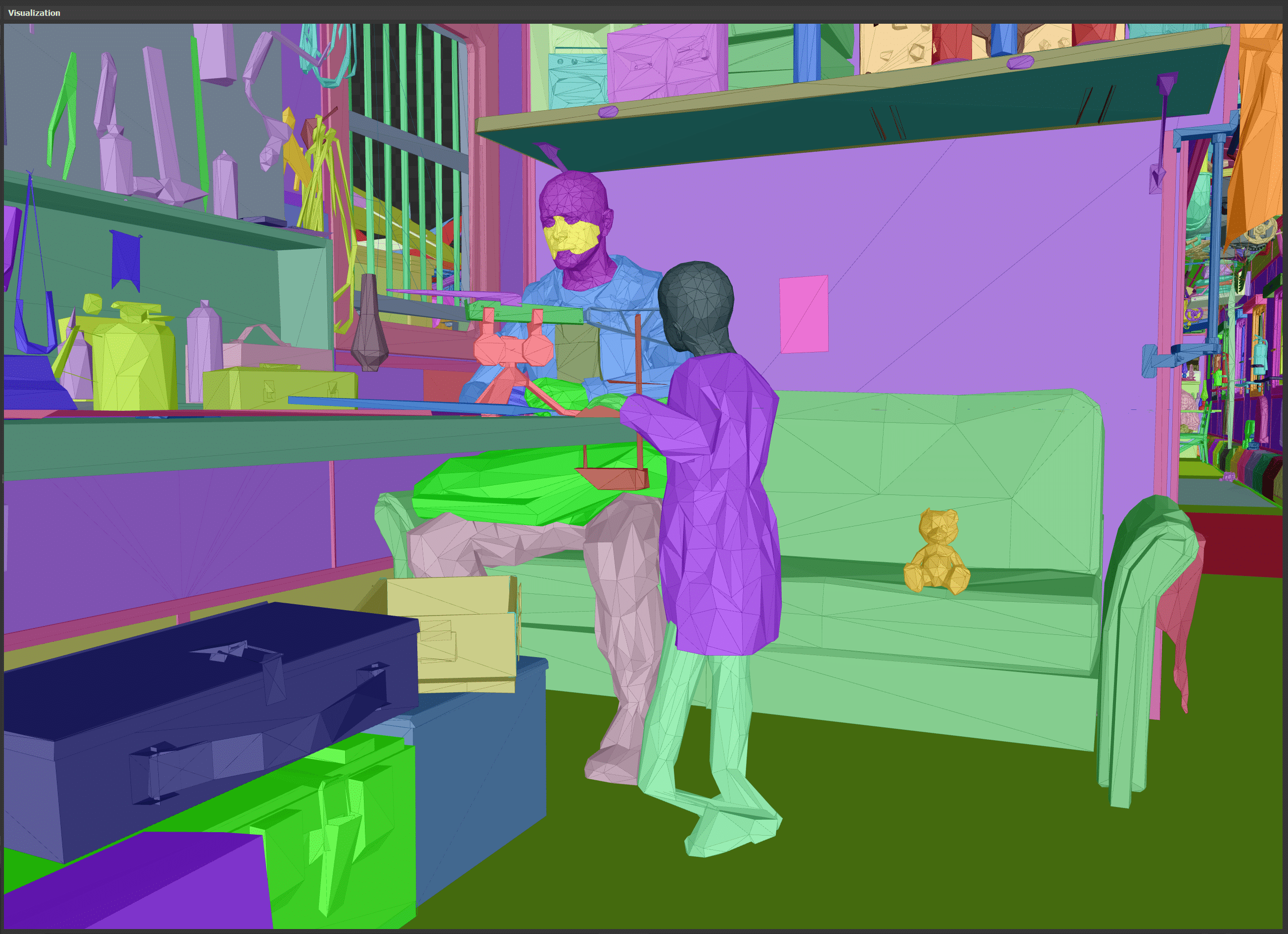

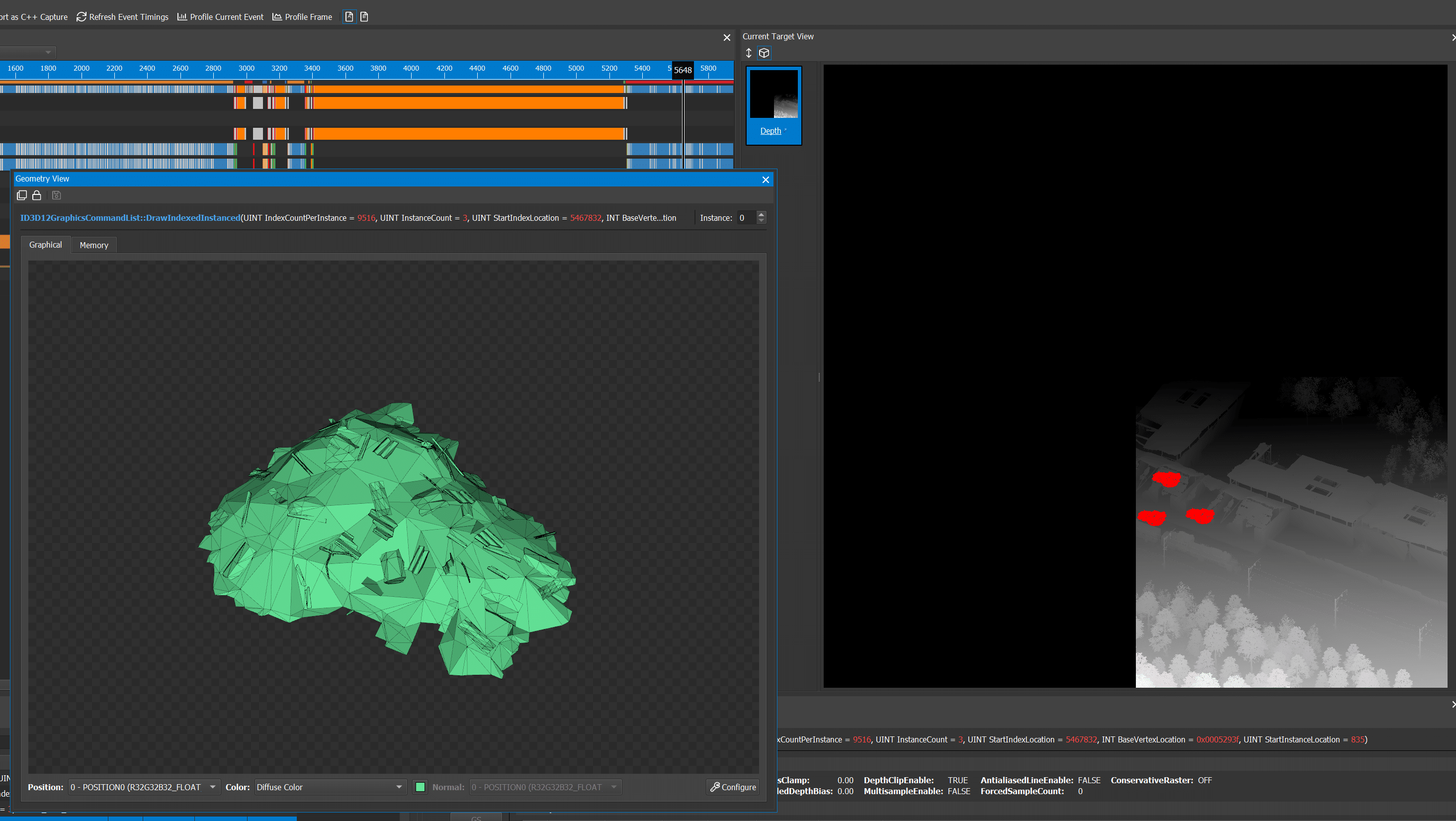

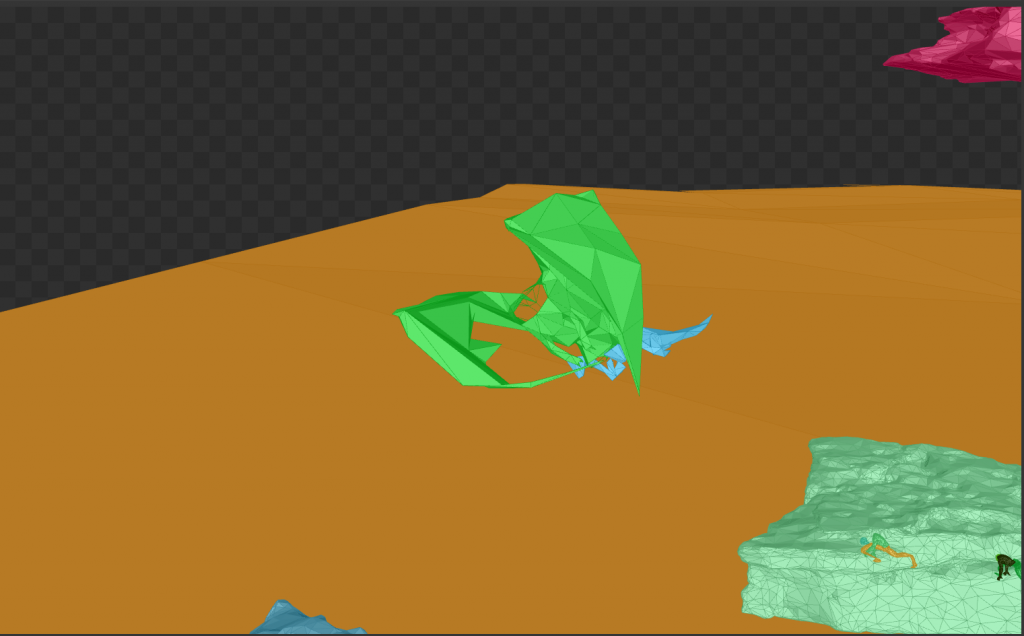

Broken skinning?

Broken skinning again?

Some of the skinned objects look broken in the acceleration structure. One of the observed problems is mesh stretching (on the child’s legs). Another problem places different parts of a skinned character at different positions: there is no stretching, but the parts are separated from each other. None of this seems to be visible in the ray-traced global illumination, or at least I was not able to notice it in the game.

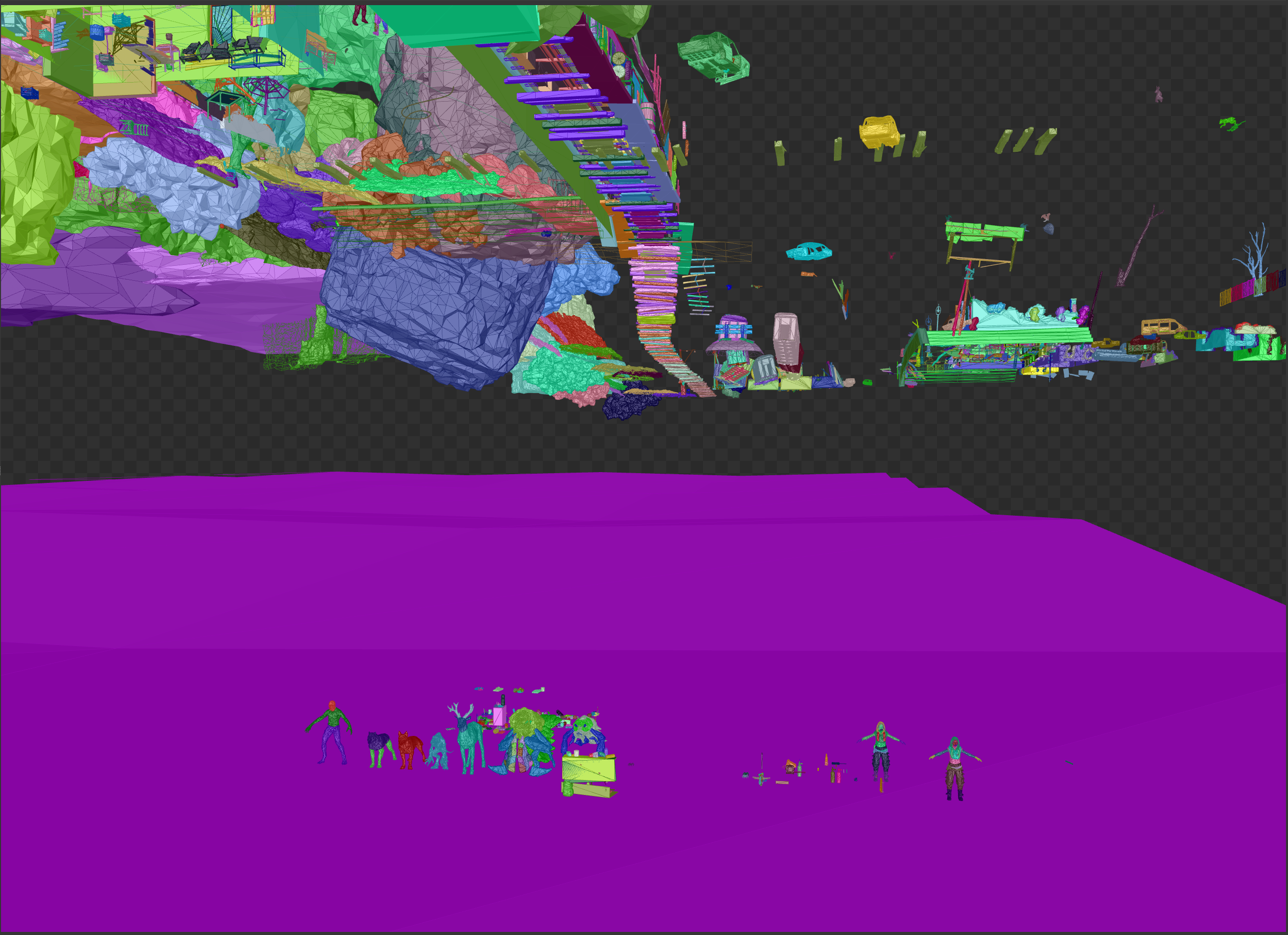

A huge number of objects

A wider view shows how many different objects there are in the acceleration structure. Most of them will not actually contribute to the global illumination results. It is also visible here that there is no LOD scheme: all objects are added at full detail. It would be interesting to know whether this has any effect on ray tracing (I would assume yes).

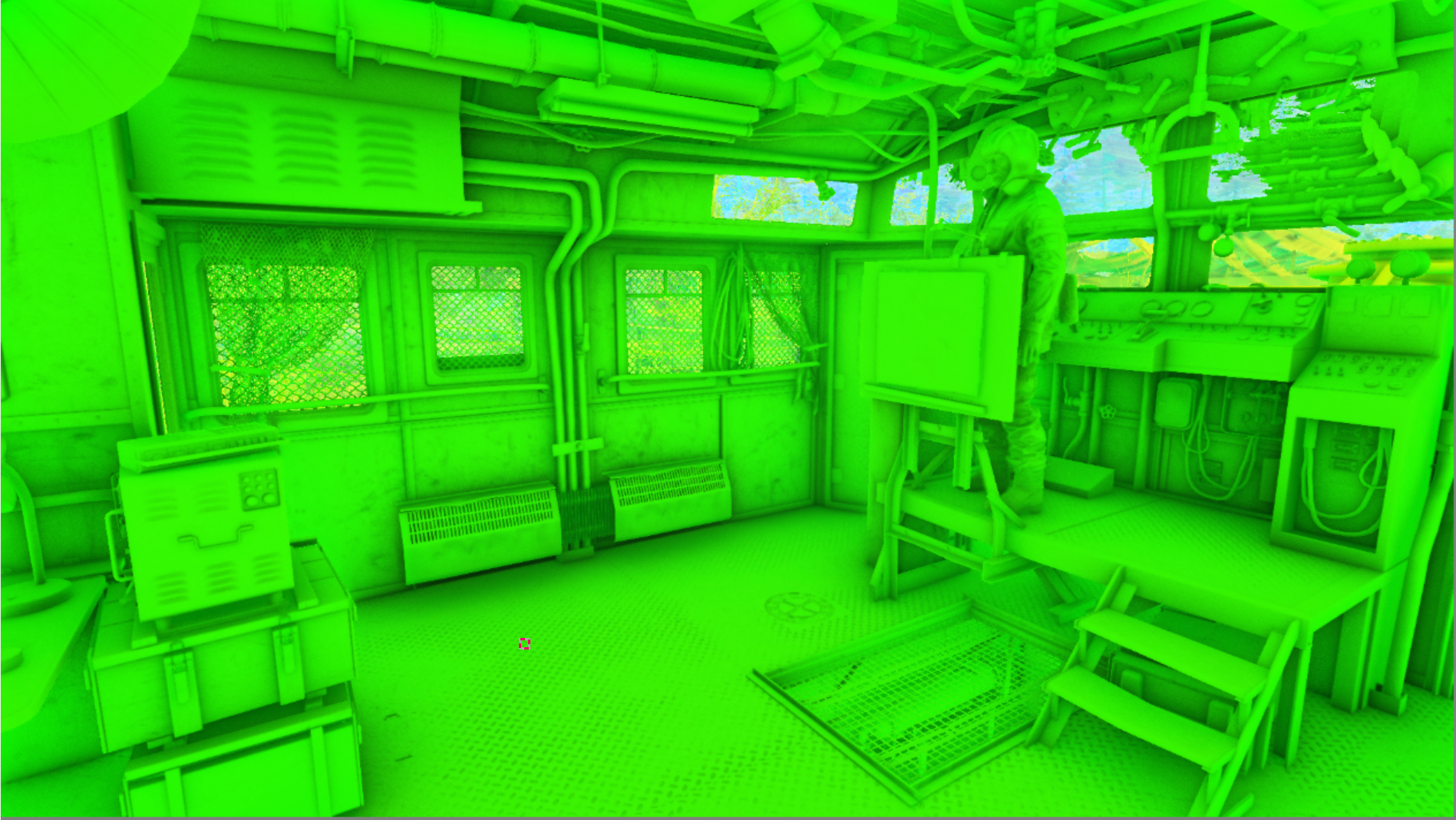

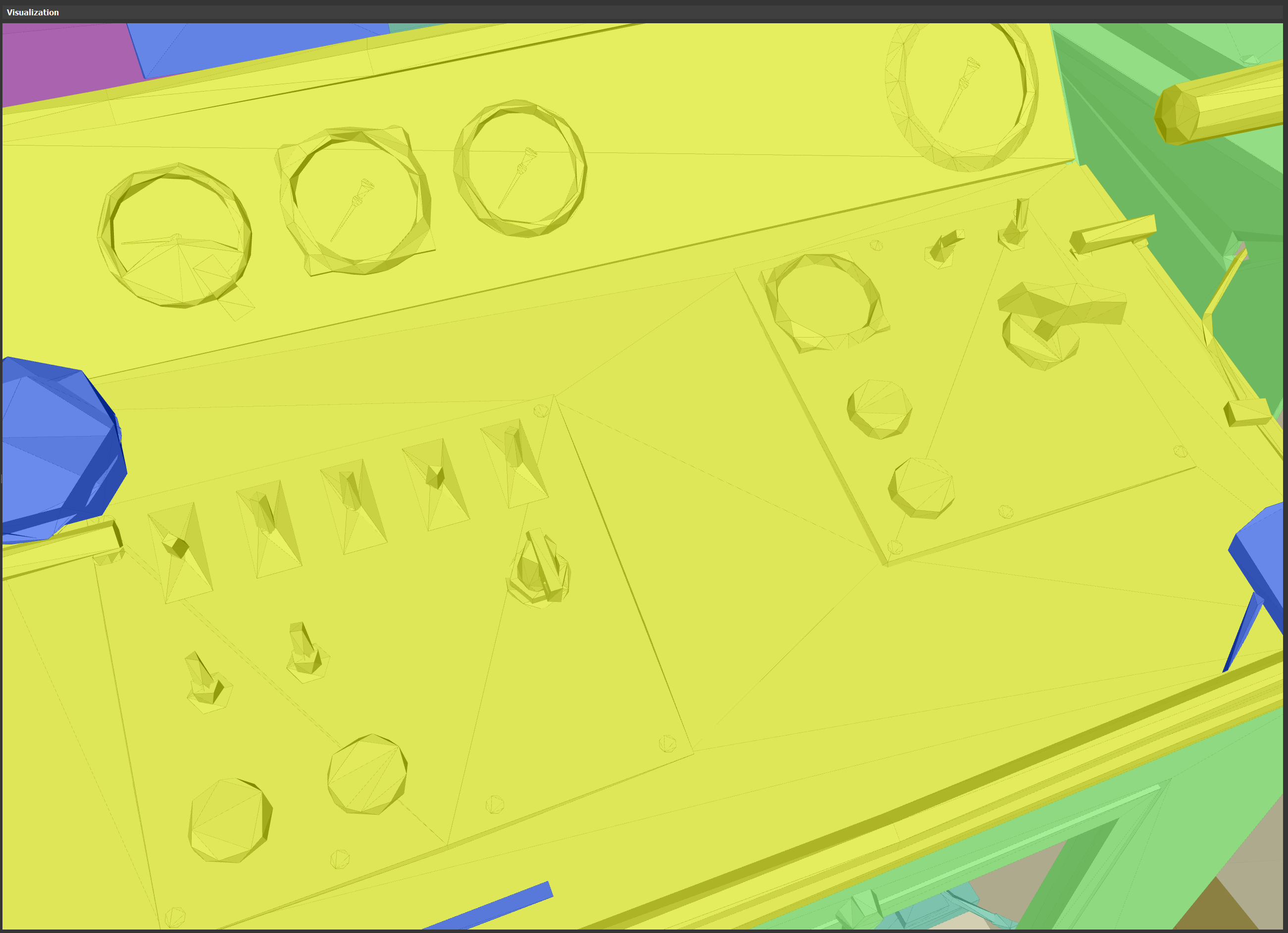

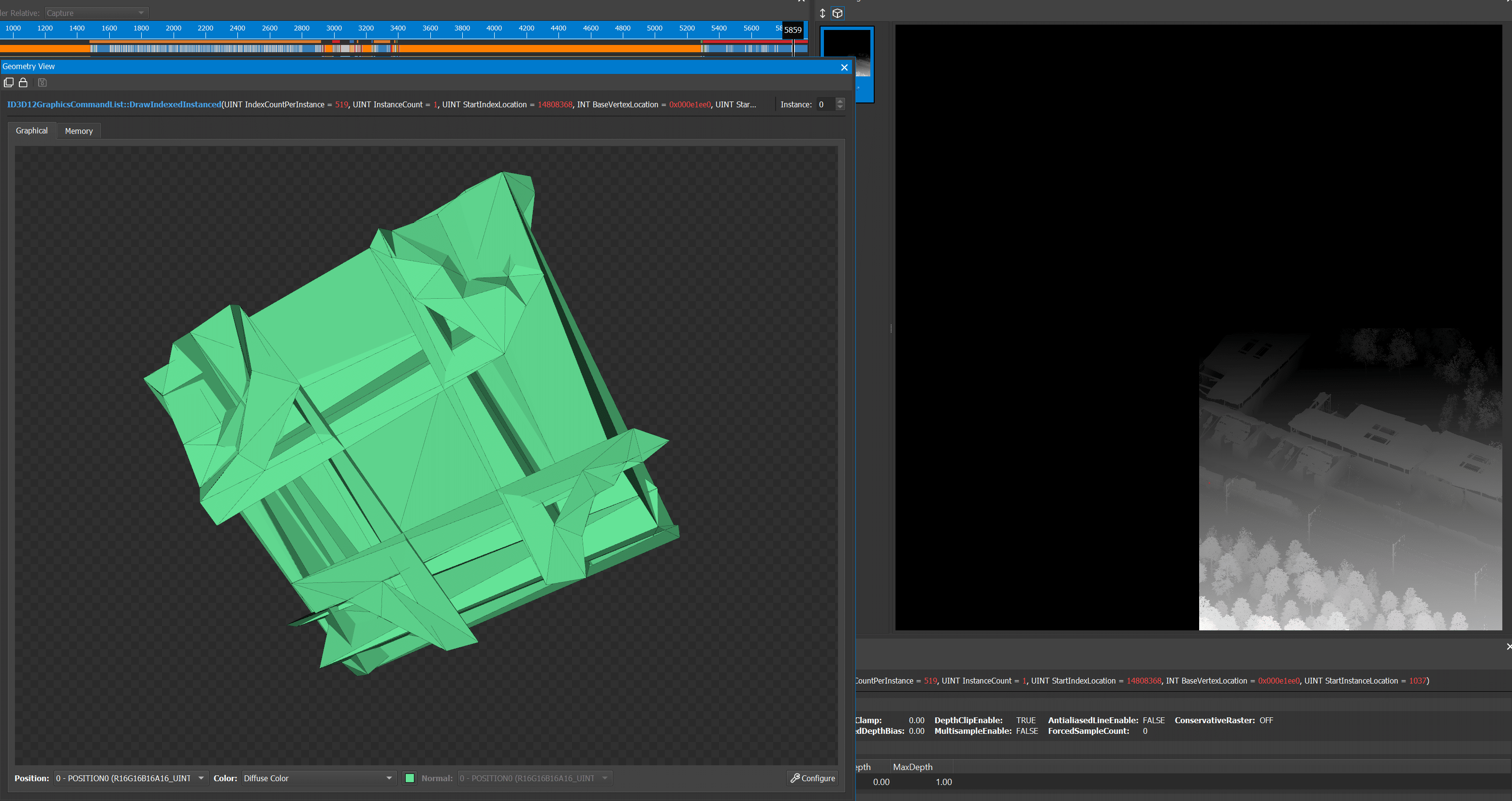

Ultra-high LOD: every dial and switch is fully modeled.

Another screenshot shows the enormous level of detail of objects even far from the player. Every switch and every dial in this picture is clearly readable even without textures. The spot where I moved the camera to take this screenshot is tens of meters from the player, and removing these details would not have degraded the picture quality. Perhaps updating the acceleration structure with LODs would be too costly, but there is a good chance that such an update could be performed asynchronously. This point is definitely worth exploring in more detail.

Rendering Directional Shadows

The main part of rendering shadows is simple and does not require special mention, but there are interesting points here.

Meshes for which shadow casting is unlikely

Huge detail in shadow maps

Meshes that seem to use the wrong index buffer

It seems that, like the acceleration structures, shadow rendering includes absolutely everything. There are objects that contribute almost nothing to the shadow map, but they are still rendered. I wonder whether this is because of the resolution, or whether there is no easy way in the engine to exclude them.

There are objects that are hard to notice even with screen-space shadows. Rendering them does not take much time, but it would be interesting to see whether they could be removed to save a little time.

When examining the meshes, it seems that some of those rendered into the shadow map have broken index buffers, but after the vertex shader they look correct (the results are the same in both PIX and NSight). This is the best example I managed to find, but it is far from the only one. Maybe this is some kind of special position packing?

Meshes that seem to have broken skinning

It seems that skinning causes problems not only in the acceleration structures. Interestingly, it does not lead to visible artifacts on the screen.

Part 2

Minor amendment

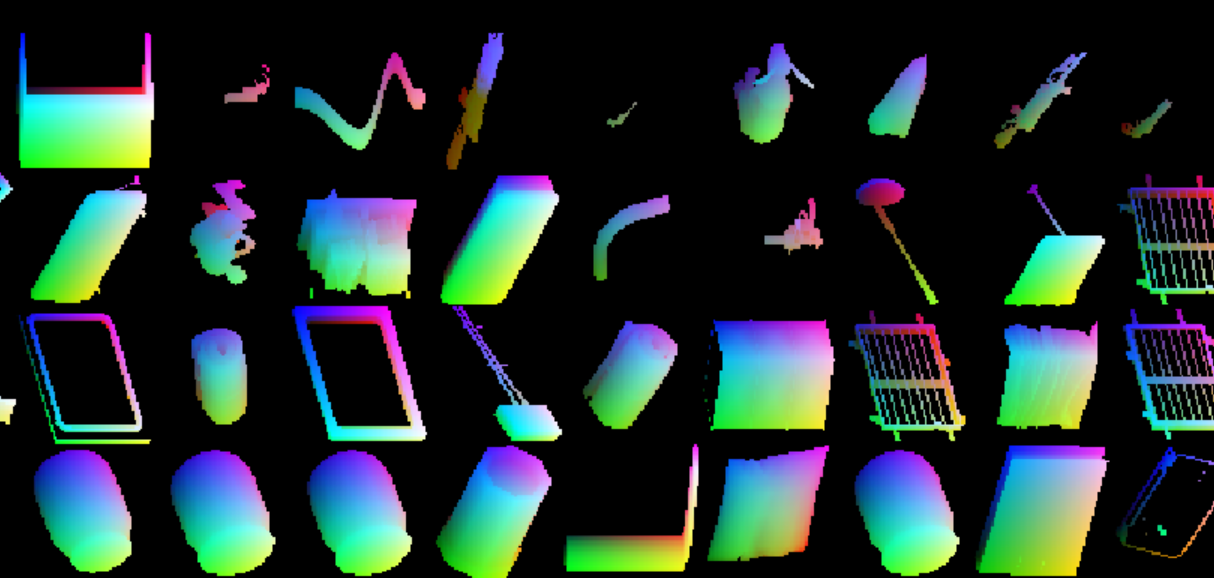

In the previous part, I wrote that the third render target of the GBuffer most likely contains metalness, but it seems that it actually contains specular color. At first I did not see any colors and did not understand why all three RGB channels contained the same data, but that was probably because there were no colored reflections in the scene. For this weapon, the buffer contains many more different colors.

I also forgot to add my favorite texture, which I found while researching the game's rendering. It is definitely worth mentioning because it shows the chaotic nature of game development, where it is not always possible to clean everything up.

"Improve me!"

Transparency compositing and anti-aliasing

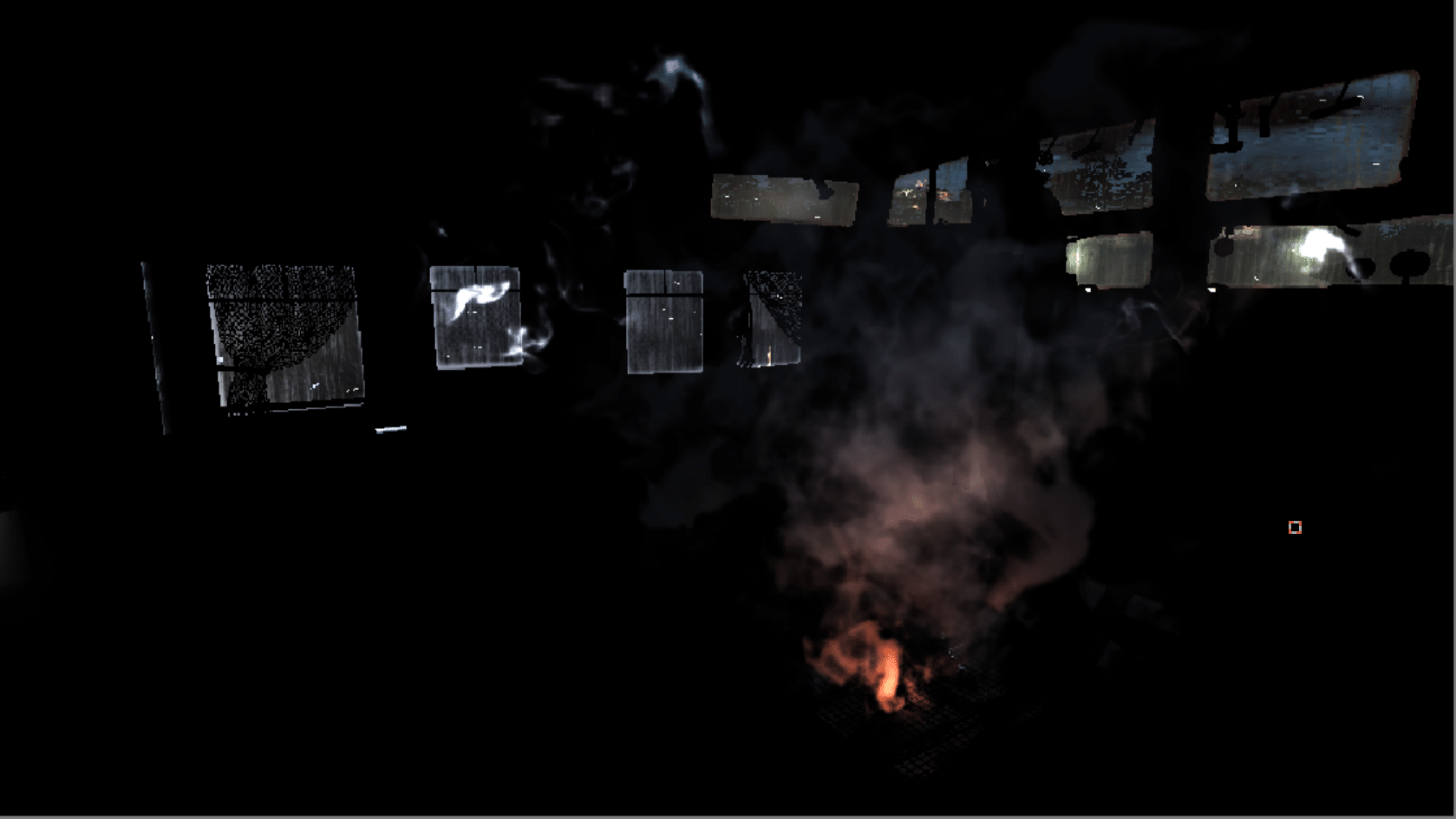

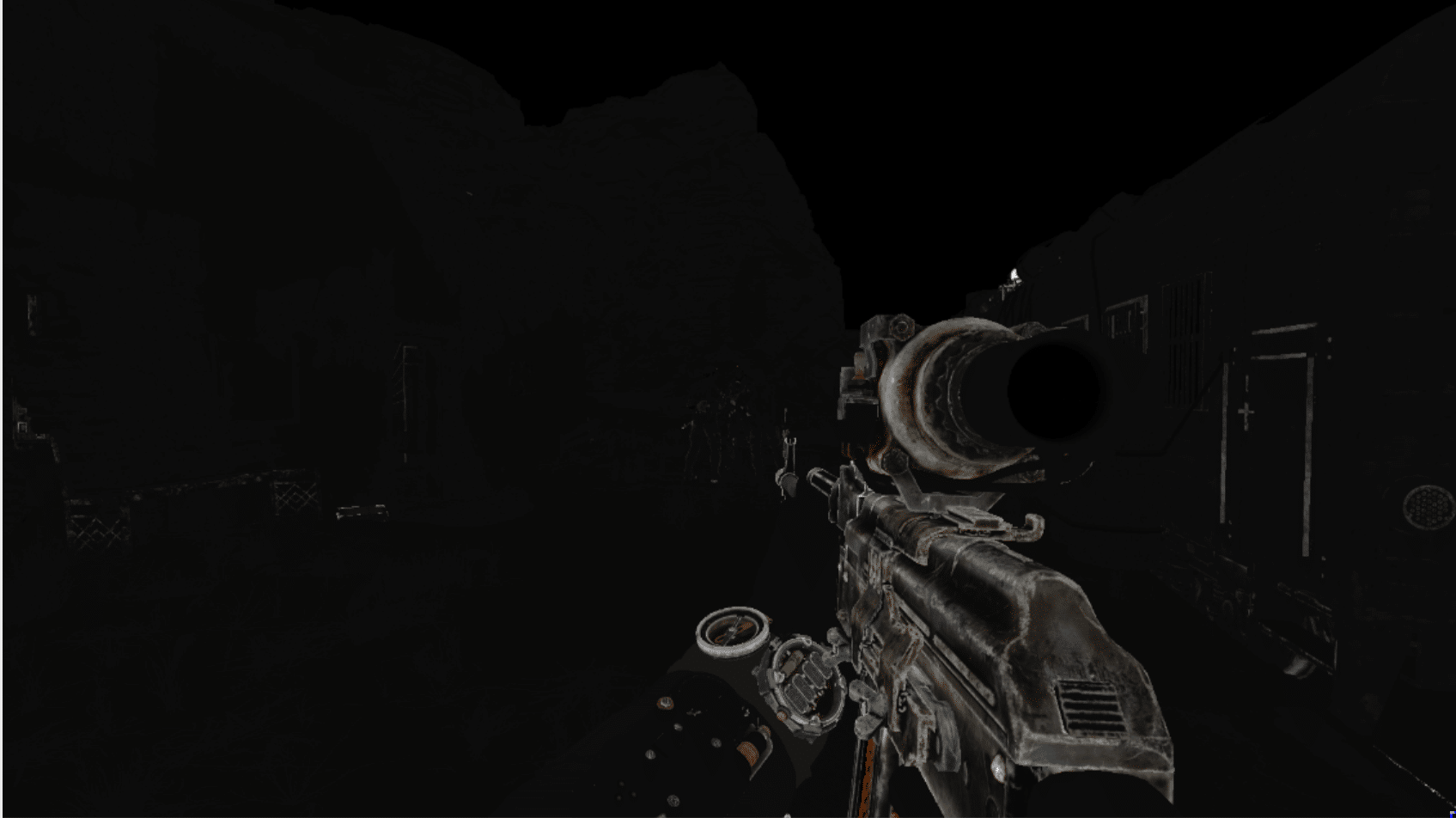

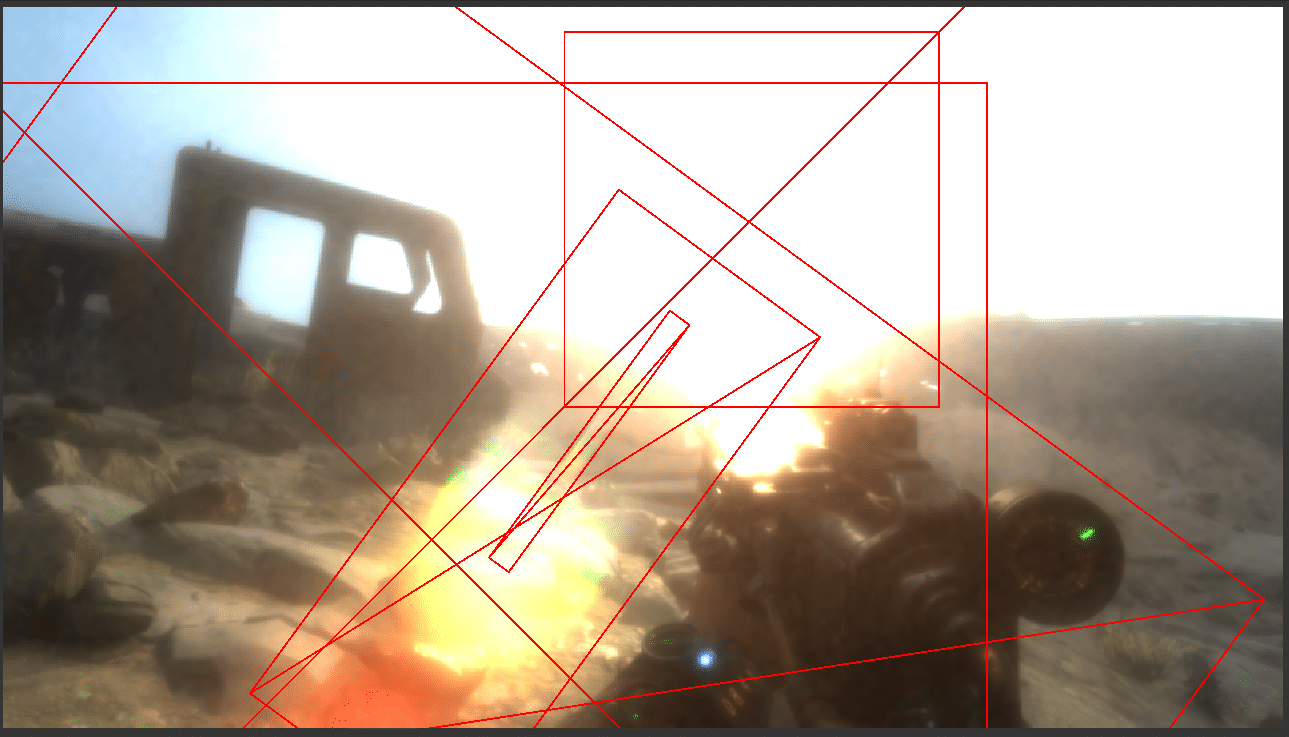

While trying to figure out how the half-resolution transparency buffer is upscaled and how the game performs anti-aliasing, I noticed something interesting. I needed a scene with much more contrast so that it would be clearly visible what was happening. Fortunately, I managed to capture a frame in which the player’s weapon moves slightly between frames.

Before rendering transparency

It seems that before the transparency buffer is composited, the target already contains a fully rendered image, and since there are no aliased edges in it, it is logical to assume that this is the data of the previous frame.

After compositing the transparency of the current frame

When the transparency of the current frame is added, individual broken edges become noticeable. This happens because the weapon has shifted slightly to the right. Some clouds are rendered as transparent, but they are clipped at the horizon (which is opaque), so the compositing does not change the lower part of the image, yet it already renders over the weapon mesh from the previous frame using the depth buffer of the current frame.

After adding the opaque meshes of the current frame

A few draw calls later, the opaque meshes are composited as well. There seems to be no particular reason to do this in this order. It would be logical to composite the transparency buffer onto the opaque data of the current frame, but this does not happen, and it would be interesting to know why.

After TAA

Once the full frame is complete, the TAA (temporal anti-aliasing) pass smooths the edges. I had wondered about this earlier, because I could not see where the anti-aliasing takes place. I missed it because the downsampling for the bloom pass starts immediately after this single draw call.
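The capture does not reveal how the TAA pass is implemented, but the core of a typical temporal resolve is easy to sketch: the history color is clamped to the range of the current frame's neighborhood and then blended with the current color. The blend factor, the clamping scheme, and the function name below are generic assumptions, not the game's shader.

```python
def taa_resolve(current, history, neighborhood, alpha=0.1):
    """Blend the current sample with clamped history for one pixel (scalar color).

    neighborhood: current-frame values around the pixel, used to reject
    stale history (for example after the weapon has moved).
    """
    lo, hi = min(neighborhood), max(neighborhood)
    clamped_history = min(max(history, lo), hi)
    return clamped_history + (current - clamped_history) * alpha


# History agrees with the neighborhood range: slow convergence to current.
stable = taa_resolve(current=1.0, history=0.0, neighborhood=[0.0, 1.0])

# History lies outside the neighborhood range: it is clamped before blending.
rejected = taa_resolve(current=1.0, history=0.0, neighborhood=[0.8, 1.0])
```

This kind of scheme also explains why the target can hold a clean, already anti-aliased image before the current frame is drawn: the previous frame's result is kept as history.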

Lens flare

Usually I do not analyze individual effects, but there are many ways to implement lens flare, so I was curious which one the developers chose.

Lens flare in the final composite

In most cases, the lens flare is hardly noticeable, but this is a beautiful effect. It is difficult to show in the screenshot, so I will not put much effort into this.

Lens flare in the bloom buffer

After some searching, I found the draw call that adds this effect; it comes right after the last bloom upsampling step. In this buffer, the effect is much more noticeable.

Lens flare geometry

Looking at the geometry, the lens flare is quite simple. At least 6 quads are involved in producing the final result on the screen, but there is no series of smaller quads approaching the position of the sun. We can conclude that this is a fairly standard solution, although some developers render the lens flare directly into the scene render target, while others compute the effect as post-processing.

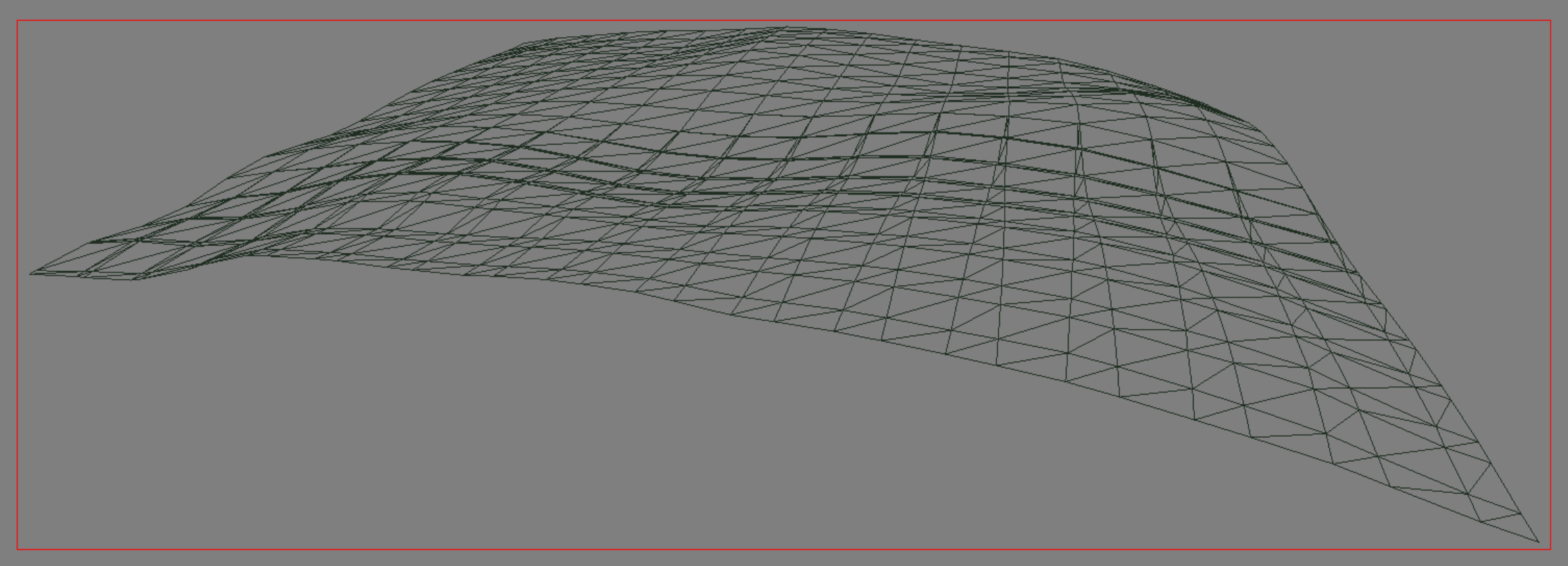

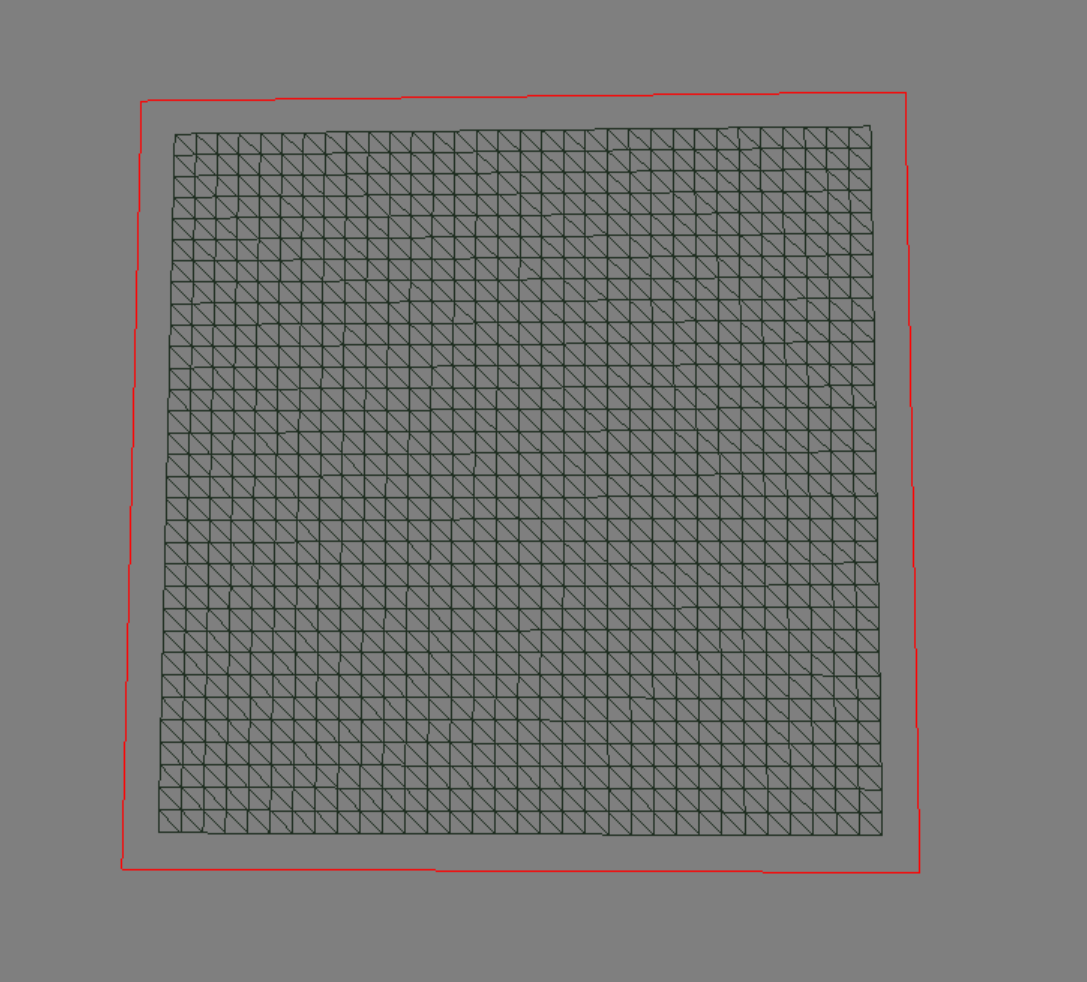

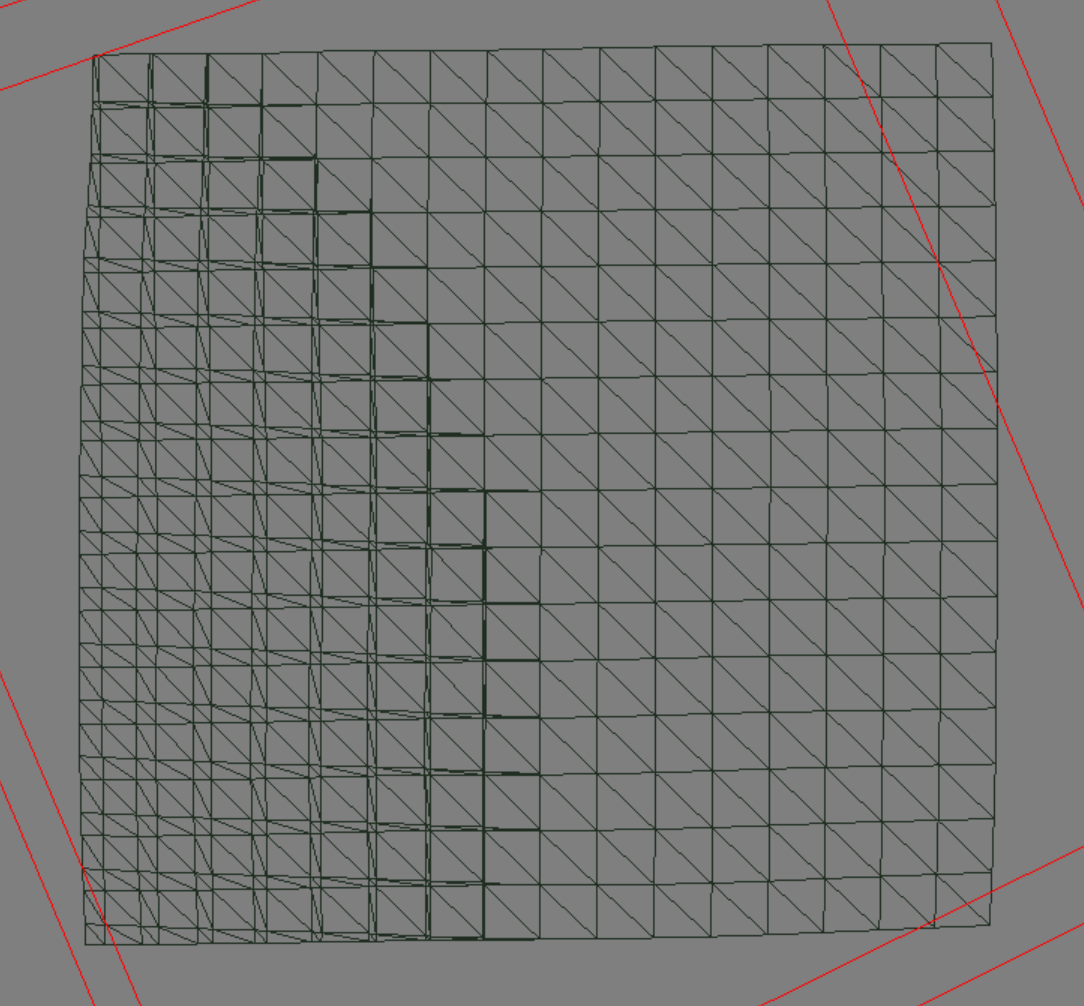

Terrain rendering

In all open-world games, one of the most interesting challenges is terrain rendering. I decided it would be interesting to study this aspect but, frankly, I was a little disappointed.

At first glance, a terrain patch looks as if some kind of tessellation is being performed. The way the terrain deforms as you move makes it logical to assume that there is some additional displacement. In addition, the game makes heavy use of tessellation on PC, so it would be logical to use it for terrain as well.

Perhaps I had the wrong settings, but the game renders all terrain patches without tessellation. For each terrain patch, it uses the same uniform 32 * 32 grid. There is also no LOD.

Looking at a terrain patch after the vertex shader, you can see that most pairs of vertices have merged, forming an almost perfect 16 * 16 grid, except in some places where greater accuracy is required (probably because of the curvature of the terrain). The deformation mentioned above probably comes from reading lower mip levels of the terrain heightmap when the terrain is far from the camera.
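One way such merging could arise is if the vertex shader snaps grid coordinates to a coarser step, so that neighboring vertices collapse onto each other. The sketch below only illustrates that arithmetic: it assumes the 32 * 32 grid means 32 quads per side (33 vertices) and a snapping step of 2, and it ignores the places that keep full precision. What the shader really does is unknown.

```python
def grid_vertices(quads_per_side):
    """Integer coordinates of a uniform grid with the given number of quads per side."""
    n = quads_per_side + 1
    return [(x, y) for y in range(n) for x in range(n)]


def snap(vertex, step):
    """Snap a vertex down to the nearest multiple of step on both axes."""
    x, y = vertex
    return (x - x % step, y - y % step)


full = grid_vertices(32)
merged = {snap(v, 2) for v in full}

# Distinct positions per side after snapping, and the resulting quad count.
positions_per_side = len({x for x, _ in merged})
quads_per_side = positions_per_side - 1
```

Under these assumptions the 33 * 33 vertices collapse to 17 * 17 distinct positions, which is a 16 * 16 grid of quads; the remaining triangles become degenerate and cost vertex work without producing pixels.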

Ray Tracing Tricks

And now about what everyone was waiting for.

Streaming data

One of the most interesting aspects of any DXR implementation at the moment is how it handles data. The most important thing is how the data is loaded into the acceleration structures and how it is updated. To test this, I took two captures and compared the acceleration structures in NSight.

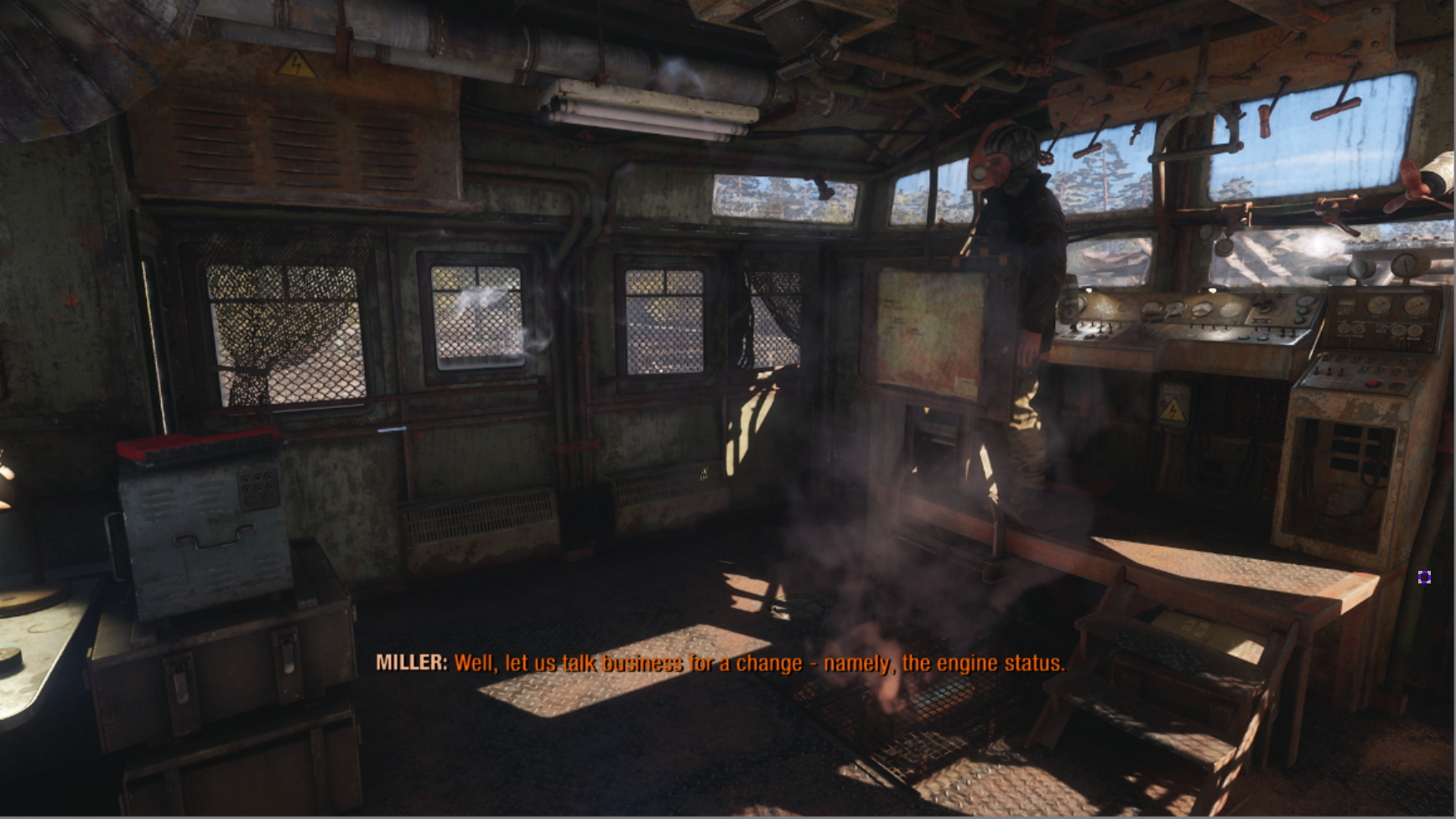

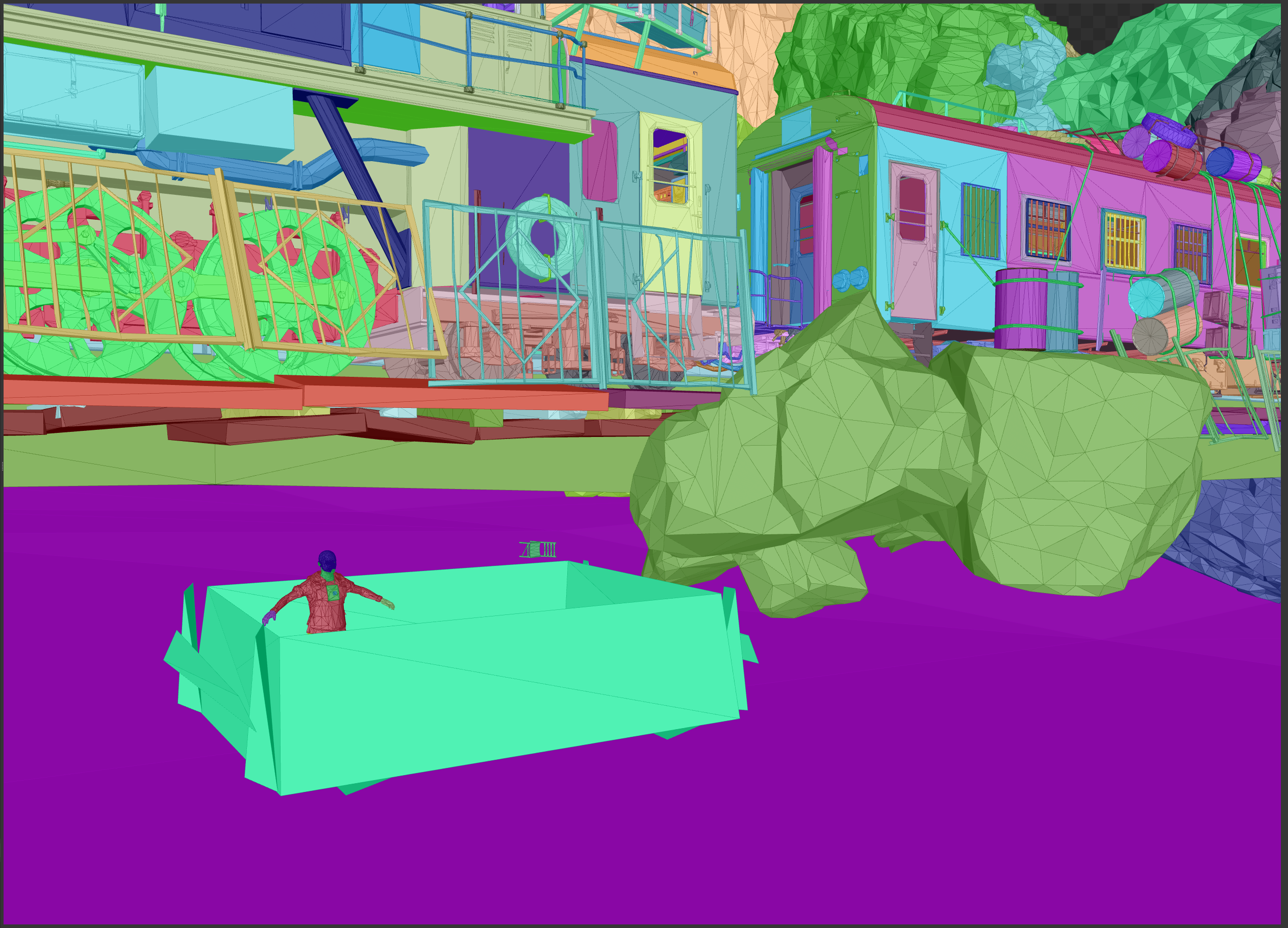

The player is inside the ship.

In the first capture, I stood inside the wrecked ship that is visible in the middle of this image. Only the nearest objects are loaded, except for large rocks at the edge of the map.

The player moved to the upper left corner of this image.

In the second capture, I moved away from the edge of the map and closer to the upper left edge of the image. The ship and everything around it are still loaded, but new objects have been loaded as well. Interestingly, I cannot make out any tile structure. Objects may be loaded into and removed from the acceleration structure based on distance and visibility (perhaps using a bounding box?). In addition, the upper right edge looks more detailed, although I moved away from it. It would be interesting to know more about this.
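One scheme that would be consistent with the ship staying loaded after the player walks away is distance-based streaming with hysteresis: objects are added inside one radius and removed only beyond a larger one. This is purely a guess at the behavior; the radii, the bounding spheres, the object names, and the function below are all hypothetical.

```python
import math


def update_resident(resident, objects, player, load_radius, unload_radius):
    """Return the new set of resident object names.

    objects: name -> (center, bounding_radius). An object is added when its
    bounding sphere comes within load_radius of the player and removed only
    once it is farther than unload_radius.
    """
    result = set(resident)
    for name, (center, radius) in objects.items():
        distance = math.dist(center, player) - radius
        if distance <= load_radius:
            result.add(name)
        elif distance > unload_radius:
            result.discard(name)
    return result


objects = {
    "ship": ((0.0, 0.0, 0.0), 10.0),
    "rock": ((500.0, 0.0, 0.0), 5.0),
}

first = update_resident(set(), objects, (0.0, 0.0, 0.0), 100.0, 300.0)
second = update_resident(first, objects, (200.0, 0.0, 0.0), 100.0, 300.0)
third = update_resident(second, objects, (420.0, 0.0, 0.0), 100.0, 300.0)
```

In this toy run the ship is still resident in the second step even though it is outside the load radius, and it is dropped only in the third step, once the player is far enough away and the rock has come into range.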

Terrain and what's under it

Several aspects of the DXR implementation in Metro: Exodus related to terrain are worth mentioning.

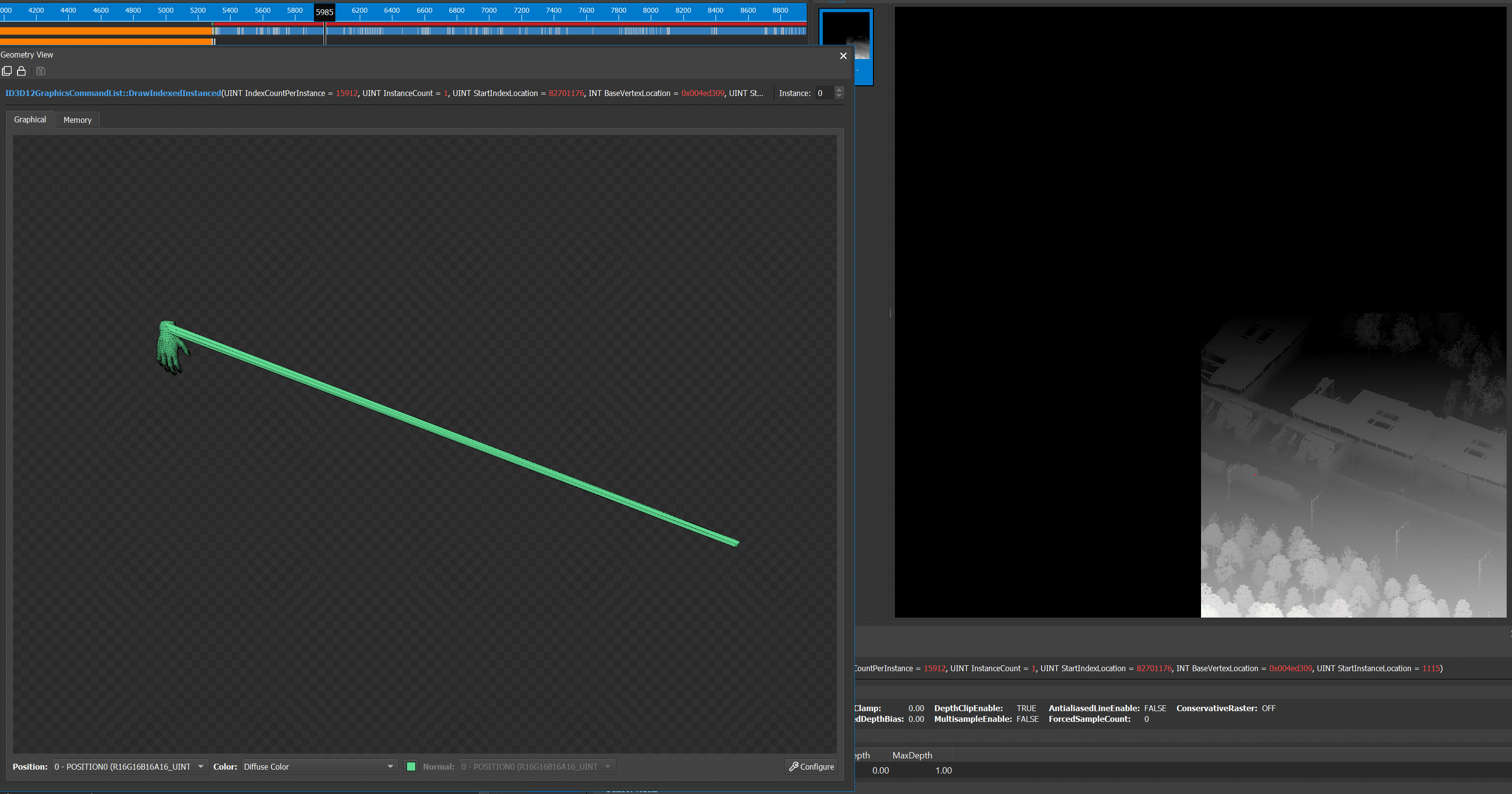

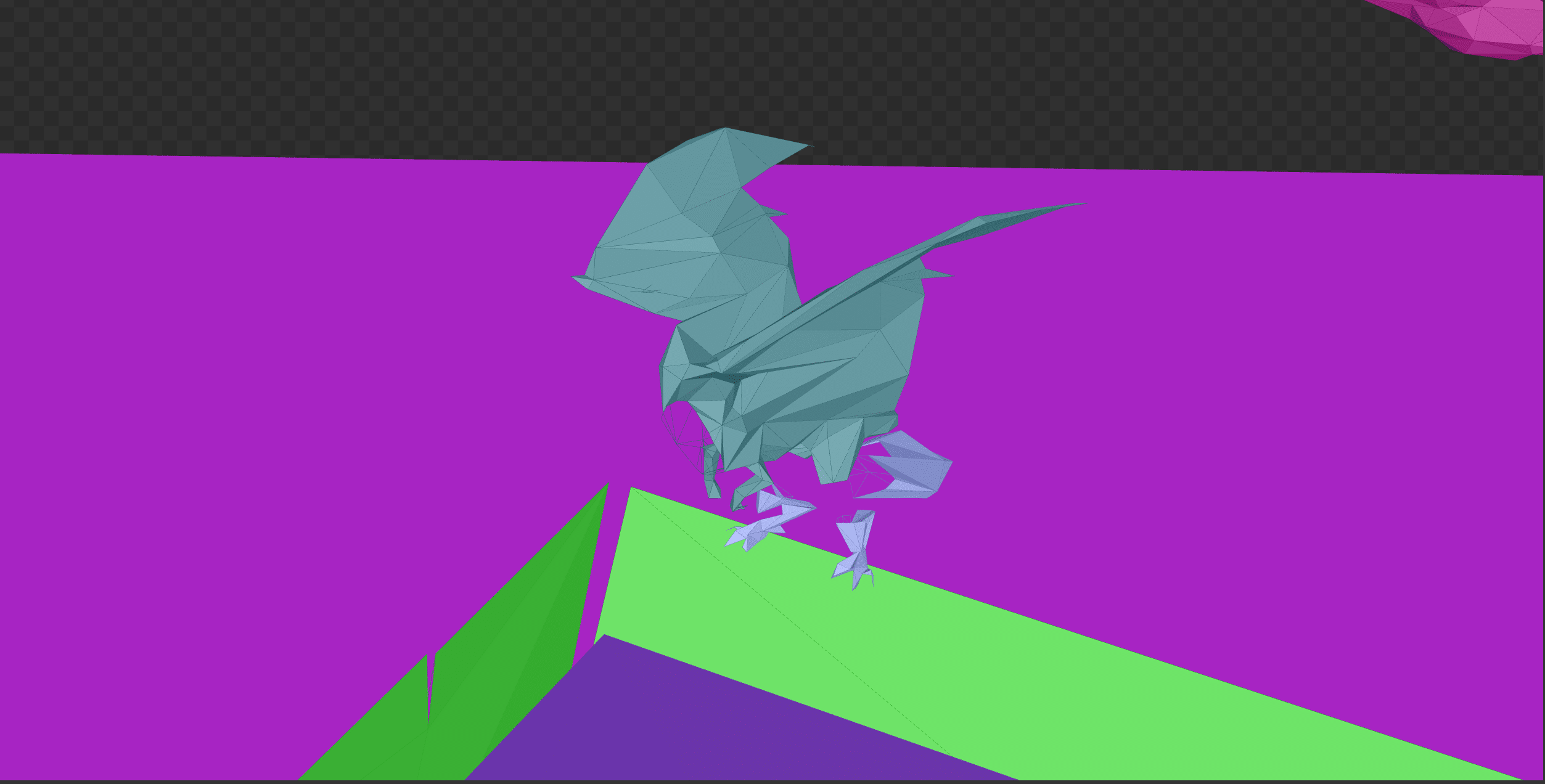

First, it is interesting that the acceleration structures do not contain any terrain meshes (apart from special cases). In the game these monsters actually run on the ground, but judging by the data in NSight you might think they are flying. This raises an interesting question: does the global illumination implementation somehow take the terrain into account (possibly using a heightmap and the terrain material) or not?

I would never have noticed the next point if the terrain had been in place. Looking at the acceleration structure in NSight at the beginning of the level, I noticed some meshes under the terrain.

Artists quite often place debug meshes under the level for various reasons, but these are usually removed before the game ships. In this case, the meshes not only survived until release but also became part of the acceleration structure.

In addition to those mentioned above, I found other meshes scattered beneath the terrain. Most of them are not worth mentioning, but this one was very interesting: a character standing right below the starting point of the level. It even has its own pool.

Finally, the last curious element of the acceleration structure is the one-sided meshes facing away from the level. Unless they are treated as two-sided, there is very little chance that they contribute anything to the picture. Even if the meshes are two-sided, they are so far from the playable area that they probably just stretch the acceleration structure. It is interesting to see that they are not filtered out. This image also shows one of the special cases of a "terrain mesh" in the lower right corner, between the train and the building.

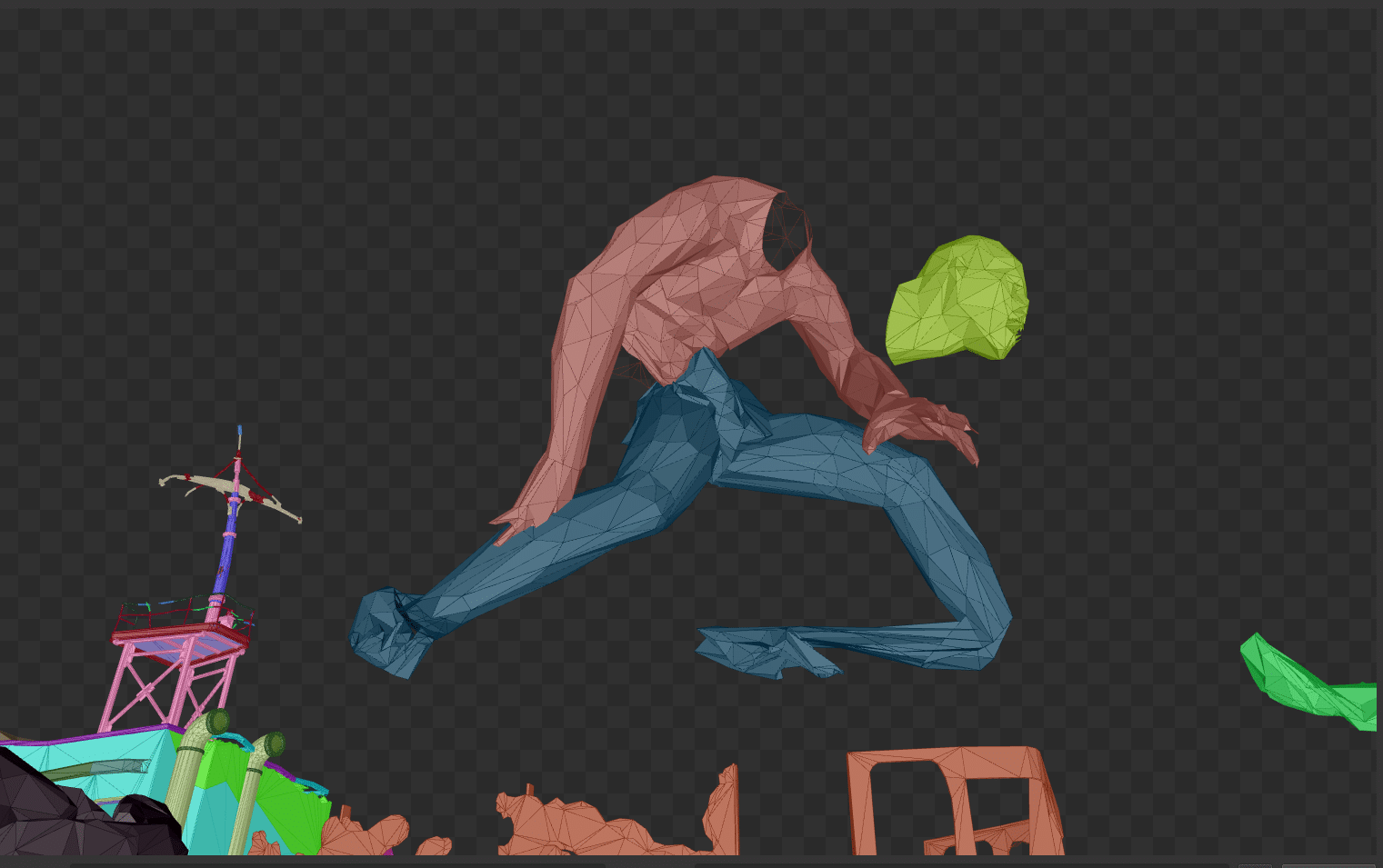

Headless skinned meshes

I have already talked about the problems with skinned meshes, but on this level I noticed something else.

First, this monster shows, in a single image, both of the errors I described above. I am still wondering what causes them.

I also noticed that these small bat-like creatures have no heads in the acceleration structure.

One more example. Notice the hole where the head should be. I have not seen a single case where the head was visible.

The same kind of creatures in rasterization mode. Notice that the head is clearly visible.

And here is the wireframe of the head.

Finally

That's all for today. I hope you enjoyed this look at the insides of Metro: Exodus.

I will continue to explore the rendering of the game, but I will not publish new parts of the article unless I find something particularly interesting or worth sharing.