5 life hacks for optimizing SQL queries in Greenplum

Any processes related to the database, sooner or later encounter problems with the performance of queries to this database.

Rostelecom's data warehouse is built on Greenplum, most of the calculations (transform) are performed by sql queries, which start (or generate and start) the ETL mechanism. DBMS has its own nuances that significantly affect performance. This article is an attempt to highlight the most critical aspects of working with Greenplum in terms of performance and share experience.

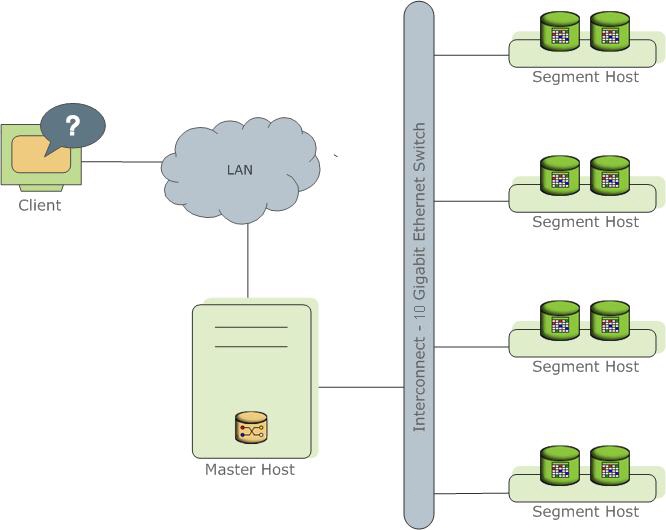

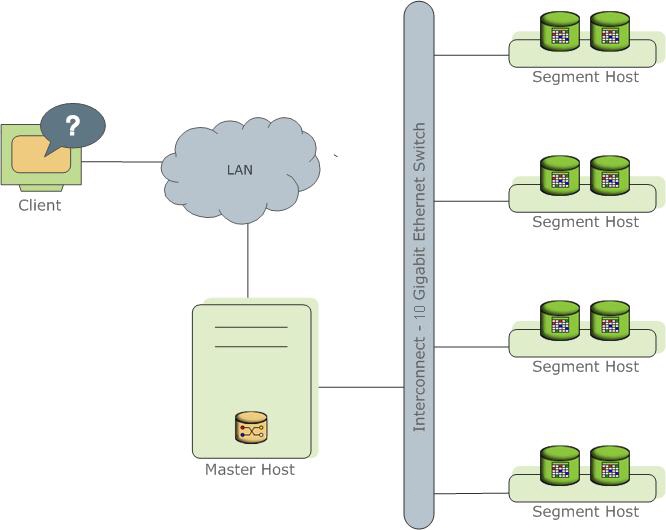

In a nutshell about Greenplum

Greenplum — MPP сервер БД, ядро которого построено на PostgreSql.

Представляет собой несколько разных экземпляров процесса PostgreSql (инстансы). Один из них является точкой входа для клиента и называется master instance (master), все остальные — Segment instanсe (segment, Независимые инстансы, на каждом из которых хранится своя порция данных). На каждом сервере (segment host) может быть запущено от одного до нескольких сервисов (segment). Делается это для того, чтобы лучше утилизировать ресурсы серверов и в первую очередь процессоры. Мастер хранит метаданные, отвечает за связь клиентов с данными, а также распределяет работу между сегментами.

Подробнее можно почитать в официальной документации.

Представляет собой несколько разных экземпляров процесса PostgreSql (инстансы). Один из них является точкой входа для клиента и называется master instance (master), все остальные — Segment instanсe (segment, Независимые инстансы, на каждом из которых хранится своя порция данных). На каждом сервере (segment host) может быть запущено от одного до нескольких сервисов (segment). Делается это для того, чтобы лучше утилизировать ресурсы серверов и в первую очередь процессоры. Мастер хранит метаданные, отвечает за связь клиентов с данными, а также распределяет работу между сегментами.

Подробнее можно почитать в официальной документации.

Further in the article there will be many references to the request plan. Information for Greenplum is available here .

How to write good queries on Greenplum (well, or at least not quite sad)

Since we are dealing with a distributed database, it is important not only how the sql query is written, but also how the data is stored.

1. Distribution

Data is physically stored on different segments. You can separate data by segments randomly or by the value of the hash function of a field or a set of fields.

Syntax (when creating a table):

DISTRIBUTED BY (some_field)Or so:

DISTRIBUTED RANDOMLYThe distribution field should have good selectivity and not have null values (or have a minimum of such values), since records with such fields will be distributed on one segment, which can lead to data distortions.

The field type is preferably integer. The field is used to join tables. Hash join is one of the best ways to join tables (in terms of query execution), works best with this data type.

For distribution, it is advisable to choose no more than two fields, and, of course, one is better than two. Additional fields in distribution keys, firstly, require additional time for hashing, and secondly (in most cases) require data transfer between segments when executing joins.

You can use random distribution if you are unable to select one or two suitable fields, as well as for small labels. But we must take into account that such a distribution works best for mass data insertion, and not for a single record. GreenPlum distributes data according to the cyclic algorithm, and it starts a new cycle for each insert operation, starting from the first segment, which, with frequent small inserts, leads to skews (data skew).

With a well-chosen distribution field, all calculations will be performed on the segment, without sending data to other segments. Also, for optimal join of tables (join), the same values should be located on the same segment.

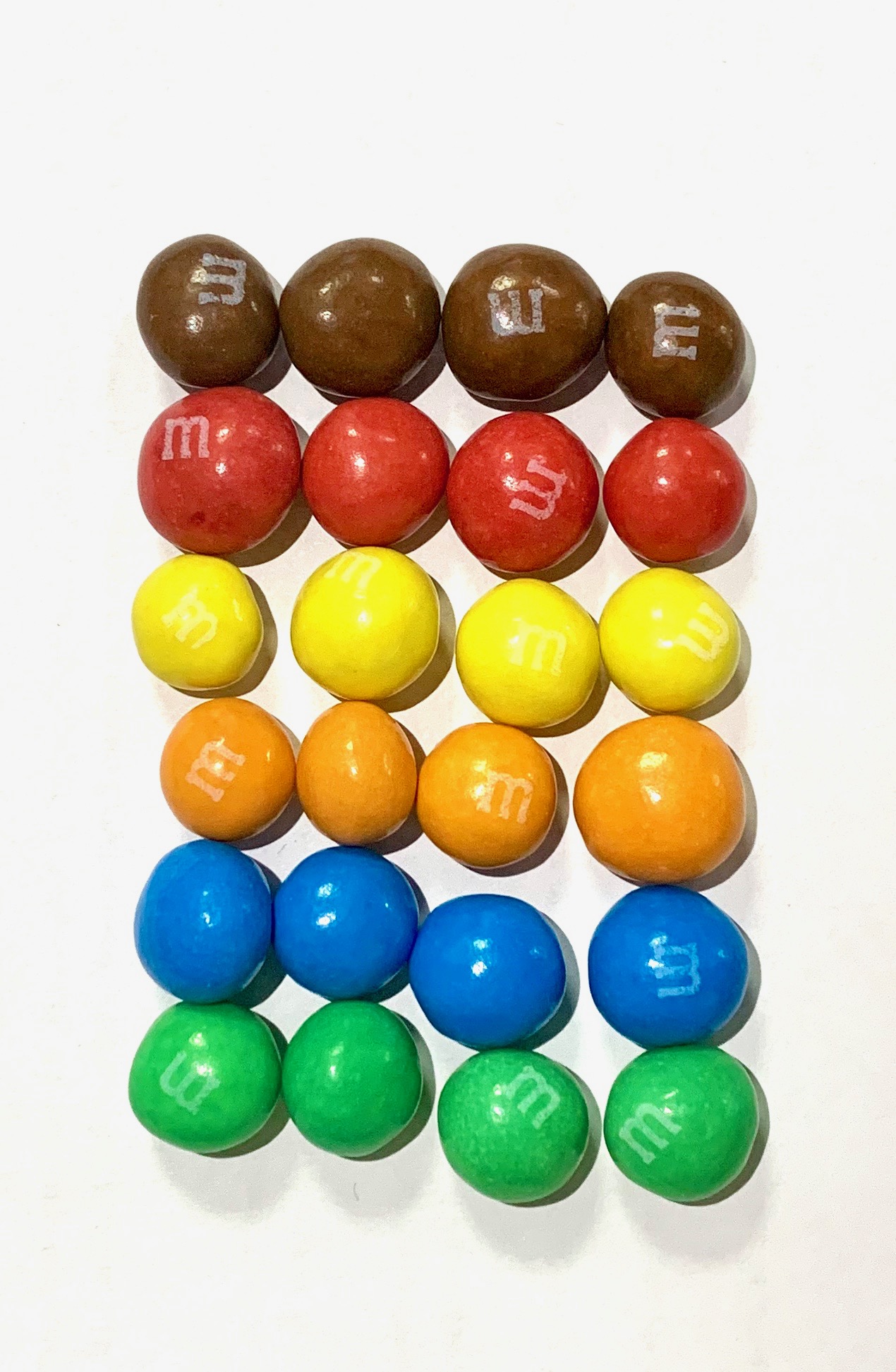

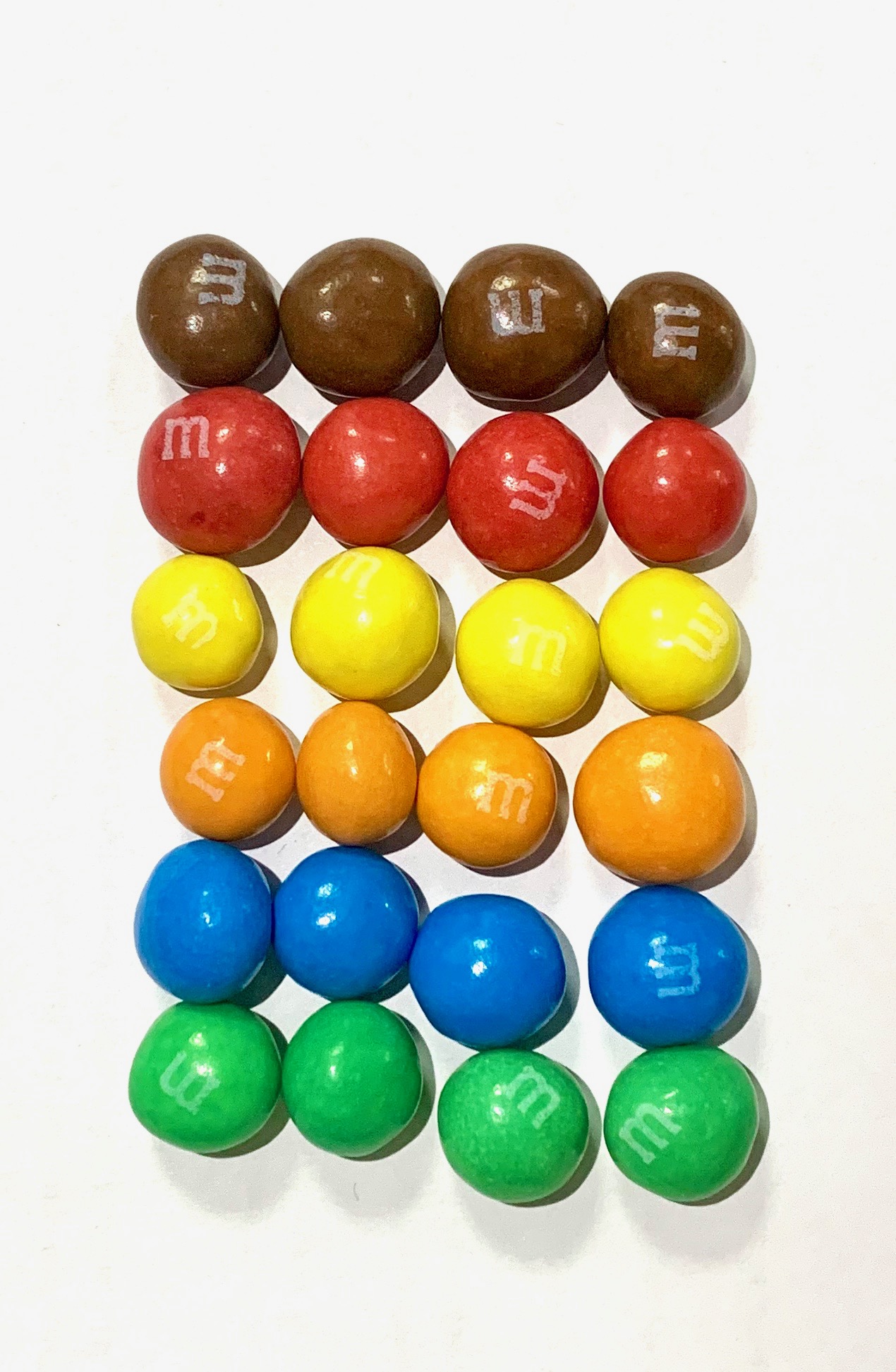

Distribution in pictures

Хороший ключ распределения:

Плохо подобранный ключ распределения:

Случайное распределение:

Плохо подобранный ключ распределения:

Случайное распределение:

The type of fields used in join must be the same in all tables.

Important: do not use as the distribution fields those that are used to filter queries in where, since in this case the load during the query will also not be distributed evenly.

2. Partitioning

Partitioning allows you to divide large tables, such as facts , into logically separated pieces. Greenplum physically divides your table into separate tables, each of which is divided into segments based on the settings from p. 1.

Tables should be divided into sections logically, for this purpose, select the field that is often used in the where block. In fact tables this will be the period. Thus, with proper access to the table in queries, you will work only with part of the entire large table.

In general, partitioning is a fairly well-known topic, and I wanted to emphasize that you should not choose the same field for partitioning and distribution. This will lead to the fact that the request will be executed entirely on one segment.

It’s time to go, actually, to the requests. The request will be executed on segments according to a specific plan :

3. The optimizer

Greenplum has two optimizers, the built-in legacy optimizer and the third-party Orca optimizer: GPORCA - Orca - Pivotal Query Optimizer.

Enable GPORCA on request:

set optimizer = on;As a rule , the GPORCA optimizer is better than the built-in. It works more adequately with subqueries and CTE (more details here ).

Made a call to a large table in CTE with maximum data filtering (do not forget about partition pruning) and an explicitly specified list of fields - it works very well.

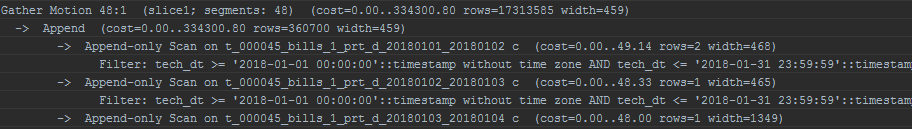

It slightly modifies the query plan, for example, it displays scanned partitions differently:

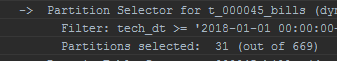

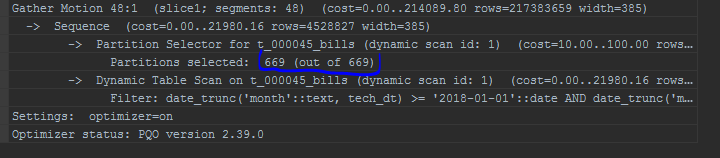

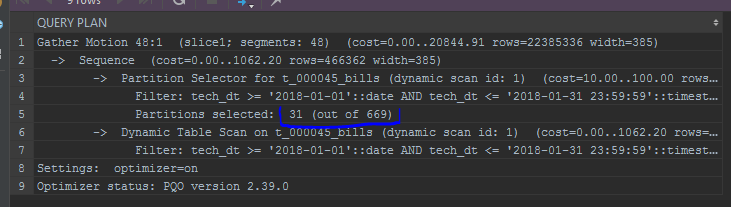

Standard optimizer:

Orca:

GPORCA also allows updating partition / distribution fields. Although there are situations when the built-in optimizer performs better. A third-party optimizer is very demanding on statistics, it is important not to forget to analyze .

No matter how good the optimizer, a poorly written query will not even stretch Orca:

4. Manipulations with fields in the where block or join conditions

It is important to remember that the function applied to the filter field or the conditions of the join is applied to each record.

In the case of the partitioning field (for example, date_trunc to the partitioning field - date), even GPORCA cannot work out correctly in this case, clipping partitions will not work.

-- функция к полю партиционированияset optimizer = on;

explainselect *

from edw_ods.t_000045_bills c

where date_trunc('month',tech_dt) betweento_date('20180101', 'YYYYMMDD') andto_date('20180101', 'YYYYMMDD') + interval'1 month - 1 second'

;

-- без преобразования поля партиционированияset optimizer = on;

explainselect *

from edw_ods.t_000045_bills c

where tech_dt betweento_date('20180101', 'YYYYMMDD') andto_date('20180101', 'YYYYMMDD') + interval'1 month - 1 second'

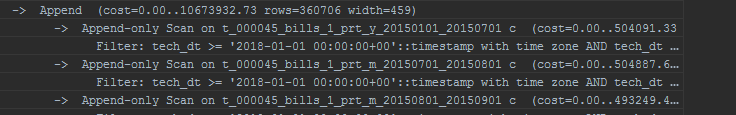

I also draw attention to the display of partitions. The built-in optimizer will display partitions in a list:

With care, apply functions to constants in the same partition filters. An example is the same date_trunc:

date_trunc('month',to_date($p_some_dt, 'YYYYMMDD'))

GPORCA will completely cope with such a feint and will work correctly, the standard optimizer will no longer cope. However, by making an explicit type conversion, you can make it work:

date_trunc('month',to_date($p_some_dt, 'YYYYMMDD'))::timestamp without time zone

And if everything is done wrong?

5. Motions

Another type of operation that can be observed in the query plan is motions. So marked the movement of data between segments:

- Gather motion - will be displayed in almost every plan, means combining the results of query execution from all segments into one stream (usually to the master).

Two tables, distributed by one key, which is used for a join, perform all operations on segments, without moving data. Otherwise, Broadcast motion or Redistribution motion occurs: - Broadcast motion - each segment sends its copy of the data to other segments. In an ideal situation, Broadcast occurs only for small tables.

- Redistribution motion - to join large tables distributed across different keys, redistribution is performed to make connections locally. For large tables, this can be quite an expensive operation.

Broadcast and Redistribution are quite disadvantageous operations. They are executed every time the request is run. It is recommended to avoid them. Having seen such points in the query plan, it is worth paying attention to the distribution keys. Also, distinct and union operations are the cause of motions.

This list is not exhaustive and is based mainly on the experience of the author. Could not find everything at once on the Internet at the time. Here I tried to identify the most critical factors affecting the performance of the request, and to understand why and why this is happening.

This article was prepared by the Rostelecom data management team