Back to microservices with Istio. Part 1

Translator's note: Service meshes have become a relevant solution in modern infrastructure for applications built on a microservice architecture. Although many DevOps engineers have heard of Istio, it is a fairly new product, and because it is so comprehensive in the features it provides, it can take considerable time to get acquainted with. German engineer Rinor Maloku, who is responsible for cloud computing for large customers at the telecommunications company Orange Networks, has written a wonderful series of articles that let you dive into Istio quickly and deeply. He begins with what Istio can do and how you can quickly see it with your own eyes.

Istio is an open source project developed in collaboration by teams from Google, IBM and Lyft. It addresses the difficulties that arise in microservice-based applications, such as:

- Traffic management: timeouts, retries, load balancing;

- Security: authentication and authorization of the end user;

- Observability: tracing, monitoring, logging.

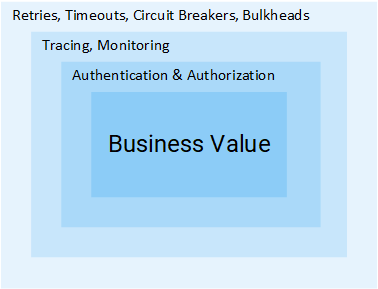

All of these can be solved at the application level, but then your services stop being “micro”. All the extra effort spent on these problems is a waste of company resources that could be used directly for business value. Consider an example:

Project Manager: How long will it take to add a feedback feature?

Developer: Two sprints.

PM: What?.. It's just CRUD!

Dev: Building the CRUD is the easy part, but we still need to authenticate and authorize users and services. Since the network is unreliable, we will need to implement retries as well as the circuit breaker pattern in the clients. And to make sure the whole system does not go down, we will need timeouts and bulkheads (both patterns are described later in the article; translator's note). And in order to detect problems, we will need monitoring, tracing, [...]

PM: Oh, then let's just put this feature into the Product service.

I think the idea is clear: the number of steps and the effort required to add a single service is huge. In this article, we will look at how Istio removes all of the complexity mentioned above (which has nothing to do with business logic) from the services.

Note: This article assumes that you have working knowledge of Kubernetes. If not, I recommend reading my introduction to Kubernetes first and only then continuing with this material.

The idea behind Istio

In a world without Istio, one service makes direct requests to another, and in the event of a failure, the service must process it itself: make a new attempt, provide a timeout, open a circuit breaker, etc.

Network traffic in Kubernetes

Istio, by contrast, offers a specialized solution that is completely separated from the services and works by intercepting network communication. In this way it implements:

- Fault tolerance: based on the status code in the response, it understands whether the request has failed and retries it.

- Canary rollouts: sends only a fixed percentage of requests to the new version of the service.

- Monitoring and metrics: how long did the service take to respond?

- Tracing and observability: adds special headers to each request and traces them across the cluster.

- Security: extracts the JWT token, authenticates and authorizes users.
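As an illustration of the canary idea from the list above, here is a minimal Python sketch of percentage-based routing. It is not how Istio implements traffic splitting internally; the version names `v1` and `v2`, the 90/10 split, and the function `pick_version` are all assumptions made up for this example.

```python
import random
from collections import Counter


def pick_version(weights, rng):
    """Pick a service version at random, proportionally to its weight.

    `weights` maps a version name to its share of traffic (in percent).
    """
    versions = list(weights)
    return rng.choices(versions, weights=[weights[v] for v in versions], k=1)[0]


# Hypothetical split: 90% of requests stay on v1, 10% go to the canary v2.
weights = {"v1": 90, "v2": 10}
rng = random.Random(42)  # fixed seed so that the run is reproducible
counts = Counter(pick_version(weights, rng) for _ in range(10_000))
print(counts)
```

Over many requests the share of `v2` approaches 10%, while each individual request still lands on exactly one version.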

These are just a few of the features (really, only a few!), enough to intrigue you. Now let's dive into the technical details!

Istio architecture

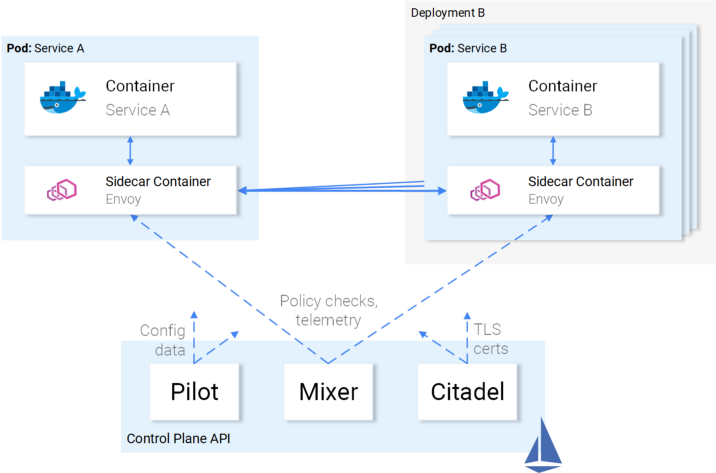

Istio intercepts all network traffic and applies a set of rules to it by injecting a smart proxy into each pod in the form of a sidecar container. The proxies that enable all these features form the Data Plane, and they can be configured dynamically through the Control Plane.

Data plane

The proxies injected into the pods allow Istio to easily meet the requirements we need. For example, let's look at the retry and circuit breaker features.

How retries and circuit breaking are implemented in Envoy

To summarize:

- Envoy (meaning the proxy in the sidecar container, which is also distributed as a standalone product; translator's note) sends a request to the first instance of service B, and the request fails.

- The Envoy sidecar retries the request. (1)

- The failed request is returned to the proxy that called it.

- This opens the circuit breaker, and subsequent requests are sent to the next instance of the service. (2)

This means that you don't have to pull in yet another retry library, and you don't have to write your own implementation of circuit breaking and service discovery in programming language X, Y or Z. All this and much more is available out of the box in Istio and requires no changes to the code.
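To make the retry and circuit breaker steps above more tangible, here is a toy Python model of a proxy in front of two replicas. It is only a sketch under stated assumptions: the classes `Instance` and `SidecarProxy`, the parameters `max_retries` and `failure_threshold`, and the replica names are hypothetical, and real Envoy behavior is richer (for example, ejected hosts are let back in after a while, which is not modeled here).

```python
class Instance:
    """A stand-in for one replica of service B (hypothetical, for illustration)."""

    def __init__(self, name, healthy=True):
        self.name = name
        self.healthy = healthy

    def handle(self, request):
        # A failing replica answers 503, a healthy one answers 200.
        if self.healthy:
            return 200, f"{self.name} handled {request}"
        return 503, ""


class SidecarProxy:
    """Toy proxy: retries failed calls and ejects replicas that keep failing."""

    def __init__(self, instances, max_retries=2, failure_threshold=1):
        self.instances = instances
        self.max_retries = max_retries
        self.failure_threshold = failure_threshold
        self.failures = {i.name: 0 for i in instances}
        self.ejected = set()  # replicas whose circuit is open

    def call(self, request):
        attempts = 0
        for instance in self.instances:
            if instance.name in self.ejected:
                continue  # circuit open: do not send traffic here
            if attempts > self.max_retries:
                break  # the first try plus max_retries retries are used up
            attempts += 1
            status, body = instance.handle(request)
            if status < 500:
                self.failures[instance.name] = 0
                return status, body
            # Failure: count it and open the circuit once the threshold is hit.
            self.failures[instance.name] += 1
            if self.failures[instance.name] >= self.failure_threshold:
                self.ejected.add(instance.name)
        return 503, ""


proxy = SidecarProxy([Instance("b-1", healthy=False), Instance("b-2")])
first = proxy.call("req-1")   # fails on b-1, is retried on b-2
second = proxy.call("req-2")  # b-1 is skipped, goes straight to b-2
print(first, second, proxy.ejected)
```

The point of the sketch is that all of this state lives in the proxy: neither the calling service nor the replicas contain any retry or circuit breaker logic.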

Excellent! Now you may want to set sail with Istio, but you still have some doubts and open questions. If this is a one-size-fits-all solution, your suspicion is understandable: in reality, such solutions usually turn out to fit no one.

And finally, you ask: “Is it customizable?”

Now you are ready for the sea voyage, so let's get acquainted with the Control Plane.

Control plane

It consists of three components: Pilot, Mixer, and Citadel, which work together to configure the Envoys to route traffic, apply policies, and collect telemetry data. Schematically, it all looks like this:

Interaction of the Control Plane with the Data Plane

The Envoys (i.e. the data plane) are configured using Kubernetes CRDs (Custom Resource Definitions) defined by Istio specifically for this purpose. For you, this means they are just another Kubernetes resource with familiar syntax. Once created, such a resource is picked up by the control plane and applied to the Envoys.

How services relate to Istio

We have described how Istio relates to services, but not the reverse: how do services relate to Istio?

Honestly, services know about Istio's presence about as well as fish know about water when they ask themselves: “What is water, anyway?”

Illustration by Victoria Dimitrakopoulos: “How do you like the water?” “What is water, anyway?”

Thus, you can take a working cluster, deploy the Istio components, and the services running in it will keep working; after removing those components, everything will still work fine. Of course, you will then lose the capabilities that Istio provides.

Enough theory - let's put this knowledge into practice!

Istio in practice

Istio requires a Kubernetes cluster with at least 4 vCPUs and 8 GB of RAM available. To bring up a cluster quickly and follow the instructions in this article, I recommend Google Cloud Platform, which offers new users $300 in free credit.

After you create a cluster and configure access to Kubernetes through the command-line tool, you can install Istio using the Helm package manager.

Helm installation

Install the Helm client on your computer as described in the official documentation. We will use it to generate the templates for installing Istio in the next section.

Install Istio

Download the Istio resources from the latest release (the original author's link to version 1.0.5 has been changed to the current one, 1.0.6; translator's note) and extract the contents into a directory, which I will refer to as [istio-resources] from now on.

To make Istio resources easy to identify, create the namespace istio-system in the K8s cluster:

    $ kubectl create namespace istio-system

Complete the installation by going to the [istio-resources] directory and running the command:

    $ helm template install/kubernetes/helm/istio \
      --set global.mtls.enabled=false \
      --set tracing.enabled=true \
      --set kiali.enabled=true \
      --set grafana.enabled=true \
      --namespace istio-system > istio.yaml

This command writes the key components of Istio to the file istio.yaml. We customized the default template by setting the following parameters:

- global.mtls.enabled is set to false (i.e. mTLS authentication is disabled; translator's note) to simplify our introduction;

- tracing.enabled enables request tracing with Jaeger;

- kiali.enabled installs Kiali in the cluster to visualize services and traffic;

- grafana.enabled installs Grafana to visualize the collected metrics.

Apply the generated resources with the command:

    $ kubectl apply -f istio.yaml

The installation of Istio in the cluster is complete! Wait until all pods in the istio-system namespace are in the Running or Completed state by running the command below:

    $ kubectl get pods -n istio-system

Now we are ready to continue to the next section, where we will get the application up and running.

Sentiment Analysis Application Architecture

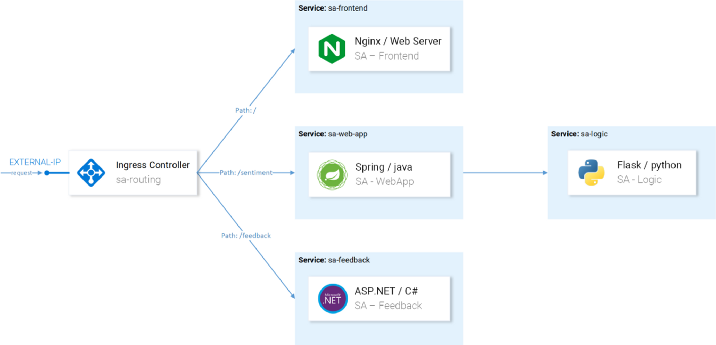

Let's use the example microservice application Sentiment Analysis from the already mentioned introductory article on Kubernetes. It is complex enough to show Istio's capabilities in practice.

The application consists of four microservices:

- The SA-Frontend service, which serves the ReactJS frontend application;

- The SA-WebApp service, which handles Sentiment Analysis requests;

- The SA-Logic service, which performs the sentiment analysis itself;

- The SA-Feedback service, which receives feedback from users about the accuracy of the analysis.

In this diagram, in addition to services, we also see the Ingress Controller, which in Kubernetes routes incoming requests to the corresponding services. Istio uses a similar concept within the Ingress Gateway, details of which will follow.

Launching an application with a proxy from Istio

For the further operations mentioned in the article, clone the istio-mastery repository . It contains the app and manifests for Kubernetes and Istio.

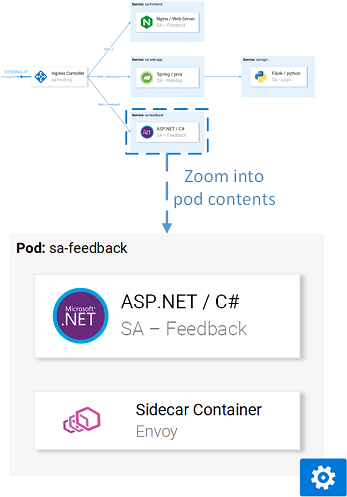

Sidecar insert

Insertion can be done automatically or manually . To automatically insert sidecar containers, you need to set the label namespace

istio-injection=enabled, which is done by the following command:$ kubectl label namespace default istio-injection=enabled

namespace/default labeledNow every pod that will be deployed in the default namespace (

default) will receive its sidecar-container. To verify this, let's install a test application by going to the root directory of the repository [istio-mastery]and running the following command:$ kubectl apply -f resource-manifests/kube

persistentvolumeclaim/sqlite-pvc created

deployment.extensions/sa-feedback created

service/sa-feedback created

deployment.extensions/sa-frontend created

service/sa-frontend created

deployment.extensions/sa-logic created

service/sa-logic created

deployment.extensions/sa-web-app created

service/sa-web-app createdHaving expanded the services, we will verify that pods have two containers (with the service itself and its sidecar), by executing the command

kubectl get podsand making sure that a READYvalue is indicated under the column 2/2, symbolizing that both containers are running:$ kubectl get pods

NAME READY STATUS RESTARTS AGE

sa-feedback-55f5dc4d9c-c9wfv 2/2 Running 0 12m

sa-frontend-558f8986-hhkj9 2/2 Running 0 12m

sa-logic-568498cb4d-2sjwj 2/2 Running 0 12m

sa-logic-568498cb4d-p4f8c 2/2 Running 0 12m

sa-web-app-599cf47c7c-s7cvd 2/2 Running 0 12mVisually, it

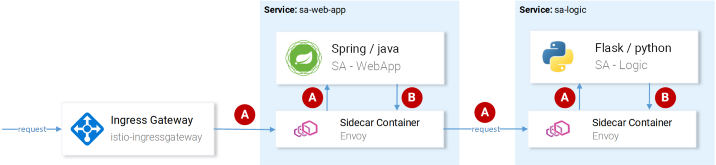

looks like this: Envoy proxy in one of the pods.
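If you would rather not eyeball the READY column, the check can be automated. Below is a small Python sketch that parses the text printed by `kubectl get pods` and reports pods that do not show 2/2. The function name `pods_missing_sidecar` is made up for this example, and the parsing assumes the default whitespace-separated table layout shown above.

```python
def pods_missing_sidecar(kubectl_output, expected_containers=2):
    """Return names of pods whose READY column is not N/N with N == expected."""
    bad = []
    lines = kubectl_output.strip().splitlines()
    for line in lines[1:]:  # skip the header row
        if not line.strip():
            continue
        name, ready = line.split()[:2]
        running, total = (int(x) for x in ready.split("/"))
        if running != total or total != expected_containers:
            bad.append(name)
    return bad


sample = """\
NAME                           READY   STATUS    RESTARTS   AGE
sa-feedback-55f5dc4d9c-c9wfv   2/2     Running   0          12m
sa-frontend-558f8986-hhkj9     2/2     Running   0          12m
sa-logic-568498cb4d-2sjwj      2/2     Running   0          12m
sa-logic-568498cb4d-p4f8c      2/2     Running   0          12m
sa-web-app-599cf47c7c-s7cvd    2/2     Running   0          12m
"""
print(pods_missing_sidecar(sample))
```

A pod reported as 1/1 would typically mean it was created before the namespace was labeled, so no sidecar was injected into it.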

Now that the application is up and running, we need to allow incoming traffic to come to the application.

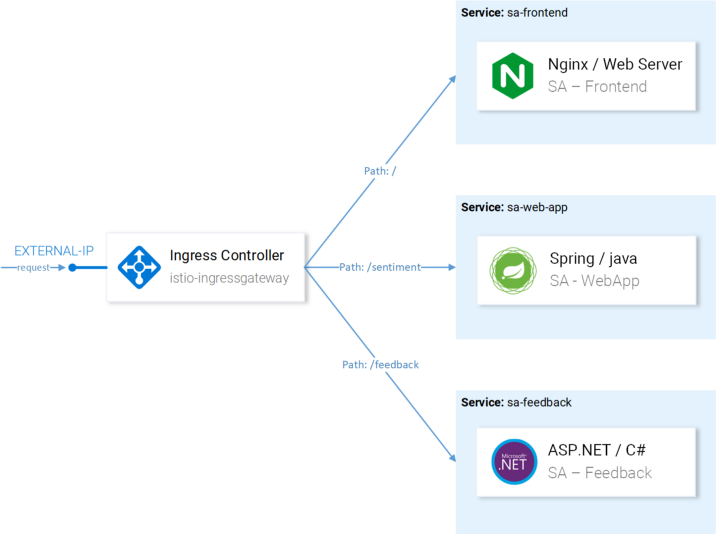

Ingress gateway

The best practice for this (allowing traffic into the cluster) is to use the Ingress Gateway in Istio, which sits at the “edge” of the cluster and lets you apply Istio features such as routing, load balancing, security, and monitoring to incoming traffic.

The Ingress Gateway component and the service that exposes it externally were installed into the cluster during the Istio installation. To find out the external IP address of the service, run:

    $ kubectl get svc -n istio-system -l istio=ingressgateway
    NAME                   TYPE           CLUSTER-IP     EXTERNAL-IP
    istio-ingressgateway   LoadBalancer   10.0.132.127   13.93.30.120

We will keep accessing the application through this IP (I will refer to it as EXTERNAL-IP), so for convenience let's store the value in a variable:

    $ EXTERNAL_IP=$(kubectl get svc -n istio-system \
      -l app=istio-ingressgateway \
      -o jsonpath='{.items[0].status.loadBalancer.ingress[0].ip}')

If you try to open this IP in a browser now, you will get a Service Unavailable error, because by default Istio blocks all incoming traffic until a Gateway is defined.
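The jsonpath expression above walks a fixed path through the service list. The same lookup can be sketched in Python against the JSON that `kubectl get svc ... -o json` prints. The function name `external_ip` and the trimmed sample document are assumptions for illustration; only the fields on the jsonpath are included.

```python
import json


def external_ip(svc_list_json):
    """Return the first load-balancer IP from `kubectl get svc -o json` output, or None."""
    data = json.loads(svc_list_json)
    for item in data.get("items", []):
        ingress = item.get("status", {}).get("loadBalancer", {}).get("ingress") or []
        for entry in ingress:
            if "ip" in entry:
                return entry["ip"]
    return None


# Trimmed, hypothetical sample containing only the fields used by the lookup.
sample = """
{
  "items": [
    {
      "metadata": {"name": "istio-ingressgateway"},
      "status": {"loadBalancer": {"ingress": [{"ip": "13.93.30.120"}]}}
    }
  ]
}
"""
print(external_ip(sample))
```

Unlike the one-line jsonpath, this version returns `None` instead of failing while the load balancer is still being provisioned and the `ingress` list is absent.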

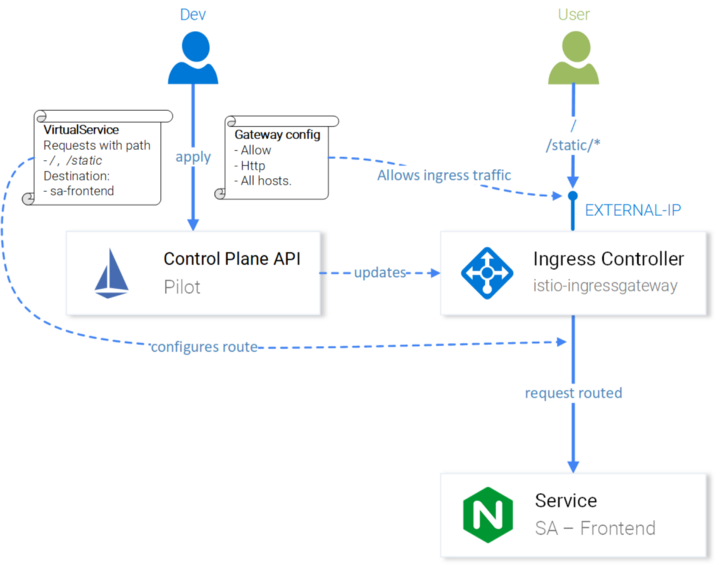

Gateway Resource

A Gateway is a CRD (Custom Resource Definition) in Kubernetes that becomes available after Istio is installed in the cluster. It makes it possible to specify the ports, protocol, and hosts for which we want to allow incoming traffic.

In our case, we want to allow HTTP traffic on port 80 for all hosts. This is done with the following definition (http-gateway.yaml):

    apiVersion: networking.istio.io/v1alpha3
    kind: Gateway
    metadata:
      name: http-gateway
    spec:
      selector:
        istio: ingressgateway
      servers:
      - port:
          number: 80
          name: http
          protocol: HTTP
        hosts:
        - "*"

This configuration needs no explanation except for the selector istio: ingressgateway. With this selector, we specify which Ingress Gateway the configuration applies to. In our case, it is the Ingress Gateway controller that is installed with Istio by default. The configuration is applied with the following command:

    $ kubectl apply -f resource-manifests/istio/http-gateway.yaml
    gateway.networking.istio.io/http-gateway created

Now the gateway allows access to port 80 but has no idea where to route the requests. For that we need VirtualServices.
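To illustrate what the `servers` entries of a Gateway express, here is a simplified Python sketch of the admission decision: a request is let in if some server entry listens on its port and accepts its host. The functions `host_matches` and `gateway_admits` are hypothetical names, and the matching rules are a deliberately reduced model, not the gateway's actual implementation.

```python
def host_matches(pattern, host):
    """Match a host entry: "*" matches anything, "*.example.com" matches subdomains."""
    if pattern == "*":
        return True
    if pattern.startswith("*."):
        return host.endswith(pattern[1:])
    return pattern == host


def gateway_admits(servers, port, host):
    """Return True if any server entry listens on `port` and accepts `host`."""
    return any(
        s["port"]["number"] == port and any(host_matches(p, host) for p in s["hosts"])
        for s in servers
    )


# The `servers` section of http-gateway, written as Python data.
servers = [{"port": {"number": 80, "name": "http", "protocol": "HTTP"}, "hosts": ["*"]}]

print(gateway_admits(servers, 80, "13.93.30.120"))
print(gateway_admits(servers, 443, "13.93.30.120"))
```

With the single entry from http-gateway, any host is accepted on port 80, and nothing is accepted on other ports.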

VirtualService Resource

A VirtualService tells the Ingress Gateway how to route the requests that are allowed into the cluster.

Requests to our application that come via http-gateway must be sent to the sa-frontend, sa-web-app and sa-feedback services:

Routes that must be configured with VirtualServices

Consider the requests that must be sent to SA-Frontend:

- An exact match on the path / should be sent to SA-Frontend to get index.html;

- Paths with the prefix /static/* must be sent to SA-Frontend to get the static files used by the frontend, such as CSS and JavaScript;

- Paths matching the regular expression '^.*\.(ico|png|jpg)$' must be sent to SA-Frontend, since these are the images displayed on the page.

This is implemented by the following configuration (sa-virtualservice-external.yaml):

    apiVersion: networking.istio.io/v1alpha3
    kind: VirtualService
    metadata:
      name: sa-external-services
    spec:
      hosts:
      - "*"
      gateways:
      - http-gateway                      # 1
      http:
      - match:
        - uri:
            exact: /
        - uri:
            exact: /callback
        - uri:
            prefix: /static
        - uri:
            regex: '^.*\.(ico|png|jpg)$'
        route:
        - destination:
            host: sa-frontend             # 2
            port:
              number: 80

Important points:

- This VirtualService applies to requests coming through http-gateway;

- destination defines the service to which the requests are sent.
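The three kinds of URI match used in this VirtualService (exact, prefix, regex) can be mimicked in a few lines of Python. This is only a sketch for reasoning about which paths reach SA-Frontend: the names `RULES`, `matches`, and `route` are made up here, and the regex engine used by the proxy may differ from Python's `re` in details.

```python
import re

# The four match rules from the VirtualService above, in order.
RULES = [
    ("exact", "/"),
    ("exact", "/callback"),
    ("prefix", "/static"),
    ("regex", r"^.*\.(ico|png|jpg)$"),
]


def matches(kind, value, path):
    """Apply a single match rule to a request path."""
    if kind == "exact":
        return path == value
    if kind == "prefix":
        return path.startswith(value)
    if kind == "regex":
        return re.fullmatch(value, path) is not None
    raise ValueError(f"unknown match kind: {kind}")


def route(path):
    """Return the destination host for `path`, or None if no rule matches."""
    if any(matches(kind, value, path) for kind, value in RULES):
        return "sa-frontend"
    return None


for path in ["/", "/static/css/main.css", "/favicon.ico", "/sentiment"]:
    print(path, "->", route(path))
```

The rules inside one `match` list are alternatives, so a request is routed to sa-frontend as soon as any one of them applies. A path such as /sentiment matches none of them and is left to the other routes defined in the same file.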

Note: The configuration above is stored in the file sa-virtualservice-external.yaml, which also contains the routing settings for SA-WebApp and SA-Feedback; they are omitted here for brevity.

Apply the VirtualService by running:

    $ kubectl apply -f resource-manifests/istio/sa-virtualservice-external.yaml
    virtualservice.networking.istio.io/sa-external-services created

Note: When we apply Istio resources, the Kubernetes API Server raises an event that is received by the Istio Control Plane, after which the new configuration is applied to the Envoy proxies of every pod. The Ingress Gateway controller is just another Envoy configured by the Control Plane. In a diagram, it all looks like this:

Istio-IngressGateway configuration for request routing

The Sentiment Analysis application is now available at http://{EXTERNAL-IP}/. Don't worry if you get a Not Found status: sometimes it takes a little while for the configuration to take effect and for the Envoy caches to be updated. Before you continue, use the application for a bit to generate traffic (it is needed to make the following steps illustrative; translator's note).

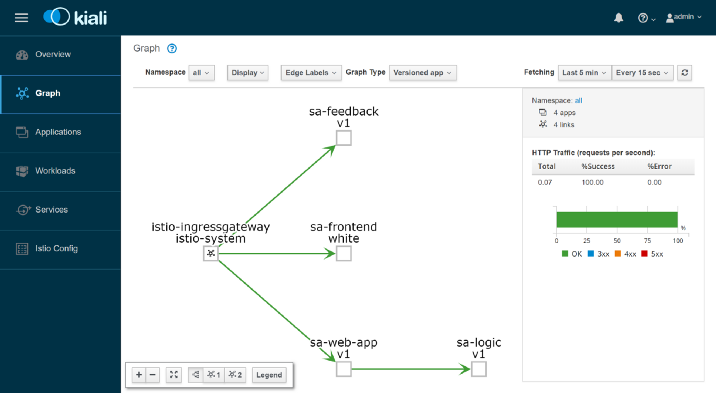

Kiali: Observability

To get to the Kiali administrative interface, run the following command:

    $ kubectl port-forward \
        $(kubectl get pod -n istio-system -l app=kiali \
        -o jsonpath='{.items[0].metadata.name}') \
        -n istio-system 20001

... and open http://localhost:20001/, logging in as admin / admin. Here you will find many useful features, for example checking the configuration of Istio components, visualizing services based on information collected from intercepted network requests, and getting answers to questions such as “Who is calling whom?” or “Which version of the service is failing?”. In short, explore what Kiali can do before moving on to visualizing metrics with Grafana.

Grafana: visualization of metrics

Metrics collected by Istio end up in Prometheus and are visualized with Grafana. To get to the Grafana admin interface, run the command below and then open http://localhost:3000/:

    $ kubectl -n istio-system port-forward \
        $(kubectl -n istio-system get pod -l app=grafana \
        -o jsonpath='{.items[0].metadata.name}') 3000

Click the Home menu in the upper left corner and select Istio Service Dashboard, then start with the sa-web-app service to look at the collected metrics:

What awaits us here is an empty and utterly boring view; management would never approve of this. Let's generate a small load with the following command:

    $ while true; do \
        curl -i http://$EXTERNAL_IP/sentiment \
        -H "Content-type: application/json" \
        -d '{"sentence": "I love yogobella"}'; \
        sleep .8; done

Now we have much nicer charts, and on top of that two wonderful tools, Prometheus for monitoring and Grafana for visualizing metrics, which let us learn about the performance, health, and improvement or degradation of the services over time.

Finally, let's look at request tracing across services.

Jaeger: tracing

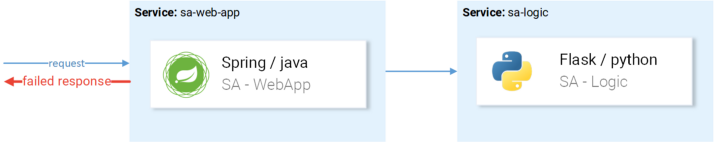

We need tracing because the more services we have, the harder it is to get to the cause of a failure. Let's look at a simple case from the picture below:

A typical example of a random failed request.

A request arrives and fails. What is the reason? The first service? Or the second? There are exceptions in both, so let's look at the logs of each. How often do you catch yourself doing this? Our work feels more like that of software detectives than developers...

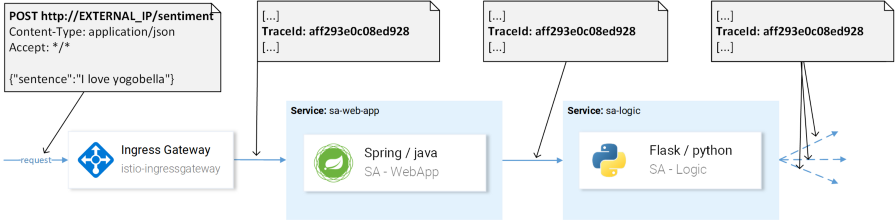

This is a widespread problem in microservices, and it is solved by distributed tracing systems, in which services pass a unique header to one another, after which this information is forwarded to the tracing system, where it is put together with the request data. Here is an illustration:

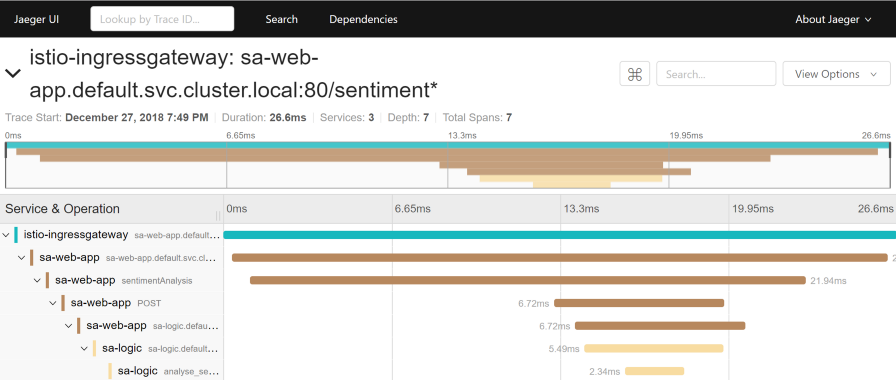

The TraceId is used to identify the request. Istio uses the Jaeger tracer, which implements the vendor-neutral OpenTracing API. You can access the Jaeger user interface with the following command:

    $ kubectl port-forward -n istio-system \
        $(kubectl get pod -n istio-system -l app=jaeger \
        -o jsonpath='{.items[0].metadata.name}') 16686

Now go to http://localhost:16686/ and select the sa-web-app service. If the service is not shown in the drop-down menu, generate some activity on the page and refresh the interface. After that, click the Find Traces button, which shows the latest traces; select any of them, and detailed information for the trace will be displayed:

This trace shows:

- The request arrives at istio-ingressgateway (this is the first interaction with one of the services, so a Trace ID is generated for the request), after which the gateway forwards the request to the sa-web-app service.

- In the sa-web-app service, the request is picked up by the Envoy sidecar, a child span is created (which is why we see it in the traces), and the request is forwarded to the sa-web-app container. (A span is a logical unit of work in Jaeger that has a name, a start time, and a duration. Spans can be nested and ordered. A directed acyclic graph of spans forms a trace. Translator's note.)

- Here the request is handled by the sentimentAnalysis method. These traces are generated by the application itself, i.e. they required changes to the code.

- From this point, a POST request to sa-logic is initiated. The Trace ID must be propagated from sa-web-app.

- …
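The notion of a span described in the list above (a name, a start time, a duration, nesting, and a shared trace identifier) can be modeled in a few lines of Python. This is a simplified sketch, not Jaeger's data model: the `Span` class, its field names, and the timings are assumptions made for illustration.

```python
import uuid
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Span:
    trace_id: str                    # shared by every span of one request
    name: str                        # operation name
    start: float                     # start time, in seconds
    duration: float                  # how long the operation took, in seconds
    parent_id: Optional[str] = None  # None for the root span
    span_id: str = field(default_factory=lambda: uuid.uuid4().hex[:16])

    def child(self, name, start, duration):
        """Create a nested span that belongs to the same trace."""
        return Span(self.trace_id, name, start, duration, parent_id=self.span_id)


# Hypothetical timings; the span names follow the steps listed above.
root = Span(uuid.uuid4().hex, "istio-ingressgateway", start=0.000, duration=0.050)
sidecar = root.child("sa-web-app (Envoy sidecar)", start=0.002, duration=0.046)
handler = sidecar.child("sentimentAnalysis", start=0.004, duration=0.040)

for span in (root, sidecar, handler):
    print(span.name, span.span_id, "parent:", span.parent_id)
```

Every span carries the same `trace_id`, and the `parent_id` links are what allow the tracing system to rebuild the tree you see in the Jaeger interface.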

Note: In step 4, the application must pick up the headers generated by Istio and pass them on to subsequent requests, as shown in the image below:

(A) Istio is responsible for forwarding the headers; (B) the services are responsible for the headers.

Istio does most of the work, since it generates the headers for incoming requests, creates new spans in each sidecar, and forwards them. However, without handling the headers inside the services, the full trace path of the request will be lost.

The following headers must be taken into account (forwarded):

    x-request-id
    x-b3-traceid
    x-b3-spanid
    x-b3-parentspanid
    x-b3-sampled
    x-b3-flags
    x-ot-span-context

This is a simple task, but to make it even easier many libraries already exist. For example, in the sa-web-app service the RestTemplate client forwards these headers if you simply add the Jaeger and OpenTracing libraries to its dependencies.

Note that the Sentiment Analysis application demonstrates implementations on Flask, Spring, and ASP.NET Core.
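For services that do not use such a library, forwarding the headers by hand is straightforward. The following framework-agnostic Python sketch copies the tracing headers from an incoming request into the headers of an outgoing one. The function name `tracing_headers` and all header values are hypothetical; the header names are the ones listed above.

```python
TRACING_HEADERS = (
    "x-request-id",
    "x-b3-traceid",
    "x-b3-spanid",
    "x-b3-parentspanid",
    "x-b3-sampled",
    "x-b3-flags",
    "x-ot-span-context",
)


def tracing_headers(incoming):
    """Copy the tracing headers from an incoming request's headers.

    Header names are compared case-insensitively; headers that are absent
    are simply skipped.
    """
    lowered = {name.lower(): value for name, value in incoming.items()}
    return {h: lowered[h] for h in TRACING_HEADERS if h in lowered}


# Hypothetical incoming headers, as a service behind the sidecar might see them.
incoming = {
    "Content-Type": "application/json",
    "X-Request-Id": "hypothetical-request-id",
    "X-B3-TraceId": "463ac35c9f6413ad",
    "X-B3-SpanId": "a2fb4a1d1a96d312",
    "X-B3-Sampled": "1",
}

# Headers for the outgoing call to the next service (for example, sa-logic).
outgoing = {"Content-Type": "application/json", **tracing_headers(incoming)}
print(outgoing)
```

The resulting dictionary can be passed as the headers of whatever HTTP client the service uses for its downstream call, so that the sidecar can attach the new span to the existing trace.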

Now that it is clear what we get out of the box (or almost “out of the box”), let's move on to fine-grained routing, network traffic management, security, and so on!

Translator's note: Read about this in the next part of Rinor Maloku's series on Istio, whose translation will appear on our blog in the near future. UPDATE (March 14): The second part has already been published.

PS from the translator

Read also in our blog:

- “Back to microservices with Istio”: part 2 (routing, traffic control) , part 3 (authentication and authorization) ;
