Insecure network: a flaw in the design

On October 29, 1969, the first attempt at communication between two remote computers was made.

David D. Clark, an MIT scientist whose aura of wisdom earned him the nickname “Albus Dumbledore,” remembers very well when he first encountered the dark side of the Internet. He was chairing a meeting of network engineers when news arrived of a dangerous computer worm, the first widespread virus, spreading across the network.

One of the engineers, who worked for a leading computer company, took responsibility for the security flaw that the worm exploited. In a shaky voice, the engineer said: “Damn, I thought I fixed this bug.”

But as the November 1988 attack began to disable thousands of computers and the bill for the damage began to run into the millions, it became clear that the problem was not one person's mistake. The worm used the very essence of the Internet (fast, open and unhindered connections) to carry malicious code over networks designed to transmit harmless files or emails.

Decades later, millions have been spent on computer security, yet the threats grow every year. Hackers have moved from simple attacks on computers to threats against the real economy: banks, retailers, government services, Hollywood studios, and from there ever closer to critical systems such as dams, power plants and airplanes.

In hindsight, this course of events seems inevitable, but it shocked those who gave us the network in its current form. The scientists who spent years developing the Internet could not imagine how popular and essential it would become. And no one imagined that it would become available to almost everyone, for both useful and harmful purposes.

“It's not that we didn't think about security,” Clark recalls. “We knew that there were untrustworthy people out there, and we believed that we could exclude them.”

It was a serious mistake. From an online community of several dozen researchers, the Internet has grown into a system to which 3 billion people have access. That is as many people as lived on the entire planet in 1960, when the first ideas about building a computer network were just beginning to appear.

Network developers focused on technical issues and the need for reliable and fast information transfer. They foresaw that the networks needed to be protected from potential intrusions or military threats, but not that the Internet needed to be protected from the users themselves, who at some point would begin to attack each other.

“We didn’t think about how someone might deliberately break the system,” said Vinton G. Cerf, a dapper but animated Google vice president who developed key components of the Internet in the 1970s and 1980s. “Now, of course, you can argue that we should have, but the task of getting this system running was non-trivial in itself.”

The people who stood at the origins of the network, the generation of its founders, bristle at claims that they could somehow have prevented its insecure state today. It is as if road designers were held responsible for highway robbery, or architects for theft in cities. They argue that online crime and aggression are manifestations of human nature that cannot be avoided, and that no technical means can defend against them.

“I think that since we don’t know the solution to these problems today, it’s just silly to think that we could have solved them 30 or 40 years ago,” said David H. Crocker, who began working on computer networks in the early 1970s and was involved in the development of email.

But the 1988 attack by the Morris worm, named after its author, Robert T. Morris, a student at Cornell University, was a wake-up call for the Internet's architects. They had done their work at a time when there were no smartphones, no Internet cafes, and not even widespread personal computers. The attack left them feeling both rage, because one of their own had harmed the project they had created, and anxiety, because the Internet had proved so vulnerable.

When NBC's “Today” show reported on the computer worm's rampage, it became clear that the Internet, along with its problems, had outgrown the ideal world of scientists and engineers, or, as Cerf put it, “a group of geeks who had no intention of destroying the network.”

But it was too late. The founders of the Internet no longer controlled it; no one did. People with bad intentions would soon discover that the Internet was perfect for their purposes, offering quick, easy and cheap ways to reach anyone and anything on the network. And soon that network would cover the whole planet.

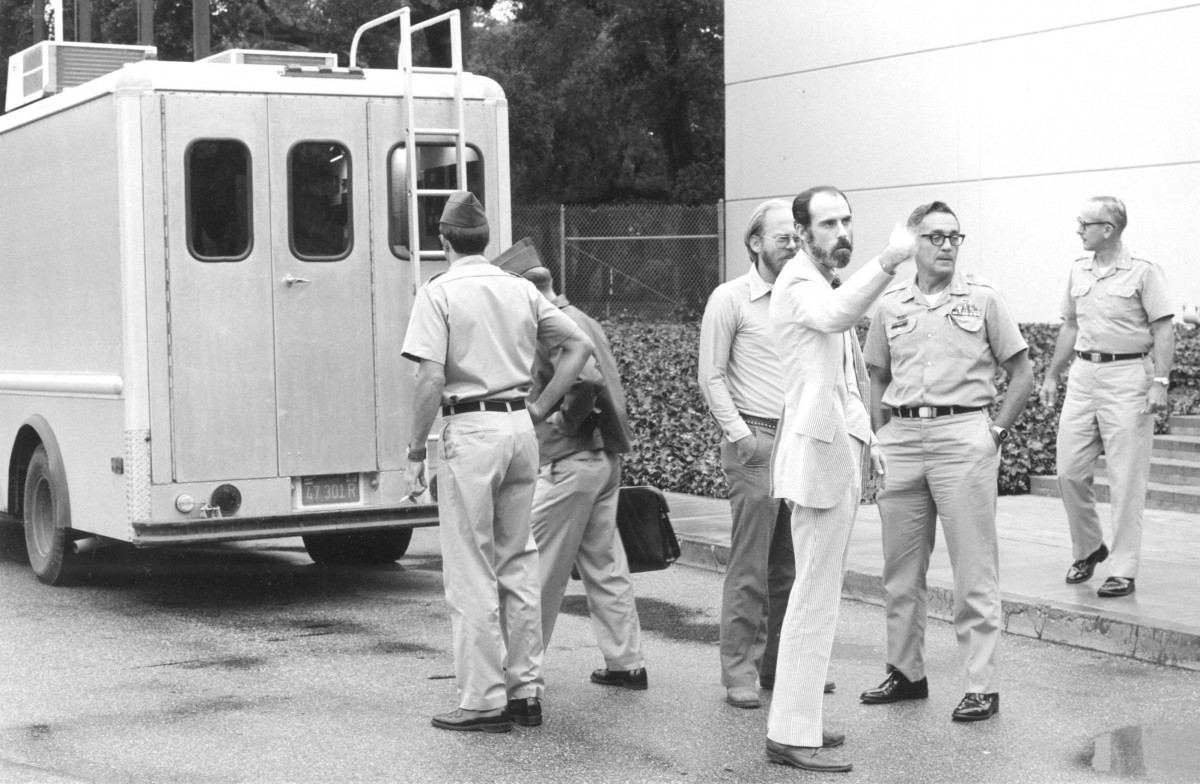

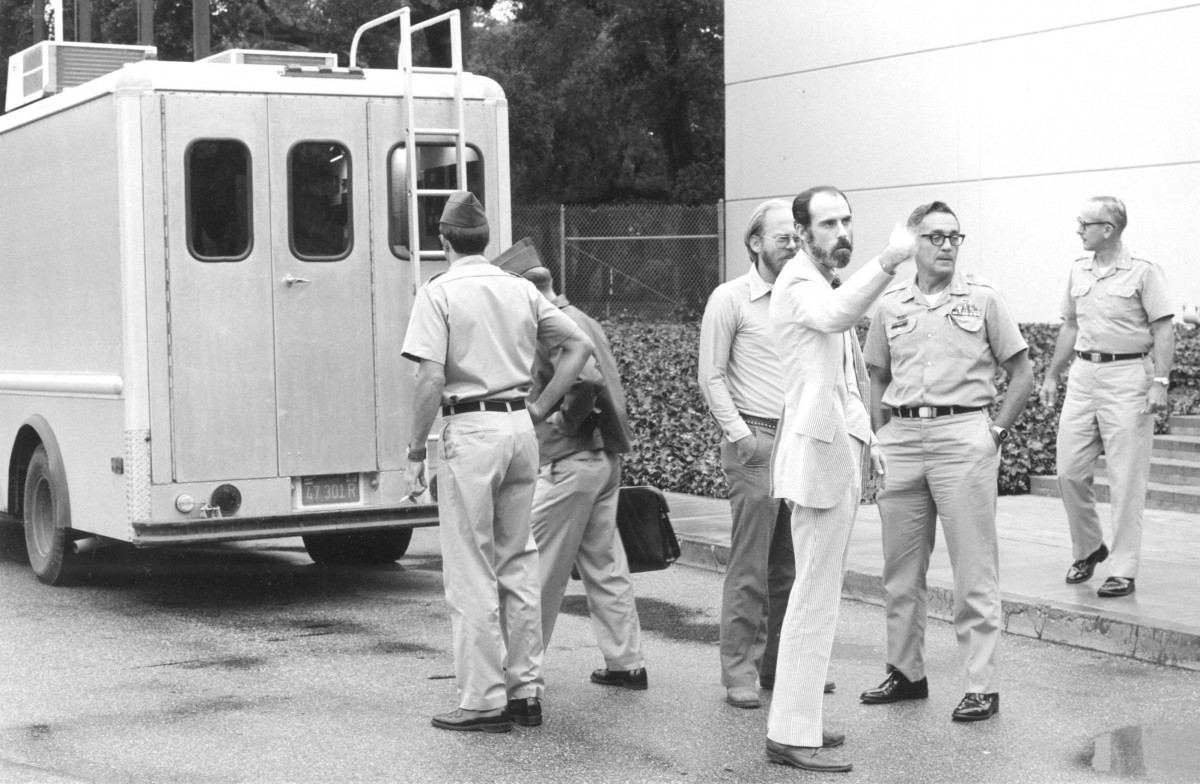

David Clark at MIT Lab

Nuclear war preparations

The idea behind the Internet was that messages could be divided into pieces, sent over the network in a series of transmissions, and reassembled at the destination, quickly and efficiently. Historians attribute the first ideas in this field to the Welsh scientist Donald W. Davies and the American engineer Paul Baran, who wanted to prepare his country for the possibility of nuclear war.

Baran described his ideas in a landmark 1960 paper, written while he was working at the Rand Corp. research center. He wrote that the threat of war hung over the planet, but that people had the power to do a great deal to minimize its consequences.

One of the tasks was to create a reliable, redundant communications system that could keep working after a Soviet bombing and allow survivors to help each other, maintain a democratic government and mount a counterattack. This, according to Baran, “would help the survivors of this massacre shake off the ashes and quickly restore the economy.”

Davies's vision was more peaceful. At that time, computers were large, expensive, room-sized behemoths. They needed to serve several users at the same time, but this meant keeping expensive telephone lines connected continuously, even through long periods of silence.

In the mid-1960s, Davies suggested that it would be simpler to cut data into pieces and send them back and forth in a continuous stream, allowing multiple users to share the same telephone line while having simultaneous access to a computer. Davies even built a small network in the UK to demonstrate his idea.

These two visions, one of war and the other of peace, worked together to carry the Internet from concept to prototype, and then to reality.

The main organization driving the development of the Internet was the United States Advanced Research Projects Agency (ARPA), created in 1958 in response to the Soviet launch of the first artificial Earth satellite and fueled by fears of falling behind in science.

Ten years later, ARPA began work on a breakthrough computer network, and hired scientists from the country's best universities. This group formed the collegial core of the founders of the Internet.

When the first links between universities in California and Utah went live in 1969, the goals were modest: it was purely a scientific research project. Users of ARPANET, the ancestor of the Internet, exchanged messages and files and gained remote access to computers.

According to technology historian Janet Abbate, it would have taken an extraordinary gift of foresight for the people present at the beginning of the Internet to understand the security problems that would arise years later, when the Internet took center stage in the economy, culture and conflicts of the whole world. On the Internet of the 1970s and 1980s there were not only few obvious threats but also little information worth stealing.

“People break into banks not because there is no security. They do it because that is where the money is,” says Abbate, author of the book “Inventing the Internet.” “They thought they were building a school, and it turned into a bank.”

Leonard Kleinrock next to the computer, the predecessor of routers

Enthusiasm for the work was fueled by the intellectual challenge of developing a technology that many predicted would fail. The Internet pioneers were deeply frustrated by AT&T's Bell telephone system, which they saw as an inflexible, expensive and monopolistic structure, and they wanted to avoid all of those qualities when creating their own network.

Baran, who died in 2011, once described a meeting with Bell engineers at which he tried to explain the concept of a digital network, only to be interrupted mid-sentence. “The old analog network engineer looked startled. He looked at his colleagues and rolled his eyes, as if not believing what he was hearing. After a pause, he said: ‘Son, here is how telephony actually works ...’” And he went on to explain how a carbon microphone works. “It was a conceptual impasse.”

But it was over lines owned by AT&T that ARPANET first came to life, carrying data between two Interface Message Processors (IMPs), telephone-booth-sized predecessors of modern routers. On October 29, 1969, one of them, located at UCLA, sent a message to the second, at the Stanford Research Institute more than 300 miles away. The task was to log in remotely, but only the two letters “LO” of “LOGIN” got through before the computer at Stanford crashed.

Leonard Kleinrock, a UCLA computer scientist and one of the first developers of network technologies, was at first discouraged by such an unsuccessful experiment, especially when compared with the phrase “That's one small step for man, one giant leap for mankind,” uttered during the first lunar landing several months earlier.

Later, however, Kleinrock decided that “LO” could be read as “Lo and behold,” a phrase just right for such a technological breakthrough. “Even on purpose, we could not have prepared a more concise, powerful and prophetic message than the one that happened by chance,” he said later.

ARPANET grew and soon connected computers at 15 locations across the country. But the main barriers were neither technology nor a lack of interest on the part of AT&T. It was simply not clear what such a network would be good for. File sharing was not in particular demand, and remote access to a computer was quite inconvenient at the time.

But it was very pleasant to talk with friends and colleagues over long distances. The first “killer application” was e-mail, which appeared in 1972. By the following year, it accounted for 75% of all network traffic.

The instant popularity of email foreshadowed how computer communication would take the place of more traditional means such as letters, telegraphs and telephones. And at the same time it would become the source of all kinds of security problems.

But in that era no one thought about such things; the concerns were building the network and proving that it was needed. At a three-day computer conference at the Washington Hilton in October 1972, the ARPA team held its first public demonstration of the network and a suite of applications, including an “artificial intelligence” game in which the remote computer played a psychotherapist, asking questions and sharing observations.

On the whole, the demonstration was a great success, but there was one hitch. Robert Metcalfe, a Harvard student who would later help create Ethernet and found 3Com, suddenly found that the system had crashed while he was demonstrating the network's capabilities.

It was down only briefly, but even that was enough to upset Metcalfe. And then he got angry when he saw a group of AT&T executives in identical pinstriped suits laughing at the failure.

“They were chuckling happily,” he says, recalling this early collision of telephony and computer networks. “They did not think about what a disturbing event it was. The crash seemed to confirm that the network was a toy.”

The rivalry took on a cartoonish quality as the “Netheads” squared off against the “Bellheads,” recalls Billy Brackenridge, a programmer who later got a job at Microsoft. “The Bellheads needed complete control, and the Netheads were anarchists.”

The reasons for this confrontation lay both in culture (the young against the establishment) and in technology. The telephone system of the day was said to have a “smart” core (automatic telephone exchanges and switches) and “dumb” edges: the simple telephones in every business and household. The Internet, by contrast, has a “dumb” core, since the network merely transmits data, and “smart” edges, that is, the computers under the control of users.

The “dumb” core made it impossible to address security issues centrally, but it made it easy for new users to connect. This system worked as long as the people connecting to it were colleagues with similar motives and a high degree of mutual trust. But it left the “edges” with the critical role of gatekeepers to the network.

“And so we have a system where security depends on the vigilance of individuals,” says Janet Abbate, a Virginia Tech historian. “It's kind of like safe sex. It turns out that the Internet is a dangerous place, and everyone has to protect themselves from what they might encounter there. There is a feeling that the Internet provider will not protect you. And the government will not protect you. You have to protect yourself.”

Few showed such vigilance in the ARPANET era. Anyone who had a login and password, whether officially issued or borrowed from a colleague, could log in to the network. All that was needed was access to a terminal and the telephone number of the desired computer.

Some warned of the emerging risks at the very dawn of the network. In December 1973, Metcalfe sent a formal warning to the ARPANET working group that the network was too easily accessible to outsiders.

“This would all be fun and games, and fodder for jokes at parties, if in recent weeks people who knew what they were risking had not crashed at least two servers under suspicious circumstances. And a third system’s wheel password was compromised. Two students from Los Angeles were to blame for this,” Metcalfe wrote. “We suspect that the number of security violations is actually much greater than we thought, and it is constantly growing.”

As the number of people with access to the network grew, so did disagreement about its purpose. Officially it was controlled by the Pentagon, but the military's attempts to impose order ran up against the resistance of an online community that gravitated toward experimentation and valued freedom. Unofficial communities, such as a science-fiction mailing list, flourished.

Tension among users grew as the 1980s began, followed by the 1990s with the World Wide Web and the 2000s with smartphones. The ever-expanding network brought together groups with opposing interests: musicians and listeners who wanted free music; people who wanted to communicate in private and governments intent on wiretapping; criminal hackers and their victims.

MIT scientist Clark calls these conflicts “tussles.” These tensions, which the creators of the Internet simply did not expect, have become the very essence of the Internet. “The original goal of launching and nurturing the Internet no longer applies,” Clark wrote in 2002. “Powerful players are creating today's online environment out of interests that contradict one another.”

Trouble was already looming in 1978, when a marketer from Digital Equipment Corp. sent hundreds of ARPANET users a message about an upcoming presentation of new computers. Internet historians consider this event the birth of spam.

This drew a terse, all-caps response from the Pentagon, which called the message a “FLAGRANT VIOLATION” of the rules. “APPROPRIATE MEASURES ARE BEING TAKEN TO PREVENT SIMILAR INCIDENTS IN THE FUTURE.”

But in parallel with these events, some users continued to defend the idea of an open Internet, which can serve many purposes, including commercial ones.

“Would an online dating service be frowned upon?” wrote Richard Stallman, an online freedom fighter. “I hope not. But even if it is, don't let that stop you from letting me know that such a service has opened.”

Steve Crocker, who was present at the origins of network technology, chairs the Internet Corporation for Assigned Names and Numbers (ICANN), a non-profit organization that oversees the assignment of web addresses.

In telephony, the line stays open for the duration of a conversation, and users pay by the minute. On the Internet, data is transmitted piece by piece, as capacity becomes available. These pieces are called packets, and the system that transmits them is called packet switching.

The result is something like a branching pneumatic tube system that can carry anything that fits into a capsule. The main task was to route each packet correctly and to keep track of which packets had been delivered successfully. Packets that did not arrive could then be resent, possibly along other routes, until a working route was found.
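
The idea of cutting a message into pieces, delivering them in any order, and resending whatever was lost can be illustrated with a short Python sketch. It is a toy model rather than a description of how ARPANET or TCP actually worked: the function names, the 8-byte packet size, the 30% loss rate and the simulated "network" are all assumptions made up for this illustration.

```python
import random

def split_into_packets(message, size):
    """Cut a message into (sequence number, payload) pairs of at most `size` bytes."""
    return [(seq, message[start:start + size])
            for seq, start in enumerate(range(0, len(message), size))]

def unreliable_send(packets, loss_rate, rng):
    """Hypothetical network: drops some packets and delivers the rest out of order."""
    delivered = [p for p in packets if rng.random() >= loss_rate]
    rng.shuffle(delivered)
    return delivered

def transfer(message, size=8, loss_rate=0.3, seed=1):
    """Send a message packet by packet, resending until every piece has arrived."""
    rng = random.Random(seed)          # fixed seed keeps the toy run repeatable
    packets = split_into_packets(message, size)
    received = {}                      # sequence number -> payload
    pending = packets
    while pending:
        for seq, payload in unreliable_send(pending, loss_rate, rng):
            received[seq] = payload
        # Anything not yet received is sent again on the next round.
        pending = [p for p in packets if p[0] not in received]
    # The sequence numbers let the receiver restore the original order.
    return b"".join(received[seq] for seq in range(len(packets)))
```

Calling `transfer(b"some message")` returns the original bytes even though individual packets are dropped and reordered along the way, because the receiver keeps track of sequence numbers and the sender repeats whatever is still missing.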

Although the technology demands great precision, it can work without any central oversight. The Pentagon monitored the network at first, but its supervision gradually faded away. Today, no US agency has the kind of control over the Internet that various countries exercise over telephony.

At first, the network ran on a simple protocol (a set of rules that allows different computers to work together). As the network grew, so did the number of users. Large universities connected their computers into local networks. Others used radio and even satellite links to carry the traffic.

A new protocol was needed to connect all these networks. At the request of ARPA (renamed DARPA in 1972), Cerf and his colleague Robert E. Kahn took on the task in the 1970s. The TCP/IP protocol they developed allowed any networks to communicate with one another, regardless of the hardware, software or computer languages used in their systems. But the transition from a limited ARPANET to a global network raised security concerns for Cerf and Kahn.

“We, of course, recognized the importance of security, but only from a military point of view, in the sense of operating in a hostile environment,” Cerf recalls. “I did not think about it from the point of view of commerce or ordinary people, only from the point of view of the military.”

One option would have been to build encryption into TCP/IP: encoding messages in such a way that only the recipient could read them. Although primitive encryption had been known for centuries, a new generation of computerized varieties began to appear in the 1970s.

A successful implementation of encryption would have made the network resistant to wiretapping and made it easier to establish who had sent a message. If the owner of a key is a person with a certain level of trust, then other messages using that key can be attributed to that person with great confidence. This holds even when the sender's real name is not used or is unknown.
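
The point about attributing messages to the holder of a key can be sketched in a few lines of Python. This is only an illustration under stated assumptions: it uses a shared-secret HMAC from Python's standard library rather than the public-key signatures a real system would more likely use, it authenticates messages without hiding their contents, and the `sign` and `verify` names and the sample key are invented for the example.

```python
import hashlib
import hmac

def sign(key, message):
    """Compute an authentication tag that only a holder of `key` can produce."""
    return hmac.new(key, message, hashlib.sha256).digest()

def verify(key, message, tag):
    """Check that `tag` matches `message` under `key`, using a constant-time comparison."""
    return hmac.compare_digest(sign(key, message), tag)

# Hypothetical usage: the key stands in for a sender's identity, not a real name.
key = b"illustrative shared secret"
tag = sign(key, b"meet at noon")
print(verify(key, b"meet at noon", tag))       # the untampered message checks out
print(verify(key, b"meet at midnight", tag))   # an altered message does not
```

A recipient who trusts the key can accept any message carrying a valid tag as coming from the key's holder, whoever that is, which is the kind of attribution the paragraph above describes.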

But in the years when Cerf and Kahn were developing TCP/IP, introducing ubiquitous encryption looked daunting. Encrypting and decrypting messages consumed a great deal of computing power, and it was not clear how encryption keys could be distributed securely.

Politics also played a part. The National Security Agency, which, according to Cerf, actively supported secure packet-switching technologies, strongly opposed allowing everyone to use encryption on public or commercial networks. Even the encryption algorithms themselves were considered a threat to national security, and they fell under an export ban along with other military technologies.

Steve Crocker, brother of David Crocker and a friend of Cerf who also worked on networking, said: “Back then the NSA still had the ability to visit a professor and say: ‘Do not publish this cryptography paper.’”

As the 1970s drew to a close, Cerf and Kahn abandoned their attempts to build cryptography into TCP/IP, having run into what they saw as insurmountable obstacles. And although it remained possible to encrypt traffic, the Internet turned into a system that operates in the open. Anyone with network access could monitor transmissions. And with encryption so little used, it was difficult to be sure that you were communicating with the right person.

Kleinrock says the result is a network that combines unprecedented availability, speed and efficiency with anonymity. “This is the perfect formula for the dark side.”

Vinton Cerf

TCP/IP became an engineering triumph, allowing completely different networks to work together. From the late 1970s to the early 1980s, DARPA sponsored a series of tests of the protocols' reliability and efficiency, and of their ability to work over different channels, from portable antennas to airplanes in flight.

There was a military component as well. Cerf had a “personal goal,” as he put it: to prove that Baran’s ideas about a communications system capable of surviving a nuclear catastrophe were realistic. Those ideas gave rise to a series of tests in which digital radios communicated with each other under increasingly difficult conditions.

The most serious test replicated Operation Looking Glass, a Cold War campaign that required at least one airborne command center to be in the air at all times, out of reach of a possible nuclear attack. As part of that operation, planes took off and landed on schedule at the Strategic Air Command airfield near Omaha for 29 years in a row.

Once, in the early 1980s, two Air Force tankers flew over the deserts of the Midwest while a specially equipped van drove along the highway below. Digital radios transmitted messages via TCP/IP, creating a temporary network of air and ground assets that stretched for hundreds of miles. It included a strategic command center located in an underground bunker.

To test the technology, the command center transmitted a mock file containing mock instructions for a nuclear counterattack. By voice over radio the process would have taken hours; over TCP/IP the transmission took less than a minute. This showed how easily computers could share information, even linking networks damaged by war.

The culmination of the engineers' work came on January 1, 1983, with the “global reboot.” Every computer that wanted to communicate with the network had to switch to TCP/IP. Gradually they all switched over, linking disparate networks into one new, global network.

So the internet was born.

Of course, there were practical barriers to entry, because of the high cost of computers and transmission lines. Most of the people online in the 1970s and 1980s were affiliated with universities, government agencies or very advanced technology companies. But the barriers fell, and a community emerged that was larger than any nation and at the same time uncontrollable.

The military built its own secure TCP/IP-based system and introduced encryption to protect it. But the civilian Internet took decades to adopt this basic security technology. The process is still incomplete; it accelerated only after the revelations of 2013, which made clear the extent of NSA wiretapping.

Encryption would not solve all modern problems, many of which stem from the open nature of the Internet and the enormous value of the information and systems connected to it. But it would reduce wiretapping and simplify the verification of information sources, two problems that remain unresolved.

Cerf says he wishes that he and Kahn had managed to build encryption into TCP/IP. “We would have had encryption on a more routine basis; it’s easy for me to imagine this alternative universe.”

But many dispute whether it would really have been possible to implement encryption at the beginning of the Internet. Its heavy computational demands could have made this a daunting task. Perhaps another network with different protocols would then have become dominant.

“I don’t think the Internet would have become so popular if they had introduced mandatory encryption,” writes Matthew Green, a cryptologist. “I think they made the right choice.”

The Internet, born as a Pentagon project, has developed into a global network without checkpoints, tariffs, police, armies, regulators or passports, and in general without any means of checking identity. National governments have gradually established themselves on the Internet to enforce laws, apply security measures and attack each other. But none of this is comprehensive.

The Morris worm exposed the flaws of a system with a dumb core and smart edges. Security became the responsibility of the edges as well. And that is where most hacks happen: they all travel from one computer to another. The Internet is not the site of the attacks but their delivery system.

The Morris worm also teaches that problems are difficult to solve even after they have been widely publicized. Robert Morris was convicted of a computer crime and sentenced to probation before going on to become an entrepreneur and a professor at MIT. But he had not wanted to bring down the Internet; he was simply experimenting with self-replicating programs and exploited a “buffer overflow” bug of a kind first identified back in the 1960s. In 1988 it was still a problem, and it is still used by today's hackers, 50 years after its discovery.

Many scientists are convinced that retrofitting security into a system designed in a past era is so complex that it would be better to wipe the slate clean and start again. DARPA has spent $100 million over the past five years on its Clean Slate program, which tackles problems that could not have been foreseen in the ARPANET era.

“The problem is that security is difficult, and people say, ‘Well, we'll add it later.’ But you can't add it later,” says Peter G. Neumann, one of the first computer experts, who has moderated the RISKS Digest forum on online security issues since 1985. “You can't add security to something that was not designed to be secure.”

Not everyone thinks the same. But the mixed legacy of the Internet, as amazing as it is insecure, continues to trouble the generation of its founders.

“I wish we could have done better,” says Steve Crocker, who now grapples with Internet issues as chairman of ICANN. “We could have done more, but almost everything we did was done in response to problems rather than in anticipation of future ones.”

Clark, the MIT scientist, often said the same thing. A few months before the Morris worm attack, he wrote a paper listing the priorities of the Internet's designers. In its description of seven important goals, the word “security” did not appear once.

Twenty years later, in 2008, Clark drew up a new list of priorities for a National Science Foundation project to build an improved Internet. The first item reads simply: “Security.”

David D. Clark, an MIT scientist whose aura of wisdom earned him the nickname “Albus Dumbledore,” remembers very well when he encountered the dark side of the Internet. He led a meeting of network engineers when he received news of the spread of a dangerous computer worm (the first widespread virus).

One of the engineers working for a leading computer company took responsibility for the security error that the worm took advantage of. The engineer said in a changing voice: “Damn, I thought I fixed this bug.”

But when the attack in November 1988 began to disable thousands of computers and the bill from its damage began to go to millions, it became clear that the problem was not a mistake of one person. The worm used the very essence of the Internet, a fast, open and unhindered connection, to transfer malicious code over networks designed to transmit harmless files or emails.

Dozens of years later, millions have been spent on computer security - but threats are only growing every year. Hackers have gone from simple attacks on computers to threats to the real sector - banks, sellers, government services, Hollywood studios, and from there close to critical systems like dams, power plants and airplanes.

In hindsight, this development of events seems inevitable - but it shocked those who presented us with the network in its current form. Scientists, having spent years developing the Internet, could not imagine how popular and necessary it would become. And no one imagined that it would become available to almost everyone for use in useful and harmful purposes.

“It's not that we don't think about security,” Clark recalls. “We knew that there were people who could not be trusted, and we believed that we could exclude them.”

It was a serious mistake. From an online community of several dozen researchers, the Internet has evolved into a system to which 3 billion people have access. So many people lived on the entire planet in the year 1960, at a time when thoughts about creating a computer network had just begun to appear.

Network developers focused on technical issues and the need for reliable and fast information transfer. They foresaw that the networks needed to be protected from potential intrusions or military threats, but not that the Internet needed to be protected from the users themselves, who at some point would begin to attack each other.

“We didn’t think about how to specifically break the system,” said Vinton G. Cerf, a neatly looking but excited Google vice president who developed key components of the Web in the 70s and 80s. “Now, of course, we can argue that it was necessary - but the task of launching this system was non-trivial in itself.”

The people at the origins of the network, the generation of its founders, are displeased with the statements that they could somehow prevent its insecure state today. As if road designers were responsible for the robbery on the roads, or architects - for theft in cities. They argue that online crimes and aggression are manifestations of human nature that cannot be avoided, and it is impossible to defend themselves against them by technical means.

“I think that since we don’t know the solution to these problems today, it’s just stupid to think that we could solve them 30-40 years ago,” said David H. Crocker, who began work on computer networks at the beginning of 70 and involved in the development of email.

But the 1988 attack by Morris Worm, named after its author, Robert T. Morris, a student at Cornell University, was an alarm for Internet architects. They conducted their work at a time when there were no smartphones, no Internet cafes, or even the wide popularity of personal computers. As a result of the attack, they experienced both rage - because one of them wanted to harm the project they created and anxiety - because the Internet was so vulnerable.

When the NBC broadcast Today’s program reported a riot of a computer worm, it became clear that the Internet, along with its problems, would grow out of an ideal world of scientists and engineers. As Surf recalled: “a group of geeks who had no intention of destroying the network.”

But it was too late. The founders of the Internet no longer controlled it - no one controlled it. People with evil intentions will soon find out that the Internet is perfect for their purposes, allowing you to find quick, easy and cheap ways to get to everyone and everything via the network. And soon this network will cover the whole planet.

David Clark at MIT Lab

Nuclear war preparations

The idea of the Internet was that messages can be divided into pieces, sent over the network using a sequence of transmissions, and reassembled at the place of receipt - quickly and efficiently. Historians attribute the first ideas in this field to the Welsh scientist Donald W. Davies and the American engineer Paul Baran, who wanted to convince his people of the possibility of nuclear war.

Baran described his ideas in a landmark paper in 1960, while working at the Rand Corp. research center. He wrote that the threat of war hung over the planet, but that people had the power to do a great deal to minimize its consequences.

One of the tasks was to create a reliable, redundant messaging system that could keep working after a Soviet bombing and allow survivors to help each other, maintain a democratic government and launch a counterattack. This, according to Baran, “would help the survivors of this massacre shake off the ashes and quickly restore the economy.”

Davies's vision was more peaceful. At that time, computers were large, expensive, room-sized behemoths. They needed to serve several users at once, but that meant keeping expensive telephone lines open continuously, even during long periods of silence.

In the mid-1960s Davies suggested that it would be simpler to cut data into pieces and send them back and forth in a continuous stream, allowing multiple users to share the same telephone line while having simultaneous access to a computer. Davies even built a small network in the UK to demonstrate his idea.

These two visions, one of war and the other of peace, worked together to move the Internet from concept to prototype, and then to reality.

The main organization driving the development of the Internet was the United States Advanced Research Projects Agency (ARPA), created in 1958 in response to the Soviet launch of the first artificial Earth satellite, and fueled by fears that the United States was falling behind in science.

Ten years later, ARPA began work on a breakthrough computer network, and hired scientists from the country's best universities. This group formed the collegial core of the founders of the Internet.

At the time of the first link between universities in California and Utah in 1969, the goals were modest: it was a purely scientific research project. Users of ARPANET, the ancestor of the Internet, exchanged messages and files and gained remote access to computers.

It would have taken an extraordinary gift of foresight, according to technology historian Janet Abbate, for people in the early days of the Internet to understand the security problems that would arise years later, when the Internet took center stage in the economy, culture and conflicts of the whole world. On the Internet of the 1970s and 1980s there were few obvious threats and, more to the point, hardly any information worth stealing.

“People break into banks not because there is no security. They do it because there is money,” says Abbate, author of the book “Inventing the Internet.” “They thought they were building a school, and it turned into a bank.”

Leonard Kleinrock next to the computer, the predecessor of routers

The first super application

Enthusiasm for the work was fueled by the intellectual challenge of developing a technology that many predicted would fail. The Internet pioneers were deeply frustrated by AT&T's Bell telephone system, which they saw as inflexible, expensive and monopolistic - all qualities they wanted to avoid when building their own network.

Baran, who died in 2011, once described a meeting with Bell engineers at which he tried to explain the concept of a digital network, only to be interrupted in mid-sentence. “The old analog network engineer looked startled. He looked at his colleagues and rolled his eyes, as if he couldn't believe what he was hearing. After a pause, he said: ‘Son, this is how telephony actually works...’” And he went on to explain how a carbon microphone works. “It was a dead-end clash of concepts.”

But it was over lines owned by AT&T that ARPANET first came to life, carrying data between two Interface Message Processors (IMPs), the predecessors of modern routers, each the size of a telephone booth. One of them, at UCLA, sent a message to the second at the Stanford Research Institute, more than 300 miles away, on October 29, 1969. The goal was to log in remotely, but only two letters, “LO” from “LOGIN,” got through before the computer at Stanford crashed.

Leonard Kleinrock, a UCLA computer scientist and one of the first developers of networking technology, was at first discouraged by such an unsuccessful experiment, especially when compared with the phrase “That's one small step for man, one giant leap for mankind,” uttered at the first lunar landing several months earlier.

Later, however, Kleinrock decided that “LO” could be read as “Lo and behold,” a phrase well suited to such a technological breakthrough. “Even on purpose, we could not have prepared a more concise, powerful and insightful message than what happened by chance,” he said later.

ARPANET grew and soon connected computers at 15 sites across the country. But the main barriers were neither the technology nor AT&T's lack of interest. It was simply unclear what such a network would be good for. File sharing was not much in demand, and remote access to a computer was quite inconvenient at the time.

But it was very pleasant to talk with friends and colleagues over long distances. The first “popular application” was e-mail, which appeared in 1972. By the following year it accounted for 75% of all network traffic.

The instant popularity of email foreshadowed how computer communication would displace more traditional means such as letters, telegraphs and telephones. It would also become the source of all kinds of security problems.

But in that era no one thought about such things; the concerns were building a network and proving that it was needed. At a three-day computer conference at the Washington Hilton in October 1972, the ARPA team held its first public demonstration of the network and a suite of applications, including an “artificial intelligence” game in which the remote computer played a psychotherapist, asking questions and sharing observations.

On the whole the demonstration was a great success, but there was one hitch. Robert Metcalfe, a Harvard student who would later be involved in developing Ethernet and founding 3Com, suddenly found that the system had crashed while he was demonstrating the network's capabilities.

It was down only briefly, but even that was enough to upset Metcalfe. And then he got angry when he saw a group of AT&T executives in identical pinstriped suits laughing at the failure.

“They were chuckling happily,” he says, recalling this early collision between telephony and computer networks. “They did not think about what a disturbing event it was. This crash seemed to confirm that the network was a toy.”

“It's like safe sex.”

The rivalry took on a cartoonish quality as the “Netheads” squared off against the “Bellheads,” recalls Billy Brackenridge, a programmer who later went to work at Microsoft. “The Bellheads needed complete control, and the Netheads were anarchists.”

The reasons for this confrontation lay both in culture (youth against the establishment) and in technology. The telephone system of the day was said to have a “smart” core (automatic exchanges and switches) and “dumb” edges - the simple telephones in every company and household. The Internet, by contrast, had a “dumb” core - the network simply carried data - and “smart” edges, that is, computers under the control of their users.

The “dumb” core made it impossible to address security issues centrally, but it made it easy for new users to connect. And this system worked as long as the people connecting to it were colleagues with similar motives and a high degree of mutual trust. But it left the edges with the critical role of gatekeepers to the network.

“And we have a system in place where security is ensured through the vigilance of individuals,” says Janet Abbate, a Virginia Tech historian. “It's like safe sex. It turns out that the Internet is a dangerous place, and everyone has to protect themselves from what they might encounter there. There is a feeling that the Internet provider will not protect you. And the government will not protect you. You must protect yourself.”

Few showed that kind of vigilance in the ARPANET era. Anyone who had a login and password, whether officially issued or borrowed from a colleague, could log in to the network. All that was needed was access to a terminal and the telephone number of the desired computer.

Some warned of the emerging risks already at the dawn of the network. Metcalfe posted an official appeal to the ARPANET working group in December 1973, in which he warned about the network being overly accessible to outsiders.

“All of this would be fun and games, and jokes at parties, if in recent weeks people who knew what they were risking had not crashed at least two servers under suspicious circumstances. And on a third system, the wheel password was compromised. Two students from Los Angeles were to blame for that,” Metcalfe wrote. “We suspect that the number of security violations is actually much greater than we thought, and it is constantly growing.”

As the number of people with access to the network grew, so did disagreement about the purpose of the network. Officially it was controlled by the Pentagon, but the military's attempts to impose order ran up against the resistance of the online community, which gravitated toward experimentation and valued freedom. Unauthorized communities, such as a science fiction e-mail mailing list, flourished.

The tension among users grew as the 1980s began, followed by the 1990s with the World Wide Web and the 2000s with smartphones. The ever-expanding network took in groups with opposing interests: musicians, and listeners who wanted free music; people who wanted to communicate in private, and governments with their wiretapping; criminal hackers and their victims.

Clark, the MIT scientist, calls these conflicts “tussles.” These tussles, which the creators of the Internet simply did not expect, have turned into the very essence of the Internet. “The original goal of launching and fostering the Internet no longer holds,” Clark wrote in 2002. “Powerful players create today's online environment out of interests that contradict each other.”

Trouble was already looming in 1978, when a marketer from Digital Equipment Corp. sent hundreds of ARPANET users a message about an upcoming presentation of new computers. Internet historians consider this event the birth of spam.

This drew a terse, all-capitals response from the Pentagon, which called the message a “FLAGRANT VIOLATION” of the rules. “APPROPRIATE MEASURES ARE BEING TAKEN TO PREVENT SIMILAR INCIDENTS IN THE FUTURE.”

But in parallel with these events, some users continued to defend the idea of an open Internet, which can serve many purposes, including commercial ones.

“Would an online dating service be frowned upon?” wrote Richard Stallman, an advocate of online freedom. “I hope not. But even if so, don't let that stop you from letting me know when such a service opens.”

Steve Crocker, who worked at the origins of network technology, chairs the Internet Corporation for Assigned Names and Numbers (ICANN), a non-profit organization that regulates the issuance of web addresses.

NSA Alarms

In telephony, the line stays open for the duration of a conversation, and users pay by the minute. On the Internet, data is transmitted piece by piece, as opportunity allows. These pieces are called packets, and the system that carries them is called packet switching.

The result is something like a branching pneumatic tube system through which you can send anything that fits into the capsule. The main task was to route each packet correctly and keep track of the packets that were delivered successfully. Packets that failed to arrive could then be resent, possibly along other routes, until a working route was found.

Although the technology demands great precision, it can work without any central oversight. The Pentagon monitored the network at first, but its supervision gradually faded away. Today, no US agency has the kind of control over the Internet that various countries exercise over telephony.

At first, the network ran on a simple protocol (a set of rules that allows different computers to work together). As the network grew, so did the number of users. Large universities connected their computers into local networks. Others used radio and even satellite links to carry their traffic.
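The process described above can be sketched in a few lines of Python. This is only an illustrative toy, not the actual ARPANET or TCP/IP mechanism: the function names, the packet size and the simulated lossy link (`unreliable_send`) are all assumptions made for the example. It cuts a message into numbered packets, "sends" them over a link that randomly drops some, resends whatever failed to arrive, and reassembles the pieces in order.

```python
import random


def split_into_packets(message: bytes, size: int) -> list[tuple[int, bytes]]:
    """Cut a message into numbered packets of at most `size` bytes."""
    return [(seq, message[i:i + size])
            for seq, i in enumerate(range(0, len(message), size))]


def unreliable_send(packet, loss_rate: float, rng: random.Random):
    """Hypothetical lossy link: returns the packet, or None if it was lost."""
    return None if rng.random() < loss_rate else packet


def deliver(message: bytes, size: int = 8,
            loss_rate: float = 0.3, seed: int = 1) -> bytes:
    """Send every packet, resending lost ones, then reassemble in order."""
    rng = random.Random(seed)
    pending = split_into_packets(message, size)
    received: dict[int, bytes] = {}
    while pending:
        rng.shuffle(pending)          # packets may arrive in any order
        still_missing = []
        for packet in pending:
            arrived = unreliable_send(packet, loss_rate, rng)
            if arrived is None:
                still_missing.append(packet)   # lost: try again next round
            else:
                seq, chunk = arrived
                received[seq] = chunk
        pending = still_missing
    # The sequence numbers let the receiver restore the original order.
    return b"".join(received[seq] for seq in sorted(received))
```

Calling `deliver(b"LOGIN request from UCLA")` returns the original bytes even though individual packets are dropped and reordered along the way; the sequence numbers are what make reassembly possible.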

To connect all these networks, a new protocol was needed. On the orders of ARPA (renamed DARPA in 1972), Cerf and his colleague Robert E. Kahn took on the task in the 1970s. The TCP/IP protocol they developed allowed any networks to communicate with one another, regardless of the hardware, software or computer languages in their systems. But the transition from a limited ARPANET to a global network raised security concerns for Cerf and Kahn.

“We, of course, recognized the importance of security - but only from a military point of view, in the sense of working in a hostile environment,” Cerf recalls. “I did not think about it from the point of view of commerce or people, but only from the point of view of the military.”

It would have been possible to build encryption into TCP/IP - encoding messages so that only the recipient could read them. And although primitive encryption has been known for centuries, a new generation of computerized forms began to appear in the 1970s.

A successful implementation of encryption would have made the network resistant to eavesdropping and made it easier to establish who sent a message. If the owner of a key is a person with a certain level of trust, then other messages using that key can be attributed to that person with great confidence. This is true even when the sender's real name is not used or is unknown.

But in the years when Cerf and Kahn were developing TCP/IP, introducing ubiquitous encryption was a daunting prospect. Encrypting and decrypting messages consumed a great deal of computing power, and it was not clear how encryption keys could be distributed securely.

Politics also played a part. The National Security Agency, which, according to Cerf, actively supported secure packet-switching technologies, was strongly opposed to letting everyone use encryption on public or commercial networks. Even the encryption algorithms themselves were considered a threat to national security and fell under an export ban along with other military technologies.
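The idea of attributing messages to the holder of a key can be illustrated with a small Python sketch. It uses a modern keyed hash (HMAC-SHA256 from the standard library), which is an assumption chosen for the example and not something Cerf and Kahn had available or proposed. The key and messages are made up. Note that this sketch only shows authentication: it proves a message came from someone holding the key, but does not hide the message from eavesdroppers.

```python
import hashlib
import hmac


def sign(key: bytes, message: bytes) -> bytes:
    """Compute a tag that only a holder of `key` could have produced."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify(key: bytes, message: bytes, tag: bytes) -> bool:
    """Check that `tag` matches `message` under `key` (constant-time compare)."""
    return hmac.compare_digest(sign(key, message), tag)


# Hypothetical shared key, agreed between two parties in advance.
shared_key = b"example key known only to sender and recipient"

tag = sign(shared_key, b"meet at noon")
print(verify(shared_key, b"meet at noon", tag))      # genuine message
print(verify(shared_key, b"meet at midnight", tag))  # altered message
```

The recipient never needs to know the sender's real name: a valid tag shows only that the message came from whoever holds the key. The hard part the article mentions, getting that key to both parties securely in the first place, is exactly what this sketch assumes away.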

Steve Crocker, brother of David Crocker and a friend of Cerf who also worked on networking, said: “Back then, the NSA still had the ability to visit a professor and say: ‘Do not publish this cryptography work.’”

As the 1970s came to an end, Cerf and Kahn abandoned their attempts to build cryptography into TCP/IP, having run into what they considered insurmountable obstacles. And although nothing prevented traffic from being encrypted, the Internet turned into a system that operates in the open. Anyone with network access could monitor transmissions. And with encryption so little used, it was hard to be sure you were communicating with the right person.

Kleinrock says the result is a network that combines unprecedented availability, speed and efficiency with anonymity. “This is the perfect formula for the dark side.”

Vinton Cerf

Operation Looking Glass

TCP/IP became an engineering triumph, allowing completely different networks to work together. From the late 1970s to the early 1980s, DARPA sponsored a series of tests of the protocols' reliability and efficiency, and of their ability to work over different channels, from portable antennas to airplanes in flight.

There was a military component as well. Cerf had a “personal goal,” as he put it, to prove that Baran's ideas about a communications system capable of surviving a nuclear catastrophe were realistic. Those ideas gave rise to a series of tests in which digital radios communicated with each other under increasingly difficult conditions.

The most serious test replicated Operation Looking Glass, a Cold War campaign that required at least one airborne command center to be in the air at all times, out of reach of a possible nuclear attack. As part of that operation, planes took off and landed on schedule at the Strategic Air Command airfield in Omaha for 29 years in a row.

One day in the early 1980s, two Air Force tankers flew over the deserts of the Midwest while a specially equipped van drove along the highway below. Digital radios transmitted messages over TCP/IP, creating a temporary network of air and ground assets that stretched for hundreds of miles. It included a strategic command center located in an underground bunker.

To test the technology, the command center transmitted a mock file containing simulated instructions for a nuclear counterattack. The process would have taken hours by voice over the radio; over TCP/IP, the transmission took less than a minute. This showed how easily computers could share information, linking even networks damaged by war.

Network birth

On January 1, 1983, the engineers' work culminated in a “global reboot.” Every computer that wanted to communicate with the network had to switch to TCP/IP. Gradually they all made the switch, linking disparate networks into one new, global one.

Thus the Internet was born.

Of course, there were practical barriers to entry, owing to the high cost of computers and transmission lines. Most of the people online in the 1970s and 1980s were affiliated with universities, government agencies or very advanced technology companies. But the barriers came down, and a community arose that was bigger than any nation and yet under no one's control.

The military built its own secure system based on TCP/IP and introduced encryption to protect it. But it took the civilian Internet decades to adopt this basic security technology. The process is still incomplete; it accelerated only after the revelations of 2013, which made clear the extent of NSA surveillance.

Encryption would not solve all modern problems, many of which stem from the open nature of the Internet and the enormous importance of the information and systems connected to it. But it would reduce eavesdropping and simplify the verification of information sources - two problems that have still not been solved.

Cerf says he wishes that he and Kahn had managed to build encryption into TCP/IP. “We would have encryption on a more permanent basis, it’s easy for me to imagine this alternative universe.”

But many debate whether it would really have been possible to implement encryption in the early days of the Internet. The heavy demands on computers could have made it a daunting task. Perhaps in that case another network, with different protocols, would have become dominant.

“I don’t think the Internet would become so popular if they introduced mandatory encryption,” writes Matthew Green, a cryptologist. “I think they made the right choice.”

Old flaws, new dangers

The Internet, born as a Pentagon project, has developed into a global network without checkpoints, tariffs, police, army, regulators or passports - in general, without any way to check identity. National governments have gradually established themselves on the Internet to enforce laws, apply security measures and attack each other. But none of this is comprehensive.

The Morris worm exposed the flaws of a system with a dumb core and smart edges. Security, too, became the responsibility of the edges. And that is where most hacks happen: they travel from one computer to another. The Internet is not the site of the attacks but their delivery system.

The Morris worm also teaches that problems are hard to solve even after they have been widely publicized. Robert Morris was convicted of a computer crime and sentenced to probation before going on to become an entrepreneur and a professor at MIT. But he had not meant to take down the Internet - he was simply experimenting with self-replicating programs and took advantage of a “buffer overflow” error that was first discovered back in the 1960s. In 1988 it was still a problem, and it is still used by today's hackers - 50 years after its discovery.

Many scientists are convinced that the task of squeezing security into a system designed in a past era is so difficult that everything needs to be scrapped and started over. DARPA has spent $100 million over the past five years on the Clean Slate program, which is grappling with problems that could not have been foreseen in the ARPANET era.
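For readers unfamiliar with the term, a buffer overflow can be illustrated with a toy model in Python. This is a simulation under stated assumptions, not a reconstruction of the flaw the Morris worm actually used: Python itself checks bounds, so the sketch models raw memory as a single `bytearray` in which an 8-byte input buffer sits directly in front of a one-byte flag. All names and the memory layout are hypothetical.

```python
BUFFER_SIZE = 8  # bytes reserved for user input (assumed for this example)


def make_memory() -> bytearray:
    """Toy memory: bytes 0..7 are the input buffer, byte 8 is a flag."""
    return bytearray(BUFFER_SIZE + 1)


def unchecked_copy(memory: bytearray, data: bytes) -> None:
    """Copy input without checking its length, as careless code might."""
    for i, byte in enumerate(data):
        memory[i] = byte      # a 9th byte lands on the flag, not the buffer


def checked_copy(memory: bytearray, data: bytes) -> None:
    """Refuse input that does not fit in the buffer."""
    if len(data) > BUFFER_SIZE:
        raise ValueError("input longer than buffer")
    memory[:len(data)] = data


memory = make_memory()
unchecked_copy(memory, b"A" * BUFFER_SIZE + b"\x01")
print(memory[BUFFER_SIZE])    # the flag byte was overwritten by the input
```

In a real program the bytes beyond the buffer may hold data that controls what the program does next, which is why an attacker who supplies oversized input can sometimes take over the machine. The length check in `checked_copy` is the kind of fix that has been known for decades and is still sometimes left out.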

“The problem is that security is complicated, and people say, ‘Well, we'll add it later.’ But you can't add it later,” says Peter G. Neumann, one of the first computer experts, who has run the RISKS Digest on online security issues since 1985. “Security cannot be added to something that was not intended to be secure.”

Not everyone thinks the same way. But the mixed legacy of the Internet, as amazing as it is insecure, continues to trouble the generation of its founders.

“I wish we could do better,” says Steve Crocker, who is now struggling with Internet issues as chairman of ICANN. “We could have done more, but almost everything we did was done in response to problems, and not by anticipating future problems.”

Clark, the MIT scientist, has often said the same thing. A few months before the Morris worm attack, he wrote a paper listing the priorities of the Internet's developers. In its description of seven important goals, the word “security” was not mentioned once.

Twenty years later, in 2008, Clark drew up a new list of priorities for a National Science Foundation project to build an improved Internet. The first item reads simply: “Security.”