SOC for intermediate. Understanding what we protect, or how to take an inventory of the infrastructure

Hello again. The "SOC for ..." series continues. In previous articles we covered the first layer of the inner workings of incident monitoring and response centers, so this time we will try to go a little deeper into technical details and subtler problems.

We have several times indirectly touched on the topic of asset management: in the article about security control , and in matters of automation and artificial intelligence in SOC. Obviously, without an inventory of the customer’s infrastructure, the monitoring center will not be able to protect it. At the same time, drawing up its detailed description is by no means a trivial task. And most importantly - after a couple of months it is again not relevant: some hosts disappeared, others appeared, new services or systems appeared. But infrastructure protection is a continuous process, and the SOC cannot slow down its activities until the latest information on customer assets is obtained. Let me remind you that the quality of Solar JSOC’s work is governed not by abstract promises, but by a very specific SLA, the violation of which is followed by various celestial vehicles. How to get out in such a situation and not lose in the quality of the service provided?

We suggest now returning to this issue through one of the comparative examples of incident notification:

It would seem that the difference between these two notifications is in-depth analysis. In the first case, the notification looks like it was received automatically from the SIEM platform, and in the second, the operator’s work and enrichment of incidents are visible. This is so and not so. In order for the operator to be able to analyze the incident and draw some conclusions, it is extremely important for him to understand what (or who) is hiding behind these mysterious and almost identical IP addresses from customers, and to use this information in the analysis of the incident.

About 20% of our readers will now say: “Well, for this, all companies have implemented the CMDB system, which is easier than connecting the data from it to SIEM? You don’t even need to write a connector. ” Let's leave colleagues from integrators and vendors on this conviction. From our practice, no matter how sad it is to realize this, information from CMDB should not even be connected or exported to SIEM due to its complete irrelevance. So you have to roll up your sleeves and do it yourself. Let’s take a closer look at this question and try to understand how to understand the bowels of the infrastructurewithout attracting the attention of orderlies and not hoping for the full support of IT departments.

We eat the elephant in parts or immerse ourselves in the description of the infrastructure

In our move to inventory our assets, we usually try to go through four milestones.

1. Building a top-level, but detailed network map of the organization

Here, the customer’s IT departments are the first and main contact (unless the company has correctly implemented and configured the network equipment management system or firewall management, but this is still an exception). Both descriptive pieces of information from network equipment, network diagrams, and so on are used.

The target result of this stage is the ability to split all internal addresses into network zones with the identification of the following information:

This leads to the first important conclusion : assets are not only a description of specific hosts with detailed inventory information about them, but also the ability to describe network segments and use this data in reports / correlations / event searches.

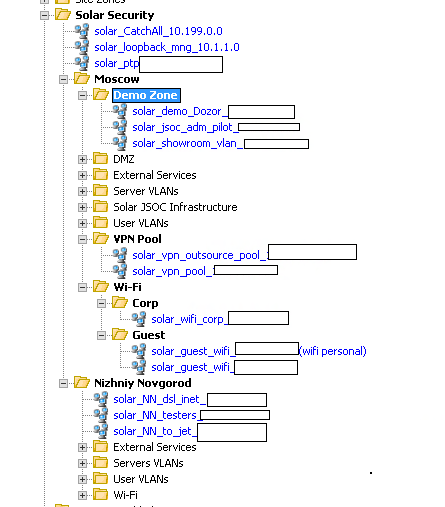

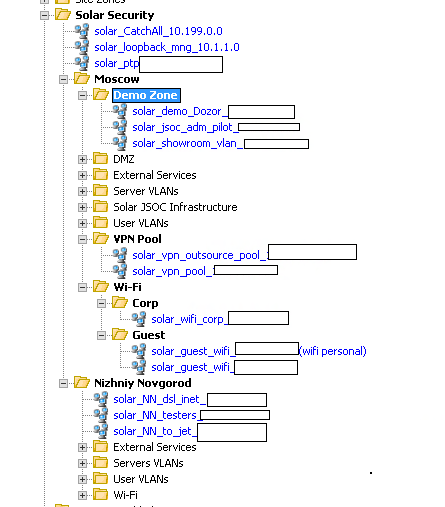

Here's what it looks like in SIEM:

2. Description of the main subsystems in the data center

As a rule, the starting identification of these machines appears according to the results of the first business interviews. Communicating with security, IT, networkers, and application specialists makes it possible, as a first approximation, to understand how the company's infrastructure is structured and which resources / systems in it are the most critical. It is easy enough to collect information about their addressing and network affiliation. Further, in the mode of point questions, it is already possible to obtain the required information:

At this stage, unloading from various centralization systems usually helps:

The second important conclusion follows from this : you need to be able to “take” information about assets from completely different sources and add this information to SIEM in accordance with configured scenarios and monitoring logic.

3. Description of critical hosts and assets from the point of view of information security

When the customer has identified his “pain points” and the most critical systems, we enter into a dialogue with those responsible for them to understand how these systems are interconnected, what data is stored there and what IS risks associated with them. We also evaluate them by additional parameters, after which we assign to some of the systems coefficients that increase the level of criticality. Here's what we pay attention to:

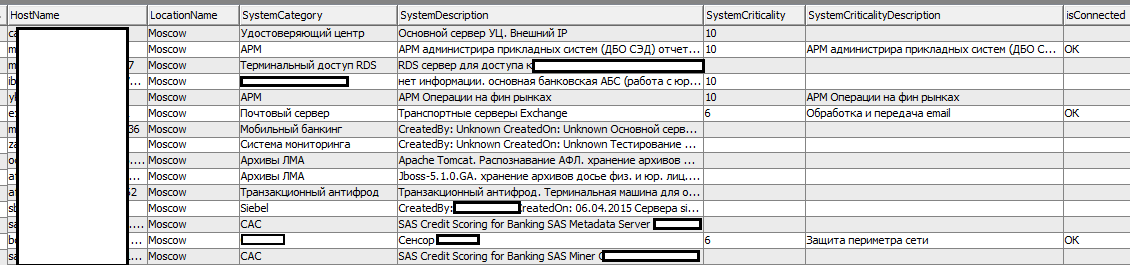

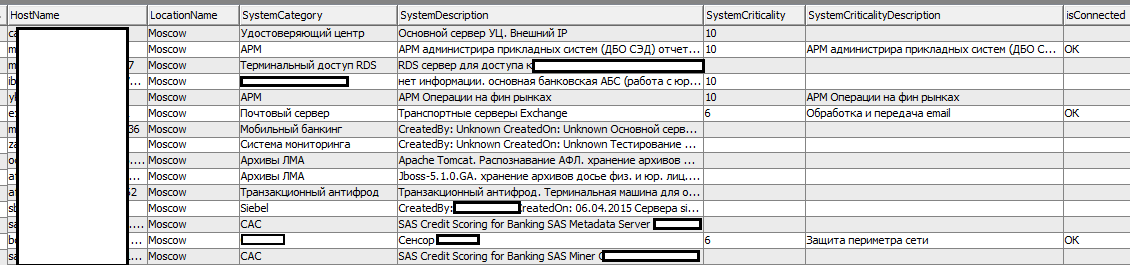

Example descriptions:

The third important conclusion : SOC should be able to add its own descriptions of the purpose, functionality and criticality of the host for each asset and for each subnet wired into the system. As they say, “all markers are different in taste and color,” and what is good in IT is not always necessary in information security.

4. Enrichment of information on a host / asset

In the final, we try to enrich information on a host / asset with data from information security systems or security scanners:

This information can be extremely useful for investigation, although, in our opinion, it is not primary in identifying and assessing the criticality of the host incident itself.

Understanding problems

Although even at first glance the process of describing the infrastructure looks rather complicated, in reality these are only the difficulties that lie on the surface. As always, inside you can find a number of underwaterrakes of stones. Let's try to discuss them and share possible solutions.

Choosing a unique asset identifier

It would seem that the question is banal and obvious. Choose anything: at least an IP address (if there are several interfaces, assign the main one), at least a domain name, at least a serial number. But in life, everything is not so simple.

Any hardware or software identifiers are really very good for their uniqueness and direct connection with the device. There is only one problem - we work with logs, and we identify incidents based on logs, and they simply do not have these unique identifiers. Therefore, we have (almost) no way to correctly connect what is happening in our network with an asset.

The DNS name is also good from the point of view of its uniqueness (let us ignore those companies that prefer not to use it). But the problem, unfortunately, is completely similar - the vast majority of logs do not contain any information about this DNS name. Therefore, matching an incident with an asset also remains a problem.

It would seem that we have no options left, we will have to work with the IP address. It appears in the vast majority of journals that we have to deal with, and in the case of the server segment is an almost guaranteed criterion for determining the device.

But things get more complicated when we try to use it to identify user hosts. Quite often, in user networks or part of them, we are faced with DHCP and randomly issuing a host IP address within a segment. This breaks the whole logic of working with the incident: at the time the event occurred, the IP address could refer to one machine, and by the time the analyst connected to the parsing or the customer’s IT service blocked it, this IP address could already belong to a completely different host.

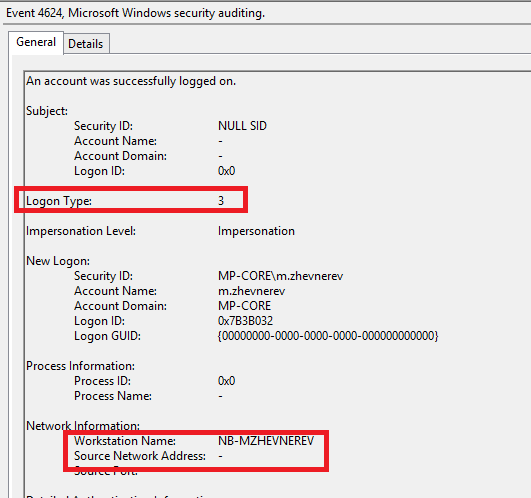

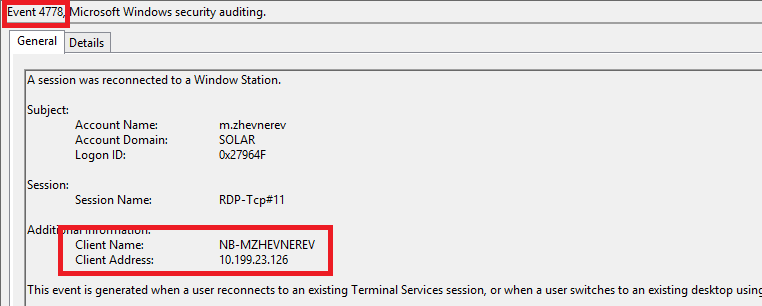

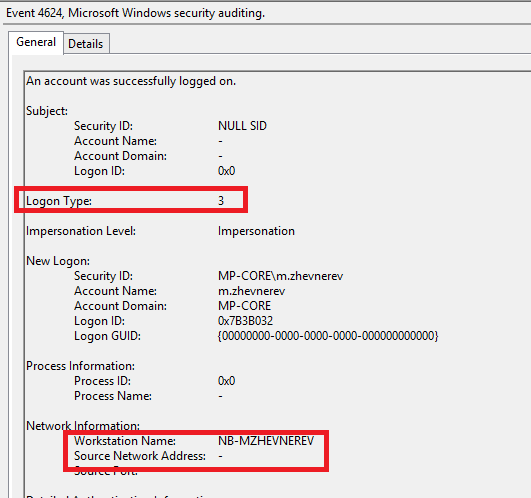

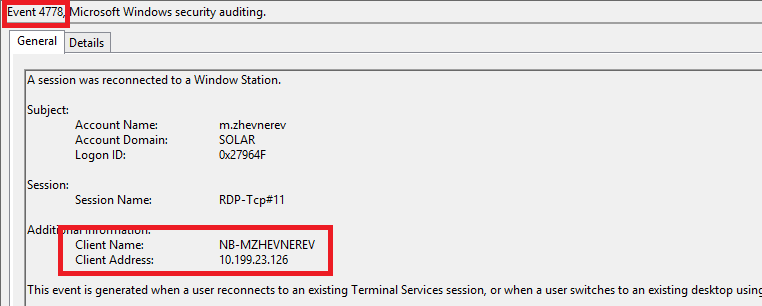

There are even more enchanting cases. Look, for example, at Windows logging for RDP authentication:

As a result, depending on the type of event, the identifier will be either the host or IP. And this problem is generally better solved at the parsing level of the event.

Most often, we use the following host definition scheme. When processing events, a number of conditions are sequentially checked and the first non-zero value is selected (problems with parsing from the example above are solved at the parser level :)):

This process is performed for each incoming event and is used to check all exception lists, profiles, etc.

It is important to note here that it will never work to get assets for all the hosts in the company and keep them up to date. In any case, there are guest networks, VPN address pools, test environments, and Internet . You cannot start an asset in these networks, because everything is constantly changing. But at the same time, it’s still important to correctly determine the source of activity, and not generate alerts for the same host, which is present in the logs in a different format (for example, nb-hostname.domain.local, nb-hostname, 10.10.10.10 (but there is nb -hostname in DHCP)).

Use of relevant inventory information in event enrichment

When we talk about the analysis of an incident, it is very important to operate with up-to-date data on the presence of updates / software / vulnerabilities on the host, etc.

As a rule, regular scans (and, accordingly, updates of asset information) occur at best once a month. Scanning the entire infrastructure more often is practically unrealistic, and this at one point generates another very important detail in incident handling, which is often overlooked: between two inventory scans, the state of the host can change as often as necessary and at the same time eventually return to the original condition. For example, in incidents involving malicious mailings, referrals to infected websites, detection of indicators of compromise, etc., it is very important to understand whether the antivirus was active specifically at that moment.

We solve this problem in two ways:

This information is automatically added to the corresponding incident:

Keeping asset information up to date.

As we have already said, no matter how scrupulous you come to the description of the infrastructure, it will become obsolete very soon. In just a month or two, the customer’s network will begin to change: new services or hosts will appear, old ones will disappear, part of the systems will change their purpose.

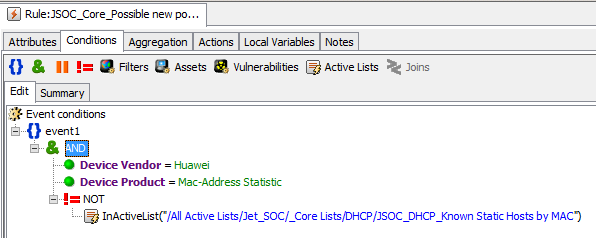

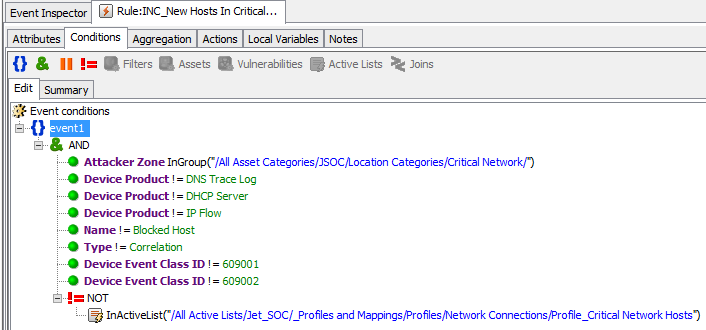

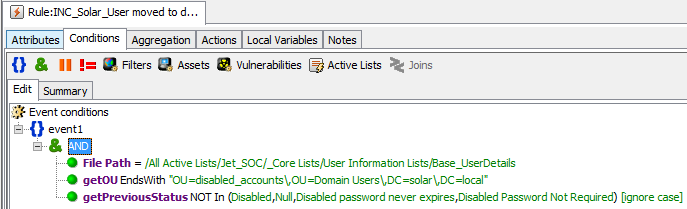

Here are a few approaches that we use in Solar JSOC to maintain the current context:

The game is not worth the candle, but the result is labor?

Surely not one of our readers, practitioners at the end of this article thought: why do you need to fence all this crazy design, taking into account and updating the asset model? Maybe it’s easier and “cheaper” to manually deal with each host on the fact of an incident, and the end customer does not need such a thorough inventory?

We will try to show with a few examples how the asset model simplifies and automates part of the activities of the SOC operator and helps in its daily work.

Ability to filter the excess

“Correct” SIEM systems allow you to automatically use the asset model to filter potential incidents into exceptions. This can significantly reduce the time that the operator has to spend on the analysis of "garbage".

For example, often we begin to collect primary information about script triggers from the customer before we fill out the first network model, because the rule for detecting calls to C & C servers and malicious sites can be started quite quickly.

Does this mean that the entire customer’s network is teeming with malware, or, conversely, the reputation databases of C&C servers give so many false positives? As a rule, neither one nor the other. It’s just that guest wifi works in the branches of the customer’s company, all user sessions of which use the same Internet access infrastructure as the main client network, and therefore fall into the scope of monitoring.

Is it interesting to the customer how secure are the phones of external people who simply used his guest Wi-Fi? Should the SOC operator handle such incidents? Probably not. So filtering Wi-Fi networks from this scenario is a very reasonable and desirable step.

Or another example: usually in a company at the security policy level there is a restriction on the use of Remote Admin Tools. But helpdesk employees, whose responsibilities include servicing remote machines, business travelers, or remote points of sale, are often forced to resort to this tool in their daily work. Isn't it easier for the SOC to identify the locations of helpdesk employees and ignore such events than to handle each suspected incident manually?

Increasing the criticality and priority of an incident depending on a specific asset

The situation, in my opinion, also does not require additional comments. An incident that occurred at a workstation in the CBD at a bank or at the junction of the main and technological segments in the energy sector will always be much more important and priority than even fixing the launch of hacking tools in the user segment. And such an incident should be detected and handled much faster.

From our practice in recent years, it can be seen that attackers have learned to take advantage of weak prioritization of incidents in young SOCs. As one of the final stages of the attack, they use the so-called “incident storm”, creating dozens of suspicions of an incident in different parts of the infrastructure before capturing the target resource or compromising information. The SOC operator, which processes dozens of incidents in turn, simply does not have time to get to the right, truly critical, until it is too late. While proper prioritization would allow him to focus on the most important incident and prevent the consequences of the attack.

The ability to see strange

The more information about the asset and the situation as a whole the operator has at the beginning of the analysis of the incident, the faster he will make a decision, and the more correct it will be. And of course, the more likely it is that a real complex attack, caught on the basis of a small anomaly, will be effectively identified and disassembled in the SOC.

Well, in general, who owns the information, he owns the world. On this I would like to end our dive into the subject of asset management. For all remaining practical and not so questions - welcome to comments. And until we meet again in the SOC for .... series.

We have indirectly touched on asset management several times: in the article about security control, and in the discussion of automation and artificial intelligence in the SOC. Obviously, without an inventory of the customer's infrastructure, a monitoring center cannot protect it. At the same time, compiling a detailed description is far from trivial. And most importantly, after a couple of months it is out of date again: some hosts have disappeared, others have appeared, new services or systems have been added. But infrastructure protection is a continuous process, and the SOC cannot pause its work until fresh information on customer assets arrives. Let me remind you that the quality of Solar JSOC's work is governed not by abstract promises but by a very specific SLA, and violating it brings down penalties of every kind. How do you get out of this situation without losing service quality?

We suggest returning to this issue by comparing two incident notifications:

The RAT utility AmmyAdmin was launched on the host 172.16.13.2.

vs.

The RAT utility AmmyAdmin was launched on the host 172.16.13.2, Moscow office on Kuznetsky Most, the machine of Petr Mikhailovich Ivanov, deputy head of the communication center; functional role: processing of MDC flights and work with the AWS (automated workstation) of the CBD; remote administration tools are prohibited.

It would seem that the difference between these two notifications is the depth of analysis. The first looks as if it came straight from the SIEM platform, while the second shows the operator's work and incident enrichment. This is both true and not true. For the operator to analyze the incident and draw conclusions, he must understand what (or who) is hiding behind the customer's mysterious and nearly identical IP addresses, and use that information in the analysis.

About 20% of our readers will now say: "Well, every company has a CMDB for exactly this; what could be easier than feeding its data into the SIEM? You don't even need to write a connector." Let us leave our colleagues from integrators and vendors with that conviction. In our practice, sad as it is to admit, CMDB data is so out of date that it is not worth connecting or exporting to the SIEM at all. So you have to roll up your sleeves and do it yourself. Let's take a closer look at this question and try to figure out how to make sense of the bowels of the infrastructure without attracting the attention of the orderlies and without counting on full support from the IT departments.

Eating the elephant piece by piece, or diving into the description of the infrastructure

When taking inventory of assets, we usually try to pass four milestones.

1. Building a top-level but detailed network map of the organization

Here the customer's IT departments are the first and main point of contact (unless the company has a properly implemented and configured network equipment management or firewall management system, but that is still the exception). We use descriptive information from network equipment, network diagrams, and so on.

The target result of this stage is the ability to split all internal addresses into network zones and to determine the following for each of them:

- Which site (geolocation / location, if applicable) a specific IP address belongs to.

- What type of site it is (data center / head office / branch / point of sale).

- The general purpose of the network segment: users / corporate Wi-Fi / guest Wi-Fi / admins / servers.

- The system or business application that the network segment serves, if this can be determined.

This leads to the first important conclusion: assets are not only descriptions of specific hosts with detailed inventory information, but also descriptions of network segments that can be used in reports, correlation rules and event searches.
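A minimal sketch of how such a zone description might be used for lookups, assuming zones are stored as CIDR ranges and the most specific matching range wins. The `Zone` fields mirror the four attributes listed above; the addresses, site names and the `find_zone` helper are hypothetical and do not come from any particular SIEM.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Zone:
    cidr: str                          # network range of the segment
    site: str                          # location of the site
    site_type: str                     # data center / head office / branch / point of sale
    purpose: str                       # users / guest Wi-Fi / admins / servers ...
    application: Optional[str] = None  # business system served, if known


# Hypothetical network model; real values come from the customer's IT department.
ZONES = [
    Zone("10.0.0.0/8", "unknown", "unknown", "internal (unclassified)"),
    Zone("10.10.0.0/16", "Moscow", "head office", "users"),
    Zone("10.10.50.0/24", "Moscow", "head office", "guest Wi-Fi"),
    Zone("172.16.13.0/24", "Moscow", "head office", "users", "communication center"),
]


def find_zone(ip: str) -> Optional[Zone]:
    """Return the most specific zone containing the address, or None."""
    addr = ipaddress.ip_address(ip)
    matches = [z for z in ZONES if addr in ipaddress.ip_network(z.cidr)]
    # The longest prefix is the narrowest, most precise description.
    return max(matches,
               key=lambda z: ipaddress.ip_network(z.cidr).prefixlen,
               default=None)
```

Choosing the longest prefix lets a broad catch-all range coexist with precise descriptions of individual segments, so an address is never left without at least a rough classification.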

Here's what it looks like in SIEM:

2. Description of the main subsystems in the data center

As a rule, these machines are first identified during the initial business interviews. Talking to security, IT, network engineers and application specialists gives a first approximation of how the company's infrastructure is structured and which resources / systems are the most critical. Collecting information about their addressing and network placement is easy enough. After that, targeted questions yield the remaining information:

- Which services and applications are deployed on the host.

- Which criticality class IT has assigned to it.

- What the (intended) functional role of the system is.

At this stage, exports from various centralized management systems usually help:

- Virtualization systems. Comments entered when virtual machines are created often describe their functional roles.

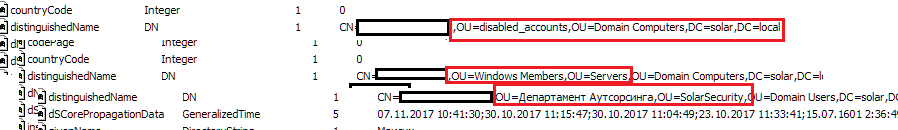

- Active Directory and other directories. At the very least, the domain structure often reveals the functional purpose of the systems.

- Various information security tools (usually antivirus), which hold a large amount of inventory information.

- Or simply a large Excel file that lists key systems, their platforms, addressing, etc.

The second important conclusion follows from this: you need to be able to take asset information from completely different sources and load it into the SIEM in accordance with the configured scenarios and monitoring logic.
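As an illustration of combining several sources, here is a minimal sketch that merges per-source records for one host into a single asset card. The source names and their priority order are assumptions made for the example; in practice the order depends on how much each source is trusted at a particular customer.

```python
from typing import Dict

# Hypothetical source names, from least to most trusted.
# Values from later sources overwrite values from earlier ones.
SOURCE_PRIORITY = [
    "excel",
    "antivirus",
    "active_directory",
    "virtualization",
    "soc_manual",
]


def merge_asset(records: Dict[str, Dict[str, str]]) -> Dict[str, str]:
    """Merge per-source field dictionaries into one asset description."""
    merged: Dict[str, str] = {}
    for source in SOURCE_PRIORITY:
        for field, value in records.get(source, {}).items():
            if value:  # an empty value never overwrites a known one
                merged[field] = value
    return merged


# Example: three sources describe the same host with partial data.
card = merge_asset({
    "excel": {"role": "DB", "owner": "IT"},
    "antivirus": {"os": "Windows Server", "role": ""},
    "soc_manual": {"role": "payment DB"},
})
```

Skipping empty values matters here: a source that knows nothing about a field should not erase what another source has already supplied.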

3. Description of critical hosts and assets from the point of view of information security

Once the customer has identified his "pain points" and most critical systems, we talk to the people responsible for them to understand how these systems are interconnected, what data they store and what information security risks are associated with them. We also evaluate them against additional parameters and then assign some systems coefficients that raise their criticality level. Here is what we pay attention to:

- How attractive the system is for monetizing an attack. Examples: the AWS (automated workstation) of the CBD and settlement center machines in banks, treasury machines and bank-client workstations in other companies.

- How critical a compromise of the data on the system would be. Examples: management reporting systems, folders storing trade secrets, etc.

- How useful a compromised system would be for developing an attack on the infrastructure. Examples: core network equipment, antivirus, AD and security scanner servers (through them an attacker can often gain access almost anywhere).

- Any other reason for the high criticality of the host that the customer tells us about.

Example descriptions:

The third important conclusion: the SOC should be able to add its own description of purpose, functionality and criticality to every asset and every subnet entered into the system. As they say, "felt-tip pens all differ in taste and color," and what is good for IT is not always what information security needs.
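The coefficients mentioned above could be modeled roughly as follows. This is only a sketch: the 1 to 3 base scale, the weight values and the multiplicative formula are all assumptions chosen for illustration, not the scoring actually used in Solar JSOC.

```python
from dataclasses import dataclass


@dataclass
class AssetRisk:
    name: str
    it_criticality: int           # class assigned by IT, assumed scale 1..3
    monetization: bool = False    # attractive for monetizing an attack
    sensitive_data: bool = False  # compromise of its data is critical
    attack_pivot: bool = False    # useful for developing an attack further
    customer_flag: bool = False   # any other reason named by the customer


# Hypothetical multipliers for each security factor.
WEIGHTS = {
    "monetization": 2.0,
    "sensitive_data": 1.5,
    "attack_pivot": 1.5,
    "customer_flag": 1.25,
}


def security_criticality(asset: AssetRisk) -> float:
    """Raise the IT criticality class by every security factor that applies."""
    score = float(asset.it_criticality)
    for factor, weight in WEIGHTS.items():
        if getattr(asset, factor):
            score *= weight
    return score
```

Keeping the IT class and the security factors separate preserves the point made above: the SOC adds its own view on top of the IT description instead of replacing it.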

4. Enrichment of information on a host / asset

Finally, we try to enrich the host / asset information with data from information security systems or security scanners:

- Hardware specifications (processors, memory, disks, etc.).

- Information on the OS and all installed patches (and, if you are lucky, the vulnerabilities relevant to them).

- List of installed software with versions.

- List of processes running at the time of the scan.

- A lot of additional information (MD5 hashes of libraries, etc.).

This information can be extremely useful for investigation, although in our opinion it is not the primary factor in identifying the host and assessing the criticality of the incident itself.

Understanding the problems

Although the process of describing the infrastructure looks rather complicated even at first glance, these are only the difficulties that lie on the surface. As always, a number of underwater rocks are hidden beneath. Let's try to discuss them and share possible solutions.

Choosing a unique asset identifier

It would seem that the question is banal and obvious. Pick anything: an IP address (if there are several interfaces, designate the main one), a domain name, or a serial number. But in real life things are not so simple.

Hardware or software identifiers are indeed very good because they are unique and directly tied to the device. There is only one problem: we work with logs and detect incidents from logs, and logs simply do not contain these identifiers. So we have (almost) no way to correctly link what is happening on the network to an asset.

The DNS name is also good in terms of uniqueness (let us ignore the companies that prefer not to use DNS). Unfortunately, the problem is exactly the same: the vast majority of logs contain no information about the DNS name, so matching an incident to an asset remains a problem.

It would seem that we have no options left and must work with the IP address. It appears in the vast majority of logs we deal with, and in server segments it is an almost guaranteed way to identify the device.

But things get more complicated when we try to use it to identify user hosts. Quite often user networks, or parts of them, use DHCP, and a host receives an arbitrary IP address within its segment. This breaks the whole logic of incident handling: when the event occurred, the IP address may have belonged to one machine, and by the time the analyst starts the investigation or the customer's IT service blocks the address, it may already belong to a completely different host.
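One way to reason about this is to resolve the address against DHCP lease history at the time of the event rather than at the time of analysis. The sketch below assumes lease records with start and end timestamps are available from DHCP server logs; the `Lease` structure and the `host_at` helper are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Optional


@dataclass
class Lease:
    ip: str
    hostname: str
    start: datetime  # lease granted
    end: datetime    # lease expired or released


def host_at(leases: List[Lease], ip: str, when: datetime) -> Optional[str]:
    """Return the host that held the address at the given moment, if known."""
    for lease in leases:
        if lease.ip == ip and lease.start <= when < lease.end:
            return lease.hostname
    return None


# The same address belongs to two different laptops during one day.
history = [
    Lease("10.10.10.10", "nb-ivanov",
          datetime(2024, 1, 15, 9, 0), datetime(2024, 1, 15, 13, 0)),
    Lease("10.10.10.10", "nb-petrov",
          datetime(2024, 1, 15, 13, 0), datetime(2024, 1, 15, 18, 0)),
]
```

With this history, an event at 10:30 and an investigation started at 14:00 point to different machines for the same address, which is exactly the trap described above.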

There are even more delightful cases. Look, for example, at Windows logging for RDP authentication:

As a result, depending on the event type, the identifier will be either the host name or the IP address. This problem is generally best solved at the event parsing level.

Most often we use the following scheme to determine the host. While processing an event, a series of conditions is checked in order and the first non-empty value is selected (the parsing problems from the example above are solved at the parser level :)):

- The network is statically addressed and an asset exists in the SIEM for this IP (identifier = asset name).

- The active DHCP leases contain a HostName for the IP from the log (identifier = host name, without domain).

- The log contains a HostName (identifier = host name, without domain).

- The log contains an IP address (identifier = IP address).

This process runs for every incoming event, and its result is used when checking all exception lists, profiles, etc.

It is important to note that you will never manage to create assets for every host in the company and keep them up to date. There will always be guest networks, VPN address pools, test environments and the Internet. You cannot create assets in these networks, because everything in them changes constantly. Still, it is important to determine the source of activity correctly and not to generate separate alerts for the same host that appears in the logs in different formats (for example, nb-hostname.domain.local, nb-hostname, and 10.10.10.10 for which DHCP reports nb-hostname).
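The four-step check described above can be sketched as a simple fallback chain. The event field names (`ip`, `hostname`) and the two lookup dictionaries are assumptions for the example; it also assumes the parser has already placed host names and addresses into the right fields.

```python
from typing import Dict, Optional


def short_name(hostname: str) -> str:
    """Strip the domain part and normalize case: nb-host.domain.local -> nb-host."""
    return hostname.split(".")[0].lower()


def resolve_identifier(event: Dict[str, str],
                       static_assets: Dict[str, str],
                       dhcp_leases: Dict[str, str]) -> Optional[str]:
    """Return the first non-empty identifier, checked in priority order."""
    ip = event.get("ip")
    hostname = event.get("hostname")
    if ip and ip in static_assets:   # 1. statically addressed asset known to the SIEM
        return static_assets[ip]
    if ip and ip in dhcp_leases:     # 2. host name from an active DHCP lease
        return short_name(dhcp_leases[ip])
    if hostname:                     # 3. host name taken from the log itself
        return short_name(hostname)
    return ip or None                # 4. fall back to the bare IP address


# Hypothetical lookup tables.
STATIC = {"10.1.1.5": "srv-db-01"}
LEASES = {"10.10.10.10": "NB-HOSTNAME.domain.local"}
```

With these tables, the three log formats from the example (full DNS name, short name, and a leased IP address) all resolve to the same identifier, so exception lists and profiles see one host instead of three.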

Using up-to-date inventory information for event enrichment

When analyzing an incident, it is very important to work with current data on the updates, software, vulnerabilities and so on present on the host.

As a rule, regular scans (and therefore updates of asset information) happen once a month at best. Scanning the entire infrastructure more often is practically unrealistic. This leads to another very important and often overlooked detail of incident handling: between two inventory scans the state of a host can change any number of times and still end up back in its original condition. For example, in incidents involving malicious mailings, visits to infected websites, detection of indicators of compromise, etc., it is very important to know whether the antivirus was active at that specific moment.

We solve this problem in two ways:

- Separate alerts for disabled / changed state of software or of the host.

- Exporting statuses per host, or using host logs to update the current status.

This information is automatically added to the corresponding incident.
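The second approach can be sketched as a per-host timeline of state changes that is queried with the event time instead of the current time. The `StatusTimeline` class and the state labels are hypothetical; a real implementation would be fed from antivirus or host logs.

```python
import bisect
from datetime import datetime
from typing import Dict, List, Optional


class StatusTimeline:
    """Keeps state changes per host and answers 'what was the state at time T?'."""

    def __init__(self) -> None:
        self._times: Dict[str, List[datetime]] = {}
        self._states: Dict[str, List[str]] = {}

    def record(self, host: str, when: datetime, state: str) -> None:
        """Store a state change, keeping each host's changes sorted by time."""
        times = self._times.setdefault(host, [])
        states = self._states.setdefault(host, [])
        pos = bisect.bisect_right(times, when)
        times.insert(pos, when)
        states.insert(pos, state)

    def state_at(self, host: str, when: datetime) -> Optional[str]:
        """Return the last state recorded at or before the given moment."""
        times = self._times.get(host, [])
        pos = bisect.bisect_right(times, when)
        return self._states[host][pos - 1] if pos else None


# The antivirus was stopped for an hour and then restarted.
av = StatusTimeline()
av.record("nb-hostname", datetime(2024, 1, 15, 9, 0), "running")
av.record("nb-hostname", datetime(2024, 1, 15, 10, 0), "stopped")
av.record("nb-hostname", datetime(2024, 1, 15, 11, 0), "running")
```

A monthly scan before and after this day would show the antivirus as running both times, while a query for the time of an incident inside the gap shows that protection was off.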

Keeping asset information up to date

As we have already said, no matter how scrupulously you describe the infrastructure, the description will become obsolete very soon. In just a month or two the customer's network will start to change: new services or hosts will appear, old ones will disappear, and some systems will change their purpose.

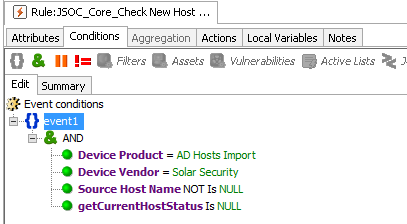

Here are a few approaches we use in Solar JSOC to keep the context current:

- Adding asset information on demand, when a suspected incident occurs. If the analysis shows that the asset (IP address) is missing from our network model, we ask the customer for information about the host as part of the notification. This applies to both assets and subnets.

- Regular inventory of potential new hosts. There are several options here:

  - Export hosts from the domain, antivirus, etc., and compare the result with the current state. This is done with the simplest correlation rule, whose conditions can specify a particular OrganizationUnit, a hostname mask, etc., depending on what you want to see.

  - Export the IP-to-MAC mapping from switches in selected network segments.

  - Track host network activity.

  As a bonus to the process described above, we get information about hosts that have not appeared in these reports for a long time and probably no longer exist. For example, you can "follow the employee dismissal process" and build alerts / reports on a host / KM being moved to "OU = disabled".

- And finally, the most laborious but very important process: "manually" collecting information about changes, new subsystems or scaling of old ones from the customer's responsible specialists. In Solar JSOC this is done by the contract's service manager, and this work often yields significantly more than the automation described above.
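The regular comparison of exports with the current asset model comes down to set differences plus an age check. A minimal sketch, assuming host names have already been normalized; the function names and the 60-day threshold are illustrative only.

```python
from datetime import date, timedelta
from typing import Dict, List, Set


def diff_inventory(known: Set[str], discovered: Set[str]) -> Dict[str, List[str]]:
    """Compare the asset model with a fresh export (domain, antivirus, ...)."""
    return {
        "new": sorted(discovered - known),      # candidates to add to the model
        "missing": sorted(known - discovered),  # candidates for a closer look
    }


def stale_hosts(last_seen: Dict[str, date], today: date,
                max_age_days: int = 60) -> List[str]:
    """Hosts not seen in any report for longer than the threshold."""
    cutoff = today - timedelta(days=max_age_days)
    return sorted(host for host, seen in last_seen.items() if seen < cutoff)
```

The "new" list feeds the request for host information from the customer, while the stale list supports the cleanup of hosts that probably no longer exist.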

Is the game not worth the candle, and the result not worth the effort?

Surely more than one practitioner among our readers has thought by the end of this article: why build this whole elaborate construction of recording and updating an asset model? Maybe it is easier and "cheaper" to deal with each host manually when an incident occurs, and the end customer does not need such a thorough inventory?

We will try to show with a few examples how the asset model simplifies and automates part of the SOC operator's activities and helps in his daily work.

The ability to filter out the noise

"Proper" SIEM systems let you use the asset model automatically to move potential incidents into exceptions. This can significantly reduce the time the operator spends analyzing "garbage".

For example, we often begin collecting the first scenario triggers from the customer before the initial network model is filled in, because the rule for detecting connections to C&C servers and malicious sites can be launched quite quickly. The first results often contain a large number of triggers.

Does this mean that the customer's entire network is teeming with malware or, conversely, that the C&C reputation databases produce that many false positives? As a rule, neither. It is simply that guest Wi-Fi operates in the customer's branches, and all of its user sessions go through the same Internet access infrastructure as the main corporate network and therefore fall within the scope of monitoring.

Does the customer care how secure the phones of outsiders who merely used his guest Wi-Fi are? Should the SOC operator handle such incidents? Probably not. So excluding guest Wi-Fi networks from this scenario is a very reasonable and desirable step.

Or another example: a company's security policy usually restricts the use of remote administration tools. But helpdesk employees, whose duties include servicing remote machines, business travelers or remote points of sale, are often forced to use such tools in their daily work. Isn't it easier for the SOC to identify where the helpdesk employees sit and ignore such events than to handle each suspected incident manually?
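Both examples amount to per-scenario exception lists built from the network model. A minimal sketch, assuming exceptions are expressed as subnets per detection rule; the rule names and address ranges are hypothetical.

```python
import ipaddress
from typing import Dict, List

# Hypothetical exceptions: detection rule -> subnets whose triggers are suppressed.
EXCEPTIONS: Dict[str, List[str]] = {
    "c2_callback": ["10.10.50.0/24"],        # guest Wi-Fi segment
    "remote_admin_tool": ["10.10.20.0/28"],  # helpdesk workstations
}


def is_suppressed(rule: str, source_ip: str) -> bool:
    """True if the trigger comes from a segment excluded for this rule."""
    addr = ipaddress.ip_address(source_ip)
    return any(addr in ipaddress.ip_network(net)
               for net in EXCEPTIONS.get(rule, []))
```

Exceptions are scoped to a rule on purpose: a remote administration tool started from the guest Wi-Fi segment is still reported, because only the C&C scenario is suppressed there.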

Increasing the criticality and priority of an incident depending on the specific asset

This situation, in my opinion, needs no additional comment either. An incident on a CBD workstation in a bank, or at the junction of the corporate and technological segments in the energy sector, will always be far more important and urgent than even a detected launch of hacking tools in the user segment. Such an incident should be detected and handled much faster.

Our practice in recent years shows that attackers have learned to exploit weak incident prioritization in young SOCs. As one of the final stages of an attack, they use a so-called "incident storm": they create dozens of suspected incidents in different parts of the infrastructure before seizing the target resource or compromising information. A SOC operator who processes dozens of incidents one after another simply does not reach the right, truly critical one until it is too late, whereas proper prioritization would let him focus on the most important incident and prevent the consequences of the attack.
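A minimal sketch of asset-aware prioritization under an "incident storm", assuming each incident carries a base severity from the detection rule and a criticality coefficient from the asset model. The `Incident` fields and the multiplication formula are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Incident:
    incident_id: str
    severity: int             # base severity of the detection rule
    asset_criticality: float  # coefficient taken from the asset model


def prioritize(queue: List[Incident]) -> List[Incident]:
    """Order incidents by severity weighted with asset criticality, highest first."""
    # sorted() is stable, so incidents with equal weight keep their arrival order.
    return sorted(queue,
                  key=lambda inc: inc.severity * inc.asset_criticality,
                  reverse=True)


# A storm of noisy incidents in the user segment arrives first,
# followed by a quieter incident on a critical workstation.
storm = [
    Incident("user-segment-1", 3, 1.0),
    Incident("user-segment-2", 3, 1.0),
    Incident("cbd-workstation", 2, 4.0),
]
```

Processed in arrival order, the critical incident would be handled last; after weighting it moves to the front of the queue even though its base severity is lower.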

The ability to see the strange

The more information about the asset and the overall situation the operator has when he starts analyzing an incident, the faster and the more accurate his decision will be. And, of course, the more likely it is that a real, complex attack noticed through a small anomaly will be effectively identified and analyzed in the SOC.

In short, whoever owns the information owns the world. On that note I would like to end our dive into asset management. For any remaining questions, practical or otherwise, welcome to the comments. Until we meet again in the "SOC for ..." series.