“Tolik - honey”, or how we ran an IT satisfaction survey for 20 thousand people

Are our employees actually comfortable working with internal systems? In a small company this is an easy question: the IT service knows nearly every employee and their systems by heart. But our IT staff can hardly remember 20 thousand people, and that is how many VTB employees use IT services in their work. We asked just one question, and it was enough to learn a lot about our users. In this post we share our experience of organizing a mass satisfaction survey; we hope you find it useful too.

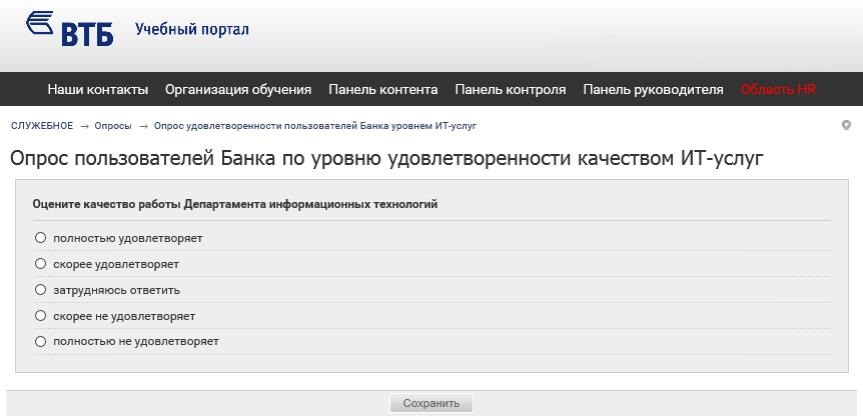

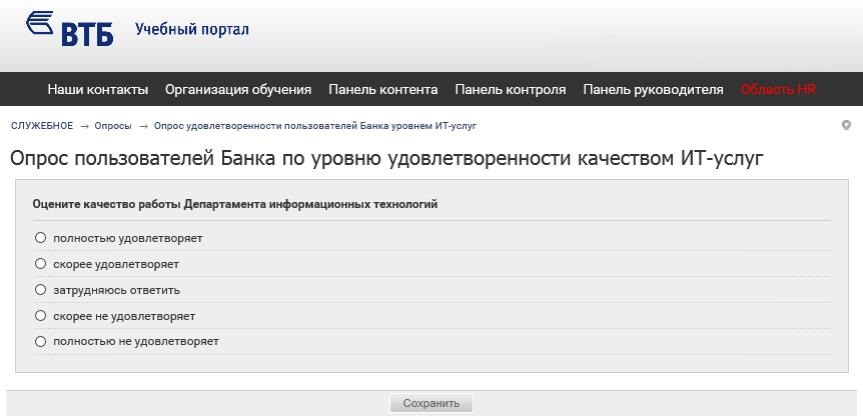

We had not run a large-scale study of user satisfaction for a long time. Individual requests came in, we sorted them and gave feedback. The IT department then reviewed the criticism and suggestions and looked for problem areas. The polls we did run served other purposes: for example, we wanted to know how individual units were performing, or whether a communication channel needed to be expanded.

On top of that, everything was done anonymously, so we could not break the data down by user category, for example by region. The result was like the well-known song about the beautiful marquise: according to the polls everything was fine, but in some conversations, not so much.

Concept

We needed a new approach. Here are the basic principles that we put into it:

- the survey should have maximum coverage;

- the survey should require minimal effort from the participants, ideally - one click;

- the survey should not be anonymous;

- the survey should be as general as possible, without suggesting specific scenarios - this lets us collect a wide variety of information and analyze the results in depth.

Simply put, we wanted to learn something new about our shortcomings rather than rest on our laurels and rely on a high percentage of satisfied employees.

To involve as many people as possible, we tried to word the questions as clearly and concisely as we could. Many users have no idea what an “IT service” is; they just need a working computer. “Is the ‘accounting and reporting’ service good for you?” - “I don’t know. Just make 1C work.” In the end we settled on a single question with five possible answers, from “completely unsatisfied” to “completely satisfied”.

As for mandatory authentication, mentioned above: without it the data cannot be sliced in any way, and it becomes far less useful.

We brought in our advertising service and the HR department to prepare the survey. They helped make the survey design appealing, so that people would want to click the link and pick an answer. Together we decided to send invitations by e-mail and to publish an announcement on the corporate portal. This let us draw attention to the survey more than once without being pushy and provoking a negative reaction.

Conducting a survey

We had three weeks to prepare, and for information security reasons we could not use external services. So we built the survey in WebTutor.

The survey ran for about two weeks, so even employees who went on vacation could take part. We surveyed employees who had both an Active Directory account and an e-mail address when the survey started, that is, the attributes of a “live” user. An AD account without a mailbox may be some kind of technical account, so we did not count those.
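The audience rule above can be sketched in a few lines. This is only an illustration, assuming the directory has already been exported into simple records; the `DirectoryAccount` shape, the logins and the addresses are hypothetical and not taken from the actual VTB setup.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DirectoryAccount:
    """One exported directory record (hypothetical shape)."""
    login: str
    email: Optional[str]


def survey_audience(accounts):
    """Keep accounts with a non-empty e-mail; the rest are treated as technical."""
    return [a for a in accounts if a.email and a.email.strip()]


# Made-up sample records, for illustration only.
accounts = [
    DirectoryAccount("ivanov", "ivanov@example.com"),
    DirectoryAccount("svc-backup", None),    # no mailbox: likely a technical account
    DirectoryAccount("petrova", "petrova@example.com"),
    DirectoryAccount("printer-01", ""),      # empty address counts as "no mail"
]

audience = survey_audience(accounts)
print([a.login for a in audience])  # ['ivanov', 'petrova']
```

Fixing the list at the start of the survey, as described above, also gives a stable denominator for the response rate.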

As a result, we arrived at the simplest possible flow: an employee needed only two clicks. The first was on the link in one of the e-mail newsletters or in the news item on the portal, the second on the chosen answer to the single question. For the most active, we also added a comment field.

We did not stress that the survey would not be anonymous, but we did not claim the opposite either. Any competent person understands that a survey inside a corporate network cannot be anonymous: anyone using the network is already authenticated. What we did emphasize was that we needed constructive criticism of our work. That is what the optional but important comment field was for.

What we learned and what we did

About 3 thousand people took part in our voluntary survey. The weighted average user satisfaction was 82.5 (out of a possible 100).
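For readers wondering how a five-option answer turns into a score out of 100, here is a minimal sketch of one common way to do it. The post names only the two extreme options and does not say how the options were scored, so the three middle labels, the even 0/25/50/75/100 scale and the counts below are all assumptions chosen for illustration; they do not reproduce the 82.5 figure.

```python
# Assumed mapping of answers to points; only the two extremes are named in the post.
SCORES = {
    "completely unsatisfied": 0,
    "rather unsatisfied": 25,    # hypothetical label
    "neutral": 50,               # hypothetical label
    "rather satisfied": 75,      # hypothetical label
    "completely satisfied": 100,
}


def weighted_satisfaction(counts):
    """Average score over all responses, weighting each option by its vote count."""
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no responses to average")
    return sum(SCORES[answer] * n for answer, n in counts.items()) / total


# Made-up counts, not the survey's real distribution.
example_counts = {
    "completely unsatisfied": 10,
    "rather unsatisfied": 10,
    "neutral": 20,
    "rather satisfied": 60,
    "completely satisfied": 100,
}

print(weighted_satisfaction(example_counts))  # 78.75
```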

Despite the simplicity of the survey, we got a lot of data for analysis. Incidentally, we had to analyze it directly in the database, because Excel could not cope with the resulting volume.

We enriched the survey results with data from the HR IT system, matching the personnel records to each respondent by personnel number. This gave us regions, positions, divisions and other HR breakdowns.

After that, we added data from ITSM: 360,000 requests per year to the internal support team. This made it possible to infer from indirect evidence why a user gave a particular rating:
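A join on the personnel number is all it takes to get such breakdowns. The sketch below uses an in-memory SQLite database purely as a stand-in for whatever database was actually used; the table names, column names and sample rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE responses (personnel_no TEXT PRIMARY KEY, score INTEGER, comment TEXT);
    CREATE TABLE hr (personnel_no TEXT PRIMARY KEY, region TEXT, division TEXT);

    -- Made-up rows, for illustration only.
    INSERT INTO responses VALUES ('001', 100, NULL),
                                 ('002', 50, 'old computer'),
                                 ('003', 75, NULL);
    INSERT INTO hr VALUES ('001', 'Moscow', 'Retail'),
                          ('002', 'Kazan', 'Retail'),
                          ('003', 'Moscow', 'Back office');
""")

ALLOWED_SLICES = {"region", "division"}


def average_by(column):
    """Average score and response count per value of one HR attribute."""
    if column not in ALLOWED_SLICES:  # whitelist, since the name is put into the SQL text
        raise ValueError(f"unsupported slice: {column}")
    query = f"""
        SELECT hr.{column}, AVG(r.score), COUNT(*)
        FROM responses AS r
        JOIN hr ON hr.personnel_no = r.personnel_no
        GROUP BY hr.{column}
        ORDER BY hr.{column}
    """
    return conn.execute(query).fetchall()


print(average_by("region"))  # [('Kazan', 50.0, 1), ('Moscow', 87.5, 2)]
```

This is also why the survey could not be anonymous: without a key such as the personnel number, there is nothing to join on.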

- a user answers that he is unhappy with what he uses. We see that over the past few months all of his support requests were handled past the deadline. The reason for the dissatisfaction is clear;

- an employee gives a “three” and says he cannot call things good or bad: everything seems fine, but the computers are very old. That is a reason to look at his history with technical support. And indeed, within a year he filed 10 requests along the lines of “computer is slow”, “won't turn on”, “power supply burned out”;

- some users never contacted support at all, yet rated it “poor”. This is an even more interesting case, and we used various data breakdowns to try to understand its causes.
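The three cases above can be expressed as a simple rule-based triage over a respondent's score and ticket history. This is a rough sketch rather than the analysis the team actually ran: the `Ticket` fields, the thresholds (a middle answer of 50 counted as "low", ten requests counted as "many") and the label strings are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class Ticket:
    """One support request from the ITSM system (hypothetical shape)."""
    subject: str
    sla_breached: bool  # True if the request was resolved past its deadline


def explain_rating(score, tickets, low_score=50, many_tickets=10):
    """Suggest a likely reason for a low rating based on the user's ticket history."""
    if score > low_score:
        return "no follow-up needed"
    if not tickets:
        return "low rating without any support contact"
    if all(t.sla_breached for t in tickets):
        return "all requests handled past the deadline"
    if len(tickets) >= many_tickets:
        return "frequent requests, check the hardware"
    return "needs manual review"


# Made-up histories matching the three cases described above.
late = [Ticket("access request", True), Ticket("password reset", True)]
old_pc = [Ticket("computer is slow", False) for _ in range(10)]

print(explain_rating(25, late))    # all requests handled past the deadline
print(explain_rating(50, old_pc))  # frequent requests, check the hardware
print(explain_rating(0, []))       # low rating without any support contact
```

Rules like these only narrow down where to look; the comments and a person reading them still do most of the explaining.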

We got a lot of useful information from the comments. It became clear where each department's sore spots were: some were unhappy with the software, others with the computers, others with something else. The comments were very diverse, from “Tolik - honey” (from two different users, no less) to reflections on the way we ask: “Very wrong questions: I can’t put into one answer the whole range of emotions that overwhelm me when you ask to be scolded.”

There was negativity in the style of “everything is bad, nothing works”, but there were also many detailed, constructive comments, which we could pass straight to the relevant IT departments. Many wrote: “I might want to give feedback, but I don’t, because it is inconvenient.” In response, we designed a new feedback form with emoticons instead of text answers: pick an emoticon, press “Send”, and that's it. Just in case, the form also explains that “excellent” means you are completely delighted, and if your problem was simply solved properly, the rating is “good”. In December 99.5% of the answers were “excellent”; now the share is 92%, so people have started choosing more thoughtfully.

What else did the survey lead to? We improved the stability and speed of one of the workflow systems. We made a convenient, easy-to-understand page for the IT support service on the corporate portal. We improved a number of internal procedures for working with the internal quality control service. And we ran additional polls on specific topics.

Additional polls

In the main survey, many employees complained about printers and the process of replacing cartridges. We ran an additional survey on the convenience of printing in the regions and used its results to prepare an action plan for specific cities. Here, by the way, is the final map:

Future plans

Many more changes are planned based on the survey. We want to create a page on the corporate portal with basic instructions for users, like those given to newcomers: where to turn with questions about corporate communications, computers and software, obtaining passwords, using IT services and other useful things. We are also looking at how to raise the IT literacy of employees outside the IT department.

We plan to run the next large survey in the near future. We want to repeat roughly the same thing but automate more of the results processing: after the merger of VTB and VTB24, the survey audience will grow to about 50 thousand people.