Dagaz: Faster, Better, Smarter ...

- As angels soar together in a row ...

- As angels soar together in a row ... - Friendly in a row, together in a row ...

- Raise their heads! And they fly! And they fly! ..

Sir Terry Pratchett “The Night Watch”

Sooner or later, there always comes a moment when quantity inevitably turns into quality. New games are accumulating that need to be comprehended, the project is overgrown with new opportunities, and opportunities are combined with each other. If everything does not collapse under its own weight, the result can exceed the wildest expectations. What does not kill makes us stronger!

Here is an example of such a “sum of technology." The game, in general, is not too complicated, but very unexpected. Apocalypse - there are four horsemen on the field and the infantry supporting them. Usual chess moves. Pawns, reaching the last line, are expected to become riders, but the number of riders on each side cannot exceed two. The player who first lost all his riders loses. The devil, as always, is hiding in the details. The figures walk at the same time!

What does this mean from a project perspective?

First of all, like puzzles, this is a “game for one” - the player makes a move, and the bot “mixes” his own into it, not knowing what kind of move a person made. This is a game with incomplete information, although in a very unusual form for us. There are no dice or “fog of war” here, but each player, performing a move, does not know how his opponent comes down at the same time.

Of course, collisions are possible. For example, both players can simultaneously go to the same empty field, or a pawn may try to eat a piece that moves away from the blow in the same move. Rules of the gamewell describe these nuances. A pawn is allowed to make a diagonal move, provided that it is about to beat someone, even if the piece has left this position, and the result of the conflict for an empty field is determined by the rank of the pieces. The horseman always kills a pawn, but if the pieces are equal, they are both destroyed (which, by the way, makes draws possible).

The task is not easy, but the mechanism of “expansion of moves” copes with it perfectly . Indeed, as I have repeatedly said before, at the post-processing stage we can not only prohibit a move (violating, for example, the invariant of the game), but also add any arbitrary actions to it, including those obtained from the move formed by the bot.

There is really one subtlety - usually, post-processing is performed immediately after generation, for all generated moves. In this case, this cannot be done, since this will inevitably lead to a “combinatorial explosion” (although the game is small, it’s still not enough pleasant). Most importantly, this is not necessary. There is a simpler way. Nobody said that we can not rewrite the controller . Modularity has its advantages.

Of course, collisions are possible. For example, both players can simultaneously go to the same empty field, or a pawn may try to eat a piece that moves away from the blow in the same move. Rules of the gamewell describe these nuances. A pawn is allowed to make a diagonal move, provided that it is about to beat someone, even if the piece has left this position, and the result of the conflict for an empty field is determined by the rank of the pieces. The horseman always kills a pawn, but if the pieces are equal, they are both destroyed (which, by the way, makes draws possible).

Merge moves

Dagaz.Model.join = function(design, board, a, b) {

var x = getPiece(design, board, a);

var y = getPiece(design, board, b);

if ((x !== null) && (y !== null)) {

var r = Dagaz.Model.createMove();

r.protected = [];

checkPromotion(design, board, a, x, b);

checkPromotion(design, board, b, y, a);

var p = a.actions[0][1][0];

var q = b.actions[0][1][0];

if ((p == q) && (x.type > y.type)) {

r.actions.push(b.actions[0]);

r.actions.push(a.actions[0]);

} else {

r.actions.push(a.actions[0]);

r.actions.push(b.actions[0]);

}

if (p == q) {

if (x.type > y.type) {

r.actions[0][2] = [ Dagaz.Model.createPiece(2, 2) ];

r.protected.push(x.player);

r.captured = p;

} else {

if (x.type == y.type) {

r.actions[0][2] = [ Dagaz.Model.createPiece(2, 1) ];

r.actions[1][2] = [ Dagaz.Model.createPiece(2, 1) ];

r.capturePiece(p);

} else {

r.actions[0][2] = [ Dagaz.Model.createPiece(2, 1) ];

r.protected.push(y.player);

r.captured = p;

}

}

}

return r;

} else {

return a;

}

}

The task is not easy, but the mechanism of “expansion of moves” copes with it perfectly . Indeed, as I have repeatedly said before, at the post-processing stage we can not only prohibit a move (violating, for example, the invariant of the game), but also add any arbitrary actions to it, including those obtained from the move formed by the bot.

There is really one subtlety - usually, post-processing is performed immediately after generation, for all generated moves. In this case, this cannot be done, since this will inevitably lead to a “combinatorial explosion” (although the game is small, it’s still not enough pleasant). Most importantly, this is not necessary. There is a simpler way. Nobody said that we can not rewrite the controller . Modularity has its advantages.

From the point of view of the AI bot, the game, in many ways, "turns out to be." There is no need to perform viewing for many moves in depth. It is important to guess how the enemy will walk! The tactics of the game are changing. It is practically useless to try to attack the riders who are "under battle" - they will probably run away. “Forks” are more promising, but you have to choose which of the riders to beat. If the enemy has only one rider (and you have their complete set), you can try to "watch" him by going to his chosen field. Just don't make it a pawn! There are nuances associated with the transformation of figures, but, in general ...

It all comes down to a set of heuristics

...

var isCovered = function(design, board, pos, player, type) {

var r = false;

_.each(Dagaz.Model.GetCover(design, board)[pos], function(pos) {

var piece = board.getPiece(pos);

if ((piece !== null) && (piece.player == player)) {

if (_.isUndefined(type) || (piece.type == type)) {

r = true;

}

}

});

return r;

}

Ai.prototype.getMove = function(ctx) {

var moves = Dagaz.AI.generate(ctx, ctx.board);

if (moves.length == 0) {

return { done: true, ai: "nothing" };

}

timestamp = Date.now();

var enemies = 0;

var friends = 0;

_.each(ctx.design.allPositions(), function(pos) {

var piece = ctx.board.getPiece(pos);

if ((piece !== null) && (piece.type == 1)) {

if (piece.player == 1) {

enemies++;

} else {

friends++

}

}

});

var eval = -MAXVALUE;

var best = null;

_.each(moves, function(move) {

var e = _.random(0, NOISE_FACTOR);

if (move.isSimpleMove()) {

var pos = move.actions[0][0][0];

var trg = move.actions[0][1][0];

var piece = ctx.board.getPiece(pos);

if (piece !== null) {

var target = ctx.board.getPiece(trg);

if (piece.type == 1) {

if (isCovered(ctx.design, ctx.board, pos, 1)) e += MAXVALUE;

if (target === null) {

if (isCovered(ctx.design, ctx.board, trg, 1, 0)) e += LARGE_BONUS;

if (isCovered(ctx.design, ctx.board, trg, 1, 1)) {

if ((enemies == 1) && (friends == 2)) {

e += BONUS;

} else {

e -= MAXVALUE;

}

}

} else {

if (target.type == 1) {

e += SMALL_BONUS;

} else {

e += BONUS;

}

}

} else {

if (isCovered(ctx.design, ctx.board, pos, 1)) e += SMALL_BONUS;

if ((target === null) && isCovered(ctx.design, ctx.board, trg, 1)) e -= MAXVALUE;

if (friends == 1) e += BONUS;

if (target !== null) e += SMALL_BONUS;

if ((move.actions[0][2] !== null) && (move.actions[0][2][0].type != piece.type)) {

if (friends == 1) {

e += MAXVALUE;

} else {

e -= MAXVALUE;

}

}

}

}

}

if ((best === null) || (eval < e)) {

console.log("Move: " + move.toString() + ", eval = " + e);

best = move;

eval = e;

}

});

return {

done: true,

move: best,

time: Date.now() - timestamp,

ai: "aggressive"

};

}

The other extreme is that the games are large and complex so that a little viewing in depth is technically impossible for them. Here we are forced to use a more casual AI , viewing the position only 1-2 moves forward, and even to this depth, we will not be able to view all the available moves! In any case, for a time convenient for a person to search for a move by a bot for 2-3 seconds.

A little more about performance

Large and complex games expose all performance issues. Usually, how fast the code runs is related to the quality of the AI work (the more positions it manages to consider in the allotted time, the better it works), but sometimes performance problems become more obvious. In the process of working on Tenjiku shogi , I noticed that in some positions the reaction time of the user interface became simply indecently large (about 10-15 seconds).

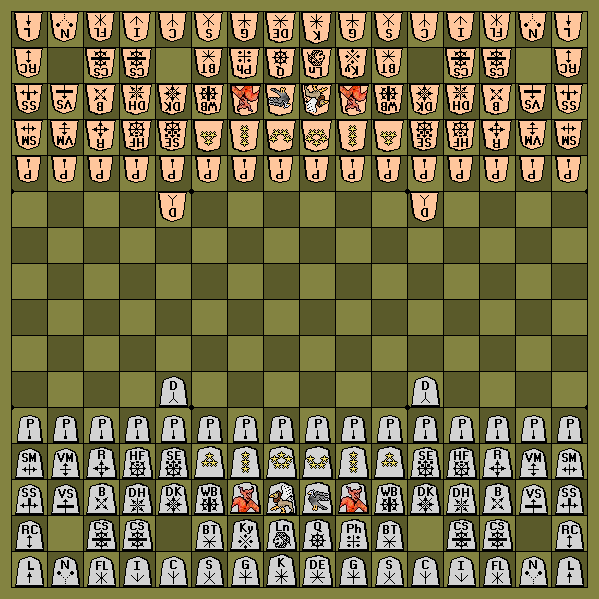

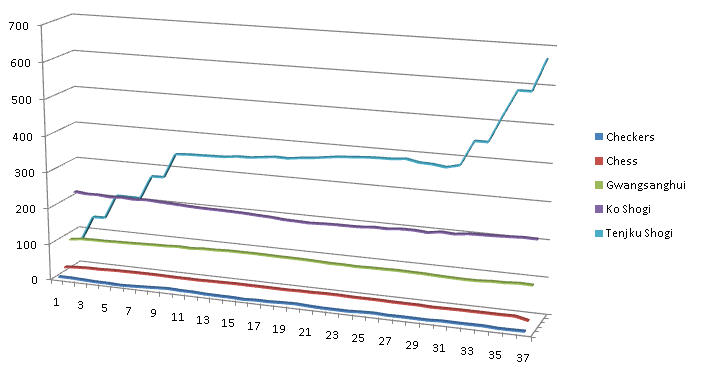

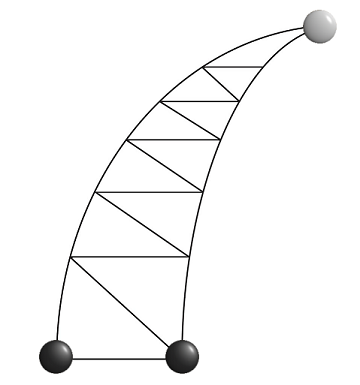

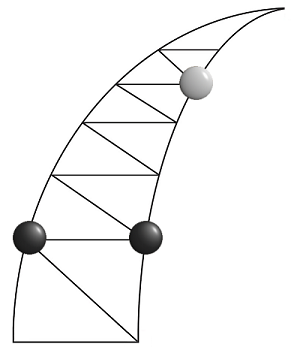

It’s all about the “Fiery Demon” (and similar figures). Pay attention to the diagram on the right. In addition to the usual “range” -attacks, the “demon” has the right, at any time, to perform up to three one-step movements in an arbitrary direction, while he is allowed to return to previously completed fields. This is a true "combinatorial killer" performance! In the initial position, when all such pieces are “squeezed”, this effect is not so pronounced, but when they go to the operational space ... Those who wish can calculate the number of possible moves on their own (the diagram below shows the graphs of changes in the average number of allowed moves during the game , for several famous games).

Here you should talk a bit about the architecture of Dagaz. The main idea is that before transferring control to a user or bot, the game model generates all possible moves from the current position. This allows you to consider the totality of moves “in its entirety” and helps to solve a number of Zillions of Games problems related to compound moves. In addition, this approach is very convenient for developing bots. But there is one problem.

For the user, a complex compound move is a sequencevarious actions (movements, captures and, possibly, dropping new pieces on the board). Somewhere there should be a code that allows, according to the sequence of user “clicks,” to select a single move from a previously formed and possibly large list. And such code in Dagaz, of course, is .

See what the problem is? The getStops function builds a list of all the final fields of each move and, for this, iterates over all the moves in the cycle, but with the smartFrom or smartTo options enabled (the options for immediately executing a move on the first “click”, in the absence of alternative options), a nested search of all moves is performed . A lot of moves are formed!

On small games, such as checkers or chess, the error did not manifest itself. Even in the initial position of Tenjiku shogi, she was not noticeable. It took “productivity killers" to identify it. And to localize the error, the KPI module was very usefulwithout which I simply would not know where to look for the problem. Now the bug is fixed and, as a result, all the code has become better.

It’s all about the “Fiery Demon” (and similar figures). Pay attention to the diagram on the right. In addition to the usual “range” -attacks, the “demon” has the right, at any time, to perform up to three one-step movements in an arbitrary direction, while he is allowed to return to previously completed fields. This is a true "combinatorial killer" performance! In the initial position, when all such pieces are “squeezed”, this effect is not so pronounced, but when they go to the operational space ... Those who wish can calculate the number of possible moves on their own (the diagram below shows the graphs of changes in the average number of allowed moves during the game , for several famous games).

Here you should talk a bit about the architecture of Dagaz. The main idea is that before transferring control to a user or bot, the game model generates all possible moves from the current position. This allows you to consider the totality of moves “in its entirety” and helps to solve a number of Zillions of Games problems related to compound moves. In addition, this approach is very convenient for developing bots. But there is one problem.

For the user, a complex compound move is a sequencevarious actions (movements, captures and, possibly, dropping new pieces on the board). Somewhere there should be a code that allows, according to the sequence of user “clicks,” to select a single move from a previously formed and possibly large list. And such code in Dagaz, of course, is .

There was a mistake in it

MoveList.prototype.isUniqueFrom = function(pos) {

var c = 0;

_.each(this.moves, function(move) {

_.each(this.getActions(move), function(action) {

if ((action[0] !== null) && (_.indexOf(action[0], pos) >= 0)) c++;

});

}, this);

return c == 1;

}

MoveList.prototype.isUniqueTo = function(pos) {

var c = 0;

_.each(this.moves, function(move) {

_.each(this.getActions(move), function(action) {

if ((action[1] !== null) && (_.indexOf(action[1], pos) >= 0)) c++;

});

}, this);

return c == 1;

}

...

MoveList.prototype.getStops = function() {

var result = this.getTargets();

_.each(this.moves, function(move) {

var actions = _.filter(this.getActions(move), isMove);

if ((actions.length > 0) && (actions[0][0].length == 1) &&

(actions[0][1].length == 1)) {

if (Dagaz.Model.smartFrom) {

if (this.isUniqueFrom(actions[0][0][0]) && !this.canPass()) {

result.push(actions[0][0][0]);

}

}

if (Dagaz.Model.smartTo) {

if (this.isUniqueTo(actions[0][1][0])) {

result.push(actions[0][1][0]);

}

}

} else {

...

}

}, this);

return _.uniq(result);

}

See what the problem is? The getStops function builds a list of all the final fields of each move and, for this, iterates over all the moves in the cycle, but with the smartFrom or smartTo options enabled (the options for immediately executing a move on the first “click”, in the absence of alternative options), a nested search of all moves is performed . A lot of moves are formed!

On small games, such as checkers or chess, the error did not manifest itself. Even in the initial position of Tenjiku shogi, she was not noticeable. It took “productivity killers" to identify it. And to localize the error, the KPI module was very usefulwithout which I simply would not know where to look for the problem. Now the bug is fixed and, as a result, all the code has become better.

So, we are limited in depth of viewing and must make the right (or at least not catastrophic) decision in a limited time. In this case, it is highly desirable that the following principles are ensured:

- Of course, the move leading to immediate victory must be chosen

- A move must not be chosen for which there is an answer leading to an immediate victory

- The selected move should provide the greatest improvement in position

How to evaluate a position?

The easiest way is to assess the material balance. Each type of figure is assigned a value, then add up the value of their figures and subtract the value of the opponent’s figures. The estimate is rough, but for really complex games, perhaps the only possible one. An improved assessment should take into account the mobility of the figures and their mutual threats (I will talk about this below). For “big” games with complex rules, evaluating mutual threats may be too expensive.

The second tool is heuristic. This is just a numerical assessment of the move , not the position, allowing us to distinguish between “bad” moves and “good” ones. Of course, first of all, “good” moves will be considered, and there simply may not be time left to consider “bad” ones. The simplest heuristic may include the value of the figure taken, but in addition, it is advisable to evaluate the value of the figure performing the move, possible transformations, threats, etc.

It is important to understand that the maximum value of the heuristic does not mean at all that this particular move will be chosen. Heuristics sets only the order of viewing moves. Within the framework of this order, the move that maximizes the value of the evaluation function (after completing the opponent’s retaliatory move with the maximum heuristic) is selected. Part of the moves can be forcibly excluded from consideration, giving them a negative heuristic value, but this tool should be used with caution only in cases where there is one hundred percent certainty that the move in question is not just useless, but it is harmful.

Remember, I talked about three principles above? It makes sense for royal figures (there may be several types of such figures in the game) to appropriate a very high cost. With this, we kill two birds with one stone: first, the move that takes the royal piece will receive the highest possible heuristic (and will always be considered first), in addition, the absence of the royal piece on the board will noticeably affect the value of the evaluation function, which is also very convenient. Unfortunately, as applied to Chess, this trick is not relevant, since the king is never taken in them.

The simplest evaluation function

Dagaz.AI.eval = function(design, params, board, player) {

var r = 0;

_.each(design.allPositions(), function(pos) {

var piece = board.getPiece(pos);

if (piece !== null) {

var v = design.price[piece.type];

if (piece.player != player) {

v = -v;

}

r += v;

}

});

return r;

}

The second tool is heuristic. This is just a numerical assessment of the move , not the position, allowing us to distinguish between “bad” moves and “good” ones. Of course, first of all, “good” moves will be considered, and there simply may not be time left to consider “bad” ones. The simplest heuristic may include the value of the figure taken, but in addition, it is advisable to evaluate the value of the figure performing the move, possible transformations, threats, etc.

Heuristic example

Dagaz.AI.heuristic = function(ai, design, board, move) {

var r = 0;

var player = board.player;

var start = null;

var stop = null;

var captures = [];

_.each(move.actions, function(a) {

if ((a[0] !== null) && (a[1] === null)) {

var pos = a[0][0];

var piece = board.getPiece(pos);

if ((piece !== null) && (piece.player != player)) {

r += design.price[piece.type] * ai.params.CAPTURING_FACTOR;

if (!_.isUndefined(board.bonus) && (board.bonus[pos] < 0)) {

r -= board.bonus[pos];

}

}

captures.push(pos);

}

if ((a[0] !== null) && (a[1] !== null)) {

if (start === null) {

start = a[0][0];

if (!_.isUndefined(board.bonus)) {

r += board.bonus[start];

}

}

stop = a[1][0];

}

});

var price = 0;

if (start !== null) {

var piece = board.getPiece(start);

if (piece !== null) {

price = design.price[piece.type];

}

}

_.each(move.actions, function(a) {

if ((a[0] !== null) && (a[1] !== null)) {

var pos = a[1][0];

var piece = board.getPiece(pos);

if (_.indexOf(captures, pos) < 0) {

if ((piece !== null) && (piece.player != player)) {

r += design.price[piece.type] * ai.params.CAPTURING_FACTOR;

if (!_.isUndefined(board.bonus)) {

r += Math.abs(board.bonus[pos]);

}

}

if (a[2] !== null) {

var promoted = a[2][0];

r -= price * ai.params.SUICIDE_FACTOR;

if (promoted.player == player) {

r += design.price[promoted.type] * ai.params.PROMOTING_FACTOR;

}

}

} else {

r -= price * ai.params.SUICIDE_FACTOR;

}

}

if ((a[0] === null) && (a[1] !== null) && (a[2] !== null) &&

(_.indexOf(captures, a[1][0]) < 0)) {

var pos = a[1][0];

var piece = board.getPiece(pos);

if (piece !== null) {

if (piece.player != player) {

r += design.price[piece.type] * ai.params.CAPTURING_FACTOR;

}

}

piece = a[2][0];

if (piece.player == player) {

r += design.price[piece.type] * ai.params.CREATING_FACTOR;

}

}

});

if (!_.isUndefined(board.cover) && (start !== null) && (stop !== null)) {

if (isAttacked(design, board, board.player, stop, start, price)) {

r -= price * ai.params.SUICIDE_FACTOR;

}

}

return r;

}

It is important to understand that the maximum value of the heuristic does not mean at all that this particular move will be chosen. Heuristics sets only the order of viewing moves. Within the framework of this order, the move that maximizes the value of the evaluation function (after completing the opponent’s retaliatory move with the maximum heuristic) is selected. Part of the moves can be forcibly excluded from consideration, giving them a negative heuristic value, but this tool should be used with caution only in cases where there is one hundred percent certainty that the move in question is not just useless, but it is harmful.

Cost figures

...

design.addPiece("King", 32, 10000);

design.addPiece("Prince", 33, 10000);

design.addPiece("Blind-Tiger", 34, 3);

design.addPiece("Drunk-Elephant", 35, 3);

design.addPiece("Ferocious-Leopard", 36, 3);

design.addPiece("Gold-General", 37, 3);

design.addPiece("Silver-General", 39, 2);

design.addPiece("Copper-General", 40, 2);

design.addPiece("Chariot-Soldier", 41, 18);

design.addPiece("Dog", 43, 1);

design.addPiece("Bishop-General", 44, 21);

design.addPiece("Rook-General", 46, 23);

design.addPiece("Vice-General", 48, 39);

design.addPiece("Great-General", 49, 45);

...

Remember, I talked about three principles above? It makes sense for royal figures (there may be several types of such figures in the game) to appropriate a very high cost. With this, we kill two birds with one stone: first, the move that takes the royal piece will receive the highest possible heuristic (and will always be considered first), in addition, the absence of the royal piece on the board will noticeably affect the value of the evaluation function, which is also very convenient. Unfortunately, as applied to Chess, this trick is not relevant, since the king is never taken in them.

It should be noted that the position should always be evaluated only at the end of the opponent’s retaliatory move! If there is a chain of exchanges, you should view it to the end, otherwise, it may turn out that the attacking piece will be given for less valuable.

The games of more than two players are another application of casual “one-way” AI. It's all about the evaluation function. Minimax algorithms work only if the score from the point of view of one player coincides with the score of the other, taken with the opposite sign. That which loses one gains another. If there are three ( or more ) players , everything breaks. Of course, you can still apply algorithms based on the Monte Carlo method , but other difficulties are associated with them.

Yonin Shogi - a variant of " Japanese chess " for four players. Most of the rules in this game remain unchanged, but the purpose of the game changes. The concept of "mat", to a certain extent, loses its meaning. In fact, if the "east" threatens the king of the "south" - this is not a reason to defend against the "shah" until the "west" and "north" say their word. On the other hand, if the threat is still not eliminated, the next move, the "east" will eat the king. Thus, Yonin Shogi is allowed to take kings (and this is the purpose of the game).

In addition, the game does not end with the capture of the king (a similar outcome would be too crowded for the remaining three players). The player who loses the king is eliminated from the game, losing the right to move. Since kings can be taken, they, like all other pieces, fall into the reserve and can be put on the board at any time. The player must put the king out of the reserve, if there are no kings left on the board. After all this, the goal of the game becomes obvious - the one who collects all four kings wins (when I made the game for Zillions of Games , Howard McCay helped me to realize this nuance ).

All of the above leads to a simple thought.

There are games in which complex in-depth search algorithms cannot be applied, and the very concept of an evaluation function should be rethought. To keep the quality of the work of AI algorithms acceptable, it is necessary to improve estimates and heuristics, possibly due to their complexity. The obvious way is to introduce mobility - the number of all possible moves made by the player, minus the opponent’s moves.

When using one-way algorithms, mobility assessment works wonders. The stupid game of the bot becomes more "meaningful." There is really one minus - in order to assess mobility, it is necessary to build (or at least recount) all possible moves for each of the players, and this is a very expensive operation. Since we are forced to do this anyway, I want to “squeeze” everything possible out of the generation of moves, and also minimize the number of such operations.

So I came up with the idea of "cover." It is just an array of arrays. For each of the fields (and any field in Dagaz is always encoded as an integer), a possibly empty list of positions is kept that contains the figures that can beat this field. At the same time (and this is important) no distinction is made between empty and occupied fields, as well as the owners of “beating” figures. The list of possible moves is calculated for all players at the same time , and due to caching, also once.

Of course, the universal algorithm for constructing a “cover” is far from suitable for all games. It works for Chess and Checkers , but for Spockno longer (since, in this game, the pieces can freely pass through other pieces of their color). This should not be confusing. As well as the evaluation function and heuristics, the algorithm for constructing a “cover” can be redefined using the name Dagaz.Model.GetCover . Moreover, even in cases where the universal algorithm works, it can be useful to think about customizing it. Specialized algorithms, as a rule, are more productive.

eval = A * material-balance + B * mobility; где A >= 0, B >= 0, A + B = 1

When using one-way algorithms, mobility assessment works wonders. The stupid game of the bot becomes more "meaningful." There is really one minus - in order to assess mobility, it is necessary to build (or at least recount) all possible moves for each of the players, and this is a very expensive operation. Since we are forced to do this anyway, I want to “squeeze” everything possible out of the generation of moves, and also minimize the number of such operations.

Coating

Dagaz.AI.eval = function(design, params, board, player) {

var r = 0;

var cover = board.getCover(design);

_.each(design.allPositions(), function(pos) {

var defended = _.filter(cover[pos], function(p) {

var piece = board.getPiece(p);

if (piece === null) return false;

return piece.player == player;

});

if (defended.length > 0) r++;

});

return r;

}

So I came up with the idea of "cover." It is just an array of arrays. For each of the fields (and any field in Dagaz is always encoded as an integer), a possibly empty list of positions is kept that contains the figures that can beat this field. At the same time (and this is important) no distinction is made between empty and occupied fields, as well as the owners of “beating” figures. The list of possible moves is calculated for all players at the same time , and due to caching, also once.

Of course, the universal algorithm for constructing a “cover” is far from suitable for all games. It works for Chess and Checkers , but for Spockno longer (since, in this game, the pieces can freely pass through other pieces of their color). This should not be confusing. As well as the evaluation function and heuristics, the algorithm for constructing a “cover” can be redefined using the name Dagaz.Model.GetCover . Moreover, even in cases where the universal algorithm works, it can be useful to think about customizing it. Specialized algorithms, as a rule, are more productive.

Here is an example of using “coverage” in a real game. This is still the simplest “one-step” algorithm and it is very easy to fool, but the bot’s actions seem meaningful! By analyzing the coverage, AI never leaves its pieces unprotected and seeks to “maximize” them on the board, maximizing the number of fields under battle. This is a good tactic, certainly leading to victory when playing against one “ Maharaja ”. This algorithm also performs well in " Charge of the Light Brigade ", " Dunsany's Chess ", " Horde Chess ", " Weak!"and other" small "chess games. It is obvious to me that using the" cover "will help to improve more complex algorithms, but before moving on to them, I need to practice.

Please note that somewhere around 5:39, all movements are sharply accelerated. This is explained simply. In parallel with the animation of the movement of the dice (just so that the person does not get bored), the bot searches for the target position and after it finds it, goes to it in a straight line, without wasting time on additional calculations.

By the way

I was not able to observe this effect on FireFox 52.6.0. In Chrome and even in IE, the algorithm found a solution in about 5 minutes, and in the Fire Fox, he continued to slowly move the dice for about fifteen minutes, until I knocked it out (while the memory was devouring as if into itself). I have not yet found an explanation for this phenomenon.

For me, this is a significant step forward compared to the previous version . The algorithm is a simple breadth-first search (not in depth ! This is important, because we are interested in the shortest solution?). Repeated positions are cut off with the help of Zobrist hash , which by the way makes possible (albeit extremely unlikely) a situation in which a solution may not be found as a result of a collision. In addition, search priority is given to nodes that are descendants of the current animation node (in order to minimize the number of necessary returns after finding a solution).

Along the way, I did one more thing

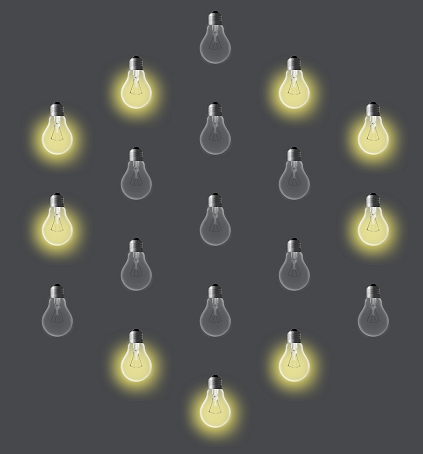

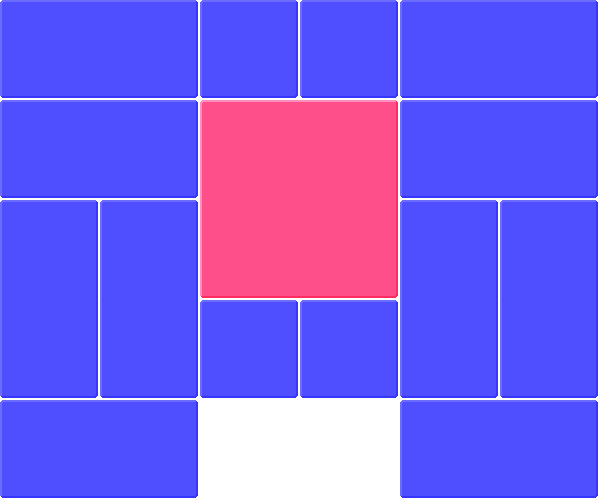

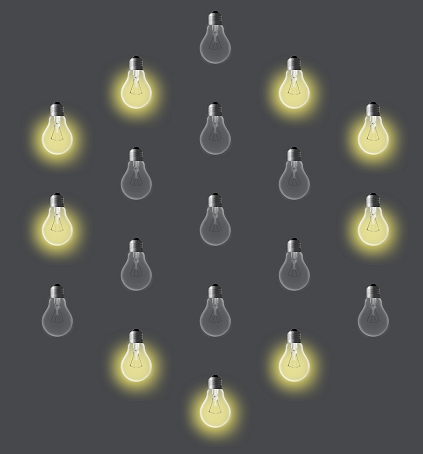

The fact is that in Zillions of Games there is an option whose purpose I never understood. It is called "progressive levels." As soon as you finish one level of the game, it immediately loads the next, just in order. Now, I think I’ve got the point. Try to turn off these bulbs:

Agree, this is addictive. And you can even look at how someone solves puzzles for you, infinitely . But this is only half the battle! Like almost any Dagaz option, my “progressive levels” can be customized.

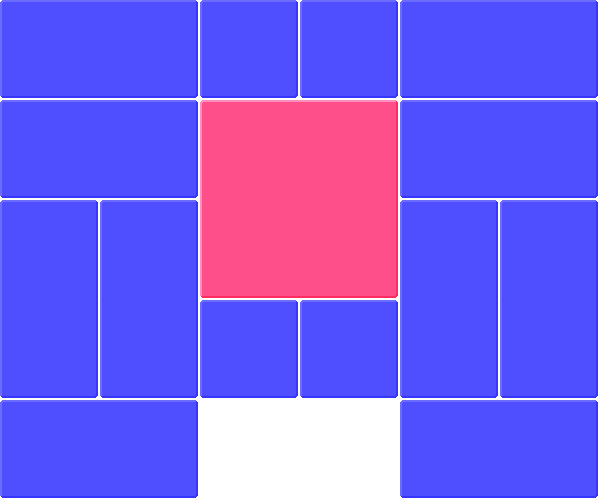

This puzzle was dedicated to the election of George Washington as president and, initially, I implemented it not quite correctly. For the correct decision, it is necessary to draw a red square through all four corners in turn, but in Dagaz you can set only one goal. This is where the custom “progressive levels” come into play .

As soon as we reach the next goal, the next level is loaded, but the arrangement of the figures, at the same time, is taken from the previous one! We just continue from where we left off. Moduleused to transfer positions between levels is valuable in itself. Now, if you turn on the browser log in time, you can return to any previously passed position, in almost every game. It is enough to copy in the URL the line following in the log after the inscription “Setup:”. It helps a lot in debugging!

The custom “progressive levels” are applicable in complex life-cycle games such as Kamisado . This game does not end when one of the figures of the last line is reached ! By the rules, the figure entering the enemy’s camp receives the rank of “sumoist” and additional opportunities, after which, the figures are placed according to the agreed algorithm and the next round begins. Now, Dagaz can do it! I have no doubt, the opportunity will be useful in the implementation of many traditional mankals . And with a very great desire, on its basis, it is quite possible to make some sort of simple bagel .

Agree, this is addictive. And you can even look at how someone solves puzzles for you, infinitely . But this is only half the battle! Like almost any Dagaz option, my “progressive levels” can be customized.

This puzzle was dedicated to the election of George Washington as president and, initially, I implemented it not quite correctly. For the correct decision, it is necessary to draw a red square through all four corners in turn, but in Dagaz you can set only one goal. This is where the custom “progressive levels” come into play .

As soon as we reach the next goal, the next level is loaded, but the arrangement of the figures, at the same time, is taken from the previous one! We just continue from where we left off. Moduleused to transfer positions between levels is valuable in itself. Now, if you turn on the browser log in time, you can return to any previously passed position, in almost every game. It is enough to copy in the URL the line following in the log after the inscription “Setup:”. It helps a lot in debugging!

The custom “progressive levels” are applicable in complex life-cycle games such as Kamisado . This game does not end when one of the figures of the last line is reached ! By the rules, the figure entering the enemy’s camp receives the rank of “sumoist” and additional opportunities, after which, the figures are placed according to the agreed algorithm and the next round begins. Now, Dagaz can do it! I have no doubt, the opportunity will be useful in the implementation of many traditional mankals . And with a very great desire, on its basis, it is quite possible to make some sort of simple bagel .

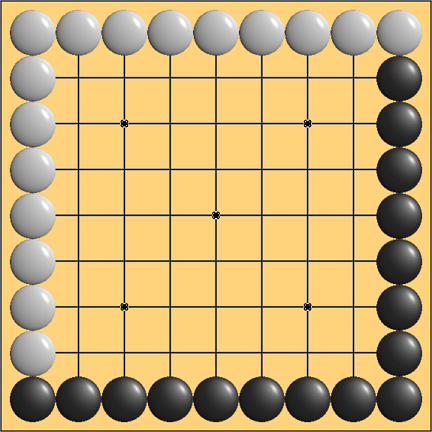

This is a children's game originally from China . Black stones cannot move down, white - moves in any direction. Before reading further, try yourself to "clamp" the white stone at the top of the "horn". If it doesn’t work out, then it’s okay:

I give a hint

It is somewhat similar to the French War Game or the Game of the Dwarves , but, in my opinion, Horn Chess is much deeper and more complex than these games. If you do not believe me, try to handle it . With all the external unpretentious, the game is not at all simple. I saw a couple of scientific articles (in Chinese) dedicated to her. And this is good material for debugging more complex AIs .

The main thing is not to mess with the signs!

Dagaz.AI.eval = function(design, params, board, player) {

var r = 0;

var white = null;

var black = [];

for (var pos = 0; pos < design.positions.length - 3; pos++) {

var piece = board.getPiece(pos);

if (piece !== null) {

if (piece.player == 1) {

if (white === null) {

black.push(pos);

} else {

r += MAXVAL / 2;

}

} else {

white = pos;

}

}

}

if (white !== null) {

r += white;

}

if (black.length == 2) {

if ((black[0] + 1 == black[1]) && (black[1] + 1 == white)) {

if (board.player == 1) {

r = MAXVAL;

} else {

r = -MAXVAL;

}

if (player == 1) {

r = -r;

}

}

}

return r;

}

Dagaz.AI.heuristic = function(ai, design, board, move) {

var b = board.apply(move);

return design.positions.length +

Dagaz.AI.eval(design, ai.params, b, board.player) -

Dagaz.AI.eval(design, ai.params, board, board.player);

}

...

AbAi.prototype.ab = function(ctx, node, a, b, deep) {

node.loss = 0;

this.expand(ctx, node);

if (node.goal !== null) {

return -node.goal * MAXVALUE;

}

if (deep <= 0) {

return -this.eval(ctx, node);

}

node.ix = 0;

node.m = a;

while ((node.ix < node.cache.length) && (node.m <= b) &&

(Date.now() - ctx.timestamp < this.params.AI_FRAME)) {

var n = node.cache[node.ix];

if (_.isUndefined(n.win)) {

var t = -this.ab(ctx, n, -b, -node.m, deep - 1);

if ((t !== null) && (t > node.m)) {

node.m = t;

node.best = node.ix;

}

} else {

node.loss++;

}

node.ix++;

}

return node.m;

}

Here is one of the key points. Move the right black stone up. The weaker “one-step” algorithm goes down, after which Black easily drives it into a corner. Minimax implementation - makes the correct move, after which it can win if Black makes a mistake. This does not mean that it is impossible to catch whites, but looking several moves ahead significantly improves their game!

Above, using the example of Tenjiku Shogi , I have already shown that various board games can be very different from each other. First of all, this concerns variability - the average number of permissible moves at a given stage of the game. This parameter determines the applicability of the AI algorithm when developing the bot. It’s clear that the minimax algorithm I used in Horn Chesswon't work fine in really big games like Ko Shogi or Gwangsanghui . On the other hand, the “aggressive” algorithm used in them will play too weakly in games such as Chess . But this is only part of the problem.

The main differences are in the mechanics. Fortunately, this is exactly what can be customized by the evaluation function and heuristics. The bad news is that to think of how they should be built is simply not always the case. Games with " Custodian " capture (such as the Tibetan " Ming-Mang ") illustrate my point perfectly.

It is not enough to simply assess the material balance!

var eval = Dagaz.AI.eval;

Dagaz.AI.eval = function(design, params, board, player) {

var r = eval(design, params, board, board.player);

var cover = board.getCover(design);

var cnt = null;

_.each(cover, function(list) {

var cn = 0;

_.each(list, function(pos) {

var piece = board.getPiece(pos);

if (piece !== null) {

if (piece.player == board.player) {

r--;

} else {

cn++;

}

}

});

if ((cnt === null) || (cnt < cn)) {

cnt = cn;

}

});

r += cnt * 3;

if (board.player != player) {

return -r;

} else {

return r;

}

}

var done = function(design, board, player, pos, dir, trace, captured) {

var p = design.navigate(player, pos, dir);

if (p !== null) {

var piece = board.getPiece(p);

if (piece !== null) {

if (piece.player == player) {

_.each(trace, function(pos) {

if (_.indexOf(captured, pos) < 0) {

captured.push(pos);

}

});

} else {

trace.push(p);

done(design, board, player, p, dir, trace, captured);

trace.pop();

}

}

}

}

var capture = function(design, board, player, pos, dir, dirs, trace, captured) {

var p = design.navigate(player, pos, dir);

if (p !== null) {

var piece = board.getPiece(p);

if (piece !== null) {

if (piece.player == player) {

_.each(trace, function(pos) {

if (_.indexOf(captured, pos) < 0) {

captured.push(pos);

}

});

} else {

trace.push(p);

capture(design, board, player, p, dir, dirs, trace, captured);

if (trace.length > 1) {

_.each(dirs, function(dir) {

var pos = design.navigate(player, p, dir);

if (pos !== null) {

var piece = board.getPiece(pos);

if ((piece !== null) && (piece.player != player)) {

trace.push(pos);

done(design, board, player, pos, dir, trace, captured);

trace.pop();

}

}

});

}

trace.pop();

}

}

}

}

var checkCapturing = function(design, board, pos, player, captured) {

var trace = [];

capture(design, board, player, pos, 3, [0, 1], trace, captured);

capture(design, board, player, pos, 1, [3, 2], trace, captured);

capture(design, board, player, pos, 2, [0, 1], trace, captured);

capture(design, board, player, pos, 0, [3, 2], trace, captured);

}

Dagaz.Model.GetCover = function(design, board) {

if (_.isUndefined(board.cover)) {

board.cover = [];

_.each(design.allPositions(), function(pos) {

board.cover[pos] = [];

if (board.getPiece(pos) === null) {

var neighbors = [];

var attackers = [];

_.each(design.allDirections(), function(dir) {

var p = design.navigate(1, pos, dir);

if (p !== null) {

var piece = board.getPiece(p);

if (piece !== null) {

neighbors.push(piece.player);

attackers.push(piece.player);

} else {

while (p !== null) {

piece = board.getPiece(p);

if (piece !== null) {

attackers.push(piece.player);

break;

}

p = design.navigate(1, p, dir);

}

}

}

});

if (neighbors.length > 1) {

var captured = [];

if ((_.indexOf(attackers, 1) >= 0) &&

(_.indexOf(neighbors, 2) >= 0)) {

checkCapturing(design, board, pos, 1, captured);

}

if ((_.indexOf(attackers, 2) >= 0) &&

(_.indexOf(neighbors, 1) >= 0)) {

checkCapturing(design, board, pos, 2, captured);

}

if (captured.length > 0) {

board.cover[pos] = _.uniq(captured);

}

}

}

});

}

return board.cover;

}

In the evaluation function, I had to not only use the “coverage”, but also seriously remake the algorithm for calculating it. As for heuristics, it is completely elementary in this game:

Dagaz.AI.heuristic = function(ai, design, board, move) {

return move.actions.length;

}

The more pieces the move takes (its size depends on it) - the better! I had to work hard on the evaluation function, but the result was definitely worth it:

I note that the video shows the operation of the simplest “one-way” algorithm (you can see that in the absence of “safe” moves it does not always play quite efficiently). As I said above, accounting in assessing mobility and “coverage” works wonders!

And finally, a video of another, extremely unusual, game with custodian capture:

And I’m hurrying to congratulate all on the upcoming holiday!