Basics of lossy audio coding. Testing beta version of Opus 1.3

0. About the author

Hello everyone, my name is Maxim Logvinov and I am a student at Kharkov National University of Radio Electronics.

I have always been interested in sound and music. I myself loved to writeelectronic dance music and I have always been interested, as a person who is not well versed in the high matters of mathematics, to find out what happens to sound in a computer: how it is written, compressed, what technologies exist for this, and so on. After all, from school and physics, I understood that sound is “analog”: not only does it need to be converted to digital (which requires devices such as an ADC), but it needs to be somehow preserved. And even better, that this music takes up less disk space, so that you can put more music in a stingy folder. And to sound good, without any audible compression artifacts. A musician, after all. A trained ear, not devoid of musical hearing, is quite difficult to deceive with methods that are used to compress sound with losses - at least at fairly low bitrates. You are so fastidious.

And let's see what the sound is, how it is encoded and what tools are used for this very encoding. Moreover, we will experiment with the bitrates of one of the most advanced codecs to date - Opus and evaluate what digits can be encoded with which to eat the fish, and ... Actually, just why not? Why not try to describe in simple language not only how the computer stores and encodes audio, but also test one of the best codecs today? Especially when it comes to ultra-low bitrates, where almost all existing codecs start to do incredible things with sound in an attempt to fit into a small file size. If you want to escape from the routine and find out what conclusions were obtained when testing the new codec - welcome to Cat.

1. Sound coding

Sound has a physical nature. Any sound is vibrations in space (in this case, in the air) that are caught by our ear. The oscillations are continuous in nature, which can be described by mathematical models. Of course, we will not do this, but we will pose the question: how can vibrations of a continuous nature be written in a machine that operates only with zeros and ones?

1.1. No compression, no loss

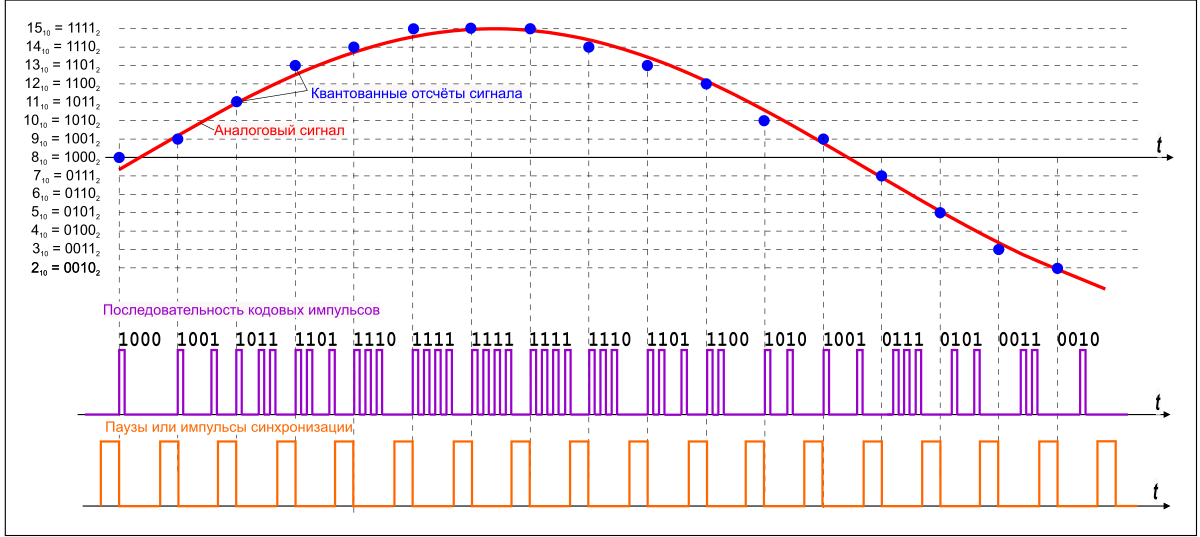

Fig. 1.1 - Graphic Description of Pulse Code Modulation

The WAV (WAVE) format saves the audio track in its true quality without any manipulation of the audio file itself.

In order to record a sound, we need to convert it into a set of zeros and ones. In the case of the WAV format, this is done in the simplest way: the incoming sound stream is divided into the smallest segments (quanta, hence the terms "quantization frequency", "sampling frequency" or " sampling frequency ") and the current value of the analog signal in binary is written in each such time interval form. WAV files can be recorded with a sampling frequency, for example, from 8 kHz to 192 kHz, but, de facto, a sampling frequency of 44.1 kHz is considered the standard.

It should be noted that WAV, as a container, supports other ways of storing audio information: for example, ADPCM, which is able, depending on the bandwidth, to encode audio data with a variable sampling rate.

The frequency of 44.1 kHz was not accidental. If we allow inaccuracies in the description, this figure occurred as a statement of Kotelnikov ’s theorem : to maintain the most correct waveform at frequencies up to 20 kHz (theoretical limit of audibility of the human ear), a sampling frequency twice as high — 40 kHz — is needed. Actually, the frequency at 44.1 kHz is due to technical aspects, the details of which can be found here .

In each such segment, the actual voltage of the analog signal is encoded in binary form: the highest level can be represented in the form of "1111", the lowest - in the form of "0000". And here the second parameter comes into play - the depth of sound, which determines how accurately the value of the wave will be digitized in a period of time. Often, WAV files are written in 16-bit or 32-bit resolution. Higher bit depth - more precisely, recording.

Speaking of PCM. What is recording on an ordinary CD, which were so popular after audio cassettes? Namely - a stream of uncompressed zeros and ones in PCM format. The resolution is 16 bits, the sampling frequency is 44.1 kHz. What bitrate will such a record have then?

- 44100 times a second, a 16-bit number is written. 44100 * 16 = 705600 bps for one channel;

- for stereo recording, this value is multiplied by 2 - 1411200 bps or ours ~ 1411 kbit / s;

- for 32-bit recording, this value will be twice as high - ~ 2822 kbit / s.

Conclusion: hence the gluttony of these files to free space on the hard disk, but as a gain - the complete absence of losses when recording and listening to an audio file.

1.2. Lossless compression

I won’t write much about lossless compression. This term can be found here . In fact, this method is, roughly speaking, archiving audio recordings with the algorithms embedded in the codec, but the data is not lost and the ability to restore the audio record with bit-to-bit accuracy is retained. When decoding such formats, we get, in fact, the same WAVE format, only it takes up less disk space; compression is approximately twofold and depends on the nature of the encoded composition. When listening to a recording, the codec “unzips” the composition and sends a stream of uncompressed zeros and ones to the processing of the sound card.

There are many such codecs: this is FLAC (Free Lossless Audio Codec), developed by Xiph(she also developed Opus), ALAC (Apple Lossless) from the company of the same name, APE (Monkey's audio), WV (WavPack) and other, less well-known lossless audio compression formats.

1.3. Lossy compression - tricking your ears

Scientists began to think about the fact that, in principle, it often does not make sense to keep complete information about the audio recording, since our ear is imperfect. It may not hear quiet sounds after loud, it may not hear frequencies that are too high or too low, and so on. These phenomena are called the masking effect.

As a result, we realized: you can throw it away here , cut it there , and the listener will notice almost nothing - an imperfect ear will simply enable the listener to deceive himself. Therefore, it becomes possible to get rid of psychoacoustic redundancy in the file.

Actually, psychoacousticsexists as a discipline and studies the psychological and physiological characteristics of the perception of sounds by a person. Actually, these psychoacoustic models were laid in the foundation of work of compression programs with losses and one of the first such formats was MPEG 1 Layer III or just MP3. Here I’ll make a reservation that marketing, operating with facts almost without exaggeration, did its job: an audio file takes up ten times less space (with the caveat: this is when encoding with a bitrate of 128 kbps, which allows you to get “quality acceptable for a typical listener ”- recall 1411 kbit / s for WAVE), and, therefore, already more than an audio record will fit on a CD or on a hard disk. Furor! The popularity of the format has blown up the digital audio industry. During periods of not the fastest connections to the Internet, transferring such files became very convenient. It’s convenient to transfer, convenient to store, conveniently throw a pack of songs onto your player. A lot of hardware MP3 decoders were created, in connection with which the file was played almost on the

At the time of this writing, patent restrictions have expired and license fees have been discontinued.

As for lossy compression, and how is it possible to compress an audio file by an order of magnitude without significant loss to the quality of the listener? In short, the following sequence differs little from one codec to another. The description of this process is simplified to disgrace, but its course is approximately as follows:

- the incoming stream of uncompressed data is divided into equal segments - into frames (frames);

- in order to create a continuous section of the spectrum, for analysis, in addition to the already selected frame, the previous and next frame are taken;

- compression No. 1: the section passes through MDCT (modified discrete cosine transform). Roughly speaking, this transformation carries out a spectral analysis of the sound signal - it makes it possible to obtain information about how high the sound energy is in each segment of the spectrum.

In the case of Layer III, the second MDCT conversion is performed, which increases the efficiency of encoding high frequencies at lower bitrates - this turned out to be a silver bullet for the explosion of popularity of this codec.Thanks to user interrupt for correcting: MPEG 1 Layer III uses a hybrid approach for converting audio data: first, the spectrum of the encoded audio file is divided into many spectral bands, as happens in SBC (Sub-band coding, English ); each of these bands is converted by the MDCT to a frequency form, which, in fact, already gives specific information about which frequencies and with what energy are present in the frame. - The result of the MDCT analysis is transmitted to the psychoacoustic model, which is something like a “virtual ear”. At this stage, answers are given to the questions of what to leave and what can be thrown out of the audio signal without significant damage to perception.

- compression # 2: a frame that went through such a conversion can be compressed using Huffman codes; in fact, roughly speaking again, each frame is additionally archived, getting rid of redundancy. This looks like something like packing long chains of zeros and ones into a shorter format;

- frames are glued together; in each such frame the necessary information for the codec is added:

- frame number / size;

- format version (MPEG1 / 2 / 2.5);

- layer version (Layer I / II / III);

- sampling frequency;

- stereo base mode (mono, stereo, stereo combined).

It should be noted the fact that the MP3 format is not without significant flaws, while the format itself does not allow to properly expand its capabilities. Take, for example, a phenomenon like pre-echo . The phenomenon of this compression artifact is briefly and fairly well described here., but its essence lies in the distortion of sharply increasing relatively quiet sounds, for example, the Hi Hat instrument. When encoding such a tool in silence, due to the features of the MDCT, an abrupt transition will be created with many vibrations. In the original recording, these fluctuations do not exist, but if they are present in the resulting record, they are quite distinctly caught by the ear. Modern codecs such as AAC and OGG are also not without this drawback, but they try to deal with them using more accurate and advanced algorithms. But MusePack (about it below), for example, is devoid of this drawback, since itdoes not use the second MDCT conversion to increase efficiencyuses SBC coupled with a very high-quality psychoacoustic model, which explains its high-quality coding only starting with bitrates of 160 kbps. Rarely, but aptly: with such a bitrate, the codec encodes audio better than MP3 with the same bitrate.

It should be noted that the developers of the free Lame codec still try to improve their brainchild by improving psychoacoustic models and variable bitrate coding algorithms; The release of version 3.100 was introduced in December 2017.

Detailed information on how MP3 is arranged internally in terms of frame format can be found here .

But an amazing article (or rather, its translation) about how MP3 audio codec compression works can be read here . Recommend!

1.4. Lossy Coding Enhanced: AAC Format Brief

At the time of writing, the MP3 codec is over 23 years old. In order not to repeat with the article ( its newer version ), where OGG Vorbis codecs are already described (and again, greetings from the Xiph organizations - this is also its development), MPC (Musepack), WMA (Windows Media Audio) and AAC, I will describe here in brief AAC from the point of view of technologies that were until recently advanced in the field of lossy coding.

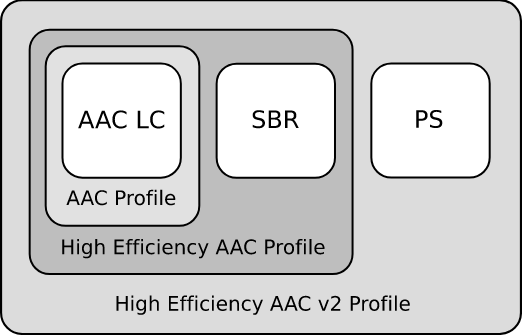

In my humble opinion, AAC (Advanced Audio Codec) is one of the most advanced formats in the field of data encoding. I will describe the main features of this format, starting with popular profiles that can be represented by a nested doll (see figure below):

Fig. 1.2 - AAC Profile Hierarchy, source - Wikipedia

- Low Complexity Advanced Audio Coding (LC-AAC)

Low decoding complexity is great for implementing a hardware codec; hardware requirements for CPU and RAM are also low, which has earned great popularity for this profile. Enoughly encodes a signal with 96 kbit / s.

- High-Efficiency Advanced Audio Coding (HE-AAC).

The HE-AAC profile is an extension of LC-AAC and is complemented by the patented SBR technology (Spectral Band Replication, coarse. - "spectral repetition", article in English ). It is the technology of spectral repetition that allows you to "save" high frequencies when encoding with low bitrates.

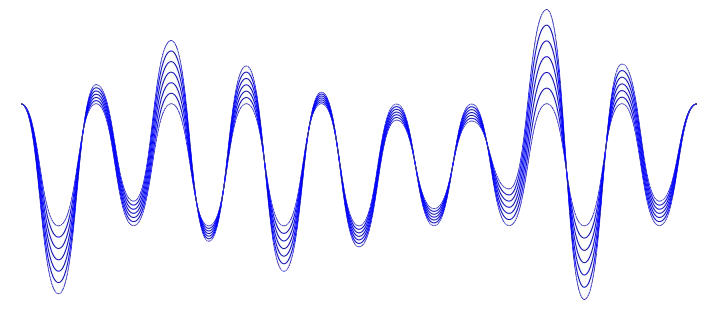

Fig. 1.3 - Graphic representation of the principle of restoration of high frequencies

Why “save” is in quotation marks? Because the king is not real: the codec makes room for additional information that the codec synthesizer uses to restore high frequencies, but since these frequencies are synthesized, that is, recreated by the codec, they are, in fact, an approximate copy of the high frequencies that existed in the source file . In practice, a signal encoded with a bit rate of 48 kbit / s will sound, for example, similar to the mp3 @ 98 kbit / s format, if supported by the decoder; otherwise, such a file will be played simply without restoring high frequencies and its bitrate will correspond to its quality similar to mp3.

- High-Efficiency Advanced Audio Coding Version 2 (HE-AACv2)

This profile is relatively young (described in 2006), it was created for more efficient audio coding in low bandwidth conditions.

The second version of the profile is an extension, in fact, of the first profile, the changes are in the addition of PS technology (Parametric Stereo). The principle is somewhat similar to SBR technology: the codec also takes up space for information to restore the stereo base, sacrificing accuracy.

Fig. 1.4 - Graphical image encoding parametric stereo

The operating conditions for this profile are the same as for the HE-AAC described above; the lack of profile support by the decoder will result in the recording being mono.

- AAC-LD (Advanced Audio Coding - Low Delay)

The AAC-LD profile has advanced coding algorithms to reduce delays (up to 20 ms.);

- AAC-ELD (Advanced Audio Coding - Enhanced Low Delay)

This profile, which inherits all the features of HE-AACv2 (uses analogs of SBR and PS technologies, but designed for low latencies);

- AAC Main Profile

This profile was introduced as MPEG-2 AAC or HC-AAC (High Complexity Advanced Audio Coding). Not compatible with LC-AAC;

- AAC-LTP (Advanced Audio Coding - Long Term Prediction)

This profile is more complex and resource-intensive (but also better) than all the others. Also not compatible with LC-AAC.

That is all I wanted to write about this codec. I made the main emphasis on technologies that are used in various AAC profiles (which, by the way, generate many abbreviations: AAC, LC-AAC, eAAC +, aacPlus, HE-AAC, etc.), as I will compare them with those in Opus, but the codec does its job: it is widely used in Internet radio, as well as in digital radio broadcasting technologies: DRM (Digital Radio Mondiale) and DAB (Digital Audio Broadcasting) (you can familiarize yourself with these technologies here ), YouTube, how audio track to many clips in mp4, mkv containers, etc.

2. Introduction to Opus: format description

Fig. 2.1 - Opus logo

On December 21, 2017, Xiph introduced the beta version of the Opus audio codec version 1.3. I will not go into high matters when describing this codec, since such information is freely available (for example, here , here , and for those who know English - here and here .)). Release notes for this beta can be found here . Here I note that this codec is a great candidate to replace the rest of the codecs. He has many advantages:

- bit rate from 6 to 510 Kbit / s;

- sampling rate from 8 to 48KHz;

- support of constant (CBR) and variable (VBR) bitrates;

- support for narrowband and broadband sound;

- voice and music support;

- stereo and mono support;

- support for variable bit rate (VBR);

- the ability to restore the sound stream in case of frame loss (PLC);

- support for up to 255 channels;

- Availability of implementations using floating-point and fixed-point arithmetic.

The codec is distributed under the BSD license and is completely free from all patent prosecutions, and is also approved as an Internet standard. Opus can be used in any of its projects, including commercial ones, without the need to open source. At the moment, the codec is used in the Telegram messenger to implement VoIP , in the WebRTC project , for encoding the audio track in YouTube videos, etc. The prospects of this codec should not be underestimated.

A reasonable question may arise: what is so special about the theses described above? All this is in almost any more or less modern codec. Answers will follow later in the article.

One of the key features of this codec is its extremely low encoding delays: from 2.5 ms. (!) Up to 60 ms, which is simply necessary, like air, to applications that allow users to communicate by voice on the Internet. Such low latencies also allow you to build interactive applications, such as a digital sound studio for co-writing music or something like that. It is worth noting that in this parameter the codec competes with the relatively new AAC-ELD profile described above; however, the minimum delay of coding algorithms is approx. 20 ms., Which is almost a problem for the free, open and free codec Opus.

I will not consider all the subtleties associated with encoding audio with this codec, but below I will describe the modes that change the codec depending on the change in bit rate.

2.1. Opus: Coding Modes

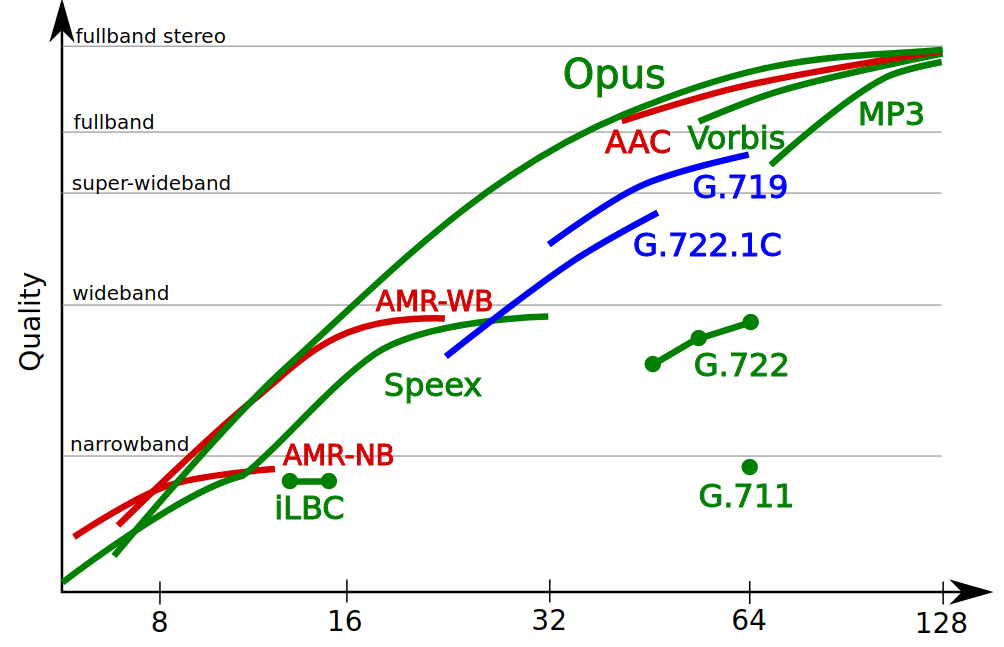

Fig. 2.2 - Comparison of the quality of coding with different codecs - the official schedule from the opus-codec.org website

After the release of this beta version, I became interested in how coding modes change depending on the bitrate, so I decided to conduct experiments starting from the lowest bitrates, but only with them. To experiment with higher bitrates, I do not see the point - this is perfectly described in the articles (for example, here ).

Now let's list the modes that the codec operates with:

- Signal coding mode - LP, hybrid, MDCT:

- LPC or LP (linear prediction coding, article in English ) is used in voice compression codecs and allows you to encode voice with sufficient quality for perception, using very low bitrates. It is used in GSM , AMR , SILK codecs (also used in Skype), Speex (and Hi Xiph again; however, she declared the codec as “deprecated” and recommends using Opus). The Opus codec uses a modified SILK codec for encoding voice at low bitrates, which is not backward compatible with Skype;

- MDCT (modified discrete cosine transform) is a type of Fourier transform (you can read about it here ), it is used in almost all codecs for lossy compression of music: MP3, AAC, OGG Vorbis, etc. The Opus codec uses the CELT codec ( article in English );

- Hybrid mode (hybrid coding mode) is a development of the Xiph organization and consists in the fact that LP is used for coding the lower part of the signal spectrum (up to 8 kHz), and MDCT for the upper part (from 8 kHz and higher), and we get a compromise quality at the output sound while maintaining a fairly low bitrate.

- Stereo base:

- mono coding;

- stereo coding;

- Joint Stereo mode encoding .

- Based on the above, a change in the width of the spectrum depending on the bitrate:

- Narrowband (narrowband coding) - coding of a signal with a spectrum width of up to 6 kHz, corresponds to the quality of coding by GSM and AMR-NB codecs;

- Wideband (wideband coding) - coding of a signal with a bandwidth from 6 kHz to 14 kHz, corresponds to the quality of coding by the AMR-WB codec (or the so-called " HD Voice " operators ), which is now used in third-generation ( 3G ) networks ;

- Fullband (full band coding) - preservation of the entire band heard by the human ear (from 20 Hz to 20 kHz), mono signal;

- Fullband stereo - see above, but stereo signal.

2.2. Low bitrates - high frequencies. How are such results achieved?

At the beginning of this article, I was not in vain looking at the AAC codec and its sophisticated profiles, which, in fact, build a stereo base and restore high frequencies, as they say, "out of thin air". I exaggerate, of course, but the expression is not far from the truth. But the trouble is: the codec is patented and is a proprietary development of the alliance from Bell Labs , the Fraunhofer Institute for Integrated Circuits (which, incidentally, is the key creator of the MP3 format), Dolby Laboratoriesetc. Therefore, the use of these technologies will require royalties, which is unacceptable for a fully open and free codec. Therefore, the Opus developers took a different path: they implemented their own algorithms for reproducing high frequencies - Band Folding (Spectral Folding, Hybrid Folding). On this approach, the coding of high frequencies can be found, respectively, is (there's even an interactive image is), here (see para. 4.4.1) and here. The codec does not synthesize high frequencies from additional data, as HE-AACv2 does, it takes as a basis the signal itself, based on the energy in the high frequency region encoded in the original signal. Blind testing by enthusiasts shows that this method is very effective, not to mention the fact that such a method of reproducing high frequencies, according to the developers, is simpler to implement than SBR or its analogue and we implement it with less algorithmic delays.

By the way: the results of blind testing can be viewed on the graphs at the following link .

Let us experience this last word in the field of lossy sound compression.

2.3. Opus: coding and testing tools

This test was conducted on a short excerpt from an audio book by Vadim Zeland - Reality Transerfing. The book was voiced by Russian actor and radio host Mikhail Chernyak , who has a pleasant voice timbre.

How was the passage received?

- The “youtube-dl” utility downloaded a fragment of a WebM format file - a container in which there is only an audio track:

youtube-dl "https://www.youtube.com/watch?v=_-OUXW3a0Yw" -f 250 - The fragment was transcoded into the WAV format to feed its opusenc codec (the source file was renamed for convenience):

ffmpeg -i tr1_original.webm -acodec pcm_s16le tr1.wav - In order not to bother with encoding a lot of files with different bitrates, I used a simple one-line program in the Bash language and got a lot of files I needed:

for i in `seq 8 21`; do opusenc --bitrate $i tr1.wav tr-enc-${i}.opus ; done - all of these files were imported into Audacity and into the Qmmp player in order to evaluate the sound quality by ear and visually evaluate the corresponding track.

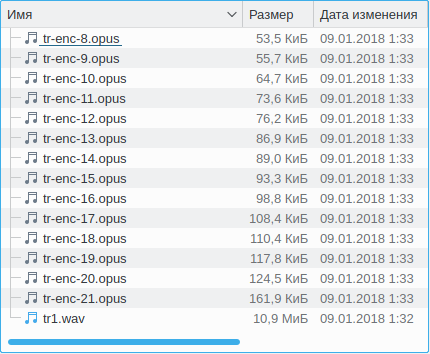

Fig. 2.3 - The result of the script execution is a screenshot of the Dolphin program.

Next will be a description of almost each of them - with the application of screenshots and a subjective description of the sound, followed by small conclusions.

3. Evaluation of the sound of encoded tracks

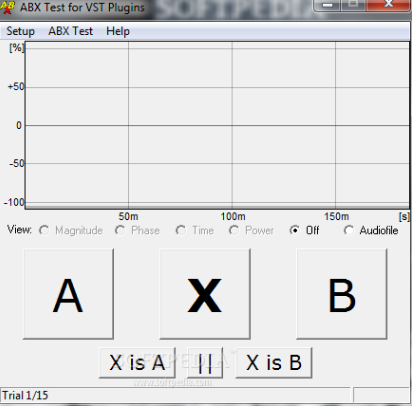

In a good way, the sound assessment should be objective and carried out, for example, using the blind ABX testing method ( article in English ). Testing is carried out in order to exclude the effect of a dummy (placebo) .

In short, the essence of the method is listening with the help of auxiliary software (Foobar or a similar web application, see the example in Fig. 3.1) of two samples: reference and compressed - buttons A and B, respectively. The listener does not know in advance which one is compressed and which one is the reference one.

Fig. 3.1 - An example of the appearance of one of the programs of blind ABX testing

Then the listener listens to the audio recording substituted by the program under the X button and tries to determine which of the two samples (A or B) refers to the sample on the X button. After selecting the answer, the listener repeats the cycle a certain number of times, after which the program displays the result, where, with how likely the listener pressed the buttons randomly.

It is worth mentioning that there are people who do not perceive the effects of psychoacoustic compression and, in fact, cannot distinguish, for example, mp3 @ 128 kbps from FLAC - both files sound “wonderful” for them. There are many such people, and for them 128 kbps is a completely transparent sound, since they do not think about what artifacts are there and how they sound. Is there any music? Are the instruments heard? Excellent. This is another reason for the high popularity of the MP3 format.

I basically did not conduct blind ABX testing, but I wanted to describe the subjective perception of sound with screenshots of the spectrogram of each sample in the hope that the readers of this article would be interested.

Go.

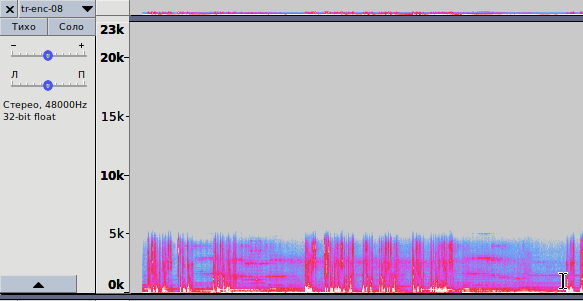

Fig. 3.2 - Spectrogram recording, encoded with a bitrate of 8 kbps

opus-enc-8.opus

1. In Fig. 3.2 shows a spectrogram for coding with practically the lowest bit rate. The audio file is encoded using the LP method, the codec assigns a frequency spectrum of 6 kHz; everything above is cut off. As a result, the file size is extremely small, the sound quality matches that of the AMR-NB (Narrowband) codec. Classics of the genre in second generation cellular networks (GSM). The behavior of the Opus codec corresponds to the above in the range of bitrates from 6 to 9 kbit / s.

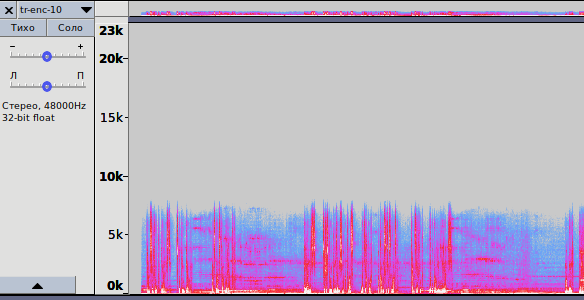

Fig. 3.3 - Spectrogram recording, encoded with a bitrate of 10 kbps

opus-enc-10.opus

2. In Fig. 3.3 shows a spectrogram for encoding with a bit rate of 10 kbit / s. The same situation: LP encoding, but the frequency spectrum is already wider - up to 8 kHz. The sound is the average between AMR-NB and AMR-WB.

Fig. 3.4 - Spectrogram of records encoded with bit rates of 12 and 13 kbps

opus-enc-12.opus

opus-enc-13.opus

3. In Fig. 3.4 two spectrograms are shown: for bitrates 12 and 13 kbit / s, respectively. Here the situation is more interesting: at 12 kbps the same LP is used, but the width of the spectrum is expanded even more: up to 10 kHz and the sound is almost identical to that in AMR-WB.

Starting with a bit rate of 13 kbps, the codec switches to hybrid mode and starts using three methods at once: LP, MDCT and Band Folding. Everything that lies in the range from 0 to 8 kHz is encoded by LP, from 8 to 10 kHz - MDCT; it is this segment of the spectrum at 2 kHz that is used as the initial information for using Band Folding - from here we get the actually restored high frequencies, up to 20 kHz.

Clearly visible "strokes" along the recording, starting at 10 kHz; the codec’s attempt to preserve maximum information about high frequencies is visible. Interestingly, already at 13 kbps. the codec in hybrid mode, using Hybrid Folding, tries to work in Fullband mode, restoring the spectrum up to 20 kHz.

What about the sound? The sound of the voice is simply Fantastic - with a capital F. Even HE-AAC with its SBR is not capable of such a result - even not nearly capable. High frequencies, in the area of which there are hissing, whistling, clicking (W, U, C, C) sounds are reproduced amazingly, it is pleasant to listen to human speech. Do not forget about the “13 kbit / s” figure, and after all, GSM codecs (AMR-NB) used to work with such a bitrate, where you really can’t make out where “Sh” and where “C” ...

Nevertheless, do not forget that such a mode is still poorly suited for music coding: due to LP coding of the lower part of the spectrum, there are significant distortions in the low-frequency region, especially in the transition region where there is no voice, but the “atmospheric rusty” FX effect corresponding to this region is heard squeak. "

Fig. 3.5 - Spectrogram of records encoded with bit rates of 14 and 15 kbps

opus-enc-14.opus

opus-enc-15.opus

Fig. 3.6 - Pay attention to the selected area of the FX effect

4. Figure 3.5 shows how the encoding method of the signal changes from moving to a higher bitrate - from 14 to 15 kbit / s. While the recording spectrogram for the 14 kbit / s bit rate is similar to the one described above with 13 kbit / s, then starting with the 15 kbit / s bitrate, the use of hybrid mode stops and the codec relies completely on MDCT and Band Folding.

Why did I decide so? Because when listening to a recording in the field of FX effects, all the distortions that are inherent in LP disappear. Yes, and if you look closely at the spectrogram of both records (see also Fig. 3.6), you can see that the accuracy of the reproduction of the spectrum is increased. However, the characteristic “smear” dividing the spectrum in half, in the region of 10 kHz, can be seen in both cases.

This is just the case when the quality here is comparable to that of mp3 @ 80 kbps, if not higher. Again, I do not have the right to make my judgments the ultimate truth, since I did not conduct blind ABX testing.

5. Starting at a bit rate of 18 kbps ( opus-enc-18.opus), the latter becomes enough to switch to Fullband Stereo mode, which means that at this bitrate you can get “acceptable” recording quality in conditions of very low network bandwidth. No, this is not a fiasco bro, this is a victory!

Further, everything is quite simple: proportionally, with an increase in bitrate, the codec uses Band Folding less and less, since, in fact, the bitrate becomes sufficient to encode higher frequencies without the need for their artificial restoration. The higher the bitrate, the wider the range will be encoded without using Band Folding.

4. Instead of a conclusion

As for me, the “threshold of transparency” (or “Transparency”, as native English speakers call it), which is expressed in my inability to distinguish the original from the compressed analogue, is approx. 170 kbps For mp3, this parameter is in the range of 224-256 kbit / s, depending on the nature of the music.

What is there to say. Technology is developing rapidly. And not only audio data compression technologies - all technologies, I’m not afraid to generalize. It is especially pleasant that such high-quality technologies, which allow deceiving the human ear so qualitatively and allowing being so universal, also develop and remain free and open. Thanks to the developers and those amazing people who create and drive progress. And also thanks to everyone for their attention and to everyone who mastered the article to the end.

PS: The article will be amended if significant inaccuracies are detected; syntax and semantic errors will be corrected.

PPS: Link to my own article written using the telegra.ph service . It has not been published anywhere, it is my work of authorship (the author can be verified) and is an older version of the current article.