Collecting and filtering logon events using Log Parser

Hello dear community!

IT infrastructure is always dynamic: thousands of changes occur every minute, and many of them must be recorded. System auditing is an integral part of an organization's information security, and change control helps prevent serious incidents later on.

In this article I want to share my experience of tracking user logon and logoff events on an organization's servers, describe the details that came up while analyzing the audit logs, and give a step-by-step solution to the problem.

The goals we pursue are:

- Monitoring daily user connections to the organization's servers, including terminal servers.

- Recording events from both domain and local users.

- Monitoring user activity (arrival / departure).

- Monitoring connections by IT department staff to the information security servers.

First you need to enable auditing so that Windows logon events are recorded. Our domain controller runs Windows Server 2008 R2. Once auditing is enabled, the events defined by the audit policy must be retrieved, filtered, and analyzed. In addition, the results are supposed to be sent to a third-party system for analysis, for example a DLP system. As an alternative, we will provide the ability to generate a report in Excel.

Log analysis is a routine task for a system administrator. In this case, however, the volume of events recorded in the domain is large enough to make it difficult in itself. Auditing is enabled through Group Policy.

Logon events are generated by:

1. domain controllers, in the process of checking domain accounts;

2. local computers when working with local accounts.

If both policy categories (account logon and logon) are enabled, a logon with a domain account generates logon or logoff events on the workstation or server and account logon events on the domain controller. Therefore, auditing on domain machines is configured through the GPO snap-in on the controller, and local auditing through the local security policy in the MMC snap-in.

Audit setup and infrastructure preparation:

Let us consider this step in detail. To reduce the amount of information, we look only at successful logon and logoff events. It also makes sense to increase the size of the Windows security log. The default is 128 megabytes.

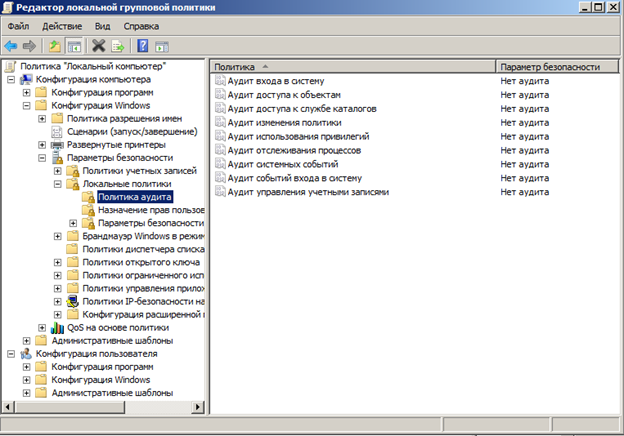

To configure local policy:

Open the policy editor: click Start, type gpedit.msc in the search bar, and press Enter.

Open the following path: Local Computer Policy → Computer Configuration → Windows Settings → Security Settings → Local Policies → Audit Policy.

Double-click the policy settings Audit logon events and Audit account logon events. In the properties window, select the Success checkbox so that successful logons are written to the log. I do not recommend selecting the Failure checkbox, to avoid overflowing the log. To apply the policy, run gpupdate /force in the console.

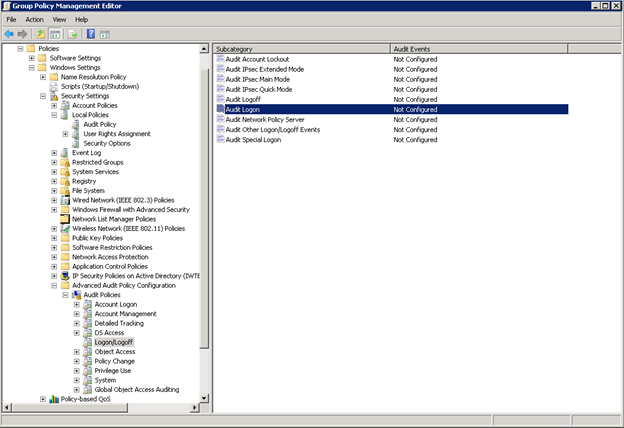

To configure Group Policy:

Create a new GPO (Group Policy Object) named "Audit AD". Open it for editing and expand the branch Computer Configuration → Policies → Windows Settings → Security Settings → Advanced Audit Policy Configuration. This branch contains the advanced audit policies that can be enabled in the Windows family of operating systems to monitor various events. In Windows 7 and Windows Server 2008 R2, the number of events that can be audited has been increased to 53. These 53 audit policies (the so-called granular audit policies) are located in the Security Settings\Advanced Audit Policy Configuration branch and are grouped into ten categories.

Enable:

- Account Logon - audit of credential validation, the Kerberos authentication service, Kerberos ticket operations, and other account logon events;

- Logon / Logoff - audit of interactive and network attempts to log on to computers and domain servers, as well as account lockouts.

After making the changes, run gpupdate /force.

Let us define right away the types of events that our future script will analyze:

- Remote access - remote login through an RDP session.

- Interactive - local user login from the console.

- Computer unlocked - unlock a locked station.

- Logoff - logout.
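The first three items correspond to values of the numeric logon type field of a logon event. Below is a minimal sketch of that mapping in PowerShell. The names `Get-LogonTypeName` and `Test-ReportedLogonType` are hypothetical and do not appear in the script from this article, which performs the same mapping inside the Log Parser query.

```powershell
# Logon type codes mapped to readable labels.
$LogonTypeNames = @{
    '2'  = 'interactive'
    '3'  = 'network'
    '4'  = 'batch'
    '5'  = 'service'
    '7'  = 'unlocked workstation'
    '8'  = 'network logon using a cleartext password'
    '9'  = 'impersonated logons'
    '10' = 'remote access'
}

# Hypothetical helper: returns the label, or the raw code when it is unknown.
function Get-LogonTypeName {
    param([string]$Code)
    if ($LogonTypeNames.ContainsKey($Code)) { return $LogonTypeNames[$Code] }
    return $Code
}

# Hypothetical helper: only interactive, unlock, and RDP logons go into the report.
function Test-ReportedLogonType {
    param([string]$Code)
    return @('2', '7', '10') -contains $Code
}

Get-LogonTypeName -Code '10'       # remote access
Test-ReportedLogonType -Code '3'   # False
```

Unknown codes are passed through unchanged, so an unexpected logon type still shows up in the output instead of being silently dropped.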

In Windows Server 2008, a successful logon has Event ID 4624, and a user-initiated logoff has Event ID 4647. We need a tool that can analyze a large number of records. It would seem that everything can be done with standard tools. However, PowerShell queries of the form

    Get-EventLog Security | Where-Object { $_.EventID -eq 4624 -and $_.TimeGenerated.TimeOfDay -gt '08:00:00' }

perform well only on a test bench with a domain controller and two users. In the production environment, collecting the logs took 20 minutes per server. The search for a solution led to the Log Parser utility, which has been reviewed on Habr before.

The data processing speed increased significantly: a full run with report generation for one PC dropped to 10 seconds. The utility takes many command-line options, so we will call it through cmd from PowerShell to avoid escaping a pile of special characters. Queries can be written in a GUI, Log Parser Lizard. It is not free, but the 65-day trial period is enough. Below is the query itself. Besides the logon types we are interested in, it also labels the other logon types in case they are needed later.

    SELECT
        eventid,
        timegenerated,
        extract_token(Strings, 5, '|') AS LogonName,
        extract_token(Strings, 18, '|') AS LogonIP,
        CASE extract_token(Strings, 8, '|')
            WHEN '2' THEN 'interactive'
            WHEN '3' THEN 'network'
            WHEN '4' THEN 'batch'
            WHEN '5' THEN 'service'
            WHEN '7' THEN 'unlocked workstation'
            WHEN '8' THEN 'network logon using a cleartext password'
            WHEN '9' THEN 'impersonated logons'
            WHEN '10' THEN 'remote access'
            ELSE extract_token(Strings, 8, '|')
        END AS LogonType,
        CASE extract_token(Strings, 1, '|')
            WHEN 'SERVER$' THEN 'logon'
            ELSE extract_token(Strings, 1, '|')
        END AS Type
    INTO \\127.0.0.1\c$\AUDIT\new\report(127.0.0.1).csv
    FROM \\127.0.0.1\Security
    WHERE
        (
            (EventID IN (4624) AND extract_token(Strings, 8, '|') LIKE '10') OR
            (EventID IN (4624) AND extract_token(Strings, 8, '|') LIKE '2') OR
            (EventID IN (4624) AND extract_token(Strings, 8, '|') LIKE '7') OR
            EventID IN (4647)
        ) AND
        TO_DATE(TimeGenerated) = TO_LOCALTIME(SYSTEM_DATE())
    ORDER BY Timegenerated DESC

The following is a general description of the logic:

- Manually create a list of hosts with a locally configured audit, or specify the domain controller from which we collect the logs.

- The script loops over the list of host names, connects to each host, starts the Remote Registry service and, using Log Parser, runs the SQL query from audit.sql to collect the security log. At each iteration the query is rewritten for the new host with a simple regular expression. The resulting data is saved in CSV files.

- From the CSV files, the script generates an Excel report (for readability and easier searching) and the body of the e-mail in HTML format.

- An e-mail message is created separately for each report file and sent to the third system.
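The per-host rewrite of the query mentioned in the list above can be sketched in isolation as follows. This is a minimal sketch under stated assumptions, not the exact code of the full script: the function name `Set-QueryHost` is hypothetical, the two-line template stands in for audit.sql, and the pattern for the report name is deliberately narrower than the one used in the listing.

```powershell
# Hypothetical helper: points the FROM clause and the report file name
# of the query template at the given host.
function Set-QueryHost {
    param(
        [string[]]$QueryLines,
        [string]$Server
    )
    # Double any '$' so it is not read as a group reference in the replacement string.
    $safe = $Server.Replace('$', '$$')
    $QueryLines |
        ForEach-Object { $_ -replace 'FROM\s+\\\\.+', "FROM \\$safe\Security" } |
        ForEach-Object { $_ -replace 'report\(.+\)\.csv', "report($safe).csv" }
}

# Two lines standing in for the contents of audit.sql.
$template = @(
    'INTO \\127.0.0.1\c$\AUDIT\new\report(127.0.0.1).csv',
    'FROM \\127.0.0.1\Security'
)

# SRV01 is an example host name.
Set-QueryHost -QueryLines $template -Server 'SRV01'
```

For the example host, the first line keeps its `INTO` path and ends with `report(SRV01).csv`, and the second line becomes `FROM \\SRV01\Security`. Keeping the template in memory and rewriting it for every host means that audit.sql on disk always reflects the most recently processed host.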

Preparing the environment for a test run of the script:

For the script to work correctly, the following conditions must be met:

Create a directory on the server or workstation from which the script will be run. We place the script files in the C:\audit\ directory. The list of hosts and the script are in the same directory.

Install the additional software on the server: MS Log Parser 2.2 and Windows PowerShell 3.0 (part of Windows Management Framework). You can check the PowerShell version by typing $Host.Version in the PS console.

Fill in list.txt in the C:\audit\ directory with the names of the servers and workstations to be audited. Configure the audit policy on them and make sure that it works.

Check if the remote registry service is running (the script makes an attempt to start and transfer the service to automatic mode if there are appropriate rights). On 2008/2012 servers, this service is started by default.

Check for administrator rights to connect to the system and collect logs.

Check that unsigned PowerShell scripts can be run on the server (sign the script, or bypass or disable the restriction policy).

Pay attention to the execution policy for unsigned scripts on the server: you can get around the ban by signing the script, or bypass the policy at launch. For example:

    powershell.exe -executionpolicy bypass -file C:\audit\new\run_v5.ps1

The full script listing:

    $date = Get-Date -UFormat %Y-%m-%d
    Set-Location 'C:\Program Files (x86)\Log Parser 2.2\'
    $datadir = "C:\AUDIT\new\"
    # Remove the reports left over from the previous run
    Get-ChildItem -Path $datadir -Filter report* | Remove-Item -Force
    $datafile = $datadir + "audit.sql"
    $list = Get-Content ($datadir + "list.txt")
    $data = Get-Content $datafile
    $command = 'LogParser.exe -i:EVT -o:CSV file:\\127.0.0.1\c$\audit\new\audit.sql'
    $MLdir = [System.IO.Path]::GetDirectoryName($datadir)

    # Sends one report; the $email* and $SmtpServer variables are set by the caller
    function send_email {
        $mailmessage = New-Object System.Net.Mail.MailMessage
        $mailmessage.From = $emailfrom
        # Add the recipients one at a time
        foreach ($address in $emailto) { $mailmessage.To.Add($address) }
        $mailmessage.Subject = $emailsubject
        $mailmessage.Body = $emailbody
        $mailmessage.IsBodyHtml = $true
        $attachment = New-Object System.Net.Mail.Attachment($emailattachment, 'text/plain')
        $mailmessage.Attachments.Add($attachment)
        $SMTPClient = New-Object Net.Mail.SmtpClient($SmtpServer, 25)
        #$SMTPClient.EnableSsl = $true
        #$SMTPClient.Credentials = New-Object System.Net.NetworkCredential("$SMTPAuthUsername", "$SMTPAuthPassword")
        $SMTPClient.Send($mailmessage)
    }

    foreach ($SERVER in $list) {
        # Switch the Remote Registry service to automatic start and start it
        Get-Service -Name RemoteRegistry -ComputerName $SERVER | Set-Service -StartupType Automatic
        Get-Service -Name RemoteRegistry -ComputerName $SERVER | Start-Service
        # Point the query at the current host
        $pattern = "FROM \\$SERVER\Security"
        $pattern2 = "report($SERVER).csv"
        $data -replace "FROM\s+\\\\.+", $pattern -replace "report.+", $pattern2 | Set-Content $datafile
        <# #IF WE USE IP LIST
        $data -replace "FROM\s+\\\\([0-9]{1,3}[\.]){3}[0-9]{1,3}", "$pattern" -replace "report.+", "$pattern2"|set-content $datafile
        #>
        cmd /c $command
    }

    Set-Location $datadir
    foreach ($file in Get-ChildItem $datadir -Filter report*) {
        # Create the Excel document
        $excel = New-Object -ComObject Excel.Application
        $excel.Visible = $false
        $workbook = $excel.Workbooks.Add()
        # Keep a single sheet (the default sheet count depends on the Excel version and settings)
        while ($workbook.Worksheets.Count -gt 1) {
            $workbook.Worksheets.Item($workbook.Worksheets.Count).Delete()
        }
        $workbook.Worksheets.Item(1).Name = "Audit"
        $sheet = $workbook.Worksheets.Item("Audit")
        $x = 2
        $colorIndex = "microsoft.office.interop.excel.xlColorIndex" -as [type]
        $borderWeight = "microsoft.office.interop.excel.xlBorderWeight" -as [type]
        # Format all six header cells
        for ($b = 1; $b -le 6; $b++) {
            $sheet.Cells.Item(1, $b).Font.Bold = $true
            $sheet.Cells.Item(1, $b).Borders.ColorIndex = $colorIndex::xlColorIndexAutomatic
            $sheet.Cells.Item(1, $b).Borders.Weight = $borderWeight::xlMedium
        }
        $sheet.Cells.Item(1, 1) = "EventID"
        $sheet.Cells.Item(1, 2) = "TimeGenerated"
        $sheet.Cells.Item(1, 3) = "LogonName"
        $sheet.Cells.Item(1, 4) = "LogonIP"
        $sheet.Cells.Item(1, 5) = "LogonType"
        $sheet.Cells.Item(1, 6) = "Type"
        # The CSV written by Log Parser already has a header row, so -Header is not needed
        foreach ($row in Import-Csv $file.FullName) {
            $sheet.Cells.Item($x, 1) = $row.EventID
            $sheet.Cells.Item($x, 2) = $row.TimeGenerated
            $sheet.Cells.Item($x, 3) = $row.LogonName
            $sheet.Cells.Item($x, 4) = $row.LogonIP
            $sheet.Cells.Item($x, 5) = $row.LogonType
            $sheet.Cells.Item($x, 6) = $row.Type
            $x++
        }
        $range = $sheet.UsedRange
        $range.EntireColumn.AutoFit() | Out-Null
        $workbook.SaveAs($MLdir + '\' + 'Audit' + $file.BaseName.Trim("report") + $date + '.xlsx')
        if ($workbook -ne $null) {
            $sheet = $null
            $range = $null
            $workbook.Close($false)
        }
        if ($excel -ne $null) {
            $excel.Quit()
            $excel = $null
            [GC]::Collect()
            [GC]::WaitForPendingFinalizers()
        }
        # Build and send the e-mail for this report
        $emailbody = Import-Csv $file.FullName | ConvertTo-Html | Out-String
        $EmailFrom = "audit@vda.vdg.aero"
        # Every user found in the report becomes a recipient (once)
        $EmailTo = (Import-Csv $file.FullName).LogonName | Where-Object { $_ } | Select-Object -Unique | ForEach-Object { $_ + "@tst.com" }
        $EmailSubject = "LOGON"
        $SMTPServer = "10.60.34.131"
        #$SMTPAuthUsername = "username"
        #$SMTPAuthPassword = "password"
        $emailattachment = $file.FullName
        send_email
    }

To get the full Excel reports, Excel must be installed on the workstation or server where the script runs.

Add the script to the Windows Task Scheduler for daily execution. The best time is the end of the day, because the query selects the events of the current date.
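One way to register such a task is the standard schtasks.exe utility. The sketch below only builds its arguments. The function name `Get-AuditTaskArguments`, the task name, and the start time are assumptions made for illustration, and the script path is the one used in the launch example earlier.

```powershell
# Hypothetical helper: builds the schtasks.exe arguments for a daily run.
function Get-AuditTaskArguments {
    param(
        [string]$TaskName   = 'LogonAudit',               # assumed task name
        [string]$StartTime  = '23:30',                    # assumed start time, end of the day
        [string]$ScriptPath = 'C:\audit\new\run_v5.ps1'
    )
    $action = "powershell.exe -ExecutionPolicy Bypass -File $ScriptPath"
    return @('/Create', '/SC', 'DAILY', '/ST', $StartTime, '/TN', $TaskName, '/TR', $action)
}

$taskArgs = Get-AuditTaskArguments
$taskArgs -join ' '

# To register the task on Windows, run from an elevated console:
# & schtasks.exe @taskArgs
```

The task should run under an account that has administrator rights on the audited servers, since the script connects to them to collect the logs.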

These event IDs are valid starting with Windows 7 and Windows Server 2008.

Earlier versions of Windows use different event codes (the code value is lower by 4096; for example, 528 instead of 4624).
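If the query ever has to be adapted to such older systems, the offset can be applied mechanically. The sketch below is an illustration only: the function name `ConvertTo-LegacyEventId` is hypothetical, and the offset is shown for the two event IDs used in this article rather than as a rule guaranteed for every security event.

```powershell
# Hypothetical helper: converts a Windows Server 2008-era security event ID
# to its counterpart on earlier systems by subtracting 4096.
function ConvertTo-LegacyEventId {
    param([int]$EventId)
    return $EventId - 4096
}

ConvertTo-LegacyEventId -EventId 4624   # 528 (successful logon)
ConvertTo-LegacyEventId -EventId 4647   # 551 (user-initiated logoff)
```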

Notes and conclusion:

To summarize the steps performed:

- Set up local and domain audit policies on the required servers and compiled the list of servers.

- Chose a machine to run the script and installed the necessary software (PS 3.0, Log Parser, Excel).

- Wrote a query for Log Parser.

- Wrote a script that runs this query in a loop over the list.

- Wrote the rest of the script, which processes the results.

- Set up the scheduler for a daily run.

Previously generated reports stay in the directory until the next script run. The users who logged on are automatically added to the message recipients. This is done so that the report is processed correctly when it is sent to the analysis system. Overall, Log Parser turned out to be a quite powerful, and perhaps the only, means of automating this task. Surprisingly, such a useful utility with such extensive features is not widely used. Its disadvantages include weak documentation: queries have to be worked out by trial and error. I wish you successful experiments!