You need to know where to put the zero


Some optimizations require complex data structures and thousands of lines of code. In other cases, a minimal change produces a serious performance gain: sometimes you just need to put in a zero. It is like the old tale about the boilermaker who knows the right place to hit with a hammer and then bills the customer $0.50 for hitting the valve and $999.50 for knowing where to hit.

I have personally run into several performance bugs that were fixed by typing a single zero, and in this article I want to share two of those stories.

Importance of measurement

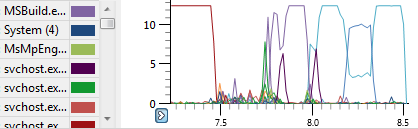

In the days of the original Xbox, I helped optimize a lot of games. In one of them, the profiler pointed to a matrix transformation function that consumed 7% of CPU time, the biggest spike on the graph. So I diligently set to work optimizing this function.

I was evidently not the first to try. The function had already been rewritten in assembly language. I found several potential improvements to the assembly and tried to measure their effect. This is an important step; otherwise it is easy to make an “optimization” that changes nothing or even makes things worse.

However, measuring turned out to be difficult. I started the game, played for a bit with the profiler running, and then studied the profile: had the code become faster? There seemed to be a slight improvement, but it was impossible to say for sure.

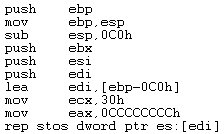

So I applied the scientific method. I wrote a test harness that ran the old and new versions of the code so that I could measure the difference in performance accurately.

This did not take long: as expected, the new code was about 10% faster than the old one. But it turned out that the 10% speedup was beside the point.

Far more interesting was that the code ran about 10 times faster inside the test than in the game. Now that was an exciting discovery.

After double-checking the results, I stared into space for a while, and then it dawned on me.
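A test harness of the kind described above might look roughly like the following. This is a minimal sketch in C++, not the original assembly code: `Mat4`, `Vec4`, `transform_old`, `transform_new`, `seconds_per_call` and `compare` are all hypothetical names invented for illustration, and the two transform functions are simple stand-ins for the real old and new versions.

```cpp
#include <array>
#include <cassert>
#include <chrono>
#include <cstddef>
#include <vector>

// Hypothetical stand-ins for the game's data types.
using Mat4 = std::array<float, 16>;  // 4x4 matrix, row-major
using Vec4 = std::array<float, 4>;

// "Old" version: plain nested loops.
Vec4 transform_old(const Mat4& m, const Vec4& v) {
    Vec4 r{};  // zero-initialized
    for (std::size_t row = 0; row < 4; ++row)
        for (std::size_t col = 0; col < 4; ++col)
            r[row] += m[row * 4 + col] * v[col];
    return r;
}

// "New" version: the same arithmetic, manually unrolled.
Vec4 transform_new(const Mat4& m, const Vec4& v) {
    return Vec4{
        m[0] * v[0] + m[1] * v[1] + m[2] * v[2] + m[3] * v[3],
        m[4] * v[0] + m[5] * v[1] + m[6] * v[2] + m[7] * v[3],
        m[8] * v[0] + m[9] * v[1] + m[10] * v[2] + m[11] * v[3],
        m[12] * v[0] + m[13] * v[1] + m[14] * v[2] + m[15] * v[3],
    };
}

// Runs `fn` over every input `repeats` times and returns the average
// wall-clock time of one call, in seconds.
template <typename Fn>
double seconds_per_call(Fn fn, const Mat4& m,
                        const std::vector<Vec4>& inputs, int repeats) {
    assert(!inputs.empty() && repeats > 0);
    float sink = 0.0f;  // accumulates results so the work is not optimized away
    const auto start = std::chrono::steady_clock::now();
    for (int r = 0; r < repeats; ++r)
        for (const Vec4& v : inputs)
            sink += fn(m, v)[0];
    const auto stop = std::chrono::steady_clock::now();
    volatile float keep = sink;  // forces the compiler to keep `sink`
    (void)keep;
    const std::chrono::duration<double> elapsed = stop - start;
    return elapsed.count() / (static_cast<double>(repeats) * inputs.size());
}

struct Comparison {
    double old_seconds;
    double new_seconds;
};

// Times both versions on exactly the same inputs.
Comparison compare(const Mat4& m, const std::vector<Vec4>& inputs, int repeats) {
    return {seconds_per_call(transform_old, m, inputs, repeats),
            seconds_per_call(transform_new, m, inputs, repeats)};
}
```

Calling `compare` with a fixed matrix and a few thousand input vectors gives two numbers that can be compared run after run. The point of such a harness is that both versions see identical data under identical conditions, which playing the game with a profiler attached cannot guarantee.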

The role of caching

To give game developers complete control and maximum performance, game consoles let you allocate memory with various attributes. In particular, the original Xbox lets you allocate non-cacheable memory. This type of memory (in fact, a type of tag in the page tables) is useful when writing data for the GPU. Since the memory is not cached, writes go to RAM almost immediately, without the delays and cache pollution of a “normal” mapping.

Thus, non-cacheable memory is an important optimization, but it must be used carefully. In particular, it is extremely important that games never try to read from non-cacheable memory, otherwise their performance will suffer badly. Even the relatively slow 733 MHz CPU of the original Xbox needs its caches to achieve adequate performance when reading data.

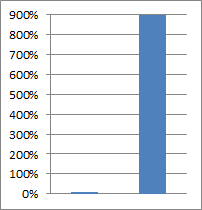

Now it becomes clear what was happening. Apparently, the data for this function was allocated in non-cacheable memory, hence the poor performance. A small test confirmed this hypothesis, so it was time to fix the problem. I found the line where the memory was allocated, double-clicked on the flag value, and typed zero.

Instead of roughly 7% of CPU time, the function now consumed about 0.7% and was no longer a problem.

At the end of the week, my report looked like this: “39.999 hours of research, 0.001 hours of programming: a huge success!”

Developers usually do not need to worry about accidentally allocating non-cacheable memory: on most operating systems, this option is not available in user space through the standard allocation functions. But if you are curious how much non-cacheable memory can slow a program down, try the PAGE_NOCACHE or PAGE_WRITECOMBINE flags in VirtualAlloc.
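For anyone who wants to try that experiment, here is a rough sketch of how it could be set up. `VirtualAlloc`, `VirtualFree`, `PAGE_READWRITE`, `PAGE_NOCACHE` and `PAGE_WRITECOMBINE` are the real Windows API names; `alloc_pages`, `free_pages` and `timed_sum` are hypothetical helpers made up for this example. The non-Windows branch is only an assumption that lets the sketch build elsewhere: it ignores the flags and returns ordinary cached memory.

```cpp
#include <cassert>
#include <chrono>
#include <cstddef>
#include <cstdint>
#include <cstdlib>

#ifdef _WIN32
#include <windows.h>
#endif

// Allocates `bytes` of zeroed read/write memory.
// On Windows, `extra_flags` is OR-ed into the page protection, so the caller
// can pass 0, PAGE_NOCACHE or PAGE_WRITECOMBINE.
// Elsewhere (assumption: fallback only) the flags are ignored.
void* alloc_pages(std::size_t bytes, unsigned long extra_flags) {
#ifdef _WIN32
    return VirtualAlloc(nullptr, bytes, MEM_RESERVE | MEM_COMMIT,
                        PAGE_READWRITE | extra_flags);
#else
    (void)extra_flags;
    return std::calloc(bytes, 1);
#endif
}

// Releases memory obtained from alloc_pages.
void free_pages(void* p) {
#ifdef _WIN32
    VirtualFree(p, 0, MEM_RELEASE);
#else
    std::free(p);
#endif
}

// Reads every 32-bit word in the buffer, returns their sum, and stores the
// time the reads took (in seconds) in `*seconds`.
std::uint64_t timed_sum(const std::uint32_t* data, std::size_t count,
                        double* seconds) {
    assert(data != nullptr && seconds != nullptr);
    const auto start = std::chrono::steady_clock::now();
    std::uint64_t sum = 0;
    for (std::size_t i = 0; i < count; ++i)
        sum += data[i];
    const auto stop = std::chrono::steady_clock::now();
    *seconds = std::chrono::duration<double>(stop - start).count();
    return sum;
}
```

On Windows, fill one buffer obtained with `alloc_pages(bytes, 0)` and another obtained with `alloc_pages(bytes, PAGE_NOCACHE)`, then call `timed_sum` on each and compare the reported times. The size of the difference will depend on the hardware.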

0 GiB is better than 4 GiB

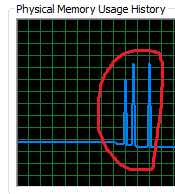

I want to tell you another story. It is about a bug that I found but that was fixed by someone else. A couple of years ago, I noticed that the disk cache on my laptop was being flushed too often. I tracked this down to the moment when the 4 GiB mark was reached, and it turned out that the driver for my new backup HDD set SectorSize to 0xFFFFFFFF (or −1) to indicate an unknown sector size. The Windows kernel interpreted this value as 4 GiB and allocated a block of memory to match, which was the cause of the problem.

I do not have any contacts at Western Digital, but it is safe to assume that they fixed this bug by replacing the constant 0xFFFFFFFF (or −1) with zero. One character typed, and a serious performance problem solved.

(Read more about this investigation in the article "Windows Slowdown: Exploration and Identification".)
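The arithmetic behind the 4 GiB figure is easy to check. The sketch below assumes the sector size is carried in an unsigned 32-bit field; `kUnknownSectorSize`, `as_byte_count` and `to_gib` are hypothetical names used only for illustration and are not taken from the driver or from Windows.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical stand-in for the driver's value: -1 stored in an unsigned
// 32-bit field becomes 0xFFFFFFFF.
constexpr std::uint32_t kUnknownSectorSize = static_cast<std::uint32_t>(-1);

// What a consumer gets if it treats the field as a plain byte count,
// with no special handling of the "unknown" marker.
constexpr std::uint64_t as_byte_count(std::uint32_t sector_size) {
    return sector_size;
}

// Converts a byte count to GiB (1 GiB = 2^30 bytes).
constexpr double to_gib(std::uint64_t bytes) {
    return static_cast<double>(bytes) / (1024.0 * 1024.0 * 1024.0);
}

// -1 as an unsigned 32-bit value is one byte short of 4 GiB...
static_assert(as_byte_count(kUnknownSectorSize) + 1 == (1ull << 32),
              "0xFFFFFFFF is 2^32 - 1 bytes");
// ...while zero read the same way is simply zero bytes.
static_assert(as_byte_count(0) == 0, "zero stays zero");
```

Read as a size, the "unknown" marker is 4,294,967,295 bytes, one byte short of 4 GiB, whereas zero read the same way asks for nothing at all.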

Observations

- In both cases, the problem was related to caching.

- Using a profiler to pinpoint the exact problem was decisive.

- A fix that has not been verified by measurement will not necessarily help.

- I could write about many other such cases, but they are either too secret or too boring.

- The right solution does not have to be complicated. Sometimes a tiny change yields a huge improvement. You just need to know where to make it.

I have also happened to speed up code by commenting out a #define and by making other trivial changes. Tell us in the comments if you have stories like these.