What's new in Rancher 2.0, and why is it moving to Kubernetes?

A week ago, Rancher developers presented a preview release of their next major version, 2.0, and along with it announced a move to Kubernetes as the single foundation for container orchestration. What prompted them to take this path?

Background: Cattle

Rancher 1.0 was released in March of last year, and according to Rancher Labs there are now more than 10,000 active installations worldwide and more than 100 commercial customers. The first version of Rancher included Cattle, a “high-level component written in Java” that was described as the “base orchestration engine” and the “main loop of the entire system.” In essence, Cattle was not a container orchestration framework at all, but a layer that manages metadata (as well as resources, relationships, states, and so on) and hands off all of the actual work to external systems.

Cattle supported many orchestrators available on the market (Docker Swarm, Apache Mesos, Kubernetes) because, as the authors put it, “Rancher users liked the idea of adopting a management platform that gives them the freedom to choose a container orchestration framework.”

However, as Kubernetes grew in popularity, Rancher users placed more and more demands on its features and on how easy it is to work with. At the same time, Rancher Labs management changed its view of Kubernetes' current problems and its prospects.

Kubernetes: yesterday, today, tomorrow

In 2015, when Rancher Labs started working with Kubernetes (that is, adding support for it to their platform), the main problem with K8s, according to the developers, was installing and initially configuring the system. That is why the proudest line in the announcement of initial Kubernetes support in the Rancher v0.63 release (March 17, 2016) was: “Now you can launch a Kubernetes environment with one click and get access to a fully deployed cluster within 5-10 minutes.”

Over time, according to the head of Rancher Labs, the next major obstacle to Kubernetes became the ongoing operation and upgrading of clusters. By the end of last year, this problem had also stopped being serious, thanks to the active development of tools like kops and the emerging trend of offering Kubernetes as a service (Kubernetes-as-a-Service). This bore out the view of Joe Beda, one of the founders of the Kubernetes project at Google and now a co-founder of Heptio, that the next platform for running modern applications will be Kubernetes, or rather that “Kubernetes will be a key part of that platform, but the story definitely doesn't end there.”

More objective confirmation comes from very recent events, such as Kubernetes support in DC/OS from Mesosphere and the official arrival of IT giants like Microsoft and Oracle in the Kubernetes ecosystem. The most recent of these, Oracle joining the CNCF, was enough on its own to prompt the online publication ARCHITECHT to state that “now, with Oracle on board, Kubernetes should become the de facto standard for container orchestration.”

Rancher Labs is “betting” that the next step for Kubernetes will be its transformation into a “universal standard for infrastructure,” which will happen once Kubernetes-as-a-Service becomes a standard offering from most infrastructure providers:

“DevOps teams will no longer need to manage Kubernetes clusters themselves. The only remaining challenge will be managing and using the Kubernetes clusters that are available everywhere.”

With this in mind, the company's engineers set to work on the next major version of Rancher, 2.0.

Rancher 2.0

As in Rancher 1.0, in version 2.0 the platform consists of a server (for managing the entire installation) and agents (installed on all hosted hosts). The main difference between Rancher 2.0 and 1.0 is that Kubernetes is now built into the server. This means that when the Docker image is

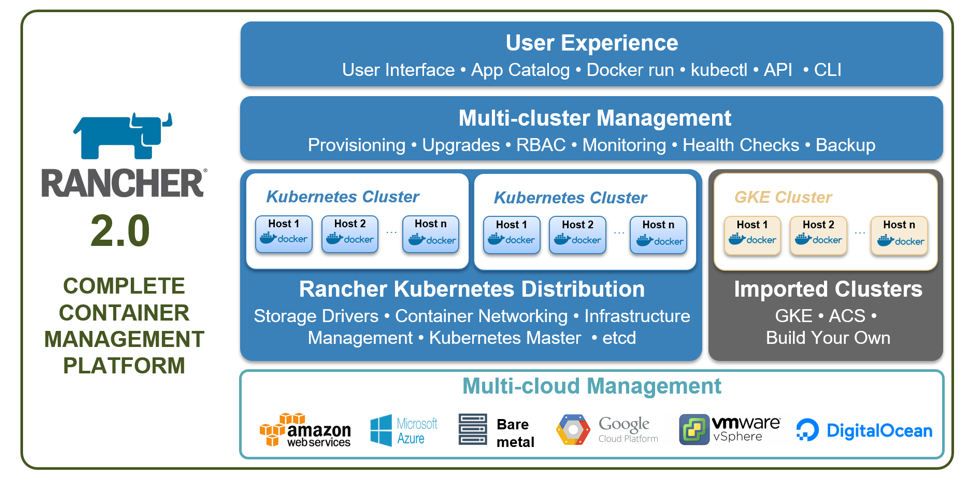

rancher/serverlaunched, the Kubernetes cluster starts, and each new host that is added becomes a part of it (has kubelet ). In addition, you can create additional clusters in addition to this wizard, as well as import existing clusters using kops or from external providers like Google (GKE). The Rancher Agent runs on all embedded and imported clusters.Above all this set of Kubernetes clusters, Rancher implements common layers for centralized management of them (authentication and RBAC, provisioning, updates, monitoring, backups) and interaction with them (web-based user interface, API, CLI):

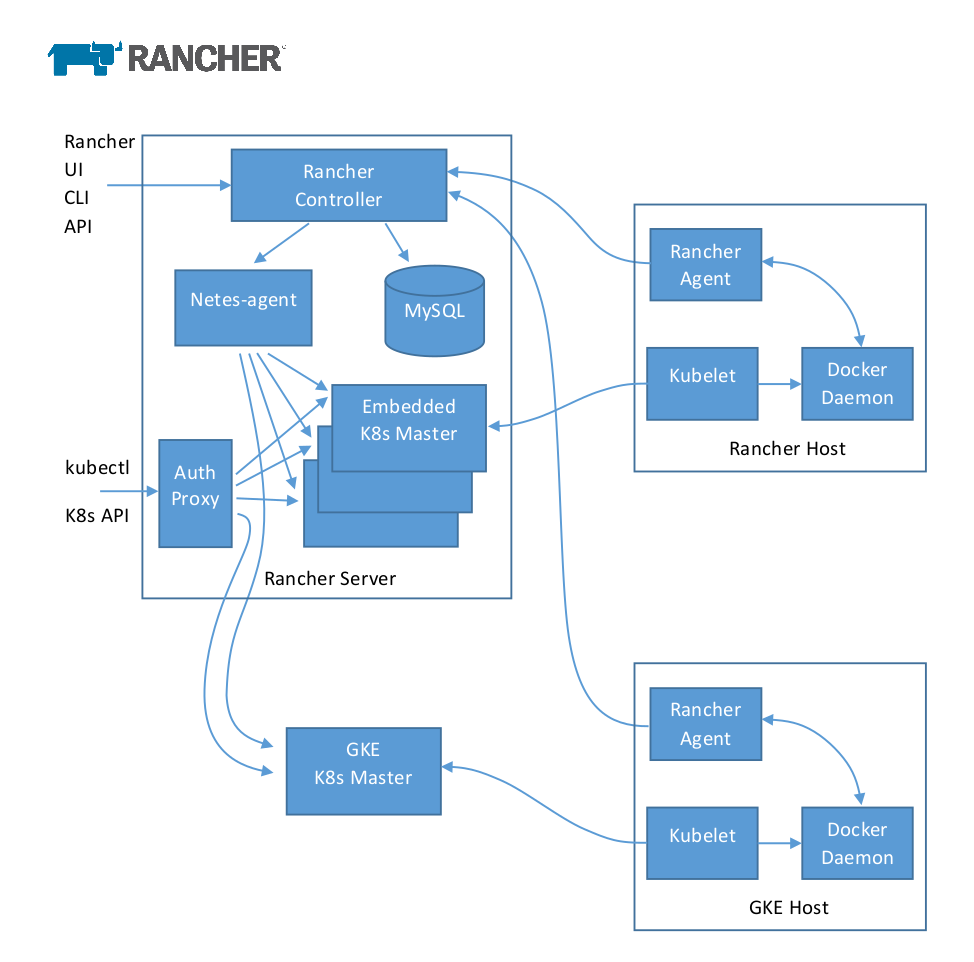

In more detail (down to the host level), the Rancher 2.0 architecture looks like this:

Among the other features of this solution, I would highlight the following:

- The Netes-agent shown in the diagram is the component responsible for translating Rancher container definitions into Kubernetes pods. It connects to every Kubernetes cluster, making them manageable.

- The Kubernetes master built into Rancher 2.0 is Rancher's own distribution, which includes the API Server, Scheduler, and Controller Manager. These three components are combined into a single process running in one container. By default, the database backend for all clusters lives in a single database (“this greatly simplifies the management of embedded clusters in Rancher”).

- Only one Rancher component is still written in Java (all the rest are written in Go): the Core controller. It implements Rancher's services, load balancers, and DNS records in Kubernetes pods. Together with the Websocket proxy and the Compose executor, it is part of the Rancher Controller, which runs on the Rancher server (see the diagram above).

- Rancher volumes are PersistentVolumeClaims (PVCs). There are three types of volumes, which differ in their lifecycle: container-scoped (created when the container is created and deleted when it is deleted), service-scoped (the same, but for services), and environment-scoped (existing as long as the environment exists).

- Networking supports Rancher's IPsec or VXLAN overlays (as before), as well as third-party CNI plugins for embedded clusters. Two new networking modes are also offered for running containers in an isolated space: “layer 3 routed” (a subnet per host) and “layer 2 flat”.

- Rancher 2.0 is positioned as working with “standard SQL databases, such as MySQL.” For this, the suggestion is to use either a ready-made Database-as-a-Service offering (for example, RDS from AWS) or a self-managed setup based on Galera Cluster for MySQL or MySQL NDB Cluster.

- The planned scalability targets are 1,000 clusters, 1,000 hosts per cluster (and 10,000 hosts in total), and 30,000 containers per cluster (and 300,000 containers in total).

- Currently supported Docker versions: 1.12.6, 1.13.1, 17.03-ce, 17.06-ce (close to the compatibility list of the recently released Kubernetes 1.8).
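The three volume scopes listed above boil down to one rule: a volume is removed exactly when the object its scope is tied to is removed. Here is a minimal Go sketch of that rule, assuming a simplified in-memory model; the `Scope`, `Volume`, and `Store` types and the `OnDeleted` method are hypothetical illustrations, not part of Rancher or the Kubernetes API.

```go
package main

import "fmt"

// Scope is a hypothetical enumeration of the three volume lifecycles
// described for Rancher 2.0; it is not a real Rancher type.
type Scope string

const (
	ContainerScoped   Scope = "container"
	ServiceScoped     Scope = "service"
	EnvironmentScoped Scope = "environment"
)

// Volume records which object (container, service, or environment)
// owns the volume's lifetime.
type Volume struct {
	Name    string
	Scope   Scope
	OwnerID string
}

// Store is a hypothetical in-memory registry of volumes.
type Store struct {
	volumes []Volume
}

// Create registers a new volume.
func (s *Store) Create(v Volume) { s.volumes = append(s.volumes, v) }

// OnDeleted is called when an object of the given kind (expressed with the
// same Scope values) and ID is deleted. It removes only the volumes scoped
// to that exact object and returns their names; all others survive.
func (s *Store) OnDeleted(kind Scope, id string) []string {
	var removed []string
	kept := s.volumes[:0] // filter in place, reusing the backing array
	for _, v := range s.volumes {
		if v.Scope == kind && v.OwnerID == id {
			removed = append(removed, v.Name)
			continue
		}
		kept = append(kept, v)
	}
	s.volumes = kept
	return removed
}

// Names lists the volumes that still exist.
func (s *Store) Names() []string {
	names := make([]string, 0, len(s.volumes))
	for _, v := range s.volumes {
		names = append(names, v.Name)
	}
	return names
}

func main() {
	s := &Store{}
	s.Create(Volume{"scratch", ContainerScoped, "web-1"})
	s.Create(Volume{"cache", ServiceScoped, "web"})
	s.Create(Volume{"shared", EnvironmentScoped, "prod"})

	fmt.Println(s.OnDeleted(ContainerScoped, "web-1")) // [scratch]
	fmt.Println(s.OnDeleted(ServiceScoped, "web"))     // [cache]
	fmt.Println(s.Names())                             // [shared]
}
```

The sketch deliberately ignores cascading (for example, deleting a service also deleting its containers and, with them, their container-scoped volumes); a real controller would have to track those ownership relationships as well.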

Technical details of the Rancher 2.0 design are available in this PDF.

Of course, the developers also improved the end-user interface, making the Rancher UI (and the application catalog) even simpler while leaving advanced users access to kubectl and the Kubernetes Dashboard. A 15-minute presentation of Rancher 2.0, demonstrating both the internal and the external changes, has been posted on YouTube.

The current version of Rancher 2.0, labeled a tech preview and released a week ago, is v2.0.0-alpha6. The final release is scheduled for Q4 2017.

CoreOS and fleet

I will close the story of Rancher 2.0 with a quite different example, from CoreOS. Their fleet, a fairly well-known open source product in the DevOps world, went through a similar story in the context of the universal adoption of Kubernetes. Described as a “distributed init system,” fleet tied together systemd and etcd so that systemd could be used across a whole cluster rather than on a single machine. It was designed as “a foundation for higher order orchestration.”

At the end of last year, the fleet project's status changed from “proven in production” to “no longer developed or maintained,” and at the beginning of this year it was announced that fleet would no longer be part of Container Linux as of February 1, 2018. Why?

Because CoreOS now recommends installing Kubernetes instead for all clustering needs.

A CoreOS blog post on this topic (from February 2017) explains that Kubernetes “is becoming the de facto standard for open source container orchestration.” The authors also state: “For a number of technical and market reasons, Kubernetes is the best tool for managing container infrastructure and automating it at scale.”

PS

Read also in our blog:

- A series on Kubernetes success stories in production: “No. 1: 4200 pods and TessMaster at eBay”, “No. 2: Concur and SAP”, “No. 3: GitHub”;

- “Kubernetes 1.8: an overview of the major new features”;

- “Our experience with Kubernetes in small projects” (a video talk that includes an introduction to how Kubernetes works internally);

- “Why is Kubernetes needed, and why is it more than a PaaS?”.