The basis of the rate of evolution may be mathematical simplicity.

- Transfer

Computer scientists are turning to evolutionary biology for inspiration to find optimal solutions in sets of astronomical dimensions

Studying the vast spaces of possible solutions to the problem, we are faced with the fact that most of the paths will be dead-end. But evolution may have found ways to increase the chances of success.

Creationists love to insist that evolution would have to collect up to 300 amino acids in the correct order, only to create a single human medium-sized protein. And since at each position could be located one of the 20 possible amino acids, it would seem that there are more than 20 300variants of enumeration, which is many orders of magnitude greater than the number of atoms in the observable universe. Even if we find redundancy, due to which some of these options are equivalent, the likelihood that evolution stumbled upon the right combination by chance, conducting random mutations, seems monstrously small, even with billions of years gone by.

The main disadvantage of such arguments is that evolution did not experience these sequences by accident: the process of natural selection eliminated the unnecessary. In addition, it is likely that nature has found other workarounds, ways to narrow a huge number of probabilities to small, research-able subsets that are more likely to give useful solutions.

Informatics specialists have similar problems, which include the search for optimal solutions among the many options of astronomical size. Some of them are turning to biology for inspiration - despite the fact that biologists themselves are only trying to understand how nature works this way.

Genetic algorithms, optimization methods that have been popular for several decades, use the principles of natural selection to create new designs (for robots, drugs and transport systems, among other things), to train neural networks or to encrypt and decrypt data. This technology begins with the fact that random solutions to a problem are regarded as certain “organisms” possessing certain characteristics, or elements, “genetically” given in their code. These solutions are not particularly good, but then they undergo various random mutations (and sometimes other changes that replicate the gene shuffling process) and give out the second generation of organisms, which in turn are tested for suitability in solving the problem. In the end, after many repetitions, this process leads to the appearance of an extremely well-adjusted individual,

Some experts bring this method to the next level by doing genetic programming in order to get programs that can write programs and produce effective solutions (here, “genes” can be lines of code). This goal turned out to be particularly difficult to achieve, since researchers have to take into account certain types of data and structures, as well as many other conditions.

Interestingly, these ways of thinking based on evolution (especially genetic programming) conceptually overlap with mathematical theory, which has always been somewhere on the periphery of both biology and computer science. Recently, some scientists are trying to use it to understand how evolution, natural and artificial, can work effectively, create something new and learn to learn. The main thing here was a special concept of complexity, randomness and information, which had no practical applications - until today.

Monkeys behind keyboards

This theory, invented in the 1960s, works with what is called algorithmic information. It builds on the intuitive way of thinking about probability and complexity: the idea that for some input data from a computational point of view, it will be easier to describe how to create something than to create it. Take the well-known analogy of a monkey, shuffling keys randomly. The chances of it printing the first 15,000 digits of π are ridiculously small — and they decrease exponentially with the number of digits.

But if you interpret keystrokes as random text for a computer program that outputs the number π, then the chances of success, or the “algorithmic probability,” are radically improved. The code for displaying the first 15,000 digits of the number π in C, for example, can be pressed down to just 133 characters.

In other words, the algorithmic theory of information says that the probability of producing some types of output data is much higher when random processes run at the level of the program describing this data than at the level of the data themselves, since the program will be short. In this sense, complex structures — for example, fractals — are much easier to obtain by chance.

However, a problem soon arose with this approach: mathematicians found that algorithmic complexity (also known as Kolmogorov complexity , named after Andrei Nikolaevich Kolmogorov, one of the founders of the theory) given the output data - the length of the shortest possible program that gives them out - cannot be calculated. Therefore, computer scientists cannot find an ideal way to compress a string or another object.

The algorithmic complexity of the network on the left is high, since for its description it is required to list all the edges connecting the vertices. In the middle, the complexity is less, since in describing this network, we can write down that vertex A is connected to all the others. The right network has the smallest complexity, since its description is that all vertices are connected by edges in pairs.

As a result, the algorithmic theory of information was developed mainly in the field of pure mathematics, where it is used to study related theorems and determine the concepts of randomness and structure. Its practical use seemed inaccessible. “Mathematically, this is a simple and beautiful measure of complexity,” said the famous mathematician Gregory Chaytin, one of the founders of the theory, who worked at the Thomas J. Watson IBM Center and the Federal University of Rio de Janeiro. “But in terms of applicability in the real world, she looked impregnable.”

But this did not make him retreat. He hoped that this theory could be used to formalize the idea that DNA behaves like a program. In 2012, he published a book where he described how evolution can be presented as a random walk through the program space. Mutations along this path, he wrote, do not obey the statistically random probability distribution; they obey a distribution based on Kolmogorov complexity. But he could not verify it.

Now, some scientists hope to revive this theory in this context, and to link it simultaneously with both biology and computer science.

Pursuit of simplicity

Hector Zenil , a computer scientist from the Karolinska Institute in Sweden, is one of those who are trying to revive this theory. He works with other researchers to use Kolmogorov complexity as a metric for analyzing the complexity of biological networks - for example, gene regulatory networks or the interaction of proteins in cells. The researchers roughly estimate the algorithmic content of the network (the exact value is not computable), then conduct a network mutation and check how much it affected Kolmogorov complexity. They hope that this method will give them an idea of the relative importance of the various elements of the network, and of its functional response to intentional changes.

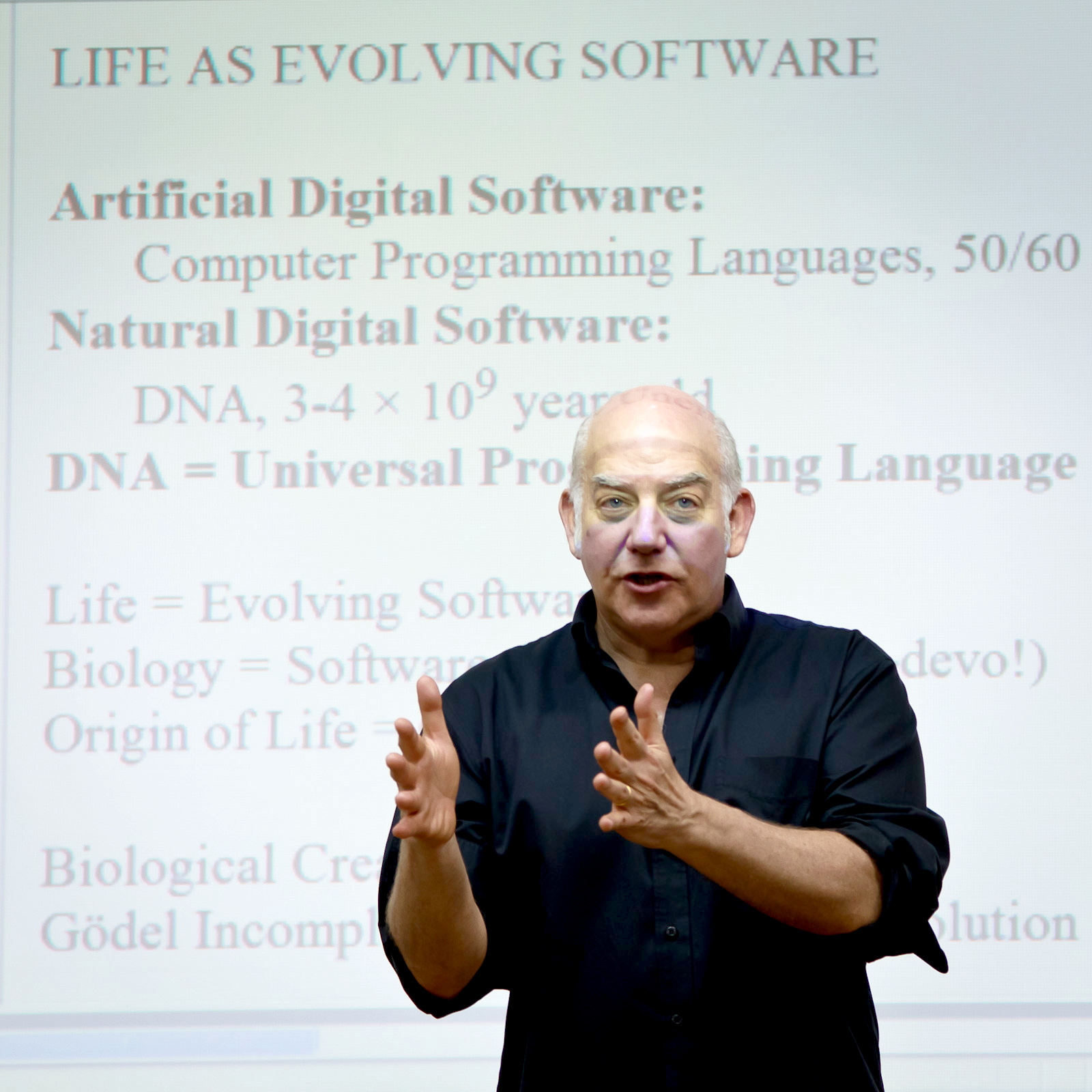

Famous mathematician Gregory Chitin, one of the founders of algorithmic information theory

In a recent paper laid out on arxiv.org, they described that if you force the network to move towards an increase in Kolmogorov complexity — by introducing mutations that cause the program describing the network to become larger — this usually leads to an increase in the number of functions that the network, while making it more sensitive to disturbances. When they made the network simpler, the functions became smaller, and the stability increased.

However, it remains unclear whether the Kolmogorov complexity can play any greater role than a simple tool — for example, as Chitin believes, to be the main driver of change. Despite all the problems, algorithmic information seems an attractive theory for biology. Traditionally, mathematics has been used in the field of population genetics to describe evolutionary dynamics.- statistical models describing the frequency of occurrence of genes in a population. However, population genetics has its limitations: it does not describe the origin of life and other basic biological transition processes, or the appearance of completely new genes. “In this beautiful mathematical theory, the idea of biological creativity was somehow lost,” said Chitin. But if we take into account algorithmic information, he said, "then creativity is naturally embedded in the overall picture."

Like the idea that the evolutionary process improves over time and increases efficiency. “I’m convinced that evolution is learning,” said Daniel Polany., a computer scientist and professor of artificial intelligence from the University of Hertfordshire in England. “I would not be surprised if this can be expressed through the asymptotic reduction of algorithmic complexity.”

Zenil and the team decided to experimentally test the biological and computational consequences of the influence of the platform of algorithmic complexity. Using the same technique of approximate assessment of complexity, which they used to analyze and perturb networks, they carried out an “evolution” of artificial genetic networks towards certain targets — matrices of zeros and ones, indicating gene interactions — making a choice in favor of mutations that produced matrices with less algorithmic complexity. In other words, they made the selection in favor of larger structures.

Гектор Зенил, специалист по информатике из Каролинского института

They recently published results in the journal Royal Society Open Science, from which it follows that, compared with statistically random mutations, their selection of mutations led to a significant acceleration of the development of networks towards solutions. Other features appeared, for example, permanent and regular structures - sections of matrices that have already reached a certain degree of simplicity, which new generations could hardly have improved. “Some regions were more or less mutated simply because they had already reached a certain level of simplicity,” said Zenil. “It was very similar to genes.” This genetic memory helped larger structures to emerge faster - researchers believe that this implies that algorithmically probable mutations can lead to outbreaks of diversity and extinction.

"This means," said Zenil, "that it will be quite fruitful to consider computational processes when studying evolution." He hopes to use this understanding of chance and complexity to identify pathways of exchange that may be more susceptible to mutations, or to understand why certain gene interactions can be associated with diseases such as cancer.

Evolution of programs

Zenil hopes to understand whether biological evolution works by the same computational rules, but most experts have doubts. It is not clear which natural mechanism could be responsible for a rough estimate of the algorithmic complexity or make mutations develop purposefully. Moreover, “to assume that life is fully encoded in four letters will be wrong,” said Giuseppe Longo , a mathematician from the National Center for Scientific Research in France. "DNA is extremely important, but it does not make sense outside the cell, the organism, the ecosystem." Other interactions work, and the use of algorithmic information cannot cover all this complexity.

And yet this concept has generated a certain interest - in particular, because such views on evolution and computational processes have something in common, at least a common theme, with the goal of genetic programming - to get a program that can evolve.

There were already quite intriguing hints of a potential connection between the ideas of Chaytin and Zenil, associated with Kolmogorov complexity and methods of genetic programming. For example, in 2001, a team of researchers reported that the complexity of the outputs of the genetic program is limited by the Kolmogorov complexity of the original program.

But for the most part, Kolmogorov complexity did not play a role in the attempts of computer scientists to understand these ideas. They tried other ways to change genetics and mutations. Some groups changed the rate of mutations, others forced the system to lean toward mutations that replaced large chunks of code. “People came up with dozens, and possibly hundreds of different versions of mutations and genotypes,” said Lee Spector , an information technology specialist from Hampshire College in Massachusetts. Spector recently led a team that demonstrated the advantages of adding and removing mutations across the entire genome of an organism before directly replacing one gene with another. This new type of genetic operator exponentially increased the number of paths in the genetic search space and eventually led to better solutions.

Lee Spector, a computer scientist from Hampshire College in Massachusetts

Many researchers went in the opposite direction, searching for clever ways to speed up the process, narrowing the search field, but not limiting it so much that the search would miss optimal results. One idea was to make simplicity a goal: in the 1960s, Eugene Wigner noted "the unreasonable efficiency of mathematics in the natural sciences," and computer scientists found that often simpler and more elegant models are more effective and more often applicable. “The question is,” Spector said, “does this fact tell us something deep about the structure of the universe, or not?” And will it be useful to us? "

He also warns that attempts to push evolving programs to simplicity can be destructive: rewarding programs for brevity can lead to cuts in what seems like garbage now, but it can be useful to future generations, which will result in sacrificing optimal solutions. “And we will get stuck,” he said.

However, simplicity remains a seductive goal, which, moreover, has demonstrated its usefulness. In a paper published last year, the work of Spector and his colleagues found that if you reduce the size - sometimes only 25% of the original length - after applying the techniques of genetic programming, the program to better deal with the new data, and they can be used for a wide range of common problems.

In particular, because of this, he is following the work in the field of algorithmic information theory, although he says that there is still to be seen its impact on this area of research.

We learn from life

The Zenil team may have taken the first step in the search for this influence - however, in order to realistically apply their work, they first need to test their method on other types of search problems.

And yet, “they convincingly showed the need for constraints based on structure,” said Larisa Albantakis , a neuroscientist-theorist from the University of Wisconsin, who also worked to accelerate genetic algorithms by limiting search space. "Nature is structured, and if we take this as a starting point, it would be foolish to try to check all possible homogeneous mutations." She added: "Everything that makes sense to us is somehow structured."

And while Spector is skeptical about the fact that Zenil's work can be applied to something beyond the solution of a specific problem that he studied, “the information theory underlying their concepts is intriguing and potentially very important,” he said. - It seems interesting to me partly because it looks like something alien. Perhaps there are ideas that comrades from our community are unaware of. ” After all, algorithmic information is related to a large assortment of ideas that some genetic programming experts may not include in their work, for example, the unlimited nature of evolution.

“I have a strong sense of having something important in this area,” Spector said. However, he added, so far "there is a great distance between their work and practical applications."

“The idea of imagining life as an evolving program is very fruitful,” said Chitin, although it is too early to determine its value. Whether we reason about artificial, or about biological life, “we must see how far we can go.”