Automatic optimization in virtual reality, or what is the difference between reprojection, timewarp and spacewarp

Optimizing projects for virtual reality requires its own special approach. On top of the general things you have to watch when developing 3D games, VR imposes a number of strict constraints. A VR application must not only respond almost instantly to any movement of the player (be it a head turn or a wave of the hand), but also hold a stable frame rate far above the usual requirements for "classic" games of any genre.

Modern headsets such as the Oculus Rift and HTC Vive support a number of special technologies designed to smooth out performance drops. They are meant to compensate for FPS dips by artificially raising the frame rate, improve the user experience, give developers of end products a little extra freedom, and lower the minimum system requirements. But is everything so perfect in practice? How do these technologies work, and how do they differ? That is what this article is about.

While 30 FPS is often enough for regular games and 60 is already considered an excellent figure, the minimum for modern VR devices is 90 frames per second (60 FPS for Sony's VR headset on the PS4, but 90 is also officially recommended there). It is important to add that, for the user in the headset to be comfortable, the frame rate must be stable and must not change throughout the game. A short-term drop in FPS to, say, 30 in a regular game on a monitor or TV is likely to go completely unnoticed, but for a person in VR it means the complete destruction of the immersion effect. The picture immediately starts to judder and lag behind head turns, and the player is all but guaranteed unpleasant sensations, up to motion sickness and nausea. So how can all these negative effects be eliminated, at least partially?

Interleaved Reprojection and Synchronous Timewarp

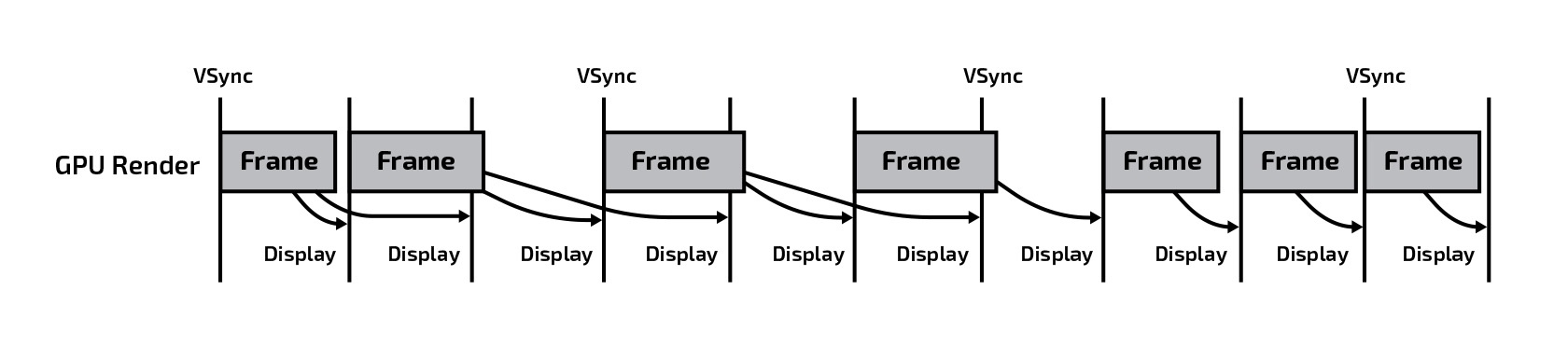

First, a few words about a closely related technology: vertical synchronization. In the most basic mode, the game engine produces one displayed frame per iteration of its main loop. The frame rate therefore varies with the time each iteration takes, since the complexity of the scene and of the game logic is not constant. If this rate does not match the monitor's refresh rate, the picture often starts to “tear”. To combat this effect, vertical synchronization (V-Sync) is used: the engine is allowed to present a new frame only right after a sync pulse whose frequency matches the monitor's refresh rate (usually 60 Hz).

Thus, the maximum frame rate will be 60, but if preparing a frame takes longer than 1/60 of a second, a new frame is shown only on every second tick and the FPS drops by exactly half, to 30 frames per second, and so on.
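
The stepwise drop described above can be worked out with a few lines of arithmetic. This is only a minimal sketch: the function name `vsync_fps` and the sample frame times are made up for illustration, and it assumes simple double-buffered V-Sync where a late frame always waits for the next tick.

```python
import math

def vsync_fps(frame_time_ms: float, refresh_hz: float = 60.0) -> float:
    """Effective frame rate when every frame has to wait for a V-Sync tick."""
    tick_ms = 1000.0 / refresh_hz
    # A frame occupies a whole number of refresh intervals, rounded up.
    ticks_per_frame = max(1, math.ceil(frame_time_ms / tick_ms))
    return refresh_hz / ticks_per_frame

# Hypothetical frame preparation times in milliseconds.
for t in (10.0, 16.0, 17.0, 34.0):
    print(f"{t:5.1f} ms per frame -> {vsync_fps(t):.0f} FPS")
```

Note how going from 16 ms to 17 ms per frame, a change of about 6%, halves the displayed frame rate.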

In VR projects, as mentioned above, this approach to synchronization is not enough on its own, because a consistently high frame rate has to be maintained. But the engine may still fail to produce 90 images per second. What then? This is where a trick called interleaved reprojection comes in. As soon as the system detects that the FPS has dropped below 90, it forcibly halves the game's frame rate (to 45) and starts “drawing” the intermediate frames itself: it takes the previous image and, using a 2D transformation, rotates it by the angle the player’s head has turned in the meantime.

Thus, with 45 frames per second generated by the game, 90 are still displayed on the screen! As soon as the engine is again able to produce 90 frames per second on its own, reprojection is automatically turned off.
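
The switching rule can be written down as a tiny sketch. The function name `interleaved_schedule` and the labels `"R"` (rendered by the engine) and `"S"` (synthesized by reprojection) are invented for this illustration and do not come from any SDK.

```python
def interleaved_schedule(app_fps, display_hz=90, ticks=6):
    """Label each display tick as rendered ('R') or synthesized ('S')."""
    if app_fps >= display_hz:
        # The engine keeps up: every tick shows a freshly rendered frame.
        return ["R"] * ticks
    # Otherwise the engine is throttled to half rate, and every second
    # tick is filled with a rotated copy of the previous frame.
    return ["R" if i % 2 == 0 else "S" for i in range(ticks)]

print(interleaved_schedule(90))  # engine keeps up
print(interleaved_schedule(85))  # slightly too slow: half the frames are synthesized
```

Even an engine that only just misses the target (85 FPS here) ends up with half of its displayed frames synthesized.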

It is important to note that this compensates only for rotation of the head, not for its movement in space. The result of a displacement cannot be obtained with such 2D transformations because of the parallax that arises. But the head usually rotates much more often and faster than it moves, so the method is quite effective.
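
Why rotation can be handled in 2D while displacement cannot is easy to check numerically. The sketch below assumes an idealized pinhole camera; the focal length, the point coordinates and the helper names (`project`, `after_yaw`, `after_shift`) are all hypothetical. It takes two points that start on the same pixel, one near and one far, and compares where they land after a head turn and after a sideways step.

```python
import math

def project(x, z, focal_px=600.0):
    """Horizontal pixel coordinate of a point at (x, z) in camera space."""
    return focal_px * x / z

def after_yaw(x, z, yaw_deg):
    """Camera-space coordinates of the point after the camera yaws by yaw_deg."""
    a = math.radians(yaw_deg)
    return (x * math.cos(a) - z * math.sin(a), x * math.sin(a) + z * math.cos(a))

def after_shift(x, z, dx):
    """Camera-space coordinates of the point after the camera moves sideways by dx metres."""
    return (x - dx, z)

near, far = (0.1, 1.0), (1.0, 10.0)  # same viewing ray, depths 1 m and 10 m
print("start:   ", project(*near), project(*far))
print("yaw 2deg:", project(*after_yaw(*near, 2.0)), project(*after_yaw(*far, 2.0)))
print("shift 5cm:", project(*after_shift(*near, 0.05)), project(*after_shift(*far, 0.05)))
```

After the yaw both points still share one pixel, so a single image-wide transform is enough. After the sideways step they separate, and how far each one moves depends on its depth, which a flat image does not contain.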

This approach was the first one implemented for both the Oculus Rift and the HTC Vive. In SteamVR it is called Interleaved Reprojection, and in the Oculus SDK it is called Synchronous Timewarp.

The biggest drawback is that at the moment reprojection switches on or off, the user sees a noticeable “jolt” and a change in the smoothness of the picture. In addition, even a slight decrease in game performance immediately halves the real frame rate, and no matter how well the 2D transformation algorithm is implemented, it cannot provide the same accuracy and smoothness as the engine itself. This is why the method was later improved in both SDKs.

Asynchronous Reprojection and Timewarp

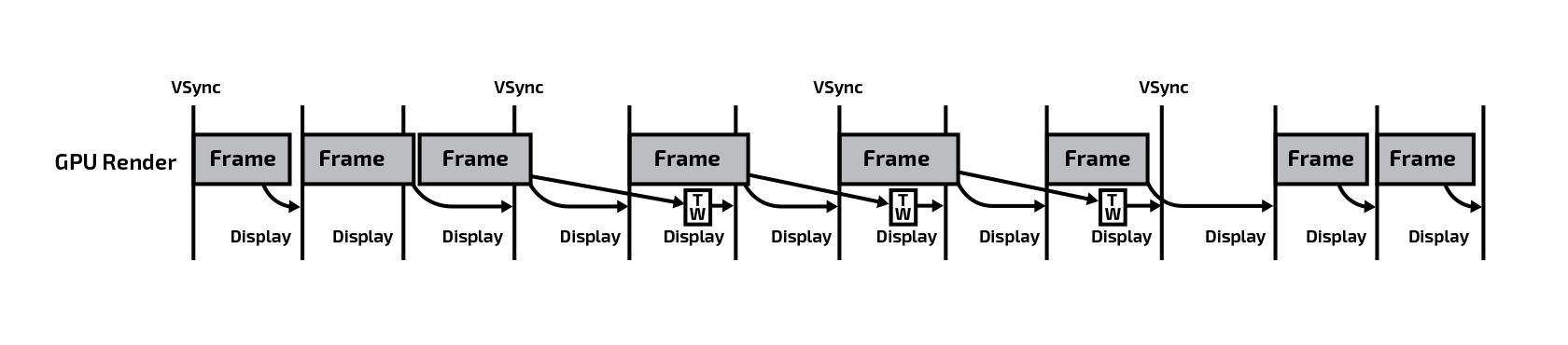

Oculus was the first to implement this approach, under the name Asynchronous Timewarp (ATW), and it has been the main mode on the Oculus Rift since its official release. The idea is broadly the same: fill in the sequence of frames to hold a stable 90 FPS. The difference is that the fill-in process runs in a separate thread, independent of the main render. Immediately before each sync pulse, ATW displays either a frame the engine has finished or, if no new frame is ready yet, a reprojection of the previous one. Thus, two scenarios are possible:

- the engine managed to render a frame - this frame is displayed on the screen as it is and enters the ATW buffer;

- the engine didn’t manage to render the frame - the unfinished frame is “delayed” until the next VSync tick, and the result of 2D reprojection of the previous frame from the ATW buffer is displayed on the screen.
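
The two scenarios can be simulated with a short sketch. It follows only the simplified description above, not the actual Oculus implementation; the function name `atw_timeline` and the frame completion times are hypothetical.

```python
def atw_timeline(frame_done_ms, display_hz=90, ticks=6):
    """For each V-Sync tick, report which engine frame is shown and how.

    frame_done_ms: ascending times (ms) at which the engine finishes frames.
    """
    tick_ms = 1000.0 / display_hz
    shown = []
    latest = None       # index of the newest finished frame (the "ATW buffer")
    next_frame = 0
    for tick in range(1, ticks + 1):
        deadline = tick * tick_ms
        fresh = False
        # Pick up every frame the engine finished before this tick.
        while next_frame < len(frame_done_ms) and frame_done_ms[next_frame] <= deadline:
            latest = next_frame
            next_frame += 1
            fresh = True
        if latest is None:
            shown.append((tick, None, "nothing yet"))
        elif fresh:
            shown.append((tick, latest, "rendered"))
        else:
            shown.append((tick, latest, "reprojected"))
    return shown

# Hypothetical engine that misses the 3rd and 6th ticks.
for entry in atw_timeline([10, 21, 40, 54]):
    print(entry)
```

In this run the engine is never throttled: four of the six ticks show its own frames, and only the two missed ticks fall back to a reprojection of the buffered frame.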

This makes it possible not to limit the game engine artificially and lets it generate frames at whatever rate it can manage. At the same time, ATW acts as a safety net for the game, compensating for performance drops with reprojection strictly at the moments when it is needed. As a result, there is no noticeable “jolt” in the picture when the reprojection mode changes, far fewer “useful” engine frames are discarded, and overall the experience feels much more natural to the user.

For game developers, supporting this technology costs nothing: it simply works with any VR application. But on the side of the SDK, the OS and the video card manufacturers, implementing it required a fair amount of additional joint effort. Modern GPU hardware and software are optimized for very high throughput, not for the uninterrupted delivery of frames that ATW needs. Oculus had to work closely with Microsoft, NVIDIA and AMD to adapt the processor microcode and the GPU kernel driver for better ATW support. These extensions are called AMD's LiquidVR and NVIDIA's VRWorks.

At the end of 2016, Valve also introduced its own version of this method, called Asynchronous Reprojection. However, according to the company itself, things turned out to be less simple: its research showed that although the characteristic jerks and “jolts” when reprojection switches are reduced, the “false” frames produced by 2D transformations, which now appear at random moments, are also perceived by players as an annoyance. All of this is quite individual, so the feature can be disabled in the SteamVR settings if desired, returning to classic synchronous reprojection.

Asynchronous Spacewarp

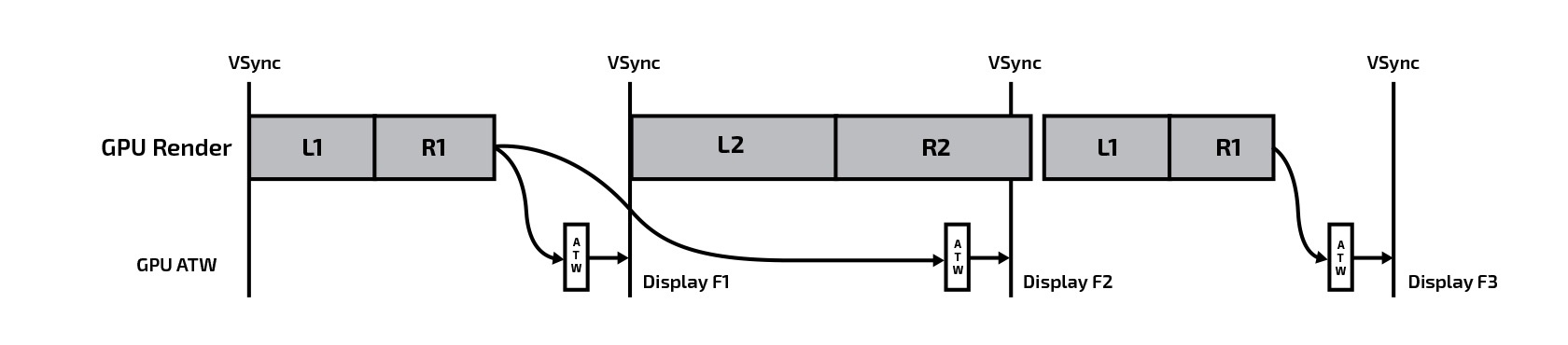

As already mentioned, all the methods described so far fill in the sequence of frames while compensating only for turns of the player’s head. But what if the player also moves to the side? The viewpoint position changes and, because of parallax, ordinary 2D transformations are simply not enough to generate reasonably plausible intermediate frames. As a result, if the player is actively moving his head at the moment of an FPS drop, the whole “world” around him will judder and tremble, destroying the immersion effect. To solve this problem, Oculus developed another technology that works on top of asynchronous timewarp and called it Asynchronous Spacewarp (ASW).

Unlike timewarp, the generation of “intermediate” frames here takes into account the movements of the character, the camera, the VR controllers and the player. ASW handles the parallax created when the viewpoint shifts and near objects consequently move relative to distant ones. For this it uses an additional depth buffer of the last rendered frame, from which ASW can tell which objects are in the foreground and which are far away. As a result, in the “intermediate” frame it becomes possible not only to shift the viewpoint according to the player’s movements, but also to simulate the movement of some objects relative to others.
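
The role of the depth buffer can be illustrated on a single row of pixels. This is a deliberately simplified sketch of depth-based reprojection as described above, not the actual ASW algorithm; the function name `warp_scanline`, the focal length and the scene values are hypothetical.

```python
def warp_scanline(colors, depths, shift_m, focal_px=4.0):
    """Re-project one row of pixels for a sideways viewpoint shift of shift_m metres."""
    width = len(colors)
    out = [None] * width                  # None marks pixels with no source data
    out_depth = [float("inf")] * width
    for x in range(width):
        # Parallax: the smaller the depth, the further the pixel moves on screen.
        new_x = x - round(focal_px * shift_m / depths[x])
        if 0 <= new_x < width and depths[x] < out_depth[new_x]:
            out[new_x] = colors[x]        # the nearest surface wins
            out_depth[new_x] = depths[x]
    return out

# A near object "N" (1 m away) in front of a background "b" (8 m away).
row = list("bbbNNbbb")
depth = [8, 8, 8, 1, 1, 8, 8, 8]
print(warp_scanline(row, depth, shift_m=0.5))
```

The near object slides two pixels across the almost stationary background, and the two pixels it used to cover are left as `None`: the previous frame holds no information about what was behind it.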

Of course, the system cannot know what lies behind an area that was hidden by nearby objects, so those regions are, in effect, generated from the surrounding environment by “stretching” it. This is clearly illustrated by the following picture:

ASW is inextricably linked with ATW and each of them performs its own task. Timewarp is ideal for static images at a certain distance from the viewer, and spacewarp, in turn, is responsible for moving objects near the player.

It is also worth noting that ASW is supported only in Windows 8 and later, and the best implementation is available only in Windows 10, because that is where Microsoft carried out additional work in this direction. An analog of this technology for the HTC Vive has not yet been announced.

Technology Conclusions

Reprojection, ATW and ASW certainly make life easier for both developers and end users, bringing virtual reality closer to real life and smoothing over the flaws of 3D rendering, but they should not be treated as a panacea. In theory it may seem that these methods lower the performance bar to 45 frames per second or even less, but in practice this is far from always the case. Depending on what kind of virtual world the player is in, what is happening there and how he moves, every method of artificially “padding” the video stream can cause various artifacts, jerks and “trembling” of the picture, which the user feels, often at a subconscious level.

For users: if you are just starting to use a VR headset, be it the Oculus Rift or the HTC Vive, leave all the assistance systems turned on, as they are by default. This is especially important if your system is not the most powerful. Most likely, this is how your experience of immersion in virtual reality will be at its best, and the range of content you can run will be wider. However, all people are different, and when it comes to VR this is doubly true. What is comfortable for one person can cause persistent rejection in another. So if you notice that everything seems smooth, but now and then something is confusing, something does not move as it should, or there is a feeling of motion sickness, try disabling ASW first, then ATW, and going back to classic reprojection. Perhaps this is exactly what you need.

For developers, the main advice is this: do not count on modern reprojection methods to let you pay less attention to optimization or to solve every problem with a stable frame rate. 90 FPS is still the "gold standard" that you should aim for, remembering to leave a margin for weaker systems and for the most demanding moments of the game. But it is still nice to know that at any unforeseen moment these technologies will smooth out the “sharp corners” for your user, hide some flaws and let them fully enjoy immersion in virtual worlds!