Annealing and Freezing: Two Fresh Ideas for Accelerating Deep Network Learning

- Transfer

This post outlines two recently published ideas on how to speed up the process of learning deep neural networks while increasing the accuracy of prediction. The methods proposed (by different authors) are orthogonal to each other, and can be used together and separately. The methods proposed here are simple to understand and implement. Actually, links to the original publications:

1. The ensemble of shots: many models for the price of one

Regular ensembles of models

Ensembles are groups of models used collectively to obtain predictions. The idea is simple: train several models with different hyperparameters, and average their predictions when testing. This technique gives a noticeable increase in prediction accuracy; most winners of machine learning competitions

So what's the problem?

Training N models will require N times more time than training one model.

Mechanics SGD

Stochastic Gradient Descent (SGD) is a greedy algorithm. It moves in the parameter space in the direction of the largest slope. At the same time, there is one key parameter: the speed of learning. If the learning speed is too high, SGD ignores the narrow gullies in the relief of the parameter hyperplane (minima) and jumps through them like a tank through trenches. On the other hand, if the learning speed is low, SGD falls into one of the local minimums and cannot get out of it.

However, it is possible to pull SGD out of the local minimum by increasing the learning speed.

Watch your hands ...

The authors of this article use this controlled SGD parameter to roll into a local minimum and exit from there. Different local minima can give the same percentage of errors during testing, but the specific errors for each local minimum will be different !

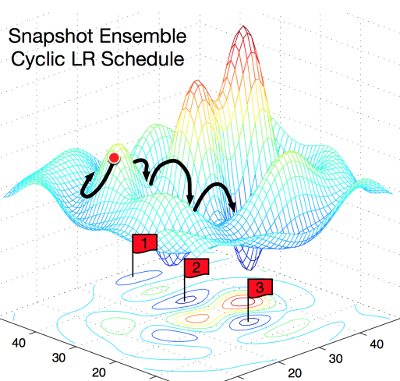

This picture illustrates the concept very clearly. The left shows how a regular SGD works, trying to find a local minimum. Right: SGD falls into the first local minimum, a snapshot of the trained model is taken, then SGD is selected from the local minimum and looks for the next one. So you get three local minimums with the same percentage of errors, but with different error characteristics.

What does the ensemble consist of?

The authors exploit the property of local minima to reflect various “points of view” on model predictions. Each time the SGD reaches a local minimum, a snapshot of the model is saved, of which, during testing, it will be an ensemble.

Cyclic Cosine Annealing

For automatic decision making, when to plunge into a local minimum, and when to exit it, the authors use the function of annealing the learning speed: The

formula looks cumbersome, but actually quite simple. The authors use a monotonically decreasing function. Alpha is the new value for learning speed. Alpha zero is the previous value. T is the total number of iterations you plan to use (batch size X number of eras). M - the number of pictures of the model that you want to receive (ensemble size).

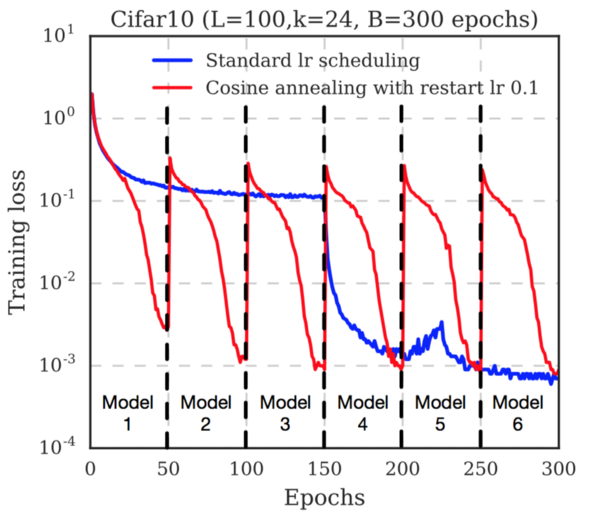

Notice how quickly the loss function decreases before each shot is saved. This is because learning speed is constantly decreasing.. After saving the image, the learning speed is restored (the authors use the level 0.1). This takes the trajectory of the gradient descent from the local minimum, and the search for a new minimum begins.

Conclusion

The authors present test results on several datasets (Cifar 10, Cifar 100, SVHN, Tiny IMageNet) and several popular neural network architectures (ResNet-110, Wide-ResNet-32, DenseNet-40, DenseNet-100). In all cases, the ensemble trained by the proposed method showed the lowest percentage of errors.

Thus, a useful strategy is proposed for obtaining a gain in accuracy without additional computational costs when training models. For the effect of different parameters, such as T and M on performance, see the original article.

2. Freezing: accelerate learning by sequentially freezing layers

The authors of the article demonstrated the acceleration of learning by freezing the layers without losing the accuracy of the prediction.

What does layer freezing mean?

Freezing the layer prevents changes in the weights of the layer during training. This technique is often used in transfer learning, when a base model trained on another dataset is frozen.

How does freezing affect model speed?

If you do not want to change the weight coefficients of a layer, the backward passage through this layer can be completely eliminated. This seriously speeds up the computation process. For example, if half of the layers in your model are frozen, you will need half the calculation to train the model.

On the other hand, you still need to train the model, so if you freeze the layers too early, the model will give inaccurate predictions.

What is the novelty?

The authors showed a way to freeze layers one by one as early as possible, optimizing training time by eliminating back passages. In the beginning, the model is fully trained, as usual. After several iterations, the first layer of the model is frozen, and the remaining layers continue to be trained. After a few more iterations, the next layer is frozen, and so on.

(Again) annealing training speed

The authors used annealing training rates. An important difference between their approach: the learning rate varies from layer to layer , and not for the entire model. They used the following expression:

Here, alpha is the learning speed, t is the iteration number, i is the layer number in the model.

Please note, since the first layer of the model will be frozen first, its training will last the least number of cycles. To compensate for this, the authors scaled the initial learning coefficient for each layer:

As a result, the authors achieved an acceleration of learning by 20% due to a drop in accuracy of 3%, or acceleration by 15% without reducing the accuracy of the prediction.

However, the proposed method does not work very well with models that do not use layer skips (such as VGG-16). In such networks, neither acceleration nor influence on the accuracy of prediction were found.