Typical probability distributions: data scientist cheat sheet

- Translation

Data scientists have hundreds of probability distributions to choose from. Where should you begin?

Data science, whatever it may be, is still a big deal. At your local meetups and hackathons you may hear a guru say: "A data scientist understands statistics better than any programmer." Applied mathematicians are having their revenge, because statistics has not been talked about this much since the roaring twenties. They even have their own unfunny Venn diagram to prove it. And so suddenly you, the programmer, are left out of the conversation about confidence intervals, instead of habitually grumbling at analysts who have never heard of the Apache Bikeshed project for distributed comment formatting. To fit in and become the life of the party again, you need a crash course in statistics. Perhaps not deep enough to understand everything, but deep enough to look, at first glance, as if you do.

Probability distributions are the foundation of statistics, just as data structures are the foundation of computer science. If you want to speak the language of a data scientist, you must start by studying them. In principle, if you are lucky, you can do simple analyses using R or scikit-learn without understanding distributions at all, just as you can write a Java program without understanding hash functions. But sooner or later it will end in tears, bugs, bogus results or, much worse, sighs and eye-rolling from senior statisticians.

There are hundreds of different distributions, some of which sound like monsters from medieval legend, such as the Muth or the Lomax. In practice, however, only about 15 come up with any regularity. What are they, and what clever phrases should you remember about each of them?

So what is a probability distribution?

Things happen all the time: dice are rolled, it rains, buses arrive. After the fact, the specific outcome is certain: the dice came up 3 and 4, 2.5 cm of rain fell, the bus took 3 minutes to arrive. Before that moment, we can only talk about how likely each outcome is. A probability distribution describes what we think the probability of each outcome is, which is often far more interesting than knowing only the single most likely outcome. Distributions come in many shapes, but in only one size: the probabilities in a distribution always sum to 1.
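To make "many shapes, one size" concrete, here is a minimal Python sketch that enumerates the totals of two dice. It assumes two fair six-sided dice, which the text does not specify, and the variable names are purely illustrative.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

# Assumption: two fair six-sided dice, so all 36 ordered rolls are equally likely.
counts = Counter(a + b for a, b in product(range(1, 7), repeat=2))

# Map each possible total to its exact probability.
distribution = {total: Fraction(n, 36) for total, n in sorted(counts.items())}

for total, prob in distribution.items():
    print(total, prob)

# The probabilities of all outcomes add up to exactly 1.
print(sum(distribution.values()))
```

Exact fractions are used instead of floats so that the sum comes out as exactly 1 rather than something like 0.9999999.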

For example, flipping a fair coin has two outcomes: it lands either heads or tails (assuming it does not land on its edge and is not snatched out of the air by a seagull). Before the flip, we believe there is a 1 in 2 chance, or a probability of 0.5, that it will come up heads. The same goes for tails. That is a probability distribution over the two outcomes of the flip, and if you have read this sentence carefully, you already understand the Bernoulli distribution.

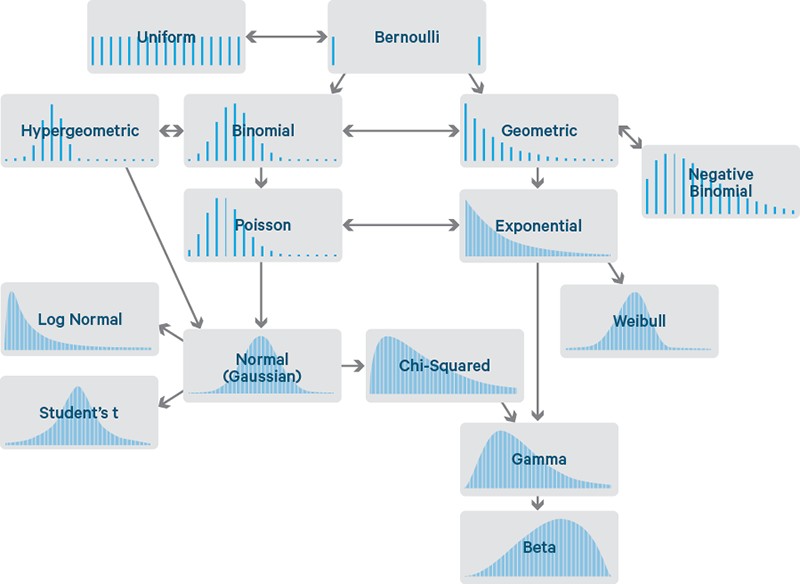

Despite the exotic names, the common distributions are related to each other in quite intuitive and interesting ways that make it easy to recall them and confidently talk about them. Some naturally follow, for example, from the Bernoulli distribution. Time to show a map of these connections.

Each distribution is illustrated by an example of its probability density function (PDF). This article covers only distributions whose outcomes are single numbers, so the horizontal axis of each chart is the set of possible numeric outcomes and the vertical axis is the probability of each outcome. Some distributions are discrete: their outcomes must be integers, such as 0 or 5. These are drawn as sparse lines, one per outcome, whose height is the probability of that outcome. Others are continuous: their outcomes can take any numeric value, such as -1.32 or 0.005. These are drawn as dense curves, where the areas under sections of the curve give the probabilities. The heights of the lines, and the areas under the curves, always sum to 1.

Print, cut along the dotted line and carry with you in your wallet. This is your guide to the country of distributions and their relatives.

Bernoulli and uniform

You met the Bernoulli distribution above, with its two outcomes, heads or tails. Now think of it as a distribution over 0 and 1, where 1 is heads and 0 is tails. As we have seen, both outcomes are equally likely, and the diagram reflects this. The Bernoulli PDF has two lines of equal height, representing the 2 equally probable outcomes 0 and 1.

The Bernoulli distribution can also represent outcomes that are not equally likely, such as the flip of an unfair coin. Then the probability of heads is not 0.5 but some other value p, and the probability of tails is 1-p. Like many distributions, it is really a whole family of distributions defined by parameters, like p here. When you think “Bernoulli”, think “flipping a (possibly unfair) coin.”
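As a rough illustration, a (possibly unfair) coin can be sketched in a few lines of Python. The function names, the value p = 0.3 and the seed are assumptions made for this example only, and the outcome 1 stands for whichever side you call "success".

```python
import random

def bernoulli_pmf(k, p):
    """Probability of outcome k (0 or 1) when 1 occurs with probability p."""
    if k not in (0, 1):
        return 0.0
    return p if k == 1 else 1.0 - p

def bernoulli_sample(p, rng):
    """Draw one outcome: 1 with probability p, otherwise 0."""
    return 1 if rng.random() < p else 0

rng = random.Random(42)  # fixed seed so the sketch is repeatable
flips = [bernoulli_sample(0.3, rng) for _ in range(10_000)]

# The observed share of 1s should land close to p = 0.3.
print(sum(flips) / len(flips))
```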

It is a short step from here to a distribution over many equally likely outcomes: the uniform distribution, characterized by its flat PDF. Imagine a fair die. Its outcomes, 1 through 6, are equally likely. It can be defined for any number of outcomes n, or even as a continuous distribution.

When you think of the uniform distribution, think “rolling a fair die”.

Binomial and hypergeometric

The binomial distribution can be thought of as the sum of the outcomes of things that follow a Bernoulli distribution.

Flip a fair coin twice: how many times does it come up heads? That count follows the binomial distribution. Its parameters are n, the number of trials, and p, the probability of "success" (here, heads, or 1). Each flip is a Bernoulli-distributed outcome, or trial. Reach for the binomial distribution when counting the number of successes in things like coin flips, where each flip is independent of the others and has the same probability of success.
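The two-flip example can be checked with the standard binomial formula. The sketch below is only an illustration; `binomial_pmf` is a name chosen here, not something from the article.

```python
import math

def binomial_pmf(k, n, p):
    """P(exactly k successes in n independent trials, each with success probability p)."""
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)

# Two flips of a fair coin: the count of heads can be 0, 1 or 2.
for k in range(3):
    print(k, binomial_pmf(k, 2, 0.5))
```

With n = 2 and p = 0.5 the three probabilities are 0.25, 0.5 and 0.25.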

Or imagine an urn with equal numbers of white and black balls. Close your eyes, draw a ball, note its color and put it back. Repeat. How many times did you draw a black ball? That count also follows the binomial distribution.

We set up this odd scenario to make the hypergeometric distribution easier to understand. It is the distribution of the same count when the balls are not put back. It is certainly a cousin of the binomial distribution, but not the same, because the probability of success changes with each ball removed. If the number of balls is large compared to the number of draws, the two distributions are almost identical, because the chance of success changes very little with each draw.
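A small numeric comparison shows how close the two cousins get. This is a sketch under assumed numbers (urns of 10 and 10,000 balls, half of them black, 5 draws); the function names are illustrative.

```python
import math

def hypergeom_pmf(k, population, successes, draws):
    """P(k successes in `draws` draws WITHOUT replacement)."""
    return (math.comb(successes, k)
            * math.comb(population - successes, draws - k)
            / math.comb(population, draws))

def binomial_pmf(k, n, p):
    """P(k successes in n draws WITH replacement)."""
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)

# Probability of exactly 2 black balls in 5 draws, half the urn being black.
small_urn = hypergeom_pmf(2, 10, 5, 5)          # 10 balls: drawing changes the odds a lot
large_urn = hypergeom_pmf(2, 10_000, 5_000, 5)  # 10,000 balls: odds barely move
with_replacement = binomial_pmf(2, 5, 0.5)

print(small_urn, large_urn, with_replacement)
```

The small urn gives a visibly different answer from the binomial, while the large urn agrees with it to several decimal places.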

When people talk about drawing balls from urns without replacement, it is almost always safe to interject "yes, the hypergeometric distribution", because I have never in my life met anyone who actually filled urns with balls, drew them out and put them back, or vice versa. I do not even know anyone who owns an urn. More often, this distribution should come up when selecting a significant subset of some population as a sample.

Translator's note.

This may not be very clear, and since this is a tutorial and crash course for beginners, it deserves an explanation. The population is the thing we want to evaluate statistically. To do so, we select some part of it (a subset) and perform the evaluation on that part (the subset is then called a sample), assuming that the result for the whole population will be similar. For that to hold, additional constraints on how the sample is chosen are often required (or, conversely, given a known sample, we need to assess whether it describes the population accurately enough).

A practical example: we need to pick representatives from a company of 100 people to go to E3. We know that 10 of them already went last year (but nobody will admit it). What is the minimum number of people we must pick so that, with high probability, the group contains at least one experienced colleague? Here the population is 100, of whom 10 are experienced, the sample is the group we pick, and the requirement on the sample is at least one person who has already been to E3.

Wikipedia has a less amusing but more practical example about defective parts in a batch.
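The E3 question can be answered with the hypergeometric distribution. The sketch below assumes that "high probability" means at least 0.95, a threshold the note does not give; the function names are hypothetical.

```python
import math

def prob_at_least_one(population, experienced, group):
    """P(a random group drawn without replacement contains at least one experienced person)."""
    p_none = math.comb(population - experienced, group) / math.comb(population, group)
    return 1.0 - p_none

def min_group_size(population, experienced, threshold):
    """Smallest group size whose chance of including an experienced person reaches the threshold."""
    for group in range(1, population + 1):
        if prob_at_least_one(population, experienced, group) >= threshold:
            return group
    return None  # only reachable if nobody is experienced

# Assumption: "high probability" is taken to mean 0.95.
print(min_group_size(100, 10, 0.95))
```

Under these assumptions the answer is 25 people; a different threshold gives a different group size.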

Poisson

What about the number of customers calling a technical support hotline each minute? At first glance the distribution of this outcome looks binomial, if we treat each second as a Bernoulli trial in which a customer either does not call (0) or does (1). But as power companies know well, when the electricity goes out, two or even hundreds of people can call in the same second. Viewing it as 60,000 millisecond-sized trials does not help either: there are more trials and the probability of a call in any one millisecond is smaller, and two or more simultaneous calls are even less likely, but technically this is still not a Bernoulli trial. Taking the reasoning to its logical, infinite conclusion does work, however. Let n tend to infinity and p tend to 0 in such a way that np stays constant. This is like cutting time into ever smaller slices, each with an ever smaller probability of a call. In the limit, we get the Poisson distribution.

Like the binomial, the Poisson distribution is a distribution of counts: the number of times something happens. It is parameterized not by a probability p and a number of trials n, but by an average rate λ, which, by analogy with the binomial, is simply the constant value of np. The Poisson distribution is the one to remember when counting events over a period of time at a constant, given rate.
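The limit can be watched numerically. In this sketch the rate of 3 calls per minute and the function names are assumptions for illustration; it holds np fixed at λ while n grows and compares the binomial probability of exactly 2 calls with the Poisson one.

```python
import math

def binomial_pmf(k, n, p):
    """P(exactly k successes in n independent trials with success probability p)."""
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)

def poisson_pmf(k, lam):
    """P(exactly k events) when events occur at average rate lam per interval."""
    return math.exp(-lam) * lam**k / math.factorial(k)

lam = 3.0  # assumed average of 3 calls per minute
k = 2

# Slice the minute into n trials with p = lam / n, so that n * p stays equal to lam.
for n in (10, 100, 10_000):
    print(n, binomial_pmf(k, n, lam / n))

print("Poisson", poisson_pmf(k, lam))
```

As n grows, the binomial values settle onto the Poisson value.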

When things like packets arriving at a router, customers appearing in a store, or anything waiting in a queue come up, think Poisson.

Translator's note.

If I were the author, I would have mentioned the memorylessness of Poisson and Bernoulli (the distributions, not the people) and suggested dropping something clever into the conversation about the paradox of the law of large numbers as its consequence.

Geometric and negative binomial

Another distribution arises from simple Bernoulli trials. How many times does a coin come up tails before it first comes up heads? The number of tails follows a geometric distribution. Like the Bernoulli distribution, it is parameterized by p, the probability of success. It is not parameterized by n, a number of trials, because the number of failed trials is the outcome itself.

If the binomial distribution is “how many successes?”, then the geometric distribution is “how many failures until a success?”.

The negative binomial distribution is a simple generalization of the geometric. It is the number of failures before r successes have occurred, not just 1, so it is additionally parameterized by r. It is sometimes described as the number of successes before r failures. But, as my life coach says, “You decide what is success and what is failure”, so these amount to the same thing, as long as you remember that p must then be the probability of success or failure, respectively.
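Both distributions fit in a few lines. The sketch below uses the "number of failures before the r-th success" convention described above; the function names are illustrative.

```python
import math

def geometric_pmf(k, p):
    """P(exactly k failures before the first success)."""
    return (1 - p) ** k * p

def negative_binomial_pmf(k, r, p):
    """P(exactly k failures before the r-th success)."""
    return math.comb(k + r - 1, k) * p**r * (1 - p) ** k

# With r = 1 the negative binomial reduces to the geometric distribution.
for k in range(4):
    print(k, geometric_pmf(k, 0.5), negative_binomial_pmf(k, 1, 0.5))
```

For a fair coin, the chance of exactly 3 tails before the first heads is 0.5^4 = 0.0625 under either formula.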

If you need a joke to break the tension, you can point out that the binomial and hypergeometric distributions are an obvious pair, but the geometric and negative binomial distributions are also quite similar, and then ask, “Well, who names these things, anyway?”

Exponential and Weibull

Back to technical support calls: how long until the next call? The distribution of this waiting time looks as if it could be geometric, because every second in which nobody calls is like a failure, until the second in which a call finally arrives. The number of failures is the number of seconds in which nobody called, and that is almost the time until the next call, but "almost" is not good enough for us. The catch is that this time is always a whole number of seconds, so it cannot account for the wait within that final second before the call actually came in.

So, as before, we take the geometric distribution to the limit, towards infinitesimal slices of time, and voilà. We get the exponential distribution, which accurately describes the time until the next call. It is a continuous distribution, the first we have encountered, because the outcome need not be a whole number of seconds. Like the Poisson distribution, it is parameterized by a rate λ.
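Here is a rough numeric check of that limit. It assumes a rate of 0.5 calls per second and asks for the probability of waiting more than 2 seconds; both numbers and the function names are made up for the illustration.

```python
import math

def geometric_survival(t, lam, slices_per_second):
    """P(no call in the first t seconds) when every second is cut into equal
    slices and each slice is a Bernoulli trial with p = lam / slices_per_second."""
    p = lam / slices_per_second
    trials = round(t * slices_per_second)
    return (1 - p) ** trials

def exponential_survival(t, lam):
    """P(waiting longer than t) under an exponential distribution with rate lam."""
    return math.exp(-lam * t)

lam, t = 0.5, 2.0  # assumed rate and waiting time
for slices in (1, 10, 1_000, 1_000_000):
    print(slices, geometric_survival(t, lam, slices))

print("exponential", exponential_survival(t, lam))
```

The finer the slices, the closer the geometric answer gets to the exponential one.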

Echoing the relationship between the binomial and the geometric, Poisson's “how many events per unit time?” is related to the exponential's “how long until an event?”. If the number of events per unit time follows a Poisson distribution, then the time between events follows an exponential distribution with the same parameter λ. This correspondence between the two distributions is worth mentioning whenever either of them comes up.
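The correspondence can be seen in a small simulation. This sketch assumes a rate of 4 events per unit time and a fixed seed, and the function name is illustrative: it generates exponential gaps between events and then counts events per unit interval. For Poisson counts the mean and the variance should both be close to λ.

```python
import random

def counts_per_unit_time(lam, total_time, rng):
    """Simulate arrivals separated by exponential gaps and return the
    number of arrivals that fall into each unit-length interval."""
    counts = [0] * total_time
    t = rng.expovariate(lam)
    while t < total_time:
        counts[int(t)] += 1
        t += rng.expovariate(lam)
    return counts

rng = random.Random(7)  # fixed seed so the sketch is repeatable
counts = counts_per_unit_time(lam=4.0, total_time=5_000, rng=rng)

mean = sum(counts) / len(counts)
var = sum((c - mean) ** 2 for c in counts) / len(counts)
print(mean, var)  # both should be near 4
```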

The exponential distribution should come to mind when thinking about "time until an event", perhaps "time until failure". In fact, this situation is so important that there are more general distributions for describing time to failure, such as the Weibull distribution. While the exponential distribution is appropriate when the rate of wear or failure is constant, the Weibull distribution can model failure rates that increase (or decrease) over time. The exponential is merely a special case.
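The difference shows up in the failure rate, also called the hazard function. The sketch below uses the common shape/scale parameterization of the Weibull distribution, which is an assumption since the article does not fix one. With shape 1 the rate is constant and equals 1/scale, which is the exponential case.

```python
def weibull_hazard(t, shape, scale):
    """Instantaneous failure rate at time t > 0 for a Weibull(shape, scale) lifetime."""
    return (shape / scale) * (t / scale) ** (shape - 1)

# shape < 1: failure rate falls over time; shape = 1: constant (exponential);
# shape > 1: failure rate rises over time (wear-out).
for shape in (0.5, 1.0, 2.0):
    rates = [round(weibull_hazard(t, shape, scale=2.0), 3) for t in (1.0, 2.0, 4.0)]
    print(shape, rates)
```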

Think Weibull when the conversation turns to time to failure.

Normal, lognormal, Student's t and chi-square

The normal, or Gaussian, distribution is probably one of the most important. Its bell shape is instantly recognizable. Like e, it is a curiously special entity that turns up everywhere, even from the simplest-seeming sources. Take a set of values that follow the same distribution, any distribution, and add them up. The distribution of their sum follows (approximately) the normal distribution. The more values are summed, the more closely their sum matches the normal distribution (the catch: the distribution of the terms must be well-behaved, the terms must be independent, and the sum only tends to normal). That this holds regardless of the underlying distribution is amazing.

Translator's note.

I was surprised that the author does not mention that the summed distributions need to be of comparable scale: if one of them substantially dominates the others, convergence will be very poor. And, in general, complete mutual independence is not required; weak dependence is enough.

Well, I suppose it will do for parties, as he wrote.

This is called the “central limit theorem”, and you need to know what it is, why it is called that, and what it means, or you will be laughed at instantly.
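A quick simulation gives a feel for the theorem. This sketch assumes 30 independent uniform values per sum and a fixed seed, both chosen arbitrarily. For a normal distribution, about 68% of values fall within one standard deviation of the mean, so the sums should show roughly the same share.

```python
import random
import statistics

rng = random.Random(0)  # fixed seed so the sketch is repeatable
n_terms = 30            # assumed number of uniform values in each sum

# Each entry is a sum of 30 independent uniform(0, 1) draws.
sums = [sum(rng.random() for _ in range(n_terms)) for _ in range(20_000)]

mean = statistics.fmean(sums)
sd = statistics.pstdev(sums)
within_one_sd = sum(abs(s - mean) <= sd for s in sums) / len(sums)

print(mean)           # theory: n_terms / 2 = 15
print(sd)             # theory: sqrt(n_terms / 12), about 1.58
print(within_one_sd)  # a normal distribution gives about 0.68
```

The uniform distribution is flat, yet the sums pile up in a bell shape around 15.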

In this sense, the normal distribution is related to all distributions, though mostly it is associated with distributions of sums. A sum of Bernoulli trials follows a binomial distribution, and as the number of trials grows, that binomial distribution gets closer and closer to the normal distribution. So does its cousin, the hypergeometric distribution. The Poisson distribution, the limiting form of the binomial, also approaches the normal as its rate parameter increases.

Outcomes that follow a lognormal distribution take values whose logarithm is normally distributed. Put another way, the exponential of a normally distributed value is lognormally distributed. If sums of things are normally distributed, then remember that products of things are lognormally distributed.
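The definition translates directly into code. In this sketch the parameters mu = 0.5 and sigma = 0.25 and the seed are arbitrary assumptions: normal values are exponentiated to get lognormal ones, and taking logarithms recovers the original normal parameters.

```python
import math
import random
import statistics

rng = random.Random(1)  # fixed seed so the sketch is repeatable
mu, sigma = 0.5, 0.25   # assumed parameters of the underlying normal

# Exponentiating normally distributed values gives lognormally distributed ones.
samples = [math.exp(rng.gauss(mu, sigma)) for _ in range(20_000)]

# Taking logs brings us back to the normal distribution we started from.
logs = [math.log(x) for x in samples]
print(statistics.fmean(logs), statistics.pstdev(logs))  # near 0.5 and 0.25
print(min(samples) > 0)  # lognormal values are always positive
```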

Student's t-distribution is the basis of the t-test, which many non-statisticians learn in other fields. It is used to reason about the mean of a normal distribution, and it also tends to a normal distribution as its parameter increases. Its distinctive feature is its tails, which are fatter than those of the normal distribution.

If the fat-tail anecdote did not wow your neighbor enough, move on to a rather amusing tale about beer. More than 100 years ago, Guinness was using statistics to improve its stout. There, William Sealy Gosset developed an entirely new statistical theory just to grow better barley. Gosset convinced his boss that other brewers would not work out how to use the ideas, and got permission to publish, but only under the pseudonym "Student". Gosset's best-known result is this very t-distribution, which is, in a sense, named after him.

Finally, the chi-square distribution is the distribution of a sum of squares of normally distributed values. It underpins the chi-square test, which is itself based on a sum of squared differences that are supposed to be normally distributed.
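As a sketch, a chi-square value with k degrees of freedom can be built by squaring and summing k standard normal draws. The choice k = 5 and the seed are assumptions for the example. In theory the mean of such a distribution is k and its variance is 2k.

```python
import random
import statistics

def chi_square_sample(k, rng):
    """One chi-square draw: the sum of squares of k independent standard normals."""
    return sum(rng.gauss(0.0, 1.0) ** 2 for _ in range(k))

rng = random.Random(3)  # fixed seed so the sketch is repeatable
k = 5                   # assumed degrees of freedom
draws = [chi_square_sample(k, rng) for _ in range(20_000)]

mean = statistics.fmean(draws)
var = statistics.pvariance(draws)
print(mean, var)  # theory: k = 5 and 2k = 10
```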

Gamma and beta

At this point, if you are already talking about anything chi-square, the conversation is getting serious. You may well be talking to real statisticians, and you should probably excuse yourself, because things like the gamma distribution may come up. It is a generalization of both the exponential and the chi-square distributions. Like the exponential distribution, it is used in more sophisticated models of waiting times. For example, the gamma distribution appears when modeling the time until the next n events occur. It shows up in machine learning as the “conjugate prior” to a couple of other distributions.
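The waiting-time reading can be checked by simulation. This sketch assumes a rate of 2 events per unit time and a wait for 3 events. It compares sums of exponential waits with draws from Python's `random.gammavariate`, whose second argument is a scale, so 1/λ is passed. In theory both have mean n/λ and variance n/λ².

```python
import random
import statistics

rng = random.Random(11)  # fixed seed so the sketch is repeatable
lam, n_events = 2.0, 3   # assumed rate and number of events to wait for

# Time until the 3rd event, built from three exponential waits.
waits = [sum(rng.expovariate(lam) for _ in range(n_events)) for _ in range(20_000)]

# The same quantity drawn directly from a gamma distribution (shape n, scale 1/lam).
gamma_draws = [rng.gammavariate(n_events, 1.0 / lam) for _ in range(20_000)]

print(statistics.fmean(waits), statistics.fmean(gamma_draws))          # theory: 1.5
print(statistics.pvariance(waits), statistics.pvariance(gamma_draws))  # theory: 0.75
```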

Do not get into a conversation about conjugate priors, but if you must, be sure to mention the beta distribution, because it is the conjugate prior to most of the distributions mentioned here. As far as data scientists are concerned, that is what it was made for. Mention this casually and head for the door.
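For the curious, "conjugate prior" means the update is simple arithmetic. A minimal sketch for the coin-flipping case follows, assuming a uniform Beta(1, 1) prior on the success probability p and made-up data of 7 successes and 3 failures; the function names are illustrative.

```python
def beta_update(alpha, beta, successes, failures):
    """Posterior Beta parameters after observing Bernoulli/binomial data."""
    return alpha + successes, beta + failures

def beta_mean(alpha, beta):
    """Mean of a Beta(alpha, beta) distribution."""
    return alpha / (alpha + beta)

# Assumption: start from a uniform prior, Beta(1, 1), on the success probability p.
prior = (1, 1)
posterior = beta_update(*prior, successes=7, failures=3)

print(posterior)              # the posterior is again a beta distribution
print(beta_mean(*posterior))  # updated estimate of p
```

The prior and the posterior belong to the same family, which is exactly what makes the beta distribution convenient here.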

The beginning of wisdom

Probability distributions are something you can never know too much about. Those who are truly interested can turn to this super-detailed map of all probability distributions. I hope this tongue-in-cheek guide gives you the confidence to appear "in the know" in today's tech culture. Or, at the very least, a way to determine with high probability when it is time to find a less nerdy party.

Sean Owen is Director of Data Science at Cloudera in London. Before Cloudera, he founded Myrrix Ltd. (now the Oryx project) to commercialize large-scale real-time recommender systems on Hadoop. He is also a contributor to Apache Spark and co-author of O'Reilly Media's Advanced Analytics with Spark.