What is a service mesh and why do I need it [for a cloud application with microservices]?

- Transfer

Preface from the translator : This week was marked by the release of Linkerd 1.0 , which is an excellent occasion to tell not only about this product, but also about the very category of such software - service mesh (literally translated as “service grid”). Moreover, Linkerd authors just published the corresponding article.

tl; dr: Service mesh is a dedicated infrastructure layer to ensure safe, fast and reliable interaction between services. If you are creating an application to run in the cloud (i.e. cloud native) , you need a service mesh.

Over the past year, the service mesh has become a critical component in the cloud stack. High-traffic companies such as PayPal, Lyft, Ticketmaster, and Credit Karma have already added a service mesh to their applications in production, and in January Linkerd , an Open Source implementation of the service mesh for cloud applications, became the official project of the Cloud Native Computing Foundation ( containerd and rkt have recently been transferred to the same fund , and it is also known at least by Kubernetes and Prometheus - approx. transl.) . But what is a service mesh? And why did he suddenly become necessary?

In this article, I will define the service mesh and trace its origin through changes in the application architecture over the past decade. I will separate the service mesh from related but different concepts: API gateway, edge proxy, enterprise service bus. Finally, I will describe where the service mesh is needed and what to expect from adapting this concept in the cloud native world.

Service mesh is a dedicated infrastructure layer for interoperability between services. He is responsible for the reliable delivery of requests through the complex topology of services that make up a modern application designed to work in the cloud. In practice, a service mesh is usually implemented as an array of lightweight network proxies that are deployed with the application code, without the need for the application to know about it. (But we will see that this idea has different variations.)

The concept of service mesh as a separate layer is associated with the growth of applications created specifically for cloud environments. In such a cloud model, a single application can consist of hundreds of services, each service can have thousands of instances, and each instance can have constantly changing states depending on the dynamic planning carried out by an orchestration tool like Kubernetes . In this world, the interaction of services is not just a very complex process, but also a ubiquitous, fundamental part of the behavior of the executable environment. Managing it is very important to maintain performance and reliability.

Yes, a service mesh is a network model that is at the level of abstraction above TCP / IP. It is understood that the underlying L3 / L4 network is represented and is capable of transmitting bytes from point to point. (It is also understood that this network, like all other aspects of the environment, is not reliable; the service mesh must provide for network failure processing.)

In some ways, the service mesh is similar to TCP / IP. Just as a TCP stack abstracts from the mechanics of reliable byte transfers between network endpoints, so a service mesh abstracts from the mechanics of sending requests between services. Like TCP, the service mesh does not attach importance to the actual load and how it is encoded. The application has a high-level task (“send something from A to B”), and the service mesh, as in the case of TCP, can solve this problem by processing any problems encountered along the way.

In contrast to TCP, the service mesh has a significant goal in addition to “just make something work” - to provide a unified entry point for the entire application, providing visibility and control of its executable environment. The direct goal of the service mesh is to bring the interaction between services from an invisible, supposed infrastructure, offering him the role of a full-fledged participant in the ecosystem where everything is subject to monitoring, management, control.

Reliable query transfer in a cloud infrastructure application can be very complex. And a service mesh like Linkerd deals with this complexity with a set of powerful techniques: protecting from network problems, balancing workloads taking into account delays, detecting services (based on the consistency model in the long run ), retries and deadlines. All these features should work together, and the interactions between them and the environment in which they operate can be very difficult.

For example, when a request is made in a service through Linkerd, a very simplified sequence of events is as follows:

And this is only a simplified version: Linkerd can also initiate and terminate TLS, perform protocol updates, dynamically switch traffic, and perform switching between data centers.

It’s important to note that these features are designed to provide resilience both at the endpoint level and at the application level as a whole. Large-scale distributed systems, regardless of their architecture, have one defining characteristic: there are many possibilities for small, local drops to become catastrophic for the entire system. Therefore, the service mesh should be designed to provide protection against such problems, reducing the load and quickly falling when the underlying systems reach their limits.

Service mesh is, of course, not providing new functionality, but rather a shift in where this functionality is placed. Web applications have always been forced to drag the burden of interaction between services. The origin of the service mesh model can be traced to the evolution of these applications over the past 15 years.

Imagine a typical mid-sized web application architecture in the 2000s: these are 3 tiers. In this model, application logic, content delivery logic, and storage logic are separate layers. The interaction between these levels is complex, but limited in scope - after all, there are only two transit sections. There is no “mesh” (ie mesh) - only the interaction of logic between transit sections, carried out in the code of each layer.

When this architectural approach reached a very large scale, it began to break. Companies such as Google, Netflix, and Twitter are faced with the need to service large traffic, the implementation of which was the forerunner of the cloud (cloud native) approach: the application layer was divided into many services (sometimes called microservices), and the levels became the topology. In these systems, a generic layer for interaction quickly became a necessity, but usually took the form of a “thick client” library: Finagle from Twitter, Hystrix from Netflix, Stubby from Google.

In many ways, these libraries (Finagle, Stubby, Hystrix) were the first “service grids”. Although they were sharpened to work in their specific environment and required the use of specific languages and frameworks, they already constituted a dedicated infrastructure for managing the interaction between services and (in the case of Finagle and Hystrix Open Source libraries) were used not only in the companies that developed them .

There was a rapid movement towards modern applications designed to run in the clouds. The cloud native model combines a microservice approach of many small services with two additional factors:

These three components allow applications to naturally adapt to scale under load and handle always present partial glitches in the cloud. But with hundreds of services and thousands of instances, and even an orchestration layer for planning the launch of instances, the path of a single request following the service topology can be incredibly complicated, and since the containers simplified the ability to create services in any different languages, the library approach has ceased to be practical.

The combination of complexity and critical importance led to the need for a dedicated layer for interaction between services, separated from the application code and able to cope with the very dynamic nature of the underlying environment. This layer is the service mesh.

While there is a rapid growth in the adaptation of service mesh in the cloud ecosystem, an extensive and exciting roadmap [for further development of this concept and its implementations - approx. perev.]has yet to open. The requirements for computing without servers (for example, Amazon Lambda) fit perfectly into the service mesh model by name and binding [components], which forms a natural extension of its application in the cloud ecosystem. The roles of certifying services and access policies are still very young in cloud environments, and here the service mesh is well suited to become a fundamental part of this need. Finally, the service mesh, like TCP / IP before, will continue to penetrate the underlying infrastructure. As Linkerd evolved from systems like Finagle, the current implementation of the service mesh as a separate proxy in user space that can be added to the cloud stack will continue its evolution.

Service mesh is a critical component of the cloud native category stack. Linkerd, appearing a little over 1 year ago, is part of the Cloud Native Computing Foundation and has gained a growing community of contributors and users. Its users are diverse: from startups like Monzo, destroying the UK banking industry [it's a fully digital bank, offering developers APIs for accessing financial data - approx. trans.] , to large-scale Internet companies like PayPal, Ticketmaster and Credit Karma, as well as companies with hundreds of years of business history like Houghton Mifflin Harcourt.

The Linkerd Open Source Community of Users and Contributors daily demonstrates the value of the service mesh model. We are committed to creating a great product and the continued growth of our community.

PS The author of the article is William Morgan, one of the founders of Linkerd in 2015, the founder and CEO of Buoyant Inc (the development company that transferred Linkerd to CNCF).

UPDATED (02.20.2018): also read on our blog an overview of Buoyant's new product - “ Conduit - a lightweight service mesh for Kubernetes ”.

tl; dr: Service mesh is a dedicated infrastructure layer to ensure safe, fast and reliable interaction between services. If you are creating an application to run in the cloud (i.e. cloud native) , you need a service mesh.

Over the past year, the service mesh has become a critical component in the cloud stack. High-traffic companies such as PayPal, Lyft, Ticketmaster, and Credit Karma have already added a service mesh to their applications in production, and in January Linkerd , an Open Source implementation of the service mesh for cloud applications, became the official project of the Cloud Native Computing Foundation ( containerd and rkt have recently been transferred to the same fund , and it is also known at least by Kubernetes and Prometheus - approx. transl.) . But what is a service mesh? And why did he suddenly become necessary?

In this article, I will define the service mesh and trace its origin through changes in the application architecture over the past decade. I will separate the service mesh from related but different concepts: API gateway, edge proxy, enterprise service bus. Finally, I will describe where the service mesh is needed and what to expect from adapting this concept in the cloud native world.

What is a service mesh?

Service mesh is a dedicated infrastructure layer for interoperability between services. He is responsible for the reliable delivery of requests through the complex topology of services that make up a modern application designed to work in the cloud. In practice, a service mesh is usually implemented as an array of lightweight network proxies that are deployed with the application code, without the need for the application to know about it. (But we will see that this idea has different variations.)

The concept of service mesh as a separate layer is associated with the growth of applications created specifically for cloud environments. In such a cloud model, a single application can consist of hundreds of services, each service can have thousands of instances, and each instance can have constantly changing states depending on the dynamic planning carried out by an orchestration tool like Kubernetes . In this world, the interaction of services is not just a very complex process, but also a ubiquitous, fundamental part of the behavior of the executable environment. Managing it is very important to maintain performance and reliability.

Is the service mesh a network model?

Yes, a service mesh is a network model that is at the level of abstraction above TCP / IP. It is understood that the underlying L3 / L4 network is represented and is capable of transmitting bytes from point to point. (It is also understood that this network, like all other aspects of the environment, is not reliable; the service mesh must provide for network failure processing.)

In some ways, the service mesh is similar to TCP / IP. Just as a TCP stack abstracts from the mechanics of reliable byte transfers between network endpoints, so a service mesh abstracts from the mechanics of sending requests between services. Like TCP, the service mesh does not attach importance to the actual load and how it is encoded. The application has a high-level task (“send something from A to B”), and the service mesh, as in the case of TCP, can solve this problem by processing any problems encountered along the way.

In contrast to TCP, the service mesh has a significant goal in addition to “just make something work” - to provide a unified entry point for the entire application, providing visibility and control of its executable environment. The direct goal of the service mesh is to bring the interaction between services from an invisible, supposed infrastructure, offering him the role of a full-fledged participant in the ecosystem where everything is subject to monitoring, management, control.

What does the service mesh do?

Reliable query transfer in a cloud infrastructure application can be very complex. And a service mesh like Linkerd deals with this complexity with a set of powerful techniques: protecting from network problems, balancing workloads taking into account delays, detecting services (based on the consistency model in the long run ), retries and deadlines. All these features should work together, and the interactions between them and the environment in which they operate can be very difficult.

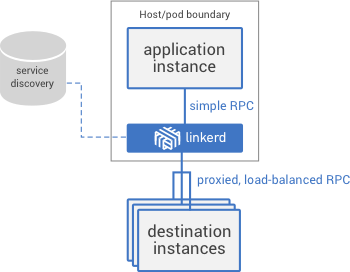

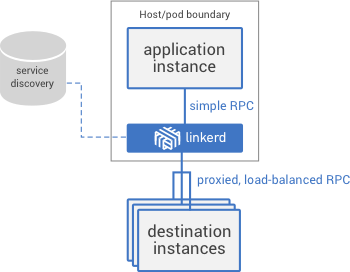

For example, when a request is made in a service through Linkerd, a very simplified sequence of events is as follows:

- Linkerd applies dynamic routing rules to determine which service the request is for. Should the request be passed to the service in production or staging? Service in the local data center or in the cloud? The latest service version that has been tested, or an older version tested in production? All these routing rules are dynamically configured, can be applied globally or for selected traffic slices.

- Finding the desired recipient, Linkerd queries the appropriate pool of instances from the discovery service of the corresponding endpoint (there may be several). If this information is at variance with what Linkerd sees in practice, he decides which source of information to trust.

- Linkerd selects the instance that is likely to return a quick response based on a number of factors (including the latency recorded for recent requests).

- Linkerd tries to send a request to the instance, recording the result of the operation (delay and response type).

- If the instance crashes, does not respond, or cannot process the request, Linkerd tries this request on another instance (only if it knows that the request is idempotent ).

- If the instance constantly returns errors, Linkerd removes it from the load balancing pool and will periodically check it in the future (the instance may experience a short-term failure).

- If the deadline for the request is reached, Linkerd proactively returns a request error, and does not add load with repeated attempts to execute it.

- Linkerd takes into account every aspect of the behavior described above in the form of metrics and distributed tracking - all this data is sent to a centralized metric system.

And this is only a simplified version: Linkerd can also initiate and terminate TLS, perform protocol updates, dynamically switch traffic, and perform switching between data centers.

It’s important to note that these features are designed to provide resilience both at the endpoint level and at the application level as a whole. Large-scale distributed systems, regardless of their architecture, have one defining characteristic: there are many possibilities for small, local drops to become catastrophic for the entire system. Therefore, the service mesh should be designed to provide protection against such problems, reducing the load and quickly falling when the underlying systems reach their limits.

Why is a service mesh needed?

Service mesh is, of course, not providing new functionality, but rather a shift in where this functionality is placed. Web applications have always been forced to drag the burden of interaction between services. The origin of the service mesh model can be traced to the evolution of these applications over the past 15 years.

Imagine a typical mid-sized web application architecture in the 2000s: these are 3 tiers. In this model, application logic, content delivery logic, and storage logic are separate layers. The interaction between these levels is complex, but limited in scope - after all, there are only two transit sections. There is no “mesh” (ie mesh) - only the interaction of logic between transit sections, carried out in the code of each layer.

When this architectural approach reached a very large scale, it began to break. Companies such as Google, Netflix, and Twitter are faced with the need to service large traffic, the implementation of which was the forerunner of the cloud (cloud native) approach: the application layer was divided into many services (sometimes called microservices), and the levels became the topology. In these systems, a generic layer for interaction quickly became a necessity, but usually took the form of a “thick client” library: Finagle from Twitter, Hystrix from Netflix, Stubby from Google.

In many ways, these libraries (Finagle, Stubby, Hystrix) were the first “service grids”. Although they were sharpened to work in their specific environment and required the use of specific languages and frameworks, they already constituted a dedicated infrastructure for managing the interaction between services and (in the case of Finagle and Hystrix Open Source libraries) were used not only in the companies that developed them .

There was a rapid movement towards modern applications designed to run in the clouds. The cloud native model combines a microservice approach of many small services with two additional factors:

- Containers (such as Docker) that provide resource isolation and dependency management

- An orchestration layer (e.g. Kubernetes) that abstracts from the hardware and offers a single pool.

These three components allow applications to naturally adapt to scale under load and handle always present partial glitches in the cloud. But with hundreds of services and thousands of instances, and even an orchestration layer for planning the launch of instances, the path of a single request following the service topology can be incredibly complicated, and since the containers simplified the ability to create services in any different languages, the library approach has ceased to be practical.

The combination of complexity and critical importance led to the need for a dedicated layer for interaction between services, separated from the application code and able to cope with the very dynamic nature of the underlying environment. This layer is the service mesh.

Future service mesh

While there is a rapid growth in the adaptation of service mesh in the cloud ecosystem, an extensive and exciting roadmap [for further development of this concept and its implementations - approx. perev.]has yet to open. The requirements for computing without servers (for example, Amazon Lambda) fit perfectly into the service mesh model by name and binding [components], which forms a natural extension of its application in the cloud ecosystem. The roles of certifying services and access policies are still very young in cloud environments, and here the service mesh is well suited to become a fundamental part of this need. Finally, the service mesh, like TCP / IP before, will continue to penetrate the underlying infrastructure. As Linkerd evolved from systems like Finagle, the current implementation of the service mesh as a separate proxy in user space that can be added to the cloud stack will continue its evolution.

Conclusion

Service mesh is a critical component of the cloud native category stack. Linkerd, appearing a little over 1 year ago, is part of the Cloud Native Computing Foundation and has gained a growing community of contributors and users. Its users are diverse: from startups like Monzo, destroying the UK banking industry [it's a fully digital bank, offering developers APIs for accessing financial data - approx. trans.] , to large-scale Internet companies like PayPal, Ticketmaster and Credit Karma, as well as companies with hundreds of years of business history like Houghton Mifflin Harcourt.

The Linkerd Open Source Community of Users and Contributors daily demonstrates the value of the service mesh model. We are committed to creating a great product and the continued growth of our community.

PS The author of the article is William Morgan, one of the founders of Linkerd in 2015, the founder and CEO of Buoyant Inc (the development company that transferred Linkerd to CNCF).

UPDATED (02.20.2018): also read on our blog an overview of Buoyant's new product - “ Conduit - a lightweight service mesh for Kubernetes ”.