How Discord Stores Billions of Messages

- Transfer

Discord continues to grow faster than we expected, as does custom content. The more users, the more chat messages. In July, we announced 40 million messages a day , in December we announced 100 million , and in mid-January we crossed 120 million. We immediately decided to keep the chat history forever, so that users can return at any time and access their data from any devices. This is a lot of data, the flow and volume of which is growing, and all of them should be accessible. How do we do this? Cassandra!

What did we do

The original version of Discord was written faster than two months in early 2015. Perhaps one of the best DBMS for fast iteration is MongoDB. Everything in Discord was specially stored in a single replica set of MongoDB, but we also prepared everything for simple migration to a new DBMS (we knew that we were not going to use MongoDB sharding because of its complexity and unknown stability). In fact, it is part of our corporate culture: develop quickly to experience a new product feature, but always with a focus on a more reliable solution.

Messages were stored in the MongoDB collection with a single composite index on

channel_idandcreated_at. Around November 2015, we reached the line of 100 million messages in the database, and then we began to understand the problems that await us: data and the index no longer fit in RAM, and delays become unpredictable. It's time to migrate to a more appropriate DBMS.Choosing the Right DBMS

Before choosing a new DBMS, we needed to understand the existing read / write patterns and why there were problems with the current solution.

- It quickly became clear that the read operations were extremely random, and the read / write ratios were approximately 50/50.

- Heavy Discord voice chat servers almost never sent messages. That is, they sent one or two messages every few days. In a year, a server of this type is unlikely to reach the milestone of 1000 messages. The problem is that even despite such a small number of messages, this data is more difficult to deliver to users. Just returning 50 messages to the user can lead to many random disk searches, which forces the disk cache out.

- Heavy Discord private text chat servers send a decent amount of messages, easily falling between 100,000 and 1 million messages per year. They usually request only the most recent data. The problem is that these servers usually have less than 100 participants, so the data request speed is low and they are unlikely to be in the disk cache.

- Large public Discord servers send a lot of messages. There are thousands of participants sending thousands of messages per day. Millions of messages per year are easily typed. They almost always request messages sent in the last hour, and this happens often. Therefore, data is usually in the disk cache.

- We knew that in the coming year, users will have even more ways to generate random readings: this is the ability to view their mentions in the last 30 days and then skip at that moment in history, view and go to attached messages and search in full text. All this means even more random readings!

Then we determined our requirements:

- Linear scalability - We don’t want to revise the solution later or manually transfer data to another shard.

- Auto Resiliency - We like to sleep at night and make Discord as self-healing as possible.

- A little support - It should work as soon as we install it. We are only required to add more nodes as the data grows.

- Proven in work - We love to try new technologies, but not too new.

- Predictable performance - Messages will be sent to us if the response time of the API in 95% of cases exceeds 80 ms. We also do not want to face the need to cache messages in Redis or Memcached.

- Not blob storage - Recording thousands of messages per second will not work fine if we have to continuously deserialize blobs and attach data to them.

- Open source - We believe that we control our own destiny, and do not want to depend on a third-party company.

Cassandra was the only DBMS that met all of our requirements. We can simply add nodes when scaling, and it copes with the loss of nodes without any effect on the application. Large companies like Netflix and Apple have thousands of Cassandra nodes. Related data is stored nearby on the disk, providing a minimum of search operations and easy distribution across the cluster. It is supported by DataStax, but is distributed open source and community powered.

Having made a choice, it was necessary to prove that he was really justified.

Data modeling

The best way to describe a newcomer to Cassandra is the abbreviation KKV. The two letters “K” contain the primary key. The first “K” is the partition key. It helps to determine in which node the data lives and where to find it on the disk. There are many lines inside the section, and the second “K” defines the specific line inside the section - the clustering key. It works as the primary key inside the section and determines how the rows are sorted. You can imagine the section as an ordered dictionary. All these qualities combined allow a very powerful data modeling.

Remember that messages in MongoDB were indexed using

channel_idand created_at? channel_idbecame the key of the section, since all messages work in the channel, butcreated_atdoes not give a good clustering key, because two messages can be created at the same time. Fortunately, every Discord ID is actually created in Snowflake , i.e. chronologically sorted. So it was possible to use them. The primary key has turned into (channel_id, message_id), where message_idis Snowflake. This means that when loading a channel, we can tell Cassandra the exact range where to look for messages. Here is a simplified diagram for our message table (it skips about 10 columns).

CREATE TABLE messages (

channel_id bigint,

message_id bigint,

author_id bigint,

content text,

PRIMARY KEY (channel_id, message_id)

) WITH CLUSTERING ORDER BY (message_id DESC);Although Cassandra’s schemas are similar to relational database schemas, they are easy to modify, which does not have any temporary performance impact. We took the best from blob storage and relational storage.

As soon as the import of existing messages into Cassandra began, we immediately saw in the warning logs that partitions larger than 100 MB were found. Yah?! After all, Cassandra declares support for 2 GB partitions!Apparently, the very possibility does not mean that it should be done. Large sections place a heavy load on the garbage collector in Cassandra when compacting, expanding a cluster, etc. The presence of a large partition also means that the data in it cannot be distributed across the cluster. It became clear that we would have to somehow limit the size of partitions, because some Discord channels can exist for years and constantly increase in size.

We decided to distribute our messages in buckets over time. We looked at the largest channels in Discord and determined that if you store messages in blocks of about 10 days, we will comfortably invest in a limit of 100 MB. Blocks need to be obtained from

message_idor timestamps.DISCORD_EPOCH = 1420070400000

BUCKET_SIZE = 1000 * 60 * 60 * 24 * 10

def make_bucket(snowflake):

if snowflake is None:

timestamp = int(time.time() * 1000) - DISCORD_EPOCH

else:

# When a Snowflake is created it contains the number of

# seconds since the DISCORD_EPOCH.

timestamp = snowflake_id >> 22

return int(timestamp / BUCKET_SIZE)

def make_buckets(start_id, end_id=None):

return range(make_bucket(start_id), make_bucket(end_id) + 1)Cassandra partition keys can be composite, so it has become our new primary key

((channel_id, bucket), message_id).CREATE TABLE messages (

channel_id bigint,

bucket int,

message_id bigint,

author_id bigint,

content text,

PRIMARY KEY ((channel_id, bucket), message_id)

) WITH CLUSTERING ORDER BY (message_id DESC);To request recent messages in the channel, we generated a range of blocks from the current time to

channel_id(it is also chronologically sorted as Snowflake and must be older than the first message). Then we sequentially poll the sections until we collect enough messages. The flip side of this method is that occasionally, active Discord instances will have to poll many different blocks in order to collect enough messages over time. In practice, it turned out that everything is in order, because for the active instance of Discord there are usually enough messages in the first section, and most of them are. The import of messages into Cassandra went smoothly, and we were ready to test it in production.

Heavy launch

Putting a new system into production is always scary, so it’s a good idea to test it without affecting users. We configured the system to duplicate read / write operations in MongoDB and Cassandra.

Immediately after launch, errors appeared in the bug tracker, which

author_idis zero. How can it be zero? This is a required field!Consensus ultimately

Cassandra is an AP type system , that is, guaranteed integrity is sacrificed here for accessibility, which is what we wanted in general. In Cassandra, reading before writing is contraindicated (read operations are more expensive) and therefore all that Cassandra does is update and insert (upsert), even if only certain columns are provided. You can also write to any node, and it will automatically resolve conflicts using the semantics “last record wins” for each column. So how did this affect us?

Example race condition edit / delete

In case the user edited the message while another user deleted the same message, we had a line with completely missing data, except for the primary key and text, because Cassandra records only updates and inserts. There are two possible solutions to this problem:

- Write back the whole message while editing the message. Then there is the possibility of resurrecting deleted messages and the odds of conflict are added for simultaneous entries in other columns.

- Identify a damaged message and remove it from the database.

We chose the second option by defining the required column (in this case

author_id) and deleting the message if it is empty. Solving this problem, we noticed that we were quite ineffective with write operations. Since Cassandra is ultimately agreed upon, it cannot take and immediately delete data like this. She needs to replicate deletions to other nodes, and this should be done even if the nodes are temporarily unavailable. Cassandra does this by equating the deletion to a peculiar recording form called “tombstone”. During the reading operation, she simply slips through the “tombstones” that occur along the way. The life time of the “tombstones” is adjusted (by default, 10 days), and they are permanently deleted during the base compaction, if the deadline is over.

Deleting a column and writing zero to a column are exactly the same thing. In both cases, a “tombstone” is created. Since all entries in Cassandra are updates and inserts, then you create a “gravestone” even if you initially write zero. In practice, our complete communication scheme consisted of 16 columns, but the average message had only 4 set values. We recorded 12 “tombstones” in Cassandra, usually for no reason. The solution to the problem was simple: only write non-zero values to the database.

Performance

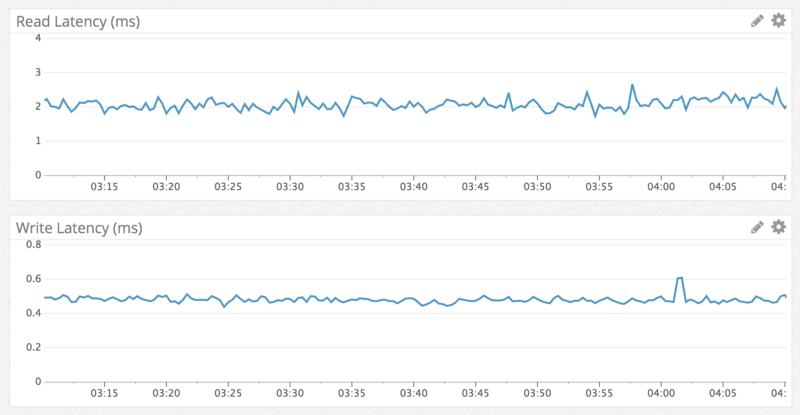

Cassandra is known to perform write operations rather than reads, and we observed exactly that. Write operations occurred in the interval of less than a millisecond, and read operations occurred in less than 5 milliseconds. Such indicators were observed regardless of the type of data accessed. Performance remained unchanged for a week of testing. No wonder, we got exactly what we expected.

Read / write delay, according to the log data.

In accordance with the fast, reliable read performance, here is an example of a transition to a message a year ago in a channel with millions of messages:

Big surprise

Everything went smoothly, so we rolled out Cassandra as our main database and disabled MongoDB for a week. She continued to work flawlessly ... for about 6 months, until one day she stopped responding.

We noticed that Cassandra stops continuously for 10 seconds during garbage collection, but we could not understand at all why. They started digging and found the Discord channel, which took 20 seconds to load. The culprit was the public Discord server of the Puzzles & Dragons subreddit . Since it is public, we joined to watch. To our surprise, there was only one message on the channel. At that moment, it became obvious that they deleted millions of messages through our APIs, leaving only one message on the channel.

If you read carefully, remember how Cassandra handles deletions using “tombstones” (mentioned in the chapter “Consistency in the long run”). When a user downloads this channel, at least one message is there, Cassandra has to effectively scan millions of “tombstones” of messages. Then it generates garbage faster than the JVM can collect it.

We solved this problem as follows:

- They reduced the life time of tombstones from 10 days to 2 days, because every evening we launch the repair of Cassandra (anti-entropy process) on our message cluster.

- We changed the request code to track empty blocks on the channel and avoid them in the future. This means that if the user again initiated this request, then in the worst case, Cassandra will only scan the most recent block.

Future

We currently have a cluster of 12 nodes with a replication factor of 3, and we will continue to add new Cassandra nodes as needed. We believe that this approach works in the long run, but as Discord grows, a distant future is seen when you have to save billions of messages per day. Netflix and Apple have clusters with hundreds of nodes, so for now we have nothing to worry about. However, I want to have a couple of ideas in reserve.

Near future

- Upgrade our message cluster from Cassandra 2 to Cassandra 3. The new storage format in Cassandra 3 can reduce storage by more than 50%.

- Newer versions of Cassandra are better at handling more data in each node. We currently store approximately 1 TB of compressed data in each of them. We think that it is safe to reduce the number of nodes in a cluster by increasing this limit to 2 TB.

Distant future

- Explore Scylla is a Cassandra-compatible DBMS written in C ++. In normal operation, our Cassandra nodes actually consume a few CPU resources, however, during off-peak hours during the repair of Cassandra (anti-entropy process), they are quite dependent on the CPU, and the repair time increases depending on the amount of data recorded since the last repair. Scylla promises to significantly increase the speed of repair.

- Create a system for archiving unused channels in Google Cloud Storage and downloading them back on demand. We want to avoid this and do not think that we will have to do this.

Conclusion

More than a year has passed since the transition to Cassandra, and despite the “big surprise” , it was a calm swim. We went from over 100 million total messages to over 120 million messages per day, while maintaining productivity and stability.

Due to the success of this project, we have since transferred all our other data in production to Cassandra, and also successfully.

In the continuation of this article, we will explore how we perform a full-text search on billions of messages.

We still do not have specialized DevOps engineers (only four backend engineers), so it’s very cool to have a system that you don’t have to worry about. We are recruiting employees, so contactif such puzzles tickle your imagination.