Rendering and optimization in VR development

- Translation

This article is the second in a series on developing high-quality VR projects. Earlier, we covered the principles of working with the camera in the article “VR-Design: a look into the virtual world”. Links to the other materials can be found in the review article.

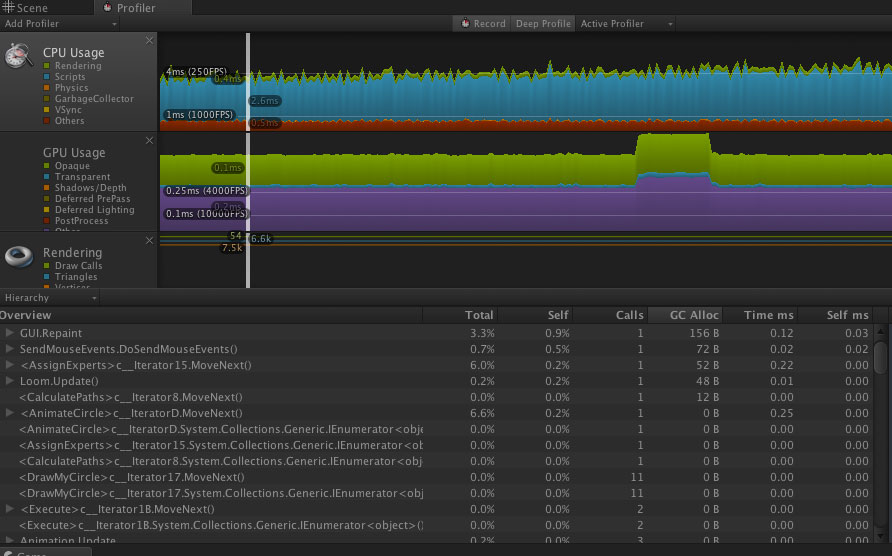

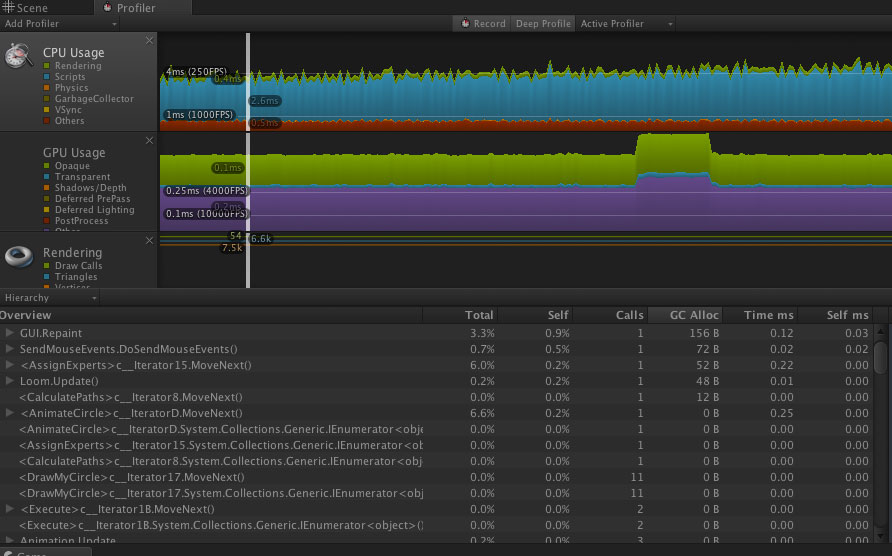

Creating virtual reality requires a complex interplay of hardware and software, and each individual component plays an important role.

Today we will look at the software in more detail and see what happens at its various levels. Understanding these basics gives you the knowledge you need to optimize your projects properly.

In particular, we want to expand your knowledge of rendering and optimizing VR applications so that it is easier for you to develop high-quality VR projects. You need to know, for example, how the camera affects development.

90 frames per second

It is crucial that we sustain 90 frames per second (FPS for short). Reaching a stable frame rate was never easy in classic games, and in VR the problem is even more acute, since the visible area must be rendered twice: once for each eye, each with its own perspective. 3D engines already include optimizations for this double rendering, but they cannot fully offset the additional load.
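To make the 90 FPS target more tangible, it helps to convert it into a time budget per frame. The following is a minimal sketch in Python; the function names are illustrative and do not belong to any engine or SDK.

```python
def frame_budget_ms(target_fps: float) -> float:
    """Return the time available to produce one frame, in milliseconds."""
    if target_fps <= 0:
        raise ValueError("target_fps must be positive")
    return 1000.0 / target_fps


def meets_target(frame_time_ms: float, target_fps: float = 90.0) -> bool:
    """Check whether a measured frame time fits into the budget for target_fps."""
    return frame_time_ms <= frame_budget_ms(target_fps)


if __name__ == "__main__":
    # At 90 FPS, the whole frame (both eye views included) has to fit
    # into roughly 11.1 ms; at 60 FPS there would be about 16.7 ms.
    print(f"Budget at 90 FPS: {frame_budget_ms(90):.1f} ms")
    print(f"Budget at 60 FPS: {frame_budget_ms(60):.1f} ms")
```

Thinking in milliseconds per frame rather than in FPS makes it easier to judge how much a single effect or model costs.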

You really, really have to watch framerate.

You don't wanna make yourself sick.

- Dylan Fitterer, developer of Audioshield

You may already have come across the concept of motion-to-photon latency. It describes the time that elapses between a change in position and the corresponding update of the image in the VR headset. Ideally, this time span should be less than 30 milliseconds.
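As a rough illustration, motion-to-photon latency can be thought of as the sum of the stages between a movement and the updated image. The sketch below assumes such a breakdown; the stage names and durations are hypothetical placeholders, not measurements, and only the 30 ms target comes from the text above.

```python
MOTION_TO_PHOTON_TARGET_MS = 30.0  # target named in this article


def motion_to_photon_ms(stage_times_ms: dict) -> float:
    """Add up the durations of all stages between movement and displayed image."""
    return sum(stage_times_ms.values())


def within_target(stage_times_ms: dict,
                  target_ms: float = MOTION_TO_PHOTON_TARGET_MS) -> bool:
    """Return True if the summed latency stays below the target."""
    return motion_to_photon_ms(stage_times_ms) < target_ms


if __name__ == "__main__":
    # Hypothetical stage durations in milliseconds, for illustration only.
    hypothetical_stages = {
        "tracking": 2.0,
        "simulation": 4.0,
        "rendering": 11.0,
        "display": 8.0,
    }
    total = motion_to_photon_ms(hypothetical_stages)
    print(f"Total latency: {total:.1f} ms, "
          f"within target: {within_target(hypothetical_stages)}")
```

The point of the breakdown is that rendering is only one contributor: even a frame that fits its budget can miss the latency target if the other stages take too long.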

As a rule, the most common engines and SDKs spare the developer from having to think about this. They take on this difficult job and adapt our 3D scenes to the particular device, lens position and lens curvature.

In addition, using an engine means we automatically benefit from new rendering optimizations. For example, the Unity developers recently introduced an extensive optimization update that moves part of the work from the CPU to the GPU (from the processor to the graphics card), which reduces the load on the CPU.

The difficulties that arise at this level of rendering are beyond the scope of this article. If you want to dig into the topic seriously, I recommend Alex Vlachos's talk "Advanced VR Rendering".

Also, go for quantity.

Try a lot of different things, new concepts.

- Dylan Fitterer, developer of Audioshield

As long as we keep an eye on our FPS and it stays high enough, we are on the right track. To achieve this, you need to pay attention to a few points during development.

Useful tools

When developing, you should not rely on your own impressions: there are very useful tools for measuring the performance of VR applications.

NVIDIA's Nsight tool works closely with the graphics card and is popular among engine developers. In practice, however, the tools built into Unity or Unreal Engine are enough to monitor performance accurately.

The Unity 3D Profiler provides important performance information about your VR application.

Ideally, you should also test your VR projects on a computer that just meets the minimum requirements of the target device. That way you can be sure that you see the VR application the same way your users will later see it.
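To illustrate measuring instead of guessing, here is a small sketch that summarizes a list of frame times. It assumes you have already obtained per-frame durations in milliseconds from some profiling tool; the function name and the shape of the result are made up for this example and are not part of Unity, Unreal Engine or Nsight.

```python
from statistics import mean


def summarize_frame_times(frame_times_ms, target_fps=90.0):
    """Summarize measured frame times against the budget of a target frame rate."""
    if not frame_times_ms:
        raise ValueError("frame_times_ms must not be empty")
    budget_ms = 1000.0 / target_fps
    # Frames that took longer than the budget would have missed the target.
    missed = [t for t in frame_times_ms if t > budget_ms]
    return {
        "average_fps": 1000.0 / mean(frame_times_ms),
        "worst_frame_ms": max(frame_times_ms),
        "missed_frames": len(missed),
        "missed_ratio": len(missed) / len(frame_times_ms),
    }


if __name__ == "__main__":
    # Hypothetical sample: three fast frames and one slow one.
    sample = [10.0, 10.0, 10.0, 20.0]
    for key, value in summarize_frame_times(sample).items():
        print(f"{key}: {value}")
```

Looking at the worst frame and the share of missed frames, rather than only at the average, helps to catch the short stutters that are most noticeable in a headset.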

Focus on animation, sound and interaction

On the technical side, there are specific things we can optimize, but these are all special cases that depend on the context.

However, we can draw inspiration from mobile game optimization methods. Mobile development faces similar performance problems, especially at high resolutions (for example, on the iPad Retina).
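The comparison with high-resolution mobile devices can be made concrete by counting how many pixels have to be produced per second. The figures in this sketch are assumptions chosen for illustration: 2048×1536 at 60 Hz for a Retina iPad, and 2160×1200 at 90 Hz as the combined panel resolution of a typical first-generation PC headset.

```python
def pixels_per_second(width: int, height: int, refresh_hz: int) -> int:
    """Number of pixels that must be produced every second at a given resolution."""
    return width * height * refresh_hz


if __name__ == "__main__":
    # Assumed example figures, see the note above.
    tablet = pixels_per_second(2048, 1536, 60)
    headset = pixels_per_second(2160, 1200, 90)
    print(f"Tablet:  {tablet:,} pixels per second")
    print(f"Headset: {headset:,} pixels per second")
```

Under these assumptions both cases are of the same order of magnitude, which is why techniques from mobile optimization carry over well.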

We have tremendous potential to improve our VR applications. In visual terms, our virtual worlds do not need to compete with classic games in their level of detail.

In VR, the visuals recede into the background because entirely different elements matter. We can therefore simplify our 3D models and effects and thus reduce the load.

The world of a VR project stands on three pillars: animation, sound, and interaction. You should therefore focus your time and inspiration on precisely these aspects.

It is better to simplify the graphics heavily and invest the time and performance you save in lively animation and fitting sound, which complement the scene and make it more vivid. Do not forget interactivity either: it is what will interest and entertain the user.

A great example of this approach to VR development is the game “Job Simulator”. It shows that excellent visuals are not necessary in VR projects, and that the combination of lively animation and simple graphics looks completely believable.

Help for you:

In the next article, we will look at how important the correct implementation of sound effects is in VR projects, and why simulating 3D sound still relies on trickery.

If your VR project exceeds the limits of acceptable performance and you are looking for help with optimization, you can contact me.

As already mentioned, which optimization methods are appropriate depends on the context and the project. And it is often not easy to tell which component of a project is responsible for degrading performance.

Next, we will study the principles of working with sound in the article “Sound Effects in Virtual Worlds”.