Draft of Andrew Ng's book “Machine Learning Yearning”, chapters 1-7

- Translation

Last December, American data science colleagues were abuzz in their correspondence about the long-awaited draft of a new book by machine learning guru Andrew Ng, “Machine Learning Yearning: Technical Strategy for AI Engineers, In the Era of Deep Learning.” It was long-awaited because the book was announced back in the summer of 2016, and now, at last, several chapters have appeared.

I present to the Habr community a translation of the first seven of the fourteen chapters currently available. Note that this is a draft, not the final version of the book, and it contains a number of inaccuracies. Andrew Ng invites readers to send him comments here. The author starts with things that may seem obvious; more complex concepts are expected in later chapters.

Machine Learning (ML) is the foundation of an infinite number of important products and services, including Internet search, anti-spam, speech recognition, recommendation systems and so on. I assume that you and your team are working on products with machine learning algorithms, and you want to make rapid progress in development. This book will help you with this.

Let's say you create a startup that will provide a continuous stream of cat photos for cat lovers. You use a neural network to create a computer vision system to detect cats in photos taken with smartphones.

But, unfortunately, the accuracy of your algorithm is not high enough. You are under tremendous pressure, you must improve the “cat detector”. What to do?

Your team has a ton of ideas, for example:

• Collect more data: take more images of cats.

• Collect a more diverse training sample: for example, cats in unusual poses, cats of a rare color, photographs taken with different camera settings, ...

• Train the model for longer, use more iterations of the gradient descent.

• Try a larger neural network: more layers / links / parameters.

• Experiment with a smaller network.

• Add regularization, for example, L2.

• Change the network architecture: activation function, number of hidden layers, etc.

• ...

If the choice among the possible alternatives is successful, then it will be possible to create a leading system for searching photos of cats, and this will lead the company to success. If the choice is unsuccessful, then you will lose months. How to act in such a situation?

This book will tell you how. Most machine learning tasks leave key clues that say what is good to try and what is not. Being able to see such tips can save months and years of development time.

After reading this book, you will know how to set development directions in a project with machine learning algorithms. However, your colleagues may not understand why you have chosen this or that direction. You might want your team to define a quality metric as a single numerical parameter, but colleagues disagree with using only one indicator. How to convince them? That's why I made the chapters in this book short: just print and let colleagues read 1-2 pages of key information that they find useful.

Minor changes in the priority of tasks can have a huge effect on team performance. I hope you can become a superhero if you help your team make such changes.

If you studied the “Machine Learning” course at Coursera or have experience using supervised learning algorithms, then this is enough to understand the text of the book. I assume that you are familiar with teaching methods with a teacher: finding a function that, by values of x, allows you to get y for all x, having a labeled training sample for some (x, y). Algorithms of this kind include, for example, linear regression, logistic regression, and neural networks. There are many areas of machine learning, but most of the practical results have been achieved using teacher learning algorithms.

I will often talk about neural networks (NN) and deep learning. You only need a general understanding of their concepts. If you are not familiar with the concepts mentioned above, then first watch a video of the lectures on the Machine Learning course on Coursera.

Many deep learning / neural network ideas have been in the air for many years. Why right now we started to apply them? Two factors made the greatest contribution to the progress of recent years:

• Data availability: now people constantly use digital devices (laptops, smartphones, etc.), their digital activity generates huge amounts of data that we can “feed” our algorithms.

• Computing power: only a few years ago it became possible to mass train large enough neural networks to take advantage of the huge data sets that are available now.

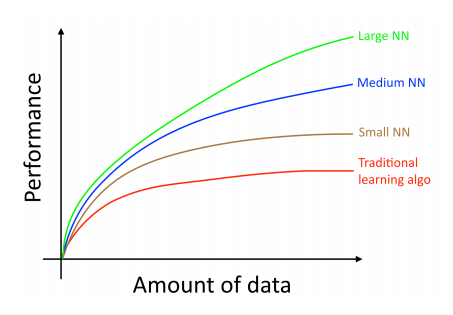

Usually, the quality indicator of “old” algorithms, such as logistic regression, reaches a constant level, even if you have accumulated more data for training, i.e. from a certain limit, the quality of the algorithm ceases to improve with an increase in the training sample. If you train a small neural network in the same task, you can get the result a little better. Here, by a “small” neural network, I mean a network with a small number of hidden layers, links, and parameters. In the end, if you train a larger and larger network, you can get even better results.

Author's note: This graph shows that NN works better than traditional ML algorithms, including in the case of a small amount of training data. This is less correct than for a huge amount of data. For small samples, traditional algorithms may be better or worse depending on features designed by hand. For example, if you have only 20 samples / use cases for training, it doesn’t matter much whether you use logistic regression or a neural network, a set of signs will give a much greater effect compared to the choice of algorithm. But if you have a million samples for training, then I prefer to use a neural network.

Thus, the best results are achieved:

1. when a very large neural network is trained (so as to be on the green curve in the figure);

2. When a huge amount of data is used for training.

Many other details are also important, such as network architecture, where many innovations constantly appear. However, one of the most reliable ways to improve the quality of the algorithm remains (1) to train a large NN and (2) to use more data for training. In practice, following paragraphs (1) and (2) is extremely difficult. This book discusses the details of this process. We will start with general approaches that are useful for both traditional ML algorithms and NN, then we will select the most effective strategies for building deep learning systems.

Translator's Note: Andrew Eun uses the term “development set” to refer to the sample on which model parameters are set. Currently, this sample is often called the “validation set” - a validation sample. Indeed, the term “validation” does not correspond to the variety of tasks for which this sample is applied, and the name “development set” is more consistent with the status quo. However, I could not find a good translation for the “development set”, so I write “development selection” or “development selection”. It might be wise to call this sample “tuning” (thanks kfmn ).

Let's go back to the example with photos of cats. So, you are developing a mobile application, users upload various photos to your application, which automatically finds photos of cats among them. Your team created a large data set by downloading photos of cats (positive samples) and “non-cats” (negative samples) from websites. Then this data set was divided into training (70%) and test (30%) samples. Using the sampling data, a “cat detector” was created, which worked well for objects from the test and training samples. But when this classifier was implemented in a mobile application, you found that the quality of its work is really low! What happened?

You have found that the photos that users upload to your application look different than the images from the websites that make up the training set: users upload photos taken with smartphones, these images have different resolutions, are less clear, and the scene is not perfectly lit. Since the training and test samples were composed of images from web sites, your algorithm is not generalized to the actual distribution of data, for which the algorithm is developed, i.e. not summarized in photos from smartphones.

Before the era of “big data”, the general rule in machine learning was to randomly split a 70% / 30% data set into training and test samples. This approach may work, but it’s a bad idea for more and more tasks, where the distribution of data in the training set differs from the distribution of data for which the problem is ultimately solved.

Usually we define three samples:

• Training, which runs the learning algorithm;

• For development (development set), which is used to configure parameters, select features and make other decisions regarding the learning algorithm, sometimes such a sample is called a hold-out cross validation set;

• Test, on which they evaluate the quality of the algorithm, but on its basis do not make any decisions about which training algorithm or parameters to use.

As soon as you make a test sample and a sample for development, your team will begin to try many ideas, for example, various parameters of the training algorithm, to see what works better. These samples allow you to quickly evaluate how well the model works. In other words, the goal of test and development (development) samples is to direct your team towards the most important changes in the development of machine learning. Thus, you should do the following: create working and test samples so that they match the data that you expect to receive in the future, and on which your system should work well. In other words, your test sample should not just include 30% of the available data, especially if in the future you expect data (photos from smartphones),

If you have not yet begun distributing your mobile application, then you have no users, and there is no way to collect exactly the data that you expect in the future. But you can try to approximate them. For example, ask your friends and acquaintances to take photos with mobile phones and send them to you. Once you publish the mobile application, you can update the working (development) and test samples with real user data.

If there is no way to obtain data that approximates the expected in the future, then perhaps you can start working with images from web sites. But you must clearly understand that it is a risk to create a system that has a low generalizing ability for this task.

It is necessary to evaluate how much time and effort you are willing to invest in creating two powerful samples: for development and test. Do not make blind assumptions that the distribution of data in the training set is exactly the same as in the test set. Try to pick up test cases that reflect what you should ultimately work well on, and not any data that you are fortunate enough to have for training.

Photos of the “cat” mobile application are segmented into four regions that correspond to the largest application markets: USA, China, India and the rest of the world. You can create a working selection from the data of two randomly selected segments, and put the data of the two remaining segments into the test selection. Right? No mistake! Once you define these two samples, the team will focus on improving quality for the working sample. Therefore, this sample should reflect the whole problem that you are solving, and not part of it: you need to work well in all markets, and not just two.

There is another problem in the mismatch of data distributions in the working and test samples: it is likely that your team will create something that works well on the development sample, and then make sure that it does not work well on the test sample. As a result, there will be many disappointments and wasted efforts. Avoid this.

Suppose your team has developed a system that works well on a sample for development, but not on a test one. If both samples are obtained from the same source / distribution, then there is a fairly clear diagnosis of what went wrong: you overfit on the working sample. The obvious cure: increase the amount of data in the working sample. But if these two samples do not match, then there are many things that could “go wrong.” In general, it is quite difficult to work on applications with machine learning algorithms. The discrepancy between the working and test samples introduces additional uncertainty to the question: will the improvement in work in the working sample lead to improvements in the test. Having such a mismatch, it is difficult to understand what does not work, it is difficult to prioritize: what should be tried first.

If you are working on a task when the samples are provided by a third-party company / organization, then the working and test samples may have different distributions, and you cannot influence this situation. In this case, luck rather than skill will have a greater impact on the quality of the model. Training a model on data having one distribution for processing data (also with high generalizing ability) having another distribution is an important research problem. But if your goal is to obtain a practical result, and not research, then I recommend using data from the same source and equally distributed for the working and test samples.

The working sample should be large enough to detect the difference between the algorithms you are trying. For example, if classifier A gives an accuracy of 90.0%, and classifier B gives an accuracy of 90.1%, then a working sample of 100 samples will not allow you to see a change of 0.1%. In general, the working range of 100 samples is too small. Common practice is to sample from 1,000 to 10,000 samples. With 10,000 samples, you have a chance to see an improvement of 0.1%.

Author’s note: theoretically, statistical tests can be performed to determine whether changes in the algorithm have led to significant changes in the working sample, but in practice most teams don’t bother with this (unless they write a scientific article), I also don’t find such tests useful for evaluating intermediate progress.

For developed and important systems, such as advertising, search, recommendation systems, I saw teams that sought to achieve improvements of even 0.01%, since this directly affected the profit of their companies. In this case, the working sample must be much larger than 10,000 in order to be able to determine the slightest change.

What about the size of the test sample? It should be large enough to evaluate with high confidence the quality of the system. One popular heuristic was to use 30% of the available data for a test sample. This works quite well when you have a very small amount of data, say from 100 to 10,000 samples. But in the era of “big data”, when there are tasks with more than a million samples for training, the share of data in the working and test samples decreases, even when the absolute size of these samples increases. There is no need to have working and test samples much larger than the volume that allows you to evaluate the quality of your algorithms.

I present to the attention of the Habra community a translation of the first seven chapters of the fourteen currently available. I note that this is not the final version of the book, but a draft. It has a number of inaccuracies. Andrew Eun suggests writing his comments and comments here . The author begins with things that seem obvious. Further more complex concepts are expected.

1. Why machine learning strategy?

Machine learning (ML) is the foundation of countless important applications, including web search, email anti-spam, speech recognition, recommendation systems, and more. I assume that you and your team are working on products that use machine learning, and that you want to make rapid progress. This book will help you do so.

Suppose you are building a startup that will provide an endless stream of cat photos to cat lovers. You use a neural network to build a computer vision system that detects cats in photos taken with smartphones.

Unfortunately, your algorithm's accuracy is not yet good enough. You are under tremendous pressure to improve your “cat detector”. What do you do?

Your team has a lot of ideas, for example:

• Get more data: collect more pictures of cats.

• Collect a more diverse training set: for example, cats in unusual poses, cats with unusual coloring, photos taken with a variety of camera settings, ...

• Train the algorithm longer, running more iterations of gradient descent.

• Try a bigger neural network: more layers / hidden units / parameters.

• Try a smaller neural network.

• Try adding regularization, for example L2 regularization.

• Change the network architecture: activation function, number of hidden layers, etc.

• ...

If you choose well among these possible directions, you will build the leading cat photo platform and lead your company to success. If you choose poorly, you may waste months. How do you proceed?

This book will tell you how. Most machine learning problems leave clues that tell you what is useful to try and what is not. Learning to read those clues can save you months or years of development time.

2. How can you use this book to help your team?

After reading this book, you will know how to set technical direction for a machine learning project. However, your teammates may not understand why you are recommending a particular direction. Perhaps you want your team to define a single-number evaluation metric, but your colleagues are not convinced that one number is enough. How do you persuade them? That is why I kept the chapters short: you can print them out and have your colleagues read just the 1-2 pages of key information they need.

Small changes in prioritization can have a huge effect on your team's productivity. By helping your team make such changes, I hope you can become its superhero.

3. What should you know?

If you have taken the Machine Learning course on Coursera or have experience applying supervised learning, that is enough to understand this book. I assume you are familiar with supervised learning: learning a function that maps x to y, given a labeled training set of (x, y) pairs. Supervised learning algorithms include linear regression, logistic regression, and neural networks. There are many forms of machine learning, but most of its practical results so far have come from supervised learning.

I will often refer to neural networks (NN) and deep learning. You only need a general understanding of these concepts. If you are not familiar with the concepts mentioned above, first watch the lecture videos of the Machine Learning course on Coursera.
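To make the supervised learning setup concrete, here is a minimal sketch of logistic regression trained by batch gradient descent on labeled (x, y) pairs. It is an illustration under simplifying assumptions: a single scalar feature, a toy hand-made dataset, and hypothetical function names such as `train_logistic_regression`. It is not code from the book and is not numerically hardened.

```python
import math
from typing import Sequence, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic_regression(xs: Sequence[float], ys: Sequence[int],
                              lr: float = 0.1,
                              epochs: int = 1000) -> Tuple[float, float]:
    """Fit P(y=1 | x) = sigmoid(w * x + b) by batch gradient descent on log loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):               # more epochs = "train longer"
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(w * x + b) - y  # d(log loss) / d(w * x + b)
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


def predict(w: float, b: float, x: float) -> int:
    """Classify x as 1 when the predicted probability is at least 0.5."""
    return 1 if sigmoid(w * x + b) >= 0.5 else 0


if __name__ == "__main__":
    # Toy labeled training set of (x, y) pairs: y = 1 when x > 0.
    xs = [-2.0, -1.5, -1.0, -0.5, 0.5, 1.0, 1.5, 2.0]
    ys = [0, 0, 0, 0, 1, 1, 1, 1]
    w, b = train_logistic_regression(xs, ys)
    print([predict(w, b, x) for x in xs])
```

The `epochs` parameter is the “train longer, run more iterations of gradient descent” knob from the list of ideas in chapter 1. A neural network replaces `w * x + b` with a deeper function, but the recipe of learning from labeled pairs stays the same.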

4. What is driving progress in machine learning?

Many of the ideas behind deep learning (neural networks) have been around for many years. Why are they taking off only now? Two factors have contributed most to recent progress:

• Data availability: people now spend much of their time on digital devices (laptops, smartphones, etc.), and their digital activity generates huge amounts of data that we can feed to our learning algorithms.

• Computational scale: only in the last few years has it become possible to routinely train neural networks large enough to take advantage of the huge datasets we now have.

Usually, the quality indicator of “old” algorithms, such as logistic regression, reaches a constant level, even if you have accumulated more data for training, i.e. from a certain limit, the quality of the algorithm ceases to improve with an increase in the training sample. If you train a small neural network in the same task, you can get the result a little better. Here, by a “small” neural network, I mean a network with a small number of hidden layers, links, and parameters. In the end, if you train a larger and larger network, you can get even better results.

Author's note: This graph shows NNs doing better than traditional ML algorithms in the small-data regime as well. That is less reliable than the result for huge amounts of data. With small training sets, traditional algorithms may do better or worse depending on how the features are engineered by hand. For example, if you have only 20 training examples, it does not matter much whether you use logistic regression or a neural network; the choice of hand-engineered features will have a much greater effect than the choice of algorithm. But if you have a million training examples, I would prefer a neural network.

Thus, you get the best results when:

1. you train a very large neural network (so as to be on the green curve in the figure);

2. you use a huge amount of data for training.

Many other details also matter, such as network architecture, where new innovations keep appearing. Still, one of the most reliable ways to improve an algorithm's performance remains (1) training a bigger neural network and (2) getting more training data. In practice, doing (1) and (2) is extremely difficult, and this book discusses that process in detail. We will start with general strategies that are useful for both traditional ML algorithms and neural networks, and then move on to the most effective strategies for building deep learning systems.

5. What should the development and test sets be?

Translator's note: Andrew Ng uses the term “development set” for the set on which model parameters are tuned. Today this set is often called the “validation set”. Indeed, the term “validation” does not reflect the variety of tasks this set is used for, and the name “development set” better matches current practice. However, I could not find a good translation for “development set”, so I write either “set for development” or “working set”. It might be wise to call it the “tuning” set (thanks to kfmn).

Let's return to the cat photo example. You are developing a mobile app: users upload all kinds of photos, and the app automatically finds the cat photos among them. Your team built a large dataset by downloading photos of cats (positive examples) and “non-cats” (negative examples) from websites, then split it 70%/30% into training and test sets. Using this data, they built a “cat detector” that worked well on both the training and test sets. But when you deployed the classifier in the mobile app, you found that its performance was really poor! What happened?

You discover that the photos users upload look different from the website images that make up your training set: users upload photos taken with smartphones, which have different resolutions, are blurrier, and are often poorly lit. Since your training and test sets were built from website images, your algorithm did not generalize to the distribution you actually care about: smartphone photos.

Before the era of big data, the common rule in machine learning was to randomly split the data 70%/30% into training and test sets. This practice can work, but it is a bad idea in more and more applications where the distribution of the training data differs from the distribution of the data the system must ultimately work on.

We usually define three sets:

• Training set: the data on which you run your learning algorithm.

• Development (dev) set: used to tune parameters, select features, and make other decisions about the learning algorithm. It is sometimes called the hold-out cross validation set.

• Test set: used to evaluate the algorithm's performance, but not to make any decisions about which learning algorithm or parameters to use.

Once you define a dev set and a test set, your team will try many ideas, such as different learning algorithm parameters, to see what works best. These sets let you quickly see how well the model is doing. In other words, the purpose of the dev and test sets is to direct your team toward the most important changes to make to the machine learning system. So you should do the following: choose dev and test sets that reflect the data you expect to get in the future and on which your system must work well. In other words, your test set should not simply be 30% of the available data, especially if you expect your future data (photos from smartphones) to differ from your training data (images from websites).
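As a minimal sketch of this advice, assuming you already have some smartphone photos, the split below keeps the web images for training and draws the dev and test sets only from smartphone photos. The function name `make_splits`, its arguments, the file names, and the choice to put leftover smartphone photos into the training set are illustrative assumptions, not something the book prescribes.

```python
import random
from typing import List, Sequence, Tuple


def make_splits(web_images: Sequence[str], phone_photos: Sequence[str],
                n_dev: int, n_test: int, seed: int = 0
                ) -> Tuple[List[str], List[str], List[str]]:
    """Hypothetical split for the cat app.

    Dev and test sets are drawn only from smartphone photos, the
    distribution the app must work on. Web images go to training,
    together with any smartphone photos left over (an assumption).
    """
    if n_dev + n_test > len(phone_photos):
        raise ValueError("not enough smartphone photos for the dev/test sizes")
    rng = random.Random(seed)  # fixed seed: the split is reproducible
    phones = list(phone_photos)
    rng.shuffle(phones)
    dev = phones[:n_dev]
    test = phones[n_dev:n_dev + n_test]
    train = list(web_images) + phones[n_dev + n_test:]
    return train, dev, test


if __name__ == "__main__":
    web = [f"web_{i}.jpg" for i in range(10_000)]
    phones = [f"phone_{i}.jpg" for i in range(3_000)]
    train, dev, test = make_splits(web, phones, n_dev=1_000, n_test=1_000)
    print(len(train), len(dev), len(test))  # 11000 1000 1000
```

The important property is that nothing from the website distribution leaks into the dev or test sets, so improving dev set performance means improving performance on the photos users will actually upload.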

If you have not launched your mobile app yet, you have no users, so you cannot collect exactly the data you expect in the future. But you can try to approximate it: for example, ask friends and acquaintances to take photos with their phones and send them to you. Once the app is launched, you can update the dev and test sets with real user data.

If there is no way to obtain data that approximates what you expect in the future, you could start with website images. But be clearly aware of the risk that this produces a system that generalizes poorly to the task you care about.

You need to judge how much time and effort to invest in building two good sets: dev and test. Do not blindly assume that the distribution of your training data is exactly the same as that of your test data. Try to pick test examples that reflect what you ultimately need to perform well on, rather than whatever data you happen to have for training.

6. Development and test sets should come from the same source and have the same distribution

Suppose the photos in your cat app are segmented into four regions corresponding to your largest markets: the USA, China, India, and the rest of the world. You could build the dev set from two randomly chosen segments and put the other two segments into the test set. Right? Wrong! Once you define these two sets, your team will focus on improving performance on the dev set. Therefore, the dev set must reflect the whole task you are solving, not part of it: you need to do well in all four markets, not just two.
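Instead of assigning whole segments to one set, one option is to split every region between the dev and test sets, so that both contain all four markets in the same proportions. The sketch below assumes each example is a hypothetical `(region, item)` pair and uses a per-region shuffle-then-cut. It is one possible implementation, not a procedure from the book.

```python
import random
from collections import defaultdict
from typing import Dict, Hashable, List, Sequence, Tuple

Example = Tuple[str, Hashable]  # hypothetical format: (region, item)


def split_dev_test_by_region(examples: Sequence[Example],
                             dev_fraction: float = 0.5,
                             seed: int = 0
                             ) -> Tuple[List[Example], List[Example]]:
    """Split each region separately so dev and test share the same region mix."""
    rng = random.Random(seed)
    by_region: Dict[str, List[Example]] = defaultdict(list)
    for ex in examples:
        by_region[ex[0]].append(ex)
    dev: List[Example] = []
    test: List[Example] = []
    for region in sorted(by_region):  # sorted: deterministic iteration order
        group = by_region[region]
        rng.shuffle(group)
        cut = round(len(group) * dev_fraction)
        dev.extend(group[:cut])
        test.extend(group[cut:])
    return dev, test


if __name__ == "__main__":
    markets = ["USA", "China", "India", "Other"]
    data = [(m, f"{m}_{i}") for m in markets for i in range(100)]
    dev, test = split_dev_test_by_region(data)
    for m in markets:
        print(m, sum(r == m for r, _ in dev), sum(r == m for r, _ in test))
```

A plain random shuffle of all examples would also give both sets the same distribution on average; splitting per region additionally guarantees that no market is under-represented by chance in a small dev set.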

Mismatched dev and test distributions cause another problem: your team may build something that works well on the dev set, only to find that it does poorly on the test set. This leads to a lot of frustration and wasted effort. Avoid it.

Suppose your team has built a system that works well on the dev set but not on the test set. If both sets come from the same source and distribution, the diagnosis is fairly clear: you have overfit the dev set. The obvious cure is to get more dev set data. But if the two sets come from different distributions, many different things could have gone wrong. Building machine learning applications is hard enough already; a mismatch between the dev and test sets adds uncertainty about whether improving performance on the dev set will also improve performance on the test set. With such a mismatch, it is hard to figure out what is not working, and hard to prioritize what to try first.

If you are working on a task where the sets are provided by a third-party company or organization, the dev and test sets may have different distributions, and you cannot change that. In this case, luck rather than skill will have a greater impact on your model's performance. Training a model on data from one distribution so that it generalizes well to data from another distribution is an important research problem. But if your goal is a practical result rather than research, I recommend drawing the dev and test sets from the same source and the same distribution.

7. How large should the development and test sets be?

The dev set should be large enough to detect differences between the algorithms you are trying. For example, if classifier A has an accuracy of 90.0% and classifier B has an accuracy of 90.1%, a dev set of 100 examples will not let you see this 0.1% difference. In general, a dev set of 100 examples is too small. Dev sets of 1,000 to 10,000 examples are common practice. With 10,000 examples, you have a chance of detecting a 0.1% improvement.
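The arithmetic behind this is simple. On a dev set of n examples, each example is either right or wrong, so accuracy can only change in steps of 1/n. The helpers below show how many dev examples a given accuracy difference corresponds to. Their names are illustrative, not from the book. They measure resolution only; whether such a difference is statistically meaningful is a separate question.

```python
def accuracy_step(n_dev: int) -> float:
    """Smallest possible change in dev-set accuracy: one example flips."""
    return 1.0 / n_dev


def examples_behind(n_dev: int, accuracy_diff: float) -> float:
    """Number of dev examples that an accuracy difference corresponds to."""
    return n_dev * accuracy_diff


if __name__ == "__main__":
    for n in (100, 1_000, 10_000, 100_000):
        print(f"n={n:>7}: step={accuracy_step(n):.4%}, "
              f"0.1% = {examples_behind(n, 0.001):g} examples, "
              f"0.01% = {examples_behind(n, 0.0001):g} examples")
```

With 100 examples the step is 1%, so a 90.1% accuracy cannot even be observed. With 10,000 examples a 0.1% difference is 10 examples, but a 0.01% difference is a single example. This is why teams chasing 0.01% gains need dev sets much larger than 10,000.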

Author's note: in theory, you could run statistical significance tests to check whether a change to the algorithm made a significant difference on the dev set. In practice, most teams do not bother (unless they are writing an academic paper), and I do not find such tests useful for measuring interim progress.
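For readers who do want such a test, McNemar's test is one common choice for comparing two classifiers on the same dev set. It looks only at the examples on which exactly one of the two classifiers is correct. The sketch below is an exact (binomial) version in plain Python. The function names are illustrative, and the author does not prescribe this test. `math.comb` requires Python 3.8 or later.

```python
import math
from typing import Sequence, Tuple


def disagreement_counts(y_true: Sequence[int], pred_a: Sequence[int],
                        pred_b: Sequence[int]) -> Tuple[int, int]:
    """Count dev examples where only A is correct and where only B is correct."""
    only_a = only_b = 0
    for y, a, b in zip(y_true, pred_a, pred_b):
        a_ok, b_ok = a == y, b == y
        if a_ok and not b_ok:
            only_a += 1
        elif b_ok and not a_ok:
            only_b += 1
    return only_a, only_b


def mcnemar_exact_p(only_a: int, only_b: int) -> float:
    """Two-sided exact McNemar p-value.

    Under the null hypothesis that A and B are equally accurate, each
    disagreement is equally likely to favor either classifier, so
    min(only_a, only_b) follows Binomial(only_a + only_b, 0.5).
    """
    n = only_a + only_b
    if n == 0:
        return 1.0  # the classifiers never disagree: no evidence either way
    k = min(only_a, only_b)
    tail = sum(math.comb(n, i) for i in range(k + 1))  # exact integer sum
    return min(1.0, 2 * tail / 2 ** n)
```

A small p-value suggests that the difference between A and B on the dev set is unlikely to be noise. Because the test uses only the disagreements, two classifiers that differ on few examples need a large dev set before any difference becomes detectable.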

For mature and important systems such as advertising, web search, and recommendation systems, I have seen teams strive for improvements of even 0.01%, because this directly affects their company's profits. In that case, the dev set must be much larger than 10,000 examples in order to detect such small changes.

What about the size of the test set? It should be large enough to give high confidence in the overall performance of your system. One popular heuristic was to use 30% of the available data for the test set. This works fairly well when you have a small amount of data, say 100 to 10,000 examples. But in the era of big data, with tasks that have more than a million training examples, the fraction of data allocated to the dev and test sets has been shrinking, even as the absolute size of these sets grows. There is no need for dev and test sets much larger than what you need to evaluate the performance of your algorithms.
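One way to make “large enough to give high confidence” concrete is the standard normal-approximation (Wald) confidence interval for an accuracy measured on n independent test examples. The sketch below illustrates that assumption; it is not the book's method. It bounds the uncertainty of a single accuracy estimate, which is a different question from comparing two classifiers on the same examples.

```python
import math
from typing import Tuple


def accuracy_interval(accuracy: float, n_test: int,
                      z: float = 1.96) -> Tuple[float, float]:
    """Approximate confidence interval for accuracy on n_test examples.

    Uses the normal approximation to the binomial: standard error
    sqrt(p * (1 - p) / n). z = 1.96 gives roughly 95% coverage.
    """
    se = math.sqrt(accuracy * (1.0 - accuracy) / n_test)
    return accuracy - z * se, accuracy + z * se


if __name__ == "__main__":
    for n in (100, 1_000, 10_000, 100_000, 1_000_000):
        lo, hi = accuracy_interval(0.90, n)
        print(f"n={n:>9,}: 90% accuracy -> [{lo:.2%}, {hi:.2%}]")
```

The interval shrinks only as 1/sqrt(n), so each tenfold increase in test set size narrows it by only about a factor of three. At 90% accuracy, the half-width is roughly 5.9 points with 100 examples and roughly 0.6 points with 10,000. This is one reason a test set that is a small fraction of a very large dataset can still be large enough.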
