Overview of the Knime Analytics Platform - open source data analysis systems

About KNIME

Your attention is presented a review of the Knime Analytics Platform - an open source data analysis framework. This framework allows you to implement a full cycle of data analysis, including reading data from various sources, converting and filtering, actually analyzing, visualizing and exporting.

You can download KNIME (eclipse-based desktop application) from here: www.knime.org

Who may be interested in this platform:

- For those who want to analyze data

- For those who want to analyze data and do not have programming skills

- Those who want to delve into a good library of implemented algorithms and, perhaps, learn something new

Workflows

In Knime, the process of programming logic is done through the creation of Workflow. Workflow consists of nodes that perform a particular function (for example, reading data from a database, transformation, visualization). The nodes, respectively, are interconnected by arrows that indicate the direction of data movement.

(picture from the official site)

After the workflow is created, it can be launched for execution. After the workflow is launched for execution, in the base scenario, the workflow nodes begin to execute one by one, starting from the very first. If an error occurred during the execution of one or another node, then the execution of the entire branch following it stops. It is possible to restart the workflow not from the first, but from an arbitrary node.

The traffic light at each node reflects its current state - red - error, yellow - ready for execution, green - completed.

Nodes

Workflow consists of nodes (or "nodes"). Almost every node has a configuration dialog in which you can configure properties.

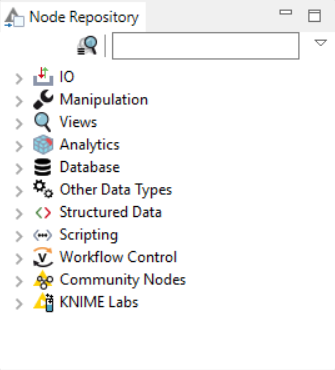

All nodes are divided into categories:

The following node types are supported: IO - input / output of data (for example, reading CSV), Manipulation - data conversion (including filtering of rows, columns, sorting), Views - data visualization (construction of various graphs including Histogram, Pie Chart, Scatter Plot, etc) , Database - the ability to connect to a database, read / write, Workflow Control - creating loops, iterating groups during a workflow, and more.

Various statistical methods (including linear correlation, hypothesis testing) and Data Mining methods (e.g. neural networks, decision trees, cluster view) are available from nodes that implement data analysis.

There is a good diagram on the official site showing how to assemble nodes of different types into a single flow:

Workflow # 1 Example: Building a Simple Scatter Plot

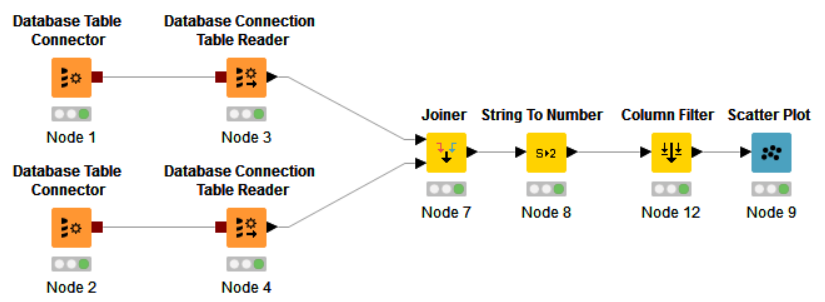

Consider an example of a simple workflow that pulls data, produces JOIN values for a certain ID field, filtering and visualizing the result on a Scatter Plot.

In this workflow, we create two database connections through the Database Table Connector. Inside the Database Table Connectors are SQL queries that pull the required data. Using Database Connection Table Reader, data is directly uploaded. After the data is read by the Readers, they come to the Joiner node, in which the operation, respectively, JOIN is performed. In the String to Number node, string values are converted to numeric, then extra columns are filtered, and finally the data comes to the Scatter Plot visualization node.

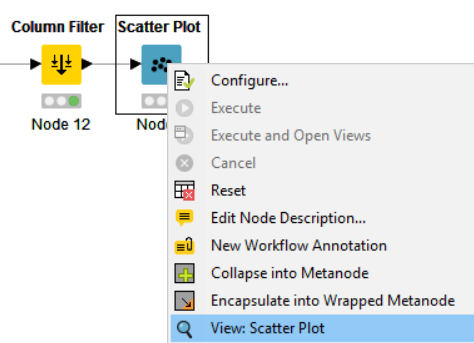

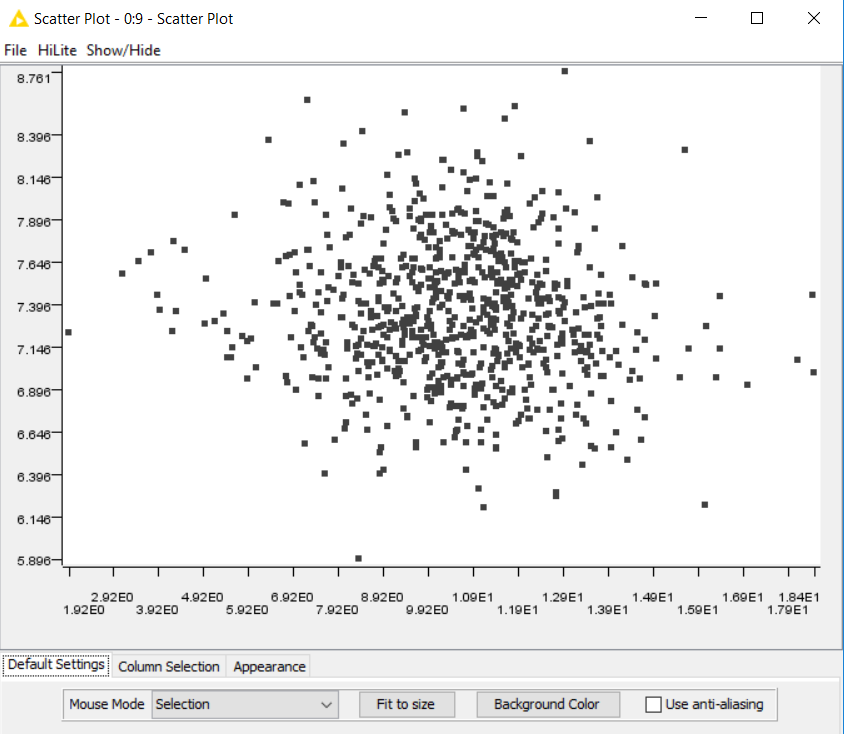

Further, after the successful execution of Flow, you can click on View Scatter Plot in the context menu and see the visualization result:

The constructed chart opens in a new window.

Thus, in a short time, without writing a single line of code, you can select the required data from the source, apply various filtering, sorting and visualize the result.

Workflow # 2 Example: Correlation Analysis

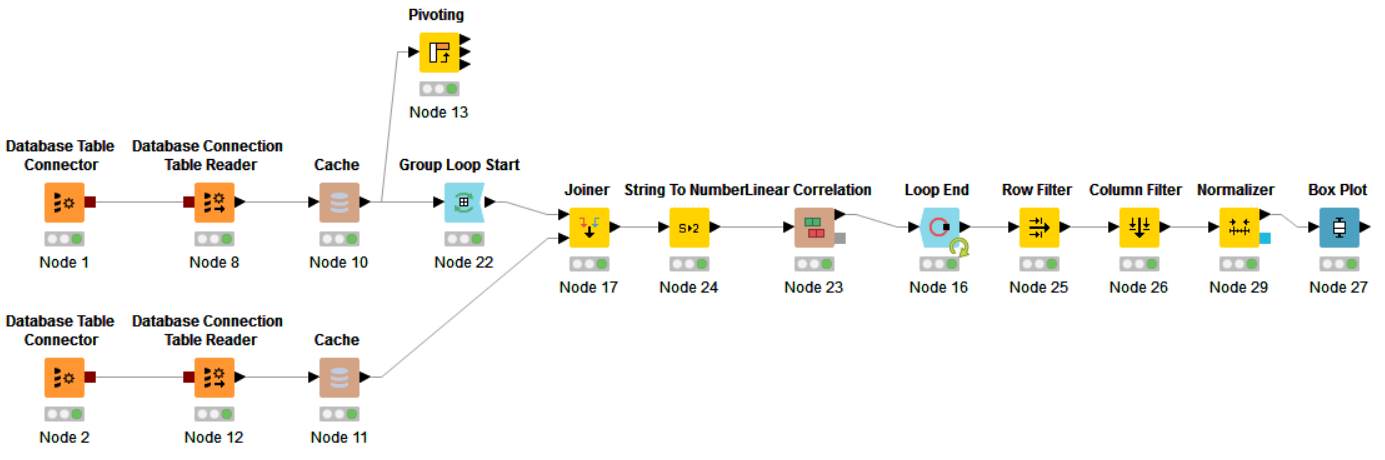

Consider another example. I would like to make a relatively large selection of data from the database, group the selection by the values of a certain field, and within each group find the correlation of values from this group and the target vector.

In this example, two connections to the database are opened. Through a single connection (Node 2), a SQL query draws a vector from several values. This will be the target vector to which we will seek a correlation.

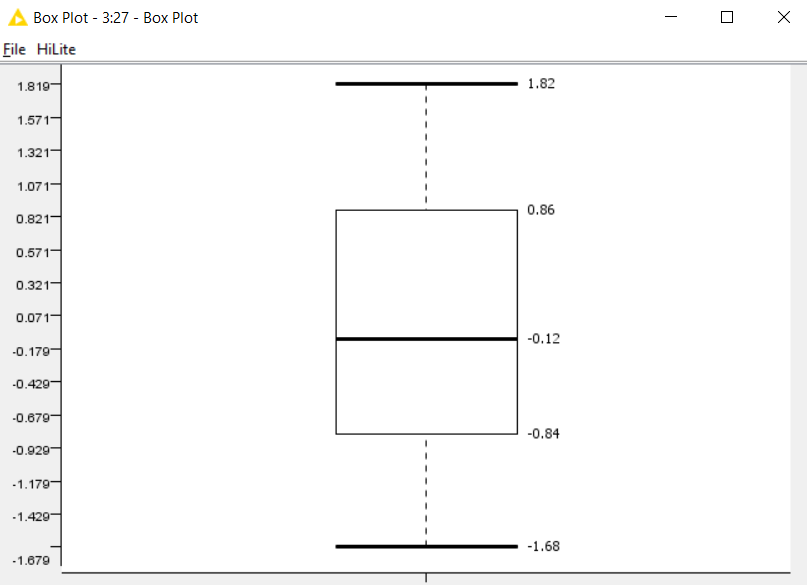

A relatively large selection of data is pulled through another connection (also with an SQL query). Next, the data falls into the Group Loop Start - Workflow Control operator that does GROUP BY, inside this loop, the target vector goes to the JOIN data, the string values are converted to numeric values and linear correlation is considered. The calculation results are accumulated in the Loop End node. At the exit from this node, row and column filtering, normalization of values, and construction of Box Plot are applied.

After executing the entire workflow and clicking on View: Box Plot, a window with the calculated values for Box Plot opens.

An alternative is to use the Pivot operation and use the JOIN to add the target vector, and then iterate through the columns and read the correlation. At workflow, a blank for an alternative option is provided (in the form of a Pivot node), but the approach itself is not implemented.

Additional features

Of the interesting features that I noticed, the following can be distinguished:

- Execution of workflow on the server and providing access to the results of work through the REST API. This functionality is available upon purchase of KNIME-Server.

- The full KNIME distribution with all the plugins weighs almost 2 gigabytes. This distribution includes a large number of third-party libraries (for example, JFreeChart), which become available as nodes

- Implemented the ability to make a Pivot operation directly on the database or on data loaded into the local cache

- Large sample library available

- Work with Hadoop and other BigData sources

Problems and Conclusions

This system provides a fairly flexible approach to the construction of algorithms for analyzing, converting and visualizing data, but nevertheless, in complex workflow, in my opinion, one may encounter the following problems:

- Insufficiently flexible behavior of nodes - they work as they want and to tighten up some nuances of work can be quite difficult (if you don’t get inside the code)

- Programming complex flow can result in a long and complex diagram with cycles, conditions, which will lead to difficulties in reading and debugging. In this case, writing code in R or Python might be a better choice.

This framework is well suited for people who are not very familiar with programming, with it you can quickly create simple and medium complexity workflows and provide access to them through REST. This may be in demand in any organization.

Data scientists may also find a lot of interesting things for themselves and may consider this system as an addition to R or Python.

This framework is also good for working with students, since everything that happens with the data is clearly visible, on which branches they move and how they are transformed. Students can study the implementation of existing nodes, add their components (nodes) and replenish their library.