Deep learning reinforced by a virtual manager in the game against inefficiency

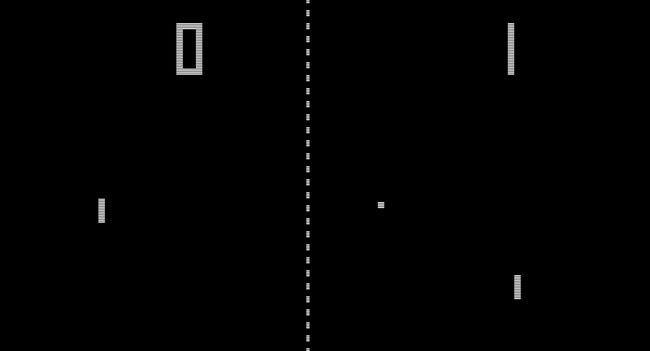

The successes of Google Deepmind are now known and talked about. DQN (Deep Q-Network) algorithms defeat Man with a good margin in more and more games. The achievements of recent years are impressive: in just dozens of minutes of training, algorithms learn and win a person in pong and other Atari games. Recently, they entered the third dimension - they defeat a person in DOOM in real time, and also learn to control cars and helicopters.

DQN was used to train AlphaGo by playing thousands of games alone. When it was still not fashionable, in 2015, anticipating the development of this trend, the management of Phobos in the person of Alexei Spassky ordered the Research & Development department to conduct a study. It was necessary to consider the existing technologies of machine learning for the possibility of using them to automate the victory in management games. Thus, this article will discuss the design of a self-learning algorithm in the game of a virtual manager against a living team for improving productivity.

The applied task of analyzing machine learning data classically has the following solution steps:

- formulation of the problem;

- data collection;

- data preparation;

- formulation of hypotheses;

- model building;

- model validation;

- presentation of the results.

This article will talk about key solutions in designing an intelligent agent.

More detailed descriptions of the stages from the statement of the problem to the presentation of the results will be described in the following articles, if the reader is interested. Thus, probably, we will be able to solve the problem of the story about the multidimensional and ambiguous result of the study without losing understanding.

Algorithm selection

So, to complete the task of finding the maximum efficiency of team management, it was decided to use deep learning with reinforcement, namely Q-learning. An intelligent agent forms the utility function Q of each action from those available to him on the basis of reward or punishment from the transition to a new state of the environment, which gives him the opportunity to choose a behavior strategy, but to take into account the experience of the previous interaction with the game environment.

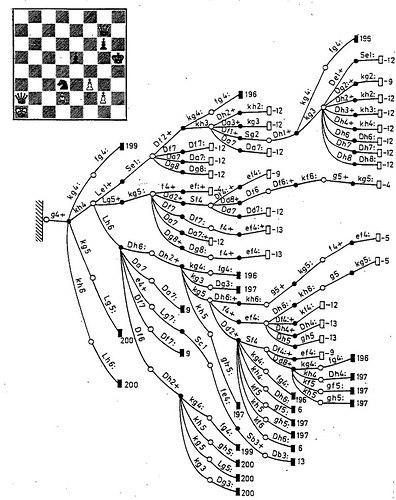

The main reason for choosing DQN is that for training an agent using this method, a model is not required either for training or for choosing an action. This is a critical requirement for a teaching method for the simple reason that a formalized model of a collective of people with practical predictive power does not yet exist. Nevertheless, an analysis of the successes of artificial intelligence in logical games shows that the advantages of an expert-based approach become more pronounced as the environment becomes more complex. This is found in checkers and chess, where the assessment of actions based on the model was more successful than Q-learning.

One of the reasons that reinforcement training does not leave office clerks without a job is that the method does not scale well. A Q-learning agent conducting environmental research is an active student who must repeatedly apply each action in each situation in order to compose his Q-function to evaluate the profitability of all possible actions in all possible situations.

If, as in old vintage games, the number of actions is calculated by the number of buttons on the joystick, and the states by the position of the ball, then the agent will take tens of minutes and hours to train to defeat a person, then in chess and GTA5 a combinatorial explosion already makes the number of combinations of game states and possible cosmic actions for the student to go through.

Hypothesis and model

To effectively use Q-learning to manage the team, we must minimize the dimensionality of environmental conditions and actions.

Our solutions:

- The first engineering solution was to present management activities in the form of a set of mini-games. Each of them has discrete amounts of states and actions such that the order of combinations is comparable to successfully solved game problems. Thus, it is not necessary to build an algorithm that will seek the optimal control strategy in a multidimensional space, but many agents are superior to the human player in tactical games. An example of such a game is task management in YouTrack. State of the environment (roughly) - time in work and status, and actions - opening, rediscovery task, appointment of the responsible. More details below.

An example of online learning a simple game:

https://cs.stanford.edu/people/karpathy/convnetjs/demo/rldemo.html

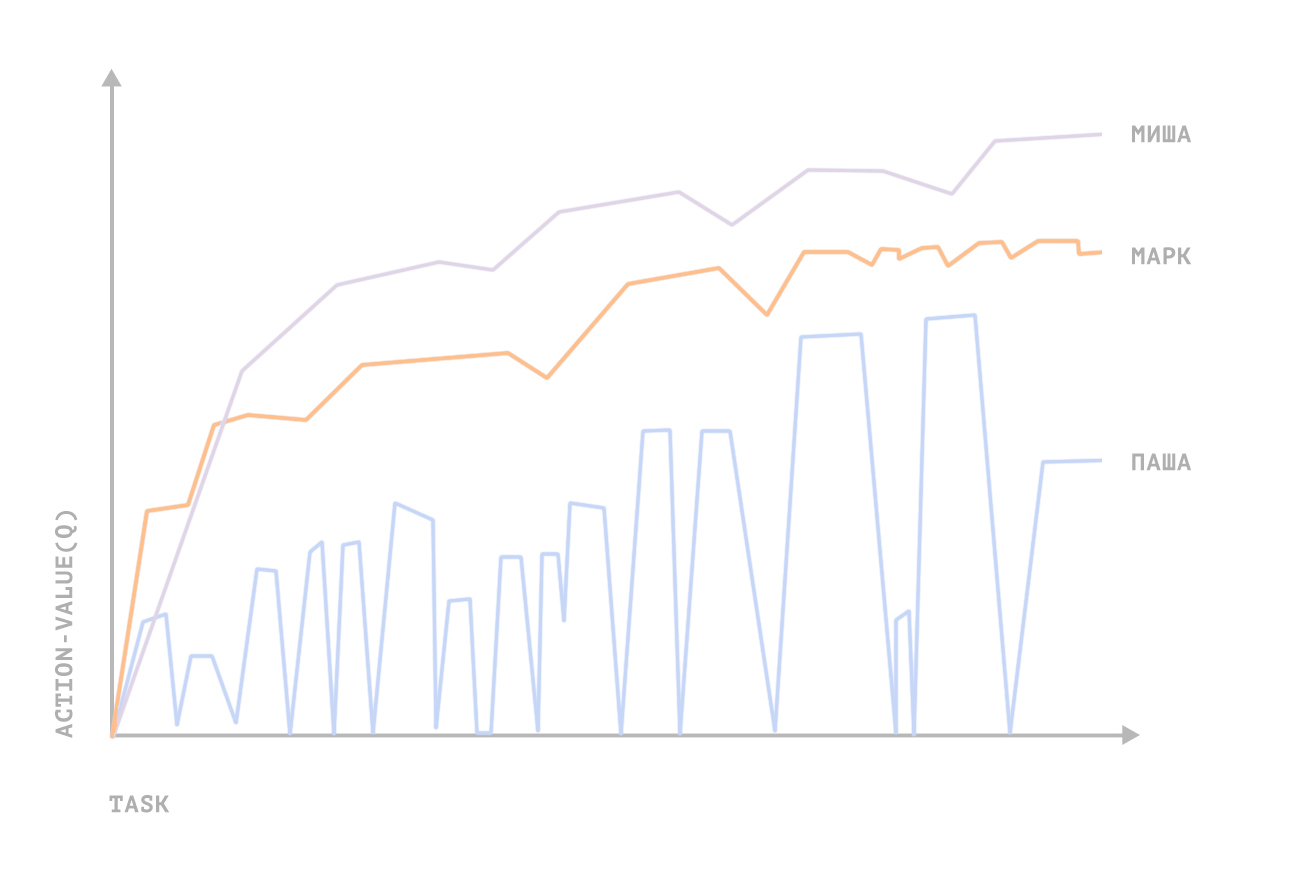

- Each office employee is an intelligent agent complicating the environment with the variety of his behavior. Personalization allows you to avoid multi-agent environments, therefore, one learning agent plays with one employee. In the task-setting mini-game, the agent receives a reward when the employee works more efficiently (solves tasks faster). If an employee (for example, a developer) is unmanageable for a Q-learning agent, then the algorithm will not converge.

- The most important simplification. In a mini-game for setting the task for employees, there is continuous multidimensional game states, if only because of the time parameter spent on the task. And the worst part is that the rewards for the agent’s actions are not obvious. The gaming environment, as a finite set of conditions for each mini-game and the calculation of the promotion in one state or another, is 90% formalized by our managers business logic. This is the most time-consuming and important point, since it is in the formula of expert assessment of states that the expert knowledge is contained, which are an implicit model of the environment and actions, and also determine the amount of remuneration in the agent’s training. The success of agent training depended on the predictive power of this implicit model.

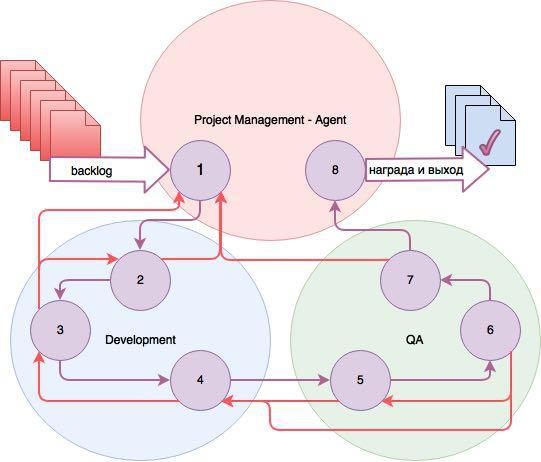

The diagram shows the states of three game environments for three agents that control the progress of work on a task.

Status:

- Task registered (backlog);

- The task is open;

- In work;

- Development is complete, the task is open for testing (QA);

- Open for testing;

- Being tested;

- Ready, tested;

- Closed.

The list of actions for each of the three agents is different. Project Manager - Agent assigns the executor and tester, time and priority of the task. Agents working with Dev and QA are personal to each executor and tester. If the task proceeds further, the agents receive rewards; if the task returns, punishments.

All agents receive the greatest reward when closing the task. Also, for Q-training, DF and LF (discount factor and learning factor, respectively) were selected so that the agents were focused specifically on closing the task. The calculation of reinforcements in the general case takes place according to the optimal control formula, taking into account, inter alia, the difference in the estimation of time and real costs, the number of task returns, etc. The advantage of this solution is its scalability to a larger team.

Conclusion

The hardware on which the calculations were performed is the GeForce GTX 1080.

For the above mini-game with setting and conducting tasks in Youtrack, the control functions converged to values above average (employee productivity increased relative to working with a human manager) for 3 out of 5 people. Overall productivity (in hours) almost doubled. There were no employees from the test group satisfied with the experiment; dissatisfied 4; one refrained from evaluations.

Nevertheless, we have drawn conclusions for ourselves that in order to use the “in battle” method, it is necessary to introduce expert knowledge in psychology into the model. The total development and testing duration is more than a year.