Creating Art with DCGAN on Keras

Good day. Six months ago, I started learning machine learning, went through a couple of courses and got some experience with that. Then, seeing a variety of news about which neural networks are cool and can do a lot, I decided to try to study them. I started reading Nikolenko’s book about deep learning and in the course of reading I had a few ideas (which are not new to the world, but were of great interest to me), one of which is to create a neural network that would generate art for me that would seem cool only to me, the “father of the drawing child,” but also to other people. In this article I will try to describe the path that I have followed in order to get the first results that satisfy me.

Dataset collection

When I read the chapter on adversary networks, I realized that I could now write something.

One of the first tasks was to write a web page parser for collecting datasets. The wikiart site was great for this , there are a large number of pictures on it and everything is collected in styles. It was my first parser, so I wrote it for 4-5 days, the first 3 of which took the poke along the completely wrong path. The correct way was to go to the network tab in the source code of the page and to track how images appear when you click the "more" button. Actually, for the same beginners like me, it will be good to show the code.

from scipy.misc import imresize, imsave

from matplotlib.image import imread

import requests

import json

from bs4 import BeautifulSoup

from itertools import count

import os

import globIn the first cell of the jupiter, I imported the necessary libraries.

- glob - A handy thing to get a list of files in a directory.

- requests, BeautifulSoup - Masthev for parsing

- json - library, to get a dictionary, which is returned when you click the "more" button on the site

- resize, save, imread - for reading images and preparing them.

defget_page(style, pagenum):

page = requests.get(url1 + style + url2 + str(pagenum) + url3)

return page

defmake_soup(page):

soup = BeautifulSoup(page.text, 'html5lib')

return soup

defmake_dir(name, s):

path = os.getcwd() + '/' + s + '/' + name

os.mkdir(path)I describe functions for convenient work.

The first - gets a page in the form of text, the second makes this text more convenient for work. Well, the third to create the necessary folders for styles.

styles = ['kubizm']

url1 = 'https://www.wikiart.org/ru/paintings-by-style/'

url2 = '?select=featured&json=2&layout=new&page='

url3 = '&resultType=masonry'In the styles array, there should have been several styles, but it turned out that I loaded them completely unevenly.

for style in styles:

make_dir(style, 'images')

for style in styles:

make_dir(style, 'new256_images')Create the necessary folders. The second cycle creates folders in which the image will be saved flattened in a square 256x256.

(At first I thought about somehow not rationing the size of the pictures so that there were no distortions, but I realized that this is either impossible or too difficult for me)

for style in styles:

path = os.getcwd() + '\\images\\' + style + '\\'

images = []

names = []

titles = []

for pagenum in count(start=1):

page = get_page(style, pagenum)

if page.text[0]!='{': break

jsons = json.loads(page.text)

paintings = jsons['Paintings']

if paintings isNone: breakfor item in paintings:

images_temp = []

images_dict = item['images']

if images_dict isNone:

images_temp.append(item['image'])

names.append(item['artistName'])

titles.append(item['title'])

else:

for inner_item in item['images']:

images_temp.append(inner_item['image'])

names.append(item['artistName'])

titles.append(item['title'])

images.append(images_temp)

for char in ['/','\\','"', '?', ':','*','|','<','>']:

titles = [title.replace(char, ' ') for title in titles]

for listimg, name, title in zip(images, names, titles):

if len(name) > 30:

name = name[:25]

if len(title) > 50:

title = title[:50]

if len(listimg) == 1:

response = requests.get(listimg[0])

if response.status_code == 200:

with open(path + name + ' ' + title + '.png', 'wb') as f:

f.write(response.content)

else: print('Error from server')

else:

for i, img in enumerate(listimg):

response = requests.get(img)

if response.status_code == 200:

with open(path + name + ' ' + title + str(i) + '.png', 'wb') as f:

f.write(response.content)

else: print('Error from server')Here you can download images and save them to the desired folder. Here pictures do not change the size, originals remain.

Interesting things happen in the first nested loop:

I decided to stupidly constantly ask for json's (json is a dictionary that the server returns when you click the More button. In the dictionary, all the information about the pictures), and stop when the server returns something unintelligible and not like typical values . In this case, the first character of the returned text should have been an opening brace, followed by the body of the dictionary.

It was also noted that the server can return something like an album of pictures. That is, in fact, an array of paintings. At first I thought that single paintings were returning, the name of the artists to them, and it may be that at the same time with an artist’s name the array of paintings is given.

for style in styles:

directory = os.getcwd() + '\\images\\' + style + '\\'

new_dir = os.getcwd() + '\\new256_images\\' + style + '\\'

filepaths = []

for dir_, _, files in os.walk(directory):

for fileName in files:

#relDir = os.path.relpath(dir_, directory)#relFile = os.path.join(relDir, fileName)

relFile = fileName

#print(directory)#print(relFile)

filepaths.append(relFile)

#print(filepaths[-1])

print(filepaths[0])

for i, fp in enumerate(filepaths):

img = imread(directory + fp, 0) #/ 255.0

img = imresize(img, (256, 256))

imsave(new_dir + str(i) + ".png", img)Here the images are resized and stored in a folder prepared for them.

Well, it is assembled, you can proceed to the most interesting!

Starting small

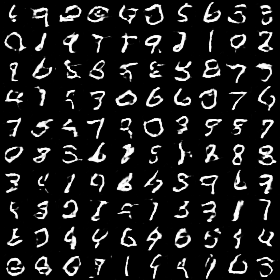

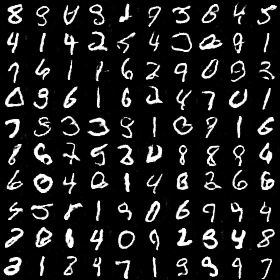

Next, after reading the original article, I started to create! But what was my disappointment when nothing good happened. During these attempts, I trained the network in the same style of pictures, but even so, nothing worked out, so I decided to start learning how to generate numbers from a ministry. I will not stop here in detail, I will tell only about the architecture and the turning point, thanks to which the numbers began to be generated.

defbuild_generator():

model = Sequential()

model.add(Dense(128 * 7 * 7, input_dim = latent_dim))

model.add(BatchNormalization())

model.add(LeakyReLU())

model.add(Reshape((7, 7, 128)))

model.add(Conv2DTranspose(64, filter_size, strides=(2,2), padding='same'))

model.add(BatchNormalization(momentum=0.8))

model.add(LeakyReLU())

model.add(Conv2DTranspose(32, filter_size, strides=(1, 1), padding='same'))

model.add(BatchNormalization(momentum=0.8))

model.add(LeakyReLU())

model.add(Conv2DTranspose(img_channels, filter_size, strides=(2,2), padding='same'))

model.add(Activation("tanh"))

model.summary()

return modellatent_dim is an array of 100 randomly generated numbers.

defbuild_discriminator(): model = Sequential() model.add(Conv2D(64, kernel_size=filter_size, strides = (2,2), input_shape=img_shape, padding="same")) model.add(LeakyReLU(alpha=0.2)) model.add(Dropout(0.25)) model.add(Conv2D(128, kernel_size=filter_size, strides = (2,2), padding="same")) model.add(BatchNormalization(momentum=0.8)) model.add(LeakyReLU(alpha=0.2)) model.add(Dropout(0.25)) model.add(Conv2D(128, kernel_size=filter_size, strides = (2,2), padding="same")) model.add(BatchNormalization(momentum=0.8)) model.add(LeakyReLU(alpha=0.2)) model.add(Dropout(0.25)) model.add(Flatten()) model.add(Dense(1)) model.add(Activation('sigmoid')) model.summary() return modelThat is, in total, the size of the outputs of the convolutional layers and the number of layers are generally less than in the original article. 28x28 because I generate, not interiors!

Well, the same trick, thanks to which everything turned out - on the even iteration of training the discriminator looked at the generated pictures, and on the odd one - on the real ones.

On this, in general, everything. This DCGAN learned very quickly, for example, the picture at the beginning of this sub-topic was obtained at the 19th epoch,

These are already confident, but nevertheless from time to time unrealistic figures turned out for the 99th epoch of education.

Satisfied with the preliminary result, I stopped learning and began to think about how to solve the main problem.

Creative adversarial network

The next step was to read about GAN with labels: the discriminator and generator are served with the class of the current picture. And after Ghana with labels, I learned about CAN - the decoding in general in the name of the subtopic.

In CAN, the discriminator tries to guess the class of the picture if the picture is from a real set. And, accordingly, in the case of training in the real picture, an error from guessing the class is presented as an error to the discriminator other than the default one.

When training on the generated picture, the discriminator needs only to predict whether this picture is real or not.

The generator, moreover, in order to simply deceive the discriminator, it is necessary to ensure that the discriminator is at a loss when guessing the class of the picture, that is, the generator will be interested in that the outputs to the classes of discriminators are as far as possible from 1, full confidence.

Turning to CAN, I again experienced difficulties, dysmoral because nothing works and is not trained. After a few unpleasant failures, I decided to start everything from the beginning and save all changes (Yes, I didn’t do it before), weight and architecture (to interrupt training).

First, I wanted to make a network that would generate one single image of 256x256 (all the following pictures of this size) for me without any labels. The tipping point here was that, on the contrary, in each iteration of learning, the discriminator should be given a look at the generated pictures and the real ones.

This is the result I stopped at and moved on to the next stage. Yes, the colors are different from the real picture, but I was more interested in the ability of the network to select contours and objects. With this she coped.

Then you could proceed to the main task - the generation of art. Immediately present the code, simultaneously commenting on it.

First, as always, you need to import all the libraries.

import glob

from PIL import Image

from keras.preprocessing.image import array_to_img, img_to_array, load_img

from datetime import date

from datetime import datetime

import tensorflow as tf

import numpy as np

import argparse

import math

import os

from matplotlib.image import imread

from scipy.misc.pilutil import imresize, imsave

import matplotlib.pyplot as plt

import cv2

import keras

from keras.models import Sequential, Model

from keras.layers import Dense, Activation, Reshape, Flatten, Dropout, Input

from keras.layers.convolutional import Conv2D, Conv2DTranspose, MaxPooling2D

from keras.layers.normalization import BatchNormalization

from keras.layers.advanced_activations import LeakyReLU

from keras.optimizers import Adam, SGD

from keras.datasets import mnist

from keras import initializers

import numpy as np

import randomGenerator creation.

The layer outputs are again different from the article. Somewhere in order to save memory (Conditions: home computer with gtx970), and somewhere because of the success with the configuration

defbuild_generator():

model = Sequential()

model.add(Dense(128 * 16 * 8, input_dim = latent_dim))

model.add(BatchNormalization())

model.add(LeakyReLU())

model.add(Reshape((8, 8, 256)))

model.add(Conv2DTranspose(512, filter_size_g, strides=(1,1), padding='same'))

model.add(BatchNormalization(momentum=0.8))

model.add(LeakyReLU())

model.add(Conv2DTranspose(512, filter_size_g, strides=(1,1), padding='same'))

model.add(BatchNormalization(momentum=0.8))

model.add(LeakyReLU())

model.add(Conv2DTranspose(256, filter_size_g, strides=(1,1), padding='same'))

model.add(BatchNormalization(momentum=0.8))

model.add(LeakyReLU())

model.add(Conv2DTranspose(128, filter_size_g, strides=(2,2), padding='same'))

model.add(BatchNormalization(momentum=0.8))

model.add(LeakyReLU())

model.add(Conv2DTranspose(64, filter_size_g, strides=(2,2), padding='same'))

model.add(BatchNormalization(momentum=0.8))

model.add(LeakyReLU())

model.add(Conv2DTranspose(32, filter_size_g, strides=(2,2), padding='same'))

model.add(BatchNormalization(momentum=0.8))

model.add(LeakyReLU())

model.add(Conv2DTranspose(16, filter_size_g, strides=(2,2), padding='same'))

model.add(BatchNormalization(momentum=0.8))

model.add(LeakyReLU())

model.add(Conv2DTranspose(8, filter_size_g, strides=(2,2), padding='same'))

model.add(BatchNormalization(momentum=0.8))

model.add(LeakyReLU())

model.add(Conv2DTranspose(img_channels, filter_size_g, strides=(1,1), padding='same'))

model.add(Activation("tanh"))

model.summary()

return modelThe discriminator creation function returns two models, one of which is trying to find out if the picture is real and the other is trying to find out the class of the picture.

defbuild_discriminator(num_classes):

model = Sequential()

model.add(Conv2D(64, kernel_size=filter_size_d, strides = (2,2), input_shape=img_shape, padding="same"))

model.add(LeakyReLU(alpha=0.2))

model.add(Dropout(0.25))

model.add(Conv2D(128, kernel_size=filter_size_d, strides = (2,2), padding="same"))

model.add(BatchNormalization(momentum=0.8))

model.add(LeakyReLU(alpha=0.2))

model.add(Dropout(0.25))

model.add(Conv2D(256, kernel_size=filter_size_d, strides = (2,2), padding="same"))

model.add(BatchNormalization(momentum=0.8))

model.add(LeakyReLU(alpha=0.2))

model.add(Dropout(0.25))

model.add(Conv2D(512, kernel_size=filter_size_d, strides = (2,2), padding="same"))

model.add(BatchNormalization(momentum=0.8))

model.add(LeakyReLU(alpha=0.2))

model.add(Dropout(0.25))

model.add(Conv2D(512, kernel_size=filter_size_d, strides = (2,2), padding="same"))

model.add(BatchNormalization(momentum=0.8))

model.add(LeakyReLU(alpha=0.2))

model.add(Dropout(0.25))

model.add(Conv2D(512, kernel_size=filter_size_d, strides = (2,2), padding="same"))

model.add(BatchNormalization(momentum=0.8))

model.add(LeakyReLU(alpha=0.2))

model.add(Dropout(0.25))

model.add(Flatten())

model.summary()

img = Input(shape=img_shape)

features = model(img)

validity = Dense(1)(features)

valid = Activation('sigmoid')(validity)

label1 = Dense(1024)(features)

lrelu1 = LeakyReLU(alpha=0.2)(label1)

label2 = Dense(512)(label1)

lrelu2 = LeakyReLU(alpha=0.2)(label2)

label3 = Dense(num_classes)(label2)

label = Activation('softmax')(label3)

return Model(img, valid), Model(img, label)Function to create a competitive model. In the competitive model, the discriminator is not trained.

defgenerator_containing_discriminator(g, d, d_label):

noise = Input(shape=(latent_dim,))

img = g(noise)

d.trainable = False

d_label.trainable = False

valid, target_label = d(img), d_label(img)

return Model(noise, [valid, target_label])Function to load batcha with real pictures and labels. data is an array of addresses that will be defined later. In the same function, the image is normalized.

defget_images_classes(batch_size, data):

X_train = np.zeros((batch_size, img_rows, img_cols, img_channels))

y_labels = np.zeros(batch_size)

choice_arr = np.random.randint(0, len(data), batch_size)

for i in range(batch_size):

rand_number = np.random.randint(0, len(data[choice_arr[i]]))

temp_img = cv2.imread(data[choice_arr[i]][rand_number])

X_train[i] = temp_img

y_labels[i] = choice_arr[i]

X_train = (X_train - 127.5)/127.5return X_train, y_labelsThe function for the beautiful output of batch images. Actually, all the pictures from this article were collected by this function.

defcombine_images(generated_images):

num = generated_images.shape[0]

width = int(math.sqrt(num))

height = int(math.ceil(float(num)/width))

shape = generated_images.shape[1:3]

image = np.zeros((height*shape[0], width*shape[1], img_channels),

dtype=generated_images.dtype)

for index, img in enumerate(generated_images):

i = int(index/width)

j = index % width

image[i*shape[0]:(i+1)*shape[0], j*shape[1]:(j+1)*shape[1]] = \

img[:, :, :,]

return imageAnd here is the data. It returns in a more or less convenient way a set of addresses of pictures, which we, above, put into folders

defget_data():

styles_folder = os.listdir(path=os.getcwd() + "\\new256_images\\")

num_styles = len(styles_folder)

data = []

for i in range(num_styles):

data.append(glob.glob(os.getcwd() + '\\new256_images\\' + styles_folder[i] + '\\*'))

return data, num_stylesFor the passage of the era exhibited a randomly large number, because it was too lazy to count the number of all the pictures. The same function provides for loading weights, if you need to continue training. Every 5 epochs of weight and architecture are preserved.

It is also worth writing that I tried to add noise to the input images, but in the last training I decided not to.

Smooth class labels have been used, they are very helpful for learning.

deftrain_another(epochs = 100, BATCH_SIZE = 4, weights = False, month_day = '', epoch = ''):

data, num_styles = get_data()

generator = build_generator()

discriminator, d_label = build_discriminator(num_styles)

discriminator.compile(loss=losses[0], optimizer=d_optim)

d_label.compile(loss=losses[1], optimizer=d_optim)

generator.compile(loss='binary_crossentropy', optimizer=g_optim)

if month_day != '':

generator.load_weights(os.getcwd() + '/' + month_day + epoch + ' gen_weights.h5')

discriminator.load_weights(os.getcwd() + '/' + month_day + epoch + ' dis_weights.h5')

d_label.load_weights(os.getcwd() + '/' + month_day + epoch + ' dis_label_weights.h5')

dcgan = generator_containing_discriminator(generator, discriminator, d_label)

dcgan.compile(loss=losses[0], optimizer=g_optim)

discriminator.trainable = True

d_label.trainable = Truefor epoch in range(epochs):

for index in range(int(15000/BATCH_SIZE)):

noise = np.random.normal(0, 1, (BATCH_SIZE, latent_dim))

real_images, real_labels = get_images_classes(BATCH_SIZE, data)

#real_images += np.random.normal(size = img_shape, scale= 0.1)

generated_images = generator.predict(noise)

X = real_images

real_labels = real_labels - 0.1 + np.random.rand(BATCH_SIZE)*0.2

y_classif = keras.utils.to_categorical(np.zeros(BATCH_SIZE) + real_labels, num_styles)

y = 0.8 + np.random.rand(BATCH_SIZE)*0.2

d_loss = []

d_loss.append(discriminator.train_on_batch(X, y))

discriminator.trainable = False

d_loss.append(d_label.train_on_batch(X, y_classif))

print("epoch %d batch %d d_loss : %f, label_loss: %f" % (epoch, index, d_loss[0], d_loss[1]))

X = generated_images

y = np.random.rand(BATCH_SIZE) * 0.2

d_loss = discriminator.train_on_batch(X, y)

print("epoch %d batch %d d_loss : %f" % (epoch, index, d_loss))

noise = np.random.normal(0, 1, (BATCH_SIZE, latent_dim))

discriminator.trainable = False

d_label.trainable = False

y_classif = keras.utils.to_categorical(np.zeros(BATCH_SIZE) + 1/num_styles, num_styles)

y = np.random.rand(BATCH_SIZE) * 0.3

g_loss = dcgan.train_on_batch(noise, [y, y_classif])

d_label.trainable = True

discriminator.trainable = True

print("epoch %d batch %d g_loss : %f, label_loss: %f" % (epoch, index, g_loss[0], g_loss[1]))

if index % 50 == 0:

image = combine_images(generated_images)

image = image*127.5+127.5

cv2.imwrite(

os.getcwd() + '\\generated\\epoch%d_%d.png' % (epoch, index), image)

image = combine_images(real_images)

image = image*127.5+127.5

cv2.imwrite(

os.getcwd() + '\\generated\\epoch%d_%d_data.png' % (epoch, index), image)

if epoch % 5 == 0:

date_today = date.today()

month, day = date_today.month, date_today.day

# Генерируем описание модели в формате json

d_json = discriminator.to_json()

# Записываем модель в файл

json_file = open(os.getcwd() + "/%d.%d dis_model.json" % (day, month), "w")

json_file.write(d_json)

json_file.close()

# Генерируем описание модели в формате json

d_l_json = d_label.to_json()

# Записываем модель в файл

json_file = open(os.getcwd() + "/%d.%d dis_label_model.json" % (day, month), "w")

json_file.write(d_l_json)

json_file.close()

# Генерируем описание модели в формате json

gen_json = generator.to_json()

# Записываем модель в файл

json_file = open(os.getcwd() + "/%d.%d gen_model.json" % (day, month), "w")

json_file.write(gen_json)

json_file.close()

discriminator.save_weights(os.getcwd() + '/%d.%d %d_epoch dis_weights.h5' % (day, month, epoch))

d_label.save_weights(os.getcwd() + '/%d.%d %d_epoch dis_label_weights.h5' % (day, month, epoch))

generator.save_weights(os.getcwd() + '/%d.%d %d_epoch gen_weights.h5' % (day, month, epoch))Initializing variables and starting training. Due to the low "power" of my computer, learning is possible at a maximum on a batch size of 16 pictures.

img_rows = 256

img_cols = 256

img_channels = 3

img_shape = (img_rows, img_cols, img_channels)

latent_dim = 100

filter_size_g = (5,5)

filter_size_d = (5,5)

d_strides = (2,2)

color_mode = 'rgb'

losses = ['binary_crossentropy', 'categorical_crossentropy']

g_optim = Adam(0.0002, beta_2 = 0.5)

d_optim = Adam(0.0002, beta_2 = 0.5)

train_another(1000, 16)Actually, I want to write a post on a habr about this idea of mine for a long time now, this is not the best time for this, because this neuronka has been studying for three days and is now in the 113th era, but today I found interesting pictures, so I decided it was time already write a post!

These are the pictures turned out today. Perhaps by calling them, I can convey to the reader my personal perception of these pictures. It is quite noticeable that the network is not trained enough (or maybe it will not learn by such methods at all), especially considering that the pictures were taken by cherry writing, but today I received a result that I liked.

Further plans to train this configuration to the point where it becomes clear what it is capable of. Also plans to create a network that would increase these pictures to sane size. This has already been invented and there is implementation.

I would be extremely happy constructive criticism, good advice and questions.