Load Testing Veeam Backup & Replication

In the spring of 2018, Selectel launched a backup service for its VMware-based cloud built on Veeam® Backup & Replication™ (hereinafter VBR). We approached the project thoroughly, planning and carrying out the following work:

- Studying the documentation and best practices for Veeam products

- Designing a service-provider-grade VBR deployment

- Deploying the VBR infrastructure

- Testing the solution and determining the optimal settings and modes of operation

- Putting the solution into commercial operation

It paid off: the service is stable, customers can back up their virtual machines, and we have gained expertise that we want to share.

In this article we present the results of load testing VBR in the two most popular backup proxy transport modes, with a varying number of parallel tasks.

Below you will find:

- A description of the Selectel production infrastructure used for testing

- The features of the backup proxy in different transport modes

- A description of the testing program and the VBR component settings used to carry it out

- Quantitative results, their comparison, and conclusions

Test infrastructure configuration

Source Infrastructure

One of the production clusters of Selectel's VMware-based public cloud was used as the platform for testing VBR performance.

Hardware configuration of the hosts in this cluster:

- Intel® Xeon® Gold 6140 processors

- Intel® DC P4600 and P3520 NVMe drives

- 4 × 10GbE ports per host

The cluster is based on the following solutions:

- Physical network: an Ethernet fabric on Brocade VDX switches with a leaf-spine architecture (10GbE ports for host connections, 40GbE uplinks to the spine)

- Virtualization environment: VMware vSphere® 6.5

- VM data storage: VMware vSAN™ 6.6 (all-flash vSAN cluster)

- Network virtualization: VMware NSX® 6.4

The testing platform's performance is more than sufficient and not in question. Of course, high speed requires everything to be configured properly, but since this is a production cluster serving live, satisfied customers, you can be sure everything is in order in this respect.

Together with the VMware-based cloud, Selectel launched a backup service for it on the VBR platform. Customers get a self-service web portal where they can back up and restore vApps and VMs from their VDC (virtual data center).

Clients access this portal (Veeam® Enterprise Manager Self-Service Portal) with the same access rights as in vCloud Director® (vCD). This is possible thanks to the integration of Veeam® Backup Enterprise Manager (EM) with vCD: each client connected to EM is limited to the resources of its own VDC and cannot see other VMs.

The client does not need to deploy its own VBR and the associated backup infrastructure, which entails costs for compute and network resources, storage, Veeam and Microsoft licenses, and administration. That is slow, expensive, and complicated. Selectel provides the core VBR features as a BaaS (Backup-as-a-Service) offering: instantly, simply, conveniently, and economically.

To provide this service, Selectel deployed a VBR provider infrastructure covering all vSphere clusters and VDCs of its VMware cloud clients, including the cluster in which this testing was conducted. The test results therefore show the maximum speed at which customers can back up their VMs.

Test VMs

To test backup performance in a vSphere cluster, 6 identical VMs were deployed in the following configuration:

- Windows Server 2016, 2 vCPU, 4GB RAM

- 200GB vDisk

The disk is almost full: 193GB is used. In addition to the OS files, it holds a 60GB folder of unique data (distributions of various operating systems and DBMSs). This folder exists in three copies on the same disk, for a total of 180GB of non-system data.

No applications were installed on these VMs, only a "clean" OS and "cold" data. No compute or network load was generated; this test did not require it.

DRS is enabled in the vSphere cluster, so the test VMs are automatically distributed across VMware ESXi™ hosts for load balancing.

Backup proxy

The backup proxy VM is deployed directly in the vSphere cluster described above (the source infrastructure, hereinafter the vSphere cluster); this is a prerequisite for testing in Virtual appliance mode.

VM configuration:

- 8 vCPU

- 8GB RAM

- 40GB vDisk

- 10GbE vNIC vmxnet3

- Windows Server 2016

The “Max concurrent tasks” parameter for the backup proxy is set to 6 at the VBR level. This means the backup proxy can process up to 6 backup tasks simultaneously. One task is the backup of one VM virtual disk.

Backup repository

The front end of the backup repository is a physical server that acts as a VBR backup repository. Server configuration:

- CPU: Intel® Xeon® E5-1650 v3

- 32GB RAM

- 2 × 10GbE ports

Backend storage: a CephFS cluster with NVMe cache.

The backup repository and the Ceph nodes communicate over a 10GbE network; each of them is connected to the switches with two ports.

A detailed description of the Ceph cluster configuration is beyond the scope of this article. Note that for reliability and fault tolerance, data is stored on it in three copies. The cluster's performance is satisfactory and was provisioned with headroom: in none of the tests was the backup storage a bottleneck.

The “Limit maximum concurrent tasks” parameter for the backup repository is set to 6 at the VBR level. This means the backup repository can process up to 6 backup tasks simultaneously.

Backup network

The physical network of the infrastructure described above is limited to 10 Gbit/s: 10GbE switches and ports are used throughout. This applies not only to the vSAN network but also to the management interfaces of the ESXi hosts.

To host the backup proxy, a dedicated subnet with its own logical switch was created at the VMware NSX level. To connect it to the physical network and provide routing, an X-Large NSX Edge is deployed.

Looking ahead, the test results show that the network can handle up to 8 Gbit/s. This is very solid bandwidth, sufficient at this stage, and it can be increased if necessary.
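The article reports network load in Gbit/s and backup speed in MB/s. A small conversion sketch makes the two comparable. It assumes decimal units (1 Gbit = 10^9 bits, 1 MB = 10^6 bytes) and also shows MiB/s, because the text does not say which convention the VBR console uses.

```python
def gbit_to_mb(gbit_per_s: float) -> float:
    """Convert Gbit/s to MB/s (decimal: 1 Gbit = 10**9 bit, 1 MB = 10**6 byte)."""
    return gbit_per_s * 1e9 / 8 / 1e6


def gbit_to_mib(gbit_per_s: float) -> float:
    """Convert Gbit/s to MiB/s (binary: 1 MiB = 2**20 byte)."""
    return gbit_per_s * 1e9 / 8 / 2**20


def mb_to_gbit(mb_per_s: float) -> float:
    """Convert MB/s (decimal) back to Gbit/s."""
    return mb_per_s * 1e6 * 8 / 1e9


for link in (8, 10):
    print(f"{link} Gbit/s = {gbit_to_mb(link):.0f} MB/s = {gbit_to_mib(link):.1f} MiB/s")

# Highest backup speed measured later in this article (HotAdd, 6 VMs).
print(f"888 MB/s = {mb_to_gbit(888):.2f} Gbit/s")
```

In decimal units, 8 Gbit/s is 1000 MB/s and the 888 MB/s peak backup speed is about 7.1 Gbit/s of payload.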

Component interaction scheme

The backup proxy and the test VMs are deployed within the same VMware vSAN cluster. After a backup job is started, the backup proxy, depending on the selected transport mode (discussed below):

- Retrieves data from the VMs being backed up over the vSAN network (HotAdd) or over the management network (NBD)

- Sends the processed data to the backup repository over a subnet dedicated to this purpose

Backup Proxy Transport Modes

The backup proxy is the VBR infrastructure component that actually processes a backup job. It extracts data from the VM, processes it (compression, deduplication, encryption), and sends it to the repository, where it is stored in backup files.

A backup proxy can operate in three transport modes:

- Direct storage access

- Virtual appliance

- Network

Selectel's VMware-based cloud uses vSAN as storage. Direct storage access is not supported in this configuration, so this mode was neither considered nor tested. The remaining two modes work fine on each of our vSphere clusters; let's look at them in more detail.
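By default, VBR selects the transport mode automatically; Veeam's documentation describes the preference order as Direct storage access, then Virtual appliance, then Network. That fallback order can be sketched as follows. The function and its two boolean inputs are hypothetical simplifications of the checks VBR actually performs.

```python
from enum import Enum


class TransportMode(Enum):
    DIRECT_STORAGE_ACCESS = "Direct storage access"
    VIRTUAL_APPLIANCE = "Virtual appliance (HotAdd)"
    NETWORK = "Network (NBD)"


def pick_transport_mode(direct_access_available: bool,
                        proxy_can_hotadd: bool) -> TransportMode:
    """Hypothetical sketch: try the fastest mode first, fall back to Network."""
    if direct_access_available:
        # Never true on vSAN, where Direct storage access is unsupported.
        return TransportMode.DIRECT_STORAGE_ACCESS
    if proxy_can_hotadd:
        # The proxy VM runs on a host that can access the VMs' datastores.
        return TransportMode.VIRTUAL_APPLIANCE
    # Always possible: read data through the ESXi management interface.
    return TransportMode.NETWORK


# A proxy VM inside the vSAN cluster vs. a physical proxy outside it.
print(pick_transport_mode(direct_access_available=False, proxy_can_hotadd=True).value)
print(pick_transport_mode(direct_access_available=False, proxy_can_hotadd=False).value)
```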

Virtual appliance mode (HotAdd)

Virtual appliance is the recommended mode when the backup proxy is deployed as a VM. The ESXi hosts on which backup proxies are deployed must have access to all datastores in the vSphere cluster that store the VMs being backed up. The idea of the mode is that the proxy mounts the disks of the VMs being backed up to itself (VMware SCSI HotAdd) and reads data from them as if they were its own disks. Data is retrieved from the datastore over the storage network.

In our case, the backup proxy VM must be located on one of the ESXi hosts of the vSAN cluster being backed up, and data is retrieved over the vSAN network. Thus, to work in Virtual appliance mode, at least one backup proxy must be deployed in each vSAN cluster. It is not possible to deploy a pair of backup proxies (for example, in a management cluster) and back up all vSAN clusters with them.
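The placement constraint above can be checked with a small helper. The cluster and proxy names are made up for illustration, and the sketch encodes only the rule stated here: HotAdd works only for VMs in a vSAN cluster that also hosts a backup proxy VM.

```python
def clusters_without_hotadd(vsan_clusters, proxy_locations):
    """Return the vSAN clusters that host no backup proxy VM.

    proxy_locations maps a proxy name to the cluster its VM runs in.
    VMs in the returned clusters cannot be backed up in HotAdd mode.
    """
    covered = set(proxy_locations.values())
    return sorted(c for c in vsan_clusters if c not in covered)


vsan_clusters = ["vsan-01", "vsan-02", "vsan-03"]  # hypothetical names

# A proxy pair in a management cluster covers none of the vSAN clusters.
print(clusters_without_hotadd(vsan_clusters, {"proxy-a": "mgmt", "proxy-b": "mgmt"}))
# One proxy per vSAN cluster covers all of them.
print(clusters_without_hotadd(vsan_clusters, {f"proxy-{c}": c for c in vsan_clusters}))
```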

| Pros | Cons |
|---|---|
| Fast: usually much faster than NBD, especially for full backups or large increments. Only Direct storage access can be faster. | Mounting a disk to the proxy (HotAdd) can take up to 2 minutes per disk. For incremental backups of small amounts of data, NBD may be faster. |
| Uses the storage network; does not load the ESXi management interface or the hypervisor. | The proxy VM consumes part of the host's resources. Occasionally there may be problems with removing snapshots. |
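The trade-off in the first row can be put into numbers with a rough model: HotAdd pays a fixed mount delay per disk and then reads faster, so below some amount of data per disk NBD wins. This is an illustration under simplifying assumptions, not a Veeam formula: it uses the worst-case 2-minute mount time from the table and the single-VM full-backup speeds measured later in this article (709 MB/s HotAdd, 192 MB/s NBD), and it ignores snapshot and other overhead common to both modes. Real incremental runs read scattered changed blocks and will see different rates.

```python
def hotadd_seconds(size_mb: float, rate_mb_s: float, mount_s: float) -> float:
    """Mount the disk on the proxy, then read it at the HotAdd rate."""
    return mount_s + size_mb / rate_mb_s


def nbd_seconds(size_mb: float, rate_mb_s: float) -> float:
    """No mount step: read the data at the NBD rate."""
    return size_mb / rate_mb_s


def break_even_mb(hotadd_rate: float, nbd_rate: float, mount_s: float) -> float:
    """Data per disk at which both modes take the same time."""
    return mount_s / (1 / nbd_rate - 1 / hotadd_rate)


HOTADD_RATE, NBD_RATE, MOUNT_S = 709, 192, 120  # assumptions, see lead-in

be = break_even_mb(HOTADD_RATE, NBD_RATE, MOUNT_S)
print(f"break-even: about {be / 1024:.0f} GB per disk")
for size_gb in (5, 100):
    size_mb = size_gb * 1024
    print(f"{size_gb} GB: HotAdd {hotadd_seconds(size_mb, HOTADD_RATE, MOUNT_S):.0f} s, "
          f"NBD {nbd_seconds(size_mb, NBD_RATE):.0f} s")
```

With these inputs the break-even point is roughly 31 GB per disk: smaller amounts of changed data favor NBD, larger ones favor HotAdd.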

Network Mode (NBD)

This is the simplest and most universal mode, suitable for both physical and virtual backup proxies. Unlike the other two modes, data is not extracted over the storage network: the backup proxy reads VM data by connecting to the management interface of the ESXi hosts on which the VMs run.

This approach has the following disadvantages:

- ESXi management interfaces are often not connected to the fastest uplinks; 1GbE is typical

- Even if the management interface has 10GbE ports, ESXi does not give the proxy the entire bandwidth: it artificially limits it and allocates only part of the interface bandwidth to backups

| Pros | Cons |
|---|---|
| Simple and versatile. The proxy can be physical or virtual. | As a rule, much slower than HotAdd, especially for large backup volumes and a small number of parallel tasks. |
| Starts quickly, with no delay for mounting disks. No problems with snapshots. | Creates a (small) load on the management interface and the hypervisor. |

Testing program

On the infrastructure described above, the test VMs are backed up while recording the following indicators:

- CPU load, %

- RAM consumption, GB

- Network load, Gbit/s

- Backup performance, MB/s

- Backup time, mm:ss

The indicators are recorded for a backup of one test VM and for parallel backups of two, four, and six test VMs.

The indicators are recorded for both the Virtual appliance and the Network transport modes. A full backup is performed every time, with no increments.

This requires four backup jobs:

- For one test VM

- For two test VMs

- For four test VMs

- For six test VMs

As part of the testing, we:

- Run all the jobs sequentially in one mode

- Delete the created backups so that there are no increments

- Repeat the runs in the second mode, recording the indicators each time
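The resulting test matrix (two modes × four jobs, eight full-backup runs in total) can be written down as a checklist generator. This is only a bookkeeping sketch: the proxy name is hypothetical, and the steps themselves are performed by hand in the VBR console as described here.

```python
MODES = ("Virtual appliance", "Network")
VM_COUNTS = (1, 2, 4, 6)
METRICS = ("CPU load, %", "RAM consumption, GB", "Network load, Gbit/s",
           "Backup performance, MB/s", "Backup time, mm:ss")


def test_plan(proxy: str = "test-proxy") -> list[str]:
    """Ordered checklist: all four jobs in one mode, clean up, then the other mode."""
    steps = []
    for mode in MODES:
        for vms in VM_COUNTS:
            steps.append(
                f"Job '{vms} VM': proxy={proxy}, transport mode={mode}, "
                f"full backup, record: {', '.join(METRICS)}"
            )
        # Removing the backups ensures the next runs are full, not incremental.
        steps.append(f"Delete the backups created in {mode} mode")
    return steps


for number, step in enumerate(test_plan(), start=1):
    print(f"{number}. {step}")
```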

In the settings of each job, the backup proxy prepared for testing must be selected manually: it is not the only proxy in the shared VBR infrastructure, and by default the proxy is selected automatically.

The backup proxy transport mode is also selected automatically by default. Therefore, before each run, we manually set the required transport mode in the backup proxy settings.

The most interesting indicator is the average backup speed (performance). It is shown in the job results in the VBR console, together with the backup time.

In addition, the load on the backup proxy must be estimated in each test. CPU, memory, and network utilization can be monitored with guest OS tools (Windows Server 2016) and at the VMware level.

For both the backup proxy and the backup repository, the maximum number of concurrent tasks is set to 6. This means that during testing all VMs in each job are processed in parallel, none of them waits in a queue, and performance is maximal.
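The claim that no task waits in the queue follows from a simple rule of thumb: each virtual disk is one task, and a task needs a free slot on both the proxy and the repository. The sketch below is a simplified model of that rule, not VBR's actual scheduler; the two-disk VMs in the last example are hypothetical.

```python
def task_slots(disks_per_vm: list[int], proxy_max_tasks: int = 6,
               repo_max_tasks: int = 6) -> tuple[int, int]:
    """Return (tasks running at once, tasks waiting in the queue).

    Simplified model: one task per virtual disk, and concurrency is capped
    by the smaller of the proxy and repository task limits.
    """
    tasks = sum(disks_per_vm)
    running = min(tasks, proxy_max_tasks, repo_max_tasks)
    return running, tasks - running


# The test VMs have one virtual disk each, so nothing is queued.
for vms in (1, 2, 4, 6):
    print(vms, "VM(s):", task_slots([1] * vms))

# Hypothetical: six VMs with two disks each would exceed the limit of 6.
print(task_slots([2] * 6))
```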

Veeam® recommends that the number of parallel tasks not exceed the number of processor cores in the proxy and the repository. The recommended amount of RAM in the repository is 2 GB per core, 12 GB in total. As the infrastructure configuration shows, all recommendations are met.
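These sizing rules reduce to a few comparisons. The 2 GB-per-core figure is the one quoted above; the repository core count of 6 is an assumption that matches the 12 GB total (the Xeon E5-1650 v3 is a 6-core CPU); the other inputs come from the configuration described earlier.

```python
RAM_GB_PER_CORE = 2  # repository RAM recommendation quoted in the text


def check_sizing(max_tasks: int, proxy_vcpus: int, repo_cores: int,
                 repo_ram_gb: int) -> dict[str, bool]:
    """Compare a proxy/repository configuration with the rules of thumb above."""
    required_ram_gb = RAM_GB_PER_CORE * repo_cores
    return {
        "proxy vCPUs >= concurrent tasks": proxy_vcpus >= max_tasks,
        "repository cores >= concurrent tasks": repo_cores >= max_tasks,
        f"repository RAM >= {required_ram_gb} GB": repo_ram_gb >= required_ram_gb,
    }


# Test setup: 6 tasks, 8-vCPU proxy, 6-core repository with 32 GB RAM.
for rule, ok in check_sizing(6, 8, 6, 32).items():
    print(f"{rule}: {'OK' if ok else 'FAIL'}")
```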

Backup speed and load in Virtual appliance mode (HotAdd)

Backup of 1 VM

Backup proxy load

| Indicator | Value |
|---|---|
| CPU load, % | 55-95 |
| RAM consumption, GB | 2-2.2 |
| Network load, Gbit/s | 4.7-6.4 |

Backup speed

| Indicator | Value |
|---|---|
| Backup performance, MB/s | 709 |
| Backup time, mm:ss | 06:35 |

Backup of 2 VMs

Backup proxy load

| Indicator | Value |
|---|---|
| CPU load, % | 70-100 (plateau at 100% with brief sharp drops to 70%) |
| RAM consumption, GB | 2.3-2.5 |
| Network load, Gbit/s | 5-7.7 |

Backup speed

| Indicator | Value |
|---|---|
| Backup performance, MB/s | 816 |
| Backup time, mm:ss | 10:03 |

Backup of 4 VMs

Backup proxy load

| Indicator | Value |
|---|---|
| CPU load, % | 100 (plateau at 100% with rare small drops) |
| RAM consumption, GB | 3-3.5 |
| Network load, Gbit/s | 5-8.2 |

Backup speed

| Indicator | Value |
|---|---|
| Backup performance, MB/s | 885 |
| Backup time, mm:ss | 17:10 |

Backup of 6 VMs

Backup proxy load

| Indicator | Value |
|---|---|
| CPU load, % | 100 (plateau at 100% with rare small drops) |
| RAM consumption, GB | 4-4.2 |
| Network load, Gbit/s | 5-8.2 |

Backup speed

| Indicator | Value |
|---|---|
| Backup performance, MB/s | 888 |
| Backup time, mm:ss | 24:42 |

Backup speed and load in Network mode (NBD)

Backup of 1 VM

Backup proxy load

| Indicator | Value |
|---|---|
| CPU load, % | 18-24 |
| RAM consumption, GB | 1.9-2.1 |
| Network load, Gbit/s | 1.2-1.8 |

Backup speed

| Indicator | Value |
|---|---|
| Backup performance, MB/s | 192 |
| Backup time, mm:ss | 18:30 |

Backup of 2 VMs

Backup proxy load

| Indicator | Value |
|---|---|
| CPU load, % | 25-33 |
| RAM consumption, GB | 2.2-2.4 |
| Network load, Gbit/s | 1.5-2.5 |

Backup speed

| Indicator | Value |
|---|---|
| Backup performance, MB/s | 269 |
| Backup time, mm:ss | 25:50 |

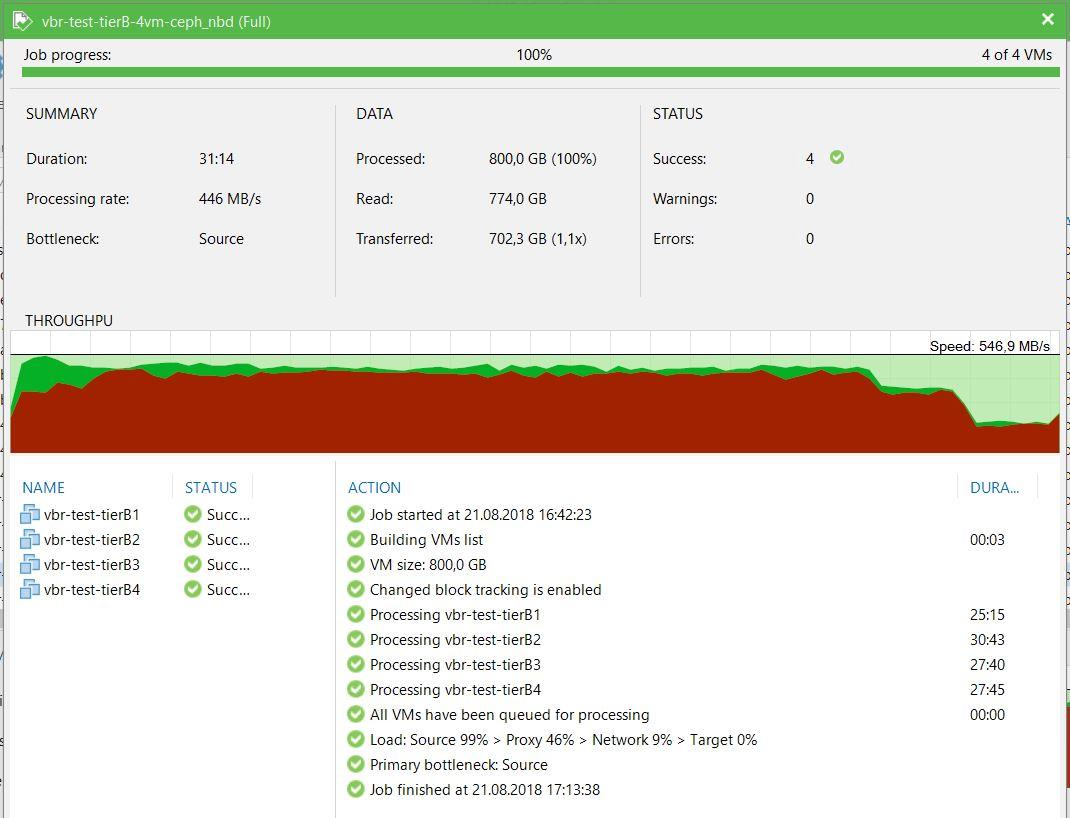

Backup of 4 VMs

Backup proxy load

| Indicator | Value |
|---|---|
| CPU load, % | 45-55 |
| RAM consumption, GB | 2.8-3.5 |
| Network load, Gbit/s | 2.8-4.5 |

Backup speed

| Indicator | Value |
|---|---|
| Backup performance, MB/s | 446 |
| Backup time, mm:ss | 31:14 |

Backup of 6 VMs

Backup proxy load

| Indicator | Value |
|---|---|
| CPU load, % | 50-70 |
| RAM consumption, GB | 3.5-4 |
| Network load, Gbit/s | 3.5-5 |

Backup speed

| Indicator | Value |
|---|---|
| Backup performance, MB/s | 517 |
| Backup time, mm:ss | 40:02 |

Comparison of performance and load in Virtual appliance (HotAdd) and Network (NBD) modes

| Number of VMs | Speed, HotAdd, MB/s | Speed, NBD, MB/s | HotAdd/NBD |
|---|---|---|---|
| 1 | 709 | 192 | 3.69 |
| 2 | 816 | 269 | 3.03 |
| 4 | 885 | 446 | 1.98 |
| 6 | 888 | 517 | 1.72 |

| Number of VMs | CPU load, HotAdd, % | CPU load, NBD, % | HotAdd/NBD |
|---|---|---|---|
| 1 | 55-95 | 18-24 | 3.06-3.96 |
| 2 | 70-100 | 25-33 | 2.8-3.03 |
| 4 | 100 | 45-55 | 1.82-2.22 |
| 6 | 100 | 50-70 | 1.43-2 |

| Number of VMs | RAM consumption, HotAdd, GB | RAM consumption, NBD, GB | HotAdd/NBD |
|---|---|---|---|
| 1 | 2-2.2 | 1.9-2.1 | 1.05 |
| 2 | 2.3-2.5 | 2.2-2.4 | 1.04-1.05 |
| 4 | 3-3.5 | 2.8-3.5 | 1-1.07 |
| 6 | 4-4.2 | 3.5-4 | 1.05-1.14 |

| Number of VMs | Network load, HotAdd, Gbit/s | Network load, NBD, Gbit/s | HotAdd/NBD |
|---|---|---|---|
| 1 | 4.7-6.4 | 1.2-1.8 | 3.56-3.92 |
| 2 | 5-7.7 | 1.5-2.5 | 3.08-3.33 |
| 4 | 5-8.2 | 2.8-4.5 | 1.79-1.82 |
| 6 | 5-8.2 | 3.5-5 | 1.43-1.64 |
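The HotAdd/NBD ratio columns can be recomputed from the per-mode figures. Judging by the published values, range ratios divide lows by lows and highs by highs and then list the smaller bound first; that convention is inferred from the numbers, not stated in the text. A minimal Python sketch for the speed and CPU tables:

```python
# (HotAdd, NBD) figures from the comparison tables; ranges are (low, high).
SPEED_MB_S = {1: (709, 192), 2: (816, 269), 4: (885, 446), 6: (888, 517)}
CPU_PCT = {1: ((55, 95), (18, 24)), 2: ((70, 100), (25, 33)),
           4: ((100, 100), (45, 55)), 6: ((100, 100), (50, 70))}


def speed_ratio(hotadd: float, nbd: float) -> float:
    """HotAdd/NBD speed ratio, rounded to two decimals."""
    return round(hotadd / nbd, 2)


def range_ratio(hotadd: tuple[float, float],
                nbd: tuple[float, float]) -> tuple[float, float]:
    """HotAdd/NBD ratio for two ranges: lows over lows, highs over highs, sorted."""
    a = hotadd[0] / nbd[0]
    b = hotadd[1] / nbd[1]
    return round(min(a, b), 2), round(max(a, b), 2)


for vms, (h, n) in SPEED_MB_S.items():
    print(vms, "VM(s): speed", speed_ratio(h, n), "CPU", range_ratio(*CPU_PCT[vms]))
```

The same `range_ratio` applies to the RAM and network tables, for example `range_ratio((4, 4.2), (3.5, 4))` for RAM with 6 VMs.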

Conclusions from the test results

The backup performance figures obtained in testing clearly confirm that Virtual appliance mode is significantly faster than Network mode, especially with a small number of parallel tasks.

Let me remind you that the tests for both modes were run under identical conditions on the same platform. The network bandwidth was also the same: the management interfaces through which the proxy collects data in NBD mode provide 10 Gbit/s, as does the vSAN network used in HotAdd mode, and we did not set any bandwidth limits.

Evidently, in Network mode ESXi throttles Veeam® and gives it only part of the bandwidth, hence the difference in backup speed. However, as the number of streams (simultaneous backup tasks) grows, Network mode significantly narrows the gap.

In Virtual appliance mode, the backup proxy is already CPU-bound at 4 VMs and cannot go faster; at 6 VMs the backup speed barely changes. At the same time, the backup speed for 1-2 VMs in this mode is only slightly lower: the capabilities of the backup proxy and the platform are used almost to the maximum even with a small number of streams.

In Network mode, by contrast, performance grows significantly as the number of simultaneous tasks increases. At the same time, the backup proxy CPU load is much lower than in HotAdd mode: even with 6 streams it does not exceed 70%.
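The difference in scaling can be quantified from the measured speeds alone: dividing each aggregate speed by the single-VM speed gives the speedup from parallelism, and dividing it by the VM count gives the average rate per VM. A short sketch using only figures from this article:

```python
# Aggregate backup speed, MB/s, by number of VMs backed up in parallel.
SPEED_MB_S = {
    "HotAdd": {1: 709, 2: 816, 4: 885, 6: 888},
    "NBD": {1: 192, 2: 269, 4: 446, 6: 517},
}


def scaling(mode: str) -> dict[int, tuple[float, int]]:
    """Per VM count: (speedup vs. a single VM, average MB/s per VM)."""
    runs = SPEED_MB_S[mode]
    single = runs[1]
    return {vms: (round(speed / single, 2), round(speed / vms))
            for vms, speed in runs.items()}


for mode in SPEED_MB_S:
    print(mode, scaling(mode))
```

With six parallel tasks NBD reaches roughly 2.7 times its single-task speed, while HotAdd gains only about 1.25 times, consistent with the proxy CPU saturating early in HotAdd mode.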

The backup proxy's RAM consumption is small and about the same in both modes.

The network load on the backup proxy correlates with the backup speed, exceeding it by ~10-17%. Apparently, the proxy receives data from the source VMs somewhat faster than it uploads data to the repository, since the data must first be processed.

It is interesting to look at the Load line in the screenshots of the job results. It shows the load on the various elements of the backup infrastructure: source, proxy, network, and repository.

In Virtual appliance mode, backup performance is limited by the proxy and the network, which are always loaded roughly equally. The source and the repository are not bottlenecks.

In Network mode, the source is always the bottleneck, even with a single stream. The other infrastructure elements could deliver more, but ESXi does not let them.

Summary

Testing confirmed that in practice the backup proxy behaves in the studied transport modes exactly as theory predicts.

Veeam® software performed very well:

- In HotAdd mode, the infrastructure's capabilities were used fully and efficiently.

- In NBD mode, performance is predictably more modest, but this is not a Veeam® problem; it is a feature of the ESXi network stack.

We obtained real performance and load figures, which are very useful for choosing the optimal mode of operation and for scaling the system later.

At the moment, we are quite satisfied with the current backup performance, and we understand how to increase it properly when the need arises.