Packages and package managers for k8s

We all use some kind of package managers, including the cleaning lady Aunt Galya, who has an iPhone in her pocket right now updated. But there is no general agreement on the functions of package managers, and the standard rpm and dpkg OSs and build systems are called package managers. We offer to reflect on the topic of their functions - what it is and why they are needed in the modern world. And then we will dig towards Kubernetes and carefully consider Helm in terms of these functions.

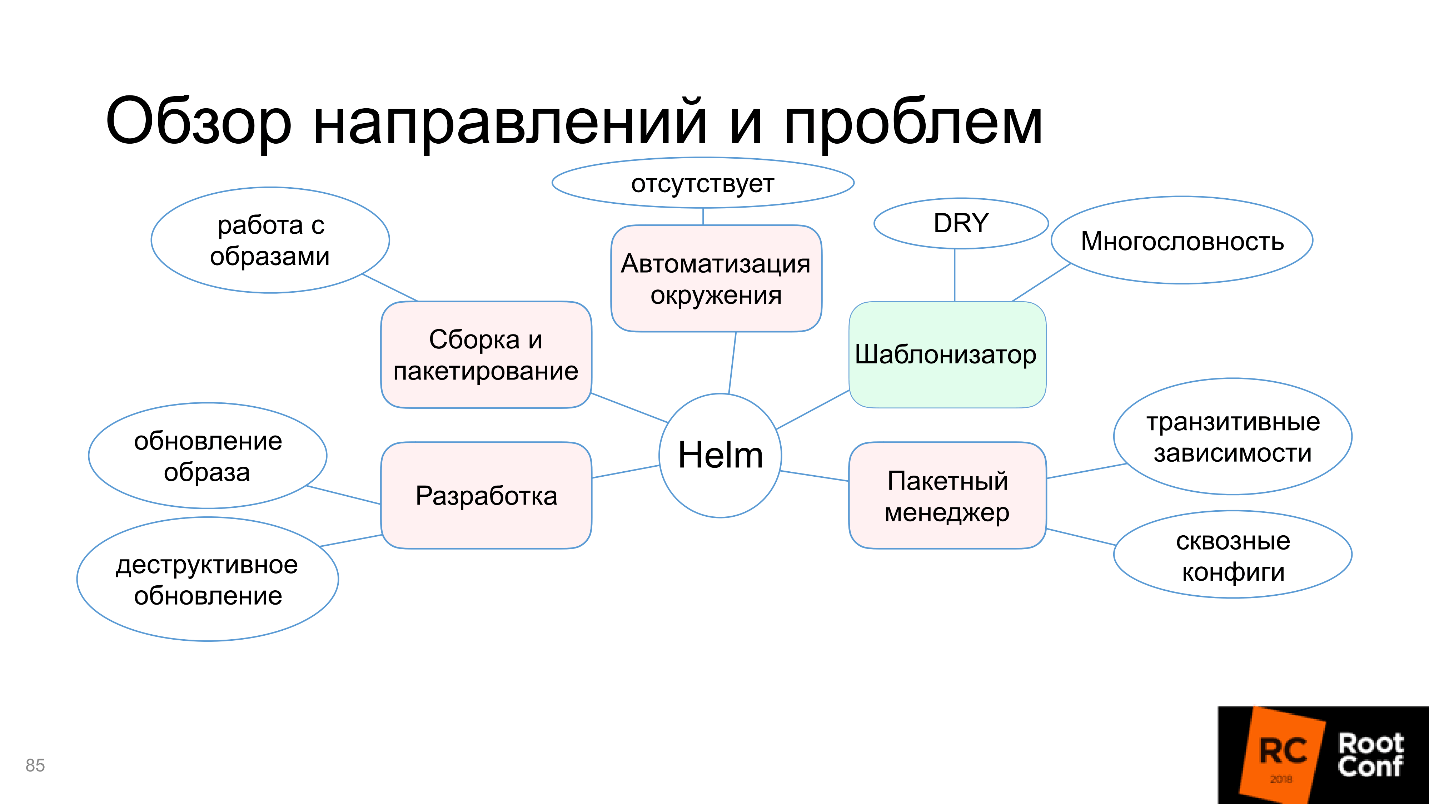

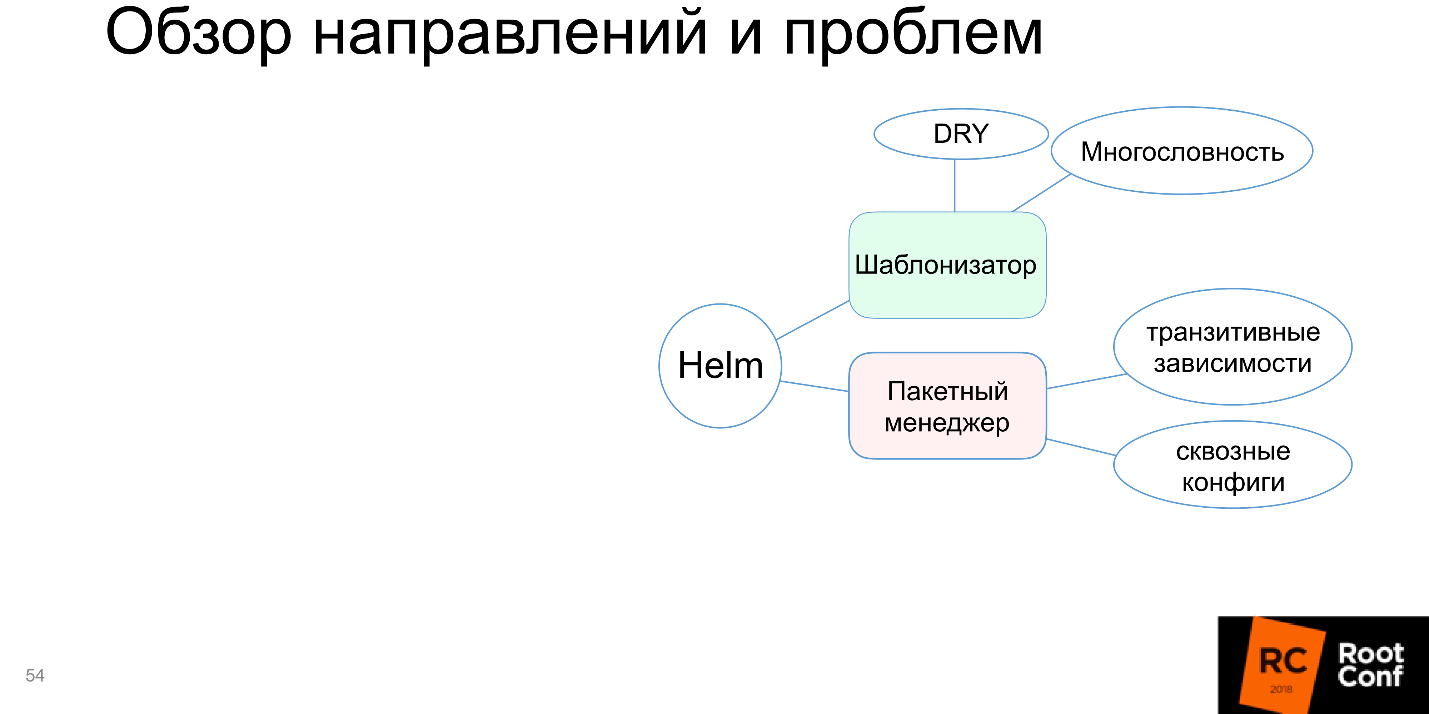

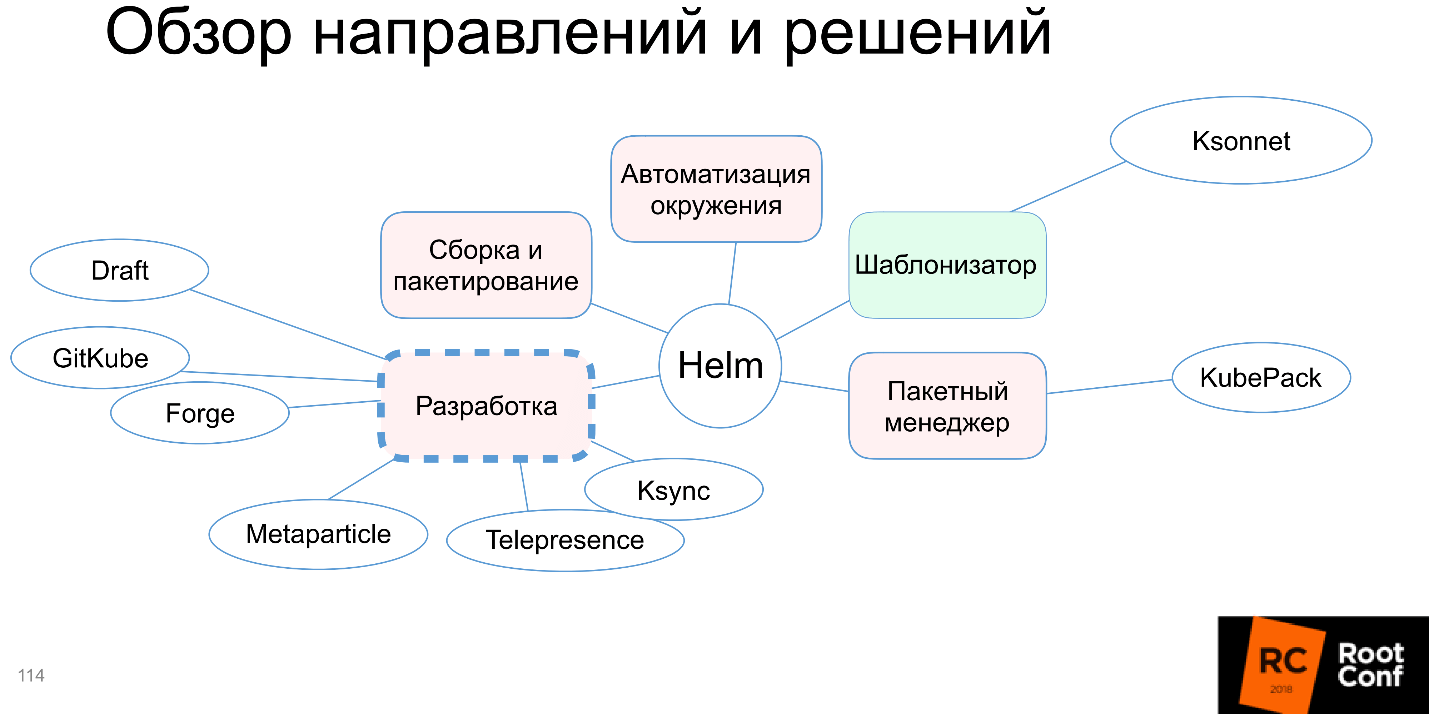

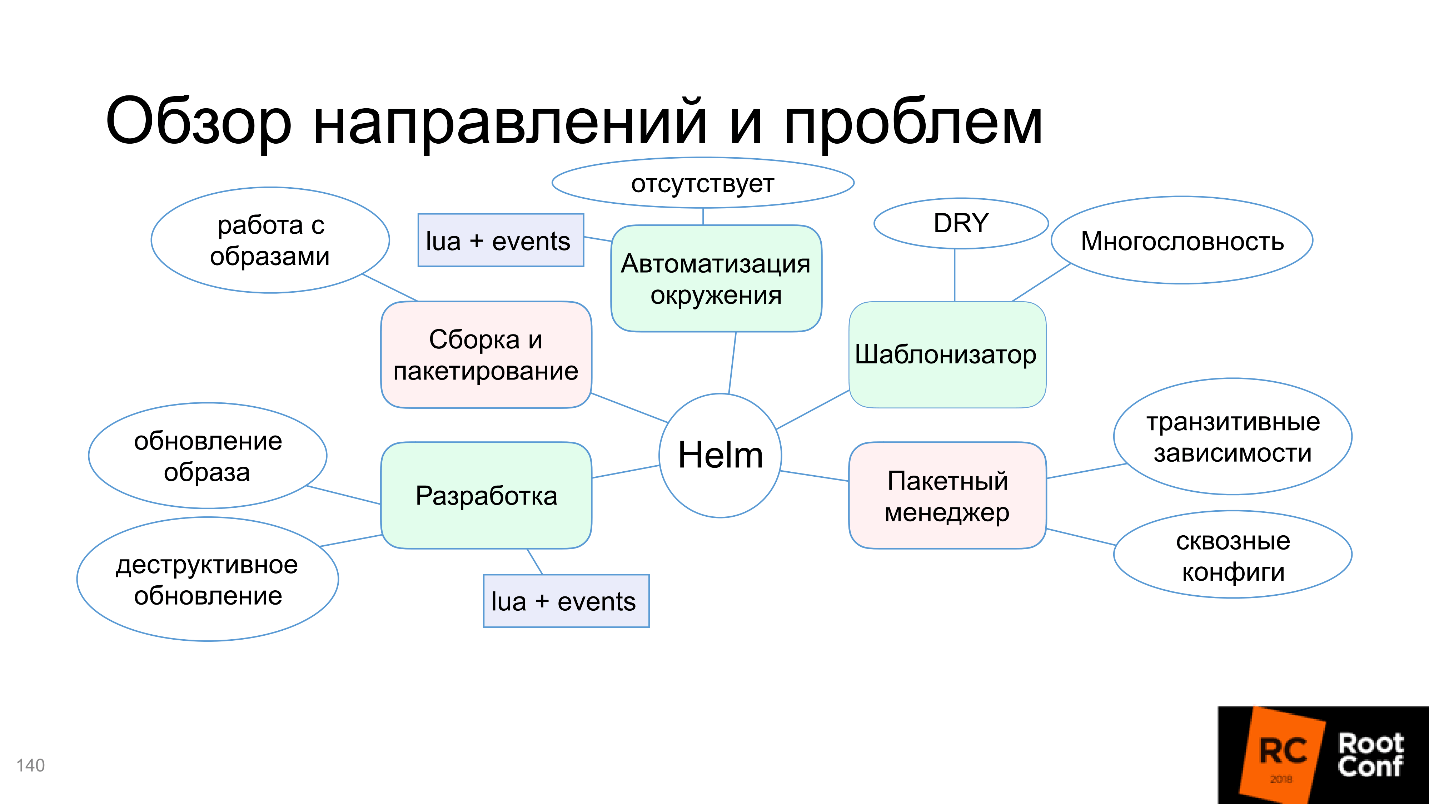

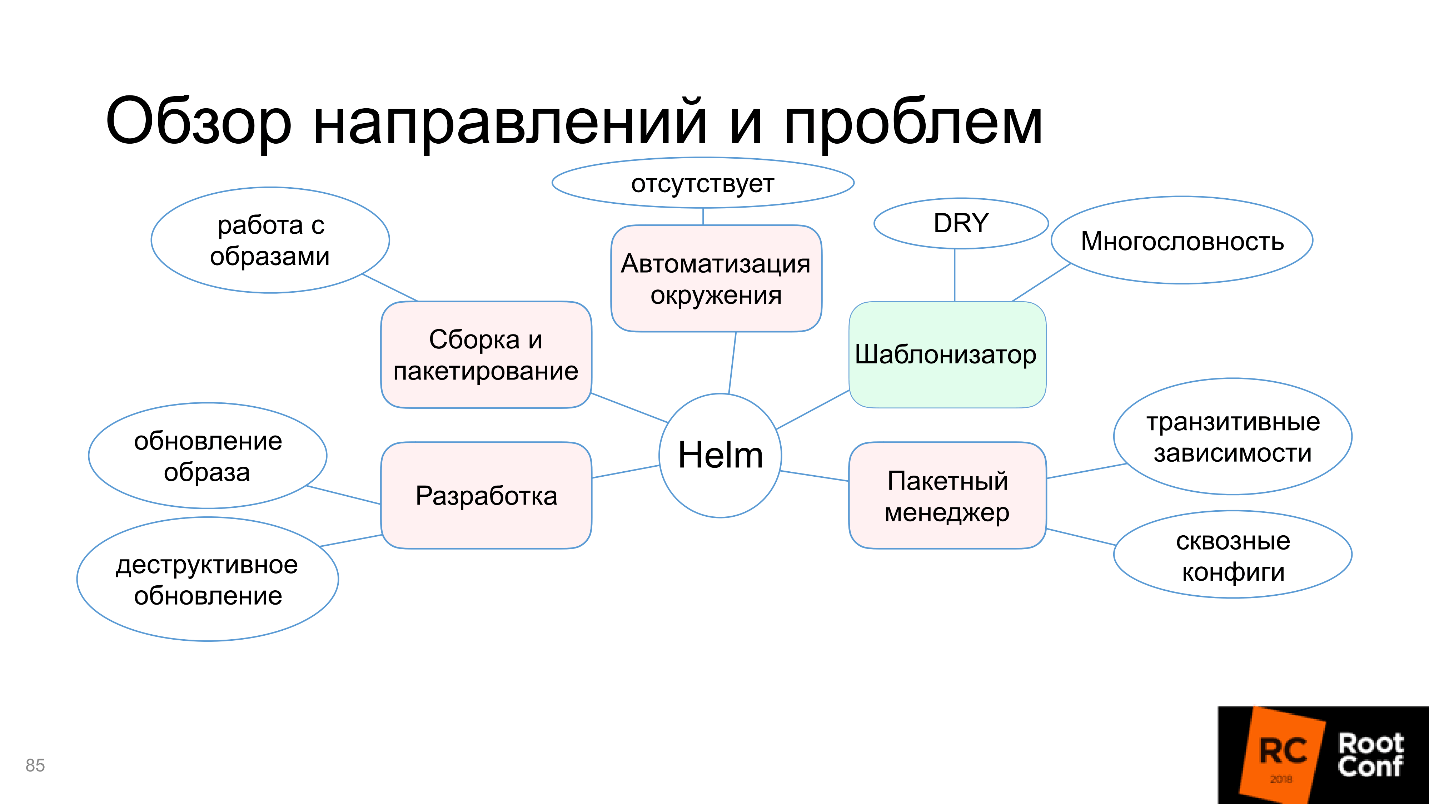

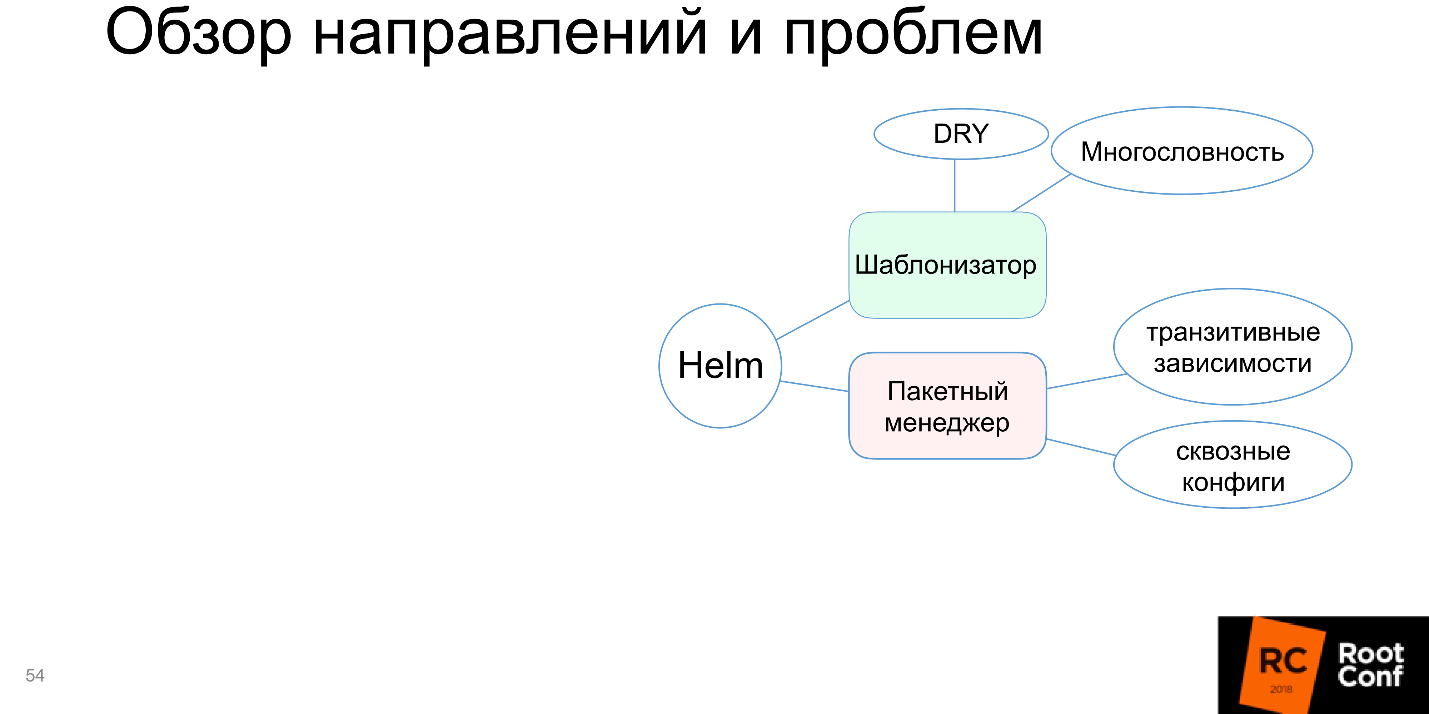

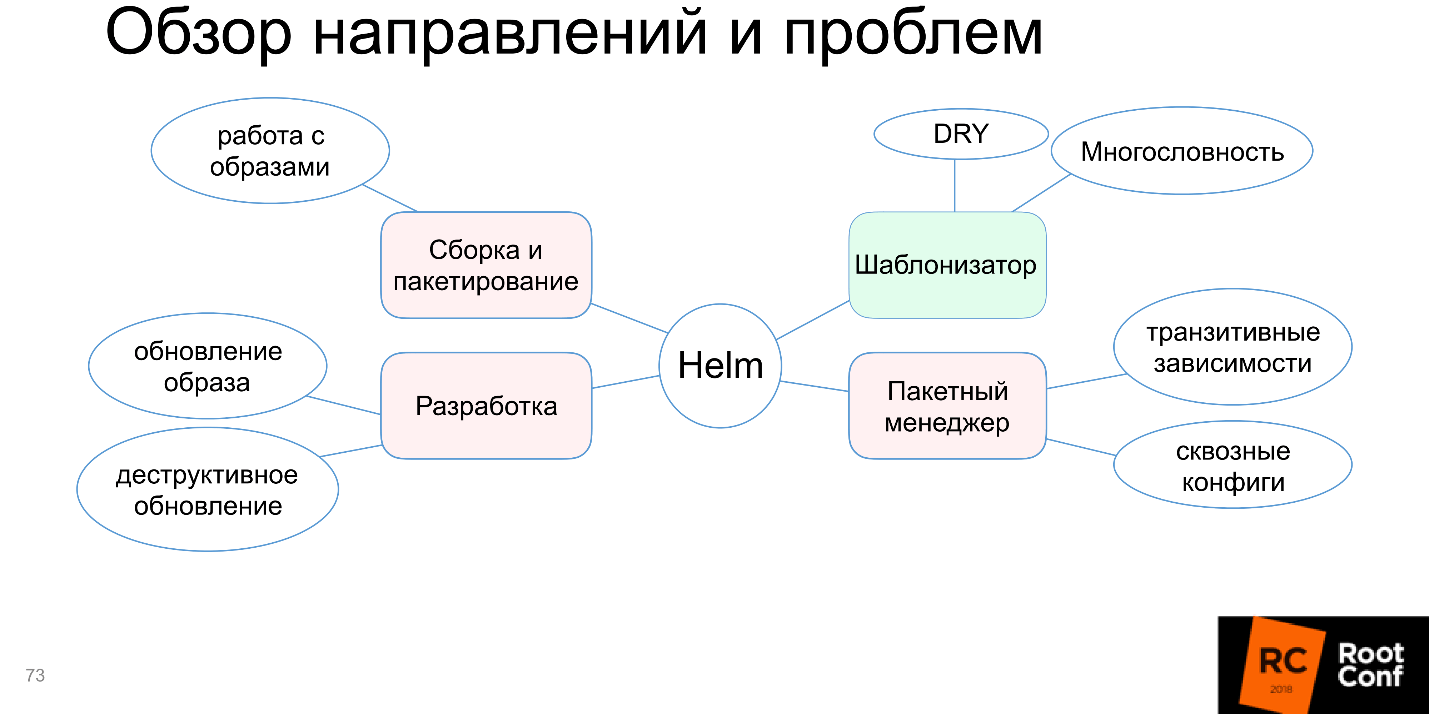

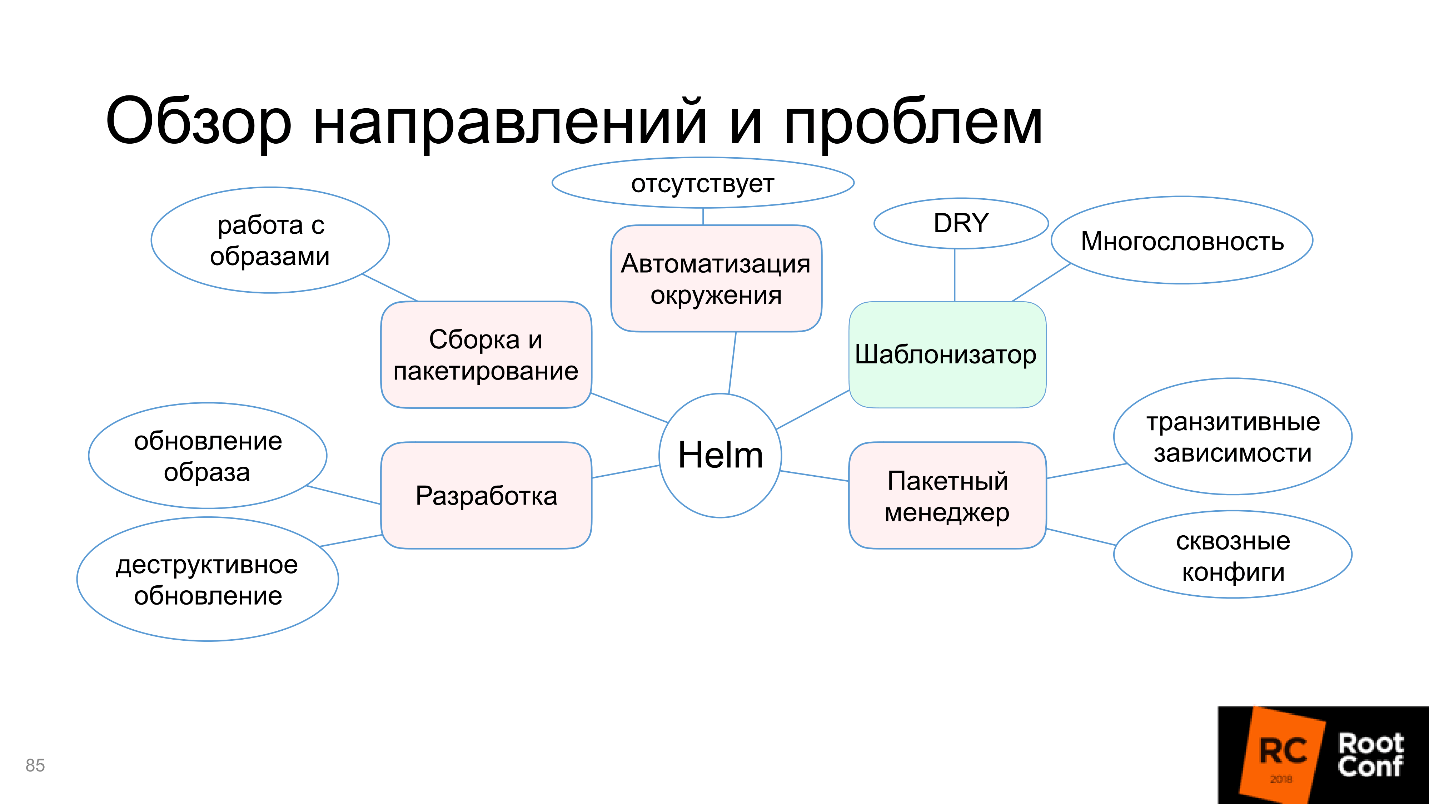

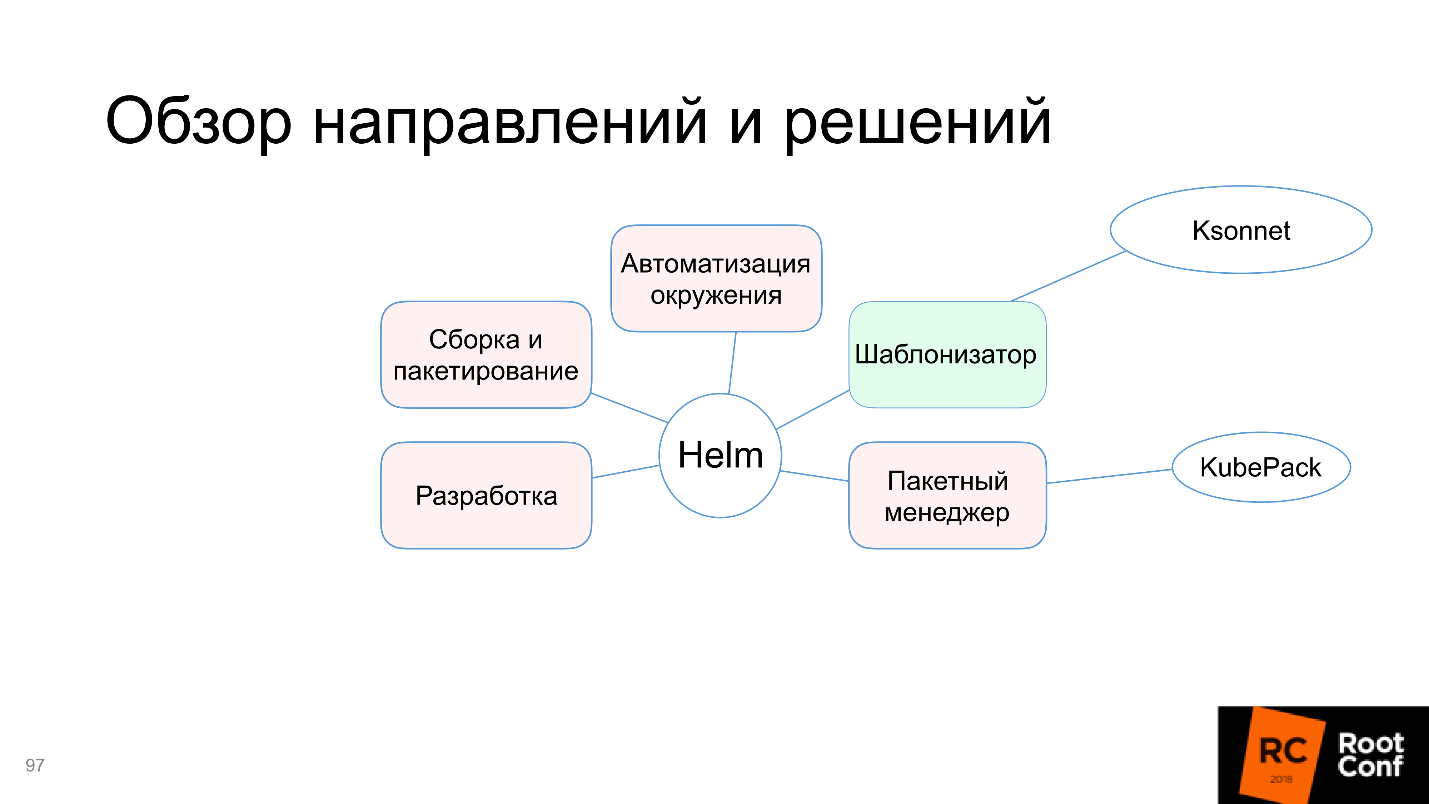

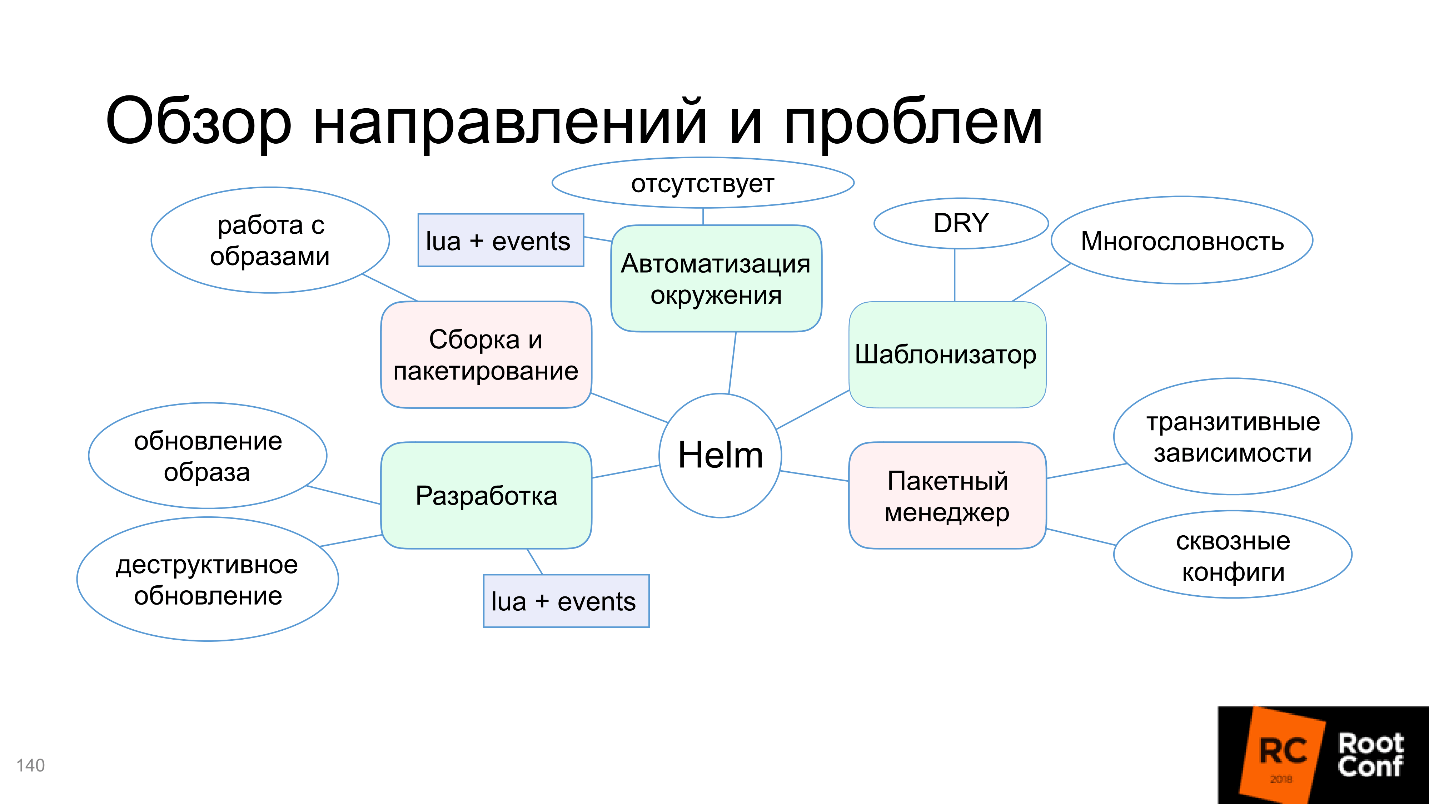

We will understand why in this diagram only the template function is highlighted in green, and what are the problems with assembly and packaging, automation of the environment, and more. But do not worry, the article does not end with the fact that everything is bad. The community could not come to terms with this and offers alternative tools and solutions - we will deal with them.

Ivan Glushkov ( gli ) helped us with this with his report on RIT ++, a video and text version of this detailed and detailed presentation below.

About the speaker: Ivan Glushkov has been developing software for 15 years. I managed to work in MZ, in Echo on a platform for comments, participate in the development of compilers for the Elbrus processor in MCST. He is currently involved in infrastructure projects at Postmates. Ivan is one of the leading DevZen podcast in which they talk about our conferences: here is about RIT ++, and here about HighLoad ++.

Package managers

Although everyone uses some kind of package managers, there is no single agreement on what it is. There is a common understanding, and each has its own.

Let's recall what types of package managers come to mind first:

Their main function is to execute commands for installing a package, updating a package, uninstalling a package, and managing dependencies. In package managers inside programming languages, things are a little more complicated. For example, there are commands like “launch a package” or “create a release” (build / run / release). It turns out that this is already a build system, although we also call it a package manager.

All this is only due to the fact that you can’t just take it and ... let Haskell lovers forgive this comparison. You can run the binary file, but you cannot run the program in Haskell or C, first you need to prepare it somehow. And this preparation is rather complicated, and users want everything to be done automatically.

Development

The one who worked with GNU libtool, which was made for a large project consisting of a large number of components, does not laugh at the circus. This is really very difficult, and some cases cannot be resolved in principle, but can only be circumvented.

Compared to it, modern package language managers like cargo for Rust are much more convenient - you press the button and everything works. Although in fact, under the hood, a large number of problems are solved. Moreover, all these new functions require something additional, in particular, a database. Although in the package manager itself it can be called whatever you like, I call it a database, because data is stored there: about installed packages, about their versions, connected repositories, versions in these repositories. All this must be stored somewhere, so there is an internal database.

Development in this programming language, testing for this programming language, launches - all this is built-in and located inside, the work becomes very convenient . Most modern languages have supported this approach. Even those who did not support begin to support, because the community presses and says that in the modern world it is impossible without it.

But any solution always has not only advantages, but also disadvantages . The downside here is that you need wrappers, additional utilities and a built-in “database”.

Docker

No matter how, but essentially yes. I do not know a more correct utility in order to completely put the application together with all the dependencies, and to make it work at the click of a button. What is this if not a package manager? This is a great package manager!

Maxim Lapshin has already said that with Docker it has become much easier, and this is so. Docker has a built-in build system, all of these databases, bindings, utilities.

What is the price of all the benefits? Those who work with Docker give little thought to industrial applications. I have such an experience, and the price is actually very high:

For example, I had a task to transfer one program to use Docker. The program was developed over the years by a team. I come, we do everything that is written in books: we paint users stories, roles, see what and how they do, their standard routines.

I say:

- Docker can solve all your problems. Watch how this is done.

- Everything will be on the button - great! But we want SSH to do inside Kubernetes containers.

- Wait, no SSH anywhere.

- Yes, yes, everything is fine ... But is SSH possible?

In order to turn the perception of users into a new direction, it takes a lot of time, it takes educational work and a lot of effort.

Another price factor is that the docker-registry - An external repository for images, it needs to be installed and controlled somehow. It has its own problems, a garbage collector and so on, and it can often fall if you do not follow it, but this is all solved.

Kubernetes

Finally we got to Kubernetes. This is a cool OpenSource application management system that is actively supported by the community. Although it originally left one company, Kubernetes now has a huge community, and it is impossible to keep up with it, there are practically no alternatives.

Interestingly, all Kubernetes nodes work in Kubernetes itself through containers, and all external applications work through containers - everything works through containers ! This is both a plus and a minus.

Kubernetes has a lot of useful functionality and properties: distribution, fault tolerance, the ability to work with different cloud services, and orientation towards microservice architecture. All this is interesting and cool, but how to install the application in Kubernetes?

How to install the app?

Behind this phrase lies an abyss. You imagine - you have an application written, say, in Ruby, and you must put the Docker image in the Docker registry. This means you must:

In fact, this is a big, big pain in one line.

Plus, you still need to describe the application manifest in terms (resources) of k8s. The easiest option:

Gandhi rubs his hands on the slide - it looks like I found the package manager in Kubernetes. But kubectl is not a package manager. He just says that I want to see such a final state of the system. This is not installing a package, not working with dependencies, not building - it's just "I want to see this final state."

Helm

Finally we came to Helm. Helm is a multi-purpose utility. Now we will consider what areas of development of Helm and work with it are.

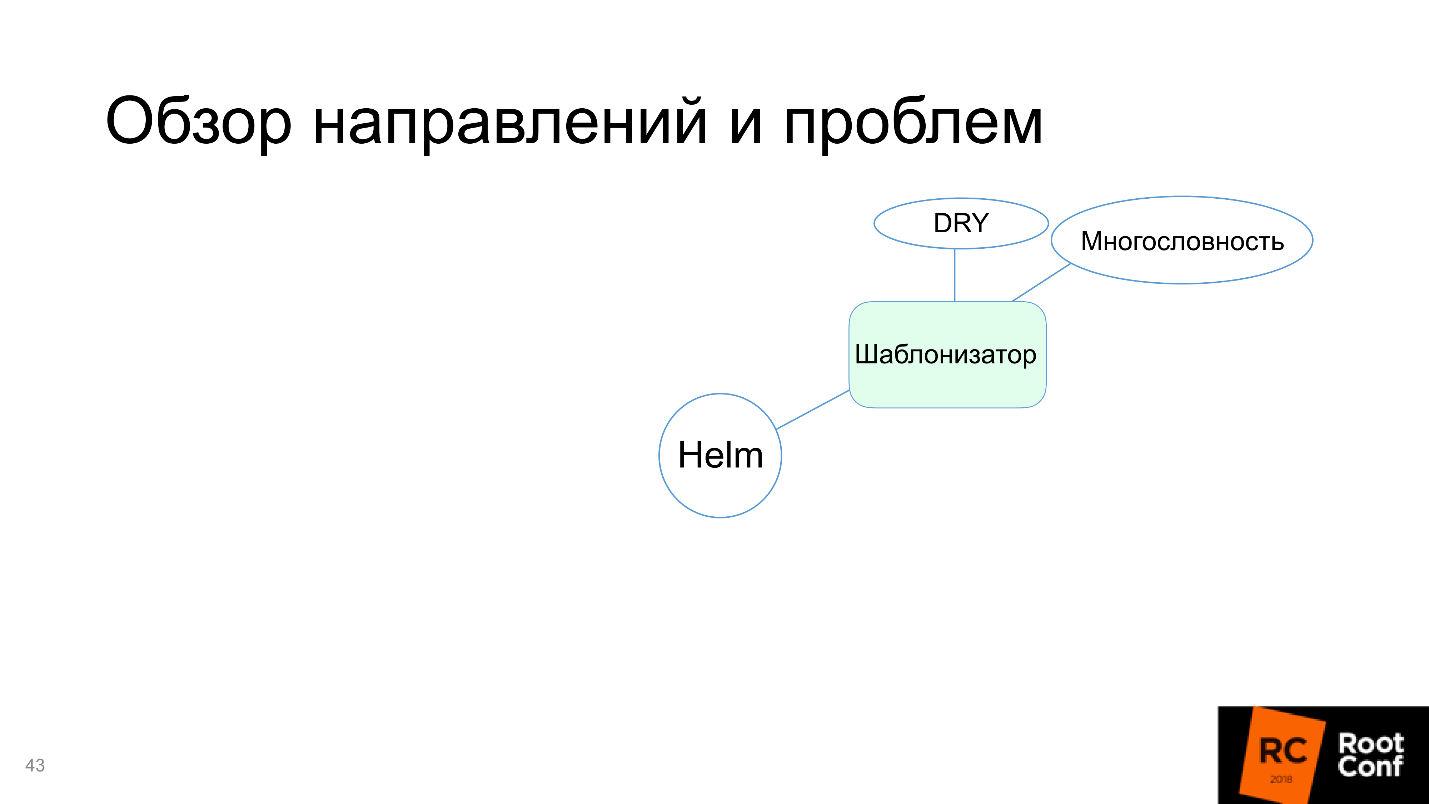

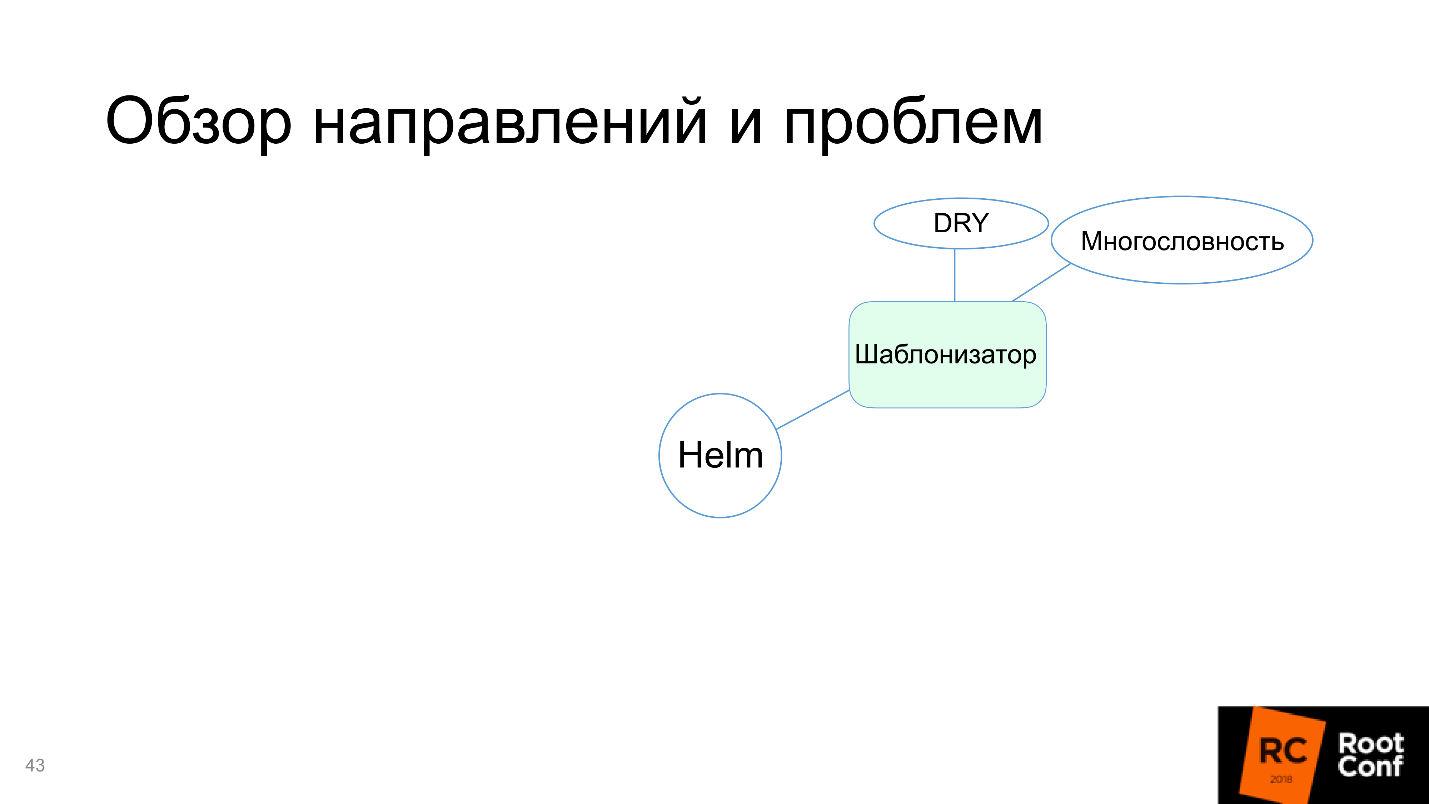

Template Engine

Firstly, Helm is a template engine. We discussed what resources need to be prepared, and the problem is to write in terms of Kubernetes (and not only in yaml). The most interesting thing is that these are static files for your specific application in this particular environment.

However, if you work with several environments and you have not only Production, but also Staging, Testing, Development and different environments for different teams, you need to have several similar manifests. For example, because in one of them there are several servers, and you need to have a large number of replicas, and in the other - only one replica. There is no database, access to RDS, and you need to install PostgreSQL inside. And here we have the old version, and we need to rewrite everything a bit.

All this diversity leads to the fact that you have to take your manifest for Kubernetes, copy it everywhere and fix it everywhere: here replace one digit, here is something else. This is becoming very uncomfortable.

The solution is simple - you need to enter templates . That is, you form a manifest, define variables in it, and then submit the variables defined externally as a file. The template creates the final manifest. It turns out to reuse the same manifest for all environments, which is much more convenient.

For example, the manifest for Helm.

The simplest startup command for installing chart is helm install ./wordpress (folder). To redefine some parameters, we say: "I want to redefine precisely these parameters and set such and such values."

Helm copes with this task, so in the diagram we mark it green.

True, cons appear:

Before plunging into the direction of Helm - a package manager, for which I tell you all this, let's see how Helm works with dependencies.

Work with dependencies

Helm is difficult to work with dependencies. Firstly, there is a requirements.yaml file that fits into what we depend on. While working with requirements, he does requirements.lock - this is the current state (nugget) of all the dependencies. After that, he downloads them to a folder called / charts.

There are tools to manage: whom, how, where to connect - tags and conditions , with which it is determined in which environment, depending on what external parameters, to connect or not to connect some dependencies.

Let's say you have PostgreSQL for the Staging environment (or RDS for Production, or NoSQL for tests). By installing this package in Production, you will not install PostgreSQL, because it is not needed there - just using tags and conditions.

What is interesting here?

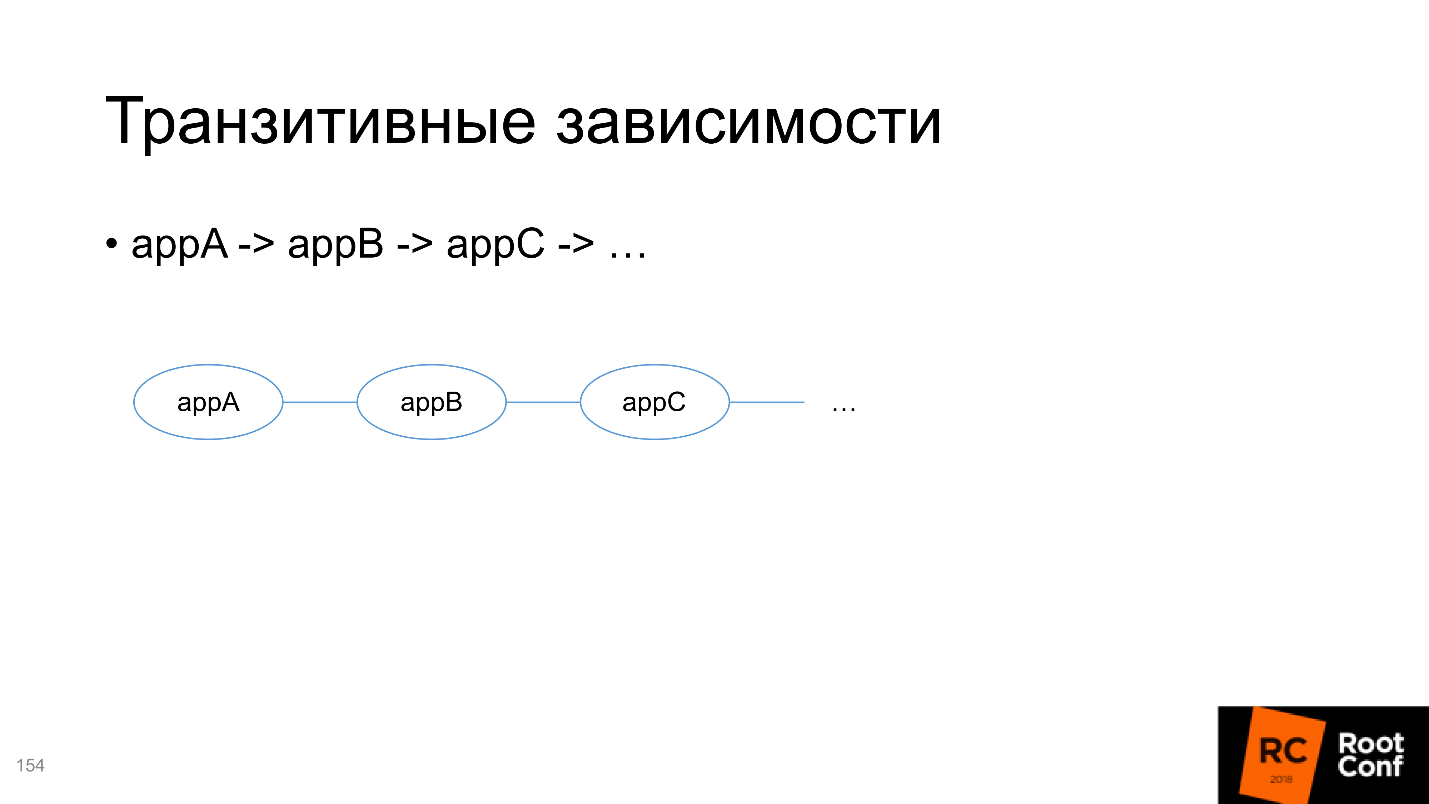

After we have downloaded all the dependencies in / charts (these dependencies may be, for example, 100), Helm takes and copies all the resources inside. After he rendered the templates, he collects all the resources in one place and sorts in some sort of his own order. You cannot influence this order. You must determine for yourself what your package depends on, and if the package has transitive dependencies, then you need to include all of them in the description in requirements.yaml. This must be borne in mind.

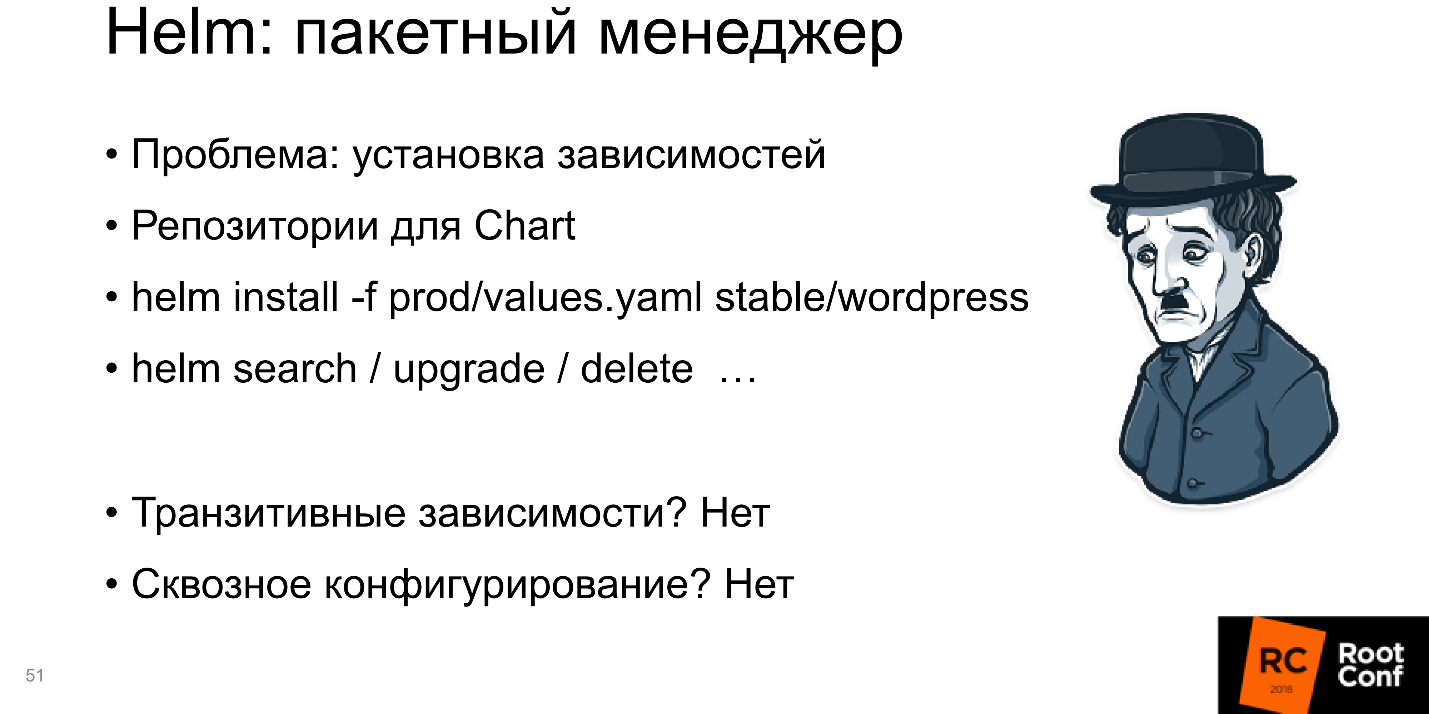

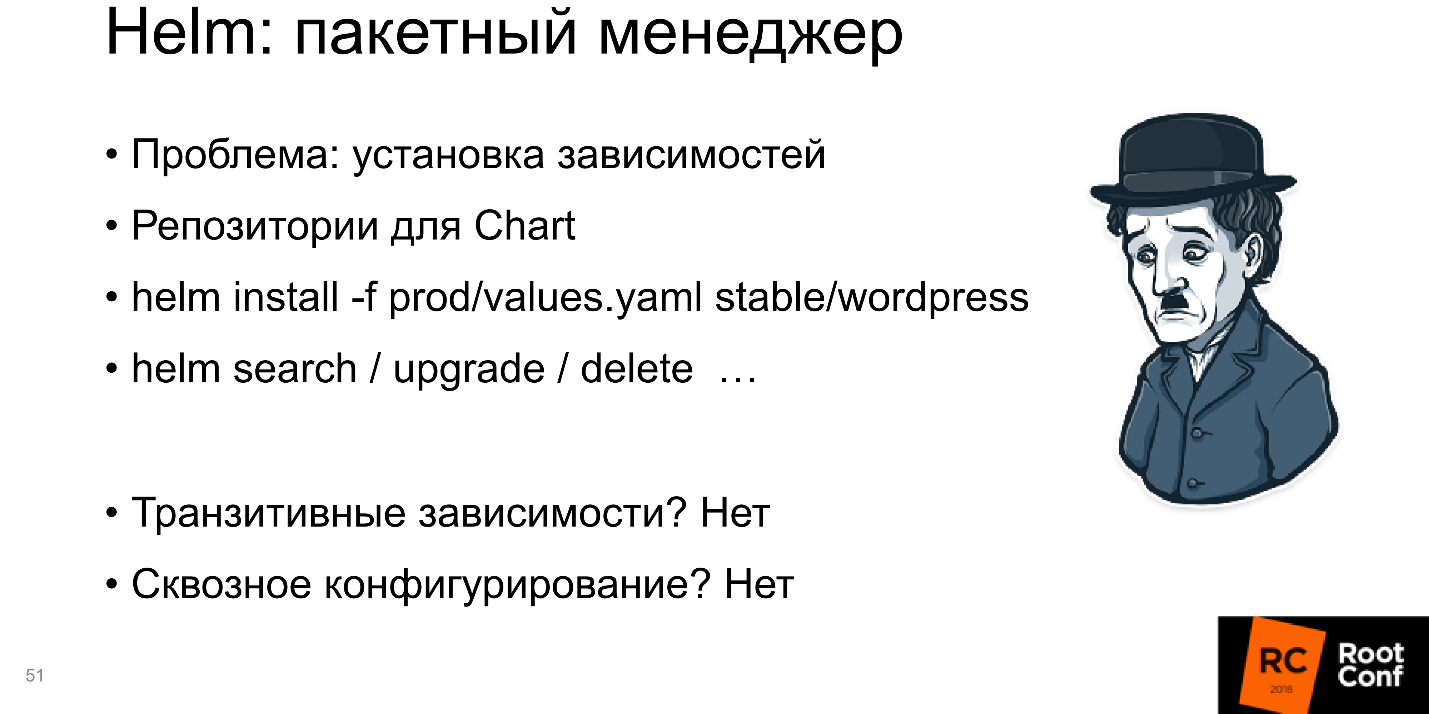

Package manager

Helm installs applications and dependencies, and you can tell Helm install - and it will install the package. So this is a package manager.

At the same time, if you have an external repository where you upload the package, you can access it not as a local folder, but simply say: “From this repository, take this package, install it with such and such parameters.”

There are open repositories with lots of packages. For example, you can run: helm install -f prod / values.yaml stable / wordpress

From the stable repository, you will take wordpress and install it on you. You can do everything: search / upgrade / delete. It turns out, really, Helm is a package manager.

But there are cons: all transitive dependenciesmust be included inside. This is a big problem when transitive dependencies are independent applications and you want to work with them separately for testing and development.

Another minus is end-to - end configuration . When you have a database and its name needs to be transferred to all packages, this can be, but it is difficult to do.

More often than not, you have installed one small packet and it works. The world is complex: the application depends on the application, which in turn also depends on the application - you need to configure them somehow cleverly. Helm does not know how to support this, or supports it with big problems, and sometimes you have to dance a lot with a tambourine to make it work. This is bad, so the “package manager" in the diagram is highlighted in red.

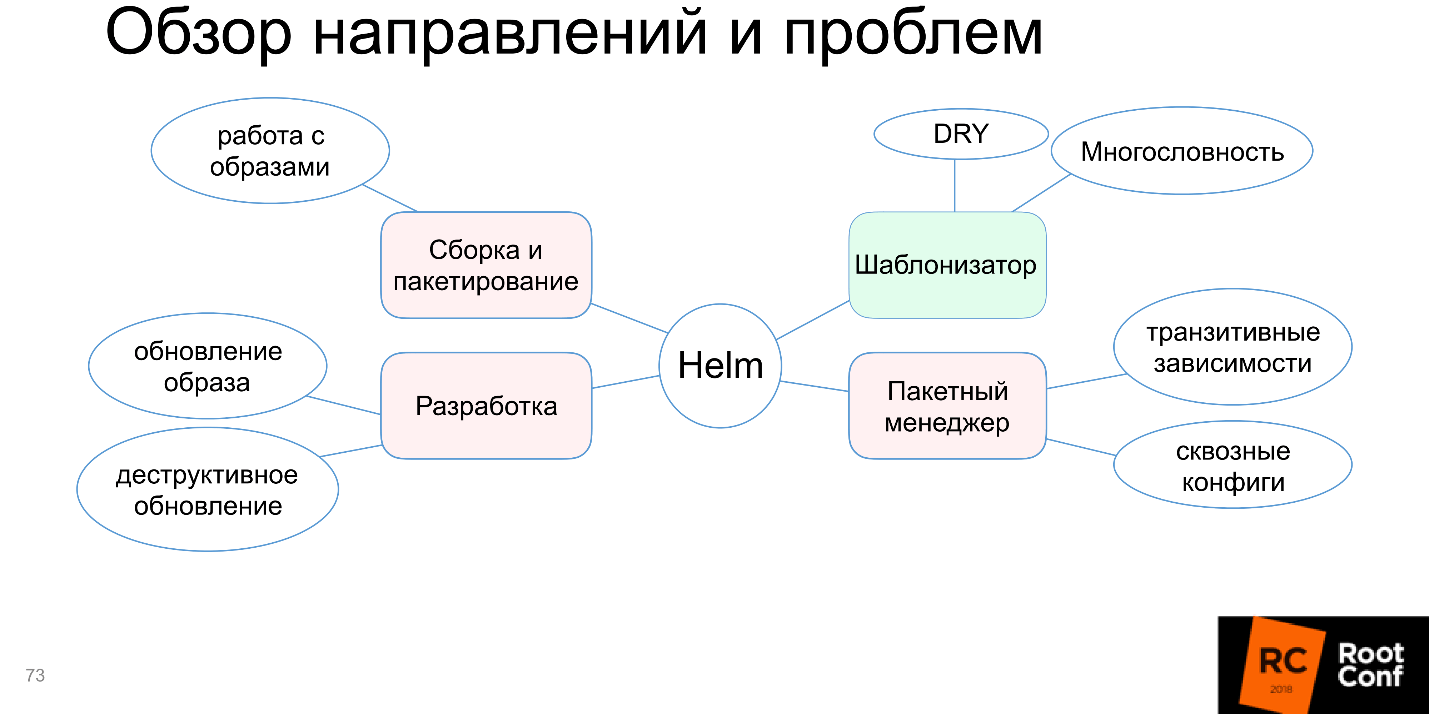

Assembly and packaging

“You can’t just get and run” the application in Kubernetes. You need to assemble it, that is, make a Docker image, write it to the Docker registry, etc. Although the whole package definition in Helm is. We determine what the package is, what functions and fields should be there, signatures and authentication (your company’s security system will be very happy). Therefore, on the one hand, assembly and packaging seem to be supported, and on the other hand, work with Docker images is not configured.

This is the same as if, to make an upgrade install for some small library, you would be sent to a distant folder to run the compiler.

Therefore, we say that Helm does not know how to work with images.

Development

The next headache is development. In development, we want to quickly and conveniently change our code. The time has passed when you punched holes on punch cards, and the result was obtained after 5 days. Everyone is used to replacing one letter with another in the editor, pressing compilation, and the already modified program works.

It turns out here that when changing the code, a lot of additional actions are needed: prepare a Docker file; Run Docker so that it builds the image; to push it somewhere; deploy to Kubernetes cluster. And only then you will get what you want on Production, and you can check the code.

There are still inconveniences due to destructive updateshelm upgrade. You looked at how everything works, through kubectl exec you looked inside the container, everything is fine. At this point, you start the update, a new image is downloaded, new resources are launched, and the old ones are deleted - you need to start everything from the very beginning.

The biggest pain is big images. Most companies do not work with small applications. Often, if not a supermonolith, then at least a small monolithic. Over time, annual rings grow, the code base increases, and gradually the application becomes quite large. I have repeatedly come across Docker images larger than 2 GB. Imagine now that you are making a change in one byte in your program, press a button, and a two-gigabyte Docker image begins to assemble. Then you press the next button, and the transfer of 2 GB to the server begins.

Docker allows you to work with layers, i.e. checks if there is one layer or another and sends the missing one. But the world is such that most often it will be one large layer. While 2 GB will go to the server, while they will go to Kubernetes with the Docker-registry, they will be rolled out in all ways, until you finally start, you can safely have tea.

Helm does not offer any help with large Docker images. I believe that this should not be, but Helm developers know better than all users, and Steve Jobs smiles at it.

The block with the development also turned red.

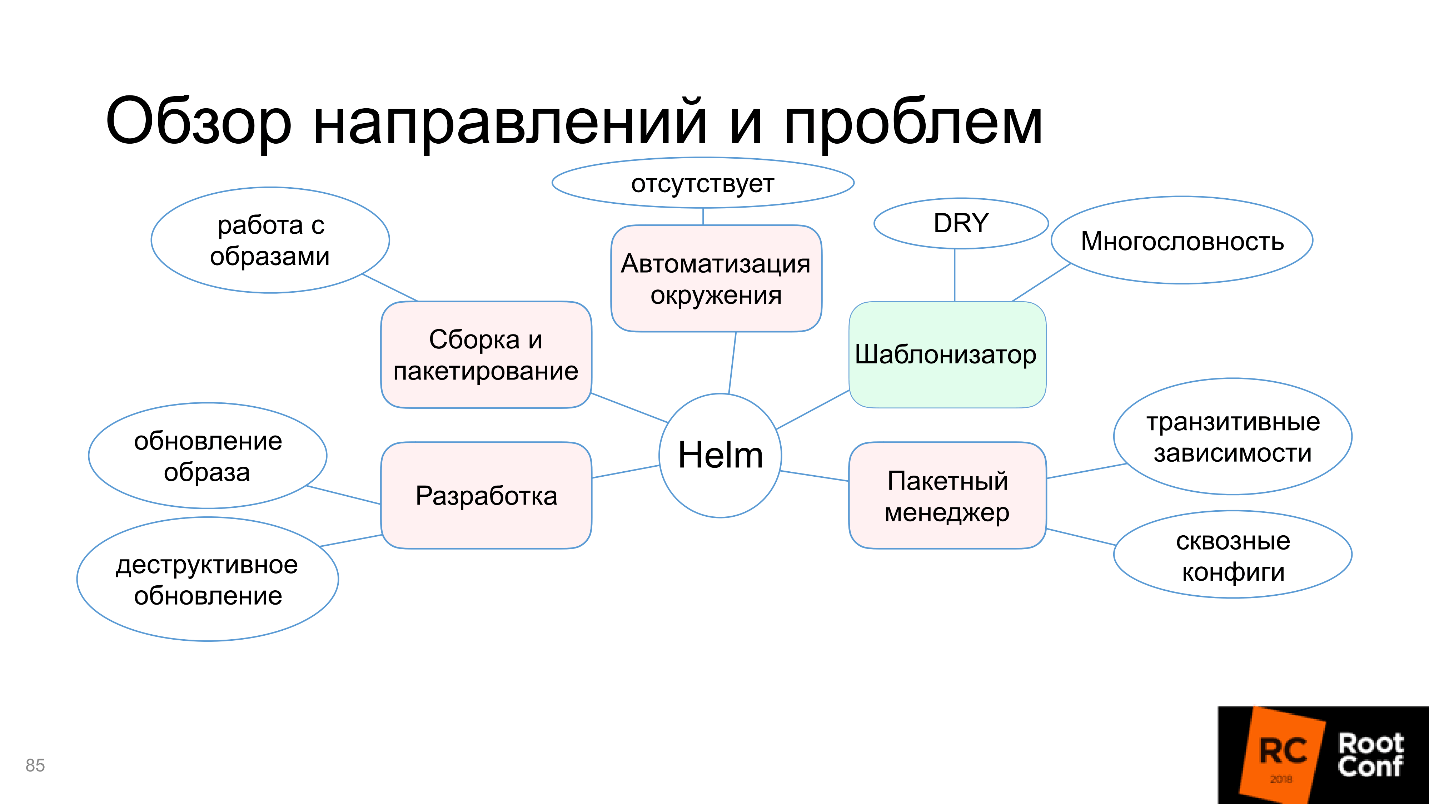

Environment Automation

The last direction - automation of the environment - is an interesting area. Before the world of Docker (and Kubernetes, as a related model), it was not possible to say: “I want to install my application on this server or on these servers so that there are n replicas, 50 dependencies, and it all works automatically!” Such, one might say, what was, but was not!

Kubernetes provides this and it’s logical to use it somehow, for example, to say: “I’m deploying a new environment here and I want all the development teams that prepared their applications to just be able to click a button and all these applications would be automatically installed in the new environment” . Theoretically, Helm should help in this, so that the configuration can be taken from an external data source - S3, GitHub - from anywhere.

It is advisable that there is a special button in Helm “Do me good already finally!” - and it would immediately become good. Kubernetes allows you to do this.

This is especially convenient because Kubernetes can be run anywhere, and it works through the API. By launching minikube locally, either in AWS or in the Google Cloud Engine, you get Kubernetes right out of the box and work the same everywhere: press a button and everything is fine right away.

It would seem that naturally Helm allows you to do this. Because otherwise, what was the point of creating Helm in general?

But it turns out, no!

There is no automation of the environment.

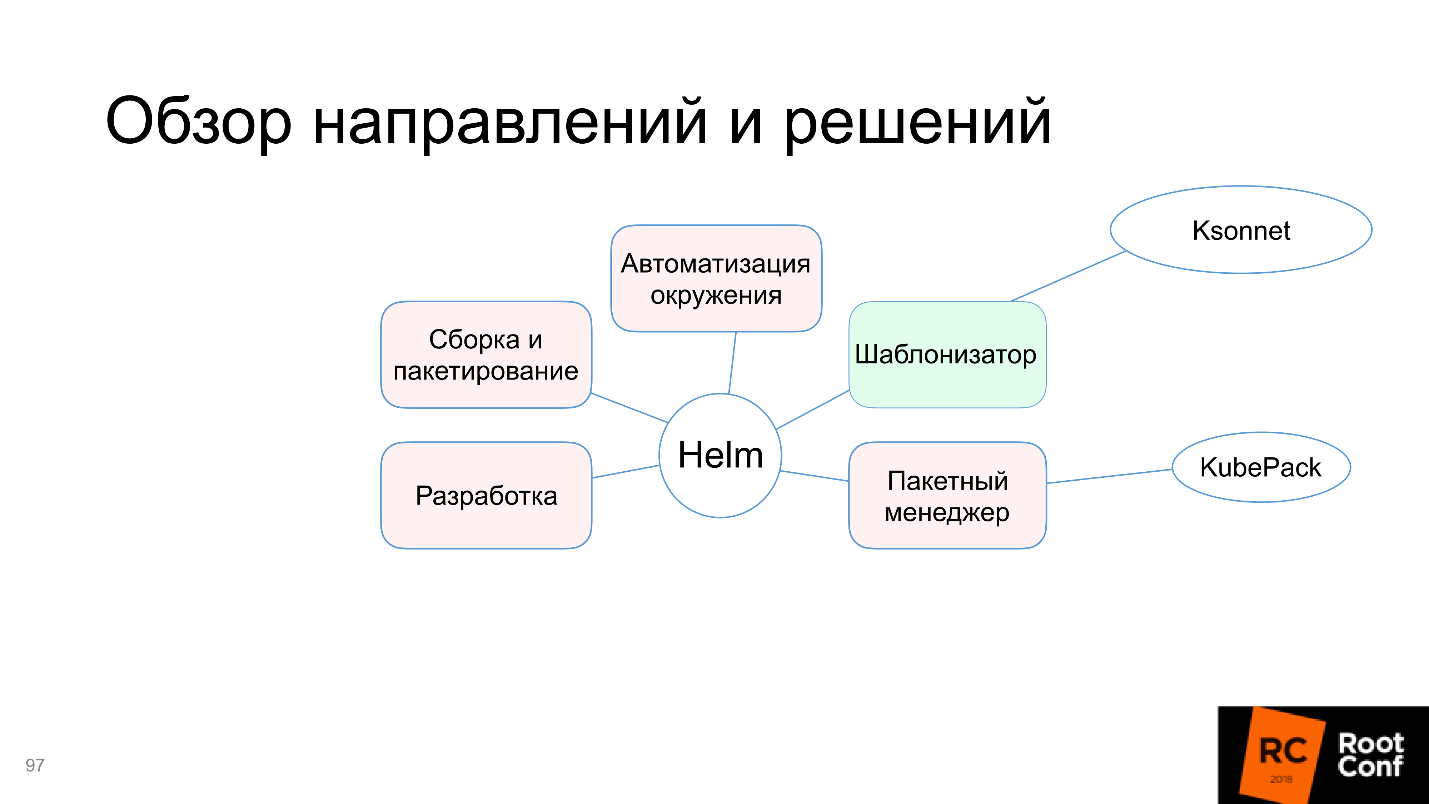

Alternatives

When there is an application from Kubernetes that everyone uses (it is now in fact the solution number 1), but Helm has the problems discussed above, the community could not help but respond. It began to create alternative tools and solutions.

Template engines

It would seem, as a template engine, Helm solved all the problems, but still the community creates alternatives. Let me remind you the problems of the template engine: verbosity and code reuse.

A good representative here is Ksonnet. It uses a fundamentally different model of data and concepts, and does not work with Kubernetes resources, but with its own definitions:

prototype (params) -> component -> application -> environments.

There are parts that make up the prototype. The prototype is parameterized by external data, and component appears. Several components make up an application that you can run. It runs in different environments. There are some clear links to Kubernetes resources here, but there may not be a direct analogy.

The main goal of Ksonnet was, of course, to reuse resources . They wanted to make sure that once you wrote the code, you could later use it anywhere, which increases the speed of development. If you create a large external library, people can constantly post their resources there, and the entire community will be able to reuse them.

Theoretically, this is convenient. I practically did not use it.

Package managers

The problem here, as we recall, is nested dependencies, end-to-end configs, transitive dependencies. Ksonnet does not solve them. Ksonnet has a very similar model to Helm, which in the same way defines a list of dependencies in a file, it is uploaded to a specific directory, etc. The difference is that you can make patches, that is, you are preparing a folder in which to put patches for specific packages.

When you upload a folder, these patches are superimposed, and the result obtained by merging several patches can be used. Plus there is validation of configurations for dependencies. It may be convenient, but it is still very crude, there is almost no documentation, and the version froze at 0.1. I think it's too early to use it.

So the package manager is KubePack, and other alternatives I have not seen.

Development

Solutions fall into several different categories:

1. Development on top of Helm

A good representative is Draft . Its purpose is the ability to try the application before the code is commited, that is, to see the current state. Draft uses the programming method - Heroku-style:

This can be done in any directory with code, everything seems to be fast, easy and good.

But in the future, it’s better to start working with Helm anyway, because Draft creates Helm resources, and when your code reaches the production ready state, you should not hope that Draft will create Helm resources well. You will still have to create them manually.

It turns out that Draft is needed to quickly start and try at the very beginning before you write at least one Helm resource. Draft is the first contender for this direction.

2. Development without Helm

Development without Helm Charts involves building the same Kubernetes manifests that would otherwise be built through Helm Charts. I offer three alternatives:

They are all very similar to Helm, the differences are in small details. In particular, part of the solutions assumes that you will use the command line interface, and Chart assumes that you will do git push and manage hooks.

In the end, you still run docker build, docker push and kubectl rollout anyway. All the problems that we listed for Helm cannot be solved. It is simply an alternative with the same drawbacks.

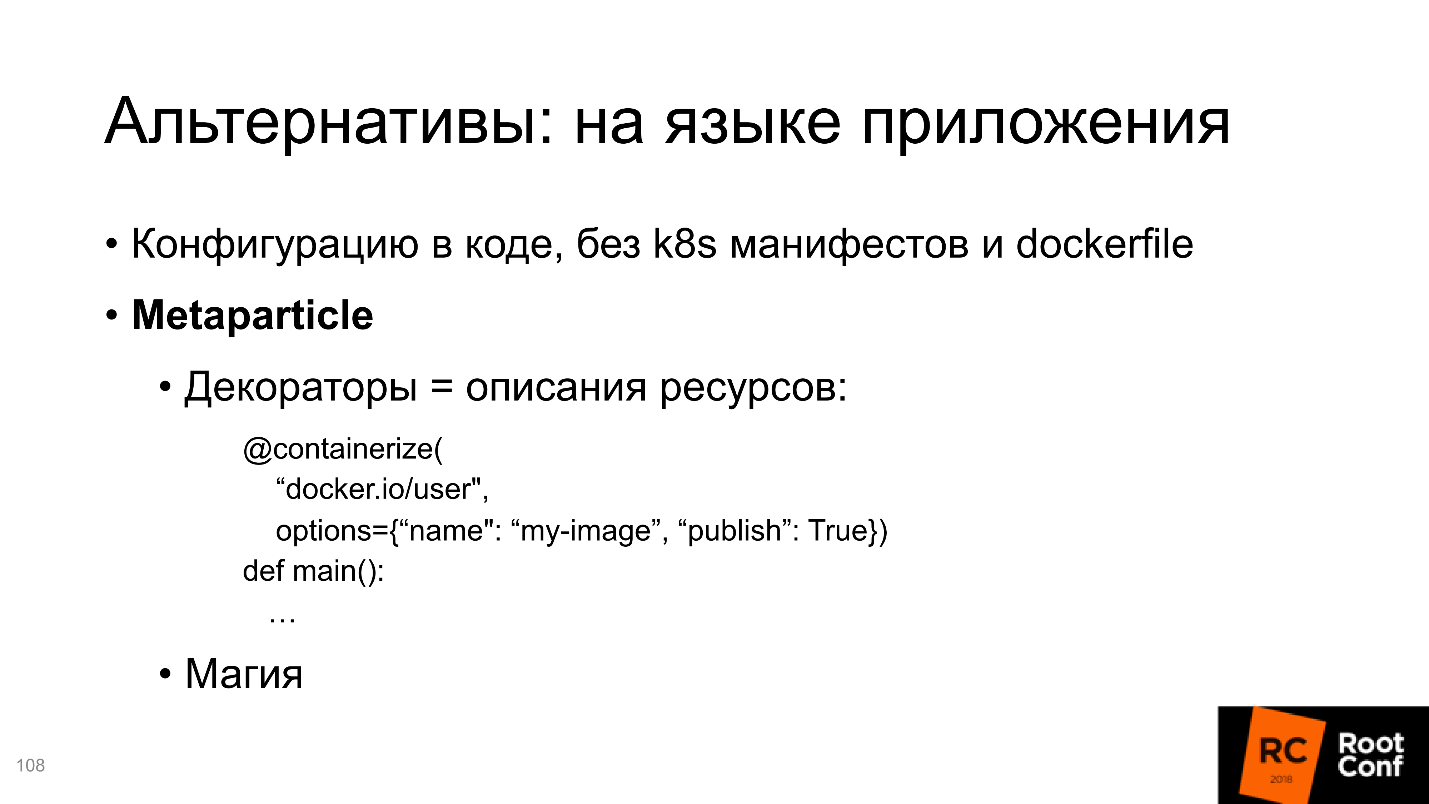

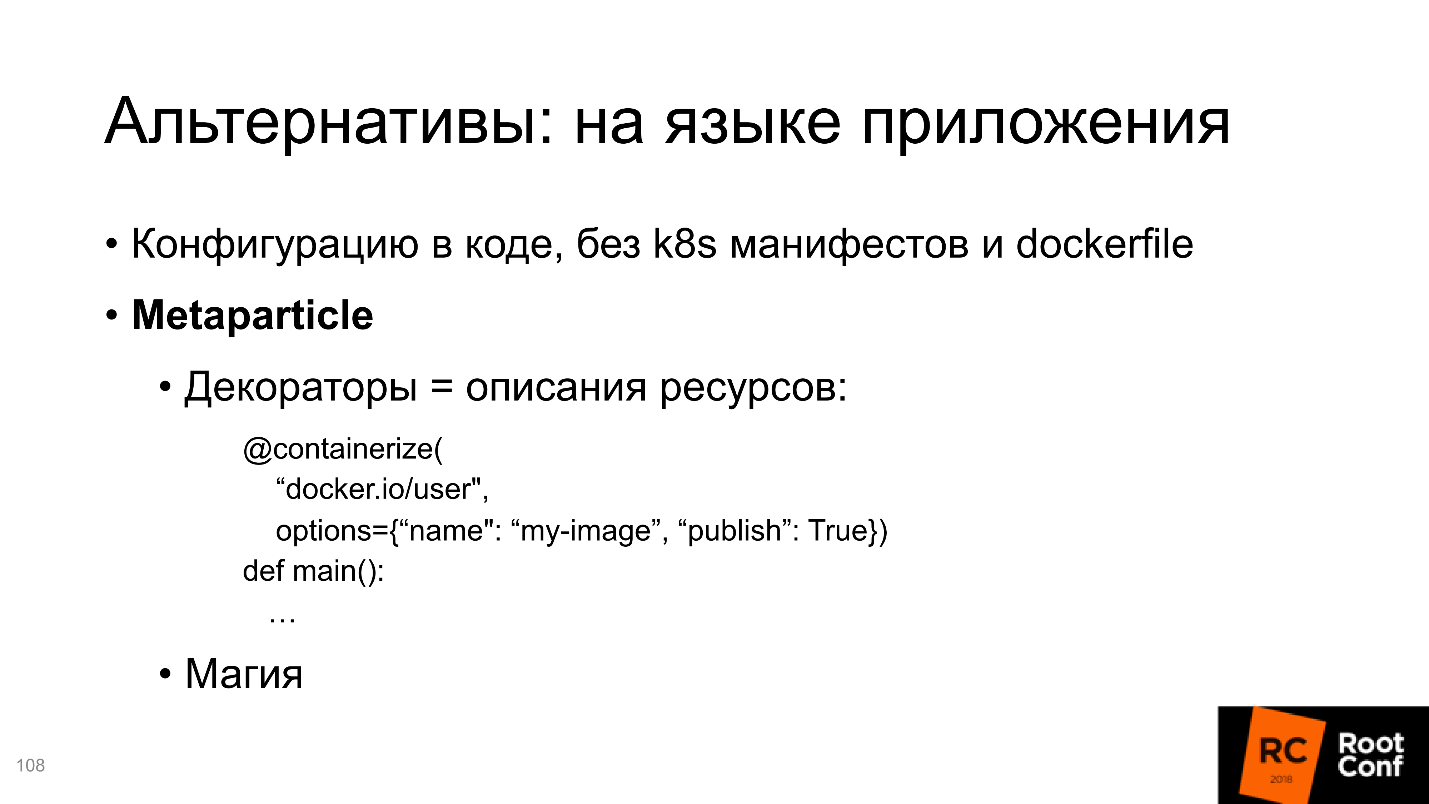

3. Development in the application language

The next alternative is application language development. A good example here is the Metaparticle . Let's say you write code in Python, and right inside Python you start to think what you want from the application.

If you correctly describe the working application, what parts it consists of, how they interact, theoretically some magic will help turn this knowledge from the point of view of the application into Kubernetes resources.

Using decorators, we determine: where is the repository, how to push it correctly; what services are and how they interact with each other; there should be so many replicas on the cluster, etc.

I don’t know about you, but personally, I don’t like it, when instead of me some kind of magic decides that such a Kubernetes config should be made from Python definitions. And if I need something else?

All this works to some extent, as long as the application is fairly standard. After that, the problems begin. Let’s say, I want the preinstall container to start before the main container starts, which will perform some actions to configure the future container. This is all done in the framework of Kubernetes-configs, but I do not know if this is done in the framework of the Metaparticle.

I give simple trivial examples, and there are much more of them, in the specification of Kubernetes-configs there are a lot of parameters. I am sure that they are not fully present in decorators like Metaparticle.

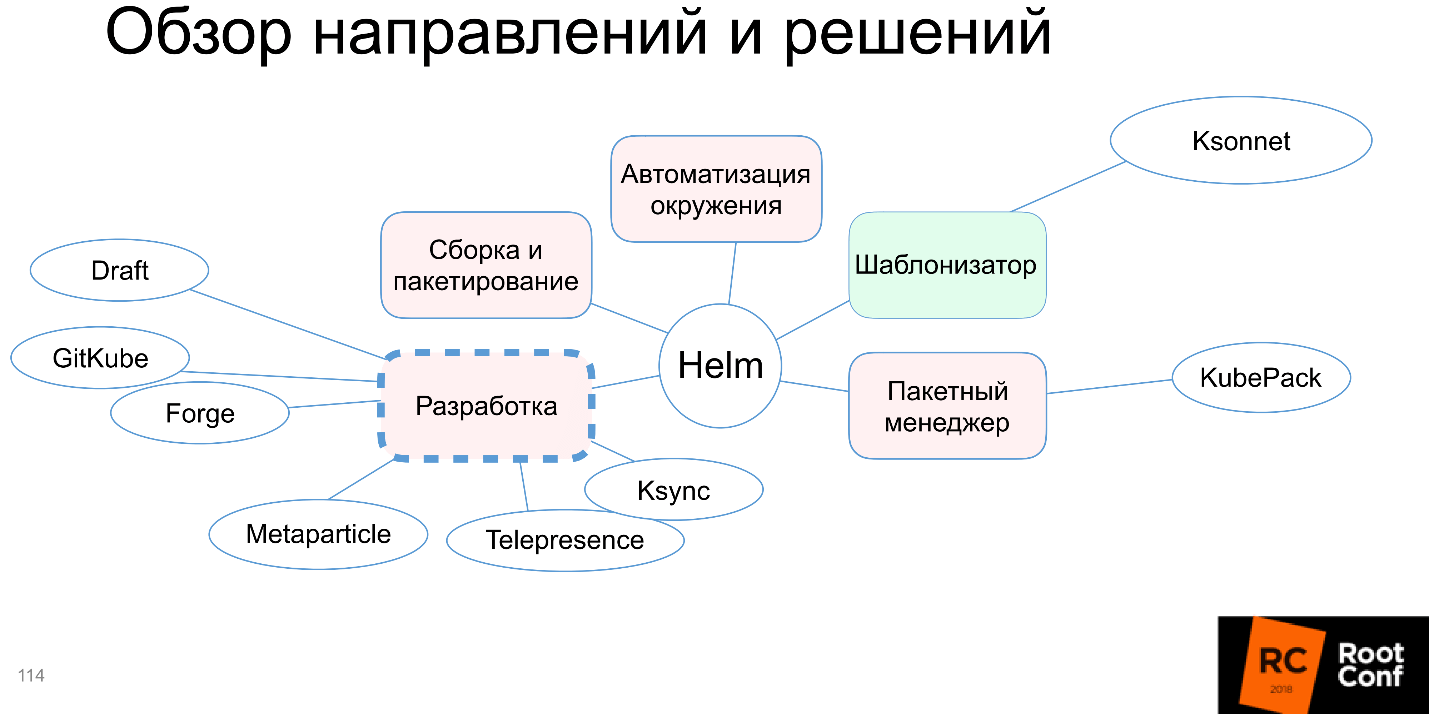

A Metaparticle appears in the diagram, and we discussed three alternative Helm approaches. However, there are additional ones, and they are very promising in my opinion.

Telepresence / Ksync - one of them. Suppose you have an application already written, there are Helm resources that are also written. You installed the application, it started somewhere in the cluster, and at this moment you want to try something, for example, change one line in your code. Of course, I’m not talking about Production clusters, although some people rule something on Production.

The problem with Kubernetes is that you need to transfer these local edits through the Docker update, through the registry, to Kubernetes. But you can convey one changed line to the cluster in other ways. You can synchronize the local and remote folder, which is located below.

Yes, of course, at the same time there must be a compiler in the image, everything necessary for Development should be there in place. But what a convenience: we install the application, change several lines, synchronization works automatically, updated code becomes on the hearth, we run compilation and tests - nothing breaks, nothing updates, no destructive updates, like in Helm, happen, we get an already updated one Appendix.

In my opinion, this is a great solution to the problem.

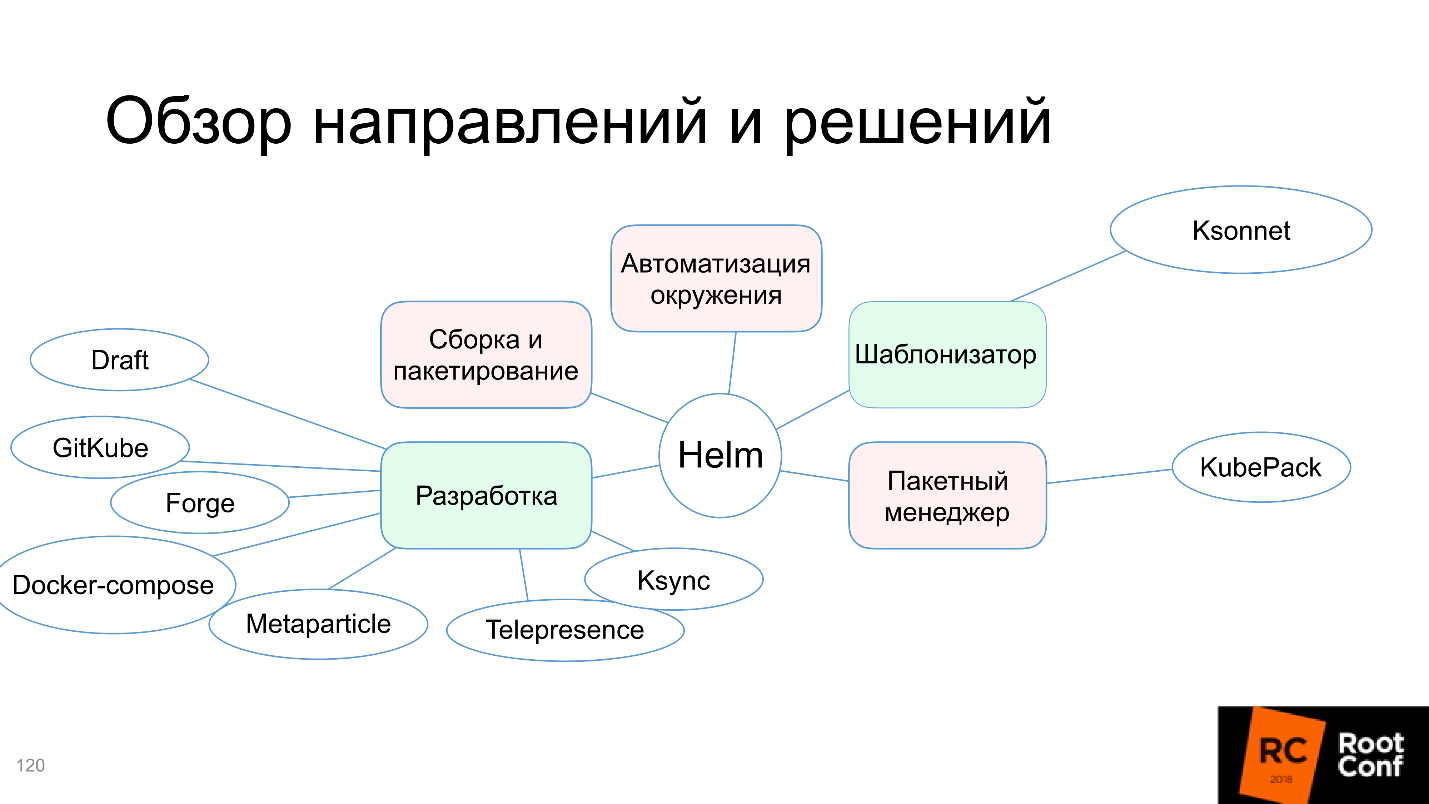

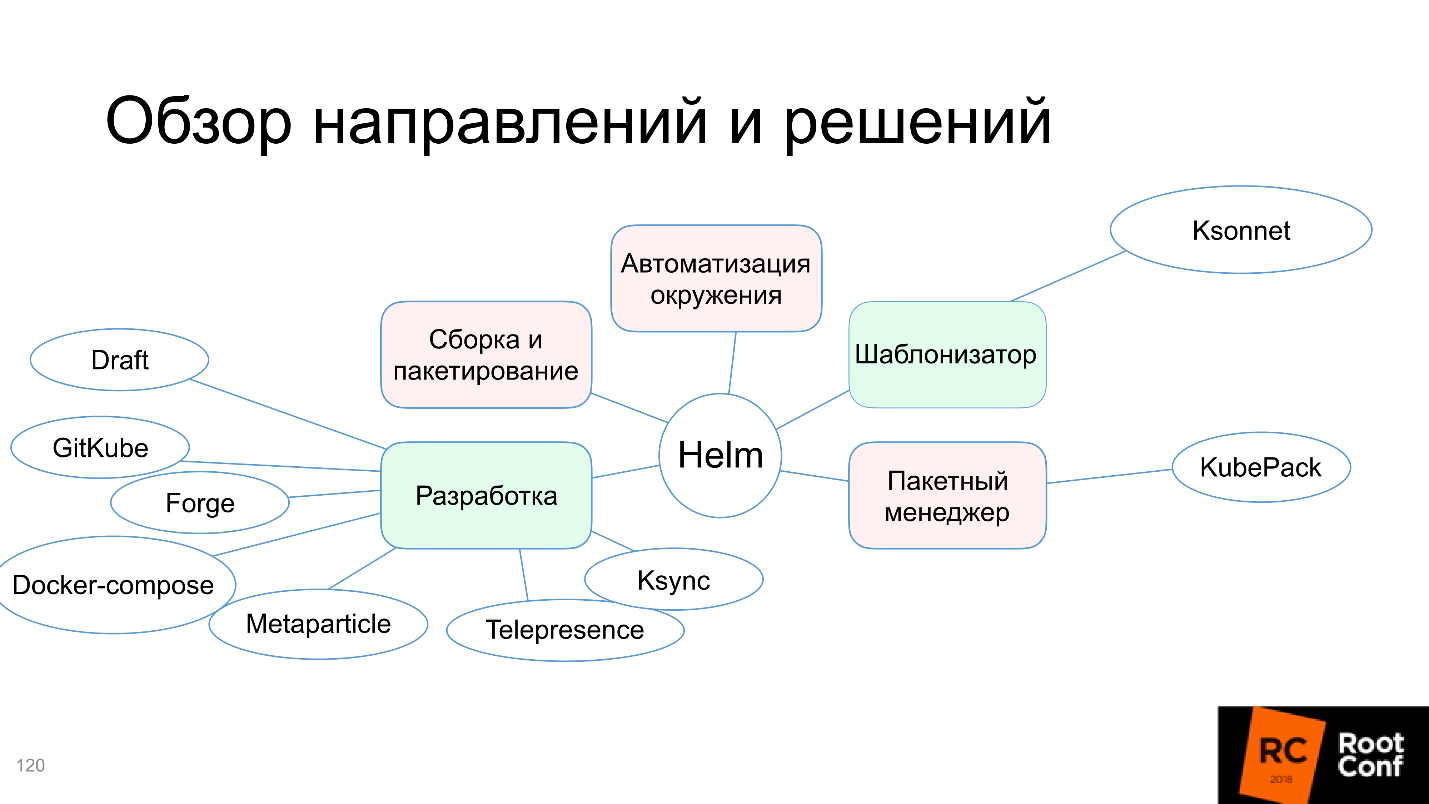

4. Development for Kubernetes without Kubernetes

Previously, it seemed to me that there was no point in working with Kubernetes without Kubernetes. I thought that it is better to make Helm definitions correctly once and use the appropriate tools so that in local development you have common configs for everything. But over time, I came across reality and saw applications for which it is extremely difficult to do. Now I have come to the conclusion that it is easier to write a Docker-compose file.

When you make a Docker-compose file, you launch all the same images, and mount just like in the previous case, the local folder on the folder in the Docker container, just not inside Kubernetes, but simply in Docker-compose, which is launched locally . Then just run the compiler, and everything works fine. The downside is that you need to have additional configs for Docker. The plus is speed and simplicity.

In my example, I tried to run in minikube the same thing as I tried to do with Docker-compose, and the difference was huge. It worked poorly, there were incomprehensible problems, and Docker-compose - in 10 lines you raise and everything works. Since you are working with the same images, this guarantees repeatability.

Docker-compose is added to our scheme, and as a whole it turns out that the community in total with all these solutions closed the development problem.

Assembly and packaging

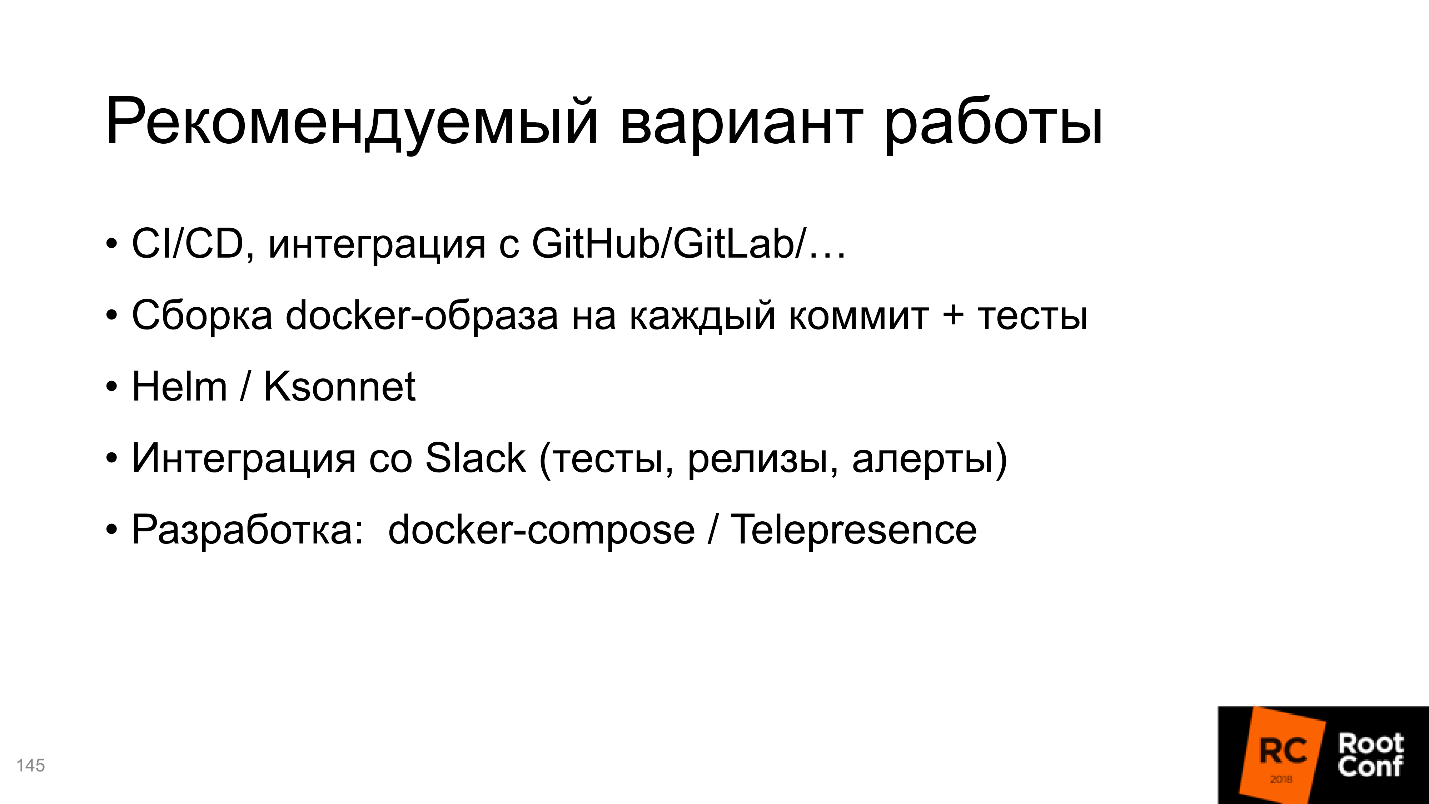

Yes, building and packaging is Helm's problem, but probably Helm developers were right. Each company has its own CI / CD system, which collects artifacts, validates and tests. If it already exists - why close this problem in Helm when everyone has their own? Perhaps one correct solution will not work, each will have modifications.

If you have CI / CD, there is integration with an external repository, dockers are automatically collected for each commit, set tests are run, and you can click the button and deploy it all, you have solved the problem - it is gone.

CI / CD is really a solution to the build and packaging problem, and we paint it green.

Summary

Of the 5 directions, Helm itself closes only the template engine. It immediately becomes clear why it was created. The community added the remaining solutions jointly; development, assembly and packaging problems were completely solved by external solutions. This is not completely convenient, it is not always easy to do within the framework of the established traditions within the company, but at least it is possible.

The future of Helm

I'm afraid that none of us knows for sure what Helm should come to. As we have seen, Helm developers sometimes know better what we need to do. I think that most of the problems that we examined will not be covered by the next releases of Helm.

Let's see what has been added to the current Road Map. There is such a Kuberneres Helm repository in the community , which has development plans and good documentation on what will be in the next version of Helm V3 .

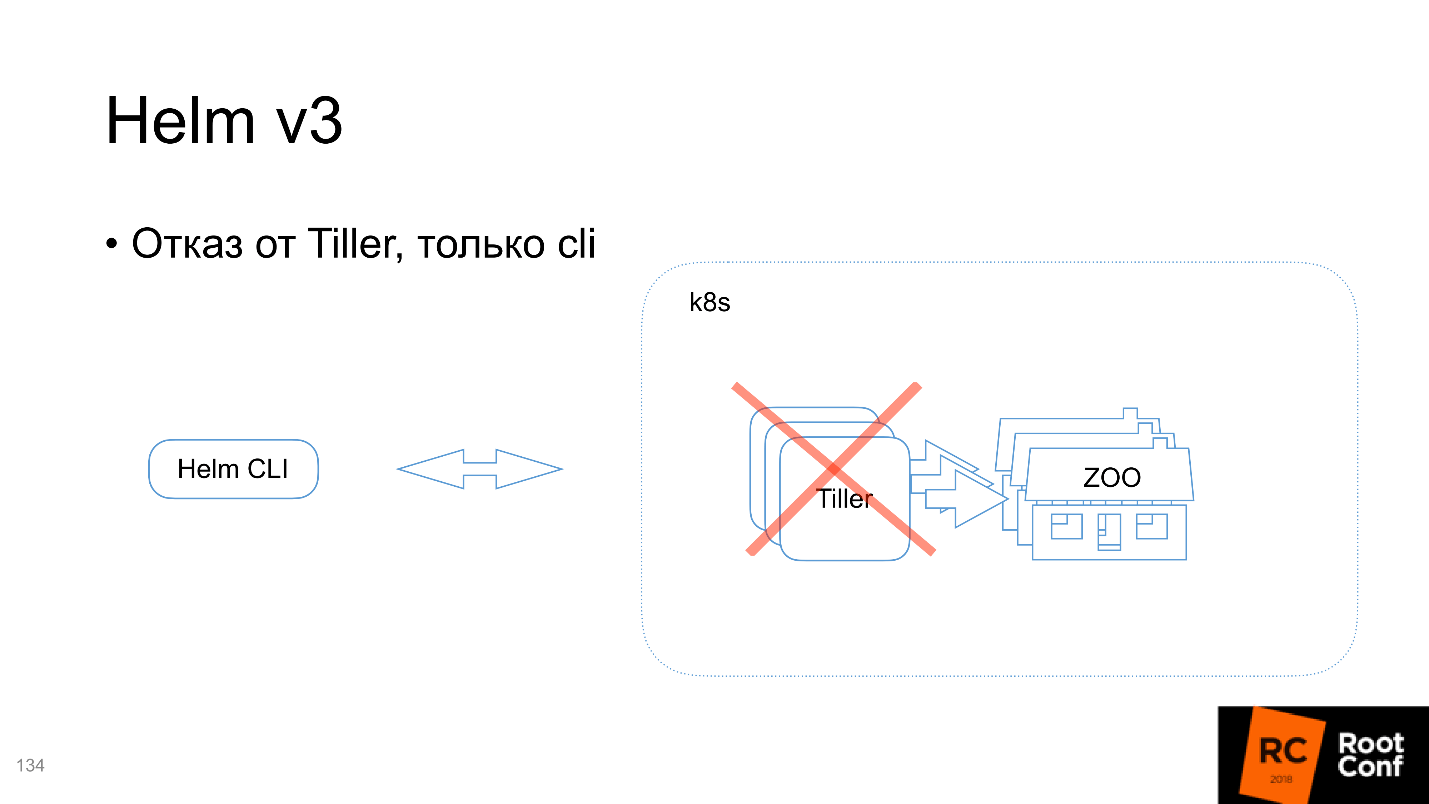

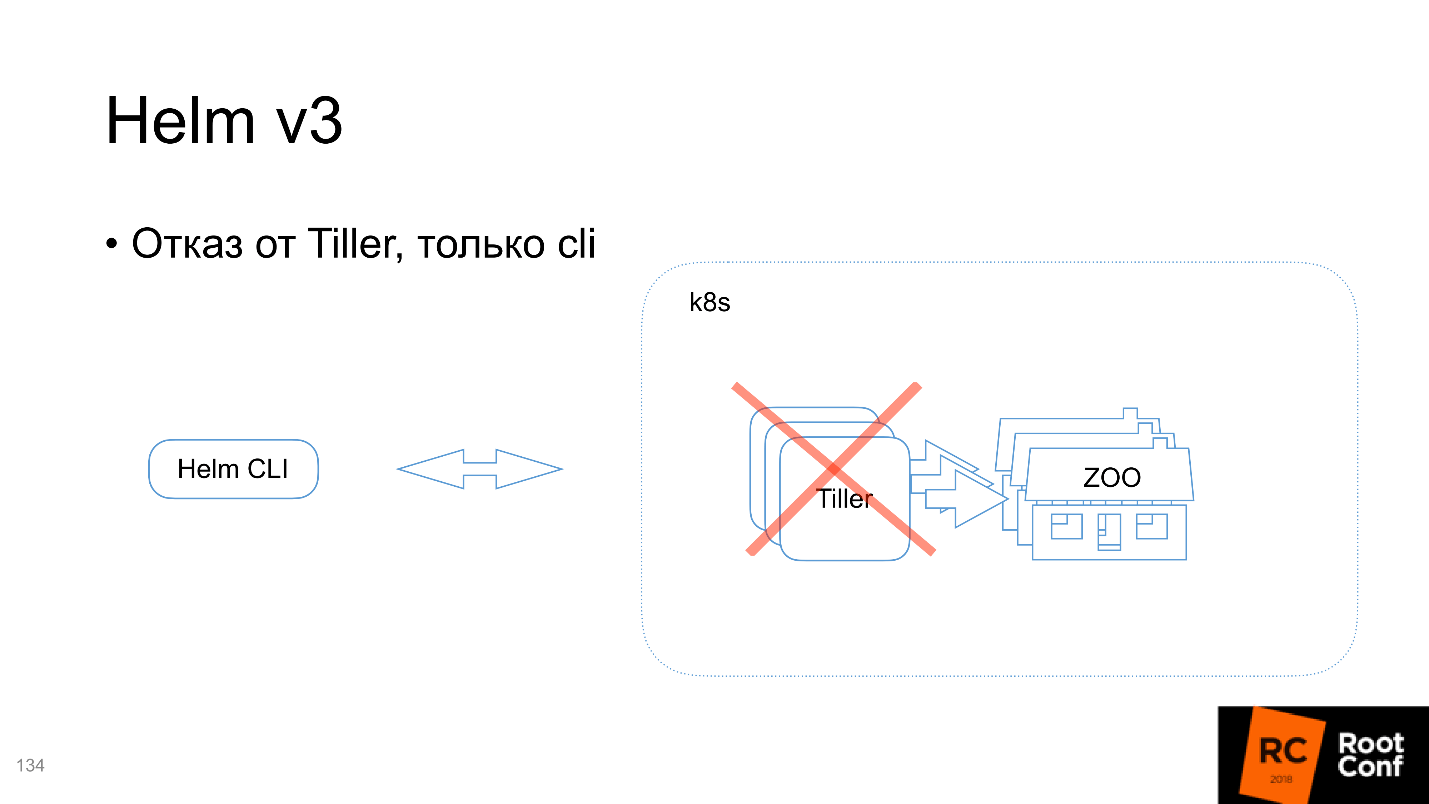

Failure from Tiller, only cli

We have not discussed these details yet, so let’s take a look now. Helm architecture consists of two parts:

Tiller handles the requests you send through the Command Line Interface. So you say: “I want to install this Chart” - and in fact Helm collects it, packs it, sends it to Tiller, and he already decides: “Oh, something has come to me! It looks like this is projected into the following Kubernetes resources, ”and it launches.

According to Helm developers, one Tiller per cluster should work and manage the entire technology zoo, which is in the cluster. But it turned out that in order to correctly share access, it is more profitable for us to have not one, but several Tiller'ov - for each namespace its own. So that Tiller can only create resources in the namespace in which it is running, and has no right to go to neighboring ones. This makes it possible to share the area of responsibility, the scope for different teams.

The next version of V3 Tiller will not.

Why is he needed at all? Essentially, it contains information passed through the Command Line Interface, which it uses to run resources in Kubernetes. It turns out that Kubernetes already contains exactly the same information that is contained in Tiller. But I can do the same with kubectl cli.

Instead of Tiller, an event system is introduced . There will be events on all new resources that you send to Kubernetes via the Command Line Interface: installs, changes, deletions, pre- and post-events. These events are quite a lot.

Chart Lua scripts

You cannot edit part of them, you can part, and it is possible to do this with the help of lua-scripts . At the stage of creating the Chart, you add a collection of lua scripts, which you put in a special folder. They will fully track the processing of external events. This is probably convenient. In fact, part of our problems that we discussed earlier can be solved with this approach.

Lua and events can close development problems because it will be possible to control what needs to be done when something happened, both on the server side and on the automation side of the environment too.

Unfortunately, there is no implementation yet, one can only speculate. But theoretically, the main problem for me in automating the environment we can completely close. You can write a new application in Kubernetes, send some configs to it, and with the help of a mechanism that you program yourself, the application will install anything you want. Let's see what happens.

Release object + secret release versions

In order to fully track the release process, a Release object appears with information about which Release was written. It has not yet been announced what kind of release object it will be, how it will be created, maybe it will be a CRD, or maybe not.

Snap to namespace release

This Release object will be created in the namespace in which everything was started, and accordingly, there is no need to bind Tiller to namespace - that is the problem that I talked about a little earlier.

CRD: controller

Additionally, in the distant future, developers are thinking of creating a CRD controller for Helm for those cases that cannot be covered by the standard push model. But there is still no information about the implementation of this.

Recipe Collection

Total in total, as I recommend using the system.

Of course, this is Helm . It is created by the community, all alternative solutions are created by independent teams, about which we are not sure how long they will exist. Unfortunately, the day after tomorrow they can abandon their projects, and you will be left with nothing. And Helm is still part of Kubernetes. In addition, he will somehow develop and, perhaps, solve problems.

Of course, CI / CD , automatic build by commit. In our company, we made integration with Slack, we have a bot that reports when a new build passed in master, and that all tests were successful. You tell him: “I want to install this in Staging” - and he installs, you say: “I want to run a test there!” - and he starts. Pretty comfortable.

For development use Docker-compose or Telepresence.

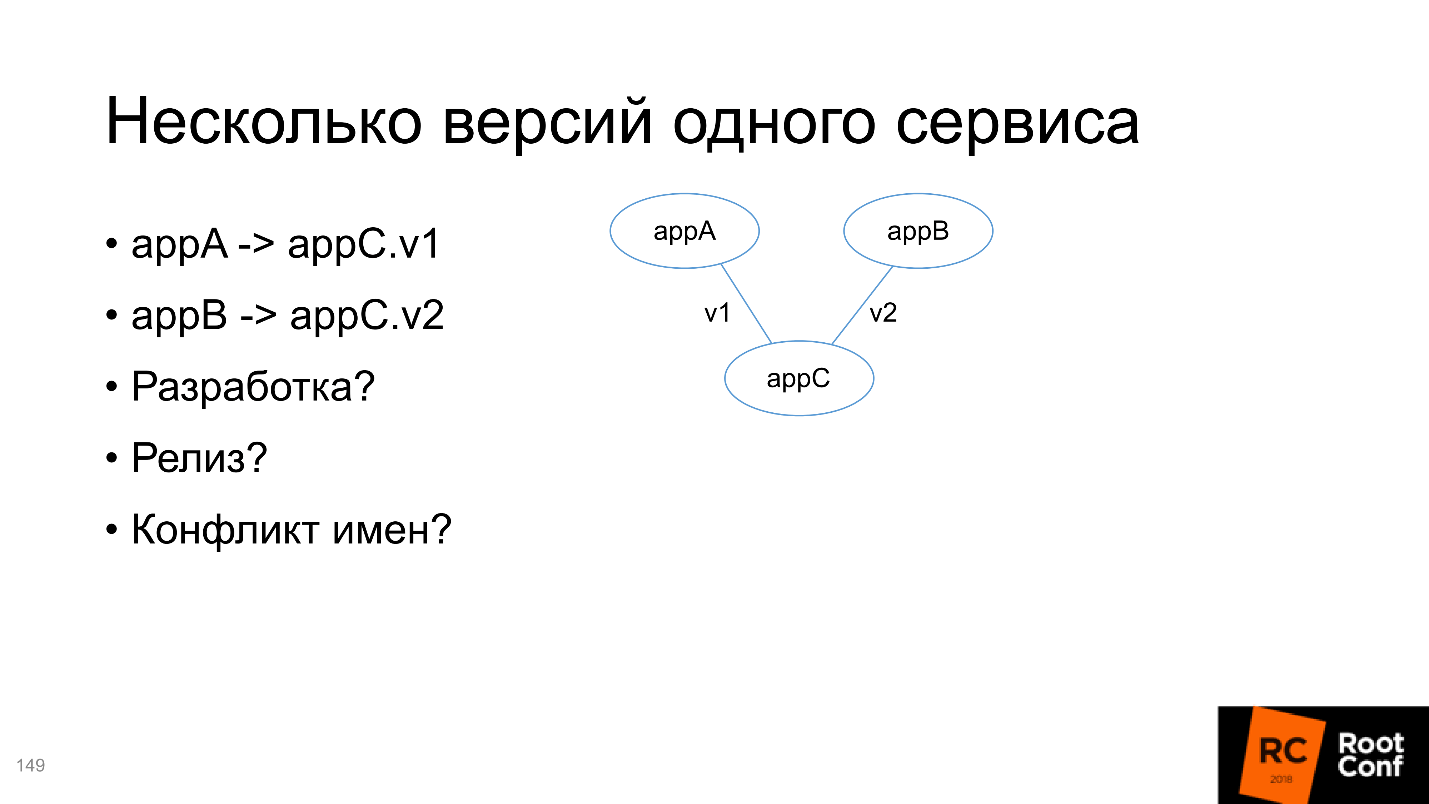

Multiple versions of one service

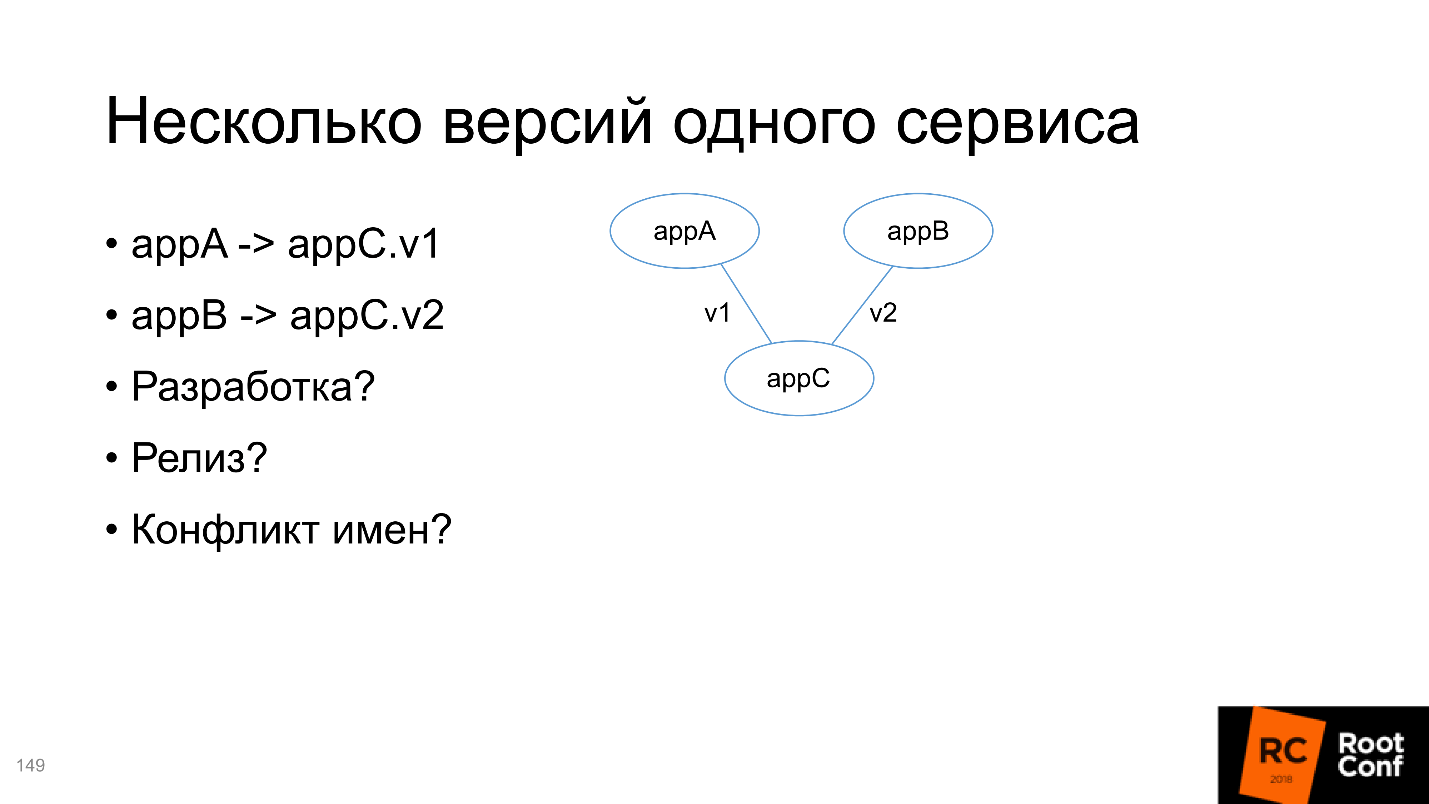

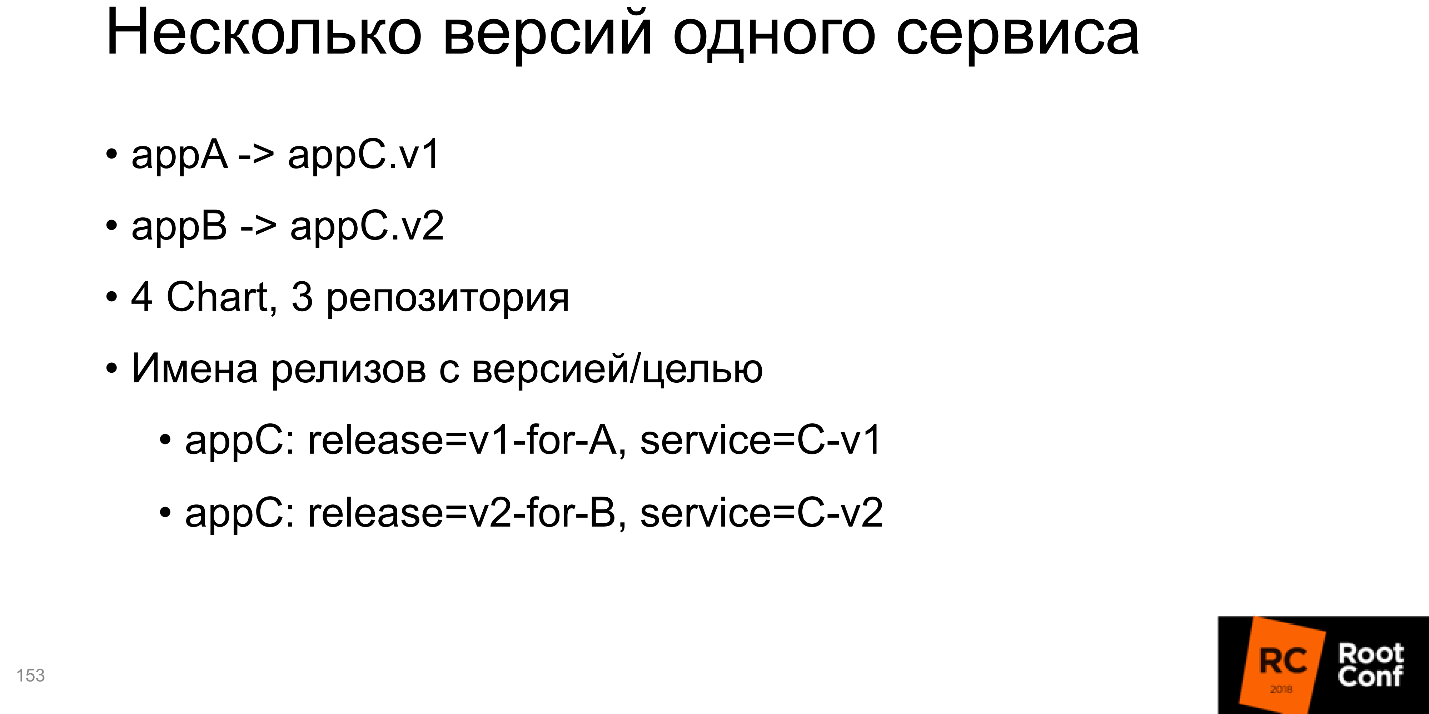

In the end, we will analyze the situation when there are two applications A and B, which depend on C, but C of different versions. Need to solve this problem:

In fact, Kubernetes decides everything for us - you just need to use it correctly.

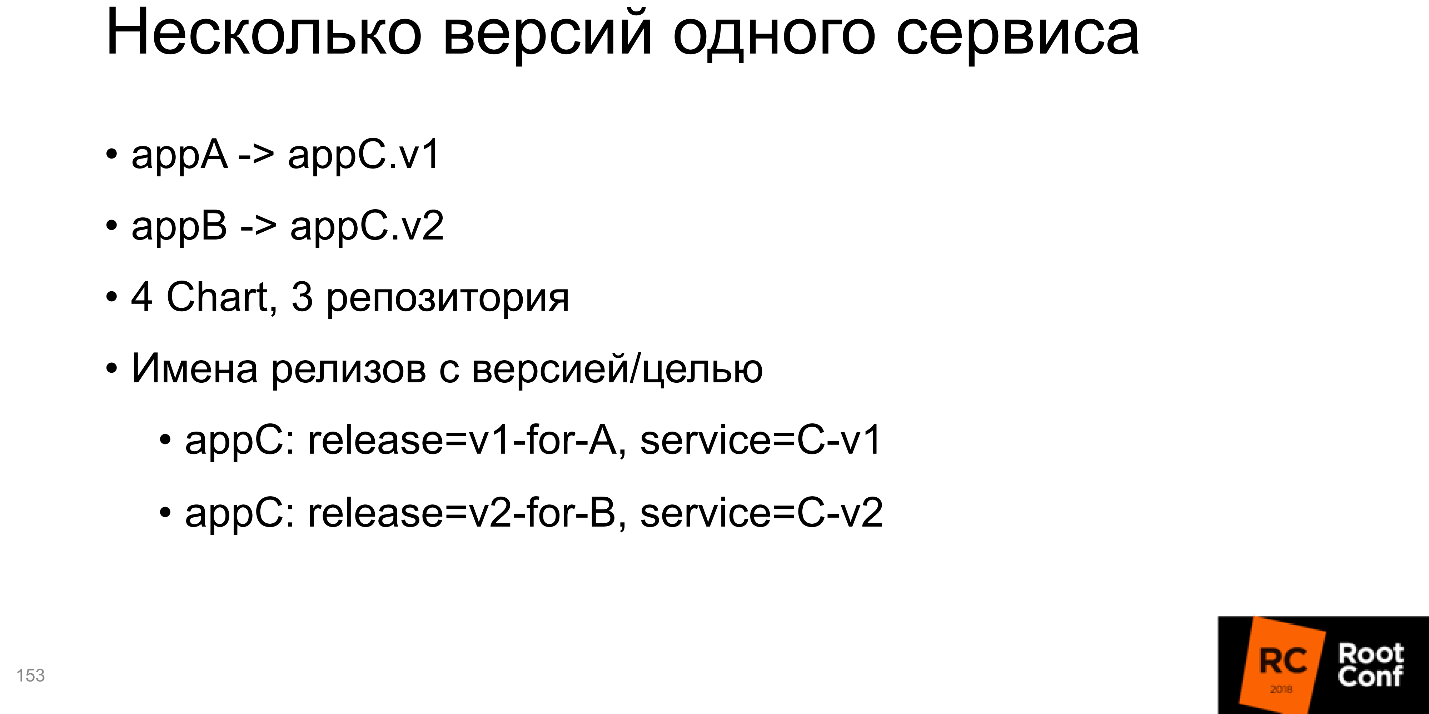

I would advise creating 4 Charts in terms of Helm, 3 repositories (for the C repository, this will just be two different branches). What is most interesting, all installations for v1 and for v2 should contain inside themselves information about the version or for what service it was created. One solution on the slide, appendix C; the release name indicates that this is version v1 for service A; the service name also contains the version. This is the simplest example, you can do it completely differently. But the most important thing is that the names are unique.

The second is transitive dependencies, and here it is more complicated.

For example, you are developing a chain of services and want to test A. For this, you must pass all the dependencies on which A depends, including transitive, to the Helm definition of your package. But at the same time, you want to develop B and also test it - how to do this is incomprehensible, because you need to put all the transitive dependencies in it just as well.

Therefore, I advise you not to add all the dependencies inside each package, but to make them independent and from the outside control what is running. This is inconvenient, but it is the lesser of two evils.

useful links

• Draft

• GitKube

• Helm

• Ksonnet

• Telegram stickers: one , two

• Sig-Apps

• KubePack

• Metaparticle

• Skaffold

• Helm v3

• Docker-compose

• Ksync

• Telepresence

• Drone

• Forge

Profile speaker Ivan Glushkov on GitHub , on twitter , on Habré .

We will understand why in this diagram only the template function is highlighted in green, and what are the problems with assembly and packaging, automation of the environment, and more. But do not worry, the article does not end with the fact that everything is bad. The community could not come to terms with this and offers alternative tools and solutions - we will deal with them.

Ivan Glushkov ( gli ) helped us with this with his report on RIT ++, a video and text version of this detailed and detailed presentation below.

Videos of this and other DevOps speeches on RIT ++ are published and open for free viewing on our youtube channel - go in search of answers to your working questions.

About the speaker: Ivan Glushkov has been developing software for 15 years. I managed to work in MZ, in Echo on a platform for comments, participate in the development of compilers for the Elbrus processor in MCST. He is currently involved in infrastructure projects at Postmates. Ivan is one of the leading DevZen podcast in which they talk about our conferences: here is about RIT ++, and here about HighLoad ++.

Package managers

Although everyone uses some kind of package managers, there is no single agreement on what it is. There is a common understanding, and each has its own.

Let's recall what types of package managers come to mind first:

- Standard package managers of all operating systems: rpm, dpkg, portage , ...

- Package managers for different programming languages: cargo, cabal, rebar3, mix , ...

Their main function is to execute commands for installing a package, updating a package, uninstalling a package, and managing dependencies. In package managers inside programming languages, things are a little more complicated. For example, there are commands like “launch a package” or “create a release” (build / run / release). It turns out that this is already a build system, although we also call it a package manager.

All this is only due to the fact that you can’t just take it and ... let Haskell lovers forgive this comparison. You can run the binary file, but you cannot run the program in Haskell or C, first you need to prepare it somehow. And this preparation is rather complicated, and users want everything to be done automatically.

Development

The one who worked with GNU libtool, which was made for a large project consisting of a large number of components, does not laugh at the circus. This is really very difficult, and some cases cannot be resolved in principle, but can only be circumvented.

Compared to it, modern package language managers like cargo for Rust are much more convenient - you press the button and everything works. Although in fact, under the hood, a large number of problems are solved. Moreover, all these new functions require something additional, in particular, a database. Although in the package manager itself it can be called whatever you like, I call it a database, because data is stored there: about installed packages, about their versions, connected repositories, versions in these repositories. All this must be stored somewhere, so there is an internal database.

Development in this programming language, testing for this programming language, launches - all this is built-in and located inside, the work becomes very convenient . Most modern languages have supported this approach. Even those who did not support begin to support, because the community presses and says that in the modern world it is impossible without it.

But any solution always has not only advantages, but also disadvantages . The downside here is that you need wrappers, additional utilities and a built-in “database”.

Docker

Do you think Docker is a package manager or not?

No matter how, but essentially yes. I do not know a more correct utility in order to completely put the application together with all the dependencies, and to make it work at the click of a button. What is this if not a package manager? This is a great package manager!

Maxim Lapshin has already said that with Docker it has become much easier, and this is so. Docker has a built-in build system, all of these databases, bindings, utilities.

What is the price of all the benefits? Those who work with Docker give little thought to industrial applications. I have such an experience, and the price is actually very high:

- The amount of information (image size) that must be stored in the Docker image. All dependencies, parts of utilities, libraries must be packaged inside, the image is large and you need to be able to work with it.

- It is much more complicated that a paradigm shift is taking place .

For example, I had a task to transfer one program to use Docker. The program was developed over the years by a team. I come, we do everything that is written in books: we paint users stories, roles, see what and how they do, their standard routines.

I say:

- Docker can solve all your problems. Watch how this is done.

- Everything will be on the button - great! But we want SSH to do inside Kubernetes containers.

- Wait, no SSH anywhere.

- Yes, yes, everything is fine ... But is SSH possible?

In order to turn the perception of users into a new direction, it takes a lot of time, it takes educational work and a lot of effort.

Another price factor is that the docker-registry - An external repository for images, it needs to be installed and controlled somehow. It has its own problems, a garbage collector and so on, and it can often fall if you do not follow it, but this is all solved.

Kubernetes

Finally we got to Kubernetes. This is a cool OpenSource application management system that is actively supported by the community. Although it originally left one company, Kubernetes now has a huge community, and it is impossible to keep up with it, there are practically no alternatives.

Interestingly, all Kubernetes nodes work in Kubernetes itself through containers, and all external applications work through containers - everything works through containers ! This is both a plus and a minus.

Kubernetes has a lot of useful functionality and properties: distribution, fault tolerance, the ability to work with different cloud services, and orientation towards microservice architecture. All this is interesting and cool, but how to install the application in Kubernetes?

How to install the app?

Install the Docker image in the Docker-registry.

Behind this phrase lies an abyss. You imagine - you have an application written, say, in Ruby, and you must put the Docker image in the Docker registry. This means you must:

- Prepare a Docker image

- understand how it is going, on which versions it is based;

- be able to test it;

- collect, fill in the Docker-registry, which you, by the way, installed before.

In fact, this is a big, big pain in one line.

Plus, you still need to describe the application manifest in terms (resources) of k8s. The easiest option:

- describe deployment + pod, service + ingress (possibly);

- run the kubectl apply -f resources.yaml command, and transfer all resources to this command.

Gandhi rubs his hands on the slide - it looks like I found the package manager in Kubernetes. But kubectl is not a package manager. He just says that I want to see such a final state of the system. This is not installing a package, not working with dependencies, not building - it's just "I want to see this final state."

Helm

Finally we came to Helm. Helm is a multi-purpose utility. Now we will consider what areas of development of Helm and work with it are.

Template Engine

Firstly, Helm is a template engine. We discussed what resources need to be prepared, and the problem is to write in terms of Kubernetes (and not only in yaml). The most interesting thing is that these are static files for your specific application in this particular environment.

However, if you work with several environments and you have not only Production, but also Staging, Testing, Development and different environments for different teams, you need to have several similar manifests. For example, because in one of them there are several servers, and you need to have a large number of replicas, and in the other - only one replica. There is no database, access to RDS, and you need to install PostgreSQL inside. And here we have the old version, and we need to rewrite everything a bit.

All this diversity leads to the fact that you have to take your manifest for Kubernetes, copy it everywhere and fix it everywhere: here replace one digit, here is something else. This is becoming very uncomfortable.

The solution is simple - you need to enter templates . That is, you form a manifest, define variables in it, and then submit the variables defined externally as a file. The template creates the final manifest. It turns out to reuse the same manifest for all environments, which is much more convenient.

For example, the manifest for Helm.

- The most important part in Helm is Chart.yaml , which describes what kind of manifest it is, what versions, how it works.

- templates are just Kubernetes resource templates that contain some kind of variables within themselves. These variables must be defined in an external file or on the command line, but always externally.

- values.yaml is the standard name for the file with variables for these templates.

The simplest startup command for installing chart is helm install ./wordpress (folder). To redefine some parameters, we say: "I want to redefine precisely these parameters and set such and such values."

Helm copes with this task, so in the diagram we mark it green.

True, cons appear:

- Verbosity . Resources are defined completely in terms of Kubernetes, concepts of additional levels of abstraction are not introduced: we simply write everything that we would like to write for Kubernetes and replace variables there.

- Don't repeat yourself - not applicable. It is often necessary to repeat the same thing. If you have two similar services with different names, you need to completely copy the entire folder (most often they do this) and change the necessary files.

Before plunging into the direction of Helm - a package manager, for which I tell you all this, let's see how Helm works with dependencies.

Work with dependencies

Helm is difficult to work with dependencies. Firstly, there is a requirements.yaml file that fits into what we depend on. While working with requirements, he does requirements.lock - this is the current state (nugget) of all the dependencies. After that, he downloads them to a folder called / charts.

There are tools to manage: whom, how, where to connect - tags and conditions , with which it is determined in which environment, depending on what external parameters, to connect or not to connect some dependencies.

Let's say you have PostgreSQL for the Staging environment (or RDS for Production, or NoSQL for tests). By installing this package in Production, you will not install PostgreSQL, because it is not needed there - just using tags and conditions.

What is interesting here?

- Helm mixes all the resources of all the dependencies and applications;

- sort -> install / update

After we have downloaded all the dependencies in / charts (these dependencies may be, for example, 100), Helm takes and copies all the resources inside. After he rendered the templates, he collects all the resources in one place and sorts in some sort of his own order. You cannot influence this order. You must determine for yourself what your package depends on, and if the package has transitive dependencies, then you need to include all of them in the description in requirements.yaml. This must be borne in mind.

Package manager

Helm installs applications and dependencies, and you can tell Helm install - and it will install the package. So this is a package manager.

At the same time, if you have an external repository where you upload the package, you can access it not as a local folder, but simply say: “From this repository, take this package, install it with such and such parameters.”

There are open repositories with lots of packages. For example, you can run: helm install -f prod / values.yaml stable / wordpress

From the stable repository, you will take wordpress and install it on you. You can do everything: search / upgrade / delete. It turns out, really, Helm is a package manager.

But there are cons: all transitive dependenciesmust be included inside. This is a big problem when transitive dependencies are independent applications and you want to work with them separately for testing and development.

Another minus is end-to - end configuration . When you have a database and its name needs to be transferred to all packages, this can be, but it is difficult to do.

More often than not, you have installed one small packet and it works. The world is complex: the application depends on the application, which in turn also depends on the application - you need to configure them somehow cleverly. Helm does not know how to support this, or supports it with big problems, and sometimes you have to dance a lot with a tambourine to make it work. This is bad, so the “package manager" in the diagram is highlighted in red.

Assembly and packaging

“You can’t just get and run” the application in Kubernetes. You need to assemble it, that is, make a Docker image, write it to the Docker registry, etc. Although the whole package definition in Helm is. We determine what the package is, what functions and fields should be there, signatures and authentication (your company’s security system will be very happy). Therefore, on the one hand, assembly and packaging seem to be supported, and on the other hand, work with Docker images is not configured.

Helm does not allow you to run the application without a Docker image. At the same time, Helm is not configured for assembly and packaging, that is, in fact, it does not know how to work with Docker images.

This is the same as if, to make an upgrade install for some small library, you would be sent to a distant folder to run the compiler.

Therefore, we say that Helm does not know how to work with images.

Development

The next headache is development. In development, we want to quickly and conveniently change our code. The time has passed when you punched holes on punch cards, and the result was obtained after 5 days. Everyone is used to replacing one letter with another in the editor, pressing compilation, and the already modified program works.

It turns out here that when changing the code, a lot of additional actions are needed: prepare a Docker file; Run Docker so that it builds the image; to push it somewhere; deploy to Kubernetes cluster. And only then you will get what you want on Production, and you can check the code.

There are still inconveniences due to destructive updateshelm upgrade. You looked at how everything works, through kubectl exec you looked inside the container, everything is fine. At this point, you start the update, a new image is downloaded, new resources are launched, and the old ones are deleted - you need to start everything from the very beginning.

The biggest pain is big images. Most companies do not work with small applications. Often, if not a supermonolith, then at least a small monolithic. Over time, annual rings grow, the code base increases, and gradually the application becomes quite large. I have repeatedly come across Docker images larger than 2 GB. Imagine now that you are making a change in one byte in your program, press a button, and a two-gigabyte Docker image begins to assemble. Then you press the next button, and the transfer of 2 GB to the server begins.

Docker allows you to work with layers, i.e. checks if there is one layer or another and sends the missing one. But the world is such that most often it will be one large layer. While 2 GB will go to the server, while they will go to Kubernetes with the Docker-registry, they will be rolled out in all ways, until you finally start, you can safely have tea.

Helm does not offer any help with large Docker images. I believe that this should not be, but Helm developers know better than all users, and Steve Jobs smiles at it.

The block with the development also turned red.

Environment Automation

The last direction - automation of the environment - is an interesting area. Before the world of Docker (and Kubernetes, as a related model), it was not possible to say: “I want to install my application on this server or on these servers so that there are n replicas, 50 dependencies, and it all works automatically!” Such, one might say, what was, but was not!

Kubernetes provides this and it’s logical to use it somehow, for example, to say: “I’m deploying a new environment here and I want all the development teams that prepared their applications to just be able to click a button and all these applications would be automatically installed in the new environment” . Theoretically, Helm should help in this, so that the configuration can be taken from an external data source - S3, GitHub - from anywhere.

It is advisable that there is a special button in Helm “Do me good already finally!” - and it would immediately become good. Kubernetes allows you to do this.

This is especially convenient because Kubernetes can be run anywhere, and it works through the API. By launching minikube locally, either in AWS or in the Google Cloud Engine, you get Kubernetes right out of the box and work the same everywhere: press a button and everything is fine right away.

It would seem that naturally Helm allows you to do this. Because otherwise, what was the point of creating Helm in general?

But it turns out, no!

There is no automation of the environment.

Alternatives

When there is an application from Kubernetes that everyone uses (it is now in fact the solution number 1), but Helm has the problems discussed above, the community could not help but respond. It began to create alternative tools and solutions.

Template engines

It would seem, as a template engine, Helm solved all the problems, but still the community creates alternatives. Let me remind you the problems of the template engine: verbosity and code reuse.

A good representative here is Ksonnet. It uses a fundamentally different model of data and concepts, and does not work with Kubernetes resources, but with its own definitions:

prototype (params) -> component -> application -> environments.

There are parts that make up the prototype. The prototype is parameterized by external data, and component appears. Several components make up an application that you can run. It runs in different environments. There are some clear links to Kubernetes resources here, but there may not be a direct analogy.

The main goal of Ksonnet was, of course, to reuse resources . They wanted to make sure that once you wrote the code, you could later use it anywhere, which increases the speed of development. If you create a large external library, people can constantly post their resources there, and the entire community will be able to reuse them.

Theoretically, this is convenient. I practically did not use it.

Package managers

The problem here, as we recall, is nested dependencies, end-to-end configs, transitive dependencies. Ksonnet does not solve them. Ksonnet has a very similar model to Helm, which in the same way defines a list of dependencies in a file, it is uploaded to a specific directory, etc. The difference is that you can make patches, that is, you are preparing a folder in which to put patches for specific packages.

When you upload a folder, these patches are superimposed, and the result obtained by merging several patches can be used. Plus there is validation of configurations for dependencies. It may be convenient, but it is still very crude, there is almost no documentation, and the version froze at 0.1. I think it's too early to use it.

So the package manager is KubePack, and other alternatives I have not seen.

Development

Solutions fall into several different categories:

- trying to work on top of Helm;

- instead of Helm;

- use a fundamentally different approach, in which they try to work directly in the programming language;

- and other variations about which later.

1. Development on top of Helm

A good representative is Draft . Its purpose is the ability to try the application before the code is commited, that is, to see the current state. Draft uses the programming method - Heroku-style:

- there are packages for your languages (pack);

- write, say, in Python "Hello, world!";

- press the button, the Docker file is automatically created (you do not write it);

- resources are automatically created, it all starts, goes to docker-registry, which you had to configure;

- The application automatically starts to work.

This can be done in any directory with code, everything seems to be fast, easy and good.

But in the future, it’s better to start working with Helm anyway, because Draft creates Helm resources, and when your code reaches the production ready state, you should not hope that Draft will create Helm resources well. You will still have to create them manually.

It turns out that Draft is needed to quickly start and try at the very beginning before you write at least one Helm resource. Draft is the first contender for this direction.

2. Development without Helm

Development without Helm Charts involves building the same Kubernetes manifests that would otherwise be built through Helm Charts. I offer three alternatives:

- GitKube

- Skaffold

- Forge.

They are all very similar to Helm, the differences are in small details. In particular, part of the solutions assumes that you will use the command line interface, and Chart assumes that you will do git push and manage hooks.

In the end, you still run docker build, docker push and kubectl rollout anyway. All the problems that we listed for Helm cannot be solved. It is simply an alternative with the same drawbacks.

3. Development in the application language

The next alternative is application language development. A good example here is the Metaparticle . Let's say you write code in Python, and right inside Python you start to think what you want from the application.

I see this as a very interesting concept, because more often than not, the developer does not want to think about how the application should work on servers, what is the correct configuration to write in sysconfig, etc. He needs a working application.

If you correctly describe the working application, what parts it consists of, how they interact, theoretically some magic will help turn this knowledge from the point of view of the application into Kubernetes resources.

Using decorators, we determine: where is the repository, how to push it correctly; what services are and how they interact with each other; there should be so many replicas on the cluster, etc.

I don’t know about you, but personally, I don’t like it, when instead of me some kind of magic decides that such a Kubernetes config should be made from Python definitions. And if I need something else?

All this works to some extent, as long as the application is fairly standard. After that, the problems begin. Let’s say, I want the preinstall container to start before the main container starts, which will perform some actions to configure the future container. This is all done in the framework of Kubernetes-configs, but I do not know if this is done in the framework of the Metaparticle.

I give simple trivial examples, and there are much more of them, in the specification of Kubernetes-configs there are a lot of parameters. I am sure that they are not fully present in decorators like Metaparticle.

A Metaparticle appears in the diagram, and we discussed three alternative Helm approaches. However, there are additional ones, and they are very promising in my opinion.

Telepresence / Ksync - one of them. Suppose you have an application already written, there are Helm resources that are also written. You installed the application, it started somewhere in the cluster, and at this moment you want to try something, for example, change one line in your code. Of course, I’m not talking about Production clusters, although some people rule something on Production.

The problem with Kubernetes is that you need to transfer these local edits through the Docker update, through the registry, to Kubernetes. But you can convey one changed line to the cluster in other ways. You can synchronize the local and remote folder, which is located below.

Yes, of course, at the same time there must be a compiler in the image, everything necessary for Development should be there in place. But what a convenience: we install the application, change several lines, synchronization works automatically, updated code becomes on the hearth, we run compilation and tests - nothing breaks, nothing updates, no destructive updates, like in Helm, happen, we get an already updated one Appendix.

In my opinion, this is a great solution to the problem.

4. Development for Kubernetes without Kubernetes

Previously, it seemed to me that there was no point in working with Kubernetes without Kubernetes. I thought that it is better to make Helm definitions correctly once and use the appropriate tools so that in local development you have common configs for everything. But over time, I came across reality and saw applications for which it is extremely difficult to do. Now I have come to the conclusion that it is easier to write a Docker-compose file.

When you make a Docker-compose file, you launch all the same images, and mount just like in the previous case, the local folder on the folder in the Docker container, just not inside Kubernetes, but simply in Docker-compose, which is launched locally . Then just run the compiler, and everything works fine. The downside is that you need to have additional configs for Docker. The plus is speed and simplicity.

In my example, I tried to run in minikube the same thing as I tried to do with Docker-compose, and the difference was huge. It worked poorly, there were incomprehensible problems, and Docker-compose - in 10 lines you raise and everything works. Since you are working with the same images, this guarantees repeatability.

Docker-compose is added to our scheme, and as a whole it turns out that the community in total with all these solutions closed the development problem.

Assembly and packaging

Yes, building and packaging is Helm's problem, but probably Helm developers were right. Each company has its own CI / CD system, which collects artifacts, validates and tests. If it already exists - why close this problem in Helm when everyone has their own? Perhaps one correct solution will not work, each will have modifications.

If you have CI / CD, there is integration with an external repository, dockers are automatically collected for each commit, set tests are run, and you can click the button and deploy it all, you have solved the problem - it is gone.

CI / CD is really a solution to the build and packaging problem, and we paint it green.

Summary

Of the 5 directions, Helm itself closes only the template engine. It immediately becomes clear why it was created. The community added the remaining solutions jointly; development, assembly and packaging problems were completely solved by external solutions. This is not completely convenient, it is not always easy to do within the framework of the established traditions within the company, but at least it is possible.

The future of Helm

I'm afraid that none of us knows for sure what Helm should come to. As we have seen, Helm developers sometimes know better what we need to do. I think that most of the problems that we examined will not be covered by the next releases of Helm.

Let's see what has been added to the current Road Map. There is such a Kuberneres Helm repository in the community , which has development plans and good documentation on what will be in the next version of Helm V3 .

Failure from Tiller, only cli

We have not discussed these details yet, so let’s take a look now. Helm architecture consists of two parts:

- The client part that you run on the local computer (cmd, etc.).

- Tiller is the second part that runs on a server inside Kubernetes.

Tiller handles the requests you send through the Command Line Interface. So you say: “I want to install this Chart” - and in fact Helm collects it, packs it, sends it to Tiller, and he already decides: “Oh, something has come to me! It looks like this is projected into the following Kubernetes resources, ”and it launches.

According to Helm developers, one Tiller per cluster should work and manage the entire technology zoo, which is in the cluster. But it turned out that in order to correctly share access, it is more profitable for us to have not one, but several Tiller'ov - for each namespace its own. So that Tiller can only create resources in the namespace in which it is running, and has no right to go to neighboring ones. This makes it possible to share the area of responsibility, the scope for different teams.

The next version of V3 Tiller will not.

Why is he needed at all? Essentially, it contains information passed through the Command Line Interface, which it uses to run resources in Kubernetes. It turns out that Kubernetes already contains exactly the same information that is contained in Tiller. But I can do the same with kubectl cli.

Instead of Tiller, an event system is introduced . There will be events on all new resources that you send to Kubernetes via the Command Line Interface: installs, changes, deletions, pre- and post-events. These events are quite a lot.

Chart Lua scripts

You cannot edit part of them, you can part, and it is possible to do this with the help of lua-scripts . At the stage of creating the Chart, you add a collection of lua scripts, which you put in a special folder. They will fully track the processing of external events. This is probably convenient. In fact, part of our problems that we discussed earlier can be solved with this approach.

Lua and events can close development problems because it will be possible to control what needs to be done when something happened, both on the server side and on the automation side of the environment too.

Unfortunately, there is no implementation yet, one can only speculate. But theoretically, the main problem for me in automating the environment we can completely close. You can write a new application in Kubernetes, send some configs to it, and with the help of a mechanism that you program yourself, the application will install anything you want. Let's see what happens.

Release object + secret release versions

In order to fully track the release process, a Release object appears with information about which Release was written. It has not yet been announced what kind of release object it will be, how it will be created, maybe it will be a CRD, or maybe not.

Snap to namespace release

This Release object will be created in the namespace in which everything was started, and accordingly, there is no need to bind Tiller to namespace - that is the problem that I talked about a little earlier.

CRD: controller

Additionally, in the distant future, developers are thinking of creating a CRD controller for Helm for those cases that cannot be covered by the standard push model. But there is still no information about the implementation of this.

Recipe Collection

Total in total, as I recommend using the system.

Of course, this is Helm . It is created by the community, all alternative solutions are created by independent teams, about which we are not sure how long they will exist. Unfortunately, the day after tomorrow they can abandon their projects, and you will be left with nothing. And Helm is still part of Kubernetes. In addition, he will somehow develop and, perhaps, solve problems.

Of course, CI / CD , automatic build by commit. In our company, we made integration with Slack, we have a bot that reports when a new build passed in master, and that all tests were successful. You tell him: “I want to install this in Staging” - and he installs, you say: “I want to run a test there!” - and he starts. Pretty comfortable.

For development use Docker-compose or Telepresence.

Multiple versions of one service

In the end, we will analyze the situation when there are two applications A and B, which depend on C, but C of different versions. Need to solve this problem:

- for development, because in fact we have to develop the same thing, but two different versions;

- for release;

- for a name conflict, because in all standard package managers, installing two packages of different versions can cause problems.

In fact, Kubernetes decides everything for us - you just need to use it correctly.

I would advise creating 4 Charts in terms of Helm, 3 repositories (for the C repository, this will just be two different branches). What is most interesting, all installations for v1 and for v2 should contain inside themselves information about the version or for what service it was created. One solution on the slide, appendix C; the release name indicates that this is version v1 for service A; the service name also contains the version. This is the simplest example, you can do it completely differently. But the most important thing is that the names are unique.

The second is transitive dependencies, and here it is more complicated.

For example, you are developing a chain of services and want to test A. For this, you must pass all the dependencies on which A depends, including transitive, to the Helm definition of your package. But at the same time, you want to develop B and also test it - how to do this is incomprehensible, because you need to put all the transitive dependencies in it just as well.

Therefore, I advise you not to add all the dependencies inside each package, but to make them independent and from the outside control what is running. This is inconvenient, but it is the lesser of two evils.

useful links

• Draft

• GitKube

• Helm

• Ksonnet

• Telegram stickers: one , two

• Sig-Apps

• KubePack

• Metaparticle

• Skaffold

• Helm v3

• Docker-compose

• Ksync

• Telepresence

• Drone

• Forge

Profile speaker Ivan Glushkov on GitHub , on twitter , on Habré .

Great news

On our youtube channel we opened a video of all the reports on DevOps from the RIT ++ festival . This is a separate playlist , but in the full list of videos there are many useful things from other conferences.

Better yet, subscribe to the channel and newsletter , because in the coming year we will have a lot of devops : in May, the framework of RIT ++; in spring, summer and autumn as a section of HighLoad ++, and a separate autumn DevOpsConf Russia .