Reliable JavaScript: chasing the myth

JavaScript is often called the “most popular language,” but nobody seems to think of JS development as “the safest,” and the ecosystem has plenty of underlying problems. How can you work around them effectively?

Ilya Klimov started thinking about this when errors turned out to be very expensive (literally), and as a result he gave a talk at HolyJS. Since the audience reviews were excellent, we have prepared a text version of the talk for Habr. Below the cut you will find both the text and the video.

Hello. My name is Ilya Klimov, I'm from Kharkov, Ukraine. I have my own small outsourcing company of up to ten people. We do everything that people pay money for... in the sense that we program in JavaScript in all industries. Today, talking about reliable JavaScript, I want to share my experience from roughly the past year, since this topic has started to bother me quite seriously.

Those who work in outsourcing will perfectly understand the contents of the next slide:

Everything we are going to talk about, of course, has nothing to do with reality. As they say in South Park, all the characters are parodied, and poorly at that. Naturally, wherever there was a suspicion of an NDA violation, the material was cleared with the customers' representatives.

Nothing influenced my thinking about reliability as much as starting my own company. When you start your own company, it suddenly turns out that you can be a very cool programmer and have very cool people, but sometimes incredible things happen, things that seem impossible and completely crazy.

I have an educational project, JavaScript Ninja. Sometimes I make promises. Sometimes I even keep them. I promised to record a video about Kubernetes in 2017 as part of the project. On December 31 I realized that I had made this promise and that it would be good to keep it. So I sat down to record (here's the result).

Since I like to record videos that are as close to reality as possible, I used examples from a real project. As a result, I deployed in the demo cluster a piece that took real orders from real production and put them into a separate database in my demo Kubernetes cluster.

Since it was December 31, some of the orders went nowhere. This was written off as seasonality: everyone had gone off to drink tea. By the time the customer woke up, around January 12-13, the total cost of the video had reached about $500,000. I had never had such an expensive production before.

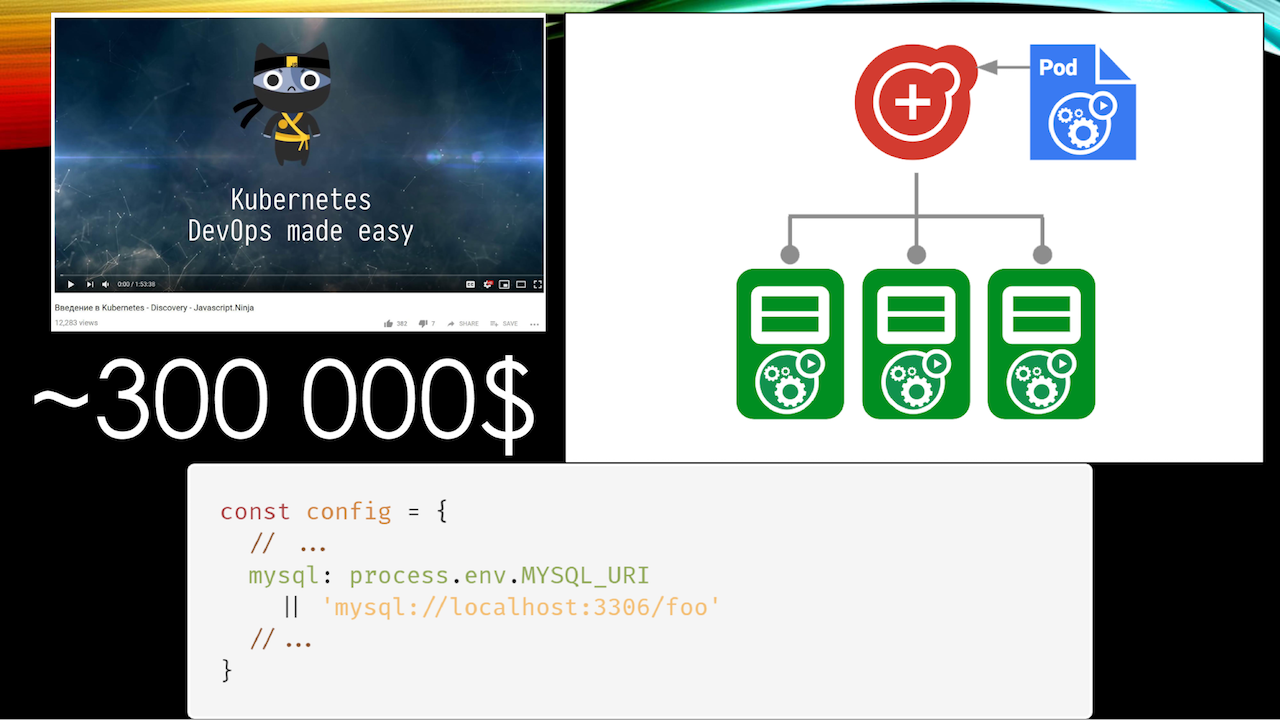

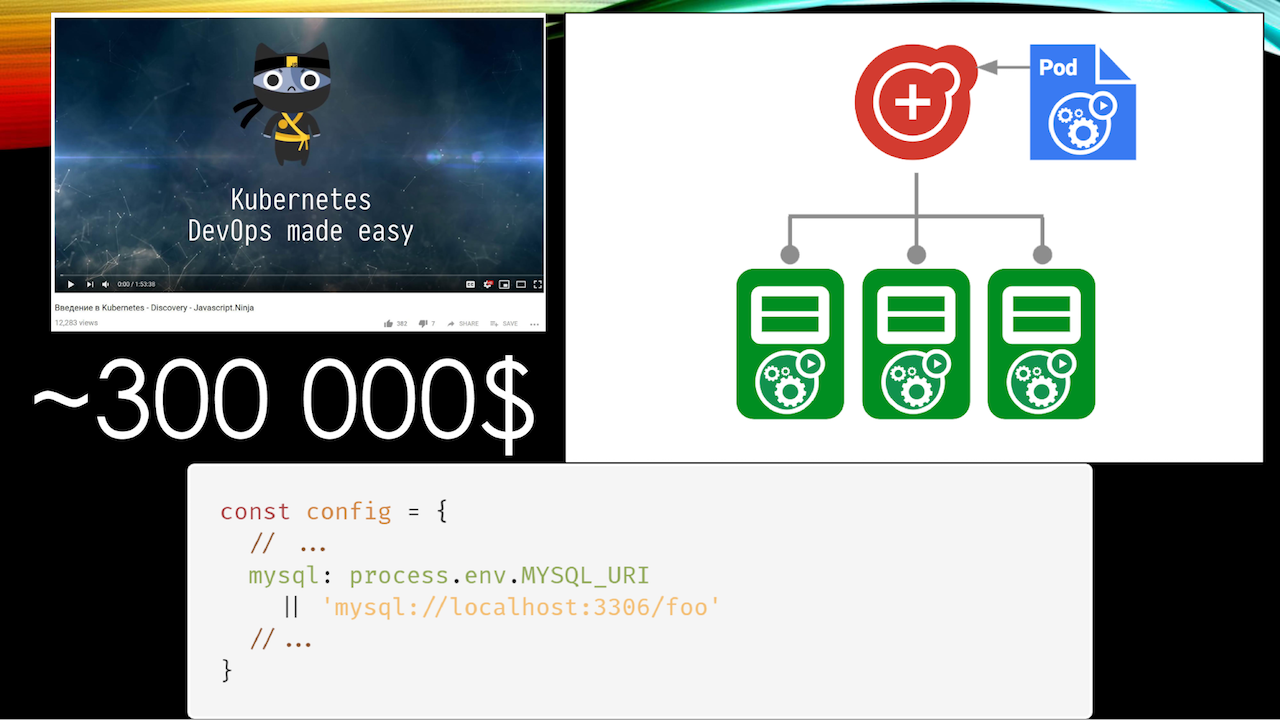

Example number two: another Kubernetes cluster. The newfangled Infrastructure as Code ecosystem: everything that can be described by code and configs is described that way, Kubernetes is driven programmatically from JavaScript wrappers, and so on. Cool, everyone likes it. The deployment procedure gets changed a little, and then comes the moment when you need to deploy a new cluster. The following situation occurs:

Many of you probably have a line like this in your configs. That is, we take the settings from a MySQL variable or fall back to a local database.

Because of a typo in the deployment system, the system was once again configured as production, but for MySQL it used the local database that was meant for tests. This time less money was lost: only $300,000. Fortunately, this was not my company but a place where I worked as a consultant.
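The slide with the config line is not reproduced here, so the following is only a minimal sketch of the failure mode and one way to guard against it. The names `MYSQL_URL`, `NODE_ENV` and the local fallback URL are assumptions made for illustration, not the project's real settings. The idea is that a silent fallback to a local database is tolerable in development but should be a hard error in production.

```javascript
// Hypothetical helper: resolve the database URL from an environment-like object.
// MYSQL_URL, NODE_ENV and LOCAL_DB_URL are illustrative names, not from the talk.
const LOCAL_DB_URL = "mysql://localhost:3306/test";

function resolveDatabaseUrl(env) {
  const url = env.MYSQL_URL;
  if (typeof url === "string" && url.trim() !== "") {
    return url;
  }
  // A silent fallback is only acceptable outside production:
  // a typo in the variable name must not quietly select the test database.
  if (env.NODE_ENV === "production") {
    throw new Error("MYSQL_URL must be set when NODE_ENV is 'production'");
  }
  return LOCAL_DB_URL;
}
```

With this shape, a misspelled variable name in a production deployment stops the process at startup instead of quietly pointing it at the test database.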

You might think that none of this concerns you as a front-end developer, since I was talking about DevOps (by the way, I am delighted with the name of the DevOops conference, it perfectly describes the essence). But let me tell you about one more situation.

There is a system that monitors veterinary epidemics in Ethiopia, developed under the auspices of the UN. One of its interface elements is a form where an operator manually enters coordinates: when and where outbreaks of a particular disease occurred.

There is an outbreak of yet another foot-and-mouth disease (I am no expert in cow diseases), and in a hurry the operator accidentally presses the “add” button twice, leaving the fields empty. Since this is JavaScript, and JavaScript is so very good with types, the empty latitude and longitude fields are happily converted to zero coordinates.

No, we did not send a doctor far out into the ocean. But it turned out that for displaying the map, building reports and analytics, and analyzing where to place people, all of this is precomputed on the backend using clustering: we try to merge outbreak points.

As a result, the system is paralyzed for a day, because the backend is trying to compute clusters that include this point. All the data becomes irrelevant, and the doctors receive completely nonsensical orders along the lines of “travel 400 kilometers”. 400 kilometers across Ethiopia is a dubious pleasure.

The total loss is estimated at about a million dollars. The report on the incident said: “We were the victim of an unfortunate set of circumstances.” But we know that it was JavaScript!
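The root of the story above is that `Number("")` evaluates to `0` in JavaScript, so an empty form field silently becomes a valid-looking coordinate. A minimal sketch of a parser that refuses empty input could look like this; the function names and the shape of the result object are assumptions made for illustration, not code from the real system.

```javascript
// Number("") and Number("   ") are both 0, so an empty field has to be
// rejected before the conversion, not after it.
function parseCoordinate(raw, min, max) {
  if (typeof raw !== "string" || raw.trim() === "") {
    return { ok: false, error: "coordinate is required" };
  }
  const value = Number(raw);
  if (!Number.isFinite(value) || value < min || value > max) {
    return { ok: false, error: "coordinate is out of range" };
  }
  return { ok: true, value };
}

// Hypothetical wrappers with the usual geographic bounds.
const parseLatitude = (raw) => parseCoordinate(raw, -90, 90);
const parseLongitude = (raw) => parseCoordinate(raw, -180, 180);
```

A form handler would then refuse to submit unless both `parseLatitude` and `parseLongitude` return `ok: true`, which also makes an accidental second click on “add” harmless.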

And the last example. Unfortunately, although this story happened quite a long time ago, I still cannot name the company. But it is a company that has its own airline, its own hotels, and so on. It works very actively with businesses, that is, it provides them with a separate interface for booking tickets and so on.

One day, by a ridiculous accident, an order comes in to book 999,999 tickets from New York to Los Angeles. The company's system happily bought up all the seats on its own airline's flights, found that there were not enough, and sent a request to an international reservation system to cover the shortage. The international reservation system, seeing a request for approximately 950,000 tickets, happily disconnected this airline from its system.

Since a disconnection is an extraordinary event, the problem was resolved within seven minutes. However, the fines that had to be paid for those seven minutes came to a mere $100,000.

Fortunately, all this did not happen in a single year. But these cases made me think about ensuring reliability and ask the two eternal Russian questions: who is to blame, and what is to be done?

If you analyze a lot of stories, you will find that there are more stories about such problems related to JavaScript than to any other programming language. This is not my subjective impression but the result of mining news on Hacker News. On the one hand, it is a hipster and subjective source; on the other hand, it is quite difficult to find any saner source of data in the field of programming.

Moreover, a year ago I took part in a competition where I had to solve algorithmic problems every day. Since I was bored, I solved them in JavaScript using functional programming. I wrote an absolutely pure function, and in the then-current Chrome it worked correctly 1197 times and produced a different result 3 times. It was just a small bug in the TurboFan optimizer, which had just landed in mainstream Chrome.

Of course, it was fixed, but you understand what this means: for example, if your unit tests passed once, that does not mean the code will work in production. That is, the code ran about 1197 times, then the optimizer came along and said: “Wow! A hot function! Let's optimize it.” And the optimization produced a wrong result.

In other words, one of the first reasons why this happens is the youth of the ecosystem. JavaScript is a fairly young industry when it comes to serious programming, the kind where millions are at stake and the cost of an error is measured in five or six digits.

For a long time, JavaScript was perceived as a toy. Because of this (and not because we ourselves do not take it seriously), we still suffer from a lack of tools.

Therefore, to fight this cause, which underlies everything I will talk about today, I tried to formulate rules of reliability that I could impose on my company or pass on to others as a consultant. As the saying goes, “rule number one: do not talk about reliability.” But more seriously...

Including, by the way, spell checking:

It all starts with the simplest things. It would seem that everyone has been using Prettier for a long time. But only in 2018 did this good and useful thing that we all use learn to work with git add -p, when we add files to the git repository partially and want to format the code nicely, say, before sending it to the main repository. The fairly well-known lint-staged utility, which lets you check only the files that have changed, has exactly the same problem.

Let's keep playing Captain Obvious: ESLint. I will not ask who here uses it, because there is no point in the whole room raising their hands (well, I hope so and do not want to be disappointed). Better raise your hand if you have your own custom-written ESLint rules.

Such rules are one of the most powerful ways to automate away the mess that arises in projects where junior-level people write code, and the like.

We all want a certain level of isolation, but sooner or later a situation arises: “Look, Vasya implemented this helper somewhere in his component's directory, right next to it. I won't move it to common now, I'll do it later.” The magic word is “later.” As a result, instead of vertical dependencies (where upper elements include lower ones and lower ones never reach up to the upper ones), the project gets component A depending on component B, which lives in a completely different branch. Component A thus becomes much less portable.

By the way, my respect to Alfa-Bank: they have a very good and beautifully written React component library, and it is a pleasure to use precisely in terms of code quality.

A trivial ESLint rule that tracks where you import entities from lets you significantly improve code quality and preserve your mental model during code review.
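As an illustration of such a rule, here is a minimal sketch in the standard ESLint rule format (an object with `meta` and `create`). The rule itself, its name and the limit of two parent hops are hypothetical choices for this example, not rules from the speaker's projects. It reports any import whose path climbs too many directories, which is a cheap proxy for "component A reaches into a different branch of the tree".

```javascript
// Sketch of a custom ESLint rule object. The rule name and the depth limit
// are hypothetical; the meta/create shape is the standard ESLint rule format.
const MAX_PARENT_HOPS = 2;

const noDeepParentImports = {
  meta: {
    type: "problem",
    docs: { description: "disallow imports that climb too many directories" },
    schema: [],
  },
  create(context) {
    return {
      // Called by ESLint for every `import ... from "..."` statement.
      ImportDeclaration(node) {
        const source = node.source.value;
        const hops = source.split("/").filter((part) => part === "..").length;
        if (hops > MAX_PARENT_HOPS) {
          context.report({
            node,
            message: `Import "${source}" climbs ${hops} directories; move the shared code to a common module.`,
          });
        }
      },
    };
  },
};
```

In a real setup the object would be exported from a local plugin and enabled in the ESLint config, so the reviewer never has to trace import paths by hand.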

By front-end standards I am already old. Recently a large, serious company, PricewaterhouseCoopers, completed a study in the Kharkiv region, and the average age of a front-end developer turned out to be about 24-25. It is hard for me to keep all this in my head; I want to focus on business logic when reviewing a pull request. So I am happy to write ESLint rules so that I do not have to think about such things.

It would seem that the stock rules could be tuned for this, but reality is usually much more frustrating, because it turns out that a React component (unfortunately, it is still alive) needs some Redux selectors. And these selectors live somewhere in a completely different hierarchy, hence “../../../..”.

Or, even worse, a webpack alias, which breaks about 20% of other tools, because not all of them understand how to work with it. For example, my beloved Flow.

So the next time you want to yell at a junior (a favorite pastime of programmers), think about whether you can automate the check somehow to avoid such mistakes in the future. In an ideal world you would, of course, write instructions, which no one reads anyway. The HolyJS speakers are talented specialists with a lot of experience, but when someone at an internal meeting proposed writing instructions for speakers, the answer was “they won't read them anyway.” And these are the people we are supposed to look up to!

The last of the trivial things, and then we move on to the hardcore stuff. These are any tools for running pre-commit hooks. We use husky, and I could not resist inserting this beautiful photo of a husky, but you can use something else.

If you think all this is very simple, then, as they say, hold my beer: we will soon see that everything is more complicated than you think. A few more points:

If you are not writing in TypeScript, you may want to think about it. I do not like TypeScript, I traditionally root for Flow, but we can talk about that later; here on stage I will, with disgust, promote the mainstream solution.

Why? The TC39 committee recently had a very big discussion about where the language is going. They came to a very funny conclusion: TC39 always has its “swan, crayfish and pike” dragging the language in different directions, but there is one thing that everyone always wants, and that is performance.

Unofficially, in an internal discussion, TC39 put it this way: “we will always design JavaScript so that it stays performant, and those who do not like it will pick some language that compiles to JavaScript.”

TypeScript is a pretty good alternative with a mature ecosystem. I cannot fail to mention my love for GraphQL. It is really good; unfortunately, nobody will let you introduce it into the huge number of existing projects that we have to work on.

There have already been GraphQL talks at the conference, so just one remark on reliability: if you use, say, Express GraphQL, then in addition to a specific resolver you can attach validators that tighten the requirements for a value compared to the standard GraphQL types.

For example, I would like the amount of a transfer between two customers of any banks to be positive. Because just yesterday a pop-up in my online banking happily announced that I have -2 unread messages from the bank. And this is supposedly the leading bank in my country.

As for these validators that impose additional strictness: using them is a good and sensible idea, just do not use them the way, say, GraphQL suggests, because that ties you very tightly to GraphQL as a platform. Meanwhile, the validation you do is needed in two places at once: on the frontend, before we send and receive data, and on the backend.

I regularly have to explain to customers why we chose JavaScript for the backend and not X. And X is usually some PHP, not a nice Go or the like. I have to explain that we can reuse code as efficiently as possible, including between the client and the backend, because they are written in the same programming language. Unfortunately, as practice shows, this thesis often remains just a phrase at a conference and is never put into practice.
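One way to make the "same language on both sides" argument real is to keep validation in a plain module with no GraphQL, Express or DOM dependencies, so that the frontend and the backend can call the same function. The sketch below is an illustration under that assumption; the function name and the upper limit are hypothetical.

```javascript
// Hypothetical shared module: no framework dependencies, so the same function
// can run in the browser before sending and on the server before saving.
const MAX_TRANSFER_AMOUNT = 1000000; // illustrative business limit

function validateTransferAmount(amount) {
  const errors = [];
  if (typeof amount !== "number" || !Number.isFinite(amount)) {
    errors.push("amount must be a finite number");
    return errors;
  }
  if (amount <= 0) errors.push("amount must be positive");
  if (amount > MAX_TRANSFER_AMOUNT) errors.push("amount exceeds the limit");
  return errors;
}
```

A resolver-level validator can then be a thin wrapper around this function instead of the only place where the rule lives.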

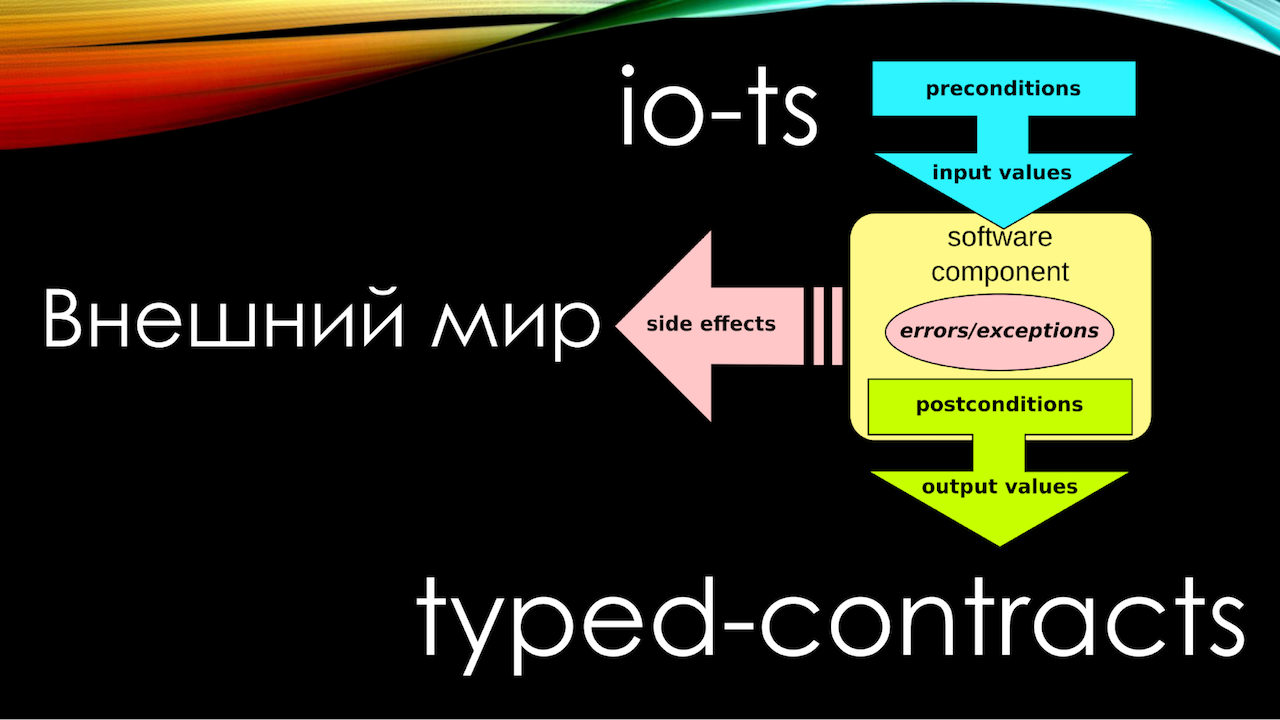

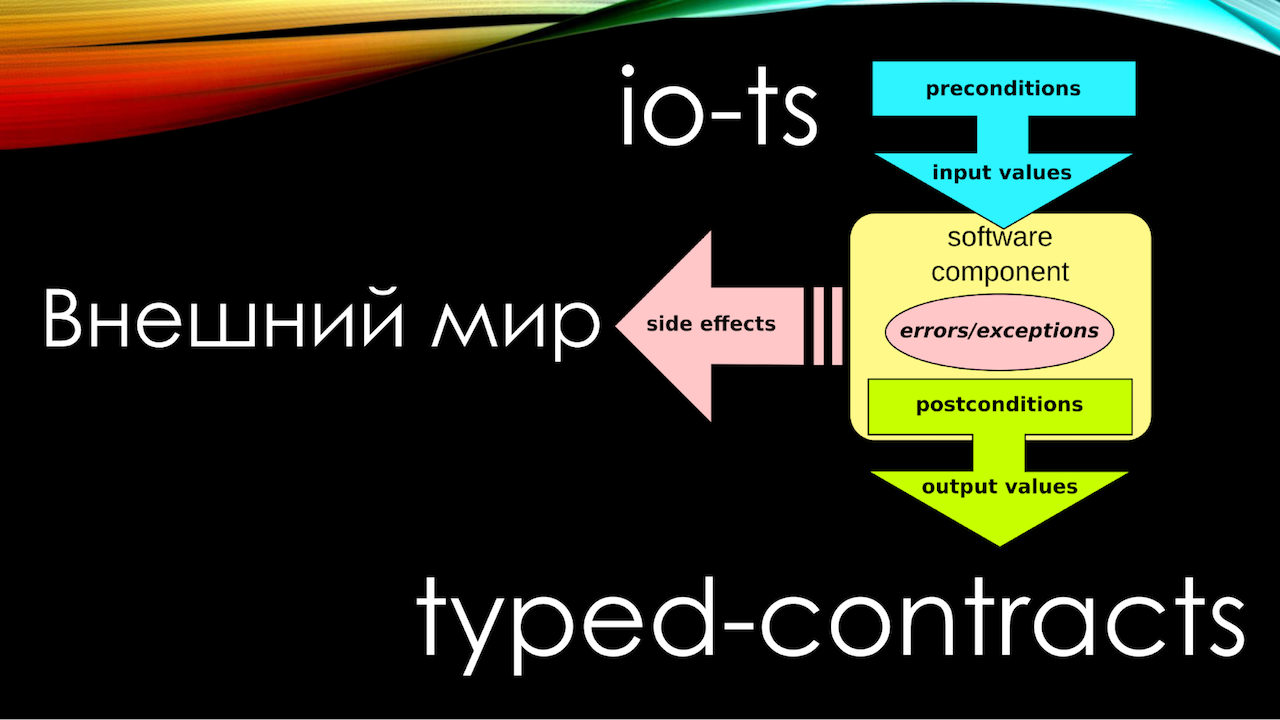

I have already talked about the youth of the ecosystem. Contract programming has existed as a basic approach for over 25 years. If you write in TypeScript, take io-ts; if you write in Flow, like me, take typed-contract. You get a very important thing: the ability to describe runtime contracts from which static types are derived.

There is nothing worse for a programmer than having more than one source of truth. I know people who lost five-figure sums in dollars simply because the type they described in a statically typed language (they used TypeScript; well, of course, that is just a coincidence) and the runtime type (described with something like tcomb) were slightly different.

So the error was not caught at compile time, simply because there seemed to be no reason to check it. There were no unit tests for it either, because the static type checker was supposed to cover it. It makes no sense to test things that were checked at the layer below; everyone remembers the testing hierarchy.

Because the two contracts drifted out of sync over time, one day a transfer was sent to the wrong address. Since this was a cryptocurrency, rolling back the transaction is slightly more than impossible in principle. Nobody is going to fork Ethereum for you again. So contracts and contract programming are the first thing you should start doing tomorrow.
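To make the idea of a runtime contract concrete, here is a hand-rolled sketch. It deliberately does not use the io-ts or typed-contract APIs; the combinators `string`, `positiveNumber` and `object` and the `Transfer` shape are invented for this example. In TypeScript, a library such as io-ts additionally derives the static type from a description like this, which is what removes the second source of truth; plain JavaScript can only show the runtime half.

```javascript
// Hand-rolled contract combinators; NOT the io-ts or typed-contract API.
// Each contract takes a value and returns a list of error messages.
const string = (value) => (typeof value === "string" ? [] : ["expected a string"]);

const positiveNumber = (value) =>
  typeof value === "number" && Number.isFinite(value) && value > 0
    ? []
    : ["expected a positive number"];

// Build a contract for an object out of contracts for its fields.
const object = (shape) => (value) => {
  if (typeof value !== "object" || value === null) return ["expected an object"];
  return Object.entries(shape).flatMap(([key, contract]) =>
    contract(value[key]).map((error) => `${key}: ${error}`)
  );
};

// Hypothetical transfer contract: the single description of the data shape.
const Transfer = object({ to: string, amount: positiveNumber });

// Throws at the system boundary instead of letting bad data travel further.
function decodeTransfer(input) {
  const errors = Transfer(input);
  if (errors.length > 0) throw new TypeError(errors.join("; "));
  return input;
}
```

Calling `decodeTransfer` at every boundary (HTTP handler, queue consumer, form submit) means the shape is checked where the data actually enters the system, not only where the type checker could see it.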

The next problem is isolation. It is multifaceted. When I worked for the company with the hotels and flights, they had an application on Angular 1. That was a long time ago, so it is forgivable. A team of 80 people worked on this application. Everything was covered with tests. Everything was fine until one day I finished my feature and found during testing that I had broken absolutely incredible parts of the system that I had not even touched.

It turned out I had a problem with creativity: I had accidentally given my service the same name as another service that already existed in the system. Since it was Angular 1, where the service system is not strictly typed but stringly typed, Angular quite calmly started handing out my service in completely different places and, ironically, a couple of method names coincided.

This, naturally, was no coincidence: if two services have the same name, they most likely do more or less the same thing. Both were services for calculating discounts. But one module calculated discounts for corporate clients, while the second, the one with my name, calculated promotional discounts.

Obviously, when 80 people are working on an application, it is large. The application used code splitting, which means that the order in which modules were loaded depended directly on the user's journey through the site. To make things even more interesting, it turned out that not a single end-to-end test, each of which walked through the site following a specific business scenario, caught this error. Because, apparently, nobody ever needs to deal with both discount modules at the same time. True, it completely paralyzed the work of the site's admins, but who hasn't that happened to.
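The mechanism behind this story can be sketched with a toy string-keyed registry. This is not Angular's injector, only an illustration of "stringly typed" lookup: with the loose method, whichever module loads last silently replaces the service for everyone, while the strict method turns a name collision into an immediate error.

```javascript
// Illustration only; this is not Angular's actual injector.
class ServiceRegistry {
  constructor() {
    this.services = new Map();
  }

  // Loose behavior: a later registration silently replaces the earlier one.
  registerLoosely(name, service) {
    this.services.set(name, service);
  }

  // Safer variant: a duplicate name is an error at registration time.
  register(name, service) {
    if (this.services.has(name)) {
      throw new Error(`Service "${name}" is already registered`);
    }
    this.services.set(name, service);
  }

  get(name) {
    if (!this.services.has(name)) throw new Error(`Unknown service "${name}"`);
    return this.services.get(name);
  }
}
```

With code splitting, the loose variant makes the winner depend on the order in which chunks happen to load, which is exactly why scenario-based end-to-end tests can miss it.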

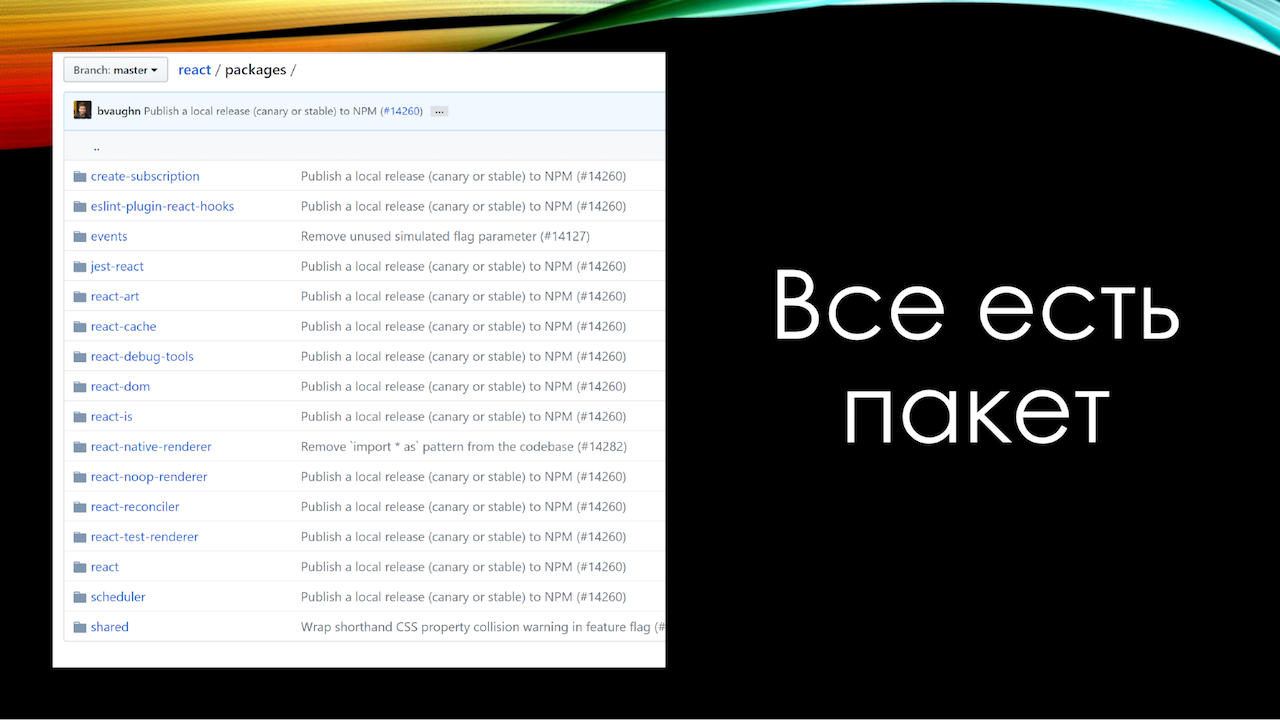

The problem of isolation is very well illustrated by the logo of one of the projects that partially solve this problem. This is Lerna.

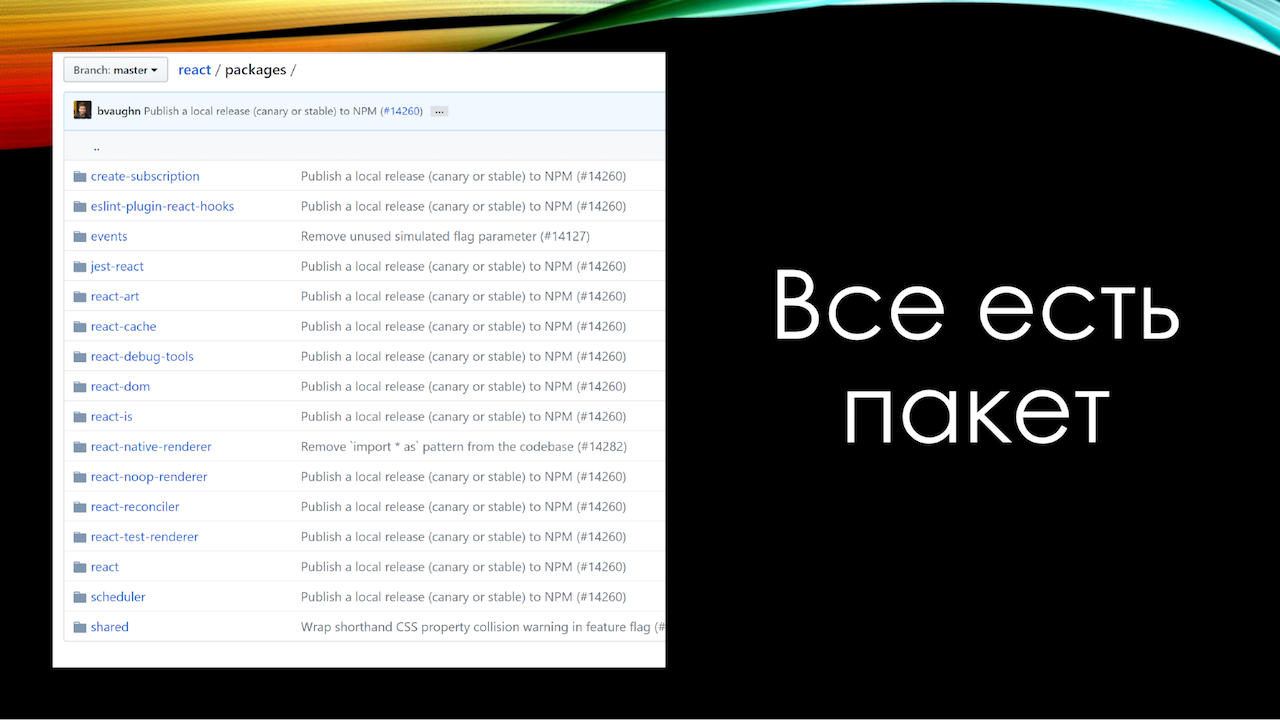

Lerna is an excellent tool for managing multiple npm packages in one repository. When you have a hammer in your hands, everything starts to look suspiciously like a nail. When you have a Unix-like system with the right philosophy, everything starts to look suspiciously like a file. Everyone knows that in Unix systems everything is a file. There are systems where this is taken to the extreme (I almost said “to the point of absurdity”), like Plan 9.

I know organizations that, having suffered with ensuring the reliability of a giant application, came up with one simple idea: everything is a package.

When you move some piece of functionality, be it a component or something else, into a separate package, you automatically get an isolation layer. Simply because you cannot easily reach from one package into another. And also because the way packages in a monorepo are wired together via npm link or Yarn Workspaces is so awful and unpredictable internally that you cannot even resort to a hack and pull in some file through “node_modules something”, simply because the resulting structure differs from person to person. It depends, in particular, directly on the version of Yarn: in one of the versions, the mechanism by which Yarn Workspaces organizes packages was quietly changed completely.

The second example of isolation, to show that the problem is multifaceted, is a package that I now try to use everywhere: cls-hooked. You may know another package that implements the same thing: continuation-local-storage. It solves a very important problem that PHP developers, for example, never run into.

I am talking about isolating each individual request. In PHP, on average, all requests are isolated; unless you resort to perversions like shared memory, they cannot interact with each other, and everything is good, peaceful and beautiful. In essence, cls-hooked adds the same thing, letting you create execution contexts, put local variables in them, and then, most importantly, destroy these contexts automatically so that they do not keep eating your memory.

cls-hooked is built on async_hooks, which in Node.js still has experimental status, but I know more than a couple of companies that use it in fairly harsh production and are happy. There is a slight drop in performance, but automatic garbage collection is invaluable.
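To show what per-request context looks like in code, here is a small sketch. Note the swap: instead of cls-hooked it uses `AsyncLocalStorage`, the class that later versions of Node.js ship in the same `async_hooks` module, so the example runs without third-party packages. The helper names `withRequest`, `currentRequestId` and `handle` are hypothetical.

```javascript
const { AsyncLocalStorage } = require("async_hooks");

const requestContext = new AsyncLocalStorage();

// Run `handler` inside a context that belongs to one request only.
function withRequest(requestId, handler) {
  return requestContext.run({ requestId }, handler);
}

// Can be called at any depth of the call stack, including after awaits,
// without passing the request object through every function.
function currentRequestId() {
  const store = requestContext.getStore();
  return store ? store.requestId : undefined;
}

// Simulated asynchronous request handler.
async function handle() {
  await new Promise((resolve) => setTimeout(resolve, 10));
  return currentRequestId();
}

// Two overlapping "requests"; each one should see only its own id.
const overlapping = Promise.all([withRequest("a", handle), withRequest("b", handle)]);
```

Outside of any `withRequest` call, `currentRequestId()` returns `undefined`, and the stored object becomes eligible for garbage collection once the asynchronous work started inside the context has finished.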

When we start talking about isolation and about pushing different things into different node modules, I come to the first criterion, which I derived for myself. Ten years ago I would have disagreed with it myself and squealed that JavaScript is a dynamic language and that I am being deprived of all the beauty and expressiveness of the language. This is the grep test.

What is it? Many people write code in vim. This means that unless you use something relatively newfangled like a Language Server, the only way to find where an identifier is mentioned is, roughly speaking, grep. Of course, there are variations on the theme, but the general idea is clear. The grep test says that you must be able to find a function's definition and all of its calls with plain grep. You will say: everyone does that, nothing special.

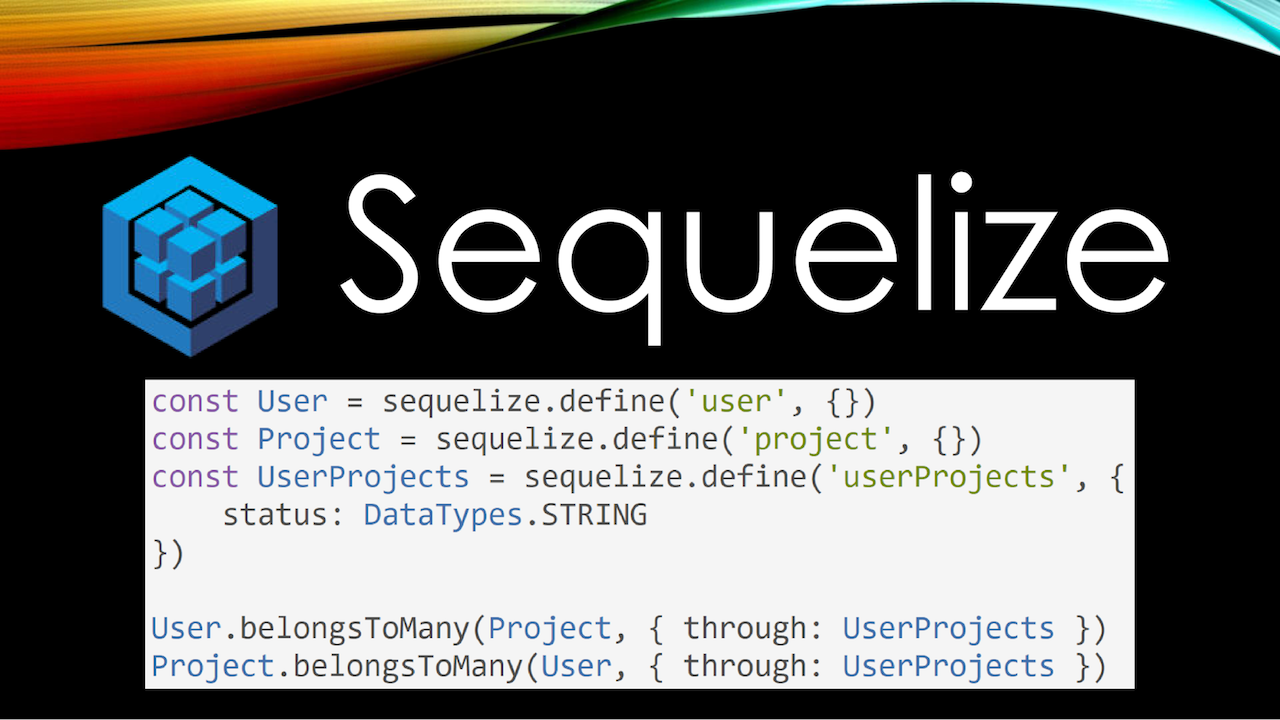

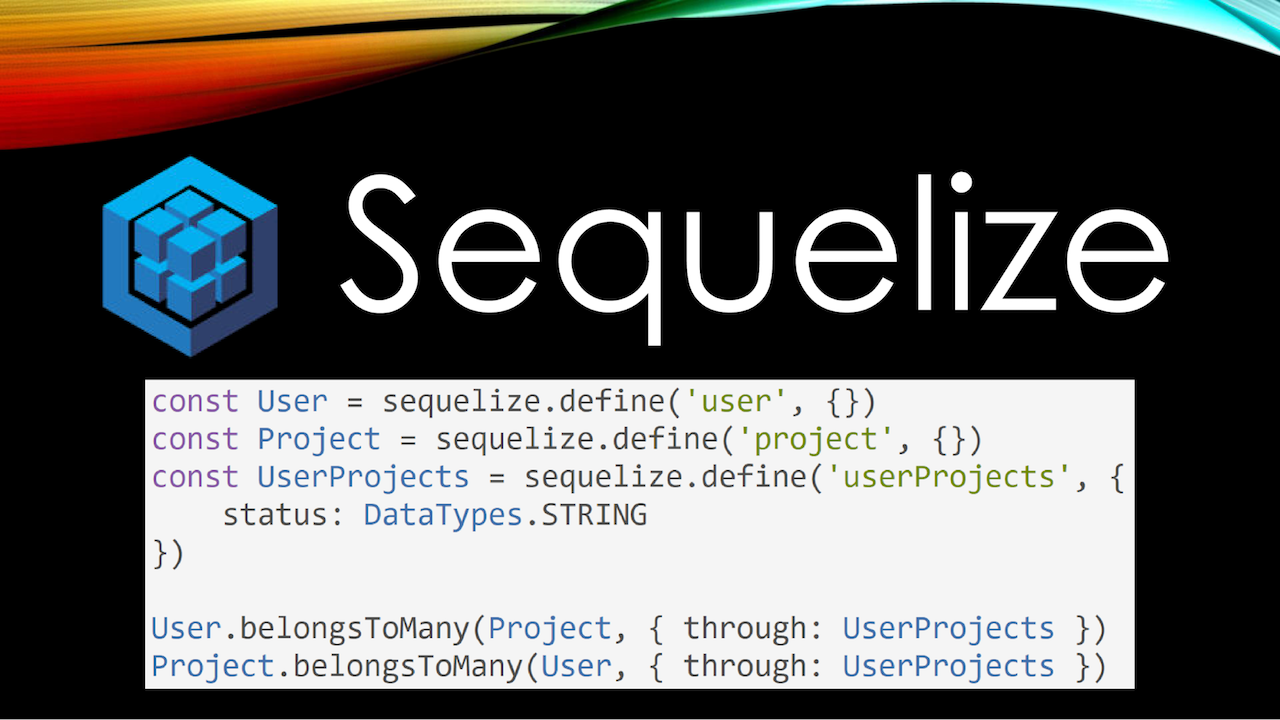

Take Sequelize. It is the most popular ORM for relational databases. And take the completely simple code user.getProjects(). Where do you think the getProjects method comes from? It appears by magic.

Every time I need to describe relationships between tables in Sequelize, I get depressed, because I always confuse hasMany and belongsToMany. It is not that difficult, but every time I feel my brain straining, and sooner or later I still make a mistake. The worst thing about this approach is that such things are very easy to miss in review.
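The following toy example shows why generated methods fail the grep test. It is not how Sequelize is implemented; `hasMany` here is a made-up helper with a made-up signature. The point is only that the method name is assembled from a string, so searching the code base for the definition finds nothing, while the explicit class below it is trivially greppable.

```javascript
// Illustration of why generated methods fail the grep test.
// This is NOT Sequelize's implementation; hasMany here is a toy helper.
function hasMany(SourceClass, targetName, loadRelated) {
  // The method name is assembled from a string, so the full name is never
  // written out at the place where the method is defined.
  const methodName = `get${targetName}s`;
  SourceClass.prototype[methodName] = function () {
    return loadRelated(this);
  };
}

class User {
  constructor(id) {
    this.id = id;
  }
}

hasMany(User, "Project", (user) => [`project-of-${user.id}`]);

// Grep-friendly alternative: the method is written out by name.
class ExplicitUser {
  constructor(id) {
    this.id = id;
  }

  getProjects() {
    return [`project-of-${this.id}`];
  }
}
```

Both classes behave the same at runtime; the difference shows up only when a reviewer tries to find where the method comes from.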

I talk a lot about code review because we can automate anything, but we can never foresee everything. The last line of defense for reliability will always be people. Our task is to simplify this person's work as much as possible, so that they have to think about as few things as possible.

I have repeated this phrase several times already, but I still like it: “a merge request of 20 lines gets 30 comments, a merge request of 5000 lines gets 'looks good to me'.” This is absolutely true, I am like that myself.

We have a person in JavaScript Ninja who seems to have taken a junior developer position and, against our will, shares with us the successes of his refactoring. Yesterday he posted a screenshot: “migrated half of the project to React and Redux”, with 8,000 lines added and 10,000 deleted. I am looking forward to seeing how it gets released, as if waiting for “the new season to come out”. According to him, he was told “everything is fine”, and he sincerely believes that this merge request cannot be split into separate parts.

To those who believe that such merge requests are justified and really cannot be broken into parts, I advise taking a cue from the Linux kernel. It is an excellent example of a repository where everyone works not with commits but with patch sets. That is, patches are sent to you by mail, and you have to apply them to your git tree. You know, mail is not the best medium for working with patches. Reviewing a large piece of code in mail is inconvenient; in particular, it is hard to comment on a specific line. In mail, the context gets lost very quickly.

As a result, the author is forced to split the patch set into separate isolated pieces, and each piece must be reviewed separately. So even a complicated refactoring starts by adding new functionality to the very foundation, then adding some infrastructure on top of that functionality, and only at the end do we start moving subsystem after subsystem to the new foundation.

I know what I am talking about. My Microsoft Surface laptop works almost perfectly under Linux, but the battery status is not displayed. And I watch very closely how people reverse-engineer the protocol and gradually prepare patches for inclusion in the main kernel branch; it is very hard. The skill of breaking a large change into separate small patch sets is fundamentally important for reliability.

Magic is not only “forbidden outside Hogwarts”; it can also be harmful or useful. For example, most of you are unlikely to understand all the magic of the React reconciler. Because if we are talking about React 16, Fiber is hugely complex.

In fact, the Fiber developers implemented a call stack from scratch inside JavaScript (almost entirely lifted from OCaml) in order to be able to process component renders asynchronously. Then they introduced a scheduler, which closely resembles the code of the first primitive operating system schedulers. Then they realized that a scheduler is very hard, so they created a proposal to implement it directly in JavaScript. The scheduler proposal is at stage 0.

Nevertheless, React's magic lies in how it does things as fast as possible. What it does is clear: we call render, it uses a virtual DOM. But how it does that quickly is magic. This is useful magic, because it is controllable.

I will give an example from another framework, Vue.js. Which of you likes Vue? I am glad there are fewer and fewer of you. I admired Vue for a long time; now I am in a period of great disappointment, and I will explain why.

An abstract project on Vue is much faster than an abstract project on React. A project written by a junior on Vue absolutely definitely runs much faster than a project written by a junior on React.

The point is the magic of reactivity. Vue can track which components depend on which pieces of state, no matter where that state lives, and each time it re-renders only the necessary components. It is a very cool idea that lets you not think about performance. That is what won me over to Vue back then.

The second cool idea. Surely many of you, if not all, are familiar with the philosophy of Web Components and of transclusion, where we have slots and the child content is inserted into a hole in another component. The simplest example of a slot: say we have a pop-up that contains an overlay, a close button and so on, and we need to pass some content into it. Just passing in a fragment is sensible.

Vue has slots on steroids: scoped slots. They are needed when we have to pass some data into the slot as well. The simplest example of a scoped slot: there is a table, and you want to customize each row. That is, you need a custom render for each row. In React, smart hipsters now use render props for this: beautiful, declarative. In Vue, you simply insert a piece of template and do not think about the fact that it, too, compiles into a render function.

As soon as your component has scoped slots, your vaunted Vue reactivity system turns into a pumpkin. Right in the Vue source code it says: check whether the child component being updated has scoped slots, and if so, call forceUpdate. As soon as the parent is updated, the child is re-rendered too. In certain situations this becomes a performance disaster.

In React, for all its magic, we can control that magic. We have, say, shouldComponentUpdate(), which prevents a component from being re-rendered. With Vue, all we can do is stare at it for a long time: we have no mechanism to, say, prevent a component from being re-rendered. We have to invent completely phenomenal crutches for this. This is fixed in Vue 3. That is a separate story; someday it will definitely be released.

The last item is Jest, an excellent testing framework from Facebook. I honestly consider it the leading framework in the JavaScript testing world right now: beautiful, expressive, effective. It can mock imports. It works great, as long as it works.

The problem is that imports, as defined by ECMAScript 2015, are static. You cannot swap the implementation of an import, and you cannot declare an import inside an if: with require you can, with import you cannot. Imports are, in principle, not replaceable; they must be resolved before the module itself is evaluated. Jest uses Babel to compile imports into require calls and then substitutes them.

One day, you decide to be a hipster and use ES modules (.mjs) in Node, which are still a proposal but will become the standard very soon. Because it is unnatural to have two module systems in the JavaScript world: one with require, the other with imports. You want to write imports everywhere. And then you discover that Jest is powerless against such modules, because the Node team decided to follow the specification strictly, and replacing imports is impossible in principle. That is, the result of evaluating an import is a frozen object that cannot be thawed.

In the end, after pain and suffering, you arrive where other programming languages arrived many, many years ago. You realize that you need the Inversion of Control pattern and, preferably, some Dependency Injection container.
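Before reaching for a container, the core of Inversion of Control can be shown with plain functions: the service receives its collaborators as arguments instead of importing them, so a test passes fakes and nothing in the module system has to be replaced. The service, its dependencies and the numbers below are hypothetical and serve only as an illustration.

```javascript
// Hypothetical service: dependencies arrive as arguments instead of imports,
// so a test can pass fakes without touching the module system.
function createPriceService({ getRate, now }) {
  return {
    priceInUsd(amountEur) {
      const rate = getRate("EUR", "USD");
      return { amount: amountEur * rate, quotedAt: now() };
    },
  };
}

// In a test: plain fake functions, no import mocking needed.
const testService = createPriceService({
  getRate: () => 2,
  now: () => 0,
});
```

In production code the same factory would be called once, at the composition root, with the real rate provider and `Date.now`; a DI container automates exactly that wiring.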

And, as you understand, this philosophy has long been present on the frontend. Angular is built entirely around the IoC / DI philosophy. In React, Mr. Abramov himself... By the way, do you know why React is cooler than Vue? Because the site dan.church is registered, but there is no evan.church yet.

So, Abramov said that context in React is essentially an implementation of the Dependency Injection pattern: it lets you put dependencies into the context and pull them out at the levels below. In Vue, the options are even called inject and provide. That is, on the frontend this pattern has long been present in an implicit form.

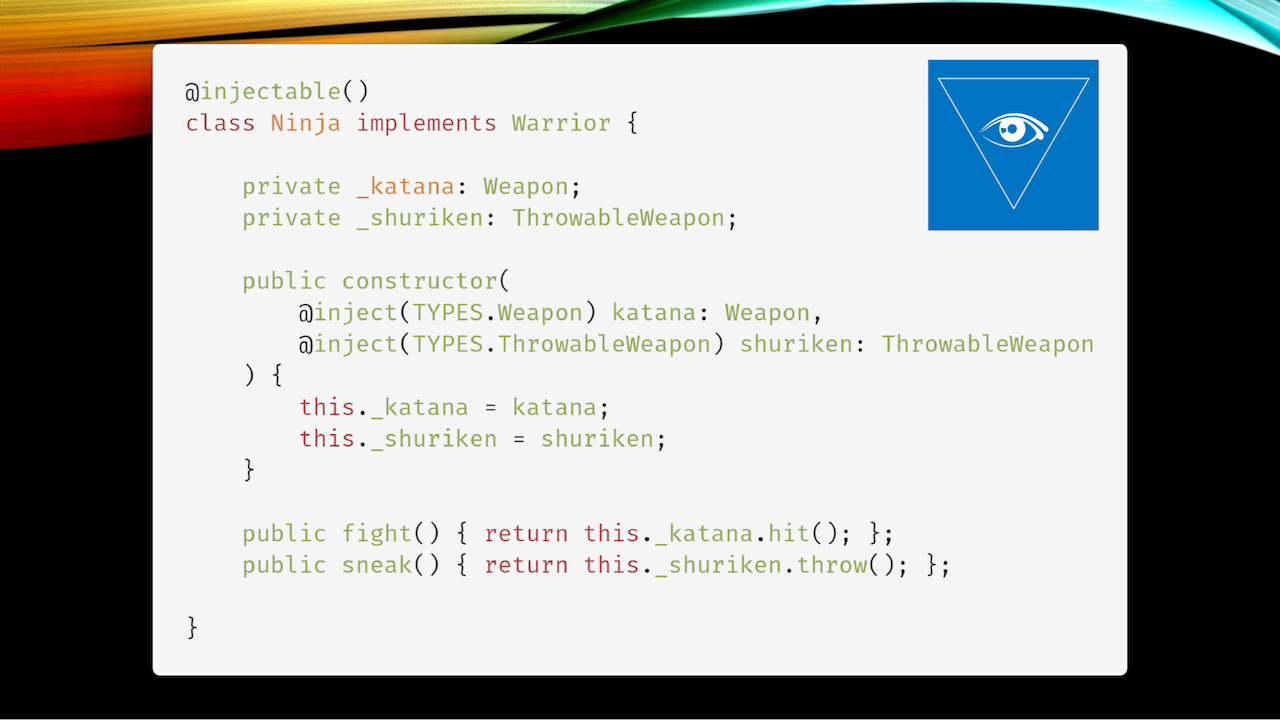

As for the backend, things that implement this are starting to appear there too. For example, NestJS. I use InversifyJS. It is built with a love for TypeScript, which, however, does not prevent it from working with regular JavaScript. And here we run into the fact that our ecosystem is still not mature enough.

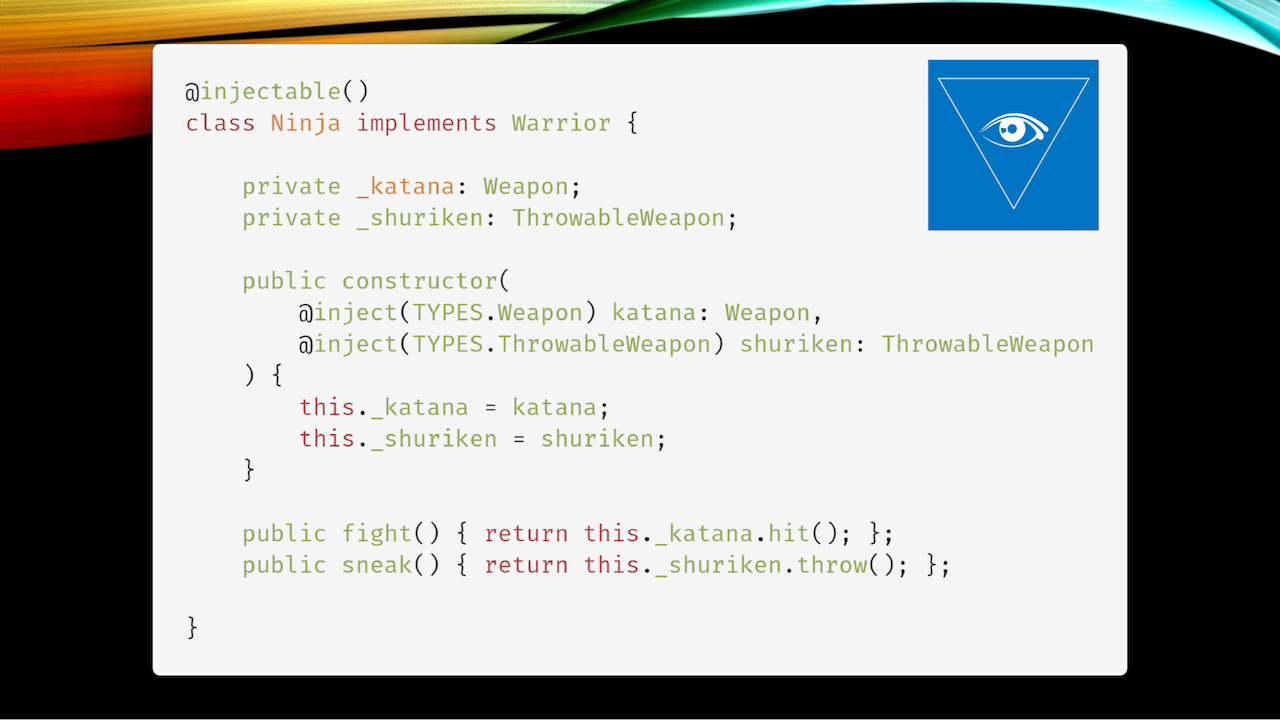

The TypeScript code is taken from the Inversify documentation. Look at the constructor, where we say: @inject(TYPES.Weapon) katana: Weapon. At what stage do you think it will be checked that the object registered under TYPES.Weapon satisfies the Weapon type? The answer is never.

Roughly speaking, if you make a typo in what you inject into the object, the vaunted TypeScript (the vaunted Flow behaves the same way) cannot verify it in any way, because inject and the whole dependency injection mechanism work at runtime, and at runtime there is almost no information about types.

What does “almost no” mean? If you use Weapon not as an interface but as an abstract class, the check becomes possible, because abstract classes exist at runtime. Abstract classes in TypeScript compile into classes, and classes are first-class citizens in JavaScript, so they remain after compilation. Interfaces, however, are ephemeral entities that evaporate, and at runtime you no longer have any information that the katana must satisfy the Weapon interface. And this is a problem.
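The difference can be illustrated with a toy container (this is not the InversifyJS API; `Container`, `bind` and `get` here are invented for the example). Because a class survives to runtime, it can serve both as the lookup token and as something to check against with `instanceof`; an interface leaves nothing behind that such a check could use.

```javascript
// Toy container, not the InversifyJS API: the class itself is the token.
class Weapon {
  hit() {
    throw new Error("not implemented");
  }
}

class Katana extends Weapon {
  hit() {
    return "cut!";
  }
}

class Container {
  constructor() {
    this.bindings = new Map();
  }

  bind(Token, instance) {
    // This check is possible only because classes still exist at runtime.
    if (!(instance instanceof Token)) {
      throw new TypeError(`${instance.constructor.name} is not a ${Token.name}`);
    }
    this.bindings.set(Token, instance);
  }

  get(Token) {
    return this.bindings.get(Token);
  }
}
```

Binding a `Katana` under `Weapon` succeeds, while binding an unrelated object fails at registration time rather than at the first call to `hit()`.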

In C++, which has been around longer than I have been alive, there is RTTI: run-time type information, which lets you get information about types at runtime. In C# and Java, there is reflection, which also lets you get the necessary information. The TypeScript developers, who have a separate section on “what we will NOT implement,” said they do not intend to provide any tools for RTTI.

It gets ridiculous. Fifteen seconds of insider information. The Vue developers are rewriting Vue 3 in TypeScript, and they found that Vue's approach is so incompatible with TypeScript's philosophy that they asked Microsoft: “Can we write our own TypeScript plugin to make this work?” Those who write in Vue will understand: when you pass props to a component, they magically appear on this. Obviously, the type of the passed props and the type of what appears on this must be the same. But in TypeScript you can neither verify nor describe that. Surprise.

The Microsoft developers refused, otherwise we know you: now let's allow Vue to do it, then Angular will pull, who have been sick for a long time. He also had to add his own compiler to bypass the TypeScript restrictions. Then React will be tightened, and we will not have a typing system, but it is not clear what.

But still, the problem still remains. Oddly enough, in this regard, I am impressed by Dart, who has mirrors that work everywhere except Flutter. Even if mirrors are not available, there are tools with which you can pull out all the necessary information through the code generation at the compilation stage, save it and still get access in runtime.

I am now building the same kind of solution for Inversify: a Babel plugin that, during compilation, while the types are still there, extracts the type information and generates runtime checks to make sure everything fits together.
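To make the idea concrete, here is a rough sketch of the kind of runtime check such a build step could emit. Everything in it is an assumption made for illustration: WeaponShape, checkShape and the toy Container are hypothetical names, and this is neither the plugin described in the talk nor the Inversify API.

```javascript
// Hypothetical output of a build step for `interface Weapon { hit(): string }`:
// the interface is gone, but its member names and kinds were saved as data.
const WeaponShape = { hit: "function" };

// Check a value against a saved shape; returns a list of problems.
function checkShape(value, shape) {
  const problems = [];
  for (const [member, kind] of Object.entries(shape)) {
    const actual = value == null ? "undefined" : typeof value[member];
    if (actual !== kind) {
      problems.push(`${member}: expected ${kind}, got ${actual}`);
    }
  }
  return problems;
}

// A toy container that runs the check when a dependency is bound.
class Container {
  constructor() {
    this.bindings = new Map();
  }
  bind(key, value, shape) {
    const problems = checkShape(value, shape);
    if (problems.length > 0) {
      throw new TypeError(`cannot bind ${String(key)}: ${problems.join("; ")}`);
    }
    this.bindings.set(key, value);
  }
  get(key) {
    return this.bindings.get(key);
  }
}
```

The check runs once, at binding time, so a mismatched dependency fails at startup rather than at the first call somewhere deep in production.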

A last remark about the immaturity of the ecosystem: in general, things with types are very bad and strange for us. Here we have typed code. It contains information about which arguments a function will be called with and what type its return value has. And we throw all of this away so that V8, once the code is running, starts working out the same thing.

V8 begins to collect information about types because it is fundamentally important for the optimizing compiler. Moreover, there are tools that, if you build V8 with certain patches, let you pull out the type information it has collected. It is very funny to compare the types V8 inferred with the types you had in TypeScript.

But from the point of view of any normal programming language, this is crazy. We throw out the information we have so that we can then heroically collect it again.

When I say “correct” or “wrong” code in this context, “correct” means code that suits the team. Each team has certain conventions and agreements, and this is especially important in frameworks like Vue, where one task can be solved in many ways. Some use slots, some pass a function as a prop, and so on.

This is where code generation shines in all its colors. Who uses typed-css-modules? Here is what it is about: you write CSS, import it through CSS Modules, and then refer to specific fields of the resulting object.

typed-css-modules is a ready-made solution that uses code generation to let you verify that the class you are accessing really exists in the CSS file.

Over the past year I took part as a consultant in 12 projects that used CSS Modules and had existed for at least six months. In 11 of the 12 I found a class named undefined on the generated page: the CSS had evolved and changed, but the code still cheerfully read a class that no longer exists from the styles object and got undefined.
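typed-css-modules catches this at compile time by generating type definitions. Where that is not an option, a runtime guard gives a similar effect. The sketch below is that different, swapped-in technique: strictStyles is a hypothetical helper, and the style object stands in for what a CSS Modules import would return.

```javascript
// Wrap a CSS Modules style object so that a missing class fails loudly
// instead of silently rendering class="undefined".
function strictStyles(styles, fileName) {
  return new Proxy(styles, {
    get(target, prop) {
      if (typeof prop === "string" && !(prop in target)) {
        throw new Error(`class "${prop}" does not exist in ${fileName}`);
      }
      return target[prop];
    },
  });
}

// Stand-in for `import styles from "./button.css"` (hypothetical file).
const styles = strictStyles(
  { button: "button_x7f3", primary: "primary_a91c" },
  "button.css"
);
```

Reading `styles.button` works as before, while a stale or misspelled name such as `styles.buton` throws at the point of use.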

Yeoman is well known to everyone, but the problem with it and with all similar tools is that trying to build an overly general code generation tool makes it almost useless in particular cases. To apply it to a specific project, you have to think, suffer and experiment a lot. That is why I am much more impressed by the Angular CLI initiative called Blueprints (damn, I bashed Angular for two years, and in two days at GDG SPB I will be telling everyone how cool it is and how I have come to love it).

In the context of Angular, this initiative lets you describe what your services, components and so on should look like. All of this lets you set up the team's work effectively, with minimal effort from the team at the start of the project.

Another example where “correct” code is easier to write than “incorrect” code is good, large frameworks. And the reference example of “correct” code being easier to write is functional programming. Once you have grasped the Tao of functional programming and understood what a monad is (losing, in the process, the ability to explain it to others), you realize that you either write correct code in a functional style or you do not write it at all.

The other side is the entry threshold: it is so high that hanging yourself is easier. I have juniors on my team, and they matter to me because they keep me in touch with reality and show me how people in the real world actually write code. This is why, for example, React Hooks did not adopt pure functional programming as a concept.

The React team searched long and painfully for a compromise that, on the one hand, would be close to functional programming and could be verified with something like ESLint, and, on the other hand, would be understandable to most ordinary mortals.

When a framework imposes on you how to solve tasks, that is very cool. On the backend, examples of such frameworks are NestJS and Sails.js.

It would seem that both frameworks solve the same problem: they impose a specific architecture and prescribe how you should do this and that. I will not criticize Sails right now for the thoroughly poor ORM that comes with it; Waterline is the first thing everyone throws away. The real problem is that Sails.js provides too much magic. You can declare blueprint controllers, from which a pile of magical properties and behaviors is then generated. That is not just slow, it is also wildly difficult to debug.

Nest, on the other hand, strikes an excellent compromise: the framework simply gives you components, none of which you are obliged to use. You have Dependency Injection, which underlies everything, and there are rules for describing middleware, controllers and so on.

But when needed, each element is easy to replace. Every time you want to understand whether a system is safe enough to live with for a long time, ask yourself one simple question: “Will I be able to replace a component of the system if necessary?”

If we are talking about Angular, then yes: we have a huge number of components that fit each other well, but apart from the dependency injection mechanism, which is the foundation, everything is completely interchangeable.

If we take Vue (sorry, there is a bit of hate towards it today), we find that scorched earth begins right outside the mainstream Vue ecosystem: no one builds anything outside of it. So if you are choosing between Nest and Sails, the conclusion is fairly obvious: take Nest. It is cool, and I really like where it is going, even though it is TypeScript.

A couple of general things that are relevant not only to JavaScript. If you came to our world from another, normal programming language, all of this is obvious to you, but let's go over it anyway, because we should not forget it, yet at a fundamental level we constantly do.

I have deliberately said almost nothing about testing, because everyone understands that you need to write tests, and everyone understands that there is no time for it: bang, bang, and into production. So the first thing, besides testing: when you have any serious production where mistakes cost money, you need to collect metrics.

We use Grafana and I really like it, but which tool you choose is absolutely unimportant. I witnessed a case where a platform for building chat bots failed to account for one interesting scenario that let a bot get stuck in a loop. The bot tried to recognize a phrase, recognized it, hit an error and started trying again. Since each of its utterances went to Microsoft's speech recognition API, by the time the error was discovered the bill was about 20 thousand dollars.
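A metric is most useful when something reacts to it. As a hedged illustration of the looping-bot story, the sketch below counts paid API calls per conversation and stops once a limit is exceeded. CallBudget, the limit of 3 and the conversation id are all hypothetical; a real setup would more likely export the counter to a monitoring system and alert from there.

```javascript
// Counts calls to a paid API per conversation and trips once a limit is hit.
class CallBudget {
  constructor(limit, onExceeded) {
    this.limit = limit;
    this.onExceeded = onExceeded;
    this.counts = new Map();
  }
  // Returns true if the call may proceed, false once the budget is spent.
  tryConsume(conversationId) {
    const used = (this.counts.get(conversationId) || 0) + 1;
    this.counts.set(conversationId, used);
    if (used > this.limit) {
      this.onExceeded(conversationId, used);
      return false;
    }
    return true;
  }
}

const alerts = [];
const budget = new CallBudget(3, (id, used) => alerts.push({ id, used }));

// A bot stuck in a retry loop: the guard stops it after three paid calls.
let paidCalls = 0;
for (let attempt = 0; attempt < 100; attempt++) {
  if (!budget.tryConsume("chat-42")) break;
  paidCalls++;
}
```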

The next important point is CI/CD. We like GitLab, but this is a matter of taste; everyone has their own impressions. Right now I feel that GitLab is the most JavaScript-friendly environment in terms of how it works and what you can do with it.

People in the front row have started looking at their watches. You know, with guests who have overstayed, the look is much more eloquent if you move it from the clock to the gun hanging next to it... Don't worry, there is only a little left.

Blue/green deployment: if you have any important service or microservice, think about keeping two instances of it in the cloud. If these words are unfamiliar to you, google them; afterwards you will be able to sell yourself for more!

Since it was December 31, some of the orders went nowhere. This was written off as seasonality: everyone had supposedly gone off to drink tea. By the time the customer woke up, around January 12-13, the total cost of the video had reached about $500,000. I had never had such an expensive production before.

Example number two: another Kubernetes cluster. A fashionable Infrastructure as Code ecosystem: everything that can be described with code and configs is, and Kubernetes is driven programmatically from JavaScript wrappers and so on. Cool, everyone likes it. The deployment procedure changes slightly, and then comes the moment when a new cluster has to be deployed. The following situation occurs:

const config = {
  // …
  mysql: process.env.MYSQL_URI || 'mysql://localhost:3306/foo',
  // ...
};

Many of you probably have a line like this in your configs as well. That is, we take the database address from the MYSQL_URI environment variable or fall back to a local database.

Because of a typo in the deployment system, the system was once again configured as production, but for MySQL it used the local database intended for tests. This time less money was lost: only $300,000. Fortunately, it was not my company but a place where I worked as a consultant.
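One way to defuse a line like this is to refuse the silent fallback in production. The following is a minimal sketch under stated assumptions: requireEnv and loadConfig are hypothetical helpers, and it assumes the common (but not universal) convention that NODE_ENV is set to "production" on production hosts.

```javascript
// Read a required setting; only fall back to a default outside production.
function requireEnv(env, name, devDefault) {
  const value = env[name];
  if (value !== undefined && value !== "") return value;
  if (env.NODE_ENV === "production") {
    // A misspelled variable name now stops the deploy instead of
    // quietly pointing production at a local test database.
    throw new Error(`${name} must be set in production`);
  }
  return devDefault;
}

function loadConfig(env = process.env) {
  return {
    mysql: requireEnv(env, "MYSQL_URI", "mysql://localhost:3306/foo"),
  };
}
```

With this in place a typo in the variable name makes the service crash on startup, which is much cheaper than discovering it from lost orders.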

You might think that none of this concerns you as a front-end developer, since I have been talking about DevOps (by the way, I am delighted with the name of the DevOops conference: it describes the essence perfectly). But let me tell you about one more situation.

There is a system for monitoring veterinary epidemics in Ethiopia, developed under the auspices of the UN. One of its interface elements is a form where an operator manually enters coordinates: when and where an outbreak of a particular disease occurred.

An outbreak of yet another foot-and-mouth disease happens (I am not strong in cow diseases), and in a hurry the operator accidentally presses the “add” button twice, leaving the fields empty. Since this is JavaScript, and JavaScript is on such good terms with types, the empty latitude and longitude fields happily turn into zero coordinates.

No, we did not send the doctor far out into the ocean. But it turned out that for displaying the map, building reports and analytics, and planning where to place people, all of this is precomputed on the backend using clustering: we try to group the outbreak points.

As a result, the system was paralyzed for a day: the backend was trying to compute clusters that included this point, all the data became irrelevant, and the doctors received completely nonsensical orders along the lines of “travel 400 kilometers”. 400 kilometers across Ethiopia is a dubious pleasure.

The total loss was estimated at about a million dollars. The report on the incident said: “We were the victim of an unfortunate set of circumstances.” But we know that it was JavaScript!
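The conversion behind this story is easy to reproduce: Number("") is 0. Below is a small sketch of a stricter parser; parseCoordinate, parseLatitude and parseLongitude are hypothetical names, not code from the system described.

```javascript
// Number("") and Number("  ") are both 0, so an empty field silently
// becomes a valid-looking coordinate.
const naiveLatitude = Number("");

// Parse a coordinate from a form field, rejecting blanks and
// out-of-range values instead of coercing them.
function parseCoordinate(text, min, max) {
  if (typeof text !== "string" || text.trim() === "") {
    throw new RangeError("coordinate is required");
  }
  const value = Number(text);
  if (!Number.isFinite(value) || value < min || value > max) {
    throw new RangeError(`coordinate must be a number between ${min} and ${max}`);
  }
  return value;
}

const parseLatitude = (text) => parseCoordinate(text, -90, 90);
const parseLongitude = (text) => parseCoordinate(text, -180, 180);
```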

And the last example. Unfortunately, although this story happened quite a long time ago, I still cannot name the company. It is a company that has its own airline, its own hotels and so on. It works very actively with businesses, that is, it provides them with a separate interface for booking tickets and the like.

One day, by a ridiculous accident, an order arrives to book 999,999 tickets from New York to Los Angeles. The company's system happily bought up all the flights of its own airline, found that there were not enough seats, and sent a request to an international reservation system to cover the shortage. The international system, seeing a request for approximately 950,000 tickets, happily disconnected the airline.

Since a disconnection is an extraordinary event, the problem was solved within seven minutes. However, the fines that had to be paid for those seven minutes amounted to a mere $100,000.

Fortunately, all this did not happen within a single year. But these cases made me think about reliability and ask the two eternal Russian questions: who is to blame, and what is to be done?

Why this happens: the youth of the ecosystem

If you analyze a lot of stories, you will find that there are more stories about such problems involving JavaScript than involving any other programming language. This is not my subjective impression but the result of mining news on Hacker News. On the one hand, it is a hipster and subjective source; on the other hand, it is quite hard to find any sane source of such data in the field of programming.

Moreover, a year ago I took part in a competition where I had to solve algorithmic problems every day. Since I was bored, I solved them in JavaScript using functional programming. I wrote an absolutely pure function, and in the then-current Chrome it worked correctly 1197 times and produced a different result 3 times. It was just a small bug in the TurboFan optimizer that had just landed in mainstream Chrome.

Of course, it was fixed, but think about what it means: for example, if your unit tests passed once, that does not mean the code will work in the running system. That is, we executed the code about 1197 times, then the optimizer came along and said: “Wow! A hot function! Let's optimize it.” And in the process of optimization it produced a wrong result.
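A cheap way to look for this class of problem is to call a pure function many times and compare every result with the first one. The sketch below does that; findUnstableRuns and sumOfSquares are hypothetical names, and the number of calls an engine needs before it optimizes a function is an implementation detail, so a clean run proves nothing by itself.

```javascript
// Run a pure function many times with the same input and report every run
// whose result differs from the first one.
function findUnstableRuns(fn, args, runs) {
  const expected = JSON.stringify(fn(...args));
  const mismatches = [];
  for (let run = 1; run < runs; run++) {
    const actual = JSON.stringify(fn(...args));
    if (actual !== expected) mismatches.push({ run, actual });
  }
  return mismatches;
}

// Example pure function under test: sum of squares.
const sumOfSquares = (xs) => xs.reduce((acc, x) => acc + x * x, 0);
```

Calling `findUnstableRuns(sumOfSquares, [[1, 2, 3]], 2000)` returns an empty list on a healthy engine; any entry in the list means the same input produced a different output on a later run.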

In other words, one of the first reasons this happens is the youth of the ecosystem. JavaScript is a fairly young industry when it comes to serious programming, to cases where millions are at stake and the cost of an error is measured in five or six digits.

For a long time, JavaScript was perceived as a toy. Because of this (and not because we ourselves do not take it seriously), we still suffer from a lack of tools.

So, in order to fight this cause, which underlies everything I will talk about today, I tried to formulate reliability rules that I could impose on my own company or pass on to others as a consultant. As the saying goes, “rule number one is not to talk about reliability.” But more seriously...

Reliability Rule # 1: everything that can be automated should be automated

Including, by the way, spell checking: the slide with this rule had a typo in the word “automated”.

It all starts with the simplest things. It would seem that everyone has been using Prettier for a long time. But only in 2018 did this tool, which we all use and which is good and healthy, learn to work with git add -p, when we add files to the git repository partially and want the code nicely formatted, say, before sending it to the main repository. The fairly well-known lint-staged utility, which lets you check only the files that have been changed, had exactly the same problem.

Let's keep playing Captain Obvious: ESLint. I will not ask who here uses it, because there is no point in the whole hall raising their hands (well, I hope so and do not want to be disappointed). Better raise your hands if you have your own custom-written ESLint rules.

Such rules are a very powerful way to automate away the mess that arises in projects where people write at a junior level and the like.

We all want a certain level of isolation, but sooner or later a situation arises: “Look, Vasya implemented this helper somewhere in the directory of his component, right next to it. I won't move it into common now, I'll do it later.” The magic word “later.” As a result, the project ends up not with vertical dependencies (where upper elements include lower ones and lower ones never reach up), but with component A depending on component B, which sits in a completely different branch. Component A thereby becomes much less portable.

By the way, my respect to Alfa-Bank: they have a very good, beautifully written React component library, and it is a pleasure to use precisely in terms of code quality.

A trivial ESLint rule that watches where you import entities from lets you significantly raise code quality and keep the mental model intact during code review.
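As an illustration, here is roughly what such a rule can look like. It is a hypothetical rule written for this text (the name noDeepParentImports, the maxDepth option and the message are all assumptions), using the standard shape of an ESLint rule object: meta plus a create function that returns AST node handlers.

```javascript
// Hypothetical custom rule: forbid relative imports that climb more than
// `maxDepth` directories up, which usually means reaching into another branch.
// In a real plugin this object would be exported from the rule's module.
const noDeepParentImports = {
  meta: {
    type: "problem",
    schema: [
      {
        type: "object",
        properties: { maxDepth: { type: "integer" } },
        additionalProperties: false,
      },
    ],
    messages: {
      tooDeep:
        "Import '{{source}}' climbs {{depth}} levels up; move the shared code to a common place.",
    },
  },
  create(context) {
    const options = context.options[0] || {};
    const maxDepth = options.maxDepth === undefined ? 1 : options.maxDepth;
    return {
      // Called by ESLint for every `import ... from "..."` statement.
      ImportDeclaration(node) {
        const source = node.source.value;
        const depth = source.split("/").filter((part) => part === "..").length;
        if (depth > maxDepth) {
          context.report({ node, messageId: "tooDeep", data: { source, depth } });
        }
      },
    };
  },
};
```

With maxDepth set to 1, an import from "./helpers" passes, while "../../../store/selectors" is reported during linting, before a reviewer ever sees it.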

By front-end standards I am already old. A large, serious company, PricewaterhouseCoopers, recently completed a study in the Kharkiv region, and the average age of a front-end developer turned out to be about 24-25. It is hard for me to keep all of this in my head; when reviewing a pull request I want to focus on business logic. So I am happy to write ESLint rules in order not to think about such things.

It would seem that the standard rules could be tuned for this, but reality is usually far more frustrating, because it turns out that a React component needs some selectors from Redux (which, unfortunately, is still alive). And these selectors live somewhere in a completely different hierarchy, hence “../../../..”.

Or, even worse, a webpack alias, which breaks about 20% of other tools, because not all of them understand how to work with it. My beloved Flow, for example.

So the next time you want to yell at a junior (a favorite pastime of programmers), think about whether you can automate the check somehow to avoid such mistakes in the future. In an ideal world you would, of course, write an instruction, which no one reads anyway. Take the HolyJS speakers: talented specialists with a lot of experience, yet when it was proposed at an internal meeting to write instructions for speakers, the answer was “they won't read them anyway”. And these are the people we are supposed to look up to!

The last of the trivial things, and then we move on to the hardcore part: tools for running pre-commit hooks. We use husky (and I could not help inserting this beautiful photo of a husky), but you can use something else.

If you think all of this is very simple then, as they say, hold my beer: we will soon see that everything is more complicated than you think. A few more points:

Typing

If you are not writing in TypeScript, you may want to think about it. I do not like TypeScript and traditionally root for Flow (we can talk about that a little later), but here on stage I will, with disgust, promote the mainstream solution.

Why? The TC39 committee recently had a very big discussion about where the language is going. They came to a very funny conclusion: TC39 always has its “swan, crayfish and pike” pulling the language in different directions, but there is one thing everyone always wants, and that is performance.

Unofficially, in an internal discussion, TC39 came out with this tirade: “we will always design JavaScript so that it stays performant, and those who do not like it will take some language that compiles to JavaScript”.

TypeScript is a pretty good alternative with a mature ecosystem. I cannot fail to mention my love for GraphQL as well. It is really good; unfortunately, no one will let you introduce it into the huge number of existing projects we already have to work on.

There have already been GraphQL talks at this conference, so just one remark on reliability: if you use, say, Express GraphQL, then in addition to a resolver you can attach validators that tighten the requirements on a value compared to the standard GraphQL types.

For example, I would like the amount of a transfer between two bank customers to be positive. Just yesterday a pop-up in my online banking happily announced that I had -2 unread messages from the bank. And this is supposedly the leading bank in my country.

As for these validators that impose additional strictness: using them is a good and sensible idea, just do not use them the way, say, GraphQL suggests, because then you tie yourself very tightly to GraphQL as a platform. The validation you write is needed in two places at once: on the frontend, before we send the data and after we receive it, and on the backend.
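A minimal sketch of platform-independent validation, assuming nothing about GraphQL at all: one plain function that both sides import. The names validateTransferAmount, canSubmit and transferResolver are hypothetical.

```javascript
// One validation function, importable by both the browser form and the
// server-side resolver, so the two sides cannot drift apart.
function validateTransferAmount(amount) {
  if (typeof amount !== "number" || !Number.isFinite(amount)) {
    return { ok: false, error: "amount must be a finite number" };
  }
  if (amount <= 0) {
    return { ok: false, error: "amount must be positive" };
  }
  return { ok: true, value: amount };
}

// Frontend: validate before sending the request.
function canSubmit(formValue) {
  return validateTransferAmount(formValue).ok;
}

// Backend: validate again before touching any money.
function transferResolver(args) {
  const result = validateTransferAmount(args.amount);
  if (!result.ok) throw new Error(result.error);
  return { transferred: result.value };
}
```

Because the validator is an ordinary function, it can be wired into a GraphQL resolver, a REST handler or a form library without changing it.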

I regularly have to explain to customers why we chose JavaScript for the backend rather than X. Moreover, X is usually some PHP, not a pretty Go or the like. I have to explain that we can reuse code as efficiently as possible, including between the client and the backend, because they are written in the same programming language. Unfortunately, as practice shows, this thesis often remains just a phrase from a conference and is never put into practice.

Contracts

I have already spoken about the youth of the ecosystem. Contract programming has existed for over 25 years as a basic approach. If you write in TypeScript, take io-ts; if you write in Flow, like me, take typed-contract. You get a very important thing: the ability to describe runtime contracts from which the static types are derived.

There is nothing worse for a programmer than having more than one source of truth. I know people who lost five-figure sums in dollars simply because the type described in the statically typed language (they used TypeScript; well, of course, that is just a coincidence) and the runtime type (described with tcomb) were slightly different.

So the error was not caught at compile time. There were no unit tests for it either, because why test it: the static type checker already checks this. There is no point in testing things that were checked on the layer below; everyone remembers the testing hierarchy.

Because the two contracts drifted out of sync over time, one day a transfer was made that went to the wrong address. Since it was a cryptocurrency, rolling back a transaction is slightly more than impossible in principle: nobody is going to fork Ethereum for you again. So contracts and contract programming are the first thing you should start doing, right from tomorrow.
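To show the shape of the idea without depending on any library, here is a tiny hand-rolled set of contract combinators. It is explicitly not the io-ts or tcomb API; string, positiveNumber, object and Transfer are hypothetical names. Plain JavaScript cannot show the other half of the approach, where a library such as io-ts derives the static type from the same runtime definition.

```javascript
// Tiny hand-rolled contract combinators (not the io-ts or tcomb API).
// Each contract takes a value and returns it, or throws a TypeError.
const string = (value, path = "value") => {
  if (typeof value !== "string") throw new TypeError(`${path} must be a string`);
  return value;
};

const positiveNumber = (value, path = "value") => {
  if (typeof value !== "number" || !Number.isFinite(value) || value <= 0) {
    throw new TypeError(`${path} must be a positive number`);
  }
  return value;
};

// Build an object contract from a map of field contracts.
const object = (fields) => (value, path = "value") => {
  if (typeof value !== "object" || value === null) {
    throw new TypeError(`${path} must be an object`);
  }
  const result = {};
  for (const [key, contract] of Object.entries(fields)) {
    result[key] = contract(value[key], `${path}.${key}`);
  }
  return result;
};

// The single source of truth for what a transfer looks like.
const Transfer = object({ to: string, amount: positiveNumber });
```

Every boundary (HTTP handler, queue consumer, form submit) passes incoming data through Transfer, so there is exactly one definition to keep up to date.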

Why is this happening: isolation

The next problem is isolation. It is multifaceted. When I worked for the company with the hotels and flights, they had an application on Angular 1. It was a long time ago, so it is excusable. A team of 80 people worked on the application, and everything was covered with tests. Everything was fine until one day I finished my feature, pushed it, and found during testing that I had broken absolutely unbelievable parts of the system that I had not even touched.

It turned out that I have a problem with creativity: I had accidentally given my service the same name as another service that already existed in the system. Since it was Angular 1, where the service system is not strictly typed but stringly typed, Angular quite calmly began to hand out my service in completely different places and, ironically, a couple of method names coincided.

This, naturally, was no coincidence: if two services have the same name, they most likely do roughly the same thing. Both were related to calculating discounts. Only one module calculated discounts for corporate clients, while the second module, mine, calculated promotional discounts.

Obviously, when 80 people work on an application, it is large. The application used code splitting, which means the order in which modules were loaded depended directly on the user's journey through the site. To make it even more interesting, not a single end-to-end test that checked user behavior along a specific business scenario caught this error, because apparently no user ever needs to deal with both discount modules at once. True, it completely paralyzed the work of the site admins, but who hasn't had that happen.
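The failure mode is easy to reproduce with any registry keyed by strings. The sketch below is only loosely modelled on how string-named services behave; LooseRegistry and StrictRegistry are hypothetical classes, not Angular code.

```javascript
// A string-keyed service registry: the last registration under a name
// silently wins, and which one is "last" depends on load order.
class LooseRegistry {
  constructor() {
    this.services = new Map();
  }
  register(name, service) {
    this.services.set(name, service);
  }
  get(name) {
    return this.services.get(name);
  }
}

// Same idea, but a duplicate name is an error at registration time.
class StrictRegistry extends LooseRegistry {
  register(name, service) {
    if (this.services.has(name)) {
      throw new Error(`service "${name}" is already registered`);
    }
    super.register(name, service);
  }
}

const loose = new LooseRegistry();
loose.register("discounts", { calculate: () => "corporate discount" });
loose.register("discounts", { calculate: () => "promo discount" });
// Code that expected the corporate service now gets the promo one.
const resolved = loose.get("discounts").calculate();
```

Failing at registration turns a load-order-dependent production bug into an error that shows up the first time both modules are loaded together.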

The problem of isolation is very well illustrated by the logo of one of the projects that partially solve this problem. This is Lerna.

Lerna is an excellent tool for managing multiple npm packages in one repository. When you have a hammer in your hands, everything starts to look suspiciously like a nail. When you have a unix-like system with the correct philosophy, everything starts to look suspiciously like a file. Everyone knows that in unix systems everything is a file. There are systems where this is taken to the highest degree (I almost said “to the point of absurdity”), like Plan 9.

I know organizations that, having suffered with ensuring the reliability of a giant application, came up with one simple idea: everything is a package.

When you move a piece of functionality, be it a component or something else, into a separate package, you automatically get an isolation layer, simply because you cannot easily reach from one package into another. And also because the machinery for working with packages gathered in a monorepository via npm link or Yarn Workspaces is so awful and unpredictable inside that you cannot even resort to a hack and include a file through “node_modules/something”, simply because the resulting structure differs from person to person. It depends especially on the Yarn version: in one of the versions, the mechanism by which Yarn Workspaces organizes packages was quietly changed completely.

The second example of isolation, to show that the problem is multifaceted, is a package I now try to use everywhere: cls-hooked. You may know another package that implements the same thing, continuation-local-storage. It solves a very important problem that PHP developers, for example, never run into.

It is about isolating each individual request. In PHP, by and large, all requests are isolated: they cannot interact with each other unless you resort to shared-memory perversions, so everything is good, peaceful and beautiful. In essence, cls-hooked adds the same thing: it lets you create execution contexts, put local variables into them and then, most importantly, automatically destroy these contexts so that they do not keep eating your memory.

cls-hooked is built on async_hooks, which still has experimental status in Node.js, but I know more than a couple of companies that use it in fairly harsh production and are happy. There is a slight drop in performance, but the automatic garbage collection of contexts is invaluable.
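To keep the example self-contained, the sketch below uses AsyncLocalStorage from Node's built-in async_hooks module instead of cls-hooked; it is a different API built on the same idea of a per-request context. The function names and request ids are hypothetical.

```javascript
const { AsyncLocalStorage } = require("node:async_hooks");

const requestContext = new AsyncLocalStorage();

// Deep inside the call stack: no request object is passed in explicitly.
function currentRequestId() {
  const store = requestContext.getStore();
  return store ? store.requestId : undefined;
}

async function loadUser() {
  // The context survives the asynchronous boundary.
  await new Promise((resolve) => setTimeout(resolve, 5));
  return `user loaded for request ${currentRequestId()}`;
}

// Each request runs in its own context; once the asynchronous work started
// inside run() is finished, nothing refers to the store any more and it can
// be garbage collected.
function handleRequest(requestId) {
  return requestContext.run({ requestId }, () => loadUser());
}
```

Two overlapping calls, handleRequest("a") and handleRequest("b"), each see only their own id, and outside of any request currentRequestId() returns undefined.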

When we start talking about isolation issues, about pushing different things into different node-modules.

Reliability Rule # 2: Bad and “Wrong” Code Should Look Wrong

Ten years ago I would have disagreed with the first criterion I have since derived for myself. I would have squealed that JavaScript is a dynamic language and that you are depriving me of all the beauty and expressiveness of the language. The criterion is the grep test.

What is it? Many people write in vim. This means that unless you use something relatively new-fangled like a Language Server, the only way to find mentions of an identifier is, roughly speaking, grep. There are variations, of course, but the general idea is clear. The idea behind the grep test is that you must be able to find all calls of a function and its definition with plain grep. You will say: everyone does that, nothing strange.

Take Sequelize, the most popular ORM for relational databases, and take a completely simple piece of code: user.getProjects(). Where do you think the getProjects method comes from? It appears by magic.
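The following sketch shows how a method can exist at runtime without its name ever appearing at the place where it is defined. It illustrates the mechanism only and is not Sequelize's actual implementation; hasMany here is a hypothetical stand-in with a simplified signature.

```javascript
// A sketch of how an ORM-like library can attach accessors at runtime.
const capitalize = (word) => word[0].toUpperCase() + word.slice(1);

function hasMany(Model, associationName, loadRelated) {
  // The method name is assembled from pieces, so grepping for
  // "getProjects" will never lead you to this definition.
  Model.prototype["get" + capitalize(associationName)] = function () {
    return loadRelated(this);
  };
}

class User {
  constructor(id) {
    this.id = id;
  }
}

// After this call every User instance has a method that no source file
// defines by name.
hasMany(User, "projects", (user) => [`project of user ${user.id}`]);
```

A grep-friendly alternative is to spell the method out in the class body, at the cost of a little repetition.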

I talk a lot about code review, because we can automate anything, but we can never foresee everything. The last stronghold in ensuring reliability will always be people. Our task is to simplify the work of this person as much as possible so that he has to think about the minimum number of things.

I have repeated this phrase several times already, but I still like it: “merge request for 20 lines - 30 comments, merge request for 5000 lines - looks good to me”. This is absolutely true, I myself am so.

We have a person in JavaScript Ninja, who seems to have taken the position of junior developer, and against our will shares with us the successes of his refactoring. Yesterday I posted a screenshot of “translated half of the project to react and redux”, there were 8,000 lines added, 10,000 deleted ”I look forward to how it will be reinstated, I look forward to“ the release of the new season ”. At the same time, according to him, he was told “everything is in order”, and he sincerely believes that this merge request cannot be divided into separate parts.

Those who believe that such merge request is justified, and they really can not be broken into new parts, I advise you to take an example from the Linux kernel. This is an excellent example of a repository where everyone works not with commits, but with patch sets. That is, patches are sent to you by mail, which you must roll onto your git. You know, mail is not the best medium for working with patches. Revising a large code in the mail is inconvenient, in particular, it is difficult for you to leave a comment on a specific line. In the mail very quickly the context starts to get lost.

As a result, the user is forced to break his patch sets into separate isolated pieces. And these individual pieces must be reviewed separately. As a result, even some complicated refactoring begins with the fact that in the beginning we roll in new functionality into the very basis, then roll in some infrastructure on top of this functionality and only at the end we start the subsystem after the subsystem to transfer it to a new basis.

I know what I'm talking about. My Microsoft Surface laptop works almost perfectly under Linux, but the battery status is not displayed there. I watch very carefully how people reverse-engineer the protocol and how they gradually prepare patches for inclusion in the main branch of the kernel; it is very difficult. The skill of breaking a large change into separate small patch sets is fundamentally important for reliability.

Why this happens: magic

Magic is not only “forbidden outside Hogwarts”; it also comes in harmful and useful kinds. For example, most of you can hardly picture all the magic inside the React reconciler. If we are talking about React 16, Fiber is hugely complex.

In fact, the Fiber developers reimplemented a call stack from scratch inside JavaScript (the idea was borrowed almost entirely from OCaml) in order to handle component renders asynchronously. Then they introduced a scheduler, which closely resembles the code of the first primitive operating system schedulers. Then they realized that a scheduler is very hard to get right, so they created a proposal to implement it directly in JavaScript. The scheduler is a stage 0 proposal.

Nevertheless, the magic of React lies in how it does things as quickly as possible. What it does is clear: we render, and it uses a virtual DOM. How it does this quickly is the magic. This is useful magic because it is controllable.

I will give an example of another framework - Vue.js. Who here likes Vue? I'm glad there are fewer of you each time. I admired Vue for a long time; now I am in a period of great disappointment, and I will explain why.

An abstract project on Vue is much faster than an abstract project on React. A project written by a junior on Vue will quite definitely run much faster than a project written by a junior on React.

The point is the reactivity magic. Vue is able to track which components depend on which pieces of state, no matter where that state lives, and on each change it rerenders only the components that need it. It is a very cool idea that lets you not think about performance. This is what won me over to Vue back then.
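To make the idea of dependency tracking concrete, here is a minimal sketch built on `Proxy`. It is not Vue's actual implementation; `reactive`, `watchEffect` and the `renders` counters are hypothetical names used only for illustration, and each "component" is reduced to a function that reads some state.

```typescript
// Hypothetical, minimal dependency tracking; not Vue's real code.
type Effect = () => void;

// The effect (think "component render") that is currently running, if any.
let activeEffect: Effect | null = null;

function reactive<T extends object>(target: T): T {
  // For every property: the set of effects that have read it.
  const subscribers = new Map<string | symbol, Set<Effect>>();
  return new Proxy(target, {
    get(obj, key, receiver) {
      const effect = activeEffect;
      if (effect !== null) {
        let deps = subscribers.get(key);
        if (deps === undefined) {
          deps = new Set<Effect>();
          subscribers.set(key, deps);
        }
        deps.add(effect); // remember who depends on this property
      }
      return Reflect.get(obj, key, receiver);
    },
    set(obj, key, value, receiver) {
      const ok = Reflect.set(obj, key, value, receiver);
      // Re-run only the effects that actually read this property.
      subscribers.get(key)?.forEach((effect) => effect());
      return ok;
    },
  });
}

function watchEffect(effect: Effect): void {
  activeEffect = effect;
  try {
    effect(); // the first run records the dependencies
  } finally {
    activeEffect = null;
  }
}

// Two "components", each reading a different part of the state.
const state = reactive({ title: "Orders", count: 0 });
const renders = { header: 0, counter: 0 };

watchEffect(() => { void state.title; renders.header++; });
watchEffect(() => { void state.count; renders.counter++; });

state.count = 1; // only the counter "component" runs again
```

After the assignment, `renders.counter` is 2 while `renders.header` is still 1: the header never read `count`, so it was not touched.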

The second cool idea. Surely many of you, if not all, are familiar with the philosophy of Web Components and with transclusion, where we have slots and content from one component is inserted into a hole in another. The simplest example of a slot: say we have a pop-up that contains an overlay, a close cross and so on, and we need to pass some content into it. Just handing over a piece of markup is sensible.

In Vue there are slots on steroids - scoped slots. They are needed when we have to pass some extra data into the slot. The simplest example of a scoped slot is a table in which you want to customize each row, that is, you need a custom render for each row. In React, smart hipsters now use render props for this: beautiful, declarative. In Vue, you simply insert a piece of template and do not think about the fact that it, too, compiles into a render function.

As soon as your component has scoped slots, your acclaimed Vue reactivity system turns into a pumpkin. Right in the Vue source code it says: check whether the child component being updated has scoped slots, and if so call forceUpdate. As soon as the parent is updated, the child is redrawn as well. In certain situations this becomes a disaster for performance.

In React, with all its magic, we can control the magic. We have, say, shouldComponentUpdate(), which prevents a component from being redrawn. With Vue all we can do is stare at it: we have no mechanism to, for example, prevent a component from being redrawn. We have to invent completely phenomenal crutches for this. This is fixed in Vue 3. That is a separate story, and someday it will definitely come out.
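What "controllable magic" means can be shown without any framework. The sketch below is an assumption-laden toy, not React's API: the `Row` class, its `shouldUpdate` hook and the `receive` method are hypothetical, and they only mimic the idea behind shouldComponentUpdate(), where the component author decides whether new props justify a rerender.

```typescript
// Framework-free sketch; all names are hypothetical, not React's API.
interface RowProps {
  id: number;
  label: string;
}

// True when both objects have the same own keys with identical values.
function shallowEqual<T extends object>(a: T, b: T): boolean {
  const keysA = Object.keys(a) as Array<keyof T>;
  const keysB = Object.keys(b);
  return (
    keysA.length === keysB.length &&
    keysA.every(
      (key) =>
        Object.prototype.hasOwnProperty.call(b, key) && Object.is(a[key], b[key]),
    )
  );
}

class Row {
  renderCount = 0;

  constructor(private props: RowProps) {
    this.render(); // initial render
  }

  // The hook the author controls: skip work when nothing relevant changed.
  shouldUpdate(next: RowProps): boolean {
    return !shallowEqual(this.props, next);
  }

  // Called by an imaginary parent every time the parent itself updates.
  receive(next: RowProps): void {
    const changed = this.shouldUpdate(next);
    this.props = next;
    if (changed) this.render();
  }

  private render(): string {
    this.renderCount++;
    return `<tr><td>${this.props.id}</td><td>${this.props.label}</td></tr>`;
  }
}
```

Passing an equal props object to `receive` leaves `renderCount` unchanged; only a real change triggers another render. The complaint about scoped slots above is precisely that no such hook is available there.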

The last item is Jest, an excellent testing framework from Facebook. I absolutely honestly believe it is currently the leading framework in the world of JavaScript testing: beautiful, expressive, effective. It knows how to mock imports. It works great as long as it works.

The problem is that imports, as defined by ECMAScript 2015, are static. You cannot substitute the implementation of an import, and you cannot put an import inside an if, the way you can with require. You can do that with require, but not with import. Imports, in principle, cannot be replaced: they must be resolved before the module itself is evaluated. Jest uses Babel to compile imports into require calls and then substitutes those.

One day you decide to be a hipster and use ES modules (.mjs) in Node, which are still at the proposal stage but will very soon become the standard, because it is unnatural to have two module systems in the JavaScript world: one with require, the other with imports. You want to write imports everywhere. And here you find that Jest is powerless with .mjs modules, because the Node developers decided to follow the specification strictly, and replacing imports is impossible in principle. That is, the result of evaluating an import is a frozen object that cannot be thawed.

In the end, after pain and suffering, you come to what other programming languages came to many, many years ago. You understand that you need an Inversion of Control pattern and preferably some Dependency Injection container.
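As an illustration of why Inversion of Control removes the need to mock imports at all, here is a minimal sketch of constructor injection. Every name in it (`PaymentGateway`, `OrderService`, `FakeGateway`) is hypothetical; the point is only that the dependency arrives from outside, so a test can hand in a fake.

```typescript
// Hypothetical names throughout; a sketch of constructor injection.
interface PaymentGateway {
  charge(amountCents: number): string; // returns a receipt id
}

class OrderService {
  // The dependency is passed in instead of being imported by this module.
  constructor(private readonly gateway: PaymentGateway) {}

  checkout(amountCents: number): string {
    if (amountCents <= 0) {
      throw new RangeError("Amount must be positive");
    }
    const receiptId = this.gateway.charge(amountCents);
    return `paid, receipt ${receiptId}`;
  }
}

// In a test no import has to be replaced: a fake is simply passed in.
class FakeGateway implements PaymentGateway {
  readonly charged: number[] = [];

  charge(amountCents: number): string {
    this.charged.push(amountCents);
    return `fake-${this.charged.length}`;
  }
}

const gateway = new FakeGateway();
const service = new OrderService(gateway);
const result = service.checkout(1500);
```

Here `result` is `"paid, receipt fake-1"`, and `gateway.charged` records the single charge of 1500. A DI container automates the wiring, but the testability comes from the pattern itself.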

IoC / DI

And, as you understand, this philosophy has long been on the front end. Angular is built entirely around IoC / DI philosophy. In React, Mr. Abramov himself ... By the way, do you know why React is cooler than Vue? Because the site dan.church is registered, but there is no evan.church site yet.

So, Abramov said that context in React is in essence an implementation of the Dependency Injection pattern: it lets you put some dependencies into the context and pull them out at the levels below. In Vue the corresponding options are even called inject and provide. That is, on the front end this pattern has long been present in an implicit form.

As for the backend, things that implement this are starting to appear there too. For example, NestJS. I use InversifyJS. It is built with a love for TypeScript, which, however, does not prevent it from working with regular JavaScript. And here we run into the fact that our ecosystem is still not mature enough.

The TypeScript code is taken from the Inversify documentation. Look at the constructor, where we say: @inject(TYPES.Weapon) katana: Weapon. At what stage do you think it will be checked that the object bound to TYPES.Weapon actually satisfies the Weapon interface? The answer is almost never.

Roughly speaking, if you make a mistake in what you inject into an object, the vaunted TypeScript (the vaunted Flow will behave the same way) will not be able to verify it in any way, because the inject procedure and the whole dependency injection machinery work at runtime, and at runtime there is no type information.

Why “almost”? If you use Weapon not as an interface but as an abstract class, TypeScript will be able to check it, because abstract classes exist in the output. Abstract classes in TypeScript are compiled into classes, and classes are first-class citizens in JavaScript, so they remain after compilation. Interfaces, however, are ephemeral entities that evaporate, and at runtime you no longer have any information that the katana argument should satisfy the Weapon interface. And this is a problem.
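The difference between an interface and an abstract class at runtime can be seen in a few lines. This is a standalone sketch, not code from the Inversify documentation; `AbstractWeapon`, `Katana`, `Teapot` and `assertWeapon` are hypothetical names.

```typescript
// Erased by the compiler: nothing named Weapon exists in the emitted JavaScript.
interface Weapon {
  hit(): string;
}

// Compiled to a real class, so it is still there at runtime.
abstract class AbstractWeapon implements Weapon {
  abstract hit(): string;
}

class Katana extends AbstractWeapon {
  hit(): string {
    return "cut!";
  }
}

class Teapot {
  pour(): string {
    return "tea";
  }
}

// A runtime check is only possible against something that exists at runtime.
function assertWeapon(candidate: unknown): AbstractWeapon {
  if (!(candidate instanceof AbstractWeapon)) {
    throw new TypeError("Injected value is not a weapon");
  }
  return candidate;
}

// `candidate instanceof Weapon` would not even compile:
// Weapon refers only to a type, and there is no value to compare against.
```

`assertWeapon(new Katana())` returns the katana, while `assertWeapon(new Teapot())` throws a `TypeError`. With the interface alone no equivalent check can be written without extra runtime information.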

Even in C++, which has existed for more years than I have been alive, there is RTTI: run-time type information, which lets you get information about types at runtime. In C# and Java there is reflection, which also lets you get the necessary information. The TypeScript developers, who have a separate section on “what we will NOT implement”, said they do not intend to provide any tools for RTTI.

It gets ridiculous. Fifteen seconds of insider information. The Vue developers are rewriting Vue 3 in TypeScript and found that Vue's approach is so incompatible with the TypeScript philosophy that they asked Microsoft: “Can we write our own TypeScript plugin to make it work?” Those who write in Vue will understand: when you pass props to a component, they magically appear on this. Obviously, the type of the props passed in and the type of the properties appearing on this must be the same. But in TypeScript you can neither verify nor describe that. Surprise.

The Microsoft developers refused, along the lines of: we know you. If we allow Vue to do it now, then Angular will follow, and they have been itching for this for a long time. (Angular also had to add its own compiler to get around TypeScript's restrictions.) Then React will follow, and we will end up not with a type system but with who knows what.

But the problem still remains. Oddly enough, in this regard I am impressed by Dart, which has mirrors that work everywhere except Flutter. Even where mirrors are not available, there are tools that let you pull out all the necessary information through code generation at the compilation stage, save it, and still get access to it at runtime.

I am now building the same kind of solution for Inversify: a Babel plugin that, during compilation, while the types still exist, pulls out the type information and generates certain runtime checks to make sure everything fits together.
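The plugin itself is not shown in the talk, so the following is only a guess at the general shape of the result, not its actual output. Assume a build step could emit a structural check such as `isWeapon` from the `Weapon` interface; here it is written by hand. `CheckedContainer` is a hypothetical toy container, not Inversify's API.

```typescript
interface Weapon {
  hit(): string;
}

// Hand-written here; the assumption is that a build step could emit
// such a check from the interface while type information still exists.
function isWeapon(value: unknown): value is Weapon {
  return (
    typeof value === "object" &&
    value !== null &&
    typeof (value as { hit?: unknown }).hit === "function"
  );
}

// Hypothetical identifiers, mirroring the TYPES.Weapon style of naming.
const TYPES = { Weapon: Symbol("Weapon") };

// A toy container that validates what it hands out.
class CheckedContainer {
  private readonly bindings = new Map<symbol, unknown>();

  bind(id: symbol, value: unknown): void {
    this.bindings.set(id, value);
  }

  resolve<T>(id: symbol, guard: (value: unknown) => value is T): T {
    const value = this.bindings.get(id);
    if (!guard(value)) {
      throw new TypeError(`${id.toString()} failed its runtime check`);
    }
    return value;
  }
}

const container = new CheckedContainer();
container.bind(TYPES.Weapon, { hit: () => "cut!" });
const katana = container.resolve(TYPES.Weapon, isWeapon);
```

If something without a `hit` method is bound to `TYPES.Weapon`, `resolve` throws immediately instead of letting the mistake surface later at the first call.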

The last remark about the immaturity of the ecosystem: in general, everything about types is very bad and strange for us. Here we have typed code. It contains information about which arguments a function will be called with and what types it returns. And we throw all of this away, so that V8, once the code is running, starts collecting the very same information.

V8 begins to collect information about types, because this is fundamentally important for an optimizing compiler. Moreover, there are tools that, if you build V8 with certain patches, let you pull out the type information it has collected. It is very funny to compare the types V8 inferred with the ones you had in TypeScript.

But from the point of view of any normal programming language, this is crazy. We throw out the information we have so that we can heroically collect it again.

Reliability Rule # 3: It should be easier to write “correct” code than “incorrect”

When I say "correct" or "incorrect" code in this context, "correct" means the code that suits the team. Each team has certain conventions and agreements, and this is especially important in frameworks like Vue, where one task can be solved in many ways. Someone uses slots, someone passes a function in props, and so on.

Here code generation shines in all its colors. Who uses typed-css-modules? Here is what it is about: you write CSS, import it as CSS Modules, and then refer to specific fields of the resulting object.

There is a ready-made solution, typed-css-modules, which uses code generation to let you verify that the class you are accessing definitely exists in the CSS file.

Over the past year I took part as a consultant in 12 projects that used CSS modules and had existed for at least six months. In 11 of the 12 I found a class named undefined on the generated page: the CSS evolved and changed, but somewhere a class that no longer exists is still happily read from the style object, and it turns out to be undefined. It happens.
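How typing the style object catches this kind of bug can be sketched without a bundler. In the example below the `styles` object is a stand-in for an imported CSS module, the hashed class names are invented, and `cls` is a hypothetical helper; typed-css-modules reaches a similar effect by generating declaration files for the real imports.

```typescript
// Stand-in for an imported CSS module; the hashed names are made up.
const styles = {
  title: "Card_title__a1b2",
  button: "Card_button__c3d4",
} as const;

// With a precise type, only class names that really exist are accepted.
function cls<K extends keyof typeof styles>(name: K): (typeof styles)[K] {
  return styles[name];
}

const titleClass = cls("title");
// cls("titel"); // compile error: "titel" is not a key of styles

// Without precise types the same typo compiles and silently yields undefined.
const untyped: Record<string, string> = styles;
const broken = untyped["titel"];
```

`broken` is `undefined` at runtime even though its static type is `string`, which is exactly how a class named undefined ends up on the page.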

Yeoman is well known to everyone, but the problem with it and with all such tools is that trying to build an overly general code generation tool makes it almost useless in particular cases. To apply it to a specific project you have to think a lot, suffer and experiment. Therefore I am much more impressed by the Angular CLI initiative called Blueprints (damn, I bashed Angular for two years, and in two days at GDG SPB I will be telling people how cool it is and how I came to love it).

This initiative lets you describe, in the context of Angular, how your services, components and so on should look. All of this lets you organize the team's work effectively, with a minimum of effort from the team at the start of the project.

Another example where “correct” code is easier to write than “incorrect” code is good, large frameworks. And the reference example of "correct" code being easier to write is functional programming. Once you have grasped the Tao of functional programming and understood what a monad is, losing the ability to explain it to others in the process, you either write correct code in a functional style or you do not get it to work at all.

Another matter is that the entry threshold is such that it is easier to hang oneself. I have juniors on my team, and they are important to me so that I do not lose touch with reality and understand how people in the real world actually write code. This is why, for example, React hooks did not adopt the concept of pure functional programming.

Their authors searched long and painfully for a compromise that, on the one hand, would be close to functional programming and could be verified with something like ESLint, and on the other hand would be understandable to most ordinary mortals.

When a framework imposes on you how to solve tasks, it is very cool. On the backend, examples of such frameworks are NestJS and Sails.js.

It would seem that both frameworks solve the same problem: they impose a specific architecture and describe how you should solve this and that. I will not criticize Sails now for the thoroughly poor ORM that comes with it; Waterline is the first thing everyone throws away. The problem is that, on top of all this, Sails.js provides too much magic. You can create blueprint controllers from which a bunch of magical properties and things are then generated. This is not just slow but very slow, and it is wildly difficult to debug.

But if we are talking about Nest, it strikes an excellent compromise: the framework simply gives you components, none of which you are obliged to use. You have Dependency Injection, which underlies everything, and there are rules for describing middleware, controllers and so on.